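[Kubernetes] A detailed guide to installing and setting up a k8s cluster (creating the cluster, joining nodes, removing nodes, resetting the cluster)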


containerd.io x86_64 1.4.6-3.1.el7 docker-ce-stable 34 M

docker-ce-cli x86_64 1:20.10.7-3.el7 docker-ce-stable 33 M

docker-ce-rootless-extras x86_64 20.10.7-3.el7 docker-ce-stable 9.2 M

docker-scan-plugin x86_64 0.8.0-3.el7 docker-ce-stable 4.2 M

fuse-overlayfs x86_64 0.7.2-6.el7_8 extras 54 k

fuse3-libs x86_64 3.6.1-4.el7 extras 82 k

slirp4netns x86_64 0.4.3-4.el7_8 extras 81 k

Transaction Summary

======================================================================================================

Install 1 Package (+8 Dependent packages)

Total download size: 107 M

Installed size: 438 M

Downloading packages:

warning: /var/cache/yum/x86_64/7/extras/packages/container-selinux-2.119.2-1.911c772.el7_8.noarch.rpm: Header V3 RSA/SHA256 Signature, key ID f4a80eb5: NOKEY

Public key for container-selinux-2.119.2-1.911c772.el7_8.noarch.rpm is not installed

(1/9): container-selinux-2.119.2-1.911c772.el7_8.noarch.rpm | 40 kB 00:00:00

warning: /var/cache/yum/x86_64/7/docker-ce-stable/packages/docker-ce-20.10.7-3.el7.x86_64.rpm: Header V4 RSA/SHA512 Signature, key ID 621e9f35: NOKEY

Public key for docker-ce-20.10.7-3.el7.x86_64.rpm is not installed

(2/9): docker-ce-20.10.7-3.el7.x86_64.rpm | 27 MB 00:00:06

(3/9): containerd.io-1.4.6-3.1.el7.x86_64.rpm | 34 MB 00:00:08

(4/9): docker-ce-rootless-extras-20.10.7-3.el7.x86_64.rpm | 9.2 MB 00:00:02

(5/9): fuse-overlayfs-0.7.2-6.el7_8.x86_64.rpm | 54 kB 00:00:00

(6/9): slirp4netns-0.4.3-4.el7_8.x86_64.rpm | 81 kB 00:00:00

(7/9): fuse3-libs-3.6.1-4.el7.x86_64.rpm | 82 kB 00:00:00

(8/9): docker-scan-plugin-0.8.0-3.el7.x86_64.rpm | 4.2 MB 00:00:01

(9/9): docker-ce-cli-20.10.7-3.el7.x86_64.rpm | 33 MB 00:00:08

Total 7.1 MB/s | 107 MB 00:00:15

Retrieving key from https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

Importing GPG key 0x621E9F35:

Userid : “Docker Release (CE rpm) docker@docker.com”

Fingerprint: 060a 61c5 1b55 8a7f 742b 77aa c52f eb6b 621e 9f35

From : https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

Retrieving key from http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7

Importing GPG key 0xF4A80EB5:

Userid : “CentOS-7 Key (CentOS 7 Official Signing Key) security@centos.org”

Fingerprint: 6341 ab27 53d7 8a78 a7c2 7bb1 24c6 a8a7 f4a8 0eb5

From : http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7

Running transaction check

Running transaction test

Transaction test succeeded

Running transaction

Installing : 2:container-selinux-2.119.2-1.911c772.el7_8.noarch 1/9

Installing : containerd.io-1.4.6-3.1.el7.x86_64 2/9

Installing : 1:docker-ce-cli-20.10.7-3.el7.x86_64 3/9

Installing : docker-scan-plugin-0.8.0-3.el7.x86_64 4/9

Installing : slirp4netns-0.4.3-4.el7_8.x86_64 5/9

Installing : fuse3-libs-3.6.1-4.el7.x86_64 6/9

Installing : fuse-overlayfs-0.7.2-6.el7_8.x86_64 7/9

Installing : docker-ce-rootless-extras-20.10.7-3.el7.x86_64 8/9

Installing : 3:docker-ce-20.10.7-3.el7.x86_64 9/9

Verifying : containerd.io-1.4.6-3.1.el7.x86_64 1/9

Verifying : fuse3-libs-3.6.1-4.el7.x86_64 2/9

Verifying : docker-scan-plugin-0.8.0-3.el7.x86_64 3/9

Verifying : slirp4netns-0.4.3-4.el7_8.x86_64 4/9

Verifying : 2:container-selinux-2.119.2-1.911c772.el7_8.noarch 5/9

Verifying : 3:docker-ce-20.10.7-3.el7.x86_64 6/9

Verifying : 1:docker-ce-cli-20.10.7-3.el7.x86_64 7/9

Verifying : docker-ce-rootless-extras-20.10.7-3.el7.x86_64 8/9

Verifying : fuse-overlayfs-0.7.2-6.el7_8.x86_64 9/9

Installed:

docker-ce.x86_64 3:20.10.7-3.el7

Dependency Installed:

container-selinux.noarch 2:2.119.2-1.911c772.el7_8 containerd.io.x86_64 0:1.4.6-3.1.el7

docker-ce-cli.x86_64 1:20.10.7-3.el7 docker-ce-rootless-extras.x86_64 0:20.10.7-3.el7

docker-scan-plugin.x86_64 0:0.8.0-3.el7 fuse-overlayfs.x86_64 0:0.7.2-6.el7_8

fuse3-libs.x86_64 0:3.6.1-4.el7 slirp4netns.x86_64 0:0.4.3-4.el7_8

Complete!

[root@master yum.repos.d]#

[root@master ~]# systemctl enable docker --now

Created symlink from /etc/systemd/system/multi-user.target.wants/docker.service to /usr/lib/systemd/system/docker.service.

[root@master ~]#

[root@master ~]# docker info

Client:

Context: default

Debug Mode: false

Plugins:

app: Docker App (Docker Inc., v0.9.1-beta3)

buildx: Build with BuildKit (Docker Inc., v0.5.1-docker)

scan: Docker Scan (Docker Inc., v0.8.0)

Server:

Containers: 0

Running: 0

Paused: 0

Stopped: 0

Images: 4

Server Version: 20.10.7

Storage Driver: overlay2

Backing Filesystem: xfs

Supports d_type: true

Native Overlay Diff: true

userxattr: false

Logging Driver: json-file

Cgroup Driver: cgroupfs

Cgroup Version: 1

Plugins:

Volume: local

Network: bridge host ipvlan macvlan null overlay

Log: awslogs fluentd gcplogs gelf journald json-file local logentries splunk syslog

Swarm: inactive

Runtimes: io.containerd.runtime.v1.linux runc io.containerd.runc.v2

Default Runtime: runc

Init Binary: docker-init

containerd version: d71fcd7d8303cbf684402823e425e9dd2e99285d

runc version: b9ee9c6314599f1b4a7f497e1f1f856fe433d3b7

init version: de40ad0

Security Options:

seccomp

Profile: default

Kernel Version: 3.10.0-957.el7.x86_64

Operating System: CentOS Linux 7 (Core)

OSType: linux

Architecture: x86_64

CPUs: 2

Total Memory: 3.701GiB

Name: master

ID: 5FW3:5O7N:PZTJ:YUAT:GFXD:QEGA:GOA6:C2IE:I2FJ:FUQE:D2QT:QI6A

Docker Root Dir: /var/lib/docker

Debug Mode: false

Registry: https://index.docker.io/v1/

Labels:

Experimental: false

Insecure Registries:

127.0.0.0/8

Live Restore Enabled: false

[root@master ~]#

- Configuring a registry mirror only serves to make image pulls faster.

- Copy the command below and run it as-is.

cat > /etc/docker/daemon.json <<EOF
{
"registry-mirrors": ["https://frz7i079.mirror.aliyuncs.com"]
}
EOF

- Then restart the docker service.

[root@master ~]# systemctl restart docker

[root@master ~]#
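The mirror setting above can be combined with the systemd cgroup driver, which kubeadm recommends (it prints a warning when Docker uses cgroupfs). The following is a minimal sketch under that assumption, not part of the original steps: the function name write_daemon_json and the scratch file are illustrative, and the kubelet has to end up using the same cgroup driver as Docker.

```shell
#!/usr/bin/env bash
set -euo pipefail

# write_daemon_json TARGET [MIRROR_URL]
# Writes a Docker daemon config with a registry mirror and the systemd
# cgroup driver. On a real host TARGET is /etc/docker/daemon.json.
write_daemon_json() {
  local target="$1"
  local mirror="${2:-https://frz7i079.mirror.aliyuncs.com}"
  mkdir -p "$(dirname "$target")"
  cat > "$target" <<EOF
{
  "registry-mirrors": ["${mirror}"],
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF
}

# Demo: write to a scratch file so nothing on the host is changed.
demo_file="$(mktemp)"
write_daemon_json "$demo_file"
cat "$demo_file"
```

To apply it for real, call write_daemon_json /etc/docker/daemon.json as root and then run systemctl restart docker; docker info should afterwards report the systemd cgroup driver.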

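Because a network bridge works at the data link layer, traffic is forwarded straight across the bridge when bridge-nf is not enabled for iptables, so the FORWARD rules never see it and have no effect.

cat <<EOF > /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF

- The result after running it is shown below.

Run sysctl -p /etc/sysctl.d/k8s.conf to apply the settings immediately.

[root@master ~]# cat <<EOF > /etc/sysctl.d/k8s.conf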

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

EOF

[root@master ~]#

[root@worker-165 docker-ce]# sysctl -p /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

[root@worker-165 docker-ce]#

[root@master ~]# cat /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

[root@master ~]#
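The net.bridge.* keys used above only exist once the br_netfilter kernel module is loaded, so it can help to persist the module alongside the sysctl file. A minimal sketch, assuming the usual /etc/modules-load.d and /etc/sysctl.d drop-in directories; the function name write_k8s_netconf and the scratch directory are illustrative.

```shell
#!/usr/bin/env bash
set -euo pipefail

# write_k8s_netconf ROOT
# Writes the module-load and sysctl drop-ins under ROOT (use "/" on a real host).
write_k8s_netconf() {
  local root="${1%/}"
  mkdir -p "$root/etc/modules-load.d" "$root/etc/sysctl.d"
  # br_netfilter provides the net.bridge.bridge-nf-call-* keys.
  echo "br_netfilter" > "$root/etc/modules-load.d/k8s.conf"
  cat > "$root/etc/sysctl.d/k8s.conf" <<EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF
}

# Demo against a scratch directory; nothing on the host is modified.
demo_root="$(mktemp -d)"
write_k8s_netconf "$demo_root"
cat "$demo_root/etc/sysctl.d/k8s.conf"

# On a real host (as root) you would instead run:
#   write_k8s_netconf /
#   modprobe br_netfilter
#   sysctl -p /etc/sysctl.d/k8s.conf
```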

Check the available versions

Command: yum list --showduplicates kubeadm --disableexcludes=kubernetes

[root@ccx k8s]# yum list --showduplicates kubeadm --disableexcludes=kubernetes

Loaded plugins: fastestmirror, langpacks, product-id, search-disabled-repos, subscription-manager

This system is not registered with an entitlement server. You can use subscription-manager to register.

Loading mirror speeds from cached hostfile

Available Packages

kubeadm.x86_64 1.6.0-0 kubernetes

kubeadm.x86_64 1.6.1-0 kubernetes

kubeadm.x86_64 1.6.2-0 kubernetes

kubeadm.x86_64 1.6.3-0 kubernetes

kubeadm.x86_64 1.6.4-0 kubernetes

kubeadm.x86_64 1.6.5-0 kubernetes

kubeadm.x86_64 1.6.6-0 kubernetes

kubeadm.x86_64 1.6.7-0 kubernetes

kubeadm.x86_64 1.6.8-0 kubernetes

kubeadm.x86_64 1.6.9-0 kubernetes

kubeadm.x86_64 1.6.10-0 kubernetes

kubeadm.x86_64 1.6.11-0 kubernetes

kubeadm.x86_64 1.6.12-0 kubernetes

kubeadm.x86_64 1.6.13-0 kubernetes

kubeadm.x86_64 1.7.0-0 kubernetes

kubeadm.x86_64 1.7.1-0 kubernetes

kubeadm.x86_64 1.7.2-0 kubernetes

kubeadm.x86_64 1.7.3-1 kubernetes

kubeadm.x86_64 1.7.4-0 kubernetes

kubeadm.x86_64 1.7.5-0 kubernetes

kubeadm.x86_64 1.7.6-1 kubernetes

kubeadm.x86_64 1.7.7-1 kubernetes

kubeadm.x86_64 1.7.8-1 kubernetes

kubeadm.x86_64 1.7.9-0 kubernetes

kubeadm.x86_64 1.7.10-0 kubernetes

kubeadm.x86_64 1.7.11-0 kubernetes

kubeadm.x86_64 1.7.14-0 kubernetes

kubeadm.x86_64 1.7.15-0 kubernetes

kubeadm.x86_64 1.7.16-0 kubernetes

kubeadm.x86_64 1.8.0-0 kubernetes

kubeadm.x86_64 1.8.0-1 kubernetes

kubeadm.x86_64 1.8.1-0 kubernetes

kubeadm.x86_64 1.8.2-0 kubernetes

kubeadm.x86_64 1.8.3-0 kubernetes

kubeadm.x86_64 1.8.4-0 kubernetes

kubeadm.x86_64 1.8.5-0 kubernetes

kubeadm.x86_64 1.8.6-0 kubernetes

kubeadm.x86_64 1.8.7-0 kubernetes

kubeadm.x86_64 1.8.8-0 kubernetes

kubeadm.x86_64 1.8.9-0 kubernetes

kubeadm.x86_64 1.8.10-0 kubernetes

kubeadm.x86_64 1.8.11-0 kubernetes

kubeadm.x86_64 1.8.12-0 kubernetes

kubeadm.x86_64 1.8.13-0 kubernetes

kubeadm.x86_64 1.8.14-0 kubernetes

kubeadm.x86_64 1.8.15-0 kubernetes

kubeadm.x86_64 1.9.0-0 kubernetes

kubeadm.x86_64 1.9.1-0 kubernetes

kubeadm.x86_64 1.9.2-0 kubernetes

kubeadm.x86_64 1.9.3-0 kubernetes

kubeadm.x86_64 1.9.4-0 kubernetes

kubeadm.x86_64 1.9.5-0 kubernetes

kubeadm.x86_64 1.9.6-0 kubernetes

kubeadm.x86_64 1.9.7-0 kubernetes

kubeadm.x86_64 1.9.8-0 kubernetes

kubeadm.x86_64 1.9.9-0 kubernetes

kubeadm.x86_64 1.9.10-0 kubernetes

kubeadm.x86_64 1.9.11-0 kubernetes

kubeadm.x86_64 1.10.0-0 kubernetes

kubeadm.x86_64 1.10.1-0 kubernetes

kubeadm.x86_64 1.10.2-0 kubernetes

kubeadm.x86_64 1.10.3-0 kubernetes

kubeadm.x86_64 1.10.4-0 kubernetes

kubeadm.x86_64 1.10.5-0 kubernetes

kubeadm.x86_64 1.10.6-0 kubernetes

kubeadm.x86_64 1.10.7-0 kubernetes

kubeadm.x86_64 1.10.8-0 kubernetes

kubeadm.x86_64 1.10.9-0 kubernetes

kubeadm.x86_64 1.10.10-0 kubernetes

kubeadm.x86_64 1.10.11-0 kubernetes

kubeadm.x86_64 1.10.12-0 kubernetes

kubeadm.x86_64 1.10.13-0 kubernetes

kubeadm.x86_64 1.11.0-0 kubernetes

kubeadm.x86_64 1.11.1-0 kubernetes

kubeadm.x86_64 1.11.2-0 kubernetes

kubeadm.x86_64 1.11.3-0 kubernetes

kubeadm.x86_64 1.11.4-0 kubernetes

kubeadm.x86_64 1.11.5-0 kubernetes

kubeadm.x86_64 1.11.6-0 kubernetes

kubeadm.x86_64 1.11.7-0 kubernetes

kubeadm.x86_64 1.11.8-0 kubernetes

kubeadm.x86_64 1.11.9-0 kubernetes

kubeadm.x86_64 1.11.10-0 kubernetes

kubeadm.x86_64 1.12.0-0 kubernetes

kubeadm.x86_64 1.12.1-0 kubernetes

kubeadm.x86_64 1.12.2-0 kubernetes

kubeadm.x86_64 1.12.3-0 kubernetes

kubeadm.x86_64 1.12.4-0 kubernetes

kubeadm.x86_64 1.12.5-0 kubernetes

kubeadm.x86_64 1.12.6-0 kubernetes

kubeadm.x86_64 1.12.7-0 kubernetes

kubeadm.x86_64 1.12.8-0 kubernetes

kubeadm.x86_64 1.12.9-0 kubernetes

kubeadm.x86_64 1.12.10-0 kubernetes

kubeadm.x86_64 1.13.0-0 kubernetes

kubeadm.x86_64 1.13.1-0 kubernetes

kubeadm.x86_64 1.13.2-0 kubernetes

kubeadm.x86_64 1.13.3-0 kubernetes

kubeadm.x86_64 1.13.4-0 kubernetes

kubeadm.x86_64 1.13.5-0 kubernetes

kubeadm.x86_64 1.13.6-0 kubernetes

kubeadm.x86_64 1.13.7-0 kubernetes

kubeadm.x86_64 1.13.8-0 kubernetes

kubeadm.x86_64 1.13.9-0 kubernetes

kubeadm.x86_64 1.13.10-0 kubernetes

kubeadm.x86_64 1.13.11-0 kubernetes

kubeadm.x86_64 1.13.12-0 kubernetes

kubeadm.x86_64 1.14.0-0 kubernetes

kubeadm.x86_64 1.14.1-0 kubernetes

kubeadm.x86_64 1.14.2-0 kubernetes

kubeadm.x86_64 1.14.3-0 kubernetes

kubeadm.x86_64 1.14.4-0 kubernetes

kubeadm.x86_64 1.14.5-0 kubernetes

kubeadm.x86_64 1.14.6-0 kubernetes

kubeadm.x86_64 1.14.7-0 kubernetes

kubeadm.x86_64 1.14.8-0 kubernetes

kubeadm.x86_64 1.14.9-0 kubernetes

kubeadm.x86_64 1.14.10-0 kubernetes

kubeadm.x86_64 1.15.0-0 kubernetes

kubeadm.x86_64 1.15.1-0 kubernetes

kubeadm.x86_64 1.15.2-0 kubernetes

kubeadm.x86_64 1.15.3-0 kubernetes

kubeadm.x86_64 1.15.4-0 kubernetes

kubeadm.x86_64 1.15.5-0 kubernetes

kubeadm.x86_64 1.15.6-0 kubernetes

kubeadm.x86_64 1.15.7-0 kubernetes

kubeadm.x86_64 1.15.8-0 kubernetes

kubeadm.x86_64 1.15.9-0 kubernetes

kubeadm.x86_64 1.15.10-0 kubernetes

kubeadm.x86_64 1.15.11-0 kubernetes

kubeadm.x86_64 1.15.12-0 kubernetes

kubeadm.x86_64 1.16.0-0 kubernetes

kubeadm.x86_64 1.16.1-0 kubernetes

kubeadm.x86_64 1.16.2-0 kubernetes

kubeadm.x86_64 1.16.3-0 kubernetes

kubeadm.x86_64 1.16.4-0 kubernetes

kubeadm.x86_64 1.16.5-0 kubernetes

kubeadm.x86_64 1.16.6-0 kubernetes

kubeadm.x86_64 1.16.7-0 kubernetes

kubeadm.x86_64 1.16.8-0 kubernetes

kubeadm.x86_64 1.16.9-0 kubernetes

kubeadm.x86_64 1.16.10-0 kubernetes

kubeadm.x86_64 1.16.11-0 kubernetes

kubeadm.x86_64 1.16.11-1 kubernetes

kubeadm.x86_64 1.16.12-0 kubernetes

kubeadm.x86_64 1.16.13-0 kubernetes

kubeadm.x86_64 1.16.14-0 kubernetes

kubeadm.x86_64 1.16.15-0 kubernetes

kubeadm.x86_64 1.17.0-0 kubernetes

kubeadm.x86_64 1.17.1-0 kubernetes

kubeadm.x86_64 1.17.2-0 kubernetes

kubeadm.x86_64 1.17.3-0 kubernetes

kubeadm.x86_64 1.17.4-0 kubernetes

kubeadm.x86_64 1.17.5-0 kubernetes

kubeadm.x86_64 1.17.6-0 kubernetes

kubeadm.x86_64 1.17.7-0 kubernetes

kubeadm.x86_64 1.17.7-1 kubernetes

kubeadm.x86_64 1.17.8-0 kubernetes

kubeadm.x86_64 1.17.9-0 kubernetes

kubeadm.x86_64 1.17.11-0 kubernetes

kubeadm.x86_64 1.17.12-0 kubernetes

kubeadm.x86_64 1.17.13-0 kubernetes

kubeadm.x86_64 1.17.14-0 kubernetes

kubeadm.x86_64 1.17.15-0 kubernetes

kubeadm.x86_64 1.17.16-0 kubernetes

kubeadm.x86_64 1.17.17-0 kubernetes

kubeadm.x86_64 1.18.0-0 kubernetes

kubeadm.x86_64 1.18.1-0 kubernetes

kubeadm.x86_64 1.18.2-0 kubernetes

kubeadm.x86_64 1.18.3-0 kubernetes

kubeadm.x86_64 1.18.4-0 kubernetes

kubeadm.x86_64 1.18.4-1 kubernetes

kubeadm.x86_64 1.18.5-0 kubernetes

kubeadm.x86_64 1.18.6-0 kubernetes

kubeadm.x86_64 1.18.8-0 kubernetes

kubeadm.x86_64 1.18.9-0 kubernetes

kubeadm.x86_64 1.18.10-0 kubernetes

kubeadm.x86_64 1.18.12-0 kubernetes

kubeadm.x86_64 1.18.13-0 kubernetes

kubeadm.x86_64 1.18.14-0 kubernetes

kubeadm.x86_64 1.18.15-0 kubernetes

kubeadm.x86_64 1.18.16-0 kubernetes

kubeadm.x86_64 1.18.17-0 kubernetes

kubeadm.x86_64 1.18.18-0 kubernetes

kubeadm.x86_64 1.18.19-0 kubernetes

kubeadm.x86_64 1.18.20-0 kubernetes

kubeadm.x86_64 1.19.0-0 kubernetes

kubeadm.x86_64 1.19.1-0 kubernetes

kubeadm.x86_64 1.19.2-0 kubernetes

kubeadm.x86_64 1.19.3-0 kubernetes

kubeadm.x86_64 1.19.4-0 kubernetes

kubeadm.x86_64 1.19.5-0 kubernetes

kubeadm.x86_64 1.19.6-0 kubernetes

kubeadm.x86_64 1.19.7-0 kubernetes

kubeadm.x86_64 1.19.8-0 kubernetes

kubeadm.x86_64 1.19.9-0 kubernetes

kubeadm.x86_64 1.19.10-0 kubernetes

kubeadm.x86_64 1.19.11-0 kubernetes

kubeadm.x86_64 1.19.12-0 kubernetes

kubeadm.x86_64 1.20.0-0 kubernetes

kubeadm.x86_64 1.20.1-0 kubernetes

kubeadm.x86_64 1.20.2-0 kubernetes

kubeadm.x86_64 1.20.4-0 kubernetes

kubeadm.x86_64 1.20.5-0 kubernetes

kubeadm.x86_64 1.20.6-0 kubernetes

kubeadm.x86_64 1.20.7-0 kubernetes

kubeadm.x86_64 1.20.8-0 kubernetes

kubeadm.x86_64 1.21.0-0 kubernetes

kubeadm.x86_64 1.21.1-0 kubernetes

kubeadm.x86_64 1.21.2-0 kubernetes

[root@ccx k8s]#

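Installation

- Version 1.21 is installed here.

yum install -y kubelet-1.21.0-0 kubeadm-1.21.0-0 kubectl-1.21.0-0 --disableexcludes=kubernetes

- As mentioned earlier, my three machines have no internet access, so I installed the k8s packages offline. The commands for copying the RPMs to the nodes and installing them are shown below.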

[root@master ~]# cd /k8s/

[root@master k8s]# ls

13b4e820d82ad7143d786b9927adc414d3e270d3d26d844e93eff639f7142e50-kubelet-1.21.0-0.x86_64.rpm

conntrack-tools-1.4.4-7.el7.x86_64.rpm

d625f039f4a82eca35f6a86169446afb886ed9e0dfb167b38b706b411c131084-kubectl-1.21.0-0.x86_64.rpm

db7cb5cb0b3f6875f54d10f02e625573988e3e91fd4fc5eef0b1876bb18604ad-kubernetes-cni-0.8.7-0.x86_64.rpm

dc4816b13248589b85ee9f950593256d08a3e6d4e419239faf7a83fe686f641c-kubeadm-1.21.0-0.x86_64.rpm

libnetfilter_cthelper-1.0.0-11.el7.x86_64.rpm

libnetfilter_cttimeout-1.0.0-7.el7.x86_64.rpm

libnetfilter_queue-1.0.2-2.el7_2.x86_64.rpm

socat-1.7.3.2-2.el7.x86_64.rpm

[root@master k8s]# cp * node1:/k8s

cp: target ‘node1:/k8s’ is not a directory

[root@master k8s]#

[root@master k8s]# scp * node1:/k8s

root@node1’s password:

13b4e820d82ad7143d786b9927adc414d3e270d3d26d844e93eff639f7142e50-kubelet 100% 20MB 22.4MB/s 00:00

conntrack-tools-1.4.4-7.el7.x86_64.rpm 100% 187KB 21.3MB/s 00:00

d625f039f4a82eca35f6a86169446afb886ed9e0dfb167b38b706b411c131084-kubectl 100% 9774KB 22.3MB/s 00:00

db7cb5cb0b3f6875f54d10f02e625573988e3e91fd4fc5eef0b1876bb18604ad-kuberne 100% 19MB 21.9MB/s 00:00

dc4816b13248589b85ee9f950593256d08a3e6d4e419239faf7a83fe686f641c-kubeadm 100% 9285KB 21.9MB/s 00:00

libnetfilter_cthelper-1.0.0-11.el7.x86_64.rpm 100% 18KB 1.4MB/s 00:00

libnetfilter_cttimeout-1.0.0-7.el7.x86_64.rpm 100% 18KB 6.3MB/s 00:00

libnetfilter_queue-1.0.2-2.el7_2.x86_64.rpm 100% 23KB 8.6MB/s 00:00

socat-1.7.3.2-2.el7.x86_64.rpm 100% 290KB 23.1MB/s 00:00

[root@master k8s]# scp * node2:/k8s

root@node2’s password:

13b4e820d82ad7143d786b9927adc414d3e270d3d26d844e93eff639f7142e50-kubelet 100% 20MB 20.0MB/s 00:01

conntrack-tools-1.4.4-7.el7.x86_64.rpm 100% 187KB 19.9MB/s 00:00

d625f039f4a82eca35f6a86169446afb886ed9e0dfb167b38b706b411c131084-kubectl 100% 9774KB 21.2MB/s 00:00

db7cb5cb0b3f6875f54d10f02e625573988e3e91fd4fc5eef0b1876bb18604ad-kuberne 100% 19MB 21.4MB/s 00:00

dc4816b13248589b85ee9f950593256d08a3e6d4e419239faf7a83fe686f641c-kubeadm 100% 9285KB 19.6MB/s 00:00

libnetfilter_cthelper-1.0.0-11.el7.x86_64.rpm 100% 18KB 5.9MB/s 00:00

libnetfilter_cttimeout-1.0.0-7.el7.x86_64.rpm 100% 18KB 2.9MB/s 00:00

libnetfilter_queue-1.0.2-2.el7_2.x86_64.rpm 100% 23KB 8.3MB/s 00:00

socat-1.7.3.2-2.el7.x86_64.rpm 100% 290KB 21.1MB/s 00:00

[root@master k8s]# rpm -ivhU * --nodeps --force

Preparing… ################################# [100%]

Updating / installing…

1:socat-1.7.3.2-2.el7 ################################# [ 11%]

2:libnetfilter_queue-1.0.2-2.el7_2 ################################# [ 22%]

3:libnetfilter_cttimeout-1.0.0-7.el################################# [ 33%]

4:libnetfilter_cthelper-1.0.0-11.el################################# [ 44%]

5:conntrack-tools-1.4.4-7.el7 ################################# [ 56%]

6:kubernetes-cni-0.8.7-0 ################################# [ 67%]

7:kubelet-1.21.0-0 ################################# [ 78%]

8:kubectl-1.21.0-0 ################################# [ 89%]

9:kubeadm-1.21.0-0 ################################# [100%]

[root@master k8s]#

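Start the k8s service (kubelet)

Command: systemctl enable kubelet --now

This enables the service at boot and starts it right away. Until kubeadm init or kubeadm join has run, is-active reports activating, because the kubelet keeps restarting while it waits for its configuration.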

[root@master k8s]# systemctl enable kubelet --now

Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.

[root@master k8s]# systemctl is-active kubelet

activating

[root@master k8s]#

===========================================================================================

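Notes on installing in an offline environment

- If the cluster has no internet access, run the steps below on a machine that does, then import all of the images into the cluster's internal network and continue with the kubeadm steps that follow.

- In what follows, the host named ccx is a VM with internet access, while master is a host in the internal cluster, so don't be surprised that the hostnames differ.

Command explanation

- Command:

kubeadm init --image-repository registry.aliyuncs.com/google_containers --kubernetes-version=v1.21.0 --pod-network-cidr=10.244.0.0/16 [the 10.244 at the end is a custom value]

- kubeadm init: initializes the cluster

- --image-repository registry.aliyuncs.com/google_containers: pull the required images from registry.aliyuncs.com/google_containers

- --kubernetes-version=v1.21.0: specifies the version

- --pod-network-cidr=10.244.0.0/16: specifies the pod network CIDR

Images present after running the command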

- Before installation, docker has no images at all.

[root@ccx ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

[root@ccx ~]#

- After the download finishes, docker has these additional images.

This set of images is incomplete: the DNS image (coredns) is missing.

[root@ccx ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

registry.aliyuncs.com/google_containers/kube-apiserver v1.21.0 4d217480042e 2 months ago 126MB

registry.aliyuncs.com/google_containers/kube-proxy v1.21.0 38ddd85fe90e 2 months ago 122MB

registry.aliyuncs.com/google_containers/kube-controller-manager v1.21.0 09708983cc37 2 months ago 120MB

registry.aliyuncs.com/google_containers/kube-scheduler v1.21.0 62ad3129eca8 2 months ago 50.6MB

registry.aliyuncs.com/google_containers/pause 3.4.1 0f8457a4c2ec 5 months ago 683kB

registry.aliyuncs.com/google_containers/etcd 3.4.13-0 0369cf4303ff 10 months ago 253MB

[root@ccx ~]#

Error from the command and how to handle it

- Running the command eventually fails with the following error.

[root@ccx k8s]# kubeadm init --image-repository registry.aliyuncs.com/google_containers --kubernetes-version=v1.21.0 --pod-network-cidr=10.244.0.0/16

[init] Using Kubernetes version: v1.21.0

[preflight] Running pre-flight checks

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

error execution phase preflight: [preflight] Some fatal errors occurred:

[ERROR ImagePull]: failed to pull image registry.aliyuncs.com/google_containers/coredns/coredns:v1.8.0: output: Error response from daemon: pull access denied for registry.aliyuncs.com/google_containers/coredns/coredns, repository does not exist or may require 'docker login': denied: requested access to the resource is denied, error: exit status 1

[preflight] If you know what you are doing, you can make a check non-fatal with `--ignore-preflight-errors=...`

To see the stack trace of this error execute with --v=5 or higher

[root@ccx k8s]#

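- The cause:

the pull of registry.aliyuncs.com/google_containers/coredns/coredns:v1.8.0 failed. The image does exist, it is just published under a different name, so download the coredns image some other way and import it.

- If you would rather not track it down yourself, you can download the copy I uploaded:

- Import command:

docker load -i coredns-1.21.tar

The whole process looks like this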

[root@master kubeadm]# rz -E

rz waiting to receive.

zmodem trl+C ȡ

100% 41594 KB 20797 KB/s 00:00:02 0 Errors

[root@master kubeadm]# ls

coredns-1.21.tar

[root@master kubeadm]# docker load -i coredns-1.21.tar

225df95e717c: Loading layer 336.4kB/336.4kB

69ae2fbf419f: Loading layer 42.24MB/42.24MB

Loaded image: registry.aliyuncs.com/google_containers/coredns/coredns:v1.8.0

[root@master kubeadm]#

[root@master kubeadm]# docker images | grep dns

registry.aliyuncs.com/google_containers/coredns/coredns v1.8.0 296a6d5035e2 8 months ago 42.5MB

[root@master kubeadm]#

- The complete set of images is shown below.

Note: the coredns-1.21.tar archive must be copied to every node (the master and all worker nodes).

[root@master k8s]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

registry.aliyuncs.com/google_containers/kube-apiserver v1.21.0 4d217480042e 2 months ago 126MB

registry.aliyuncs.com/google_containers/kube-proxy v1.21.0 38ddd85fe90e 2 months ago 122MB

registry.aliyuncs.com/google_containers/kube-controller-manager v1.21.0 09708983cc37 2 months ago 120MB

registry.aliyuncs.com/google_containers/kube-scheduler v1.21.0 62ad3129eca8 2 months ago 50.6MB

registry.aliyuncs.com/google_containers/pause 3.4.1 0f8457a4c2ec 5 months ago 683kB

registry.aliyuncs.com/google_containers/coredns/coredns v1.8.0 296a6d5035e2 8 months ago 42.5MB

registry.aliyuncs.com/google_containers/etcd 3.4.13-0 0369cf4303ff 10 months ago 253MB

[root@master k8s]#
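On a machine with internet access, the CoreDNS naming mismatch described above could also be worked around by pulling the image under the name the mirror actually uses and re-tagging it to the name kubeadm asks for. This is only a sketch: the SOURCE name below is an assumption about how the Aliyun mirror publishes CoreDNS and is not confirmed by the original post, and the function retag_coredns is illustrative. By default it only prints the commands.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Name kubeadm v1.21.0 asks for (taken from the error message above).
WANTED="registry.aliyuncs.com/google_containers/coredns/coredns:v1.8.0"
# ASSUMPTION: the mirror publishes the image under this flatter name.
SOURCE="${SOURCE:-registry.aliyuncs.com/google_containers/coredns:1.8.0}"

# retag_coredns prints the docker commands; with RUN=1 it also executes them.
retag_coredns() {
  local cmd
  for cmd in "docker pull $SOURCE" "docker tag $SOURCE $WANTED"; do
    echo "+ $cmd"
    if [ "${RUN:-0}" = "1" ]; then
      $cmd
    fi
  done
}

retag_coredns
```

Running it with RUN=1 executes the pull and the tag. The re-tagged image can then be exported with docker save and imported on the offline nodes with docker load, in the same way as coredns-1.21.tar.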

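Once all of the images above are ready, run kubeadm init --image-repository registry.aliyuncs.com/google_containers --kubernetes-version=v1.21.0 --pod-network-cidr=10.244.0.0/16 again. The end of its output looks like the following. The cluster is not usable yet at this point: the kubeconfig credentials file still has to be put in place.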

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run “kubectl apply -f [podnetwork].yaml” with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.59.142:6443 --token 29ivpt.l5x5zhqzca4n47kp \

--discovery-token-ca-cert-hash sha256:5a9779259cea2b3a23fa6e713ed02f5b0c6eda2d75049b703dbec78587015e0b

[root@master k8s]#

- Now follow the prompt and copy and run the commands below:

[root@master k8s]# mkdir -p $HOME/.kube

[root@master k8s]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@master k8s]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

[root@master k8s]#

- Once the three commands above have run, you can see the current node information.

Command: kubectl get nodes

[root@master k8s]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master NotReady control-plane,master 8m19s v1.21.0

[root@master k8s]#

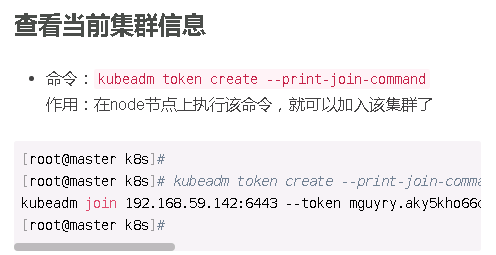

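- Command:

kubeadm token create --print-join-command

Purpose: run on the master, it prints a kubeadm join command; executing that printed command on a node joins the node to the cluster.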

[root@master k8s]#

[root@master k8s]# kubeadm token create --print-join-command

kubeadm join 192.168.59.142:6443 --token mguyry.aky5kho66cnjtlsl --discovery-token-ca-cert-hash sha256:5a9779259cea2b3a23fa6e713ed02f5b0c6eda2d75049b703dbec78587015e0b

[root@master k8s]#

==============================================================================

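- Command: the join command is whatever kubeadm token create --print-join-command printed on the master.

For example, on my master it printed the join command shown above (a fresh token is generated each time the command is run).

- I then run that printed command on both worker nodes: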

[root@node1 k8s]# kubeadm join 192.168.59.142:6443 --token sqjhzj.7aloiqau86k8xq54 --discovery-token-ca-cert-hash sha256:5a9779259cea2b3a23fa6e713ed02f5b0c6eda2d75049b703dbec78587015e0b

[preflight] Running pre-flight checks

[WARNING IsDockerSystemdCheck]: detected “cgroupfs” as the Docker cgroup driver. The recommended driver is “systemd”. Please follow the guide at https://kubernetes.io/docs/setup/cri/

[preflight] Reading configuration from the cluster…

[preflight] FYI: You can look at this config file with ‘kubectl -n kube-system get cm kubeadm-config -o yaml’

[kubelet-start] Writing kubelet configuration to file “/var/lib/kubelet/config.yaml”

[kubelet-start] Writing kubelet environment file with flags to file “/var/lib/kubelet/kubeadm-flags.env”

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap…

This node has joined the cluster:

-

Certificate signing request was sent to apiserver and a response was received.

-

The Kubelet was informed of the new secure connection details.

Run ‘kubectl get nodes’ on the control-plane to see this node join the cluster.

[root@node1 k8s]#

- When that is done, run kubectl get nodes on the master; both nodes now appear in the list.

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master NotReady control-plane,master 29m v1.21.0

node1 NotReady <none> 3m59s v1.21.0

node2 NotReady <none> 79s v1.21.0

[root@master ~]#

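Note: the STATUS of the nodes above is NotReady, which is not a healthy state; the cause is the missing network plugin. Once calico is installed below, the status becomes Ready.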
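When a cluster has more than a few nodes, it can be handy to filter the node list down to the ones that are not Ready. A minimal sketch, assuming the default column layout of kubectl get nodes; the helper name not_ready_nodes is illustrative, and the sample text is the output captured in this article.

```shell
#!/usr/bin/env bash
set -euo pipefail

# not_ready_nodes reads `kubectl get nodes` output on stdin and prints the
# names of nodes whose STATUS column is not exactly "Ready".
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Demo with the output captured above (worker ROLES shown as <none>).
sample='NAME STATUS ROLES AGE VERSION
master NotReady control-plane,master 29m v1.21.0
node1 NotReady <none> 3m59s v1.21.0
node2 NotReady <none> 79s v1.21.0'

printf '%s\n' "$sample" | not_ready_nodes

# Against a live cluster: kubectl get nodes | not_ready_nodes
```

Because the comparison is exact, a cordoned node (STATUS Ready,SchedulingDisabled) is also listed, which is usually what you want when checking cluster health.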

===========================================================================

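- URL:

https://docs.projectcalico.org/

Once there, search for calico.yaml.

Scrolling down, you will find a download command that starts with curl. You can copy the link and download the file directly, or download it on Linux with wget: replace curl with wget and drop the trailing -O.

- Command:

grep image calico.yaml

My environment has no internet access; rz is the command I use to upload the file I downloaded.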

[root@master ~]# rz -E

rz waiting to receive.

zmodem trl+C ȡ

100% 185 KB 185 KB/s 00:00:01 0 Errors

[root@master ~]# ls | grep calico.yaml

calico.yaml

[root@master ~]# grep image calico.yaml

image: docker.io/calico/cni:v3.19.1

image: docker.io/calico/cni:v3.19.1

image: docker.io/calico/pod2daemon-flexvol:v3.19.1

image: docker.io/calico/node:v3.19.1

image: docker.io/calico/kube-controllers:v3.19.1

[root@master ~]#

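- Then pull these images on a host that has internet access.

Pull command: docker pull followed by each image name listed above (5 lines, 4 distinct images, since cni appears twice), e.g. docker pull docker.io/calico/cni:v3.19.1

All of the listed images must be pulled. [The download is slow; you can pull on one server, pack the images into an archive, and copy it to the other two machines to load there. If you are not sure how, see the docker image management post on my blog.]

- If the download is too slow for you [about 400 MB in total], you can use the archive I already prepared. Link:

https://download.csdn.net/download/cuichongxin/19988222

Usage is as follows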

[root@master ~]# scp calico-3.19-img.tar node1:~

root@node1’s password:

calico-3.19-img.tar 100% 381MB 20.1MB/s 00:18

[root@master ~]#

[root@master ~]# scp calico-3.19-img.tar node2:~

root@node2’s password:

calico-3.19-img.tar 100% 381MB 21.1MB/s 00:18

[root@master ~]#

[root@node1 ~]# docker load -i calico-3.19-img.tar

a4bf22d258d8: Loading layer 88.58kB/88.58kB

d570c523a9c3: Loading layer 13.82kB/13.82kB

b4d08d55eb6e: Loading layer 145.8MB/145.8MB

Loaded image: calico/cni:v3.19.1

01c2272d9083: Loading layer 13.82kB/13.82kB

ad494b1a2a08: Loading layer 2.55MB/2.55MB

d62fbb2a27c3: Loading layer 5.629MB/5.629MB

2ac91a876a81: Loading layer 5.629MB/5.629MB

c923b81cc4f1: Loading layer 2.55MB/2.55MB

aad8b538640b: Loading layer 5.632kB/5.632kB

b3e6b5038755: Loading layer 5.378MB/5.378MB

Loaded image: calico/pod2daemon-flexvol:v3.19.1

4d2bd71609f0: Loading layer 170.8MB/170.8MB

dc8ea6c15e2e: Loading layer 13.82kB/13.82kB

Loaded image: calico/node:v3.19.1

ce740cb4fc7d: Loading layer 13.82kB/13.82kB

fe82f23d6e35: Loading layer 2.56kB/2.56kB

98738437610a: Loading layer 57.53MB/57.53MB

0443f62e8f15: Loading layer 3.082MB/3.082MB

Loaded image: calico/kube-controllers:v3.19.1

[root@node1 ~]#

- With all images in place, the list looks like this:

[root@node1 ~]# docker images | grep ca

calico/node v3.19.1 c4d75af7e098 6 weeks ago 168MB

calico/pod2daemon-flexvol v3.19.1 5660150975fb 6 weeks ago 21.7MB

calico/cni v3.19.1 5749e8b276f9 6 weeks ago 146MB

calico/kube-controllers v3.19.1 5d3d5ddc8605 6 weeks ago 60.6MB

registry.aliyuncs.com/google_containers/kube-scheduler v1.21.0 62ad3129eca8 2 months ago 50.6MB

[root@node1 ~]#
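The pull, pack and load steps above could be scripted roughly as follows. This is a sketch under stated assumptions: the helper manifest_images is illustrative, the demo manifest is a stand-in that contains only the image lines printed by grep image calico.yaml, and the archive name simply reuses calico-3.19-img.tar from this article. The script prints the docker commands rather than running them.

```shell
#!/usr/bin/env bash
set -euo pipefail

# manifest_images FILE: print each distinct image referenced in a manifest.
manifest_images() {
  awk '$1 == "image:" { print $2 }' "$1" | sort -u
}

# Demo manifest: a hypothetical stand-in holding only the image lines
# that `grep image calico.yaml` printed above.
demo_yaml="$(mktemp)"
cat > "$demo_yaml" <<'EOF'
          image: docker.io/calico/cni:v3.19.1
          image: docker.io/calico/cni:v3.19.1
          image: docker.io/calico/pod2daemon-flexvol:v3.19.1
          image: docker.io/calico/node:v3.19.1
          image: docker.io/calico/kube-controllers:v3.19.1
EOF

# Print (not run) the commands for the machine with internet access.
for img in $(manifest_images "$demo_yaml"); do
  echo "docker pull $img"
done
echo "docker save -o calico-3.19-img.tar $(manifest_images "$demo_yaml" | tr '\n' ' ')"
# On each offline node afterwards: docker load -i calico-3.19-img.tar
```

Pointing manifest_images at the real calico.yaml instead of the demo file gives the same list without copying image names by hand.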

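- Set the pod network CIDR [run on the master]

- Edit the configuration file calico.yaml and remove the # and the space in front of the two lines below [keep the indentation aligned].

- Change the 192.168 after value to the CIDR you used when initializing the cluster; I used 10.244.

[root@master ~]# cat calico.yaml | egrep -B 1 10.244

- name: CALICO_IPV4POOL_CIDR

value: "10.244.0.0/16"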

[root@master ~]#
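The manual edit described above can also be done with sed. A minimal sketch, assuming GNU sed (as on CentOS 7) and assuming the commented-out entry in the downloaded calico.yaml looks like the two lines in the demo file; the function name set_calico_cidr is illustrative.

```shell
#!/usr/bin/env bash
set -euo pipefail

# set_calico_cidr FILE CIDR
# Uncomments the CALICO_IPV4POOL_CIDR entry and sets its value, removing
# exactly "# " so the YAML indentation stays aligned.
set_calico_cidr() {
  local file="$1" cidr="$2"
  sed -i \
    -e 's|# - name: CALICO_IPV4POOL_CIDR|- name: CALICO_IPV4POOL_CIDR|' \
    -e "s|#   value: \"192.168.0.0/16\"|  value: \"${cidr}\"|" \
    "$file"
}

# Demo on a scratch file. ASSUMPTION: these lines mirror how the
# commented-out entry appears in the downloaded calico.yaml.
demo_yaml="$(mktemp)"
cat > "$demo_yaml" <<'EOF'
            - name: CALICO_DISABLE_FILE_LOGGING
              value: "true"
            # - name: CALICO_IPV4POOL_CIDR
            #   value: "192.168.0.0/16"
EOF

set_calico_cidr "$demo_yaml" "10.244.0.0/16"
grep -B 1 '10.244' "$demo_yaml"
```

It is worth checking the result with the same egrep -B 1 10.244 command used above before applying the manifest, since a misaligned entry makes the YAML invalid.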

- Then run:

kubectl apply -f calico.yaml

[root@master ~]# kubectl apply -f calico.yaml

configmap/calico-config created

customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/kubecontrollersconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created

clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrole.rbac.authorization.k8s.io/calico-node created

clusterrolebinding.rbac.authorization.k8s.io/calico-node created

daemonset.apps/calico-node created

serviceaccount/calico-node created

deployment.apps/calico-kube-controllers created

serviceaccount/calico-kube-controllers created

Warning: policy/v1beta1 PodDisruptionBudget is deprecated in v1.21+, unavailable in v1.25+; use policy/v1 PodDisruptionBudget

poddisruptionbudget.policy/calico-kube-controllers created

[root@master ~]#

- Running kubectl get nodes now shows that the STATUS is healthy (Ready):

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready control-plane,master 70m v1.21.0

node1 Ready <none> 45m v1.21.0

node2 Ready <none> 42m v1.21.0

[root@master ~]#

At this point the basic cluster setup is complete.

To see which nodes calico is running on, use: kubectl get pods -n kube-system -o wide [for reference only]

[root@master ~]# kubectl get pods -n kube-system -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

calico-kube-controllers-78d6f96c7b-p4svs 1/1 Running 0 98m 10.244.219.67 master

calico-node-cc4fc 1/1 Running 18 65m 192.168.59.144 node2

calico-node-stdfj 1/1 Running 20 98m 192.168.59.142 master

calico-node-zhhz7 1/1 Running 1 98m 192.168.59.143 node1

coredns-545d6fc579-6kb9x 1/1 Running 0 167m 10.244.219.65 master

coredns-545d6fc579-v74hg 1/1 Running 0 167m 10.244.219.66 master

etcd-master 1/1 Running 1 167m 192.168.59.142 master

kube-apiserver-master 1/1 Running 1 167m 192.168.59.142 master

kube-controller-manager-master 1/1 Running 11 167m 192.168.59.142 master

kube-proxy-45qgd 1/1 Running 1 65m 192.168.59.144 node2

kube-proxy-fdhpw 1/1 Running 1 167m 192.168.59.142 master

kube-proxy-zf6nt 1/1 Running 1 142m 192.168.59.143 node1

kube-scheduler-master 1/1 Running 12 167m 192.168.59.142 master

[root@master ~]#

==============================================================================

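- Current state: tab completion works for the kubectl command name itself but not for its subcommands; you can try it first.

[root@master ~]# kubectl ge

- Look up the relevant kubectl subcommand: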

[root@master ~]# kubectl --help | grep bash
