2024's Most Complete Hands-On Guide to Deploying Raft (KRaft) Kafka on k8s


Create a symlink:

```bash
ln -s /opt/helm/linux-amd64/helm /usr/local/bin/helm
```

Verify:

```bash
helm version
helm help
```

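#### 3) Configure the Helm chart

If you use Bitnami's Kafka Helm chart, you configure the Kafka cluster through a `values.yaml` file (here, the chart's own `kafka/values.yaml`). In that file you can enable **KRaft** mode and adjust other settings such as authentication and ports.

Add the chart repository:

```bash
helm repo add bitnami https://charts.bitnami.com/bitnami
```

Download the chart:

```bash
helm pull bitnami/kafka --version 26.0.0
```

Extract it:

```bash
tar -xf kafka-26.0.0.tgz
```

Edit the configuration:

```bash
vi kafka/values.yaml
```

Here is an example **values.yaml** configuration: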

```yaml
image:
  registry: registry.cn-hangzhou.aliyuncs.com
  repository: bigdata_cloudnative/kafka
  tag: 3.6.0-debian-11-r0

listeners:
  client:
    containerPort: 9092
    # The default is SASL_PLAINTEXT (authentication enabled); PLAINTEXT disables it
    protocol: PLAINTEXT
    name: CLIENT
    sslClientAuth: ""

controller:
  replicaCount: 3 # number of controllers
  persistence:
    storageClass: "kafka-controller-local-storage"
    size: "10Gi"
    # The directories must be created on the hosts in advance
    local:
      - name: kafka-controller-0
        host: "local-168-182-110"
        path: "/opt/bigdata/servers/kraft/kafka-controller/data1"
      - name: kafka-controller-1
        host: "local-168-182-111"
        path: "/opt/bigdata/servers/kraft/kafka-controller/data1"
      - name: kafka-controller-2
        host: "local-168-182-112"
        path: "/opt/bigdata/servers/kraft/kafka-controller/data1"

broker:
  replicaCount: 3 # number of brokers
  persistence:
    storageClass: "kafka-broker-local-storage"
    size: "10Gi"
    # The directories must be created on the hosts in advance
    local:
      - name: kafka-broker-0
        host: "local-168-182-110"
        path: "/opt/bigdata/servers/kraft/kafka-broker/data1"
      - name: kafka-broker-1
        host: "local-168-182-111"
        path: "/opt/bigdata/servers/kraft/kafka-broker/data1"
      - name: kafka-broker-2
        host: "local-168-182-112"
        path: "/opt/bigdata/servers/kraft/kafka-broker/data1"

service:
  type: NodePort
  nodePorts:
    # The default NodePort range is 30000-32767
    client: "32181"
    tls: "32182"

# Enable Prometheus to access the metrics endpoint
metrics:
  enabled: true

kraft:
  enabled: true
```
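The comments in `values.yaml` say that the data directories must exist on the hosts before installation. All three nodes (local-168-182-110, -111 and -112) use the same two paths, so one option is to run a small helper on each node. This is a minimal sketch. The function name `create_kraft_data_dirs` is hypothetical, and the base directory defaults to the path used in `values.yaml`.

```bash
# Hypothetical helper: create the local-PV data directories used in values.yaml.
# Run it on every node listed under controller/broker persistence.local.
create_kraft_data_dirs() {
  # Base directory; defaults to the path used in values.yaml.
  local base="${1:-/opt/bigdata/servers/kraft}" role
  for role in kafka-controller kafka-broker; do
    mkdir -p "$base/$role/data1" || return 1
  done
}
```

For example, run `create_kraft_data_dirs` as root on each node. If you changed the `path` values, pass a different base directory as the first argument.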

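Add the following template files to the chart so that the local PersistentVolumes and StorageClasses declared in `values.yaml` get created: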

* **kafka/templates/broker/pv.yaml**

```yaml
{{- range .Values.broker.persistence.local }}
---
# One PersistentVolume per entry in broker.persistence.local
apiVersion: v1
kind: PersistentVolume
metadata:
  name: {{ .name }}
  labels:
    name: {{ .name }}
spec:
  storageClassName: {{ $.Values.broker.persistence.storageClass }}
  capacity:
    storage: {{ $.Values.broker.persistence.size }}
  accessModes:
    - ReadWriteOnce
  local:
    path: {{ .path }}
  # Pin the volume to the node whose hostname matches .host
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
                - {{ .host }}
{{- end }}
```

* **kafka/templates/broker/storage-class.yaml**

```yaml
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: {{ .Values.broker.persistence.storageClass }}
provisioner: kubernetes.io/no-provisioner
```

* **kafka/templates/controller-eligible/pv.yaml**

```yaml
{{- range .Values.controller.persistence.local }}
---
# One PersistentVolume per entry in controller.persistence.local
apiVersion: v1
kind: PersistentVolume
metadata:
  name: {{ .name }}
  labels:
    name: {{ .name }}
spec:
  storageClassName: {{ $.Values.controller.persistence.storageClass }}
  capacity:
    storage: {{ $.Values.controller.persistence.size }}
  accessModes:
    - ReadWriteOnce
  local:
    path: {{ .path }}
  # Pin the volume to the node whose hostname matches .host
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
                - {{ .host }}
{{- end }}
```

* **kafka/templates/controller-eligible/storage-class.yaml**

```yaml
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: {{ .Values.controller.persistence.storageClass }}
provisioner: kubernetes.io/no-provisioner
```
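Before installing, you can render only the new templates and check that each `range` loop emits a separate PersistentVolume document for every `local` entry. With the `values.yaml` above, that means three per role. The sketch below uses Helm 3's `helm template ... --show-only`. The `count_pv_docs` function is a hypothetical helper: it counts the `---`-separated documents that contain `kind: PersistentVolume`. If the entries were merged into a single document (for example, because the `---` separator is missing), the count would not come out as three.

```bash
# Hypothetical helper: count YAML documents (split on lines starting with ---)
# that contain a top-level "kind: PersistentVolume" line. Reads from stdin.
count_pv_docs() {
  awk '
    /^---/ { seen = 0; next }                             # a new document starts
    /^kind: PersistentVolume$/ && !seen { n++; seen = 1 } # count each PV document once
    END { print n + 0 }
  '
}

# Usage (each should print 3 for the values.yaml above):
#   helm template kraft ./kafka -n kraft --show-only templates/broker/pv.yaml | count_pv_docs
#   helm template kraft ./kafka -n kraft --show-only templates/controller-eligible/pv.yaml | count_pv_docs
```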

#### 4) Deploy the Kafka cluster with Helm

First, prepare the image. Pull it from Docker Hub, retag it, and push it to the registry configured under `image` in `values.yaml`:

```bash
docker pull docker.io/bitnami/kafka:3.6.0-debian-11-r0
docker tag docker.io/bitnami/kafka:3.6.0-debian-11-r0 registry.cn-hangzhou.aliyuncs.com/bigdata_cloudnative/kafka:3.6.0-debian-11-r0
docker push registry.cn-hangzhou.aliyuncs.com/bigdata_cloudnative/kafka:3.6.0-debian-11-r0
```
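If you need to mirror more images the same way, you can wrap the three commands above in a small function. This is a minimal sketch. `mirror_image` is a hypothetical helper that keeps the image name and tag and swaps in the target registry and namespace. Pushing to the Aliyun registry presumably requires a prior `docker login`.

```bash
# Hypothetical helper wrapping the pull/tag/push steps above.
#   $1: source image, e.g. docker.io/bitnami/kafka:3.6.0-debian-11-r0
#   $2: target registry/namespace, e.g. registry.cn-hangzhou.aliyuncs.com/bigdata_cloudnative
mirror_image() {
  local src="$1" target="$2" dst
  # Keep "name:tag" (everything after the last "/") and prepend the target prefix.
  dst="$target/${src##*/}"
  docker pull "$src" && docker tag "$src" "$dst" && docker push "$dst"
}
```

For example: `mirror_image docker.io/bitnami/kafka:3.6.0-debian-11-r0 registry.cn-hangzhou.aliyuncs.com/bigdata_cloudnative`.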

Start the installation:

```bash
helm install kraft ./kafka -n kraft --create-namespace
```

Example output, including the chart's NOTES (captured here from a later `helm upgrade`, revision 3):

```
[root@local-168-182-110 KRaft-on-k8s]# helm upgrade kraft
Release "kraft" has been upgraded. Happy Helming!
NAME: kraft
LAST DEPLOYED: Sun Mar 24 20:05:04 2024
NAMESPACE: kraft
STATUS: deployed
REVISION: 3
TEST SUITE: None
NOTES:
CHART NAME: kafka
CHART VERSION: 26.0.0
APP VERSION: 3.6.0

** Please be patient while the chart is being deployed **

Kafka can be accessed by consumers via port 9092 on the following DNS name from within your cluster:

    kraft-kafka.kraft.svc.cluster.local

Each Kafka broker can be accessed by producers via port 9092 on the following DNS name(s) from within your cluster:
```
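The pods may still be starting after the release reports `STATUS: deployed`. The sketch below first waits for both StatefulSets, then runs a produce/consume round trip against the `kraft-kafka.kraft.svc.cluster.local:9092` address from the NOTES. Because `values.yaml` sets the client listener to PLAINTEXT, no SASL client configuration is passed. Several details are assumptions, not taken from the original:

- The StatefulSets are named `kraft-kafka-controller` and `kraft-kafka-broker`.
- The pod `kraft-kafka-broker-0` exists.
- The Kafka CLI scripts are on the image's `PATH`.
- The function names and the `smoke-test` topic are hypothetical.

```bash
# Hypothetical post-install checks for the "kraft" release in namespace "kraft".

# Wait until the controller and broker StatefulSets finish rolling out.
# Assumes the chart names them <release>-kafka-controller / <release>-kafka-broker.
wait_for_kraft() {
  local release="${1:-kraft}" ns="${2:-kraft}" role
  for role in controller broker; do
    kubectl -n "$ns" rollout status "statefulset/$release-kafka-$role" --timeout=300s || return 1
  done
  # Show pods and PVCs; each PVC should be Bound to one of the local PVs.
  kubectl -n "$ns" get pods,pvc -o wide
}

# Create a topic, produce one message and read it back through the client listener.
kafka_smoke_test() {
  local ns="${1:-kraft}" pod="${2:-kraft-kafka-broker-0}"
  local bootstrap="${3:-kraft-kafka.kraft.svc.cluster.local:9092}" topic="smoke-test"
  kubectl -n "$ns" exec "$pod" -- kafka-topics.sh --bootstrap-server "$bootstrap" \
    --create --if-not-exists --topic "$topic" --partitions 3 --replication-factor 3 || return 1
  echo "hello-kraft" | kubectl -n "$ns" exec -i "$pod" -- kafka-console-producer.sh \
    --bootstrap-server "$bootstrap" --topic "$topic" || return 1
  # Exits after one message, or after 30 s without any message.
  kubectl -n "$ns" exec "$pod" -- kafka-console-consumer.sh --bootstrap-server "$bootstrap" \
    --topic "$topic" --from-beginning --max-messages 1 --timeout-ms 30000
}
```

Run `wait_for_kraft`, then `kafka_smoke_test`. If the cluster is healthy, the consumer should print the `hello-kraft` message back.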

既有适合小白学习的零基础资料,也有适合3年以上经验的小伙伴深入学习提升的进阶课程,涵盖了95%以上大数据知识点,真正体系化!

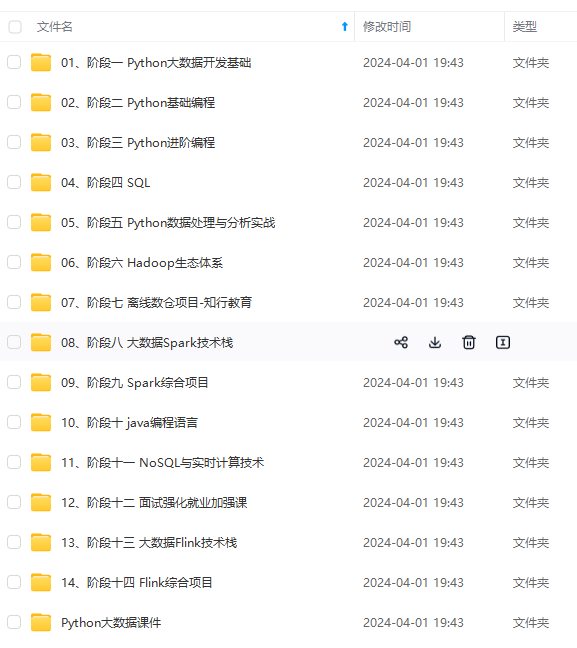

由于文件比较多,这里只是将部分目录截图出来,全套包含大厂面经、学习笔记、源码讲义、实战项目、大纲路线、讲解视频,并且后续会持续更新

1)]

[外链图片转存中…(img-gnghWX7n-1715633862731)]

既有适合小白学习的零基础资料,也有适合3年以上经验的小伙伴深入学习提升的进阶课程,涵盖了95%以上大数据知识点,真正体系化!

由于文件比较多,这里只是将部分目录截图出来,全套包含大厂面经、学习笔记、源码讲义、实战项目、大纲路线、讲解视频,并且后续会持续更新

更多推荐

已为社区贡献1条内容

已为社区贡献1条内容

所有评论(0)