[Kubernetes] Kubernetes security management in detail: configuring Role and ClusterRole authorization


- First, you need a complete, working cluster

[root@master ~]# kubectl get nodes -o wide

NAME     STATUS   ROLES    AGE    VERSION   INTERNAL-IP      EXTERNAL-IP   OS-IMAGE                KERNEL-VERSION          CONTAINER-RUNTIME
master   Ready    master   114d   v1.21.0   192.168.59.142   <none>        CentOS Linux 7 (Core)   3.10.0-957.el7.x86_64   docker://20.10.7
node1    Ready    <none>   114d   v1.21.0   192.168.59.143   <none>        CentOS Linux 7 (Core)   3.10.0-957.el7.x86_64   docker://20.10.7
node2    Ready    <none>   114d   v1.21.0   192.168.59.144   <none>        CentOS Linux 7 (Core)   3.10.0-957.el7.x86_64   docker://20.10.7

[root@master ~]#

[root@master ~]# kubectl cluster-info

Kubernetes control plane is running at https://192.168.59.142:6443

CoreDNS is running at https://192.168.59.142:6443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

Metrics-server is running at https://192.168.59.142:6443/api/v1/namespaces/kube-system/services/https:metrics-server:/proxy

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

[root@master ~]#

- Then prepare a separate VM on the same subnet to act as the client

[root@master2 ~]# ip a | grep 59

inet 192.168.59.151/24 brd 192.168.59.255 scope global noprefixroute ens33

[root@master2 ~]#

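Install command (the transcript below installs the kubelet package, as in my original run; what a client machine actually needs is kubectl, which comes from the kubectl package in the same repo)

[root@master2 ~]# yum install -y kubelet-1.21.0-0 --disableexcludes=kubernetes

# --disableexcludes=kubernetes: for this command, ignore the exclude= list configured for the repo named kubernetes

Start the service

[root@master2 ~]# systemctl enable kubelet && systemctl start kubelet

# Enable tab completion for kubectl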

[root@master2 ~]# head -n3 /etc/profile

/etc/profile

source <(kubectl completion bash)

[root@master2 ~]#

At this point the client has no cluster information yet; the exact message you see may differ.

[root@master2 ~]# kubectl get nodes

No resources found

[root@master2 ~]#

This write-up is long, so it is published in parts. Token authentication and kubeconfig authentication are covered in this post:

[Kubernetes] Kubernetes security management in detail: architecture overview, token authentication, and kubeconfig authentication

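- Configuration file:

/etc/kubernetes/manifests/kube-apiserver.yaml

The authorization mode is configured in this file, at around line 20. The available modes are listed below.

After changing the mode, restart the service for it to take effect: systemctl restart kubelet (the kubelet also watches this static Pod manifest directory, so the API server Pod is normally recreated on its own shortly after the file is saved)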

[root@master sefe]# cat -n /etc/kubernetes/manifests/kube-apiserver.yaml| egrep mode

20 - --authorization-mode=Node,RBAC

[root@master sefe]#

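- --authorization-mode=Node,RBAC # the default
- --authorization-mode=AlwaysAllow # allows every request, whether or not any permission was granted
- --authorization-mode=AlwaysDeny # denies every request, whether or not any permission was granted [this does not affect the admin credentials in /etc/kubernetes/admin.conf]
- --authorization-mode=ABAC # Attribute-Based Access Control; inflexible to manage and rarely used now
- --authorization-mode=RBAC # Role-Based Access Control; the most common choice, used in nearly all cases
- --authorization-mode=Node # the Node authorizer mainly handles requests that the kubelet on each node sends to the apiserver; everything else is generally authorized by RBAC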
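When several modes are listed, such as Node,RBAC, they are consulted in order. As a rough mental model (a sketch in Python, not the API server's actual code; every name in it is made up for illustration), each authorizer answers allow, deny, or no opinion, the first definite answer wins, and a request nobody has an opinion on is rejected:

```python
from typing import Callable, Dict, List, Optional

# A request is modelled as a plain dict; an authorizer returns
# True (allow), False (deny) or None (no opinion).
Request = Dict[str, str]
Authorizer = Callable[[Request], Optional[bool]]


def always_allow(request: Request) -> Optional[bool]:
    return True


def always_deny(request: Request) -> Optional[bool]:
    return False


def toy_rbac(request: Request) -> Optional[bool]:
    # Hypothetical stand-in for RBAC: it can only allow, never deny.
    granted = {("ccx", "list", "pods")}
    key = (request["user"], request["verb"], request["resource"])
    return True if key in granted else None


def authorize(chain: List[Authorizer], request: Request) -> bool:
    """Walk the chain in order; the first definite answer wins."""
    for authorizer in chain:
        decision = authorizer(request)
        if decision is not None:
            return decision
    return False  # nobody had an opinion, so the request is rejected


if __name__ == "__main__":
    req = {"user": "ccx", "verb": "delete", "resource": "pods"}
    print(authorize([toy_rbac], req))      # RBAC-only chain
    print(authorize([always_allow], req))  # AlwaysAllow chain
```

The toy RBAC authorizer never returns a deny, which mirrors the point made later in this post that RBAC rules are purely additive.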

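- AlwaysAllow and AlwaysDeny are self-explanatory: allow everything or deny everything

I'll use AlwaysAllow for a test.

The user currently has a binding, so delete that first.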

[root@master sefe]# kubectl get clusterrolebindings.rbac.authorization.k8s.io test1

NAME ROLE AGE

test1 ClusterRole/cluster-admin 28m

[root@master sefe]# kubectl delete clusterrolebindings.rbac.authorization.k8s.io test1

clusterrolebinding.rbac.authorization.k8s.io "test1" deleted

[root@master sefe]#

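I continue testing with the kubeconfig file kc1. Read the kubeconfig authentication post mentioned above first, otherwise this part will be hard to follow.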

[root@master sefe]# ls

ca.crt ccx.crt ccx.csr ccx.key csr.yaml kc1

[root@master sefe]#

[root@master sefe]# kubectl --kubeconfig=kc1 get pods

Error from server (Forbidden): pods is forbidden: User "ccx" cannot list resource "pods" in API group "" in the namespace "default"

[root@master sefe]#

- Configuration file

Change the mode to AlwaysAllow, then restart the service.

[root@master sefe]# vi /etc/kubernetes/manifests/kube-apiserver.yaml

[root@master sefe]# cat -n /etc/kubernetes/manifests/kube-apiserver.yaml| egrep mode

20 #- --authorization-mode=Node,RBAC

21 --authorization-mode=AlwaysAllow

[root@master sefe]#

[root@master sefe]# !sys

systemctl restart kubelet

[root@master sefe]#

[root@master ~]# systemctl restart kubelet

- Test

After the restart, the following error persisted for a long time, because the apiserver never came back up.

[root@master ~]# systemctl restart kubelet

[root@master ~]#

[root@master ~]# kubectl get pods

The connection to the server 192.168.59.142:6443 was refused - did you specify the right host or port?

[root@master ~]#

The apiserver container stays in the Exited state and never comes up.

[root@master kubernetes]# docker ps -a | grep api

525821586ed5 4d217480042e "kube-apiserver --ad…" 15 hours ago Exited (137) 7 minutes ago k8s_kube-apiserver_kube-apiserver-master_kube-system_654a890f23facb6552042e41f67f4aef_1

6b64a8bfc748 registry.aliyuncs.com/google_containers/pause:3.4.1 "/pause" 15 hours ago Up 15 hours k8s_POD_kube-apiserver-master_kube-system_654a890f23facb6552042e41f67f4aef_0

[root@master kubernetes]#

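- I could not finish this test. After the change the cluster broke: the apiserver never came back, and the kubelet kept logging the errors below (the same ones show up in /var/log/messages). I did not track down the cause at the time. Judging from the cat -n output above, one likely culprit is that the new line 21 lacks the leading "- " list marker that line 20 has, which would make it an invalid entry in the container's command list; I have not re-verified this. Either way, it is enough to know these modes exist; allow-all and deny-all are not recommended for normal use.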

[root@master ~]# systemctl status kubelet

● kubelet.service - kubelet: The Kubernetes Node Agent

Loaded: loaded (/usr/lib/systemd/system/kubelet.service; enabled; vendor preset: disabled)

Drop-In: /usr/lib/systemd/system/kubelet.service.d

└─10-kubeadm.conf

Active: active (running) since Thu 2021-11-04 09:55:26 CST; 55s ago

Docs: https://kubernetes.io/docs/

Main PID: 29495 (kubelet)

Tasks: 45

Memory: 64.8M

CGroup: /system.slice/kubelet.service

├─29495 /usr/bin/kubelet --bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.conf --kubeconfig=/etc/kubernetes/kubelet.conf --config=/var/lib/kubelet/config.yaml --network-plugin=cni --pod-infra-container-image=regi…

└─30592 /opt/cni/bin/calico

Nov 04 09:56:19 master kubelet[29495]: I1104 09:56:19.238570 29495 kubelet.go:461] "Kubelet nodes not sync"

Nov 04 09:56:19 master kubelet[29495]: I1104 09:56:19.250440 29495 kubelet.go:461] "Kubelet nodes not sync"

Nov 04 09:56:19 master kubelet[29495]: I1104 09:56:19.394574 29495 kubelet.go:461] "Kubelet nodes not sync"

Nov 04 09:56:19 master kubelet[29495]: I1104 09:56:19.809471 29495 kubelet.go:461] "Kubelet nodes not sync"

Nov 04 09:56:20 master kubelet[29495]: I1104 09:56:20.206978 29495 kubelet.go:461] "Kubelet nodes not sync"

Nov 04 09:56:20 master kubelet[29495]: I1104 09:56:20.237387 29495 kubelet.go:461] "Kubelet nodes not sync"

Nov 04 09:56:20 master kubelet[29495]: I1104 09:56:20.250606 29495 kubelet.go:461] "Kubelet nodes not sync"

Nov 04 09:56:20 master kubelet[29495]: I1104 09:56:20.395295 29495 kubelet.go:461] "Kubelet nodes not sync"

Nov 04 09:56:20 master kubelet[29495]: E1104 09:56:20.501094 29495 controller.go:144] failed to ensure lease exists, will retry in 7s, error: Get "https://192.168.59.142:6443/apis/coordination.k8s.io/v1/namespace…onnection refused

Nov 04 09:56:20 master kubelet[29495]: I1104 09:56:20.809833 29495 kubelet.go:461] "Kubelet nodes not sync"

Hint: Some lines were ellipsized, use -l to show in full.

[root@master ~]#

The errors in the system log are the same as above:

[root@master ~]# tail -f /var/log/messages
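In the cat -n output earlier, the added line 21 appears without the leading "- " that line 20 has. If that is what was really in the file (I can't confirm it from the transcript alone), the manifest's command list would be broken. A cheap check before saving an edited static Pod manifest is to make sure every flag line is still a list item. The sketch below is a plain-text heuristic in Python; it does not parse YAML, and the function name is made up for illustration:

```python
from typing import List, Tuple


def flags_missing_list_marker(manifest_text: str) -> List[Tuple[int, str]]:
    """Return (line_number, text) for flag lines that are not list items."""
    problems = []
    for number, line in enumerate(manifest_text.splitlines(), start=1):
        stripped = line.strip()
        if stripped.startswith("#"):
            continue  # commented-out lines are ignored
        if stripped.startswith("--"):
            # A valid entry looks like "- --flag=value"; a bare "--flag=value"
            # line is not an item of the command list.
            problems.append((number, stripped))
    return problems


if __name__ == "__main__":
    sample = (
        "    - kube-apiserver\n"
        "    #- --authorization-mode=Node,RBAC\n"
        "    --authorization-mode=AlwaysAllow\n"
        "    - --client-ca-file=/etc/kubernetes/pki/ca.crt\n"
    )
    for number, text in flags_missing_list_marker(sample):
        print(f"line {number}: missing '- ' before {text}")
```

This only catches the one mistake described here; a real YAML parser or a dry run would be a stronger check.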

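If you're interested, see the authorization overview in the official Kubernetes documentation.

View the permissions of the admin ClusterRole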

[root@master ~]# kubectl describe clusterrole admin

Name: admin

Labels: kubernetes.io/bootstrapping=rbac-defaults

Annotations: rbac.authorization.kubernetes.io/autoupdate: true

PolicyRule:

Resources Non-Resource URLs Resource Names Verbs

rolebindings.rbac.authorization.k8s.io [] [] [create delete deletecollection get list patch update watch]

roles.rbac.authorization.k8s.io [] [] [create delete deletecollection get list patch update watch]

configmaps [] [] [create delete deletecollection patch update get list watch]

endpoints [] [] [create delete deletecollection patch update get list watch]

persistentvolumeclaims [] [] [create delete deletecollection patch update get list watch]

pods [] [] [create delete deletecollection patch update get list watch]

replicationcontrollers/scale [] [] [create delete deletecollection patch update get list watch]

replicationcontrollers [] [] [create delete deletecollection patch update get list watch]

services [] [] [create delete deletecollection patch update get list watch]

daemonsets.apps [] [] [create delete deletecollection patch update get list watch]

deployments.apps/scale [] [] [create delete deletecollection patch update get list watch]

deployments.apps [] [] [create delete deletecollection patch update get list watch]

replicasets.apps/scale [] [] [create delete deletecollection patch update get list watch]

replicasets.apps [] [] [create delete deletecollection patch update get list watch]

statefulsets.apps/scale [] [] [create delete deletecollection patch update get list watch]

statefulsets.apps [] [] [create delete deletecollection patch update get list watch]

horizontalpodautoscalers.autoscaling [] [] [create delete deletecollection patch update get list watch]

cronjobs.batch [] [] [create delete deletecollection patch update get list watch]

jobs.batch [] [] [create delete deletecollection patch update get list watch]

daemonsets.extensions [] [] [create delete deletecollection patch update get list watch]

deployments.extensions/scale [] [] [create delete deletecollection patch update get list watch]

deployments.extensions [] [] [create delete deletecollection patch update get list watch]

ingresses.extensions [] [] [create delete deletecollection patch update get list watch]

networkpolicies.extensions [] [] [create delete deletecollection patch update get list watch]

replicasets.extensions/scale [] [] [create delete deletecollection patch update get list watch]

replicasets.extensions [] [] [create delete deletecollection patch update get list watch]

replicationcontrollers.extensions/scale [] [] [create delete deletecollection patch update get list watch]

ingresses.networking.k8s.io [] [] [create delete deletecollection patch update get list watch]

networkpolicies.networking.k8s.io [] [] [create delete deletecollection patch update get list watch]

poddisruptionbudgets.policy [] [] [create delete deletecollection patch update get list watch]

deployments.apps/rollback [] [] [create delete deletecollection patch update]

deployments.extensions/rollback [] [] [create delete deletecollection patch update]

localsubjectaccessreviews.authorization.k8s.io [] [] [create]

pods/attach [] [] [get list watch create delete deletecollection patch update]

pods/exec [] [] [get list watch create delete deletecollection patch update]

pods/portforward [] [] [get list watch create delete deletecollection patch update]

pods/proxy [] [] [get list watch create delete deletecollection patch update]

secrets [] [] [get list watch create delete deletecollection patch update]

services/proxy [] [] [get list watch create delete deletecollection patch update]

bindings [] [] [get list watch]

events [] [] [get list watch]

limitranges [] [] [get list watch]

namespaces/status [] [] [get list watch]

namespaces [] [] [get list watch]

persistentvolumeclaims/status [] [] [get list watch]

pods/log [] [] [get list watch]

pods/status [] [] [get list watch]

replicationcontrollers/status [] [] [get list watch]

resourcequotas/status [] [] [get list watch]

resourcequotas [] [] [get list watch]

services/status [] [] [get list watch]

controllerrevisions.apps [] [] [get list watch]

daemonsets.apps/status [] [] [get list watch]

deployments.apps/status [] [] [get list watch]

replicasets.apps/status [] [] [get list watch]

statefulsets.apps/status [] [] [get list watch]

horizontalpodautoscalers.autoscaling/status [] [] [get list watch]

cronjobs.batch/status [] [] [get list watch]

jobs.batch/status [] [] [get list watch]

daemonsets.extensions/status [] [] [get list watch]

deployments.extensions/status [] [] [get list watch]

ingresses.extensions/status [] [] [get list watch]

replicasets.extensions/status [] [] [get list watch]

nodes.metrics.k8s.io [] [] [get list watch]

pods.metrics.k8s.io [] [] [get list watch]

ingresses.networking.k8s.io/status [] [] [get list watch]

poddisruptionbudgets.policy/status [] [] [get list watch]

serviceaccounts [] [] [impersonate create delete deletecollection patch update get list watch]

[root@master ~]#

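Basic concepts

- RBAC (Role-Based Access Control) lets you configure authorization policy dynamically through the Kubernetes API.
- It was introduced in Kubernetes v1.5, was promoted to beta in v1.6 (when it also became the default for kubeadm installs), and went GA in v1.8. Compared with the other authorization modes, RBAC has these advantages:
  - It fully covers both resource and non-resource permissions in the cluster.
  - It is implemented entirely by a handful of API objects which, like any other API object, can be managed with kubectl or the API.
  - It can be adjusted at runtime without restarting the API server.
- To use RBAC, add --authorization-mode=RBAC to the API server's startup flags.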

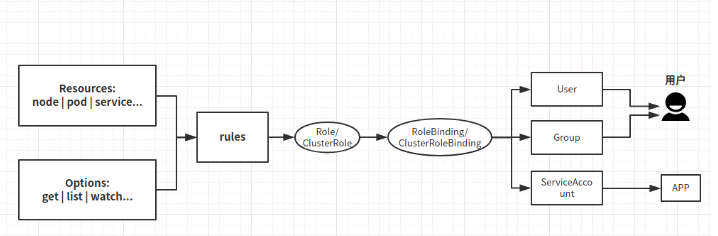

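How it works

- The flow is shown in the diagram [the items below break it down]

- Role

  A role is a set of permissions. Permissions are purely additive; there are no deny rules.

  - Role: grants access within one specific namespace. A Role can only authorize resources inside its own namespace.
  - ClusterRole: grants access across all namespaces and to cluster-scoped resources. Anything cluster-level needs a ClusterRole.

- Role binding

  - RoleBinding: binds a role to subjects within one namespace. It normally references a Role; it may also reference a ClusterRole, in which case the ClusterRole's permissions apply only inside the RoleBinding's namespace.
  - ClusterRoleBinding: binds a ClusterRole to subjects cluster-wide.

- Subject

  - User
  - Group
  - ServiceAccount

- A user, group, or service account is bound to a role and thereby receives that role's permissions, much like RAM authorization on Alibaba Cloud. Keep in mind that a Role is only effective in its own namespace, while a ClusterRole can apply to all namespaces.

- In the usual pairing, RoleBinding goes with Role and ClusterRoleBinding goes with ClusterRole.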
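The relationship between roles, bindings, and subjects can be sketched as a small data model. This is a deliberately simplified illustration in Python, not how the API server is implemented: it only handles User subjects, ignores wildcards and resourceNames, and every class and function name is an assumption of mine.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Rule:
    api_groups: List[str]
    resources: List[str]
    verbs: List[str]


@dataclass
class RoleLike:
    name: str
    rules: List[Rule]
    namespace: Optional[str] = None  # None models a ClusterRole


@dataclass
class Binding:
    role: RoleLike
    users: List[str]
    namespace: Optional[str] = None  # None models a ClusterRoleBinding


def allowed(bindings: List[Binding], user: str, verb: str,
            group: str, resource: str, namespace: str) -> bool:
    """True if any binding grants the request; there are no deny rules."""
    for binding in bindings:
        if user not in binding.users:
            continue
        # A RoleBinding only applies inside its own namespace.
        if binding.namespace is not None and binding.namespace != namespace:
            continue
        for rule in binding.role.rules:
            if (group in rule.api_groups and resource in rule.resources
                    and verb in rule.verbs):
                return True
    return False


if __name__ == "__main__":
    role1 = RoleLike("role1", [Rule([""], ["pods"], ["get", "list"])], namespace="safe")
    rbind1 = Binding(role1, ["ccx"], namespace="safe")
    print(allowed([rbind1], "ccx", "list", "", "pods", "safe"))
    print(allowed([rbind1], "ccx", "list", "", "pods", "default"))
```

The two calls at the bottom mirror the test later in this post, where the same user can list pods in the safe namespace but is refused in default.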

Field values for ClusterRole and Role rules

- 1. apiGroups

  This one matters: it names the API group the resources belong to. kubectl api-versions lists entries in two forms, a bare version such as v1 (the core group) and group/version such as apps/v1. apiGroups takes only the group part, and the core group is written as the empty string "".

  "", "apps", "autoscaling", "batch"

- 2. resources

  "services", "endpoints", "pods", "secrets", "configmaps", "crontabs", "deployments", "jobs", "nodes", "rolebindings", "clusterroles", "daemonsets", "replicasets", "statefulsets", "horizontalpodautoscalers", "replicationcontrollers", "cronjobs"

- 3. verbs

  "get", "list", "watch", "create", "update", "patch", "delete" (there is no "exec" verb; kubectl exec needs the "create" verb on the "pods/exec" subresource)

- 4. How apiGroups and resources pair up

  - apiGroups: [""] # the empty string "" denotes the core API group
    resources: ["pods", "pods/log", "pods/exec", "pods/attach", "pods/status", "events", "replicationcontrollers", "services", "configmaps", "persistentvolumeclaims"]
  - apiGroups: ["apps"]
    resources: ["deployments", "daemonsets", "statefulsets", "replicasets"]
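To make the pairing concrete: the group to put in apiGroups is whatever comes before the slash in an object's apiVersion, and a resource such as pods/exec is a resource plus a subresource. A small Python sketch of both conventions (the helper names are mine, not part of any Kubernetes client):

```python
from typing import Optional, Tuple


def api_group(api_version: str) -> str:
    """'apps/v1' -> 'apps'; 'v1' (core group) -> ''."""
    group, sep, _version = api_version.rpartition("/")
    return group if sep else ""


def split_resource(resource: str) -> Tuple[str, Optional[str]]:
    """'pods/exec' -> ('pods', 'exec'); 'pods' -> ('pods', None)."""
    name, sep, sub = resource.partition("/")
    return (name, sub if sep else None)


if __name__ == "__main__":
    for version in ["v1", "apps/v1", "rbac.authorization.k8s.io/v1"]:
        print(repr(version), "->", repr(api_group(version)))
    print(split_resource("pods/exec"))
```

So a Deployment manifest with apiVersion: apps/v1 is matched by a rule whose apiGroups contains "apps", while a Pod with apiVersion: v1 needs "".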

Role walkthrough

Creating a Role

- Besides the method I use below, you can also write the Role file by hand like this

kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  # restrict access to the minio namespace
  namespace: minio
  # role name
  name: role-minio-service-minio
# controls whether the panels of the dashboard's namespace module can be viewed
rules:
- apiGroups: [""] # the empty string "" denotes the core API group
  #resources: ["pods", "pods/log", "pods/exec", "pods/attach", "pods/status", "events", "replicationcontrollers", "services", "configmaps", "persistentvolumeclaims"]
  resources: ["namespaces", "pods", "pods/log", "pods/exec", "pods/attach", "pods/status", "services"]
  verbs: ["get", "watch", "list", "create", "update", "patch", "delete"]
- apiGroups: ["apps"]
  resources: ["deployments", "daemonsets", "statefulsets", "replicasets"]
  verbs: ["get", "list", "watch"]

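- Generate the Role's configuration file

We can generate the YAML file directly like this; any later change is then just an edit to the YAML file.

For the values that can be changed in the generated YAML, see the field descriptions above, which list the available options.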

[root@master ~]# kubectl create role role1 --verb=get,list --resource=pods --dry-run=client -o yaml > role1.yaml

[root@master ~]# cat role1.yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  creationTimestamp: null
  name: role1
rules:
- apiGroups:
  - ""
  resources:
  - pods
  # to give several values, add one list item per value, as with get and list below
  verbs:
  - get
  - list

[root@master ~]#

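For example, I now add the create verb and the nodes resource. (Nodes are cluster-scoped, so listing them in a namespaced Role does not actually grant access to them, as the get nodes test further down shows.)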

[root@master ~]# mv role1.yaml sefe/

[root@master ~]# cd sefe/

[root@master sefe]# vi role1.yaml

[root@master sefe]# cat role1.yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  creationTimestamp: null
  name: role1
rules:
- apiGroups:
  - ""
  resources:
  - pods
  - nodes
  verbs:
  - get
  - list
  - create

[root@master sefe]#
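If you would rather generate the manifest from a script than edit YAML by hand, the same object can be written as JSON, which kubectl apply -f also accepts. A minimal sketch using only the Python standard library; build_role is a hypothetical helper of mine, and the safe namespace is the one used in the tests later in this post:

```python
import json
from typing import Dict, List


def build_role(name: str, namespace: str, rules: List[Dict]) -> Dict:
    """Assemble a Role manifest as a plain dict."""
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "Role",
        "metadata": {"name": name, "namespace": namespace},
        "rules": rules,
    }


if __name__ == "__main__":
    role = build_role(
        "role1",
        "safe",
        [{"apiGroups": [""], "resources": ["pods"], "verbs": ["get", "list", "create"]}],
    )
    # Save the output as role1.json and apply it with: kubectl apply -f role1.json
    print(json.dumps(role, indent=2))
```

Setting metadata.namespace in the file means the Role lands in that namespace regardless of the kubectl context's default.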

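- Create the Role

After later edits, simply apply the file again; it updates the existing Role in place, so there is no need to delete and recreate it.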

[root@master ~]# kubectl apply -f role1.yaml

role.rbac.authorization.k8s.io/role1 created

[root@master ~]#

[root@master ~]# kubectl get role

NAME CREATED AT

role1 2021-11-05T07:34:45Z

[root@master ~]#

- View the details

You can see the permissions this Role now carries.

[root@master sefe]# kubectl describe role role1

Name: role1

Labels: <none>

Annotations: <none>

PolicyRule:

Resources Non-Resource URLs Resource Names Verbs

jobs [] [] [get list create]

nodes [] [] [get list create]

pods [] [] [get list create]

[root@master sefe]#

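Creating a RoleBinding [binding a user]

- Note: users do not belong to any namespace.

- Before creating a RoleBinding, have the Role and the user name ready.

# rbind1 below is a name of your choosing

# --role= names the Role to bind

# --user= names the user to authorize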

[root@master ~]# kubectl create rolebinding rbind1 --role=role1 --user=ccx

rolebinding.rbac.authorization.k8s.io/rbind1 created

[root@master ~]#

[root@master ~]# kubectl get rolebindings.rbac.authorization.k8s.io

NAME ROLE AGE

rbind1 Role/role1 5s

[root@master ~]#

[root@master sefe]# kubectl describe rolebindings.rbac.authorization.k8s.io rbind1

Name: rbind1

Labels: <none>

Annotations: <none>

Role:

Kind: Role

Name: role1

Subjects:

Kind Name Namespace

User ccx

[root@master sefe]#
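For reference, the object that the kubectl create rolebinding command above produces has roughly the shape below. This is a sketch in Python that builds the manifest as a dict (printable as JSON for kubectl apply -f); build_rolebinding is a name I made up, and the safe namespace is an assumption taken from the tests that follow.

```python
import json
from typing import Dict


def build_rolebinding(name: str, namespace: str, role: str, user: str) -> Dict:
    """Assemble a RoleBinding that binds one User to one Role."""
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "RoleBinding",
        "metadata": {"name": name, "namespace": namespace},
        "subjects": [
            # Users are not namespaced, so a User subject has no namespace field.
            {"kind": "User", "name": user, "apiGroup": "rbac.authorization.k8s.io"}
        ],
        "roleRef": {
            "kind": "Role",
            "name": role,
            "apiGroup": "rbac.authorization.k8s.io",
        },
    }


if __name__ == "__main__":
    print(json.dumps(build_rolebinding("rbind1", "safe", "role1", "ccx"), indent=2))
```

The binding itself is namespaced even though the user is not, which is why the grant only takes effect in the binding's namespace.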

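- Binding a ServiceAccount to a Role

I did the above with commands. The following, which I found in other material online, does the same thing with a file; give it a try if you're interested.

[root@app01 k8s-user]# vim role-bind-minio-service-minio.yaml

kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  #namespace: minio
  name: role-bind-minio-service-monio # name of your choosing
subjects:
- kind: ServiceAccount
  # a ServiceAccount subject needs its namespace; uncomment and set it
  #namespace: minio
  name: username # the ServiceAccount to authorize
roleRef:
  kind: Role
  # role name
  name: rolename # name of the Role
  apiGroup: rbac.authorization.k8s.io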

[root@app01 k8s-user]# kubectl apply -f role-bind-minio-service-minio.yaml

rolebinding.rbac.authorization.k8s.io/role-bind-minio-service-monio created

[root@app01 k8s-user]# kubectl get rolebinding -n minio -owide

NAME AGE ROLE USERS GROUPS SERVICEACCOUNTS

role-bind-minio-service-monio 29s Role/role-minio-service-minio minio/service-minio

[root@app01 k8s-user]#

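Testing

- Note: I already authorized the ccx user and prepared its kubeconfig file [see the steps in the kubeconfig authentication post], so I test directly from a host outside the cluster [the cluster address was fully configured in that post, so I can use it as is].

- Query test

We granted ccx permissions on pods above, yet using them directly still fails:

[root@master2 ~]# kubectl --kubeconfig=kc1 get pods

Error from server (Forbidden): pods is forbidden: User "ccx" cannot list resource "pods" in API group "" in the namespace "default"

# This is because, as explained earlier, a Role only takes effect in its own namespace [above we were working in the safe namespace]

# So we need to specify the namespace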

[root@master2 ~]# kubectl --kubeconfig=kc1 get pods -n safe

No resources found in safe namespace.

[root@master2 ~]#

Of course, this works from anywhere that has the kc1 file [here, on the cluster master]

[root@master sefe]# ls

ca.crt ccx.crt ccx.csr ccx.key csr.yaml kc1 role1.yaml

[root@master sefe]# kubectl --kubeconfig=kc1 get pods -n safe

No resources found in safe namespace.

[root@master sefe]#

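- Pod creation test

To make the effect easier to see, I keep working from the host outside the cluster.

# Copy a Pod manifest over

[root@master sefe]# scp ../pod1.yaml 192.168.59.151:~

root@192.168.59.151's password:

pod1.yaml 100% 431 424.6KB/s 00:00

[root@master sefe]#

Back on the test machine, creating a pod works. (Listing nodes is still refused: nodes are cluster-scoped, so a namespaced Role cannot grant access to them.)

[root@master2 ~]# kubectl --kubeconfig=kc1 get nodes -n safe

Error from server (Forbidden): nodes is forbidden: User "ccx" cannot list resource "nodes" in API group "" at the cluster scope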

[root@master2 ~]# kubectl --kubeconfig=kc1 get pods -n safe

No resources found in safe namespace.

[root@master2 ~]#

[root@master2 ~]# export KUBECONFIG=kc1

[root@master2 ~]#

[root@master2 ~]# kubectl apply -f pod1.yaml -n safe

pod/pod1 created

[root@master2 ~]#

[root@master2 ~]# kubectl get pods -n safe

NAME READY STATUS RESTARTS AGE

pod1 1/1 Running 0 8s

[root@master2 ~]#

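If you omit the namespace, the pod is not created "locally": kubectl always sends the request to the API server, and the pod lands in the namespace of the current kubeconfig context (default unless the context sets one). In my run that pod stayed Pending. (That this create succeeded at all suggests ccx held more than role1's permissions at that moment; see the troubleshooting notes further down.)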

[root@master2 ~]# kubectl apply -f pod1.yaml

pod/pod1 created

[root@master2 ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

pod1 0/1 Pending 0 3s

[root@master2 ~]#

Back on the cluster master, you can see the pod was created successfully

[root@master sefe]# kubectl get pods

NAME READY STATUS RESTARTS AGE

pod1 1/1 Running 0 32s

[root@master sefe]#

- Pod deletion test

Deletion fails, because the Role does not grant the delete verb.

[root@master2 ~]# kubectl delete -f pod1.yaml -n safe

Error from server (Forbidden): error when deleting "pod1.yaml": pods "pod1" is forbidden: User "ccx" cannot delete resource "pods" in API group "" in the namespace "safe"

[root@master2 ~]#

No problem, just add the delete verb.

# On the cluster master

[root@master sefe]# cat role1.yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  creationTimestamp: null
  name: role1
rules:
- apiGroups:
  - ""
  resources:
  - nodes
  - pods
  - jobs
  verbs:
  - get
  - list
  - create
  - delete

[root@master sefe]# kubectl apply -f role1.yaml

role.rbac.authorization.k8s.io/role1 configured

[root@master sefe]#

# On the test machine

[root@master2 ~]# kubectl delete -f pod1.yaml -n safe

pod "pod1" deleted

[root@master2 ~]#

[root@master2 ~]# kubectl get pods -n safe

No resources found in safe namespace.

[root@master2 ~]#

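Handling the "Error from server (Forbidden)" error

- The error looks like this

[root@master2 ~]# kubectl --kubeconfig=kc1 get pods -n safe

Error from server (Forbidden): pods is forbidden: User "ccx" cannot list resource "pods" in API group "" in the namespace "safe"

[root@master2 ~]#

- I asked the course instructor about this earlier

The instructor never gave me an answer, perhaps being just as puzzled, and in the end I worked it out myself.

- In this case the problem was not the Role file but the authorization of the ccx user in kc1. To troubleshoot, go back to the kubeconfig post and follow it step by step from the start [pay particular attention to the CSR and the authorization steps].

- Summary: on reflection, the kubeconfig setup probably has nothing to do with this. If the user is authorized the way that post does it, the Role no longer seems to matter [a Role exists to grant permissions, but that approach bound admin rights directly to the user, and since RBAC permissions are additive that grant covers everything the Role would]. So my fix above was probably wrong, and the instructor never corrected it either, which left me feeling the several thousand yuan of course fees were not well spent. If you find that some permissions work and others do not, investigate the Role and its bindings rather than adopting my workaround. [I still believe the Role configuration steps above are correct; my cluster has had too many experiments run on it and some setting is probably messed up. When I have time to build a fresh cluster I will redo the Role experiments.]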
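When chasing a Forbidden error, it helps to pull the exact user, verb, resource, API group, and namespace out of the message, since those are the five things a rule and its binding must match. A small Python sketch; it assumes the message uses plain ASCII double quotes, as kubectl prints them, and parse_forbidden is a name I made up:

```python
import re
from typing import Dict, Optional

FORBIDDEN = re.compile(
    r'User "(?P<user>[^"]*)" cannot (?P<verb>\S+) resource "(?P<resource>[^"]*)" '
    r'in API group "(?P<group>[^"]*)"'
    r'(?: in the namespace "(?P<namespace>[^"]*)"| at the cluster scope)?'
)


def parse_forbidden(message: str) -> Optional[Dict[str, Optional[str]]]:
    """Extract the request attributes from a kubectl Forbidden message."""
    match = FORBIDDEN.search(message)
    return match.groupdict() if match else None


if __name__ == "__main__":
    msg = (
        'Error from server (Forbidden): pods is forbidden: User "ccx" cannot '
        'list resource "pods" in API group "" in the namespace "safe"'
    )
    print(parse_forbidden(msg))
```

With those fields in hand, running kubectl auth can-i list pods -n safe --as ccx on the master checks the same request directly against the authorizer, independent of the kubeconfig file.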

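Granting different permissions to different resources in one Role

- Each entry under rules (each one starting with apiGroups) is an independent rule that grants one set of verbs on one set of resources. To give different components different permissions, just add as many rule entries as you need.

- For example, to grant permissions on pods and deployments separately, write it as below [two rule entries are enough]

Written this way, the user can create Deployments and manage their replica counts.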

[root@master sefe]# cat role2.yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  creationTimestamp: null
  name: role1
rules:
- apiGroups:
  - ""
  resources:
  - pods
  verbs:
  - get
  - list
  - create
  - delete
- apiGroups:
  - "apps"
  resources:
  - deployments
  - deployments/scale
  verbs:
  - get
  - list
  - create
  - delete
  - patch

[root@master sefe]#
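Because the rule entries are independent and additive, the effective permissions are simply the union of verbs per resource, which is what kubectl describe role prints. A Python sketch of that flattening, using the two rules from role2.yaml; the function name is mine, and the key format copies the describe output shown earlier (for example deployments.apps/scale):

```python
from collections import defaultdict
from typing import Dict, List, Set


def effective_verbs(rules: List[Dict]) -> Dict[str, Set[str]]:
    """Union the verbs of every rule, keyed the way kubectl describe shows resources."""
    table: Dict[str, Set[str]] = defaultdict(set)
    for rule in rules:
        for group in rule["apiGroups"]:
            for resource in rule["resources"]:
                # describe prints "deployments.apps" for group "apps"
                # and plain "pods" for the core group "".
                name, _, sub = resource.partition("/")
                key = f"{name}.{group}" if group else name
                if sub:
                    key += f"/{sub}"
                table[key].update(rule["verbs"])
    return dict(table)


if __name__ == "__main__":
    role2_rules = [
        {"apiGroups": [""], "resources": ["pods"],
         "verbs": ["get", "list", "create", "delete"]},
        {"apiGroups": ["apps"], "resources": ["deployments", "deployments/scale"],
         "verbs": ["get", "list", "create", "delete", "patch"]},
    ]
    for resource, verbs in sorted(effective_verbs(role2_rules).items()):
        print(resource, sorted(verbs))
```

Note that patch ends up only on the deployments entries, not on pods, because each rule's verbs apply only to that rule's resources.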

- That covers using Roles; keep experimenting on your own

Now delete the Role and the RoleBinding, then move on to the ClusterRole tests.

[root@master sefe]# kubectl delete -f role1.yaml

role.rbac.authorization.k8s.io "role1" deleted

[root@master sefe]# kubectl delete rolebindings.rbac.authorization.k8s.io rbind1

rolebinding.rbac.authorization.k8s.io "rbind1" deleted

[root@master sefe]#

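ClusterRole walkthrough

Creating a ClusterRole

- Besides the method I use below, you can also write it like this

kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  # a ClusterRole is a cluster-scoped object, so no "namespace" field is defined here
  # role name
  name: cluster-role-paas-basic-service-minio
# controls whether the panels of the dashboard's namespace module can be viewed
rules:
- apiGroups: ["rbac.authorization.k8s.io", ""] # the empty string "" denotes the core API group
  #resources: ["pods", "pods/log", "pods/exec", "pods/attach", "pods/status", "events", "replicationcontrollers", "services", "configmaps", "persistentvolumeclaims"]
  resources: ["pods", "pods/log", "pods/exec", "pods/attach", "pods/status", "services"]
  verbs: ["get", "watch", "list", "create", "update", "patch", "delete"]
- apiGroups: ["apps"]
  # note: namespaces belongs to the core group "", so listing it under "apps" matches nothing
  resources: ["namespaces", "deployments", "daemonsets", "statefulsets"]
  verbs: ["get", "list", "watch"]

- Note: this is configured the same way as a Role. The only difference is that kind in the YAML file changes from Role to ClusterRole.

- Generate the ClusterRole's configuration file

We can generate the YAML file directly like this; any later change is then just an edit to the YAML file.

For the values that can be changed in the generated YAML, see the field descriptions above, which list the available options.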

[root@master sefe]# kubectl create clusterrole crole1 --verb=get,create,delete --resource=deploy,pod,svc --dry-run=client -o yaml > crole1.yaml

[root@master sefe]# cat crole1.yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  creationTimestamp: null
  name: crole1
rules:
- apiGroups:
  - ""
  resources:
  - pods
  - services
  verbs:
  - get
  - create
  - delete
- apiGroups:
  - apps
  resources:
  - deployments
  verbs:
  - get
  - create
  - delete

[root@master sefe]#

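- Create the ClusterRole

After later edits, simply apply the file again; it updates the existing ClusterRole in place, so there is no need to delete and recreate it.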

[root@master sefe]# kubectl apply -f crole1.yaml

clusterrole.rbac.authorization.k8s.io/crole1 created

[root@master sefe]#

[root@master sefe]# kubectl get clusterrole crole1

NAME CREATED AT

crole1 2021-11-05T10:09:04Z

[root@master sefe]#

- View the details

[root@master sefe]# kubectl describe clusterrole crole1

Name: crole1

Labels: <none>

Annotations: <none>

PolicyRule:

Resources Non-Resource URLs Resource Names Verbs

pods [] [] [get create delete]

services [] [] [get create delete]

deployments.apps [] [] [get create delete]

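Creating a ClusterRoleBinding [binding a user]

- Note: this takes effect in all namespaces.

- Before creating a ClusterRoleBinding, have the ClusterRole and the user name ready.

# cbind1 below is a name of your choosing

# --clusterrole= names the ClusterRole to bind

# --user= names the user to authorize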

[root@master ~]#

[root@master sefe]# kubectl create clusterrolebinding cbind1 --clusterrole=crole1 --user=ccx

clusterrolebinding.rbac.authorization.k8s.io/cbind1 created

[root@master sefe]#

[root@master sefe]# kubectl get clusterrolebindings.rbac.authorization.k8s.io cbind1

NAME ROLE AGE

cbind1 ClusterRole/crole1 16s

[root@master sefe]#

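As noted, this applies to all namespaces. A ClusterRoleBinding is itself a cluster-scoped object, so the -n flag is simply ignored and the same binding shows up whichever namespace you pass: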

[root@master sefe]# kubectl get clusterrolebindings.rbac.authorization.k8s.io -n default cbind1

NAME ROLE AGE

cbind1 ClusterRole/crole1 28s

[root@master sefe]#

[root@master sefe]# kubectl get clusterrolebindings.rbac.authorization.k8s.io -n ds cbind1

NAME ROLE AGE

cbind1 ClusterRole/crole1 35s

[root@master sefe]#

- View the details

[root@master sefe]# kubectl describe clusterrolebindings.rbac.authorization.k8s.io cbind1

Name: cbind1

Labels: <none>

Annotations: <none>

Role:

Kind: ClusterRole

Name: crole1

Subjects:

Kind Name Namespace

User ccx

[root@master sefe]#

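- Besides the ClusterRole defined above, you can also give the user the built-in cluster-admin ClusterRole directly (this grants full control of the whole cluster, so use it with care)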

[root@master sefe]# kubectl create clusterrolebinding cbind2 --clusterrole=cluster-admin --user=ccx

clusterrolebinding.rbac.authorization.k8s.io/cbind2 created

[root@master sefe]#

[root@master sefe]# kubectl get clusterrolebindings.rbac.authorization.k8s.io cbind2

NAME ROLE AGE

cbind2 ClusterRole/cluster-admin 23s

[root@master sefe]# kubectl describe clusterrolebindings.rbac.authorization.k8s.io cbind2

Name: cbind2

Labels: <none>

Annotations: <none>

Role:

Kind: ClusterRole

Name: cluster-admin

Subjects:

Kind Name Namespace

User ccx

[root@master sefe]#

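- Binding a ServiceAccount to a ClusterRole

I did the above with commands. The following, which I found in other material online, does the same thing with a file; give it a try if you're interested. Note that this file uses kind: RoleBinding, so the ClusterRole's permissions are granted only in the RoleBinding's own namespace; for a cluster-wide grant the kind would have to be ClusterRoleBinding.

[root@app01 k8s-user]# vim cluster-role-bind-paas-basic-service-minio.yaml

kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: cluster-role-bind-paas-basic-service-monio # name of your choosing
subjects:
- kind: ServiceAccount
  namespace: minio
  name: username # the ServiceAccount to authorize
roleRef:
  kind: ClusterRole
  # role name
  name: cluster-role # name of the ClusterRole
  apiGroup: rbac.authorization.k8s.io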

[root@app01 k8s-user]# kubectl apply -f cluster-role-bind-paas-basic-service-minio.yaml

rolebinding.rbac.authorization.k8s.io/cluster-role-bind-paas-basic-service-monio created
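One detail that helps when testing ServiceAccount bindings: to the authorizer, a ServiceAccount is just a user with a fixed name format, plus membership in two service-account groups (among others). A tiny Python sketch of those conventions; the helper names are mine, and service-minio in the minio namespace is the account shown in the rolebinding listing earlier:

```python
from typing import List


def service_account_username(namespace: str, name: str) -> str:
    """User name the API server assigns to a ServiceAccount."""
    return f"system:serviceaccount:{namespace}:{name}"


def service_account_groups(namespace: str) -> List[str]:
    """Service-account groups every ServiceAccount in the namespace belongs to."""
    return ["system:serviceaccounts", f"system:serviceaccounts:{namespace}"]


if __name__ == "__main__":
    print(service_account_username("minio", "service-minio"))
    print(service_account_groups("minio"))
```

That user name can be passed to kubectl auth can-i with --as to check what a binding like the one above actually grants, for example in the minio namespace versus any other.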
