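[kubernetes] Highly available k8s cluster: deployment, installation, and concepts in detail [including offline deployment], and how clients reach the cluster through haproxy (1)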


(3/3): openssl-libs-1.0.2k-22.el7_9.x86_64.rpm | 1.2 MB 00:00:00

Total 353 kB/s | 2.5 MB 00:00:07

exiting because “Download Only” specified

[root@ccx yum.repos.d]# cd /root/haproxy/

[root@ccx haproxy]# ls

haproxy-1.5.18-9.el7_9.1.x86_64.rpm openssl-libs-1.0.2k-22.el7_9.x86_64.rpm

openssl-1.0.2k-22.el7_9.x86_64.rpm

[root@ccx haproxy]#

- Copy the 3 packages above into the internal (offline) network and install them

[root@haproxy-164 haproxy]# ls

haproxy-1.5.18-9.el7_9.1.x86_64.rpm openssl-libs-1.0.2k-22.el7_9.x86_64.rpm

openssl-1.0.2k-22.el7_9.x86_64.rpm

[root@haproxy-164 haproxy]# rpm -ivhU * --nodeps --force

准备中… ################################# [100%]

正在升级/安装…

1:openssl-libs-1:1.0.2k-22.el7_9 ################################# [ 20%]

2:haproxy-1.5.18-9.el7_9.1 ################################# [ 40%]

3:openssl-1:1.0.2k-22.el7_9 ################################# [ 60%]

正在清理/删除…

4:openssl-1:1.0.2k-8.el7 ################################# [ 80%]

5:openssl-libs-1:1.0.2k-8.el7 ################################# [100%]

[root@haproxy-164 haproxy]#

Edit the configuration file

- Append the following 5 lines to the end of the configuration file /etc/haproxy/haproxy.cfg

[root@haproxy-164 haproxy]# tail -n 5 /etc/haproxy/haproxy.cfg

listen k8s-lb *:6443

mode tcp

balance roundrobin

server s1 192.168.59.163:6443 weight 1

server s2 192.168.59.162:6443 weight 1

[root@haproxy-164 haproxy]#

k8s-lb # a name of your choice

192.168.59.163/162 # IPs of the 2 master nodes
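The 5 lines above follow a fixed pattern, so they can be generated rather than typed by hand. The sketch below is only an illustration: `render_k8s_listen` is a hypothetical helper name, and it prints to stdout instead of touching /etc/haproxy/haproxy.cfg.

```bash
# Hypothetical helper (not part of haproxy): print a "listen" section
# that forwards one TCP port to a list of master IPs.
# Usage: render_k8s_listen <name> <port> <master-ip>...
render_k8s_listen() {
  name=$1
  port=$2
  shift 2
  printf 'listen %s *:%s\n' "$name" "$port"
  printf '    mode tcp\n'
  printf '    balance roundrobin\n'
  i=1
  for ip in "$@"; do
    # One "server" line per master, named s1, s2, ...
    printf '    server s%d %s:%s weight 1\n' "$i" "$ip" "$port"
    i=$((i + 1))
  done
}

# Values taken from this article's environment.
render_k8s_listen k8s-lb 6443 192.168.59.163 192.168.59.162
```

The output could be appended to the config with `>> /etc/haproxy/haproxy.cfg`. Note that the server lines in this article carry no `check` keyword, so haproxy does not health-check the masters; adding `check` to each server line is something to consider if a failed master should be taken out of rotation.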

- Then start the service and enable it at boot

[root@haproxy-164 haproxy]# systemctl enable haproxy.service --now

Created symlink from /etc/systemd/system/multi-user.target.wants/haproxy.service to /usr/lib/systemd/system/haproxy.service.

[root@haproxy-164 haproxy]#

[root@haproxy-164 haproxy]# systemctl is-active haproxy.service

active

[root@haproxy-164 haproxy]#

- Port 6443 is now being listened on

[root@haproxy-164 haproxy]# netstat -ntlp | grep 6443

tcp 0 0 0.0.0.0:6443 0.0.0.0:* LISTEN 2504/haproxy

[root@haproxy-164 haproxy]#

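- Perform the following steps on both etcd nodes

Install etcd

- With internet access, run this directly

yum -y install etcd

- Without internet access, download the package first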

[root@ccx haproxy]# mkdir /root/etcd

[root@ccx haproxy]# yum -y install etcd --downloadonly --downloaddir=/root/etcd

Loaded plugins: fastestmirror, langpacks

Loading mirror speeds from cached hostfile

 * base: mirror.lzu.edu.cn

 * extras: mirrors.aliyun.com

 * updates: mirrors.aliyun.com

Resolving Dependencies

--> Running transaction check

---> Package etcd.x86_64 0:3.3.11-2.el7.centos will be installed

--> Finished Dependency Resolution

Dependencies Resolved

==========================================================================================

Package Arch Version Repository Size

==========================================================================================

Installing:

etcd x86_64 3.3.11-2.el7.centos extras 10 M

Transaction Summary

==========================================================================================

Install 1 Package

Total download size: 10 M

Installed size: 45 M

Background downloading packages, then exiting:

warning: /root/etcd/etcd-3.3.11-2.el7.centos.x86_64.rpm.55125.tmp: Header V3 RSA/SHA256 Signature, key ID f4a80eb5: NOKEY

Public key for etcd-3.3.11-2.el7.centos.x86_64.rpm.55125.tmp is not installed

etcd-3.3.11-2.el7.centos.x86_64.rpm | 10 MB 00:07:24

exiting because “Download Only” specified

[root@ccx haproxy]# cd /root/etcd/

[root@ccx etcd]# ls

etcd-3.3.11-2.el7.centos.x86_64.rpm

[root@ccx etcd]#

- Then copy it into the internal network and install it

Both etcd nodes need it installed

[root@etcd-161 etcd]# ls

etcd-3.3.11-2.el7.centos.x86_64.rpm

[root@etcd-161 etcd]#

[root@etcd-161 etcd]# rpm -ivhU * --nodeps --force

准备中… ################################# [100%]

正在升级/安装…

1:etcd-3.3.11-2.el7.centos ################################# [100%]

[root@etcd-161 etcd]#

[root@etcd-161 etcd]# scp etcd-3.3.11-2.el7.centos.x86_64.rpm 192.168.59.160:~

The authenticity of host ‘192.168.59.160 (192.168.59.160)’ can’t be established.

ECDSA key fingerprint is SHA256:zRtVBoNePoRXh9aA8eppKwwduS9Rjjr/kT5a7zijzjE.

ECDSA key fingerprint is MD5:b8:53:cc:da:86:2a:97:dc:bd:64:6b:b1:d0:f3:02:ce.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added ‘192.168.59.160’ (ECDSA) to the list of known hosts.

root@192.168.59.160’s password:

Permission denied, please try again.

root@192.168.59.160’s password:

etcd-3.3.11-2.el7.centos.x86_64.rpm 100% 10MB 38.8MB/s 00:00

[root@etcd-161 etcd]#

# The other node

[root@etcd-160 ~]# mkdir etcd

[root@etcd-160 ~]# mv etcd-3.3.11-2.el7.centos.x86_64.rpm etcd

[root@etcd-160 ~]# cd etcd

[root@etcd-160 etcd]# ls

etcd-3.3.11-2.el7.centos.x86_64.rpm

[root@etcd-160 etcd]#

[root@etcd-160 etcd]# rpm -ivhU * --nodeps --force

准备中… ################################# [100%]

正在升级/安装…

1:etcd-3.3.11-2.el7.centos ################################# [100%]

[root@etcd-160 etcd]#

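Edit the configuration file

- Edit it on both nodes; watch the hostname in the prompt [the IPs must be changed to match each node]

If anything here is unclear, see my earlier post on installing etcd, which explains it in detail; I won't repeat it here

Installing and using etcd, the core component of k8s: snapshots and etcd commands explained [single node, multiple nodes, and adding a new node]

- Edit the configuration file

Remember to change the IPs and the ETCD_NAME line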

[root@etcd-161 ~]# ip a | grep 59

inet 192.168.59.161/24 brd 192.168.59.255 scope global ens32

[root@etcd-161 ~]#

[root@etcd-161 ~]# cat /etc/etcd/etcd.conf

ETCD_DATA_DIR="/var/lib/etcd/cluster.etcd"

ETCD_LISTEN_PEER_URLS="http://192.168.59.161:2380,http://localhost:2380"

ETCD_LISTEN_CLIENT_URLS="http://192.168.59.161:2379,http://localhost:2379"

ETCD_NAME="etcd-161"

ETCD_INITIAL_ADVERTISE_PEER_URLS="http://192.168.59.161:2380"

ETCD_ADVERTISE_CLIENT_URLS="http://localhost:2379,http://192.168.59.161:2379"

ETCD_INITIAL_CLUSTER="etcd-161=http://192.168.59.161:2380,etcd-160=http://192.168.59.160:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

[root@etcd-161 ~]#

The other node

[root@etcd-160 etcd]# ip a | grep 59

inet 192.168.59.160/24 brd 192.168.59.255 scope global ens32

[root@etcd-160 etcd]#

[root@etcd-160 etcd]# cat /etc/etcd/etcd.conf

ETCD_DATA_DIR="/var/lib/etcd/cluster.etcd"

ETCD_LISTEN_PEER_URLS="http://192.168.59.160:2380,http://localhost:2380"

ETCD_LISTEN_CLIENT_URLS="http://192.168.59.160:2379,http://localhost:2379"

ETCD_NAME="etcd-160"

ETCD_INITIAL_ADVERTISE_PEER_URLS="http://192.168.59.160:2380"

ETCD_ADVERTISE_CLIENT_URLS="http://localhost:2379,http://192.168.59.160:2379"

ETCD_INITIAL_CLUSTER="etcd-161=http://192.168.59.161:2380,etcd-160=http://192.168.59.160:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

[root@etcd-160 etcd]#
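The two etcd.conf files differ only in the member name and IP, so one template can produce both. A minimal sketch, assuming the same data directory and cluster token as above; `render_etcd_conf` is a hypothetical helper that prints to stdout and does not write /etc/etcd/etcd.conf itself.

```bash
# Hypothetical helper: print an etcd.conf for one cluster member.
# Usage: render_etcd_conf <member-name> <member-ip> <initial-cluster>
render_etcd_conf() {
  name=$1
  ip=$2
  cluster=$3
  cat <<EOF
ETCD_DATA_DIR="/var/lib/etcd/cluster.etcd"
ETCD_LISTEN_PEER_URLS="http://${ip}:2380,http://localhost:2380"
ETCD_LISTEN_CLIENT_URLS="http://${ip}:2379,http://localhost:2379"
ETCD_NAME="${name}"
ETCD_INITIAL_ADVERTISE_PEER_URLS="http://${ip}:2380"
ETCD_ADVERTISE_CLIENT_URLS="http://localhost:2379,http://${ip}:2379"
ETCD_INITIAL_CLUSTER="${cluster}"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF
}

# The member list is identical on every node; only name and IP change.
CLUSTER="etcd-161=http://192.168.59.161:2380,etcd-160=http://192.168.59.160:2380"
render_etcd_conf etcd-161 192.168.59.161 "$CLUSTER"
```

On etcd-160 the call would be `render_etcd_conf etcd-160 192.168.59.160 "$CLUSTER"`, redirected to /etc/etcd/etcd.conf once the output has been reviewed.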

- Then start the etcd service

[root@etcd-161 ~]# systemctl start etcd

[root@etcd-161 ~]# systemctl is-active etcd

active

[root@etcd-161 ~]# systemctl enable etcd

Created symlink from /etc/systemd/system/multi-user.target.wants/etcd.service to /usr/lib/systemd/system/etcd.service.

[root@etcd-161 ~]#

[root@etcd-160 etcd]# systemctl start etcd

[root@etcd-160 etcd]# systemctl is-active etcd

active

[root@etcd-160 etcd]# systemctl enable etcd

Created symlink from /etc/systemd/system/multi-user.target.wants/etcd.service to /usr/lib/systemd/system/etcd.service.

[root@etcd-160 etcd]#
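A side note on cluster size, as a rough sketch rather than part of the original walkthrough: etcd needs a majority of members (a quorum) to accept writes. The arithmetic below shows why the 2-member cluster used in this article stays up only while both members are healthy, and why odd sizes such as 3 are the usual choice. The function names are made up for illustration.

```bash
# Majority (quorum) size for an etcd cluster of N members.
etcd_quorum() {
  echo $(( $1 / 2 + 1 ))
}

# How many members may fail while a quorum is still available.
etcd_fault_tolerance() {
  echo $(( $1 - ($1 / 2 + 1) ))
}

for n in 1 2 3 5; do
  echo "members=$n quorum=$(etcd_quorum "$n") tolerated_failures=$(etcd_fault_tolerance "$n")"
done

# On a real etcd node (not run here), membership can be inspected with:
#   etcdctl member list
```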

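Environment setup [on both masters and workers]

- If you are not familiar with cluster configuration, read this article first

[kubernetes] Setting up a k8s cluster in detail [creating a cluster, joining a cluster, removing nodes, resetting a cluster…] [including the offline method]

- Name resolution

The masters and worker nodes must all have identical name resolution, with every node listed on every other node.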

[root@master1-163 ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.59.163 master1-163

192.168.59.162 master2-162

192.168.59.165 worker-165

[root@master1-163 ~]#

[root@master1-163 ~]# scp /etc/hosts 192.168.59.162:/etc/hosts

The authenticity of host ‘192.168.59.162 (192.168.59.162)’ can’t be established.

ECDSA key fingerprint is SHA256:zRtVBoNePoRXh9aA8eppKwwduS9Rjjr/kT5a7zijzjE.

ECDSA key fingerprint is MD5:b8:53:cc:da:86:2a:97:dc:bd:64:6b:b1:d0:f3:02:ce.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added ‘192.168.59.162’ (ECDSA) to the list of known hosts.

root@192.168.59.162’s password:

hosts 100% 238 297.8KB/s 00:00

[root@master1-163 ~]#

[root@master1-163 ~]# scp /etc/hosts 192.168.59.165:/etc/hosts

The authenticity of host ‘192.168.59.165 (192.168.59.165)’ can’t be established.

ECDSA key fingerprint is SHA256:zRtVBoNePoRXh9aA8eppKwwduS9Rjjr/kT5a7zijzjE.

ECDSA key fingerprint is MD5:b8:53:cc:da:86:2a:97:dc:bd:64:6b:b1:d0:f3:02:ce.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added ‘192.168.59.165’ (ECDSA) to the list of known hosts.

root@192.168.59.165’s password:

hosts 100% 238 294.3KB/s 00:00

[root@master1-163 ~]#

- Disable swap

Do this on every node

[root@master1-163 ~]# swapoff -a ; sed -i '/swap/d' /etc/fstab

[root@master1-163 ~]#

[root@master1-163 ~]# swapon -s

[root@worker-165 ~]# swapon -s

文件名 类型 大小 已用 权限

/dev/sda2 partition 10485756 0 -1

[root@worker-165 ~]#

[root@worker-165 ~]# swapoff -a ; sed -i '/swap/d' /etc/fstab

[root@worker-165 ~]# swapon -s

[root@worker-165 ~]#

[root@master2-162 ~]# swapon -s

文件名 类型 大小 已用 权限

/dev/sda2 partition 10485756 0 -1

[root@master2-162 ~]# swapoff -a ; sed -i '/swap/d' /etc/fstab

[root@master2-162 ~]#

[root@master2-162 ~]# swapon -s

[root@master2-162 ~]#
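The `sed -i '/swap/d'` used above deletes every line of /etc/fstab that contains the word swap, comments included. A gentler variant, shown here only as a sketch, comments the active swap entries out so they can be restored later. `comment_out_swap` is a hypothetical helper; it prints the result to stdout and never edits the file in place.

```bash
# Print fstab content with active swap entries commented out.
# Lines that are already comments are left untouched.
# Usage: comment_out_swap <fstab-path>
comment_out_swap() {
  sed -E 's/^([^#].*[[:space:]]swap[[:space:]].*)$/#\1/' "$1"
}

# Demo on a throwaway sample instead of the real /etc/fstab.
sample=$(mktemp)
printf '%s\n' \
  '/dev/sda1 / xfs defaults 0 0' \
  '/dev/sda2 swap swap defaults 0 0' \
  '# note: swap disabled for kubelet' > "$sample"
comment_out_swap "$sample"
rm -f "$sample"
```

After checking the output, it could be written back with a redirect to a temporary file followed by a move over /etc/fstab; `swapoff -a` is still needed to turn swap off for the running system.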

- Disable the firewall

Run on both masters and worker nodes

[root@master1-163 ~]# systemctl stop firewalld.service ; systemctl disable firewalld.service

Removed symlink /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed symlink /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

[root@master1-163 ~]#

- Disable SELinux

Run on both masters and worker nodes

[root@master2-162 docker-ce]# cat /etc/sysconfig/selinux | grep dis

disabled - No SELinux policy is loaded.

SELINUX=disabled

[root@master2-162 docker-ce]#

[root@master2-162 docker-ce]# getenforce

Disabled

[root@master2-162 docker-ce]#

- Configure a registry mirror

Run on both masters and worker nodes

[root@master2-162 docker-ce]# cat > /etc/docker/daemon.json <<EOF

{

"registry-mirrors": ["https://frz7i079.mirror.aliyuncs.com"]

}

EOF

[root@master2-162 docker-ce]# systemctl restart docker

[root@master2-162 docker-ce]#

- Set kernel parameters

Run on both masters and worker nodes

[root@worker-165 docker-ce]# cat > /etc/sysctl.d/k8s.conf <<EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

EOF

[root@worker-165 docker-ce]#

[root@worker-165 docker-ce]# sysctl -p /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

[root@worker-165 docker-ce]#
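Since this step has to be repeated on every node, a small check helps confirm that none was missed. The sketch below only inspects a file; `check_k8s_sysctl` is a hypothetical helper name. One assumption worth stating: the two net.bridge keys exist only when the br_netfilter kernel module is loaded, so `modprobe br_netfilter` may be needed before `sysctl -p` succeeds.

```bash
# Return 0 if the given sysctl file sets all three keys to 1,
# otherwise report the first missing key on stderr and return 1.
# Usage: check_k8s_sysctl <path-to-conf>
check_k8s_sysctl() {
  for key in net.bridge.bridge-nf-call-ip6tables \
             net.bridge.bridge-nf-call-iptables \
             net.ipv4.ip_forward; do
    grep -Eq "^${key}[[:space:]]*=[[:space:]]*1[[:space:]]*$" "$1" || {
      echo "missing or not set to 1: $key" >&2
      return 1
    }
  done
}

# Demo against a temporary copy of the expected content.
conf=$(mktemp)
cat > "$conf" <<EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF
check_k8s_sysctl "$conf" && echo "sysctl file looks complete"
rm -f "$conf"
```

On a node the call would be `check_k8s_sysctl /etc/sysctl.d/k8s.conf`.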

Install docker-ce [on both masters and workers]

- First configure a yum repository

[root@ccx etcd]# wget ftp://ftp.rhce.cc/k8s/* -P /etc/yum.repos.d/

--2021-11-26 16:47:07-- ftp://ftp.rhce.cc/k8s/*

=> '/etc/yum.repos.d/.listing'

Resolving ftp.rhce.cc (ftp.rhce.cc)… 101.37.152.41

…

- With internet access, install it directly

- Without internet access, download the packages first

[root@ccx etcd]# yum -y install docker-ce --downloadonly --downloaddir=/root/docker-ce

Loaded plugins: fastestmirror, langpacks

docker-ce-stable | 3.5 kB 00:00:00

epel | 4.7 kB 00:00:00

kubernetes/signature | 844 B 00:00:00

Retrieving key from https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg

Importing GPG key 0x307EA071:

Userid : "Rapture Automatic Signing Key (cloud-rapture-signing-key-2021-03-01-08_01_0

…大量输出

(30/30): docker-ce-cli-20.10.11-3.el7.x86_64.rpm | 29 MB 00:00:24

Total 2.4 MB/s | 110 MB 00:00:44

exiting because “Download Only” specified

[root@ccx etcd]# cd /root/docker-ce/

[root@ccx docker-ce]# ls

containerd.io-1.4.12-3.1.el7.x86_64.rpm

container-selinux-2.119.2-1.911c772.el7_8.noarch.rpm

cryptsetup-2.0.3-6.el7.x86_64.rpm

cryptsetup-libs-2.0.3-6.el7.x86_64.rpm

cryptsetup-python-2.0.3-6.el7.x86_64.rpm

docker-ce-20.10.11-3.el7.x86_64.rpm

docker-ce-cli-20.10.11-3.el7.x86_64.rpm

docker-ce-rootless-extras-20.10.11-3.el7.x86_64.rpm

docker-scan-plugin-0.9.0-3.el7.x86_64.rpm

fuse3-libs-3.6.1-4.el7.x86_64.rpm

fuse-overlayfs-0.7.2-6.el7_8.x86_64.rpm

libgudev1-219-78.el7_9.3.x86_64.rpm

libseccomp-2.3.1-4.el7.x86_64.rpm

libselinux-2.5-15.el7.x86_64.rpm

libselinux-python-2.5-15.el7.x86_64.rpm

libselinux-utils-2.5-15.el7.x86_64.rpm

libsemanage-2.5-14.el7.x86_64.rpm

libsemanage-python-2.5-14.el7.x86_64.rpm

libsepol-2.5-10.el7.x86_64.rpm

lz4-1.8.3-1.el7.x86_64.rpm

policycoreutils-2.5-34.el7.x86_64.rpm

policycoreutils-python-2.5-34.el7.x86_64.rpm

selinux-policy-3.13.1-268.el7_9.2.noarch.rpm

selinux-policy-targeted-3.13.1-268.el7_9.2.noarch.rpm

setools-libs-3.3.8-4.el7.x86_64.rpm

slirp4netns-0.4.3-4.el7_8.x86_64.rpm

systemd-219-78.el7_9.3.x86_64.rpm

systemd-libs-219-78.el7_9.3.x86_64.rpm

systemd-python-219-78.el7_9.3.x86_64.rpm

systemd-sysv-219-78.el7_9.3.x86_64.rpm

[root@ccx docker-ce]#

- Copy them into the internal network and install

Install on both masters and workers

[root@master1-163 docker-ce]# ls | wc -l

30

[root@master1-163 docker-ce]# du -sh

110M .

[root@master1-163 docker-ce]#

[root@master1-163 docker-ce]# rpm -ivhU * --nodeps --force

警告:containerd.io-1.4.12-3.1.el7.x86_64.rpm: 头V4 RSA/SHA512 Signature, 密钥 ID 621e9f35: NOKEY

准备中… ################################# [100%]

正在升级/安装…

1:libsepol-2.5-10.el7 ################################# [ 2%]

2:libselinux-2.5-15.el7 ################################# [ 5%]

3:libsemanage-2.5-14.el7 ################################# [ 7%]

4:lz4-1.8.3-1.el7 ################################# [ 9%]

5:systemd-libs-219-78.el7_9.3 ################################# [ 12%]

6:libseccomp-2.3.1-4.el7 ################################# [ 14%]

7:cryptsetup-libs-2.0.3-6.el7 ################################# [ 16%]

8:systemd-219-78.el7_9.3 ################################# [ 19%]

9:libselinux-utils-2.5-15.el7 ################################# [ 21%]

10:policycoreutils-2.5-34.el7 ################################# [ 23%]

11:selinux-policy-3.13.1-268.el7_9.2################################# [ 26%]

12:docker-scan-plugin-0:0.9.0-3.el7 ################################# [ 28%]

13:docker-ce-cli-1:20.10.11-3.el7 ################################# [ 30%]

14:selinux-policy-targeted-3.13.1-26################################# [ 33%]

15:slirp4netns-0.4.3-4.el7_8 ################################# [ 35%]

16:libsemanage-python-2.5-14.el7 ################################# [ 37%]

17:libselinux-python-2.5-15.el7 ################################# [ 40%]

18:setools-libs-3.3.8-4.el7 ################################# [ 42%]

19:policycoreutils-python-2.5-34.el7################################# [ 44%]

20:container-selinux-2:2.119.2-1.911################################# [ 47%]

setsebool: SELinux is disabled.

21:containerd.io-1.4.12-3.1.el7 ################################# [ 49%]

22:fuse3-libs-3.6.1-4.el7 ################################# [ 51%]

23:fuse-overlayfs-0.7.2-6.el7_8 ################################# [ 53%]

24:docker-ce-rootless-extras-0:20.10################################# [ 56%]

25:docker-ce-3:20.10.11-3.el7 ################################# [ 58%]

26:systemd-python-219-78.el7_9.3 ################################# [ 60%]

27:systemd-sysv-219-78.el7_9.3 ################################# [ 63%]

28:cryptsetup-2.0.3-6.el7 ################################# [ 65%]

29:cryptsetup-python-2.0.3-6.el7 ################################# [ 67%]

30:libgudev1-219-78.el7_9.3 ################################# [ 70%]

正在清理/删除…

31:selinux-policy-targeted-3.13.1-16################################# [ 72%]

32:selinux-policy-3.13.1-166.el7 ################################# [ 74%]

33:systemd-sysv-219-42.el7 ################################# [ 77%]

34:policycoreutils-2.5-17.1.el7 ################################# [ 79%]

35:systemd-219-42.el7 ################################# [ 81%]

36:libselinux-utils-2.5-11.el7 ################################# [ 84%]

37:libsemanage-2.5-8.el7 ################################# [ 86%]

38:systemd-libs-219-42.el7 ################################# [ 88%]

39:libselinux-python-2.5-11.el7 ################################# [ 91%]

40:libselinux-2.5-11.el7 ################################# [ 93%]

41:libsepol-2.5-6.el7 ################################# [ 95%]

42:cryptsetup-libs-1.7.4-3.el7 ################################# [ 98%]

43:libseccomp-2.3.1-3.el7 ################################# [100%]

[root@master1-163 docker-ce]#

[root@master1-163 docker-ce]# scp * master2-162:/root/docker-ce

[root@master1-163 docker-ce]# scp * worker-165:/root/docker-ce

# The other master

[root@master2-162 docker-ce]# ls | wc -l

30

[root@master2-162 docker-ce]# du -sh

110M .

[root@master2-162 docker-ce]#

[root@master2-162 docker-ce]# rpm -ivhU * --nodeps --force

# Worker node

[root@worker-165 docker-ce]# ls | wc -l

30

[root@worker-165 docker-ce]# du -sh

110M .

[root@worker-165 docker-ce]#

[root@worker-165 docker-ce]# rpm -ivhU * --nodeps --force

- Start the docker service on all nodes

[root@worker-165 docker-ce]# systemctl enable docker --now

Created symlink from /etc/systemd/system/multi-user.target.wants/docker.service to /usr/lib/systemd/system/docker.service.

[root@worker-165 docker-ce]#

[root@worker-165 docker-ce]# systemctl is-active docker

active

[root@worker-165 docker-ce]#

[root@master1-163 docker-ce]# systemctl enable docker --now

Created symlink from /etc/systemd/system/multi-user.target.wants/docker.service to /usr/lib/systemd/system/docker.service.

[root@master1-163 docker-ce]# systemctl is-active docker

active

[root@master1-163 docker-ce]#

[root@master2-162 docker-ce]# systemctl enable docker --now

Created symlink from /etc/systemd/system/multi-user.target.wants/docker.service to /usr/lib/systemd/system/docker.service.

[root@master2-162 docker-ce]# systemctl is-active docker

active

[root@master2-162 docker-ce]#

Install kubelet [on both masters and workers]

- With internet access, run the command below directly

I am installing version 1.21.1 here

yum install -y kubelet-1.21.1-0 kubeadm-1.21.1-0 kubectl-1.21.1-0 --disableexcludes=kubernetes

- Without internet access, download the packages

[root@ccx kubelet]# yum install -y kubelet-1.21.1-0 kubeadm-1.21.1-0 kubectl-1.21.1-0 --disableexcludes=kubernetes --downloadonly --downloaddir=/root/kubelet

…

(11/11): libnetfilter_cthelper-1.0.0-11.el7.x86_64.rpm | 18 kB 00:00:05

Total 4.8 MB/s | 64 MB 00:00:13

exiting because “Download Only” specified

[root@ccx docker-ce]# cd /root/kubelet/

[root@ccx kubelet]# ls

3944a45bec4c99d3489993e3642b63972b62ed0a4ccb04cc7655ce0467fddfef-kubectl-1.21.1-0.x86_64.rpm

67ffa375b03cea72703fe446ff00963919e8fce913fbc4bb86f06d1475a6bdf9-cri-tools-1.19.0-0.x86_64.rpm

c47efa28c5935ed2ffad234e2b402d937dde16ab072f2f6013c71d39ab526f40-kubelet-1.21.1-0.x86_64.rpm

conntrack-tools-1.4.4-7.el7.x86_64.rpm

db7cb5cb0b3f6875f54d10f02e625573988e3e91fd4fc5eef0b1876bb18604ad-kubernetes-cni-0.8.7-0.x86_64.rpm

e0511a4d8d070fa4c7bcd2a04217c80774ba11d44e4e0096614288189894f1c5-kubeadm-1.21.1-0.x86_64.rpm

libnetfilter_conntrack-1.0.6-1.el7_3.x86_64.rpm

libnetfilter_cthelper-1.0.0-11.el7.x86_64.rpm

libnetfilter_cttimeout-1.0.0-7.el7.x86_64.rpm

libnetfilter_queue-1.0.2-2.el7_2.x86_64.rpm

socat-1.7.3.2-2.el7.x86_64.rpm

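- Then copy them into the internal network and install them, then enable kubelet at boot and start it

Repeat the steps below on all 3 nodes; I only show one here. Until kubeadm init (or kubeadm join) has run, kubelet has no configuration and keeps restarting, so the failed state in the status output below is expected at this stage.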

[root@master1-163 kubelet]# systemctl restart kubelet.service ;systemctl enable kubelet.service

Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.

[root@master1-163 kubelet]#

[root@master1-163 kubelet]# systemctl status kubelet.service

● kubelet.service - kubelet: The Kubernetes Node Agent

Loaded: loaded (/usr/lib/systemd/system/kubelet.service; enabled; vendor preset: disabled)

Drop-In: /usr/lib/systemd/system/kubelet.service.d

└─10-kubeadm.conf

Active: activating (auto-restart) (Result: exit-code) since 五 2021-11-26 18:03:50 CST; 7s ago

Docs: https://kubernetes.io/docs/

Process: 2532 ExecStart=/usr/bin/kubelet $KUBELET_KUBECONFIG_ARGS $KUBELET_CONFIG_ARGS $KUBELET_KUBEADM_ARGS $KUBELET_EXTRA_ARGS (code=exited, status=1/FAILURE)

Main PID: 2532 (code=exited, status=1/FAILURE)

11月 26 18:03:50 master1-163 systemd[1]: Unit kubelet.service entered failed state.

11月 26 18:03:50 master1-163 systemd[1]: kubelet.service failed.

[root@master1-163 kubelet]# systemctl is-active kubelet.service

active

[root@master1-163 kubelet]#

- I am installing on:

master1-163, this master node

Prepare the config file

- Find a master node that was installed earlier and export its existing configuration

[root@master ~]# kubeadm config view

Command “view” is deprecated, This command is deprecated and will be removed in a future release, please use ‘kubectl get cm -o yaml -n kube-system kubeadm-config’ to get the kubeadm config directly.

apiServer:

  extraArgs:

    authorization-mode: Node,RBAC

  timeoutForControlPlane: 4m0s

apiVersion: kubeadm.k8s.io/v1beta2

certificatesDir: /etc/kubernetes/pki

clusterName: kubernetes

controllerManager: {}

dns:

  type: CoreDNS

etcd:

  local:

    dataDir: /var/lib/etcd

imageRepository: registry.aliyuncs.com/google_containers

kind: ClusterConfiguration

kubernetesVersion: v1.21.0

networking:

  dnsDomain: cluster.local

  podSubnet: 10.244.0.0/16

  serviceSubnet: 10.96.0.0/12

scheduler: {}

[root@master ~]# kubeadm config view > config.yaml

Command “view” is deprecated, This command is deprecated and will be removed in a future release, please use ‘kubectl get cm -o yaml -n kube-system kubeadm-config’ to get the kubeadm config directly.

[root@master ~]# scp config.yaml 192.168.59.163:~

The authenticity of host ‘192.168.59.163 (192.168.59.163)’ can’t be established.

ECDSA key fingerprint is SHA256:zRtVBoNePoRXh9aA8eppKwwduS9Rjjr/kT5a7zijzjE.

ECDSA key fingerprint is MD5:b8:53:cc:da:86:2a:97:dc:bd:64:6b:b1:d0:f3:02:ce.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added ‘192.168.59.163’ (ECDSA) to the list of known hosts.

root@192.168.59.163’s password:

config.yaml 100% 491 355.0KB/s 00:00

[root@master ~]#

- master1 now has this config file

[root@master1-163 kubelet]# ls /root/ | grep config

config.yaml

[root@master1-163 kubelet]#

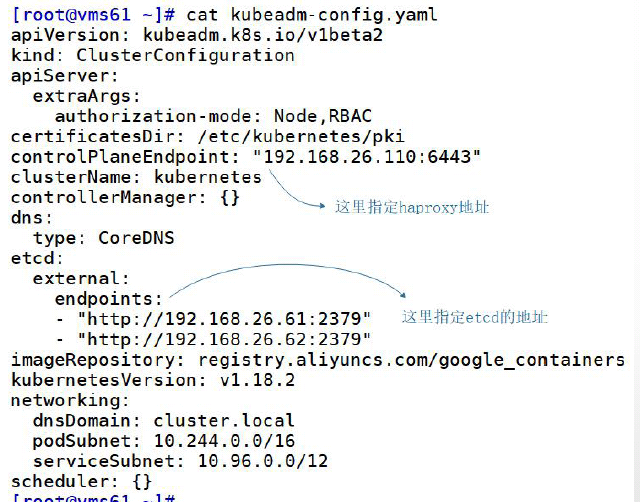

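- Modify the config file

Because etcd no longer runs locally on the master but as the external cluster set up above, and the API server is reached through haproxy, a few settings have to be added

# Before the change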

[root@master1-163 ~]# cat config.yaml

apiServer:

  extraArgs:

    authorization-mode: Node,RBAC

  timeoutForControlPlane: 4m0s

apiVersion: kubeadm.k8s.io/v1beta2

certificatesDir: /etc/kubernetes/pki

clusterName: kubernetes

controllerManager: {}

dns:

  type: CoreDNS

etcd:

  local:

    dataDir: /var/lib/etcd

imageRepository: registry.aliyuncs.com/google_containers

kind: ClusterConfiguration

kubernetesVersion: v1.21.0

networking:

  dnsDomain: cluster.local

  podSubnet: 10.244.0.0/16

  serviceSubnet: 10.96.0.0/12

scheduler: {}

[root@master1-163 ~]#

# After the change

[root@master1-163 ~]# cat config.yaml

apiServer:

  extraArgs:

    authorization-mode: Node,RBAC

  timeoutForControlPlane: 4m0s

apiVersion: kubeadm.k8s.io/v1beta2 # API version

certificatesDir: /etc/kubernetes/pki

controlPlaneEndpoint: "192.168.59.164:6443" # haproxy IP

clusterName: kubernetes

controllerManager: {}

dns:

  type: CoreDNS

etcd:

  external:

    endpoints:

    - "http://192.168.59.161:2379" # etcd1 IP

    - "http://192.168.59.160:2379" # etcd2 IP

imageRepository: registry.aliyuncs.com/google_containers

kind: ClusterConfiguration

kubernetesVersion: v1.21.1 # must match the version installed above

networking:

  dnsDomain: cluster.local

  podSubnet: 10.244.0.0/16

  serviceSubnet: 10.96.0.0/12

scheduler: {}

[root@master1-163 ~]#

apiVersion: # Check whether your existing master uses this version; you can replace it with the same version as your existing master [check with kubeadm config view]
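A mismatch between kubernetesVersion in config.yaml and the installed kubeadm packages is an easy mistake when the file is copied from an older master. The sketch below extracts the value so it can be compared by eye or in a script; `config_k8s_version` is a hypothetical helper, and the demo uses a temporary file rather than the real config.yaml.

```bash
# Print the kubernetesVersion value from a kubeadm config file,
# ignoring any trailing comment on the same line.
# Usage: config_k8s_version <path-to-config.yaml>
config_k8s_version() {
  sed -n -E 's/^kubernetesVersion:[[:space:]]*([^[:space:]#]+).*$/\1/p' "$1"
}

# Demo with a two-line sample.
sample=$(mktemp)
printf '%s\n' \
  'kind: ClusterConfiguration' \
  'kubernetesVersion: v1.21.1 # must match the installed version' > "$sample"
echo "config requests: $(config_k8s_version "$sample")"
rm -f "$sample"

# On the master (not run here) the installed version can be printed with:
#   kubeadm version -o short
```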

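Initialize the cluster

Prepare the images [required for offline environments]

- Set up an environment with internet access yourself and download all the images v1.21.1 needs

If you would rather not bother, you can use the bundle I have already downloaded [it covers both v1.21.1 and v1.21.0]; once loaded it gives all the images listed in the docker images output below

kubeadm_images.rar (all images needed to initialize v1.21.0 and v1.21.1; 1.2G unpacked)

- Load them into the environment

Load them on every master and worker node. With internet access this is unnecessary because each node pulls the images it needs automatically; in an offline environment the images must be loaded manually on every node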

[root@master1-163 ~]# du -sh kubeadm_images.tar

1.2G kubeadm_images.tar

[root@master1-163 ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

[root@master1-163 ~]#

[root@master1-163 ~]# docker load -i kubeadm_images.tar

417cb9b79ade: Loading layer 3.062MB/3.062MB

b50131762317: Loading layer 1.71MB/1.71MB

1e6ed7621dee: Loading layer 122.1MB/122.1MB

Loaded image: registry.aliyuncs.com/google_containers/kube-apiserver:v1.21.1

28699c71935f: Loading layer 3.062MB/3.062MB

c300f5fa3d7b: Loading layer 1.71MB/1.71MB

aa42f0ff58e4: Loading layer 116.3MB/116.3MB

Loaded image: registry.aliyuncs.com/google_containers/kube-controller-manager:v1.21.0

5714c0da2d88: Loading layer 47.11MB/47.11MB

Loaded image: registry.aliyuncs.com/google_containers/kube-scheduler:v1.21.0

915e8870f7d1: Loading layer 684.5kB/684.5kB

Loaded image: registry.aliyuncs.com/google_containers/pause:3.4.1

225df95e717c: Loading layer 336.4kB/336.4kB

69ae2fbf419f: Loading layer 42.24MB/42.24MB

Loaded image: registry.aliyuncs.com/google_containers/coredns/coredns:v1.8.0

d72a74c56330: Loading layer 3.031MB/3.031MB

d61c79b29299: Loading layer 2.13MB/2.13MB

1a4e46412eb0: Loading layer 225.3MB/225.3MB

bfa5849f3d09: Loading layer 2.19MB/2.19MB

bb63b9467928: Loading layer 21.98MB/21.98MB

Loaded image: registry.aliyuncs.com/google_containers/etcd:3.4.13-0

a55f783b98c2: Loading layer 122.1MB/122.1MB

Loaded image: registry.aliyuncs.com/google_containers/kube-apiserver:v1.21.0

077075ef2723: Loading layer 47.11MB/47.11MB

Loaded image: registry.aliyuncs.com/google_containers/kube-scheduler:v1.21.1

13fb781d48d3: Loading layer 53.89MB/53.89MB

8c9959c71363: Loading layer 22.25MB/22.25MB

8cf972e62246: Loading layer 4.894MB/4.894MB

a388391a5fc4: Loading layer 4.608kB/4.608kB

93c6e9a2ab1e: Loading layer 8.192kB/8.192kB

21d84a192aca: Loading layer 8.704kB/8.704kB

84ce83eaa4cd: Loading layer 43.14MB/43.14MB

Loaded image: registry.aliyuncs.com/google_containers/kube-proxy:v1.21.0

105a75f15167: Loading layer 62.35MB/62.35MB

3cf1454ee1bf: Loading layer 22.33MB/22.33MB

1e16c975f0a9: Loading layer 4.884MB/4.884MB

9fb8c8a7b75b: Loading layer 4.608kB/4.608kB

72682df7cfdd: Loading layer 8.192kB/8.192kB

6fb6238e9c25: Loading layer 8.704kB/8.704kB

016157fde486: Loading layer 43.14MB/43.14MB

Loaded image: registry.aliyuncs.com/google_containers/kube-proxy:v1.21.1

954115f32d73: Loading layer 91.22MB/91.22MB

Loaded image: registry.cn-hangzhou.aliyuncs.com/kube-iamges/dashboard:v2.0.0-beta8

89ac18ee460b: Loading layer 238.6kB/238.6kB

878c5d3194b0: Loading layer 39.87MB/39.87MB

1dc71700363a: Loading layer 2.048kB/2.048kB

Loaded image: registry.cn-hangzhou.aliyuncs.com/kube-iamges/metrics-scraper:v1.0.1

2c68eaf5f19f: Loading layer 116.3MB/116.3MB

Loaded image: registry.aliyuncs.com/google_containers/kube-controller-manager:v1.21.1

[root@master1-163 ~]#

[root@master1-163 ~]#

[root@master1-163 ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

registry.aliyuncs.com/google_containers/kube-apiserver v1.21.1 771ffcf9ca63 6 months ago 126MB

registry.aliyuncs.com/google_containers/kube-scheduler v1.21.1 a4183b88f6e6 6 months ago 50.6MB

registry.aliyuncs.com/google_containers/kube-controller-manager v1.21.1 e16544fd47b0 6 months ago 120MB

registry.aliyuncs.com/google_containers/kube-proxy v1.21.1 4359e752b596 6 months ago 131MB

registry.aliyuncs.com/google_containers/kube-apiserver v1.21.0 4d217480042e 7 months ago 126MB

registry.aliyuncs.com/google_containers/kube-proxy v1.21.0 38ddd85fe90e 7 months ago 122MB

registry.aliyuncs.com/google_containers/kube-scheduler v1.21.0 62ad3129eca8 7 months ago 50.6MB

registry.aliyuncs.com/google_containers/kube-controller-manager v1.21.0 09708983cc37 7 months ago 120MB

registry.aliyuncs.com/google_containers/pause 3.4.1 0f8457a4c2ec 10 months ago 683kB

registry.aliyuncs.com/google_containers/coredns/coredns v1.8.0 296a6d5035e2 13 months ago 42.5MB

registry.aliyuncs.com/google_containers/etcd 3.4.13-0 0369cf4303ff 15 months ago 253MB

registry.cn-hangzhou.aliyuncs.com/kube-iamges/dashboard v2.0.0-beta8 eb51a3597525 24 months ago 90.8MB

registry.cn-hangzhou.aliyuncs.com/kube-iamges/metrics-scraper v1.0.1 709901356c11 2 years ago 40.1MB

[root@master1-163 ~]#
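In an offline environment it is worth confirming that nothing is missing before running kubeadm init. A minimal sketch, assuming two plain text files with one `repository:tag` per line; `missing_images` is a hypothetical helper and the image names in the demo are shortened placeholders.

```bash
# Print every image listed in <required-file> that does not appear
# as an exact line in <present-file>.
# Usage: missing_images <required-file> <present-file>
missing_images() {
  grep -Fxv -f "$2" "$1" || true
}

# Demo with placeholder lists.
required=$(mktemp)
present=$(mktemp)
printf '%s\n' 'example/kube-apiserver:v1.21.1' 'example/pause:3.4.1' > "$required"
printf '%s\n' 'example/kube-apiserver:v1.21.1' > "$present"
missing_images "$required" "$present"
rm -f "$required" "$present"

# On a real node (not run here) the two lists could come from:
#   kubeadm config images list --config=config.yaml > required.txt
#   docker images --format '{{.Repository}}:{{.Tag}}' > present.txt
```

An empty result means every required image is already loaded on that node.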

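Initialize the cluster and set up the environment variables

- With internet access, just run the command below; the images are pulled automatically, but the coredns image must be loaded beforehand [download this one in advance, otherwise init reports an error].

In an offline environment, load all the images above first and then run the command below.

kubeadm init --config=config.yaml

- The run looks like this [the final output should be the same with or without internet access]

Although this is also a cluster initialization, the hints printed at the end differ from those of a plain single-master init; compare them yourself. The most important difference is the extra --control-plane flag in the join command at the bottom: it joins a node as a control-plane node [used when adding more masters]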

[root@master1-163 ~]# kubeadm init --config=config.yaml

[init] Using Kubernetes version: v1.21.1

[preflight] Running pre-flight checks

[WARNING IsDockerSystemdCheck]: detected “cgroupfs” as the Docker cgroup driver. The recommended driver is “systemd”. Please follow the guide at https://kubernetes.io/docs/setup/cri/

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using ‘kubeadm config images pull’

[certs] Using certificateDir folder “/etc/kubernetes/pki”

[certs] Generating “ca” certificate and key

[certs] Generating “apiserver” certificate and key

[certs] apiserver serving cert is signed for DNS names [kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local master1-163] and IPs [10.96.0.1 192.168.59.163 192.168.59.164]

[certs] Generating “apiserver-kubelet-client” certificate and key

[certs] Generating “front-proxy-ca” certificate and key

[certs] Generating “front-proxy-client” certificate and key

[certs] External etcd mode: Skipping etcd/ca certificate authority generation

[certs] External etcd mode: Skipping etcd/server certificate generation

[certs] External etcd mode: Skipping etcd/peer certificate generation

[certs] External etcd mode: Skipping etcd/healthcheck-client certificate generation

[certs] External etcd mode: Skipping apiserver-etcd-client certificate generation

[certs] Generating “sa” key and public key

[kubeconfig] Using kubeconfig folder “/etc/kubernetes”

[kubeconfig] Writing “admin.conf” kubeconfig file

[kubeconfig] Writing “kubelet.conf” kubeconfig file

[kubeconfig] Writing “controller-manager.conf” kubeconfig file

[kubeconfig] Writing “scheduler.conf” kubeconfig file

[kubelet-start] Writing kubelet environment file with flags to file “/var/lib/kubelet/kubeadm-flags.env”

[kubelet-start] Writing kubelet configuration to file “/var/lib/kubelet/config.yaml”

[kubelet-start] Starting the kubelet

[control-plane] Using manifest folder “/etc/kubernetes/manifests”

[control-plane] Creating static Pod manifest for “kube-apiserver”

[control-plane] Creating static Pod manifest for “kube-controller-manager”

[control-plane] Creating static Pod manifest for “kube-scheduler”

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory “/etc/kubernetes/manifests”. This can take up to 4m0s

[apiclient] All control plane components are healthy after 24.542204 seconds

[upload-config] Storing the configuration used in ConfigMap “kubeadm-config” in the “kube-system” Namespace

[kubelet] Creating a ConfigMap “kubelet-config-1.21” in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node master1-163 as control-plane by adding the labels: [node-role.kubernetes.io/master(deprecated) node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]

[mark-control-plane] Marking the node master1-163 as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[bootstrap-token] Using token: nej47e.xlge9gc2usn6sky7

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the “cluster-info” ConfigMap in the “kube-public” namespace

[kubelet-finalize] Updating “/etc/kubernetes/kubelet.conf” to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of control-plane nodes by copying certificate authorities

and service account keys on each node and then running the following as root:

kubeadm join 192.168.59.164:6443 --token nej47e.xlge9gc2usn6sky7 \

--discovery-token-ca-cert-hash sha256:2e4d2ca7c162f57de5edc80b89b75ad9493874746cc7c596af7f29ba91eccffe \

--control-plane

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.59.164:6443 --token nej47e.xlge9gc2usn6sky7 \

--discovery-token-ca-cert-hash sha256:2e4d2ca7c162f57de5edc80b89b75ad9493874746cc7c596af7f29ba91eccffe

[root@master1-163 ~]#

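- Set up the kubeconfig for kubectl (the init output above already tells you how, so take a look)

You can copy the three commands from that output directly.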

[root@master1-163 ~]# mkdir -p $HOME/.kube

[root@master1-163 ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@master1-163 ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

[root@master1-163 ~]#
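The three commands above can be wrapped in a small helper. This is only a minimal sketch: the function name `install_kubeconfig` and its two arguments are hypothetical and not part of kubeadm. It copies an admin kubeconfig into `<home>/.kube/config` and restricts the file to its owner.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of kubeadm): copy an admin kubeconfig into
# <home>/.kube/config and make it readable by the owner only.
#   $1  source kubeconfig (default: /etc/kubernetes/admin.conf)
#   $2  home directory    (default: $HOME)
install_kubeconfig() {
    local src="${1:-/etc/kubernetes/admin.conf}"
    local home_dir="${2:-$HOME}"

    # Refuse to continue if the source file cannot be read.
    if [ ! -r "$src" ]; then
        echo "cannot read $src" >&2
        return 1
    fi

    mkdir -p "$home_dir/.kube"
    cp "$src" "$home_dir/.kube/config"
    chmod 600 "$home_dir/.kube/config"
}
```

Run as root, `install_kubeconfig` with no arguments does the same job as the first two commands. For a regular user, keep the `sudo cp` and `sudo chown` form from the kubeadm output, because `admin.conf` is normally readable by root only.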

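Joining a worker to the cluster

- Command: the join command is the output of running

kubeadm token create --print-join-command on the master.

You can also use the command printed at the end of the init output (one value in the middle, the token, differs between the two; that does not matter).

- Here I use the command from the end of the init output

Note: a worker must not be joined with --control-plane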

[root@worker-165 kubelet]# kubeadm join 192.168.59.164:6443 --token nej47e.xlge9gc2usn6sky7 --discovery-token-ca-cert-hash sha256:2e4d2ca7c162f57de5edc80b89b75ad9493874746cc7c596af7f29ba91eccffe

[preflight] Running pre-flight checks

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

- Certificate signing request was sent to apiserver and a response was received.

- The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

[root@worker-165 kubelet]#
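The `--discovery-token-ca-cert-hash` value in the join command is the SHA-256 hash of the cluster CA's public key, so it can be recomputed on the master if the init output is lost. The sketch below follows the openssl pipeline given in the kubeadm documentation. The function name `ca_cert_hash` is my own, and the `openssl rsa` step assumes an RSA CA key (the kubeadm default).

```shell
#!/usr/bin/env bash
# Print the SHA-256 hash of the public key in a CA certificate, in the hex
# form that kubeadm expects after the "sha256:" prefix.
#   $1  CA certificate (default: /etc/kubernetes/pki/ca.crt)
# Assumption: the CA uses an RSA key.
ca_cert_hash() {
    local ca_crt="${1:-/etc/kubernetes/pki/ca.crt}"
    openssl x509 -pubkey -noout -in "$ca_crt" \
        | openssl rsa -pubin -outform der 2>/dev/null \
        | openssl dgst -sha256 -hex \
        | sed 's/^.* //'    # drop the "(stdin)= " style prefix, keep the hex digest
}
```

On the master, `echo "sha256:$(ca_cert_hash)"` should print the same value that follows `--discovery-token-ca-cert-hash` in the join command.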

- After the join, go back to the master and the new node shows up in the node list

[root@master1-163 ~]# kubectl get nodes

NAME          STATUS     ROLES                  AGE    VERSION

master1-163   NotReady   control-plane,master   37m    v1.21.1

worker-165    NotReady   <none>                 4m6s   v1.21.1

[root@master1-163 ~]#
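Both nodes report NotReady at this point, which is expected because no pod network has been deployed yet (the init output asks for one). To keep an eye on this while the network add-on is installed, a small filter over the `kubectl get nodes` output is enough. This is a sketch; the function name `not_ready_nodes` is hypothetical.

```shell
#!/usr/bin/env bash
# Read `kubectl get nodes` output on stdin and print the name of every node
# whose STATUS column is not exactly "Ready". The header line is skipped.
# Note: a cordoned node ("Ready,SchedulingDisabled") is also printed.
not_ready_nodes() {
    awk 'NR > 1 && $2 != "Ready" { print $1 }'
}
```

Usage on the master: `kubectl get nodes | not_ready_nodes`. With the output shown above it would list both master1-163 and worker-165.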

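Joining a master to the cluster

Notes

- Above, I only set up master1 with kubeadm. Here master2 joins the cluster; the procedure is the same as for a worker.

- To join as a master, append --control-plane to the join command. Doing only that fails, as shown below: a master node needs the PKI certificates, and master2 does not have them yet. The certificates must be in place before the node can join as a master.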

[root@master2-162 kubelet]# kubeadm join 192.168.59.164:6443 --token nej47e.xlge9gc2usn6sky7 --discovery-token-ca-cert-hash sha256:2e4d2ca7c162f57de5edc80b89b75ad9493874746cc7c596af7f29ba91eccffe --control-plane

…(part of the output removed)

To see the stack trace of this error execute with --v=5 or higher

[root@master2-162 kubelet]#

[root@master2-162 kubelet]# ls /etc/kubernetes/pki

ls: cannot access /etc/kubernetes/pki: No such file or directory

[root@master2-162 kubelet]#

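Preparing the images

Because this node will be a master, it also needs every image a master requires. See the image preparation step in the cluster initialization section above. My environment is an isolated intranet, so I imported the images directly; after the import the list looks like this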

[root@master2-162 ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

registry.aliyuncs.com/google_containers/kube-apiserver v1.21.1 771ffcf9ca63 6 months ago 126MB

registry.aliyuncs.com/google_containers/kube-proxy v1.21.1 4359e752b596 6 months ago 131MB

registry.aliyuncs.com/google_containers/kube-scheduler v1.21.1 a4183b88f6e6 6 months ago 50.6MB

registry.aliyuncs.com/google_containers/kube-controller-manager v1.21.1 e16544fd47b0 6 months ago 120MB

registry.aliyuncs.com/google_containers/kube-apiserver v1.21.0 4d217480042e 7 months ago 126MB

registry.aliyuncs.com/google_containers/kube-proxy v1.21.0 38ddd85fe90e 7 months ago 122MB

registry.aliyuncs.com/google_containers/kube-scheduler v1.21.0 62ad3129eca8 7 months ago 50.6MB

registry.aliyuncs.com/google_containers/kube-controller-manager v1.21.0 09708983cc37 7 months ago 120MB

registry.aliyuncs.com/google_containers/pause 3.4.1 0f8457a4c2ec 10 months ago 683kB

registry.aliyuncs.com/google_containers/coredns/coredns v1.8.0 296a6d5035e2 13 months ago 42.5MB

registry.aliyuncs.com/google_containers/etcd 3.4.13-0 0369cf4303ff 15 months ago 253MB

registry.cn-hangzhou.aliyuncs.com/kube-iamges/dashboard v2.0.0-beta8 eb51a3597525 24 months ago 90.8MB

registry.cn-hangzhou.aliyuncs.com/kube-iamges/metrics-scraper v1.0.1 709901356c11 2 years ago 40.1MB

[root@master2-162 ~]#

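Preparing the PKI certificates

- This method also works for a master node that has been reset and needs to rejoin the cluster as a master.

- The files must be copied in this form (packed into a tar archive); copying them over directly with scp does not work.

On master1 (the master node that initialized the cluster above), create a file with any name and put the following content in it

# Files to pack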

[root@master1-163 ~]# cat cert.txt

/etc/kubernetes/pki/ca.crt

/etc/kubernetes/pki/ca.key

/etc/kubernetes/pki/sa.key

/etc/kubernetes/pki/sa.pub

/etc/kubernetes/pki/front-proxy-ca.crt

/etc/kubernetes/pki/front-proxy-ca.key

[root@master1-163 ~]#

Pack the files

[root@master1-163 ~]# tar czf cert.tar.gz -T cert.txt

tar: Removing leading `/' from member names

[root@master1-163 ~]#

Check the archive contents

[root@master1-163 ~]# tar tf cert.tar.gz

etc/kubernetes/pki/ca.crt

etc/kubernetes/pki/ca.key

etc/kubernetes/pki/sa.key

etc/kubernetes/pki/sa.pub

etc/kubernetes/pki/front-proxy-ca.crt

etc/kubernetes/pki/front-proxy-ca.key

[root@master1-163 ~]#
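The packing step relies on two tar behaviours: `-T` reads the member list from a file, and tar strips the leading `/` from absolute paths, so the archive can later be unpacked relative to any directory with `-C`. The sketch below wraps both steps so they can be tried in a scratch directory with dummy files first. The function names `pack_from_list` and `unpack_under` are hypothetical.

```shell
#!/usr/bin/env bash
# Pack every path named in <list-file> (one path per line) into <archive>.
# tar strips the leading "/" from absolute paths and prints a notice on stderr.
#   $1  list file, e.g. cert.txt
#   $2  archive to create, e.g. cert.tar.gz
pack_from_list() {
    tar czf "$2" -T "$1"
}

# Unpack <archive> relative to <dest>. With dest=/ the members land back on
# their original absolute paths.
#   $1  archive
#   $2  destination directory
unpack_under() {
    tar xzf "$1" -C "$2"
}
```

With the files from this article, `pack_from_list cert.txt cert.tar.gz` on master1 and `unpack_under cert.tar.gz /` on master2 correspond to the tar commands used here.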

# Copy the archive to master2

[root@master1-163 ~]# scp cert.tar.gz master2-162:~

root@master2-162's password:

cert.tar.gz 100% 5363 4.5MB/s 00:00

[root@master1-163 ~]#

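- On master2, import the PKI files

Because tar stripped the leading / from the member names when packing, the archive has to be extracted under /.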

[root@master2-162 ~]# tar tf cert.tar.gz

etc/kubernetes/pki/ca.crt

etc/kubernetes/pki/ca.key

etc/kubernetes/pki/sa.key

etc/kubernetes/pki/sa.pub

etc/kubernetes/pki/front-proxy-ca.crt

etc/kubernetes/pki/front-proxy-ca.key

[root@master2-162 ~]#

[root@master2-162 ~]# tar zxvf cert.tar.gz -C /

etc/kubernetes/pki/ca.crt
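Once the archive is extracted, it is worth confirming that all six files from cert.txt are present before retrying the join with --control-plane. A minimal sketch of such a check follows; the function name `missing_pki_files` and its optional root argument are hypothetical, and the file list is simply the one from cert.txt above.

```shell
#!/usr/bin/env bash
# Print every file from cert.txt that is missing under <root>/etc/kubernetes/pki.
# Returns 0 when all six files exist, 1 otherwise.
#   $1  optional root prefix (default: empty, i.e. the real /etc/kubernetes/pki)
missing_pki_files() {
    local root="${1:-}" missing=0 f
    for f in ca.crt ca.key sa.key sa.pub front-proxy-ca.crt front-proxy-ca.key; do
        if [ ! -f "$root/etc/kubernetes/pki/$f" ]; then
            echo "$root/etc/kubernetes/pki/$f"
            missing=1
        fi
    done
    return "$missing"
}
```

On master2, `missing_pki_files && echo "pki files in place"` prints the confirmation only when nothing is missing. The root argument exists so the check can be tried against a scratch directory.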
