Offline Deployment of K8S 1.18.9 (Three Masters + HAProxy + Keepalived)

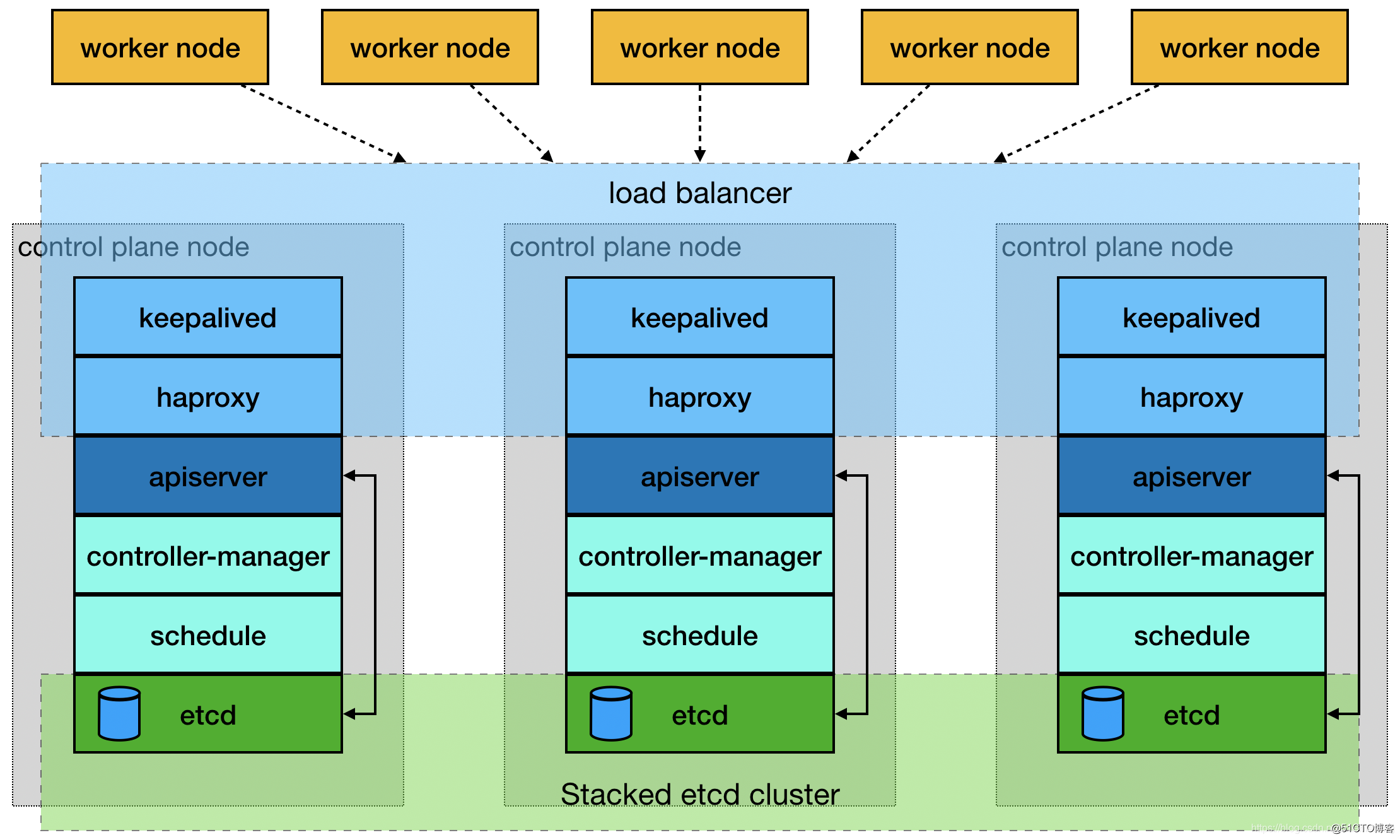


Related images: Docker

After pulling the images with docker pull, retag them and they are ready to use.

1. System Architecture Diagram

Keepalived + HAProxy are used to build a highly available load balancer. The complete topology is shown in the diagram.

2. Server Resource List

3. Installation Packages and Tools

k8s-install-tool/
├── calico
│   ├── calico-cni.tar
│   ├── calicoctl
│   ├── calico-kube-controllers.tar
│   ├── calico-node.tar
│   ├── calico-pod2daemon-flexvol.tar
│   └── calico.yaml
├── docker
│   └── docker-19.03.9.tgz
├── helm
│   └── helm-v3.2.0-rc.1-linux-amd64.tar.gz
├── image
│   ├── busybox.tar
│   ├── coredns.tar
│   ├── etcd.tar
│   ├── kube-apiserver.tar
│   ├── kube-controller-manager.tar
│   ├── kube-proxy.tar
│   ├── kube-scheduler.tar
│   └── pause3.2.tar
├── rpm
│   ├── conntrack-tools-1.4.4-7.el7.x86_64.rpm
│   ├── kubeadm-1.18.9-0.x86_64.rpm
│   ├── kubectl-1.18.9-0.x86_64.rpm
│   ├── kubelet-1.18.9-0.x86_64.rpm
│   ├── kubernetes-cni-0.8.7-0.x86_64.rpm
│   └── socat-1.7.3.2-2.el7.x86_64.rpm
├── test
│   ├── nginx.tar
│   └── nginx.yaml
└── tool
    ├── bash-completion-2.1-8.el7.noarch.rpm
    ├── haproxy-1.5.18-9.el7.x86_64.rpm
    ├── haproxy.cfg
    ├── keepalived-2.1.5.tar.gz
    └── keepalived.conf

7 directories, 29 files
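Before starting, it may help to confirm on each machine that the bundle was copied over completely. The following is a minimal sketch. It assumes the bundle sits at /opt/k8s-install-tool, the path the later steps cd into. check_bundle is a hypothetical helper, and its file list is simply the tree above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: verify that every file listed in the bundle tree exists.
# Prints "missing: PATH" to stderr for each absent file; returns non-zero if any is missing.
# Usage: check_bundle [ROOT]   (ROOT defaults to /opt/k8s-install-tool)
check_bundle() {
  local root="${1:-/opt/k8s-install-tool}"
  local missing=0 f
  local files=(
    calico/calico-cni.tar calico/calicoctl calico/calico-kube-controllers.tar
    calico/calico-node.tar calico/calico-pod2daemon-flexvol.tar calico/calico.yaml
    docker/docker-19.03.9.tgz
    helm/helm-v3.2.0-rc.1-linux-amd64.tar.gz
    image/busybox.tar image/coredns.tar image/etcd.tar image/kube-apiserver.tar
    image/kube-controller-manager.tar image/kube-proxy.tar image/kube-scheduler.tar
    image/pause3.2.tar
    rpm/conntrack-tools-1.4.4-7.el7.x86_64.rpm rpm/kubeadm-1.18.9-0.x86_64.rpm
    rpm/kubectl-1.18.9-0.x86_64.rpm rpm/kubelet-1.18.9-0.x86_64.rpm
    rpm/kubernetes-cni-0.8.7-0.x86_64.rpm rpm/socat-1.7.3.2-2.el7.x86_64.rpm
    test/nginx.tar test/nginx.yaml
    tool/bash-completion-2.1-8.el7.noarch.rpm tool/haproxy-1.5.18-9.el7.x86_64.rpm
    tool/haproxy.cfg tool/keepalived-2.1.5.tar.gz tool/keepalived.conf
  )
  for f in "${files[@]}"; do
    if [ ! -f "$root/$f" ]; then
      echo "missing: $root/$f" >&2
      missing=$((missing + 1))
    fi
  done
  [ "$missing" -eq 0 ]
}
```

Running check_bundle with no argument checks /opt/k8s-install-tool and lists anything that did not arrive.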

4. Environment Initialization (run on all three machines)

cat >> /etc/hosts << EOF
192.168.10.218 haproxy-kubernetes-apiserver
192.168.10.215 k8s-Master1-offline-215
192.168.10.216 k8s-Master2-offline-216
192.168.10.217 k8s-Master3-offline-217
EOF
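Appending with cat >> duplicates the entries every time the step is re-run. The sketch below avoids that by adding each line only if it is not already present. add_hosts_entries is a hypothetical helper, and the file to edit is passed in explicitly.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append "IP HOSTNAME" lines to a hosts file only when the
# exact line is not already there, so re-running the step is harmless.
# Usage: add_hosts_entries FILE "IP NAME" ...
add_hosts_entries() {
  local file="$1"; shift
  local entry
  for entry in "$@"; do
    # -x: whole-line match; -F: fixed string (dots are not regex wildcards)
    grep -qxF -- "$entry" "$file" 2>/dev/null || printf '%s\n' "$entry" >> "$file"
  done
}
```

For example: add_hosts_entries /etc/hosts "192.168.10.218 haproxy-kubernetes-apiserver" "192.168.10.215 k8s-Master1-offline-215".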

Stop and disable firewalld

systemctl stop firewalld && systemctl disable firewalld

Disable SELinux

sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config && setenforce 0

Disable swap

swapoff -a

sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab

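Kernel tuning: pass bridged IPv4 traffic to the iptables chains. The net.bridge.* keys only exist once the br_netfilter kernel module is loaded, so load it first. Also list it under /etc/modules-load.d/ so it is loaded again at boot.

modprobe br_netfilter

echo br_netfilter > /etc/modules-load.d/k8s.conf

echo 1 > /proc/sys/net/ipv4/ip_forward

cat > /etc/sysctl.d/k8s.conf << EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF

sysctl --system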
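A quick way to confirm that the settings above took effect is to read them back from /proc. The sketch below is a hypothetical preflight check. PROC defaults to /proc and exists as a parameter only so the logic can be tried against a fake tree.

```shell
#!/usr/bin/env bash
# Hypothetical preflight check: swap off, IP forwarding on, bridge netfilter on.
# Reads from $PROC (default /proc); returns non-zero if any check fails.
preflight() {
  local proc="${PROC:-/proc}" rc=0 key got
  # /proc/swaps always has one header line; any further line is an active swap device.
  if [ "$(wc -l < "$proc/swaps")" -gt 1 ]; then
    echo "FAIL: swap is still enabled" >&2; rc=1
  fi
  for key in net/ipv4/ip_forward net/bridge/bridge-nf-call-iptables net/bridge/bridge-nf-call-ip6tables; do
    got=$(cat "$proc/sys/$key" 2>/dev/null || echo missing)
    if [ "$got" != "1" ]; then
      # "missing" for the bridge keys usually means br_netfilter is not loaded.
      echo "FAIL: $key=$got (want 1)" >&2; rc=1
    fi
  done
  return "$rc"
}
```

Run preflight on each machine. It prints nothing and returns 0 when everything is in place.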

Raise the process limit: edit /etc/security/limits.d/20-nproc.conf so that it contains

* soft nproc 4096000
* hard nproc 4096000

5. Install Keepalived Offline (on all three machines)

The system needs a gcc build environment before Keepalived can be built.

cat >> /etc/sysctl.conf << EOF
net.ipv4.ip_forward = 1
EOF

sysctl -p

yum -y install gcc

yum -y install openssl-devel

tar xf keepalived-2.1.5.tar.gz

cd keepalived-2.1.5

./configure --prefix=/usr/local/keepalived

make

make install

mkdir /etc/keepalived

cat > /etc/keepalived/keepalived.conf << EOF
! Configuration File for keepalived
global_defs {
    router_id LVS_DEVEL
}
vrrp_script check_haproxy {
    script "killall -0 haproxy"
    interval 3
    weight -2
    fall 10
    rise 2
}
vrrp_instance VI_1 {
    state MASTER
    interface ens192
    virtual_router_id 51
    priority 250
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 123456
    }
    virtual_ipaddress {
        192.168.10.218
    }
    track_script {
        check_haproxy
    }
}
EOF

systemctl enable keepalived.service && systemctl start keepalived.service && systemctl status keepalived.service

Note: on the other two nodes, set state to BACKUP and lower the priority. The node with the higher value is preferred.
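Because the three nodes differ only in state and priority, the file can be generated per node instead of edited by hand. The following is a minimal sketch. gen_keepalived is a hypothetical helper, and its defaults (interface ens192, VIP 192.168.10.218) are copied from the config above and may differ on other hosts.

```shell
#!/usr/bin/env bash
# Hypothetical generator for the per-node keepalived.conf shown above.
# Usage: gen_keepalived STATE PRIORITY [INTERFACE] [VIP]
gen_keepalived() {
  local state="$1" prio="$2" iface="${3:-ens192}" vip="${4:-192.168.10.218}"
  cat <<EOF
! Configuration File for keepalived
global_defs {
    router_id LVS_DEVEL
}
vrrp_script check_haproxy {
    script "killall -0 haproxy"
    interval 3
    weight -2
    fall 10
    rise 2
}
vrrp_instance VI_1 {
    state ${state}
    interface ${iface}
    virtual_router_id 51
    priority ${prio}
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 123456
    }
    virtual_ipaddress {
        ${vip}
    }
    track_script {
        check_haproxy
    }
}
EOF
}
```

For example, on a backup node run gen_keepalived BACKUP 249 > /etc/keepalived/keepalived.conf.

With weight -2, a failing check_haproxy lowers a node's effective priority by 2, so the MASTER drops from 250 to 248. The VIP only moves if a BACKUP then has a higher priority. BACKUP priorities therefore need to be above 248 (for example 249) for an HAProxy failure alone to trigger failover.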

6. Install HAProxy (on all three machines)

cd /opt/k8s-install-tool/tool/

yum -y install haproxy-1.5.18-9.el7.x86_64.rpm

cp haproxy.cfg /etc/haproxy/.

cat haproxy.cfg

global
    log 127.0.0.1 local2
    pidfile /var/run/haproxy.pid
    maxconn 4000
    user haproxy
    group haproxy
    daemon
    # turn on stats unix socket
    stats socket /var/lib/haproxy/stats

#---------------------------------------------------------------------
# common defaults that all the 'listen' and 'backend' sections will
# use if not designated in their block
#---------------------------------------------------------------------
defaults
    mode http
    log global
    option httplog
    option dontlognull
    option http-server-close
    option forwardfor except 127.0.0.0/8
    option redispatch
    retries 3
    timeout http-request 10s
    timeout queue 1m
    timeout connect 10s
    timeout client 1m
    timeout server 1m
    timeout http-keep-alive 10s
    timeout check 10s
    maxconn 3000

#---------------------------------------------------------------------
# kubernetes apiserver frontend which proxies to the backends
#---------------------------------------------------------------------
frontend haproxy-kubernetes-apiserver
    mode tcp
    option tcplog
    bind *:9443
    default_backend haproxy-kubernetes-apiserver

#---------------------------------------------------------------------
# round robin balancing between the various backends
#---------------------------------------------------------------------
backend haproxy-kubernetes-apiserver
    mode tcp
    balance roundrobin
    server k8s-Master1-offline-215 192.168.10.215:6443 check
    server k8s-Master2-offline-216 192.168.10.216:6443 check
    server k8s-Master3-offline-217 192.168.10.217:6443 check

Note ⚠️: in this file, set the frontend, default_backend and backend names to the VIP's hostname, and point the backend's server lines at the apiservers.
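The backend section is the part that changes whenever a master is added or its address changes. The sketch below prints it from "NAME IP" pairs. gen_haproxy_backend is a hypothetical helper. Port 6443 is the kube-apiserver port used in the config above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the haproxy.cfg backend section from "NAME IP" pairs.
# Usage: gen_haproxy_backend BACKEND_NAME "NAME IP" ...
gen_haproxy_backend() {
  local backend="$1"; shift
  local pair name ip
  printf 'backend %s\n    mode tcp\n    balance roundrobin\n' "$backend"
  for pair in "$@"; do
    read -r name ip <<< "$pair"
    # Each master's kube-apiserver listens on 6443; HAProxy itself binds 9443.
    printf '    server %s %s:6443 check\n' "$name" "$ip"
  done
}
```

After editing /etc/haproxy/haproxy.cfg, haproxy -c -f /etc/haproxy/haproxy.cfg checks the file for syntax errors before the service is restarted.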

Start HAProxy and enable it at boot

systemctl restart haproxy && systemctl enable haproxy && systemctl status haproxy

7. Install Docker Offline (on all three machines)

Change to the Docker package directory, then extract the archive

cd /opt/k8s-install-tool/docker

Extract

tar xf docker-19.03.9.tgz

Copy the extracted docker binaries to /usr/bin/

cp docker/* /usr/bin/

Register Docker as a systemd service

vim /etc/systemd/system/docker.service

[Unit]
Description=Docker Application Container Engine
Documentation=https://docs.docker.com
After=network-online.target firewalld.service
Wants=network-online.target

[Service]
Type=notify
# the default is not to use systemd for cgroups because the delegate issues still
# exists and systemd currently does not support the cgroup feature set required
# for containers run by docker
# --data-root sets the Docker data directory (here /opt/docker); it replaces the
# deprecated --graph flag. systemd has no trailing comments, so notes go on their own line.
ExecStart=/usr/bin/dockerd --data-root=/opt/docker
ExecReload=/bin/kill -s HUP $MAINPID
# Having non-zero Limit*s causes performance problems due to accounting overhead
# in the kernel. We recommend using cgroups to do container-local accounting.
LimitNOFILE=infinity
LimitNPROC=infinity
LimitCORE=infinity
# Uncomment TasksMax if your systemd version supports it.
# Only systemd 226 and above support this version.
#TasksMax=infinity
TimeoutStartSec=0
# set delegate yes so that systemd does not reset the cgroups of docker containers
Delegate=yes
# kill only the docker process, not all processes in the cgroup
KillMode=process
# restart the docker process if it exits prematurely
Restart=on-failure
StartLimitBurst=3
StartLimitInterval=60s

[Install]
WantedBy=multi-user.target

Switch Docker's cgroup driver to systemd; otherwise kubeadm prints a warning during the K8S installation

mkdir -p /etc/docker

vim /etc/docker/daemon.json

Add the following:

{
    "exec-opts": ["native.cgroupdriver=systemd"]
}
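The same file can also be written non-interactively, which is easier to repeat on three machines. dockerd refuses to start if daemon.json is not valid JSON, so a syntax check is worthwhile. The following is a minimal sketch. write_daemon_json is a hypothetical helper, and the JSON check runs only if python3 happens to be installed.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write daemon.json with the systemd cgroup driver.
# Usage: write_daemon_json [FILE]   (FILE defaults to /etc/docker/daemon.json)
write_daemon_json() {
  local file="${1:-/etc/docker/daemon.json}"
  mkdir -p "$(dirname "$file")" || return 1
  cat > "$file" <<'EOF'
{
    "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF
  # Optional syntax check, only when python3 is available.
  if command -v python3 > /dev/null 2>&1; then
    python3 -m json.tool "$file" > /dev/null || return 1
  fi
}
```

Once Docker is running, docker info | grep -i cgroup should report "Cgroup Driver: systemd".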

Start Docker

chmod 644 /etc/systemd/system/docker.service   # unit files only need to be readable; systemd warns about executable unit files

systemctl daemon-reload   # reload the unit files

systemctl start docker   # start Docker

systemctl enable docker.service   # enable at boot

Verify that Docker is running

systemctl status docker   # check the Docker service status

docker info   # show the Docker version and daemon details

8. Deploy K8S Offline (master 215)

Load the K8S images

cd /opt/k8s-install-tool/image

docker load -i coredns.tar

docker load -i etcd.tar

docker load -i kube-apiserver.tar

docker load -i kube-controller-manager.tar

docker load -i kube-proxy.tar

docker load -i kube-scheduler.tar

docker load -i pause3.2.tar

docker load -i busybox.tar

Check that the images were loaded

docker images
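Listing every docker load by hand is easy to get wrong when the bundle changes. The sketch below loads every .tar in a directory instead. load_images is a hypothetical helper. The DOCKER variable defaults to the real docker CLI and can be overridden, which is mainly useful for a dry run.

```shell
#!/usr/bin/env bash
# Hypothetical helper: docker load every *.tar file in a directory.
# Usage: load_images DIR   (set DOCKER=echo for a dry run)
load_images() {
  local dir="$1" f rc=0
  for f in "$dir"/*.tar; do
    [ -e "$f" ] || continue   # no .tar files: the glob stays literal, skip it
    echo "loading $f"
    ${DOCKER:-docker} load -i "$f" || rc=1
  done
  return "$rc"
}
```

The same call works for the Calico images: load_images /opt/k8s-install-tool/calico.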

Install the K8S component RPM packages, in this order:

cd /opt/k8s-install-tool/rpm

rpm -ivh kubectl-1.18.9-0.x86_64.rpm

rpm -ivh socat-1.7.3.2-2.el7.x86_64.rpm

rpm -ivh conntrack-tools-1.4.4-7.el7.x86_64.rpm --nodeps

rpm -ivh kubelet-1.18.9-0.x86_64.rpm kubernetes-cni-0.8.7-0.x86_64.rpm

rpm -ivh kubeadm-1.18.9-0.x86_64.rpm --nodeps

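Start kubelet and enable it at boot. Until kubeadm init (or kubeadm join) has written its configuration, kubelet keeps restarting every few seconds; this is expected.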

systemctl start kubelet && systemctl enable kubelet

Install bash-completion for kubectl tab completion

cd /opt/k8s-install-tool/tool

rpm -ivh bash-completion-2.1-8.el7.noarch.rpm

source /usr/share/bash-completion/bash_completion

source <(kubectl completion bash)

echo "source <(kubectl completion bash)" >> ~/.bashrc

Initialize the cluster

kubeadm init --kubernetes-version 1.18.9 --control-plane-endpoint "haproxy-kubernetes-apiserver:9443" --pod-network-cidr=10.244.0.0/16   # note: use the VIP hostname and the HAProxy port here

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Copy /etc/kubernetes, including the cluster certificates, to the other two masters:

scp -r /etc/kubernetes/ root@192.168.10.216:/etc/

scp -r /etc/kubernetes/ root@192.168.10.217:/etc/
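The flags passed to kubeadm init can also be kept in a config file, which is easier to review and reuse. The following is a minimal sketch, assuming the kubeadm.k8s.io/v1beta2 config API that kubeadm 1.18 reads. gen_kubeadm_config is a hypothetical helper. Its values are the ones from the kubeadm init command above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a kubeadm ClusterConfiguration equivalent to
#   kubeadm init --kubernetes-version 1.18.9 \
#     --control-plane-endpoint "haproxy-kubernetes-apiserver:9443" \
#     --pod-network-cidr=10.244.0.0/16
gen_kubeadm_config() {
  cat <<'EOF'
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.18.9
controlPlaneEndpoint: "haproxy-kubernetes-apiserver:9443"
networking:
  podSubnet: "10.244.0.0/16"
EOF
}
```

Usage: gen_kubeadm_config > kubeadm-config.yaml, then kubeadm init --config kubeadm-config.yaml. Note that --config cannot be combined with most other init flags.

For an offline install, kubeadm config images list --kubernetes-version v1.18.9 shows the exact image names and tags that the loaded images must match.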

Verify the installation

At this point the node is NotReady. It becomes Ready automatically once a network plugin is installed.

kubectl get nodes

List the cluster pods. The two coredns replicas are Pending, again because no network plugin is installed yet. They switch to Running once the network plugin is up.

kubectl get pod -A

9. Install the Calico Network Plugin

Load the Calico images

cd /opt/k8s-install-tool/calico

docker load -i calico-cni.tar

docker load -i calico-kube-controllers.tar

docker load -i calico-node.tar

docker load -i calico-pod2daemon-flexvol.tar

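Apply calico.yaml and verify the result. The CALICO_IPV4POOL_CIDR value in calico.yaml should fall within the --pod-network-cidr passed to kubeadm init (10.244.0.0/16).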

kubectl apply -f calico.yaml

coredns should now be Running and the master Ready

kubectl get pod -A

kubectl get nodes
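Instead of re-running kubectl get nodes until the status flips, a small polling loop can wait for it. wait_nodes_ready is a hypothetical helper. It assumes the default kubectl get nodes output, where column 2 is STATUS. KUBECTL defaults to kubectl and can be overridden.

```shell
#!/usr/bin/env bash
# Hypothetical helper: wait until every node reports Ready, or time out.
# Usage: wait_nodes_ready [TIMEOUT_SECONDS]   (default 300)
wait_nodes_ready() {
  local timeout="${1:-300}" waited=0 out
  while :; do
    out=$(${KUBECTL:-kubectl} get nodes --no-headers 2>/dev/null)
    # Column 2 is STATUS; succeed only if the list is non-empty and every
    # status starts with "Ready" ("NotReady" does not).
    if [ -n "$out" ] && printf '%s\n' "$out" | awk '$2 !~ /^Ready/ {bad = 1} END {exit bad}'; then
      return 0
    fi
    if [ "$waited" -ge "$timeout" ]; then
      echo "timed out waiting for all nodes to be Ready" >&2
      return 1
    fi
    sleep 5
    waited=$((waited + 5))
  done
}
```

An empty node list counts as "not ready", so a kubectl that cannot reach the apiserver does not produce a false success.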

Install the calicoctl binary

cp calicoctl /usr/local/bin/. && chmod +x /usr/local/bin/calicoctl

calicoctl version

10. Other Master Nodes (216, 217)

Load all the images from the bundle and install all the RPM packages, as on 215.

Delete the surplus files copied over from 215. kubeadm join creates some of these files itself and fails if they already exist.

rm -rf /etc/kubernetes/kubelet.conf /etc/kubernetes/manifests/

rm -rf /etc/kubernetes/pki/etcd/server.* /etc/kubernetes/pki/etcd/peer.*

rm -rf /etc/kubernetes/pki/apiserver*
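The cleanup above can be wrapped in a function so that it can be tried on a scratch copy first. clean_copied_kubernetes_files is a hypothetical helper. ROOT is an optional path prefix that is empty by default, so the function then acts on /etc/kubernetes.

```shell
#!/usr/bin/env bash
# Hypothetical helper: remove the node-specific files copied from 215 before
# kubeadm join, keeping the shared CA and key material.
# Usage: clean_copied_kubernetes_files   (set ROOT=/some/dir to test on a copy)
clean_copied_kubernetes_files() {
  local k="${ROOT:-}/etc/kubernetes"
  rm -rf "$k/kubelet.conf" "$k/manifests"
  rm -f "$k"/pki/etcd/server.* "$k"/pki/etcd/peer.*
  rm -f "$k"/pki/apiserver*
  # Kept: pki/ca.*, pki/sa.*, pki/front-proxy-ca.*, pki/etcd/ca.*, which every
  # control-plane node must share.
}
```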

Start kubelet and enable it at boot

systemctl start kubelet && systemctl enable kubelet

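Join the cluster. The token and hash below are the ones printed by kubeadm init on 215; substitute your own. Tokens expire after 24 hours by default. Running kubeadm token create --print-join-command on 215 prints a fresh join command.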

kubeadm join haproxy-kubernetes-apiserver:9443 --token 5khyil.3mwqohp5gqugvoj6 \
    --discovery-token-ca-cert-hash sha256:6affedfb99ad17006f2998cd2632f846f03b7ebce12881c16e0a5bd229b77cb4 \
    --control-plane
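If the hash from the init output has been lost, it can be recomputed on 215 from the cluster CA. The value is the SHA-256 of the CA certificate's DER-encoded public key. The pipeline below is the one given in the kubeadm documentation, wrapped in a hypothetical ca_cert_hash function. It assumes the default RSA CA that kubeadm generates.

```shell
#!/usr/bin/env bash
# Print the hex value for --discovery-token-ca-cert-hash (prefix it with "sha256:").
# Usage: ca_cert_hash [CA_CERT]   (defaults to the kubeadm CA path)
ca_cert_hash() {
  local ca="${1:-/etc/kubernetes/pki/ca.crt}"
  openssl x509 -pubkey -in "$ca" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'   # drop the "(stdin)= " style prefix, keep the digest
}
```

Usage: echo "sha256:$(ca_cert_hash)" on 215.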

After kubeadm join completes, also run:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

kubectl get nodes -o wide

kubectl get pod -o wide -n kube-system

11. Install Helm 3

cd /opt/k8s-install-tool/helm

tar xf helm-v3.2.0-rc.1-linux-amd64.tar.gz

cp linux-amd64/helm /usr/local/bin/.

helm version

12. Run an Application on K8S to Check Cluster Health

Allow pods to be scheduled on the masters

kubectl taint nodes --all node-role.kubernetes.io/master-

cd /opt/k8s-install-tool/test

docker load -i nginx.tar   # load it on every node the nginx pod may be scheduled on

kubectl apply -f nginx.yaml

Wait about a minute, then open http://192.168.10.216:32111/ in a browser.
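Rather than waiting a fixed minute, the NodePort can be polled until it answers. wait_for_url is a hypothetical helper. CURL defaults to curl and can be overridden. The attempt count and interval are arbitrary defaults.

```shell
#!/usr/bin/env bash
# Hypothetical helper: poll a URL until it answers successfully.
# Usage: wait_for_url URL [ATTEMPTS] [INTERVAL_SECONDS]
wait_for_url() {
  local url="$1" attempts="${2:-30}" interval="${3:-2}" i
  for ((i = 1; i <= attempts; i++)); do
    # -f: treat HTTP errors (>= 400) as failure; -s: silent; body discarded
    if ${CURL:-curl} -fs -o /dev/null "$url"; then
      echo "up after $i attempt(s)"
      return 0
    fi
    [ "$i" -lt "$attempts" ] && sleep "$interval"
  done
  echo "no answer from $url" >&2
  return 1
}
```

Usage: wait_for_url http://192.168.10.216:32111/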

13. Change the NodePort Range (on all three masters)

vim /etc/kubernetes/manifests/kube-apiserver.yaml

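Add the following line to the kube-apiserver command arguments. The kubelet watches this static pod manifest and restarts kube-apiserver with the new setting after roughly 10 seconds. Be aware that a range of 1-65535 lets NodePort Services collide with ports that host services already use, such as 22 (sshd), 6443 (kube-apiserver) or 9443 (HAProxy).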

- --service-node-port-range=1-65535
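The manifest edit can also be scripted. The sketch below replaces the flag's value if it is already present. Otherwise it adds the flag right after the "- kube-apiserver" line, with the same indentation. set_nodeport_range is a hypothetical helper. It writes through a temporary file outside the manifests directory, because the kubelet treats every file in /etc/kubernetes/manifests as a static pod manifest.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set --service-node-port-range in the kube-apiserver manifest.
# Usage: set_nodeport_range [RANGE] [MANIFEST]
set_nodeport_range() {
  local range="${1:-1-65535}" f="${2:-/etc/kubernetes/manifests/kube-apiserver.yaml}"
  if grep -q -- '--service-node-port-range=' "$f"; then
    # Flag already present: only change its value.
    sed -i "s|--service-node-port-range=.*|--service-node-port-range=${range}|" "$f"
  else
    local tmp
    tmp=$(mktemp)   # in /tmp, not in the manifests directory
    awk -v flag="--service-node-port-range=${range}" '
      { print }
      /^ *- kube-apiserver$/ { match($0, /^ */); print substr($0, 1, RLENGTH) "- " flag }
    ' "$f" > "$tmp" && cat "$tmp" > "$f" && rm -f "$tmp"
  fi
}
```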

14. Download RPM Packages and Dependencies for Offline Use

Reference: xuwq2021's CSDN blog post "yum下载rpm包及相关依赖保存到本地" (downloading RPM packages and their dependencies to local disk with yum)

Method 1:

yum --showduplicates list kubeadm.x86_64

yum install kubectl-1.18.9-0 --downloadonly --downloaddir=.

If the package is already installed on the machine, use yum reinstall instead of yum install.

Method 2:

Install the plugin

yum install yum-plugin-downloadonly -y

Example

yum install --downloadonly --downloaddir=/usr/local libXScrnSaver

General form: yum install --downloadonly --downloaddir=<path> <package>

Method 3:

Install yumdownloader

yum install yum-utils -y

Example

yumdownloader --resolve --destdir /tmp/ansible ansible

Method 4:

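The methods above only download the main package plus whichever dependencies are missing on the current system. If the RPMs are then copied to another machine for offline installation, dependencies may well still be missing there. In that case, use repotrack, which downloads the package together with all of its dependencies.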

Install repotrack

yum -y install yum-utils

Usage

repotrack --download_path=/tmp <package>

--download_path: the download directory
