Installing Kubernetes on CentOS 7

k8s installation and application deployment

I. Introduction to k8s

II. Installing k8s

The cluster has one master node and two worker nodes.

The master needs at least 2 CPUs.

192.168.59.131 master

192.168.59.134 node01

192.168.59.135 node02

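vi /etc/sysconfig/network-scripts/ifcfg-ens33 # set the node's static IP

service NetworkManager stop # stop NetworkManager

systemctl disable NetworkManager # keep it from starting at boot

systemctl restart network # restart networking

Installing Docker (start it with systemctl start docker)

yum remove docker-ce # remove any existing version

yum install docker-ce-19.03.3 # install a pinned version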

1. Steps for every node (master and workers)

1. Set the hostname on each node

# on the master node

[root@master k8s]# hostnamectl set-hostname master

# on the worker nodes (run each command on its own node)

[root@master k8s]# hostnamectl set-hostname node01

[root@master k8s]# hostnamectl set-hostname node02

2. Append the hostname mappings to /etc/hosts

[root@master k8s]# cat >>/etc/hosts<<EOF

192.168.59.131 master

192.168.59.134 node01

192.168.59.135 node02

EOF
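Running the `cat >>` command above a second time appends the same three lines again. A minimal sketch of an idempotent alternative follows; `add_host_entry` is a hypothetical helper name, and the demo writes to a scratch file rather than /etc/hosts.

```shell
# add_host_entry FILE IP NAME: append "IP NAME" to FILE unless NAME is already listed.
add_host_entry() {
    file=$1 ip=$2 name=$3
    # -w matches NAME as a whole word; -q keeps grep silent
    if grep -qw -- "$name" "$file" 2>/dev/null; then
        return 0
    fi
    printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Demo against a scratch file; pass /etc/hosts on a real node.
hosts_file=$(mktemp)
add_host_entry "$hosts_file" 192.168.59.131 master
add_host_entry "$hosts_file" 192.168.59.134 node01
add_host_entry "$hosts_file" 192.168.59.135 node02
add_host_entry "$hosts_file" 192.168.59.131 master   # repeated call adds nothing
cat "$hosts_file"
```

The check only looks for the name, so an existing entry with a different IP is left untouched and has to be corrected by hand.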

3. Disable the firewall and SELinux

# stop the firewall

[root@master k8s]# systemctl stop firewalld

# keep it disabled at boot

[root@master k8s]# systemctl disable firewalld

# disable SELinux for the current boot

[root@master k8s]# setenforce 0

# disable it permanently: change the setting to SELINUX=disabled

[root@master k8s]# vi /etc/sysconfig/selinux
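The same change can be made without an editor. The sketch below assumes GNU sed; `set_selinux_disabled` is a hypothetical helper name, and the demo runs on a scratch file. On CentOS 7, /etc/sysconfig/selinux is a symlink to /etc/selinux/config, and `sed -i` replaces a symlink with a regular file, so point the helper at /etc/selinux/config when using it on a node.

```shell
# set_selinux_disabled FILE: rewrite the SELINUX= line in FILE to SELINUX=disabled.
set_selinux_disabled() {
    # The pattern is anchored on "SELINUX=", so SELINUXTYPE= is left alone.
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$1"
}

# Demo on a scratch file that mimics the real config.
cfg=$(mktemp)
printf 'SELINUX=enforcing\nSELINUXTYPE=targeted\n' > "$cfg"
set_selinux_disabled "$cfg"
cat "$cfg"
```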

4. Set kernel parameters: enable IP forwarding and make bridged traffic pass through iptables

# create the file

[root@master k8s]# vi /etc/sysctl.d/k8s.conf

# with the following content

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

vm.swappiness = 0

# load the bridge module, confirm it is present, and apply the settings

[root@master k8s]# modprobe br_netfilter

[root@master k8s]# ls /proc/sys/net/bridge

[root@master k8s]# sysctl -p /etc/sysctl.d/k8s.conf
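For scripted installs, the file can be written without vi and read back to confirm the values. This is a sketch only: `write_k8s_sysctl` and `conf_value` are hypothetical helper names, and the demo uses a scratch file in place of /etc/sysctl.d/k8s.conf.

```shell
# write_k8s_sysctl FILE: write the four settings from this step into FILE.
write_k8s_sysctl() {
    cat > "$1" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
vm.swappiness = 0
EOF
}

# conf_value FILE KEY: print the value assigned to KEY in FILE.
conf_value() {
    awk -F' *= *' -v key="$2" '$1 == key { print $2 }' "$1"
}

conf=$(mktemp)   # scratch file; use /etc/sysctl.d/k8s.conf on a node
write_k8s_sysctl "$conf"
conf_value "$conf" net.ipv4.ip_forward
```

On a node, the `modprobe br_netfilter` and `sysctl -p` commands above still have to run afterwards for the settings to take effect.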

5. Prerequisites for running kube-proxy in IPVS mode

# write the ipvs.modules file

[root@master k8s]# cat > /etc/sysconfig/modules/ipvs.modules <<EOF

#!/bin/bash

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack_ipv4

EOF

# make the file executable, run it, and confirm the modules are loaded

[root@master k8s]# chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep -e ip_vs
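The `lsmod | grep` output has to be checked by eye. As a rough sketch, the helper below (a hypothetical name, `missing_ipvs_modules`) reads `lsmod` output on stdin and prints only the modules from the list above that are absent; the demo feeds it canned input with made-up sizes.

```shell
# missing_ipvs_modules: read `lsmod` output on stdin and print every
# required module that does not appear in the first column.
missing_ipvs_modules() {
    loaded=$(awk 'NR > 1 { print $1 }')   # module names, header line skipped
    for mod in ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack_ipv4; do
        printf '%s\n' "$loaded" | grep -qx -- "$mod" || printf '%s\n' "$mod"
    done
}

# On a node: lsmod | missing_ipvs_modules
# Demo with canned input (sizes are placeholders):
printf 'Module Size Used by\nip_vs 145497 6 ip_vs_rr\nip_vs_rr 12600 0\n' | missing_ipvs_modules
```

Empty output means all five modules are loaded.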

6. Disable swap on all nodes

# to disable it permanently, comment out this entry: /dev/mapper/centos-swap swap swap defaults 0 0

[root@master k8s]# vi /etc/fstab

Reboot

reboot
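The fstab edit can also be scripted, and `swapoff -a` turns swap off for the current boot without waiting for the reboot. The sketch below assumes GNU sed; `comment_out_swap` is a hypothetical helper name, and the demo works on a scratch copy instead of /etc/fstab.

```shell
# comment_out_swap FILE: prefix with '#' every uncommented line in FILE
# that contains a whitespace-delimited "swap" field.
comment_out_swap() {
    sed -i -E '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$1"
}

# Demo on a scratch copy with two sample entries.
fstab=$(mktemp)
printf '%s\n' \
    '/dev/mapper/centos-root / xfs defaults 0 0' \
    '/dev/mapper/centos-swap swap swap defaults 0 0' > "$fstab"
comment_out_swap "$fstab"
cat "$fstab"
```

Lines that are already commented are skipped, so the helper can be run more than once.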

7. Install kubelet, kubeadm, and kubectl

Configure the yum repository

[root@master k8s]# cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg
       https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

Install pinned versions of kubelet, kubeadm, and kubectl

[root@master k8s]# yum install -y kubelet-1.18.2 kubeadm-1.18.2 kubectl-1.18.2

Enable kubelet at boot

[root@master k8s]# systemctl enable kubelet

Check the version

[root@master k8s]# kubelet --version

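2. Steps for the master node

1. Preparation before initializing the master

1.1. Change the Docker configuration; Kubernetes recommends "systemd" as Docker's cgroup driver

[root@master k8s]# vi /etc/docker/daemon.json

# add the following configuration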

{
  "registry-mirrors": ["https://slycxkzw.mirror.aliyuncs.com"],
  "exec-opts": ["native.cgroupdriver=systemd"],
  "max-concurrent-downloads": 10,
  "max-concurrent-uploads": 5,
  "log-opts": {
    "max-size": "300m",
    "max-file": "2"
  },
  "live-restore": true
}

# restart Docker

[root@master k8s]# systemctl daemon-reload

[root@master k8s]# systemctl restart docker

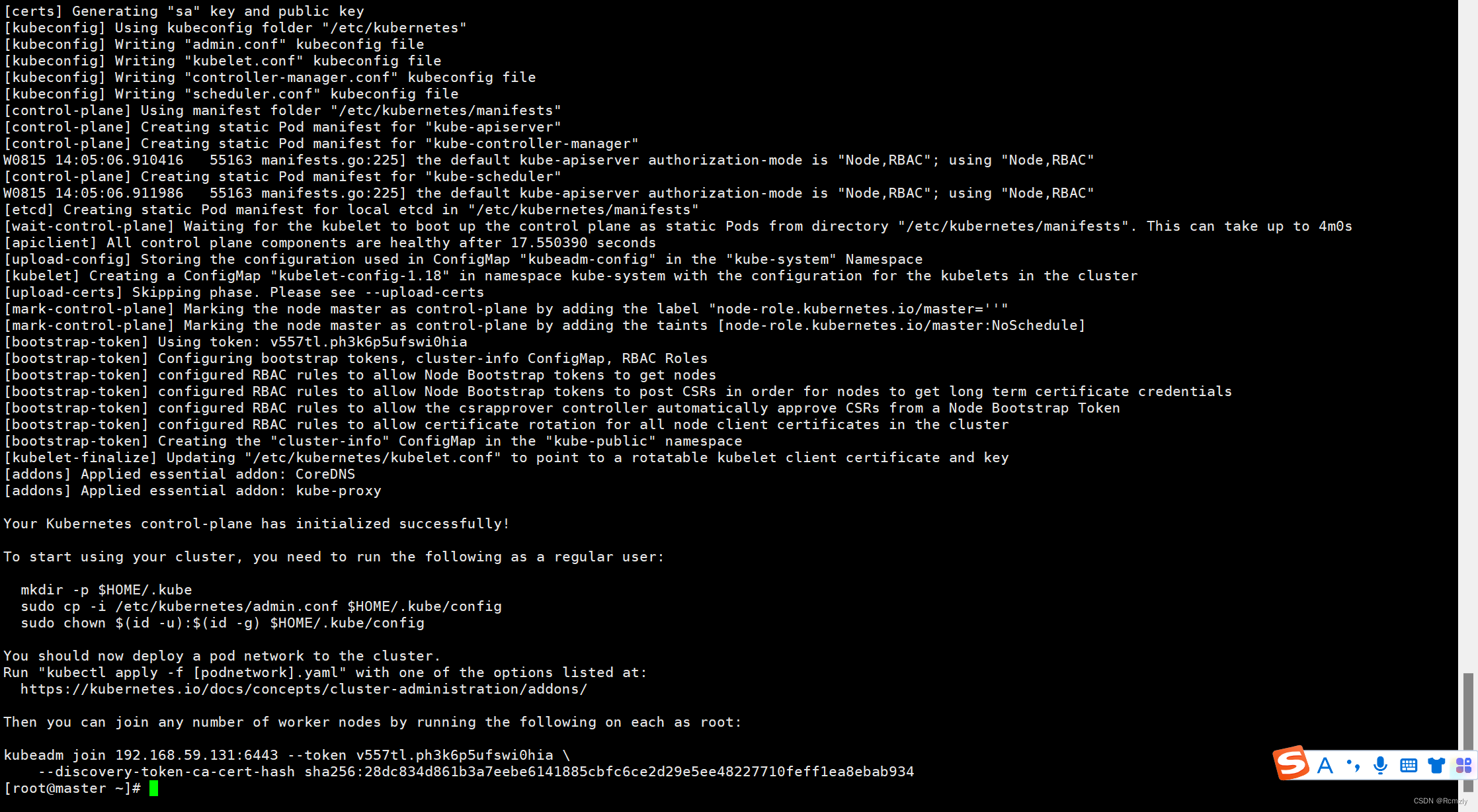

2. Run the initialization command

kubernetes-version: the version that was actually installed;

apiserver-advertise-address: the IP of the master machine;

[root@master k8s]# kubeadm init --kubernetes-version=1.18.2 \
    --apiserver-advertise-address=192.168.59.131 \
    --image-repository registry.aliyuncs.com/google_containers \
    --service-cidr=10.1.0.0/16 \
    --pod-network-cidr=10.244.0.0/16

3. When initialization finishes, it prints the join command for the nodes. Save it; the worker nodes need it to join the cluster

kubeadm join 192.168.59.131:6443 --token v557tl.ph3k6p5ufswi0hia \
    --discovery-token-ca-cert-hash sha256:28dc834d861b3a7eebe6141885cbfc6ce2d29e5ee48227710feff1ea8ebab934

4. Configure kubectl

[root@master k8s]# mkdir -p $HOME/.kube

[root@master k8s]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@master k8s]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

5. Install Calico

[root@master k8s]# mkdir k8s

[root@master k8s]# cd k8s

[root@master k8s]# wget https://docs.projectcalico.org/v3.10/getting-started/kubernetes/installation/hosted/kubernetes-datastore/calico-networking/1.7/calico.yaml --no-check-certificate

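# change Calico's default pool address to match the --pod-network-cidr passed to kubeadm init

[root@master k8s]# sed -i 's/192.168.0.0/10.244.0.0/g' calico.yaml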

[root@master k8s]# kubectl apply -f calico.yaml
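In the sed expression used for calico.yaml, the unescaped dots match any character, not only a literal dot. A slightly stricter sketch is shown below; `set_pod_cidr` is a hypothetical helper name, GNU sed is assumed, and the sample line assumes the manifest carries the pool as 192.168.0.0/16, as the sed command above implies.

```shell
# set_pod_cidr FILE OLD NEW: replace the literal address OLD with NEW in FILE.
set_pod_cidr() {
    # Escape the dots so "." matches only a literal dot.
    old_re=$(printf '%s' "$2" | sed 's/\./\\./g')
    sed -i "s/$old_re/$3/g" "$1"
}

# Demo on a scratch file holding a sample pool setting.
manifest=$(mktemp)
printf 'value: "192.168.0.0/16"\n' > "$manifest"
set_pod_cidr "$manifest" 192.168.0.0 10.244.0.0
grep -n '10\.244\.0\.0/16' "$manifest"
```

Running the final `grep` against the real calico.yaml before `kubectl apply` is a cheap way to confirm the substitution happened.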

6. Wait a few minutes, then list all Pods and make sure every one of them is in the Running state

[root@master k8s]# kubectl get pod --all-namespaces -o wide

NAMESPACE     NAME                                       READY   STATUS    RESTARTS   AGE   IP               NODE     NOMINATED NODE   READINESS GATES
kube-system   calico-kube-controllers-57546b46d6-hd54k   1/1     Running   0          17m   10.244.219.66    master   <none>           <none>
kube-system   calico-node-6mxll                          1/1     Running   0          17m   192.168.59.131   master   <none>           <none>
kube-system   calico-node-tgcsd                          1/1     Running   0          15m   192.168.59.134   node01   <none>           <none>
kube-system   calico-node-xcdjs                          1/1     Running   1          16m   192.168.59.135   node02   <none>           <none>
kube-system   coredns-7ff77c879f-7kxjn                   1/1     Running   0          21m   10.244.219.65    master   <none>           <none>
kube-system   coredns-7ff77c879f-krx9x                   1/1     Running   0          21m   10.244.219.67    master   <none>           <none>
kube-system   etcd-master                                1/1     Running   0          21m   192.168.59.131   master   <none>           <none>
kube-system   kube-apiserver-master                      1/1     Running   0          21m   192.168.59.131   master   <none>           <none>
kube-system   kube-controller-manager-master             1/1     Running   0          21m   192.168.59.131   master   <none>           <none>
kube-system   kube-proxy-9zg5g                           1/1     Running   0          15m   192.168.59.134   node01   <none>           <none>
kube-system   kube-proxy-b4mlf                           1/1     Running   1          16m   192.168.59.135   node02   <none>           <none>
kube-system   kube-proxy-znkgk                           1/1     Running   0          21m   192.168.59.131   master   <none>           <none>
kube-system   kube-scheduler-master                      1/1     Running   0          21m   192.168.59.131   master   <none>           <none>
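With this many rows it is easy to miss a Pod that is not Running. As a small sketch, the filter below (a hypothetical name, `not_running`) reads `kubectl get pod --all-namespaces` output on stdin and prints only the Pods whose STATUS column is something else; the demo uses canned input with a made-up Pending row.

```shell
# not_running: read `kubectl get pod --all-namespaces` output on stdin and
# print "namespace/name status" for every pod whose STATUS is not Running.
not_running() {
    awk 'NR > 1 && $4 != "Running" { print $1 "/" $2, $4 }'
}

# On the master: kubectl get pod --all-namespaces | not_running
# Demo with canned input (the Pending row is invented for illustration):
printf '%s\n' \
    'NAMESPACE NAME READY STATUS RESTARTS AGE' \
    'kube-system etcd-master 1/1 Running 0 21m' \
    'kube-system coredns-7ff77c879f-7kxjn 0/1 Pending 0 21m' | not_running
```

The column positions assume the `--all-namespaces` layout shown above, where STATUS is the fourth field.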

3. Steps for the worker nodes

1. Join every worker node to the cluster (run the join command on each worker)

[root@master k8s]# kubeadm join 192.168.59.131:6443 --token v557tl.ph3k6p5ufswi0hia \
    --discovery-token-ca-cert-hash sha256:28dc834d861b3a7eebe6141885cbfc6ce2d29e5ee48227710feff1ea8ebab934

2. Start kubelet

[root@master k8s]# systemctl start kubelet

3. Back on the master, list the nodes. If every STATUS is Ready, the cluster is up

[root@master k8s]# kubectl get nodes

NAME     STATUS   ROLES    AGE     VERSION
master   Ready    master   9m20s   v1.18.2
node01   Ready    <none>   3m19s   v1.18.2
node02   Ready    <none>   3m29s   v1.18.2

III. Deploying applications with k8s

Deploying nginx

1. Create the Deployment

Create the nginx.yaml file

[root@master k8syml]# vi nginx.yaml

# add the following content

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment # Deployment name
  labels:
    app: nginx
spec:
  replicas: 3 # number of replicas
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.14.2 # container image
        ports:
        - containerPort: 80 # container port

Run the following command to create the Deployment

[root@master k8syml]# kubectl apply -f nginx.yaml

deployment.apps/nginx-deployment created

2. Check the deployment status

Confirm that the Deployment was created

[root@master k8syml]# kubectl get deployments

NAME               READY   UP-TO-DATE   AVAILABLE   AGE
nginx-deployment   3/3     3            3           37m

List the Pods that were created

[root@master k8syml]# kubectl get pod -o wide

NAME                                READY   STATUS    RESTARTS   AGE   IP               NODE     NOMINATED NODE   READINESS GATES
nginx-deployment-6b474476c4-5dz6g   1/1     Running   0          39m   10.244.196.133   node01   <none>           <none>
nginx-deployment-6b474476c4-g5gqx   1/1     Running   0          39m   10.244.140.69    node02   <none>           <none>
nginx-deployment-6b474476c4-gp2jd   1/1     Running   0          39m   10.244.196.132   node01   <none>           <none>

3. Expose the application outside the cluster

Expose the service through a load balancer (MetalLB)

[root@master k8syml]# kubectl apply -f https://raw.githubusercontent.com/metallb/metallb/v0.9.3/manifests/namespace.yaml

[root@master k8syml]# kubectl apply -f https://raw.githubusercontent.com/metallb/metallb/v0.9.3/manifests/metallb.yaml

[root@master k8syml]# kubectl create secret generic -n metallb-system memberlist --from-literal=secretkey="$(openssl rand -base64 128)"

Create a config file that provides the IP pool

[root@master k8syml]# vim example-layer2-config.yaml

# add the following content

apiVersion: v1
kind: ConfigMap
metadata:
  namespace: metallb-system
  name: config
data:
  config: |
    address-pools:
    - name: default
      protocol: layer2
      addresses:
      - 192.168.59.200-192.168.59.210 # must be in the same address range as the host IPs of the cluster nodes
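The requirement that the pool share an address range with the nodes can be checked before applying the file. The sketch below assumes the node network is a /24, which the 192.168.59.x addresses used throughout suggest; `same_subnet24` is a hypothetical helper name.

```shell
# same_subnet24 IP1 IP2: succeed when both IPv4 addresses share their first three octets.
same_subnet24() {
    [ "${1%.*}" = "${2%.*}" ]
}

node_ip=192.168.59.131   # the master from this guide
for pool_ip in 192.168.59.200 192.168.59.210; do
    if same_subnet24 "$node_ip" "$pool_ip"; then
        echo "$pool_ip is on the node subnet"
    else
        echo "$pool_ip is NOT on the node subnet"
    fi
done
```

The pool addresses also need to be unused on that network, which this check does not cover.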

Apply the YAML file

[root@master k8syml]# kubectl apply -f example-layer2-config.yaml

Create the Service

[root@master k8syml]# vi nginxService.yaml

# add the following content

apiVersion: v1
kind: Service
metadata:
  name: nginx-svc
spec:
  ports:
  - name: http
    port: 13301 # port exposed outside the cluster
    protocol: TCP
    targetPort: 80 # container port
  selector:
    app: nginx
  type: LoadBalancer # how the Service is exposed

Apply the Service, then check the exposed port

[root@master k8syml]# kubectl apply -f nginxService.yaml

[root@master k8syml]# kubectl get svc

NAME         TYPE           CLUSTER-IP    EXTERNAL-IP      PORT(S)           AGE
kubernetes   ClusterIP      10.1.0.1      <none>           443/TCP           2d2h
nginx-svc    LoadBalancer   10.1.56.144   192.168.59.200   13301:31043/TCP   8s

4. Access from outside the cluster
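The address to open is the EXTERNAL-IP plus the service port from the `kubectl get svc` output, here http://192.168.59.200:13301. As a sketch, the helper below (a hypothetical name, `service_url`) builds that URL from the same output; the demo feeds it the two relevant lines as canned input.

```shell
# service_url NAME: read `kubectl get svc` output on stdin and print
# http://EXTERNAL-IP:PORT for the service called NAME.
service_url() {
    awk -v name="$1" '$1 == name {
        split($5, ports, ":")   # "13301:31043/TCP" -> ports[1] is "13301"
        print "http://" $4 ":" ports[1]
    }'
}

# On the master: kubectl get svc | service_url nginx-svc
# Demo with canned input taken from the output above:
printf '%s\n' \
    'NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE' \
    'nginx-svc LoadBalancer 10.1.56.144 192.168.59.200 13301:31043/TCP 8s' | service_url nginx-svc
```

From any machine on the 192.168.59.x network, `curl -I` against the printed URL should return the nginx response headers if the service is reachable.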

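IV. Deploying other programs

NFS sharing

Using the current environment as an example

NFS server IP: 192.168.59.131

NFS client 1 IP: 192.168.59.134

NFS client 2 IP: 192.168.59.135

Installation (run on both the server and the clients)

1. Install the NFS and RPC packages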

yum install -y nfs-utils rpcbind

2. Start the nfs and rpcbind services

systemctl start nfs

systemctl start rpcbind

3. Enable them at boot

systemctl enable nfs

systemctl enable rpcbind

Configuration (run on the server)

1. Set up the shared directory on the server

mkdir /home/nfs

vim /etc/exports

/home/nfs 192.168.59.0/24(rw,sync,no_root_squash)

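The NFS configuration file is /etc/exports

Common options:

rw: allow reads and writes

ro: read-only

sync: write data to memory and disk synchronously, so the server replies only after the write is committed

no_root_squash: a client that accesses the share as root keeps root privileges on it (the default is root_squash). Without this option, root on the client is mapped to nfsnobody and usually cannot create or edit files in the share

root_squash: a client that accesses the share as root is mapped to the anonymous user

2. Apply the configuration immediately

exportfs -r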

3. Verify the export

showmount -e localhost

Mounting the NFS share (run on the clients)

1. mount -t nfs [server IP]:[server path] [client path]

mount -t nfs 192.168.59.131:/home/nfs /home/nfs

2. Unmount

umount -fl /home/nfs
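To confirm whether the share is currently mounted on a client, the mount table can be inspected. A minimal sketch, assuming the Linux /proc/mounts layout (device, mount point, filesystem type, options); `is_nfs_mounted` is a hypothetical helper name, and the demo reads a scratch file instead of the live table.

```shell
# is_nfs_mounted MOUNTPOINT [MOUNTS_FILE]: succeed if MOUNTPOINT is listed
# with a filesystem type starting with "nfs". MOUNTS_FILE defaults to /proc/mounts.
is_nfs_mounted() {
    awk -v mp="$1" '$2 == mp && $3 ~ /^nfs/ { found = 1 } END { exit !found }' "${2:-/proc/mounts}"
}

# Demo against a scratch file that mimics /proc/mounts.
mounts=$(mktemp)
printf '%s\n' \
    '192.168.59.131:/home/nfs /home/nfs nfs4 rw,relatime 0 0' \
    '/dev/sda1 /boot xfs rw 0 0' > "$mounts"
if is_nfs_mounted /home/nfs "$mounts"; then
    echo "/home/nfs is an NFS mount"
fi
```

On a client, calling `is_nfs_mounted /home/nfs` with no second argument checks the live mount table.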

Common k8s commands

Show the status of all master and worker nodes: kubectl get nodes

List all namespaces: kubectl get ns

List the pods in a given namespace: kubectl get pods -n {namespace}

View the execution history of a pod: … {namespace}

View logs: kubectl logs --tail=1000 {pod} | less

Create a cluster resource from a config file: kubectl create -f xxx.yml

Delete a cluster resource from a config file: kubectl delete -f xxx.yml

Delete a pod: kubectl delete pod {pod} -n {namespace}

View the service of a pod: … {namespace}

Open a shell in a container: kubectl exec -it {pod} -n {namespace} -- bash
