Intro and Goal

In this blog post I would like to describe my journey with Netherite as storage provider for Azure Durable Functions and the deployment of this combination to a Kubernetes cluster. My main goal was to get things running and see where the rough edges are. Consequently, this blog post is not a guide for a production setup; it is more a first step towards one.

Remark: If you have not yet heard about Netherite as a storage provider for Azure Durable Functions, I recommend starting here 🧐: https://microsoft.github.io/durabletask-netherite/#/

Sample Code

All the code used for this blog post is available on GitHub under:

https://github.com/lechnerc77/netherite-kyma-sample

Setup

The setup for the journey is quite basic. We use the Durable Functions sample that comes with the Azure Functions extension for VS Code, with TypeScript as the language. You should be able to do the same with C#/.NET Core 3.1, but I deliberately picked a non-.NET language, as there are often some surprises when leaving the .NET area.

Code-wise we have an HTTP Starter Function that triggers the Orchestrator Function. This Function then calls an Activity Function three times with different parameter values:

import * as df from "durable-functions"

const orchestrator = df.orchestrator(function* (context) {

const outputs = []

outputs.push(yield context.df.callActivity("HelloCity", "Tokyo"))

outputs.push(yield context.df.callActivity("HelloCity", "Seattle"))

outputs.push(yield context.df.callActivity("HelloCity", "London"))

return outputs

})

export default orchestrator

The Activity Function returns a string containing the parameter handed over to the Function:

import { AzureFunction, Context } from "@azure/functions"

const activityFunction: AzureFunction = async function (context: Context): Promise<string> {

return `Hello ${context.bindings.name}!`

}

export default activityFunction
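To make the generator-based flow a bit more tangible, here is a minimal sketch that mimics it without the Durable Functions runtime. Everything in it (fakeDf, runOrchestration, the activities table) is a hypothetical stand-in for illustration. The real runtime additionally persists history and replays the orchestrator, which this sketch does not model.

```typescript
// Hypothetical stand-ins for the Durable Functions runtime, for illustration only.
type ActivityCall = { activity: string; input: string }

// Mimics the shape of context.df.callActivity: it only describes the call.
const fakeDf = {
  callActivity: (activity: string, input: string): ActivityCall => ({ activity, input }),
}

// Same control flow as the orchestrator above, written against the fake context.
function* helloOrchestrator(): Generator<ActivityCall, string[], string> {
  const outputs: string[] = []
  outputs.push(yield fakeDf.callActivity("HelloCity", "Tokyo"))
  outputs.push(yield fakeDf.callActivity("HelloCity", "Seattle"))
  outputs.push(yield fakeDf.callActivity("HelloCity", "London"))
  return outputs
}

// Stand-in for the activity function: returns a greeting for the given city.
const activities: Record<string, (city: string) => string> = {
  HelloCity: (city) => `Hello ${city}!`,
}

// Tiny driver: execute each yielded call and feed the result back into the generator.
function runOrchestration(gen: Generator<ActivityCall, string[], string>): string[] {
  let step = gen.next()
  while (!step.done) {
    const call = step.value
    const handler = activities[call.activity]
    if (!handler) throw new Error(`Unknown activity: ${call.activity}`)
    step = gen.next(handler(call.input))
  }
  return step.value
}

const greetings = runOrchestration(helloOrchestrator())
console.log(greetings)
```

Each yield hands control back to the driver, which is why a storage provider that loses track of the orchestration position between yields stops the whole flow.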

This setup runs on the "usual" Azure Storage. Now let us bring that over to Netherite.

Transfer to Netherite

As Netherite is not yet supported via the extension bundle mechanism, we remove the extension bundle section from the host.json and add the extension for Netherite via:

func extensions install --package Microsoft.Azure.DurableTask.Netherite --version 0.5.0-alpha

This gives us an extensions.csproj file containing the relevant dependencies.

In addition, we add a configuration in the host.json file to make the host aware of the new storage provider:

"extensions": {

"durableTask": {

"hubName": "HelloNetherite",

"useGracefulShutdown": true,

"storageProvider": {

"type": "Netherite",

"StorageConnectionName": "AzureWebJobsStorage",

"EventHubsConnectionName": "EventHubsConnection",

"CacheOrchestrationCursors": "false"

}

}

}

⚠ Be aware of the parameter "CacheOrchestrationCursors": "false": this setting is necessary for the non-.NET world to keep the orchestration running. Otherwise your processing will abort after the first yield (see https://github.com/microsoft/durabletask-netherite/issues/69).

This is a very lean configuration that makes things work, but much more fine-tuning is possible, as laid out in the Netherite documentation.
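Since a forgotten setting here only shows up at runtime, a small sanity check can help. The following is a minimal sketch of such a check; checkNetheriteConfig and the HostJson type are hypothetical helpers, not part of the Functions tooling, and they only cover the settings discussed in this post.

```typescript
// Hypothetical helper: sanity-check a parsed host.json for the settings from this post.
type HostJson = {
  extensionBundle?: unknown
  extensions?: {
    durableTask?: {
      hubName?: string
      useGracefulShutdown?: boolean
      storageProvider?: Record<string, unknown>
    }
  }
}

function checkNetheriteConfig(host: HostJson): string[] {
  const problems: string[] = []

  // The post removes the extension bundle section before installing Netherite.
  if (host.extensionBundle !== undefined) {
    problems.push("extensionBundle section is still present")
  }

  const provider = host.extensions?.durableTask?.storageProvider
  if (provider === undefined) {
    problems.push("extensions.durableTask.storageProvider is missing")
    return problems
  }

  if (provider["type"] !== "Netherite") {
    problems.push('storageProvider.type is not "Netherite"')
  }

  for (const key of ["StorageConnectionName", "EventHubsConnectionName"]) {
    const value = provider[key]
    if (typeof value !== "string" || value === "") {
      problems.push(`storageProvider.${key} is not set`)
    }
  }

  // Accept both the string "false" (as used in the post) and the boolean false.
  if (String(provider["CacheOrchestrationCursors"]).toLowerCase() !== "false") {
    problems.push("CacheOrchestrationCursors should be false for non-.NET workers")
  }

  return problems
}

const sampleHost: HostJson = {
  extensions: {
    durableTask: {
      hubName: "HelloNetherite",
      useGracefulShutdown: true,
      storageProvider: {
        type: "Netherite",
        StorageConnectionName: "AzureWebJobsStorage",
        EventHubsConnectionName: "EventHubsConnection",
        CacheOrchestrationCursors: "false",
      },
    },
  },
}

console.log(checkNetheriteConfig(sampleHost))
```

For the configuration shown above the list of problems comes back empty.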

Try out locally

As a first test we run the function locally, so we must adjust the local.settings.json to point to the local storage emulator. In addition (and in contrast to “classical” Durable Functions) we also need to specify the connection to the Event Hub, which for local execution points to an in-memory emulation:

{

"IsEncrypted": false,

"Values": {

"AzureWebJobsStorage": "UseDevelopmentStorage=true",

"EventHubsConnection": "MemoryF",

"FUNCTIONS_WORKER_RUNTIME": "node"

}

}

As storage emulator we use Azurite, i.e. the Azurite VS Code extension.

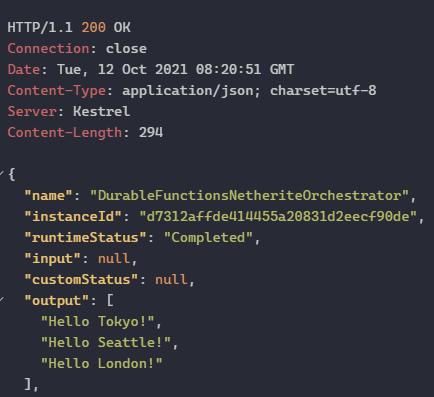

Executing the Function should produce the expected output:

Build the Docker Image and run it

As the local setup works, it's time to build the Docker image with the Azure Function inside. The func CLI helps us with that via:

func init --docker-only

This command creates the Dockerfile as well as the .dockerignore file.

While running, the Azurite VS Code extension creates several files in the project folder. To avoid copying them into the Docker image, we put the following lines into the .dockerignore file:

__azurite_db*__.json

__blobstorage__

__queuestorage__

With that we can build the image. We use a Makefile for the build and push that looks like this:

RELEASE=0.0.1

APP=containered_netherite

DOCKER_ACCOUNT=<YOUR DOCKER ACCOUNT NAME>

CONTAINER_IMAGE=${DOCKER_ACCOUNT}/${APP}:${RELEASE}

.PHONY: build-image push-image

build-image:
	docker build -t $(CONTAINER_IMAGE) --no-cache --rm .

push-image: build-image
	docker push $(CONTAINER_IMAGE)

To validate that the container is running as expected, we create the necessary resources on Azure using the scripts provided in the samples of the Netherite repository (https://github.com/microsoft/durabletask-netherite/tree/main/samples/scripts), namely the init.ps1 script, which creates the Azure storage as well as the Event Hub. Before running it, make sure that you have adjusted the settings.ps1 file as needed; in particular, put in a fitting name (or names) for the resources.

After successful creation of the resources we take the connection strings available (e.g. in the Azure portal) for the two resources and put them in an env.list file. This makes the injection into the container easier.

We also put the command to start the container in the Makefile to have everything around Docker in one place:

docker run --env-file env.list -it -p 8080:80 $(CONTAINER_IMAGE)

When we spin up the container we will see … an error:

Microsoft.Azure.WebJobs.Host.FunctionInvocationException: Exception while executing function:

---> System.InvalidOperationException: Webhooks are not

at Microsoft.Azure.WebJobs.Extensions.DurableTask.HttpApiHandler.ThrowIfWebhooksNotConfigured()

Hmm … doing some serious senior developer research to figure that out 🤪 aka google-fu and searching on Stack Overflow, I came across this: https://stackoverflow.com/questions/64400695/azure-durable-function-httpstart-failure-webhooks-are-not-configured/64404153#64404153

So we need to inject one more parameter into the container to get things going, namely WEBSITE_HOSTNAME. The parameter must point to the HTTP hostname used by the Azure Function.

For the local execution via Docker this means adding the following line to the env.list:

WEBSITE_HOSTNAME=localhost:8080
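As a missing entry in env.list only surfaces once the container is running, it can be worth checking the file up front. Below is a minimal sketch of such a check. It assumes that env.list uses the variable names AzureWebJobsStorage and EventHubsConnection referenced in the host.json; parseEnvList and missingKeys are hypothetical helpers, and the connection strings are shortened to placeholders.

```typescript
// Variable names assumed from host.json and the WEBSITE_HOSTNAME fix described above.
const REQUIRED_KEYS = ["AzureWebJobsStorage", "EventHubsConnection", "WEBSITE_HOSTNAME"]

// Hypothetical helper: parse KEY=VALUE lines, skipping blank lines and # comments.
function parseEnvList(content: string): Map<string, string> {
  const entries = new Map<string, string>()
  for (const rawLine of content.split(/\r?\n/)) {
    const line = rawLine.trim()
    if (line === "" || line.startsWith("#")) continue
    // Split on the FIRST "=" only: connection strings contain further "=" characters.
    const separator = line.indexOf("=")
    if (separator <= 0) continue // lines without a key or without "=" are ignored in this sketch
    entries.set(line.slice(0, separator), line.slice(separator + 1))
  }
  return entries
}

// Hypothetical helper: report required keys that are absent or empty.
function missingKeys(entries: Map<string, string>, required: string[]): string[] {
  return required.filter((key) => !entries.has(key) || entries.get(key) === "")
}

const sampleEnvList = [
  "# connection strings shortened to placeholders",
  "AzureWebJobsStorage=DefaultEndpointsProtocol=https;AccountName=<account>;AccountKey=<key>",
  "EventHubsConnection=Endpoint=sb://<namespace>.servicebus.windows.net/;SharedAccessKeyName=<name>;SharedAccessKey=<key>",
].join("\n")

const envEntries = parseEnvList(sampleEnvList)
console.log(missingKeys(envEntries, REQUIRED_KEYS)) // [ 'WEBSITE_HOSTNAME' ]
```

In a real project the content would be read from the env.list file instead of an inline string.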

With this adjustment the Docker container works as expected. We now push it to the Docker registry via the corresponding command in the Makefile.

After that let us move to the logical next step and bring the thing to Kubernetes.

Deploy it to Kubernetes … ehh Kyma

I am using Kyma as an opinionated stack on top of Kubernetes (to be precise, on a Gardener cluster) for this exercise. The setup should be similar for vanilla stacks, but the API gateway configuration must be adjusted depending on what you use.

Remark: In case you want to try out Kyma you can do so for free via the SAP Business Technology Platform trial.

For the deployment to Kyma we need the following files:

- deployment.yaml: containing your app aka container, the references to the config map and the secrets, and the service
- secrets.yaml: containing the connection strings to the Azure storage and the Event Hub
- apirule.yaml: containing the configuration of the API Gateway provided by Kyma to expose the HTTP endpoint. Attention - the API rule has no authentication in it!

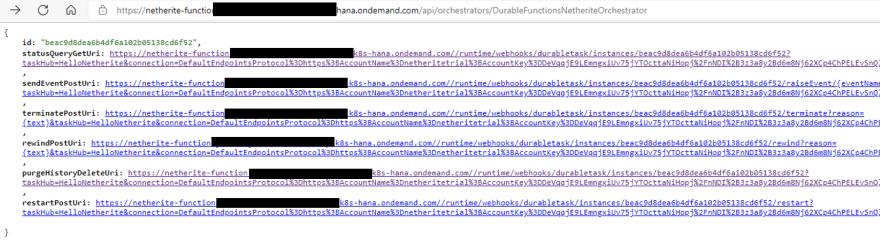

After applying those files to the Kyma cluster via kubectl apply -f, we need to look up the endpoint at which the API is hosted in the Kyma Dashboard and add it to the configmap.yaml as the value of the WEBSITE_HOSTNAME parameter. After applying this last file, the setup should be up and running. Giving it a try, we see output like this when the Starter Function is executed:

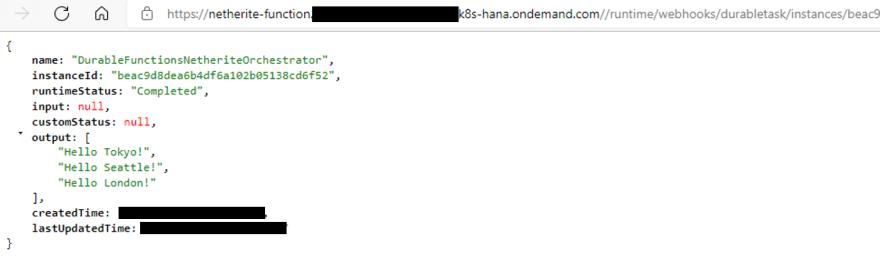

Navigating to the status URI we will see the expected result:

Mission accomplished 🥳: Durable Functions with Netherite as storage provider are up and running on Kyma (Kubernetes)!
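Instead of navigating to the status URI by hand, the check can also be scripted. The following is a minimal sketch of a polling loop. It assumes, based on my understanding of the Durable Functions HTTP API, that the status response carries a runtimeStatus field and, once finished, an output field. waitForCompletion and StatusFetcher are hypothetical names, and the HTTP call is injected so that the sketch runs without a deployed function.

```typescript
// Assumed shape of the status response (only the fields used here).
type OrchestrationStatus = { runtimeStatus: string; output?: unknown }

// Injected function that fetches the status, e.g. via an HTTP GET on the status URI.
type StatusFetcher = (statusUri: string) => Promise<OrchestrationStatus>

// States after which polling no longer makes sense (assumption, not an exhaustive list).
const TERMINAL_STATES = new Set(["Completed", "Failed", "Terminated"])

// Hypothetical helper: poll until a terminal state is reached or attempts run out.
async function waitForCompletion(
  statusUri: string,
  fetchStatus: StatusFetcher,
  maxAttempts = 10,
  delayMs = 1000,
): Promise<OrchestrationStatus> {
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    const current = await fetchStatus(statusUri)
    if (TERMINAL_STATES.has(current.runtimeStatus)) return current
    await new Promise<void>((resolve) => setTimeout(resolve, delayMs))
  }
  throw new Error(`Orchestration not finished after ${maxAttempts} attempts`)
}

// Demo with a fake fetcher that reports "Running" twice before completing.
let demoCalls = 0
const fakeFetchStatus: StatusFetcher = async () => {
  demoCalls++
  return demoCalls < 3
    ? { runtimeStatus: "Running" }
    : { runtimeStatus: "Completed", output: ["Hello Tokyo!", "Hello Seattle!", "Hello London!"] }
}

waitForCompletion("<status URI from the starter response>", fakeFetchStatus, 5, 10).then(
  (finalStatus) => console.log(finalStatus.runtimeStatus, finalStatus.output),
)
```

Against the real deployment, the fetcher would issue a GET request to the status URI returned by the Starter Function and parse the JSON body.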

Cleanup

As the resources on Azure will cost money you can clean things up using the script delete.ps1 from the GitHub repository which will delete the complete resource group on Azure.

Be aware that when you set the services up again, you need to exchange the connection strings in the secrets.yaml file and re-apply it to your Kubernetes deployment.

Summary

Although Netherite is still in alpha, you can already get your hands dirty with it. In this blog post we went through a scenario where Durable Functions using this storage provider are deployed to Kubernetes. Apart from two small obstacles we had to overcome, the setup works as expected.

However, this is just the very first step when using Netherite and there are some more steps to move forward to achieve a production grade setup.

One future topic that certainly makes sense is the integration of the Azure services in a more “natural” way into the Kubernetes cluster and not just via calls to the outside world. So, stuff for more blog posts - see you then 🤠
