Kubernetes 1.7 Cluster Creation Process


Preface

For what Kubernetes is and a general introduction to it, search online or see my other blog post, "Kubernetes (k8s) Cluster Setup".

1. Lab Environment

OS: Red Hat Enterprise Linux Server release 7.2 (Maipo)

| hostname | ip | role |
|---|---|---|
| server2 | 172.25.27.2 | k8s-master |
| server3 | 172.25.27.3 | k8s-node1 |
| server4 | 172.25.27.4 | k8s-node2 |

- Disable the firewall and SELinux

[root@server2 ~]# vim env.sh

#!/bin/bash

systemctl disable firewalld

systemctl stop firewalld

sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

iptables -F

setenforce 0

[root@server2 ~]# chmod +x env.sh

[root@server2 ~]# ./env.sh

[root@server2 ~]# scp env.sh server3:

[root@server2 ~]# scp env.sh server4:

[root@server3 ~]# ./env.sh

[root@server4 ~]# ./env.sh

2. Download and Install Kubernetes

- Use the Alibaba Cloud (Aliyun) yum mirrors

## Docker yum repository

cat >> /etc/yum.repos.d/docker.repo <<EOF

[docker-repo]

name=Docker Repository

baseurl=http://mirrors.aliyun.com/docker-engine/yum/repo/main/centos/7

enabled=1

gpgcheck=0

EOF

## Kubernetes yum repository

cat >> /etc/yum.repos.d/kubernetes.repo <<EOF

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=0

EOF

- Install Docker

Kubernetes 1.6 has not yet been tested and validated against Docker 1.13 or the latest Docker 17.03, so this guide installs Docker 1.12, the version officially recommended for Kubernetes.

[root@server2 ~]# yum list docker-engine --showduplicates ## list the available docker versions

[root@server2 ~]# yum install -y docker-engine-1.12.6-1.el7.centos.x86_64 ## install docker

Installing it this way on its own fails: docker-engine-selinux-1.12.6-1.el7.centos.noarch must be installed at the same time, as follows:

[root@server2 ~]# yum install -y docker-engine-1.12.6-1.el7.centos.x86_64 docker-engine-selinux-1.12.6-1.el7.centos.noarch

## Alternatively, download both packages and install them together

[root@server2 ~]# wget https://mirrors.aliyun.com/docker-engine/yum/repo/main/centos/7/Packages/docker-engine-1.12.6-1.el7.centos.x86_64.rpm

[root@server2 ~]# wget https://mirrors.aliyun.com/docker-engine/yum/repo/main/centos/7/Packages/docker-engine-selinux-1.12.6-1.el7.centos.noarch.rpm

[root@server2 ~]# yum install -y docker-engine-1.12.6-1.el7.centos.x86_64.rpm docker-engine-selinux-1.12.6-1.el7.centos.noarch.rpm

- Install Kubernetes:

[root@server2 ~]# yum list kubeadm --showduplicates

[root@server2 ~]# yum list kubernetes-cni --showduplicates

[root@server2 ~]# yum list kubelet --showduplicates

[root@server2 ~]# yum list kubectl --showduplicates

## The commands above list the available versions; pick suitable versions to install

[root@server2 ~]# yum install -y kubernetes-cni-0.5.1-0.x86_64 kubelet-1.7.2-0.x86_64 kubectl-1.7.2-0.x86_64 kubeadm-1.7.2-0.x86_64

3. Download the Kubernetes Images

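- Script to pull and push the images. It pulls each image from gcr.io, retags it under an Aliyun registry namespace, and pushes it there. This requires access to gcr.io and a prior docker login to the Aliyun registry with push rights to that namespace.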

[root@server2 ~]# vim images.sh

#!/bin/bash

set -o errexit

set -o nounset

set -o pipefail

KUBE_VERSION=v1.7.2

KUBE_PAUSE_VERSION=3.0

ETCD_VERSION=3.0.17

DNS_VERSION=1.14.4

GCR_URL=gcr.io/google_containers

ALIYUN_URL=registry.cn-hangzhou.aliyuncs.com/szss_k8s

images=(kube-proxy-amd64:${KUBE_VERSION}

kube-scheduler-amd64:${KUBE_VERSION}

kube-controller-manager-amd64:${KUBE_VERSION}

kube-apiserver-amd64:${KUBE_VERSION}

pause-amd64:${KUBE_PAUSE_VERSION}

etcd-amd64:${ETCD_VERSION}

k8s-dns-sidecar-amd64:${DNS_VERSION}

k8s-dns-kube-dns-amd64:${DNS_VERSION}

k8s-dns-dnsmasq-nanny-amd64:${DNS_VERSION})

for imageName in "${images[@]}"; do

docker pull $GCR_URL/$imageName

docker tag $GCR_URL/$imageName $ALIYUN_URL/$imageName

docker push $ALIYUN_URL/$imageName

docker rmi $ALIYUN_URL/$imageName

done

[root@server2 ~]# systemctl start docker

[root@server2 ~]# chmod +x images.sh

[root@server2 ~]# ./images.sh

4. Configure kubelet

- Configure the pod infrastructure (pause) image

[root@server2 ~]# vim /etc/systemd/system/kubelet.service.d/10-kubeadm.conf

Environment="KUBELET_EXTRA_ARGS=--pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/szss_k8s/pause-amd64:3.0" ## add this line in the [Service] section

## To avoid mixing things up, you can put the setting in a separate drop-in file instead; use only one of the two approaches

[root@server2 ~]# cat > /etc/systemd/system/kubelet.service.d/20-pod-infra-image.conf <<EOF

[Service]

Environment="KUBELET_EXTRA_ARGS=--pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/szss_k8s/pause-amd64:3.0"

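EOF

- Set the cgroup driver. kubelet's --cgroup-driver must match the cgroup driver Docker uses. docker-engine 1.12 uses cgroupfs by default, while the packaged 10-kubeadm.conf sets systemd, so switch kubelet to cgroupfs: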

[root@server2 ~]# sed -i.bak 's/cgroup-driver=systemd/cgroup-driver=cgroupfs/g' /etc/systemd/system/kubelet.service.d/10-kubeadm.conf

5. Start the Related Components

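[root@server2 ~]# systemctl daemon-reload ## reload systemd so the edited kubelet drop-ins take effect

[root@server2 ~]# systemctl enable docker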

[root@server2 ~]# systemctl enable kubelet

[root@server2 ~]# systemctl start docker

[root@server2 ~]# systemctl start kubelet
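Once Docker is running, it is worth confirming that the cgroup-driver edit from section 4 actually matches Docker. Below is a minimal sketch that reads the two values and compares them. It assumes `docker info` prints a `Cgroup Driver:` line, which Docker 1.12 does, and that kubelet gets its driver from a `--cgroup-driver=` flag in the drop-in. `check_cgroup_driver` is a hypothetical helper. Its inputs are files, so the check can also be tried on saved text.

```shell
#!/bin/bash
# Compare Docker's cgroup driver with the one kubelet is configured to use.
#   $1 - file containing `docker info` output
#   $2 - kubelet drop-in (normally /etc/systemd/system/kubelet.service.d/10-kubeadm.conf)
# Returns 0 on match, 1 on mismatch, 2 if either value cannot be found.
check_cgroup_driver() {
    local docker_info_file=$1 kubelet_conf=$2
    local docker_driver kubelet_driver

    # Docker prints a line such as "Cgroup Driver: cgroupfs"
    docker_driver=$(awk -F': ' '/^ *Cgroup Driver:/ {print $2; exit}' "$docker_info_file")
    # kubelet reads the driver from a flag such as --cgroup-driver=cgroupfs
    kubelet_driver=$(grep -o 'cgroup-driver=[a-z]*' "$kubelet_conf" | head -n 1 | cut -d= -f2)

    if [ -z "$docker_driver" ] || [ -z "$kubelet_driver" ]; then
        echo "could not determine both drivers (docker='$docker_driver', kubelet='$kubelet_driver')" >&2
        return 2
    fi
    if [ "$docker_driver" = "$kubelet_driver" ]; then
        echo "OK: both use $docker_driver"
        return 0
    fi
    echo "MISMATCH: docker=$docker_driver kubelet=$kubelet_driver" >&2
    return 1
}

# On the master or a node:
#   docker info > /tmp/docker-info.txt
#   check_cgroup_driver /tmp/docker-info.txt /etc/systemd/system/kubelet.service.d/10-kubeadm.conf
```

A mismatch usually shows up as kubelet failing to start. After changing the drop-in, run systemctl daemon-reload and restart kubelet.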

6. Create the Cluster

First run init on the master. --apiserver-advertise-address is the master's IP. The range given by --pod-network-cidr must match the one configured in kube-flannel.yml, which is used below when installing flannel.

[root@server2 ~]# export KUBE_REPO_PREFIX="registry.cn-hangzhou.aliyuncs.com/szss_k8s"

[root@server2 ~]# export KUBE_ETCD_IMAGE="registry.cn-hangzhou.aliyuncs.com/szss_k8s/etcd-amd64:3.0.17"

[root@server2 ~]# echo 1 > /proc/sys/net/bridge/bridge-nf-call-iptables
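Writing to /proc only lasts until the next reboot. Below is a minimal sketch that persists the same setting through a sysctl drop-in, assuming the standard /etc/sysctl.d directory that RHEL 7 reads at boot. The file name k8s.conf and the helper `persist_bridge_nf` are hypothetical. The directory is a parameter, so the function can be tried without root.

```shell
#!/bin/bash
# Persist net.bridge.bridge-nf-call-iptables = 1 so it survives a reboot.
#   $1 - sysctl drop-in directory (default /etc/sysctl.d)
# Prints the path of the file it wrote.
persist_bridge_nf() {
    local dir=${1:-/etc/sysctl.d}
    local file="$dir/k8s.conf"   # hypothetical name; any *.conf in this directory is read
    mkdir -p "$dir"
    # Overwrite rather than append, so running it twice does not duplicate the line
    printf 'net.bridge.bridge-nf-call-iptables = 1\n' > "$file"
    echo "$file"
}

# On every machine in the cluster (as root):
#   persist_bridge_nf && sysctl --system
```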

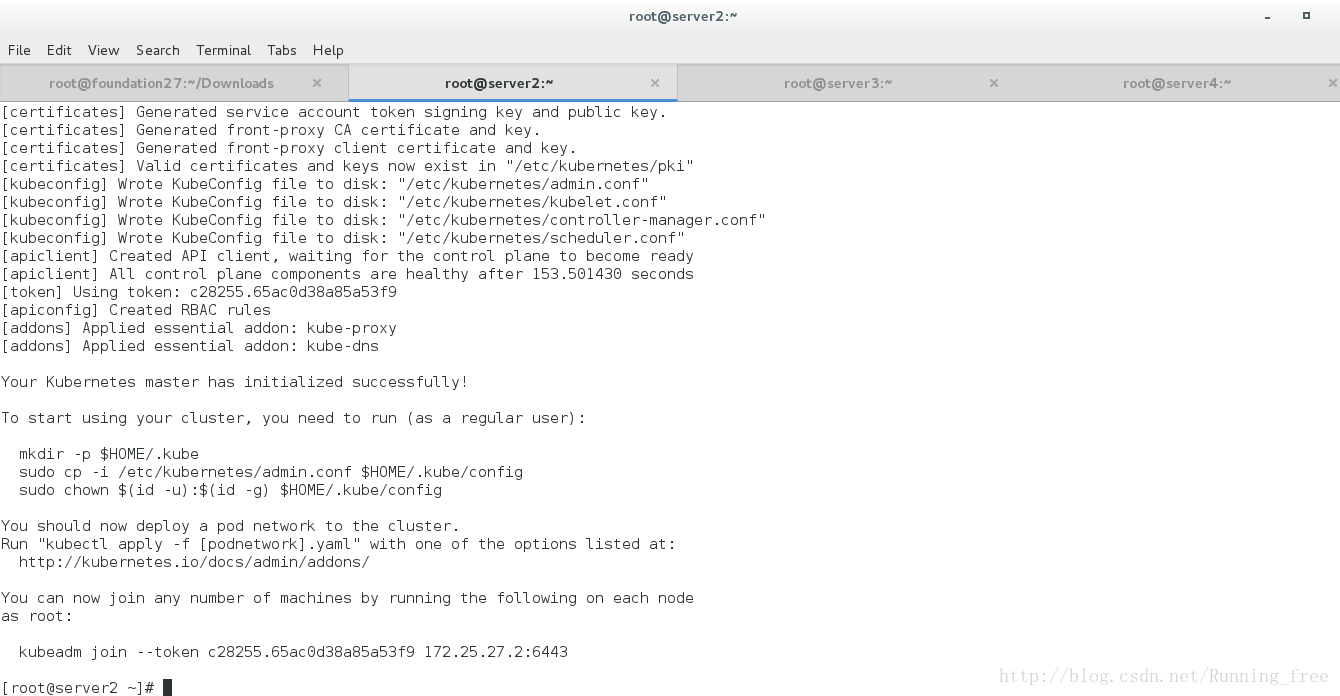

[root@server2 ~]# kubeadm init --apiserver-advertise-address=172.25.27.2 --kubernetes-version=v1.7.2 --pod-network-cidr=172.25.0.0/16

Output:

[kubeadm] WARNING: kubeadm is in beta, please do not use it for production clusters.

[init] Using Kubernetes version: v1.7.2

[init] Using Authorization modes: [Node RBAC]

[preflight] Running pre-flight checks

[kubeadm] WARNING: starting in 1.8, tokens expire after 24 hours by default (if you require a non-expiring token use --token-ttl 0)

[certificates] Generated CA certificate and key.

[certificates] Generated API server certificate and key.

[certificates] API Server serving cert is signed for DNS names [server2 kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 172.25.27.2]

[certificates] Generated API server kubelet client certificate and key.

[certificates] Generated service account token signing key and public key.

[certificates] Generated front-proxy CA certificate and key.

[certificates] Generated front-proxy client certificate and key.

[certificates] Valid certificates and keys now exist in "/etc/kubernetes/pki"

[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/admin.conf"

[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/kubelet.conf"

[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/controller-manager.conf"

[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/scheduler.conf"

[apiclient] Created API client, waiting for the control plane to become ready

[apiclient] All control plane components are healthy after 153.501430 seconds

[token] Using token: c28255.65ac0d38a85a53f9

[apiconfig] Created RBAC rules

[addons] Applied essential addon: kube-proxy

[addons] Applied essential addon: kube-dns

Your Kubernetes master has initialized successfully!

To start using your cluster, you need to run (as a regular user):

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

http://kubernetes.io/docs/admin/addons/

You can now join any number of machines by running the following on each node

as root:

kubeadm join --token c28255.65ac0d38a85a53f9 172.25.27.2:6443

- The kubelet runs the API server, controller manager, scheduler, and the other control-plane components as static pods, generated from the manifests in the /etc/kubernetes/manifests directory.

[root@server2 ~]# ls /etc/kubernetes/manifests

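etcd.yaml kube-apiserver.yaml kube-controller-manager.yaml kube-scheduler.yaml

- The kube-apiserver.yaml file shows the flags used to start kube-apiserver, along with its image, health-check probes, QoS settings, and so on. You can customize these by editing the file: kubelet watches it and recreates the pod as soon as it changes. You can also pull the image ahead of time to speed up deployment.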

- In kube-apiserver.yaml, the --insecure-port flag is set to 0. This means kube-apiserver does not listen on the default HTTP port 8080, only on HTTPS port 6443:

[root@server2 ~]# netstat -nltp | grep 6443

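tcp6 0 0 :::6443 :::* LISTEN 2883/kube-apiserver

- Note: at this point kube-dns already runs as a pod but stays in Pending. This is mainly because the flannel pod network has not been deployed yet. In addition, Master Isolation leaves kube-dns with no node to run on, because kubeadm taints the master so that ordinary pods are not scheduled on it. Either joining a node or removing Master Isolation lets kube-dns start and reach Running.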
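For a small test cluster where ordinary pods should be allowed on the master, kubeadm's documentation for this release line removes the master taint with kubectl taint nodes --all node-role.kubernetes.io/master-. Below is a minimal sketch that wraps this for a single node, behind a dry-run switch so the commands can be reviewed before they touch the cluster. The `run` wrapper, `untaint_master`, and the `DRY_RUN` variable are hypothetical.

```shell
#!/bin/bash
# Allow ordinary pods on the master by removing kubeadm's master taint.
# DRY_RUN=1 (the default here) only prints the commands; set DRY_RUN=0 to run them.
DRY_RUN=${DRY_RUN:-1}

run() {
    if [ "$DRY_RUN" = 1 ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

#   $1 - master node name
untaint_master() {
    # Inspect the node first; its Taints field should show node-role.kubernetes.io/master:NoSchedule
    run kubectl describe node "$1"
    # The trailing "-" removes the taint
    run kubectl taint nodes "$1" node-role.kubernetes.io/master-
}

# untaint_master server2            # preview
# DRY_RUN=0 untaint_master server2  # apply
```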

Note

- Save the line "kubeadm join --token c28255.65ac0d38a85a53f9 172.25.27.2:6443" printed at the end of kubeadm init, because nodes use it to join the cluster. The token can also be retrieved with kubeadm token list.

[root@server2 ~]# kubeadm token list

TOKEN TTL EXPIRES USAGES DESCRIPTION

c28255.65ac0d38a85a53f9 <forever> <never> authentication,signing The default bootstrap token generated by 'kubeadm init'.
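The join command is printed only once, so it helps to keep the init output. Below is a minimal sketch, assuming the output was saved with tee (for example `kubeadm init ... | tee kubeadm-init.log`; the log file name is hypothetical). It pulls the kubeadm join line back out of the log. It can also rebuild the line from the first token shown by kubeadm token list. All three function names are hypothetical.

```shell
#!/bin/bash
# Print the "kubeadm join" command recorded in a saved kubeadm init log.
#   $1 - log file written by: kubeadm init ... | tee kubeadm-init.log
join_command_from_log() {
    # The join line is indented in the init output; strip the leading whitespace
    grep -m 1 'kubeadm join --token' "$1" | sed 's/^[[:space:]]*//'
}

# Print the first token from `kubeadm token list` output on stdin (skips the header row).
first_token() {
    awk 'NR > 1 && NF > 0 {print $1; exit}'
}

# Rebuild the join command from a token and the API server address.
#   $1 - token, $2 - host:port of the API server
join_command_from_token() {
    printf 'kubeadm join --token %s %s\n' "$1" "$2"
}

# On the master:
#   join_command_from_token "$(kubeadm token list | first_token)" 172.25.27.2:6443
```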

7. Configure kubeconfig for kubectl

## all taken from the init output above

[root@server2 ~]# mkdir -p $HOME/.kube

[root@server2 ~]# cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@server2 ~]# chown $(id -u):$(id -g) $HOME/.kube/config

- Pods in all namespaces (this output was captured after flannel, installed in section 8, was already running):


[root@server2 ~]# kubectl get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system etcd-server2 1/1 Running 0 31m

kube-system kube-apiserver-server2 1/1 Running 0 31m

kube-system kube-controller-manager-server2 1/1 Running 0 32m

kube-system kube-dns-2030594980-htbvk 3/3 Running 0 31m

kube-system kube-flannel-ds-w3h4h 2/2 Running 0 24m

kube-system kube-proxy-k38m7 1/1 Running 0 31m

kube-system kube-scheduler-server2 1/1 Running 0 32m

8. Install flannel

- Install flannel on the master node

[root@server2 ~]# kubectl --namespace kube-system apply -f https://raw.githubusercontent.com/coreos/flannel/v0.8.0/Documentation/kube-flannel-rbac.yml

clusterrole "flannel" created

clusterrolebinding "flannel" created

[root@server2 ~]# wget https://raw.githubusercontent.com/coreos/flannel/v0.8.0/Documentation/kube-flannel.yml

[root@server2 ~]# sed -i 's/quay.io\/coreos\/flannel:v0.8.0-amd64/registry.cn-hangzhou.aliyuncs.com\/szss_k8s\/flannel:v0.8.0-amd64/g' ./kube-flannel.yml
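The pod CIDR passed to kubeadm init must match the "Network" value in the net-conf.json section of kube-flannel.yml, but the sed above only changes the image. Below is a minimal sketch for setting the Network value before the apply. It assumes the downloaded file contains a single `"Network": "<cidr>"` entry; the upstream manifest's default is 10.244.0.0/16. `set_flannel_network` is a hypothetical helper.

```shell
#!/bin/bash
# Set flannel's "Network" in kube-flannel.yml to the CIDR given to kubeadm init.
#   $1 - path to kube-flannel.yml
#   $2 - pod network CIDR, e.g. the value of --pod-network-cidr
# Returns non-zero if the file does not contain the new value afterwards.
set_flannel_network() {
    local file=$1 cidr=$2
    # Replace whatever CIDR currently sits in the "Network" field ('#' as delimiter because CIDRs contain '/')
    sed -i -E "s#(\"Network\":[[:space:]]*\")[^\"]+\"#\1${cidr}\"#" "$file"
    grep -q "\"Network\": *\"${cidr}\"" "$file"
}

# [root@server2 ~]# set_flannel_network ./kube-flannel.yml 172.25.0.0/16
```

Note also that 172.25.0.0/16 contains the hosts' own 172.25.27.0/24 addresses. A pod CIDR that does not overlap the node network, such as flannel's default 10.244.0.0/16, avoids routing conflicts. If you switch, pass the same value to kubeadm init --pod-network-cidr.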

[root@server2 ~]# kubectl --namespace kube-system apply -f ./kube-flannel.yml

serviceaccount "flannel" created

configmap "kube-flannel-cfg" created

daemonset "kube-flannel-ds" created

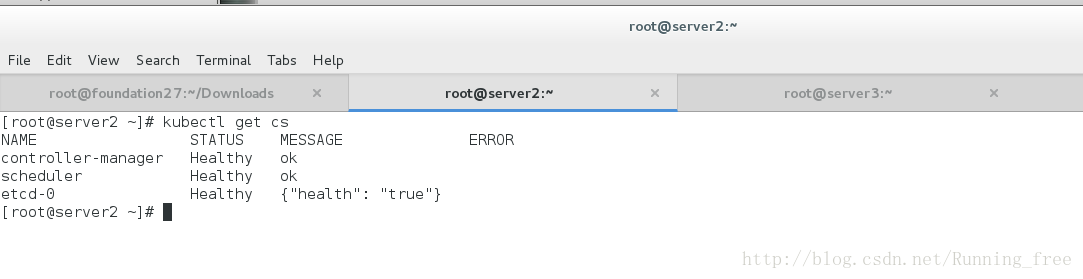

9. Verify the Master Installation

[root@server2 ~]# kubectl get cs

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-0 Healthy {"health": "true"}

- Verify that DNS works

Create busybox.yml with the following content:

[root@server2 ~]# vim busybox.yml

apiVersion: v1
kind: Pod
metadata:
  name: busybox
  namespace: default
spec:
  containers:
  - image: kube-registry:5000/busybox  # the author's private registry; substitute an image your nodes can pull, e.g. busybox
    command:
      - sleep
      - "3600"
    imagePullPolicy: IfNotPresent
    name: busybox
  restartPolicy: Always

- Create the pod with kubectl create -f busybox.yml, then verify that DNS works with kubectl exec -ti busybox -- nslookup kubernetes.default.

[root@server2 ~]# kubectl create -f busybox.yml

pod "busybox" created
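kubectl exec fails while the pod is still pulling its image, so it can help to wait until the pod is Running before running nslookup. Below is a minimal polling sketch. `wait_for` is a hypothetical helper that retries any command once per second up to a timeout. `pod_running` is a hypothetical check built on kubectl get pod with a jsonpath query for the pod phase.

```shell
#!/bin/bash
# Retry a command once per second until it succeeds or the timeout expires.
#   $1 - timeout in seconds; remaining arguments - the command to run
wait_for() {
    local timeout=$1 i=0
    shift
    while [ "$i" -lt "$timeout" ]; do
        "$@" && return 0
        sleep 1
        i=$((i + 1))
    done
    return 1
}

# Succeeds once the named pod reports phase Running.
#   $1 - pod name
pod_running() {
    [ "$(kubectl get pod "$1" -o jsonpath='{.status.phase}' 2>/dev/null)" = Running ]
}

# [root@server2 ~]# wait_for 120 pod_running busybox && kubectl exec busybox -- nslookup kubernetes
```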

[root@server2 ~]# kubectl exec busybox -- nslookup kubernetes

Server: 10.96.0.10

Address 1: 10.96.0.10 kube-dns.kube-system.svc.cluster.local

Name: kubernetes

Address 1: 10.96.0.1 kubernetes.default.svc.cluster.local

10. Install the Nodes and Join Them to the Cluster

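Each node must go through installation steps 1 to 3. The kubelet settings from step 4 (pause image and cgroup driver) apply to the nodes as well. After installation, run the following commands to join each node to the cluster: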

[root@server3 ~]# yum install -y docker-engine-1.12.6-1.el7.centos.x86_64 docker-engine-selinux-1.12.6-1.el7.centos.noarch kubernetes-cni-0.5.1-0.x86_64 kubelet-1.7.2-0.x86_64 kubectl-1.7.2-0.x86_64 kubeadm-1.7.2-0.x86_64

[root@server3 ~]# export KUBE_REPO_PREFIX="registry.cn-hangzhou.aliyuncs.com/szss_k8s"

[root@server3 ~]# export KUBE_ETCD_IMAGE="registry.cn-hangzhou.aliyuncs.com/szss_k8s/etcd-amd64:3.0.17"

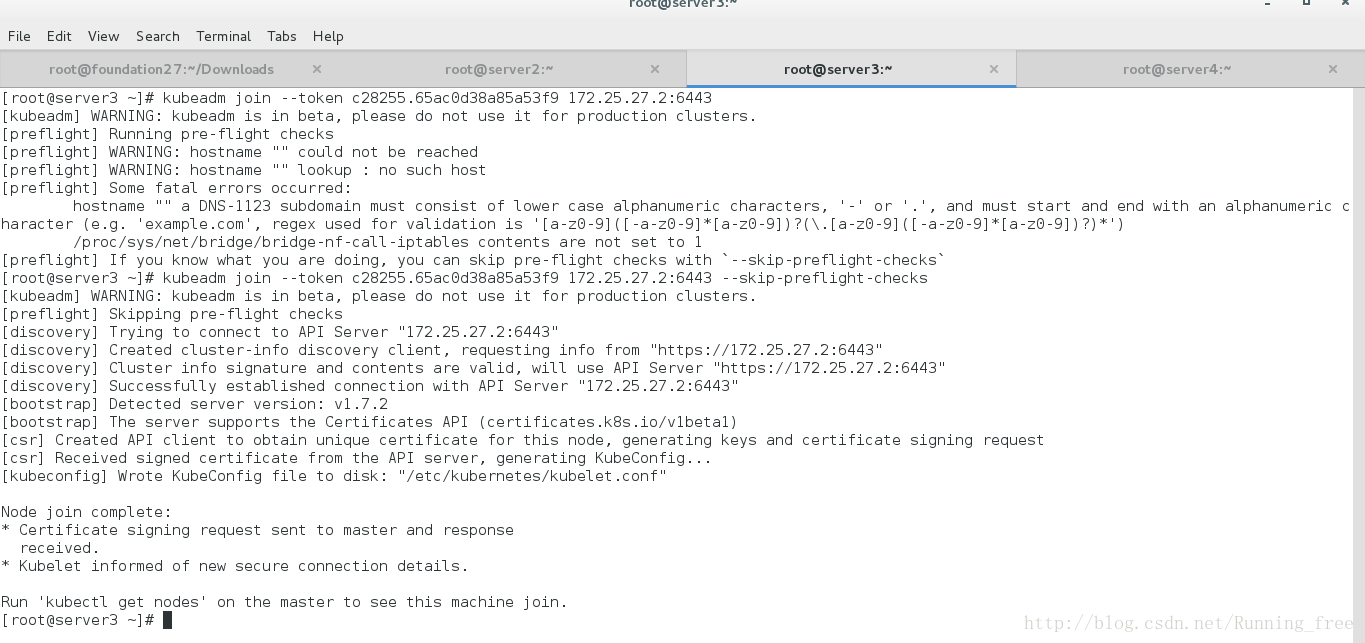

[root@server3 ~]# kubeadm join --token c28255.65ac0d38a85a53f9 172.25.27.2:6443 ## the last line of the master's init output

- That produced a pile of errors, so let's fix them one at a time:

CGROUPS_FREEZER: enabled

CGROUPS_MEMORY: enabled

[preflight] WARNING: hostname "" could not be reached

[preflight] WARNING: hostname "" lookup : no such host

[preflight] WARNING: kubelet service is not enabled, please run 'systemctl enable kubelet.service'

[preflight] WARNING: docker service is not enabled, please run 'systemctl enable docker.service'

[preflight] Some fatal errors occurred:

failed to get docker info: Cannot connect to the Docker daemon. Is the docker daemon running on this host?

hostname "" a DNS-1123 subdomain must consist of lower case alphanumeric characters, '-' or '.', and must start and end with an alphanumeric character (e.g. 'example.com', regex used for validation is '[a-z0-9]([-a-z0-9]*[a-z0-9])?(\.[a-z0-9]([-a-z0-9]*[a-z0-9])?)*')

docker service is not active, please run 'systemctl start docker.service'

/proc/sys/net/bridge/bridge-nf-call-iptables contents are not set to 1

[preflight] If you know what you are doing, you can skip pre-flight checks with `--skip-preflight-checks`

First, follow the hints:

[root@server3 ~]# systemctl enable kubelet.service

[root@server3 ~]# systemctl enable docker.service

[root@server3 ~]# systemctl start docker.service

[root@server3 ~]# kubeadm reset

[root@server3 ~]# kubeadm join --token c28255.65ac0d38a85a53f9 172.25.27.2:6443

[kubeadm] WARNING: kubeadm is in beta, please do not use it for production clusters.

[preflight] Running pre-flight checks

[preflight] WARNING: hostname "" could not be reached

[preflight] WARNING: hostname "" lookup : no such host

[preflight] Some fatal errors occurred:

hostname "" a DNS-1123 subdomain must consist of lower case alphanumeric characters, '-' or '.', and must start and end with an alphanumeric character (e.g. 'example.com', regex used for validation is '[a-z0-9]([-a-z0-9]*[a-z0-9])?(\.[a-z0-9]([-a-z0-9]*[a-z0-9])?)*')

/proc/sys/net/bridge/bridge-nf-call-iptables contents are not set to 1

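[preflight] If you know what you are doing, you can skip pre-flight checks with `--skip-preflight-checks`

- The pre-flight check still reports fatal errors. The empty-hostname error appears to be a kubeadm 1.7 bug, and the pre-flight checks can be skipped with --skip-preflight-checks. Skipping them also hides the remaining bridge-nf-call-iptables error. Set that value on the node first with echo 1 > /proc/sys/net/bridge/bridge-nf-call-iptables, as was done on the master.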
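Because --skip-preflight-checks disables every check, a quick manual check of the one real failure is worthwhile before joining. Below is a minimal sketch. `check_bridge_nf` is a hypothetical helper that takes the /proc path as a parameter, so it can be tried against an ordinary file.

```shell
#!/bin/bash
# Verify that bridged traffic is passed to iptables, as kubeadm's pre-flight check expects.
#   $1 - path to the sysctl file (default /proc/sys/net/bridge/bridge-nf-call-iptables)
# Returns 0 if the value is 1, 1 if it is something else, 2 if the file is missing.
check_bridge_nf() {
    local path=${1:-/proc/sys/net/bridge/bridge-nf-call-iptables}
    if [ ! -r "$path" ]; then
        echo "missing $path" >&2
        return 2
    fi
    if [ "$(cat "$path")" = 1 ]; then
        echo "ok"
        return 0
    fi
    echo "not set to 1: run 'echo 1 > $path' as root" >&2
    return 1
}

# [root@server3 ~]# check_bridge_nf && kubeadm join --token c28255.65ac0d38a85a53f9 172.25.27.2:6443 --skip-preflight-checks
```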

[root@server3 ~]# kubeadm join --token c28255.65ac0d38a85a53f9 172.25.27.2:6443 --skip-preflight-checks

[kubeadm] WARNING: kubeadm is in beta, please do not use it for production clusters.

[preflight] Skipping pre-flight checks

[discovery] Trying to connect to API Server "172.25.27.2:6443"

[discovery] Created cluster-info discovery client, requesting info from "https://172.25.27.2:6443"

[discovery] Cluster info signature and contents are valid, will use API Server "https://172.25.27.2:6443"

[discovery] Successfully established connection with API Server "172.25.27.2:6443"

[bootstrap] Detected server version: v1.7.2

[bootstrap] The server supports the Certificates API (certificates.k8s.io/v1beta1)

[csr] Created API client to obtain unique certificate for this node, generating keys and certificate signing request

[csr] Received signed certificate from the API server, generating KubeConfig...

[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/kubelet.conf"

Node join complete:

* Certificate signing request sent to master and response

received.

* Kubelet informed of new secure connection details.

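Run 'kubectl get nodes' on the master to see this machine join.

- Note: kubeadm and kubelet must be installed on the node, and kubelet must be running. Otherwise join reports success, but the node does not actually join.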

11. Verify the Node Installation

- Use kubectl get nodes to check that the nodes joined the cluster successfully

[root@server2 ~]# kubectl get nodes

NAME STATUS AGE VERSION

server2 Ready 15m v1.7.2

server3 Ready 2m v1.7.2

server4 Ready 1m v1.7.2

12. Test That the Cluster Works

[root@server2 ~]# vim rc-nginx.yaml

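apiVersion: v1
kind: ReplicationController
metadata:
  name: rc-nginx-2
spec:
  replicas: 2
  template:
    metadata:
      labels:
        app: nginx-2
    spec:
      containers:
      - name: nginx-2
        image: xingwangc.docker.rg/nginx  # private registry from the original; likely the cause of the ErrImagePull below. Substitute an image your nodes can pull, e.g. nginx
        ports:
        - containerPort: 80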

[root@server2 ~]# kubectl create -f rc-nginx.yaml

replicationcontroller "rc-nginx-2" created

[root@server2 ~]# kubectl get rc

NAME DESIRED CURRENT READY AGE

rc-nginx-2 2 2 0 12s

[root@server2 ~]# kubectl create -f https://raw.githubusercontent.com/kubernetes/kubernetes.github.io/master/d

[root@server2 ~]# kubectl get po -o wide

NAME READY STATUS RESTARTS AGE IP NODE

busybox 1/1 Running 0 41m 172.25.0.4 server2

rc-nginx-2-1crh0 0/1 ContainerCreating 0 1m <none> server2

rc-nginx-2-89pdr 0/1 ErrImagePull 0 1m 172.25.0.5 server2

kubectl get po -o wide shows the two Nginx instances deployed on two different nodes:

[root@server2 ~]# kubectl get po -o wide

NAME READY STATUS RESTARTS AGE IP NODE

nginx-deployment-431080787-2z167 1/1 Running 0 3m 10.244.0.15 bjo-ep-dep-039.dev.fwmrm.net

nginx-deployment-431080787-55fl8 1/1 Running 0 3m 10.16.103.5 bjo-ep-svc-017.dev.fwmrm.net
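With more replicas, counting pod placement is easier than reading the table. Below is a minimal sketch that summarizes kubectl get po -o wide output per node. It assumes NODE is the last column, as in the 1.7 output above. `pods_per_node` is a hypothetical helper that reads the table from stdin and optionally filters pods by name prefix.

```shell
#!/bin/bash
# Count pods per node from `kubectl get po -o wide` output on stdin.
#   $1 - optional pod-name prefix filter (e.g. rc-nginx-2); empty means all pods
pods_per_node() {
    local prefix=${1:-}
    # Skip the header row; NODE is the last field even when IP is <none>
    awk -v p="$prefix" 'NR > 1 && NF > 0 && (p == "" || index($1, p) == 1) { n[$NF]++ }
        END { for (node in n) print node, n[node] }' | sort
}

# [root@server2 ~]# kubectl get po -o wide | pods_per_node rc-nginx-2
```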
