Hue with ZooKeeper and HDFS: fixing the "Failed to access filesystem root" error

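Whenever I ran Hue and opened the WebHDFS file browser, it reported: Failed to access filesystem root

The cluster has three servers (namenode, node1, node2). I ran the Docker build of Hue, version 3.9, on namenode, the least loaded of the three.

ssh namenode `log in to the namenode server`

docker exec -ti hue_test bash `enter the running Hue container`

cat /etc/debian_version `it turns out the Hue image is based on Debian sid`

Back on namenode, outside the container, running hadoop fs -ls / returns the HDFS root listing.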

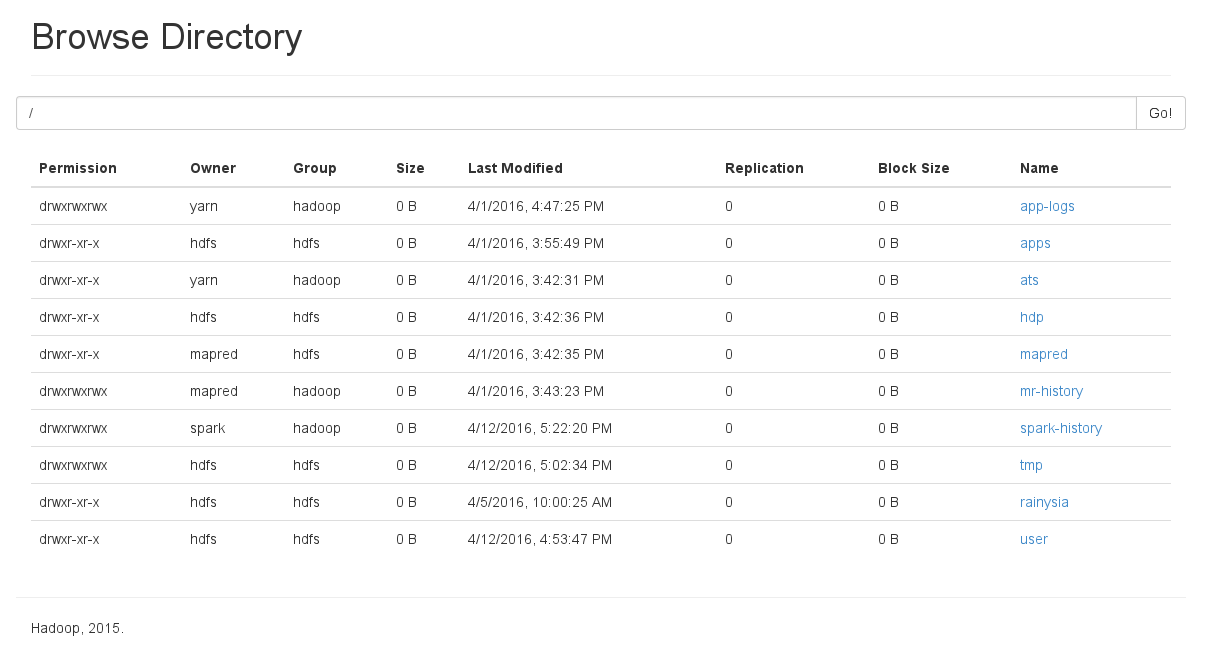

The HDFS web UI at

http://namenode.domain.org:50070/explorer.html#/

also shows the contents normally.

Here is Hue's configuration file, pseudo-distributed.ini. Only the key settings are shown, the ones needed to enable ZooKeeper and WebHDFS.

[desktop]

secret_key=NGM2NWI5NWQ5YzA4NWNhMzRiYjMwYWFl

# Webserver runs as this user

server_user=hue

server_group=hue

# This should be the hadoop cluster admin

default_hdfs_superuser=hdfs

default_site_encoding=utf-8

[hadoop]

[[hdfs_clusters]]

[[[default]]]

fs_defaultfs=hdfs://namenode.domain.org:8020, \

hdfs://node1.domain.org:8020, \

hdfs://node2.domain.org:8020

webhdfs_url=http://namenode.domain.org:50070/webhdfs/v1

[hbase]

hbase_clusters=(Cluster|node2.domain.org:9090)

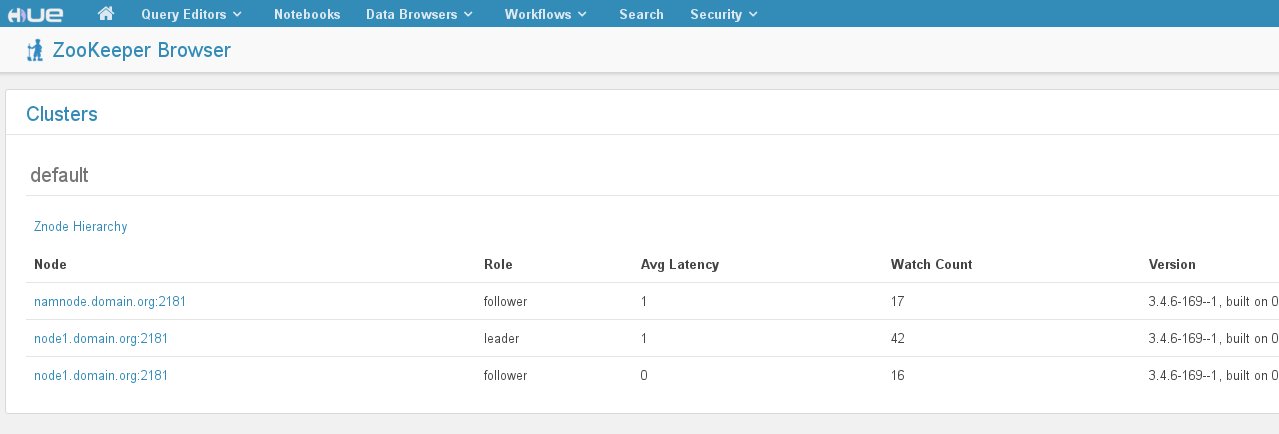

[zookeeper]

[[clusters]]

[[[default]]]

# Zookeeper ensemble. Comma separated list of Host/Port.

# e.g. localhost:2181,localhost:2182,localhost:2183

host_ports=namenode.domain.org:2181,node1.domain.org:2181,node2.domain.org:2181

[libzookeeper]

# ZooKeeper ensemble. Comma separated list of Host/Port.

# e.g. localhost:2181,localhost:2182,localhost:2183

ensemble=namenode.domain.org:2181,node1.domain.org:2181,node2.domain.org:2181

On the namenode, node1, and node2 servers, I did the following:

adduser --system --shell /bin/bash hue

usermod -a -G hadoop,hdfs hue

This adds a hue user and puts it in the hadoop and hdfs groups.

I then edited the Hadoop configuration file core-site.xml and added hadoop.proxyuser.hue.groups = * and hadoop.proxyuser.hue.hosts = *.
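To confirm that both proxy-user properties actually landed in core-site.xml on every node, a small check along these lines may help. It is only a sketch: the function names and the SAMPLE document are hypothetical, and only the two property names come from the step above.

```python
import xml.etree.ElementTree as ET

# The two properties named in the text; everything else here is illustrative.
REQUIRED = ("hadoop.proxyuser.hue.hosts", "hadoop.proxyuser.hue.groups")

# Hypothetical minimal core-site.xml used for demonstration only.
SAMPLE = """<configuration>
  <property><name>hadoop.proxyuser.hue.hosts</name><value>*</value></property>
  <property><name>hadoop.proxyuser.hue.groups</name><value>*</value></property>
</configuration>"""


def read_properties(xml_text):
    """Return a {name: value} dict for every <property> in a Hadoop XML config."""
    root = ET.fromstring(xml_text)
    props = {}
    for prop in root.iter("property"):
        name = prop.findtext("name")
        if name:
            props[name.strip()] = (prop.findtext("value") or "").strip()
    return props


def missing_proxyuser_settings(xml_text):
    """List the required hue proxy-user properties that are absent."""
    props = read_properties(xml_text)
    return [key for key in REQUIRED if key not in props]
```

In practice the text of the real file would be passed in, for example the contents of core-site.xml under /etc/hadoop; the exact path depends on the distribution.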

Inside the Hue container, the log showed the following errors:

root@890a6b506316:/hue/logs# cat error.log | grep -n3 localhost

63-Traceback (most recent call last):

64- File "/hue/apps/impala/src/impala/conf.py", line 186, in config_validator

65- raise ex

66:StructuredThriftTransportException: Could not connect to localhost:21050 (code THRIFTTRANSPORT): TTransportException('Could not connect to localhost:21050',)

67-[12/Apr/2016 01:53:56 -0700] conf ERROR failed to get oozie status

68-Traceback (most recent call last):

69- File "/hue/desktop/libs/liboozie/src/liboozie/conf.py", line 61, in get_oozie_status

--

168-Traceback (most recent call last):

169- File "/hue/apps/impala/src/impala/conf.py", line 186, in config_validator

170- raise ex

171:StructuredThriftTransportException: Could not connect to localhost:21050 (code THRIFTTRANSPORT): TTransportException('Could not connect to localhost:21050',)

172-[12/Apr/2016 02:00:43 -0700] conf ERROR failed to get oozie status

173-Traceback (most recent call last):

174- File "/hue/desktop/libs/liboozie/src/liboozie/conf.py", line 61, in get_oozie_status

--

273-Traceback (most recent call last):

274- File "/hue/apps/impala/src/impala/conf.py", line 186, in config_validator

275- raise ex

276:StructuredThriftTransportException: Could not connect to localhost:21050 (code THRIFTTRANSPORT): TTransportException('Could not connect to localhost:21050',)

277-[12/Apr/2016 02:02:35 -0700] conf ERROR failed to get oozie status

278-Traceback (most recent call last):

279- File "/hue/desktop/libs/liboozie/src/liboozie/conf.py", line 61, in get_oozie_status

The exception being raised is Could not connect to localhost:port.

Strangely, I had configured namenode.domain.org as the remote address, so why was anything going to localhost?
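To see which endpoints the log is really complaining about, the connection failures can be tallied by host and port. The sketch below is a rough aid, not part of Hue; the function name and regular expression are my own, and SAMPLE reuses two lines from the grep output. In that excerpt every failure targets localhost:21050, and the traceback path shows it is raised from the Impala app's config validator.

```python
import re
from collections import Counter

# Matches the first "Could not connect to host:port" on a log line.
TARGET = re.compile(r"Could not connect to ([\w.\-]+):(\d+)")

# Two lines copied from the error.log excerpt, for demonstration.
SAMPLE = (
    "66:StructuredThriftTransportException: Could not connect to localhost:21050 "
    "(code THRIFTTRANSPORT): TTransportException('Could not connect to localhost:21050',)\n"
    "67-[12/Apr/2016 01:53:56 -0700] conf ERROR failed to get oozie status\n"
    "171:StructuredThriftTransportException: Could not connect to localhost:21050 "
    "(code THRIFTTRANSPORT): TTransportException('Could not connect to localhost:21050',)\n"
)


def failed_targets(log_text):
    """Count log lines per (host, port) that report a failed connection."""
    counts = Counter()
    for line in log_text.splitlines():
        match = TARGET.search(line)
        if match:
            counts[(match.group(1), int(match.group(2)))] += 1
    return counts
```

Each log line is counted once even though the message repeats the target inside the nested exception text.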

I picked an arbitrary machine in the cluster and ran:

[root@node2 ~]# curl -i http://namenode.domain.org:50070/webhdfs/v1/?op=LISTSTATUS

HTTP/1.1 200 OK

Cache-Control: no-cache

Expires: Tue, 12 Apr 2016 09:45:20 GMT

Date: Tue, 12 Apr 2016 09:45:20 GMT

Pragma: no-cache

Expires: Tue, 12 Apr 2016 09:45:20 GMT

Date: Tue, 12 Apr 2016 09:45:20 GMT

Pragma: no-cache

Content-Type: application/json

Transfer-Encoding: chunked

Server: Jetty(6.1.26.hwx)

{"FileStatuses":{"FileStatus":[

{"accessTime":0,"blockSize":0,"childrenNum":1,"fileId":16392,"group":"hadoop","length":0,"modificationTime":1459500445841,"owner":"yarn","pathSuffix":"app-logs","permission":"777","replication":0,"storagePolicy":0,"type":"DIRECTORY"},

{"accessTime":0,"blockSize":0,"childrenNum":4,"fileId":16452,"group":"hdfs","length":0,"modificationTime":1459497349580,"owner":"hdfs","pathSuffix":"apps","permission":"755","replication":0,"storagePolicy":0,"type":"DIRECTORY"},

{"accessTime":0,"blockSize":0,"childrenNum":2,"fileId":16389,"group":"hadoop","length":0,"modificationTime":1459496551426,"owner":"yarn","pathSuffix":"ats","permission":"755","replication":0,"storagePolicy":0,"type":"DIRECTORY"},

{"accessTime":0,"blockSize":0,"childrenNum":1,"fileId":16399,"group":"hdfs","length":0,"modificationTime":1459496556023,"owner":"hdfs","pathSuffix":"hdp","permission":"755","replication":0,"storagePolicy":0,"type":"DIRECTORY"},

{"accessTime":0,"blockSize":0,"childrenNum":1,"fileId":16395,"group":"hdfs","length":0,"modificationTime":1459496555129,"owner":"mapred","pathSuffix":"mapred","permission":"755","replication":0,"storagePolicy":0,"type":"DIRECTORY"},

{"accessTime":0,"blockSize":0,"childrenNum":2,"fileId":16397,"group":"hadoop","length":0,"modificationTime":1459496603705,"owner":"mapred","pathSuffix":"mr-history","permission":"777","replication":0,"storagePolicy":0,"type":"DIRECTORY"},

{"accessTime":0,"blockSize":0,"childrenNum":1,"fileId":16409,"group":"hadoop","length":0,"modificationTime":1460454315757,"owner":"spark","pathSuffix":"spark-history","permission":"777","replication":0,"storagePolicy":0,"type":"DIRECTORY"},

{"accessTime":0,"blockSize":0,"childrenNum":3,"fileId":16386,"group":"hdfs","length":0,"modificationTime":1460451754986,"owner":"hdfs","pathSuffix":"tmp","permission":"777","replication":0,"storagePolicy":0,"type":"DIRECTORY"},

{"accessTime":0,"blockSize":0,"childrenNum":2,"fileId":17410,"group":"hdfs","length":0,"modificationTime":1459821625524,"owner":"hdfs","pathSuffix":"rainysia","permission":"755","replication":0,"storagePolicy":0,"type":"DIRECTORY"},

{"accessTime":0,"blockSize":0,"childrenNum":9,"fileId":16387,"group":"hdfs","length":0,"modificationTime":1460451227484,"owner":"hdfs","pathSuffix":"user","permission":"755","replication":0,"storagePolicy":0,"type":"DIRECTORY"}

]}}

The data comes back fine.
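The LISTSTATUS request and its JSON response can also be handled programmatically. This is a minimal sketch: the helper names are hypothetical, SAMPLE_BODY is a shortened copy of the response above, and the optional user.name query parameter is included on the assumption that the cluster uses simple (non-Kerberos) authentication.

```python
import json
from urllib.parse import urlencode


def liststatus_url(webhdfs_url, path="/", user=None):
    """Build a WebHDFS LISTSTATUS URL from the configured webhdfs_url."""
    params = {"op": "LISTSTATUS"}
    if user:
        params["user.name"] = user  # only meaningful with simple auth
    return f"{webhdfs_url.rstrip('/')}{path}?{urlencode(params)}"


def entry_names(body):
    """Extract (name, type, owner) for each entry in a LISTSTATUS response."""
    statuses = json.loads(body)["FileStatuses"]["FileStatus"]
    return [(s["pathSuffix"], s["type"], s["owner"]) for s in statuses]


# Shortened copy of the response shown above, for demonstration.
SAMPLE_BODY = """{"FileStatuses":{"FileStatus":[
{"group":"hdfs","owner":"hdfs","pathSuffix":"tmp","permission":"777","type":"DIRECTORY"},
{"group":"hdfs","owner":"hdfs","pathSuffix":"user","permission":"755","type":"DIRECTORY"}
]}}"""
```

The URL produced for the default path is the same one passed to curl above; fetching it, for instance with urllib.request.urlopen, is left out so the sketch stays runnable without a cluster.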

Inside the Docker container, the same command failed because curl was not installed.

apt-get install curl -y takes care of that.

Running the command again gave:

root@namenode1:/hue# curl: (7) Failed to connect to namenode1.domain.org port 50070: Connection refused

So the hostname was the culprit.
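The same reachability test can be sketched with Python's standard library, which should already be present in the container since Hue is a Python application, so nothing needs to be installed. The function name is hypothetical; it only reports whether a TCP connection can be opened, which is the part that failed for curl here.

```python
import socket


def can_connect(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers connection refused, timeouts, and name resolution failures.
        return False
```

For example, can_connect("namenode.domain.org", 50070) run inside the container and again on the Docker host would show whether the two see the NameNode port differently.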

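root@namenode:/hue# hostname

namenode

root@namenode:/hue# cat /etc/hostname

namenode.domain.org

No wonder: the container itself answers to the name namenode.domain.org, so a connection to that name from inside the container goes to the container's own ports, not to the real NameNode.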
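This kind of collision can be detected up front by comparing the host in webhdfs_url with the names the container claims for itself. The sketch below assumes a Linux container with an /etc/hostname file; both function names are hypothetical.

```python
import socket
from pathlib import Path
from urllib.parse import urlsplit


def local_names(etc_hostname="/etc/hostname"):
    """Collect the names this machine (or container) claims for itself."""
    names = {socket.gethostname()}
    path = Path(etc_hostname)
    if path.is_file():
        names.add(path.read_text().strip())
    return {n.lower() for n in names if n}


def is_self_reference(url, names):
    """True if the URL's host is one of the given local names."""
    host = urlsplit(url).hostname
    return host is not None and host.lower() in {n.lower() for n in names}
```

With the values seen above, is_self_reference("http://namenode.domain.org:50070/webhdfs/v1", {"namenode", "namenode.domain.org"}) is true, which is the warning sign: Hue's requests to the NameNode are aimed at the container itself.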

Here is the command the container was started with:

docker run -tid --name hue8888 --hostname namenode.domain.org \

-p 8888:8888 -v /usr/hdp:/usr/hdp -v /etc/hadoop:/etc/hadoop \

-v /etc/hive:/etc/hive -v /etc/hbase:/etc/hbase \

-v /docker-config/pseudo-distributed.ini:/hue/desktop/conf/pseudo-distributed.ini \

c-docker.domain.org:5000/hue:latest \

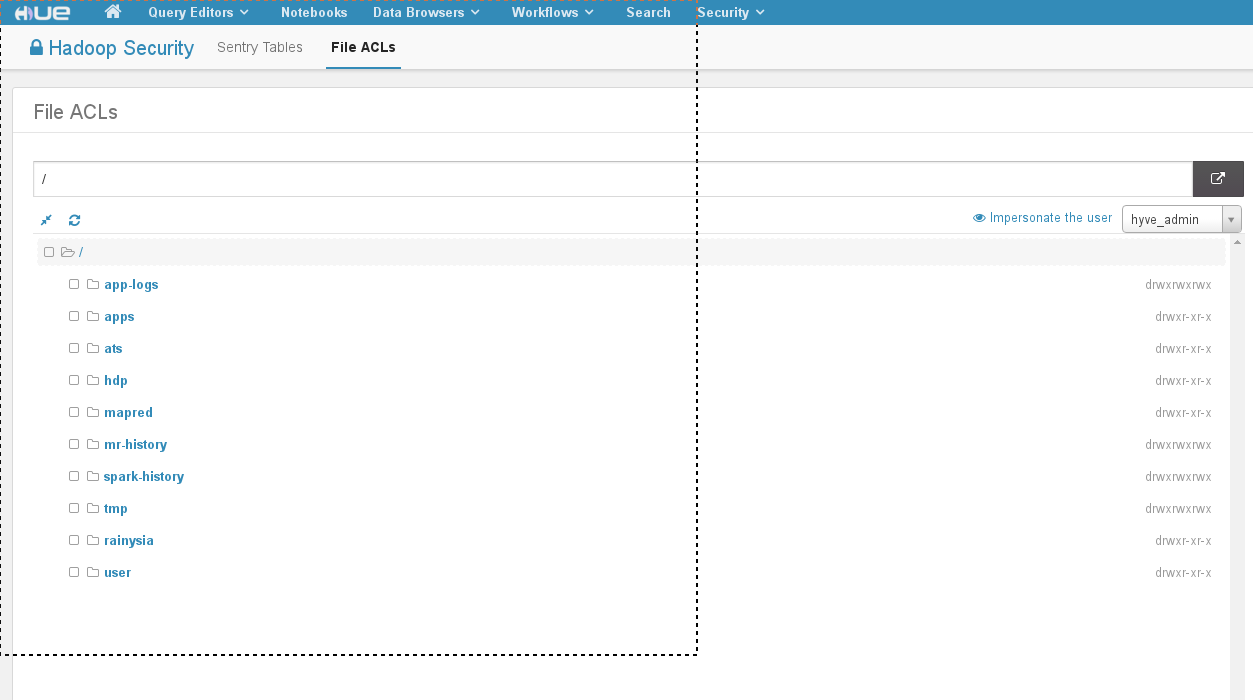

./build/env/bin/hue runserver_plus 0.0.0.0:8888原来当时指定了hostname, 干掉–hostname, hue成功启动了webhdfs和zookeeper的node browser
