[Reproduction] Reproducing the IELT classification program on the CUB_200_2011 dataset


A few useful websites

Resources for fine-grained image analysis: Awesome Fine-Grained Image Analysis – Papers, Codes and Datasets (weixiushen.com)

Leaderboards for every area of deep learning: Browse the State-of-the-Art in Machine Learning | Papers With Code

Leaderboard on CUB-200-2011:

CUB-200-2011 Benchmark (Fine-Grained Image Classification) | Papers With Code

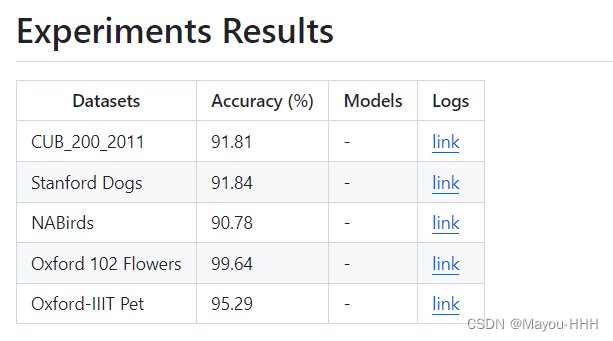

IELT's accuracy is roughly on par with the top entries and the paper is recent, so I chose this network.

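Project information

Article: Fine-Grained Visual Classification via Internal Ensemble Learning Transformer

Published in: IEEE Transactions on Multimedia (Early Access)

Note: What is an Early Access paper? To spread and exchange research results faster and more widely, some papers are published online before they formally appear in print. These papers are already indexed by databases, and apart from not yet having a volume, issue, and page numbers, all their other information (content, DOI, etc.) is final, so they can be searched, downloaded, read, and cited. Such papers are called "Early Access papers".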

-------------------------------------------------------------------------------------------------------------------------------

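My reproduction

Because the code uses os.path.join heavily, it runs into forward-slash/backslash path problems, and training on Windows 10 failed in many places. I simply switched to another platform, where everything worked and the code ran without trouble. In particular, there were no version conflicts, which is probably a benefit of the code being recent.

What I have not found yet: test code, a way to run evaluation on its own, or a visualization method, so I have not been able to apply it to my own dataset.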
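A minimal illustration of the kind of separator mixing meant here, assuming a data root written with forward slashes such as `./data/` (this is not a trace of the exact failing call inside IELT). The standard-library `ntpath` and `posixpath` modules apply Windows and POSIX joining rules on any OS, so the difference can be reproduced anywhere:

```python
import ntpath
import posixpath


def join_both(*parts):
    """Join the same path pieces with Windows rules and with POSIX rules."""
    return ntpath.join(*parts), posixpath.join(*parts)


# Hypothetical example: a root written with '/' joined with more components.
win, posix = join_both("./data/", "CUB_200_2011", "images")
print(win)    # ./data/CUB_200_2011\images  (mixed separators on Windows)
print(posix)  # ./data/CUB_200_2011/images

# normpath rewrites the path using only the Windows separator.
print(ntpath.normpath(win))  # data\CUB_200_2011\images
```

Mixed separators are often tolerated by Windows file APIs, but any code that later splits or compares such strings by `/` can break, which is one plausible source of the errors described above.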

TBC

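Hardware environment:

Dataset: CUB_200_2011

I set download=True in IELT-main/utils/dataset.py and let the program download the dataset itself (recommended).

You can also download it yourself and place it in the appropriate directory.

The reference URL the author gives in dataset.py: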

http://www.vision.caltech.edu/visipedia-data/CUB-200-2011/CUB_200_2011.tgz
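If you prefer to fetch the archive yourself, the download-and-extract step could be sketched as below. `fetch_and_extract` is a hypothetical helper, not the code in IELT's dataset.py, and it assumes the URL above is still reachable; it skips the download when the archive is already present.

```python
import os
import tarfile
import urllib.request

# URL quoted in IELT's dataset.py (assumed to still be reachable).
CUB_URL = "http://www.vision.caltech.edu/visipedia-data/CUB-200-2011/CUB_200_2011.tgz"


def fetch_and_extract(url, root, archive_name="CUB_200_2011.tgz"):
    """Download `url` into `root` unless the archive exists, then unpack it.

    Hypothetical helper for illustration; returns the archive path.
    """
    os.makedirs(root, exist_ok=True)
    archive = os.path.join(root, archive_name)
    if not os.path.exists(archive):
        urllib.request.urlretrieve(url, archive)
    with tarfile.open(archive, "r:gz") as tar:
        if hasattr(tarfile, "data_filter"):
            # Newer Python versions can reject unsafe members while extracting.
            tar.extractall(root, filter="data")
        else:
            tar.extractall(root)
    return archive
```

Calling `fetch_and_extract(CUB_URL, "./data")` would unpack the archive under `./data`, the data_root printed in the training log; whether IELT expects exactly this subfolder is an assumption, so compare with the layout image.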

Reference directory layout:

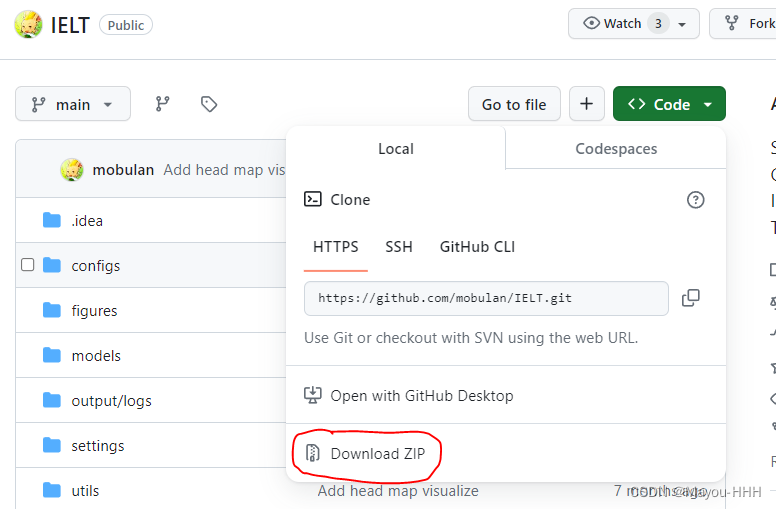
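To double-check a manual download, the sketch below assumes the standard contents of the CUB_200_2011 archive (images/, images.txt, image_class_labels.txt, train_test_split.txt, classes.txt) and counts the official train/test split. `check_cub` is a hypothetical helper, not part of IELT.

```python
import os

# Entries expected in the standard CUB_200_2011 folder (assumed layout).
REQUIRED = ["images", "images.txt", "image_class_labels.txt",
            "train_test_split.txt", "classes.txt"]


def check_cub(root):
    """Return (missing_entries, n_train, n_test) for a CUB_200_2011 folder."""
    missing = [name for name in REQUIRED
               if not os.path.exists(os.path.join(root, name))]
    n_train = n_test = 0
    split_file = os.path.join(root, "train_test_split.txt")
    if os.path.exists(split_file):
        with open(split_file) as f:
            for line in f:
                parts = line.split()  # "<image_id> <is_training_image>"
                if len(parts) != 2:
                    continue  # skip blank or malformed lines
                if parts[1] == "1":
                    n_train += 1
                else:
                    n_test += 1
    return missing, n_train, n_test
```

On a complete copy this should report no missing entries and a 5994/5794 train/test split, the same sample counts the training log prints.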

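Clone the repository locally

git clone https://github.com/mobulan/ielt

Alternatively, download the ZIP, which also works well: git clone sometimes fails, whereas downloading the ZIP has caused no problems so far.

Prepare the virtual environment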

conda create -n IELT python=3.9

conda activate IELT

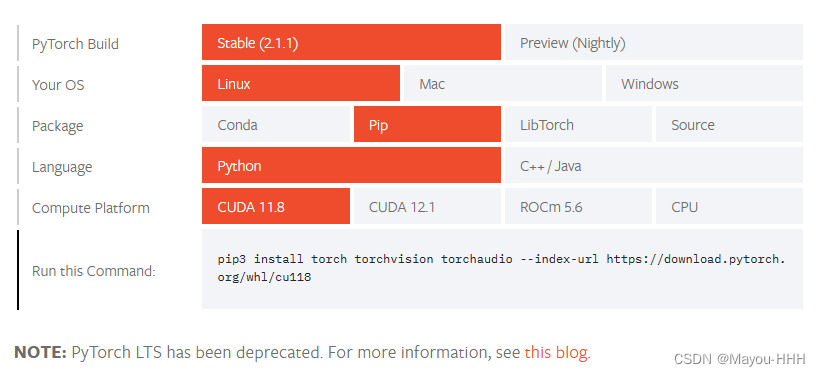
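A quick sanity check that the interpreter in use really comes from the new environment could look like this. `env_summary` is a hypothetical helper; it relies on `conda activate` setting the `CONDA_DEFAULT_ENV` environment variable to the active environment's name.

```python
import os
import sys


def env_summary(environ=None):
    """Report the running Python version and the active conda env, if any."""
    environ = os.environ if environ is None else environ
    return {
        "python": "%d.%d" % sys.version_info[:2],
        # Set by `conda activate`; None when no conda env is active.
        "conda_env": environ.get("CONDA_DEFAULT_ENV"),
    }
```

Inside the activated environment, `env_summary()` should show Python 3.9 and the env name IELT.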

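Install PyTorch directly by following the official site, which is the safest and most complete option: Start Locally | PyTorch

pip3 install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu118

Install whatever else is missing. Since the code is fairly recent (reproduced on 2023/12/8), I did not run into any version problems.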
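"Install whatever is missing" can be made concrete with a check like the one below. The package list is just the import names of what this post installs, and `missing_packages` and `cuda_report` are hypothetical helpers built on `importlib.util.find_spec` and standard `torch` attributes.

```python
import importlib.util

# Import names of the packages installed in this post.
PACKAGES = ("torch", "torchvision", "torchaudio", "timm", "tensorboard",
            "ml_collections", "scipy", "yacs", "pandas")


def missing_packages(names=PACKAGES):
    """Return the names that cannot be found in the current environment."""
    return [n for n in names if importlib.util.find_spec(n) is None]


def cuda_report():
    """Return (torch version, CUDA build version, GPU available), or None."""
    if importlib.util.find_spec("torch") is None:
        return None
    import torch
    return torch.__version__, torch.version.cuda, torch.cuda.is_available()
```

Anything listed by `missing_packages()` can then be installed with pip. For the setup in the training log, `cuda_report()` would be expected to show a 2.1.1+cu118 build, CUDA 11.8, and an available GPU.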

pip install timm tensorboard ml_collections scipy yacs pandas

Download the pretrained model

You need a Google account; if you don't have one, just get one, haha.

Reference layout:

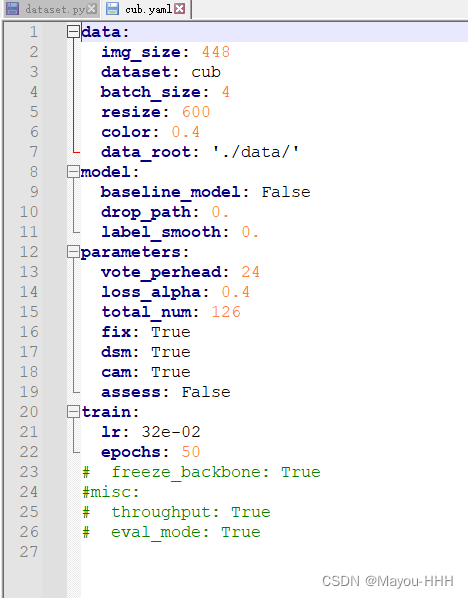
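Once the checkpoint is in place, a quick way to confirm that the file is a readable NumPy archive is sketched below. The path `pretrained/ViT-B_16.npz` is the one printed in the training log; `inspect_npz` is a hypothetical helper.

```python
import os

import numpy as np


def inspect_npz(path, limit=5):
    """Open a .npz checkpoint and return (number of arrays, first few keys)."""
    if not os.path.isfile(path):
        raise FileNotFoundError(path)
    with np.load(path) as ckpt:  # NpzFile supports the context manager protocol
        keys = sorted(ckpt.files)
    return len(keys), keys[:limit]
```

For example, `inspect_npz(os.path.join("pretrained", "ViT-B_16.npz"))` should raise FileNotFoundError if the file is in the wrong place, and otherwise list a few parameter names.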

Modify the configuration files

cub.yaml

Reference:

setup.py in the project root

The main things to choose here are cfg_file and the GPU:

```python
import os
import time

from settings.defaults import _C
from settings.setup_functions import *

config = _C.clone()
cfg_file = os.path.join('configs', 'cub.yaml')
# cfg_file = os.path.join('..', 'configs', 'cub.yaml')
config = SetupConfig(config, cfg_file)
config.defrost()

## Log Name and Preferences
config.write = True  # comment it to disable all the log writing
config.train.checkpoint = True  # comment it to disable saving the checkpoint
config.misc.exp_name = f'{config.data.dataset}'
config.misc.log_name = f'IELT'  # the log name can be changed here
config.cuda_visible = '0'  # choose which GPU(s) to use here

# Environment Settings
config.data.log_path = os.path.join(config.misc.output, config.misc.exp_name, config.misc.log_name
                                    + time.strftime(' %m-%d_%H-%M', time.localtime()))
config.model.pretrained = os.path.join(config.model.pretrained,
                                       config.model.name + config.model.pre_version + config.model.pre_suffix)
os.environ['CUDA_VISIBLE_DEVICES'] = config.cuda_visible
os.environ['OMP_NUM_THREADS'] = '1'

# Setup Functions
config.nprocess, config.local_rank = SetupDevice()
config.data.data_root, config.data.batch_size = LocateDatasets(config)
config.train.lr = ScaleLr(config)
log = SetupLogs(config, config.local_rank)
if config.write and config.local_rank in [-1, 0]:
    with open(config.data.log_path + '/config.json', "w") as f:
        f.write(config.dump())
config.freeze()
SetSeed(config)
```

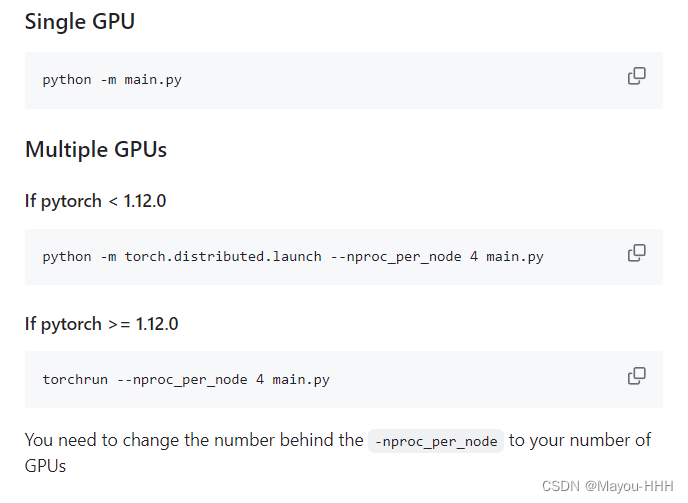
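The two paths that setup.py builds can be reproduced offline. This sketch plugs in the values printed in the training log and a fixed timestamp instead of the current time; it uses `posixpath` so the result is the same on every OS, whereas setup.py calls `os.path.join`. That `config.model.pretrained` starts out as `pretrained` is an assumption inferred from the log.

```python
import posixpath
import time

# Values as printed in the training log of this post.
output, exp_name, log_name = "./output", "cub", "IELT"
pretrained_dir, name, pre_version, pre_suffix = "pretrained", "ViT-B_16", "", ".npz"

# Fixed timestamp (2023-12-07 23:43) in place of time.localtime().
stamp = time.struct_time((2023, 12, 7, 23, 43, 0, 3, 341, -1))

log_path = posixpath.join(output, exp_name,
                          log_name + time.strftime(' %m-%d_%H-%M', stamp))
pretrained = posixpath.join(pretrained_dir, name + pre_version + pre_suffix)

print(log_path)    # ./output/cub/IELT 12-07_23-43
print(pretrained)  # pretrained/ViT-B_16.npz
```

Note the leading space in the strftime format: it is why the log folder name contains a space, which is worth keeping in mind when scripting around the output directory.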

Start training

The official command has a small problem; I changed it to:

python main.py

or

python -m main

Output

Log:

```
================================================================================
Fine-Grained Visual Classification via Internal Ensemble Learning Transformer
Pytorch Implementation
================================================================================
Author: Xu Qin, Wang Jiahui, Jiang Bo, Luo Bin
Institute: Anhui University Date: 2023-02-13
--------------------------------------------------------------------------------
Python Version: 3.9.18 Pytorch Version: 2.1.1+cu118 Cuda Version: 11.8
--------------------------------------------------------------------------------
============================ Data Settings ============================
dataset cub batch_size 4
- - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - -
data_root ./data/ img_size 448
- - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - -
resize 600 padding 0
- - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - -
no_crop 0 autoaug 0
- - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - -
blur 0.0 color 0.4
- - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - -
saturation 0.0 hue 0.0
- - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - -
mixup 0.0 cutmix 0.0
- - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - -
rotate 0.0 log_path ./output/cub/IELT 12-07_23-43
============================ Hyper Parameters ============================
vote_perhead 24 update_warm 10 loss_alpha 0.4
- - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - -
total_num 126 fix 1 dsm 1
- - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - -
cam 1 assess 0
============================ Training Settings ============================
start_epoch 0 epochs 50 warmup_epochs 0
- - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - -
weight_decay 0 clip_grad None checkpoint 1
- - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - -
lr 0.0025 scheduler cosine lr_epoch_update0
- - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - -
optimizer SGD freeze_backbone0 eps 1e-08
- - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - -
betas 0.9,0.999 momentum 0.9
============================ Other Settings ============================
amp 1 output ./output exp_name cub
- - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - -
log_name IELT eval_every 1 seed 42
- - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - -
eval_mode 0 throughput 0 fused_window 1
============================ Model Structure ============================
type ViT name ViT-B_16 baseline_model0
- - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - -
pretrained pretrained/ViT-B_16.npz pre_version pre_suffix .npz
- - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - -
resume num_classes 200 drop_path 0.0
- - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - -
dropout 0.0 label_smooth 0.0 parameters 93.493M
============================ Training Information ============================
Train samples 5994 Test samples 5794
- - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - -
Total Batch Size 4 Load Time 3s
- - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - -
Train Steps 74900 Warm Epochs 0
============================ Start Training ============================
Epoch 1 /50 : Accuracy 66.051 BA 66.051 BE 1 Loss 1.1079 TA 45.91
Epoch 2 /50 : Accuracy 69.814 BA 69.814 BE 2 Loss 0.9985 TA 62.67
Epoch 3 /50 : Accuracy 71.246 BA 71.246 BE 3 Loss 1.0025 TA 71.04
Epoch 4 /50 : Accuracy 72.006 BA 72.006 BE 4 Loss 1.0287 TA 78.81
Epoch 5 /50 : Accuracy 72.523 BA 72.523 BE 5 Loss 1.0503 TA 82.93
Epoch 6 /50 : Accuracy 75.768 BA 75.768 BE 6 Loss 0.9947 TA 87.40
Epoch 7 /50 : Accuracy 74.836 BA 75.768 BE 6 Loss 1.0967 TA 88.85
Epoch 8 /50 : Accuracy 78.961 BA 78.961 BE 8 Loss 0.9542 TA 91.51
Epoch 9 /50 : Accuracy 81.101 BA 81.101 BE 9 Loss 0.8824 TA 92.99
Epoch 10 /50 : Accuracy 80.290 BA 81.101 BE 9 Loss 0.9127 TA 95.11
Epoch 11 /50 : Accuracy 79.703 BA 81.101 BE 9 Loss 0.9738 TA 94.04
Epoch 12 /50 : Accuracy 78.840 BA 81.101 BE 9 Loss 1.0418 TA 95.51
Epoch 13 /50 : Accuracy 82.171 BA 82.171 BE 13 Loss 0.8814 TA 96.76
Epoch 14 /50 : Accuracy 82.137 BA 82.171 BE 13 Loss 0.9301 TA 97.55
Epoch 15 /50 : Accuracy 82.206 BA 82.206 BE 15 Loss 0.9095 TA 97.91
Epoch 16 /50 : Accuracy 82.171 BA 82.206 BE 15 Loss 0.9413 TA 98.87
Epoch 17 /50 : Accuracy 83.345 BA 83.345 BE 17 Loss 0.8929 TA 98.93
Epoch 18 /50 : Accuracy 83.828 BA 83.828 BE 18 Loss 0.8763 TA 99.33
Epoch 19 /50 : Accuracy 84.311 BA 84.311 BE 19 Loss 0.8594 TA 99.73
Epoch 20 /50 : Accuracy 84.001 BA 84.311 BE 19 Loss 0.8794 TA 99.65
Epoch 21 /50 : Accuracy 84.122 BA 84.311 BE 19 Loss 0.8902 TA 99.47
Epoch 22 /50 : Accuracy 85.243 BA 85.243 BE 22 Loss 0.8365 TA 99.93
Epoch 23 /50 : Accuracy 84.984 BA 85.243 BE 22 Loss 0.8427 TA 99.77
Epoch 24 /50 : Accuracy 84.829 BA 85.243 BE 22 Loss 0.8708 TA 99.77
Epoch 25 /50 : Accuracy 85.243 BA 85.243 BE 22 Loss 0.8370 TA 99.77
Epoch 26 /50 : Accuracy 86.055 BA 86.055 BE 26 Loss 0.8122 TA 99.97
Epoch 27 /50 : Accuracy 86.210 BA 86.210 BE 27 Loss 0.8063 TA 99.98
Epoch 28 /50 : Accuracy 85.882 BA 86.210 BE 27 Loss 0.8244 TA 99.95
Epoch 29 /50 : Accuracy 85.658 BA 86.210 BE 27 Loss 0.8194 TA 99.97
Epoch 30 /50 : Accuracy 85.934 BA 86.210 BE 27 Loss 0.8201 TA 99.97
Epoch 31 /50 : Accuracy 85.968 BA 86.210 BE 27 Loss 0.8277 TA 99.93
Epoch 32 /50 : Accuracy 85.986 BA 86.210 BE 27 Loss 0.8212 TA 99.97
Epoch 33 /50 : Accuracy 86.210 BA 86.210 BE 27 Loss 0.8157 TA 99.95
Epoch 34 /50 : Accuracy 86.124 BA 86.210 BE 27 Loss 0.8106 TA 100.00
Epoch 35 /50 : Accuracy 85.986 BA 86.210 BE 27 Loss 0.8110 TA 99.98
Epoch 36 /50 : Accuracy 86.158 BA 86.210 BE 27 Loss 0.8093 TA 100.00
Epoch 37 /50 : Accuracy 86.020 BA 86.210 BE 27 Loss 0.8084 TA 99.98
Epoch 38 /50 : Accuracy 86.141 BA 86.210 BE 27 Loss 0.8073 TA 100.00
Epoch 39 /50 : Accuracy 86.158 BA 86.210 BE 27 Loss 0.8032 TA 99.93
Epoch 40 /50 : Accuracy 86.175 BA 86.210 BE 27 Loss 0.8025 TA 100.00
Epoch 41 /50 : Accuracy 86.210 BA 86.210 BE 27 Loss 0.8016 TA 100.00
Epoch 42 /50 : Accuracy 86.193 BA 86.210 BE 27 Loss 0.8015 TA 100.00
Epoch 43 /50 : Accuracy 86.193 BA 86.210 BE 27 Loss 0.8014 TA 100.00
Epoch 44 /50 : Accuracy 86.175 BA 86.210 BE 27 Loss 0.8024 TA 99.97
Epoch 45 /50 : Accuracy 86.175 BA 86.210 BE 27 Loss 0.8018 TA 99.95
Epoch 46 /50 : Accuracy 86.193 BA 86.210 BE 27 Loss 0.8020 TA 100.00
Epoch 47 /50 : Accuracy 86.227 BA 86.227 BE 47 Loss 0.8017 TA 99.98
Epoch 48 /50 : Accuracy 86.175 BA 86.227 BE 47 Loss 0.8017 TA 100.00
Epoch 49 /50 : Accuracy 86.210 BA 86.227 BE 47 Loss 0.8018 TA 99.98
Epoch 50 /50 : Accuracy 86.175 BA 86.227 BE 47 Loss 0.8017 TA 99.97
============================ Finish Training ============================
Best Accuracy 86.227 Best Epoch 47
- - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - -
Training Time 126.95 min Testing Time 44.73 min
- - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - -
Total Time 171.68 min
```

TBC
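To pull the numbers out of a log like this one, a small parser is enough. The regular expression assumes exactly the epoch-line format printed above, and reading BA/BE as best accuracy/best epoch so far and TA as training accuracy is my interpretation of the abbreviations. The `train_steps` helper also checks the reported Train Steps: floor(5994 / 4) × 50 = 1498 × 50 = 74900, whereas rounding up would give 1499 × 50 = 74950, which suggests (but does not prove) that the last incomplete batch of each epoch is dropped.

```python
import math
import re

# Matches lines such as
# "Epoch 47 /50 : Accuracy 86.227 BA 86.227 BE 47 Loss 0.8017 TA 99.98"
EPOCH_RE = re.compile(
    r"Epoch\s+(\d+)\s*/\s*(\d+)\s*:\s*Accuracy\s+([\d.]+)\s+BA\s+([\d.]+)"
    r"\s+BE\s+(\d+)\s+Loss\s+([\d.]+)\s+TA\s+([\d.]+)")


def parse_epochs(text):
    """Return one dict per epoch line found in an IELT-style log."""
    rows = []
    for m in EPOCH_RE.finditer(text):
        rows.append({
            "epoch": int(m.group(1)),
            "acc": float(m.group(3)),         # accuracy reported for this epoch
            "best_acc": float(m.group(4)),    # BA (interpreted as best so far)
            "best_epoch": int(m.group(5)),    # BE (interpreted as best epoch)
            "loss": float(m.group(6)),
            "train_acc": float(m.group(7)),   # TA (interpreted as train accuracy)
        })
    return rows


def best(rows):
    """Highest per-epoch accuracy and the first epoch that reached it."""
    top = max(rows, key=lambda r: r["acc"])  # max keeps the first maximum
    return top["acc"], top["epoch"]


def train_steps(n_samples, batch_size, epochs, drop_last=True):
    """Total training iterations; drop_last mirrors the DataLoader option."""
    if drop_last:
        per_epoch = n_samples // batch_size
    else:
        per_epoch = math.ceil(n_samples / batch_size)
    return per_epoch * epochs
```

Running `best(parse_epochs(log_text))` on the full log above should give the same result as the summary line, 86.227 at epoch 47.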
