openEuler 20.03 (LTS-SP2) aarch64: Deploying Ceph 18.2.0 with cephadm [3]: Bootstrap, and Fixing a Failure Caused by the firewalld Firewall

Continued from the previous post:

openEuler 20.03 (LTS-SP2) aarch64: Deploying Ceph 18.2.0 with cephadm [1]: Offline Deployment, Preparing the Base Environment (CSDN blog)

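Bootstrap with an image from the private registry

--image: use the image hosted on the private registry

--registry-username=x --registry-password=x: any value will do; the script requires these parameters once --image is given

--log-to-file: write the log to a file, which makes it easier to search and analyze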

cephadm --image 10.2.1.176:5000/quay.io/ceph/ceph:v18.2.0 bootstrap --registry-url=10.2.1.176:5000 --registry-username=x --registry-password=x --mon-ip 10.2.1.176 --log-to-file
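
The same command is repeated after every fix below, so it can help to build it from two variables. This is only a sketch: `build_bootstrap_cmd` is a hypothetical helper, not part of cephadm, and it prints the command instead of running it. The registry address, monitor IP, and placeholder credentials are the ones used in this post.

```shell
# Hypothetical helper: print the bootstrap command for a given private
# registry ($1, host:port) and monitor IP ($2). Nothing is executed.
build_bootstrap_cmd() {
    # The username and password are the placeholder "x" used in this post.
    printf 'cephadm --image %s/quay.io/ceph/ceph:v18.2.0 bootstrap --registry-url=%s --registry-username=x --registry-password=x --mon-ip %s --log-to-file\n' \
        "$1" "$1" "$2"
}

# Print the command for this environment; review it, then paste it to run.
build_bootstrap_cmd 10.2.1.176:5000 10.2.1.176
```

Printing rather than executing keeps the helper harmless on a machine where cephadm is not ready yet.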

Failure: /usr/local/sbin/cephadm: /usr/libexec/platform-python: bad interpreter: No such file or directory

Brute-force fix: create the missing interpreter path as a symlink to python3

[root@10-2-1-176 ~]# ln -s /usr/bin/python3 /usr/libexec/platform-python -v

'/usr/libexec/platform-python' -> '/usr/bin/python3'
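
A "bad interpreter" error means the path on the script's first line (the shebang) does not exist on this host. The sketch below checks that before bootstrap is attempted. Both function names are hypothetical, and it assumes cephadm is installed at /usr/local/sbin/cephadm as in the error message above.

```shell
# Print the interpreter path named on a script's shebang line, if any.
shebang_interpreter() {
    head -n 1 "$1" | sed -n 's/^#![[:space:]]*\([^[:space:]]*\).*/\1/p'
}

# Report whether the interpreter exists and is executable.
# Returns 1 only when a shebang is present and its interpreter is missing.
check_interpreter() {
    interp=$(shebang_interpreter "$1")
    if [ -z "$interp" ]; then
        echo "no shebang found in $1"
        return 0
    fi
    if [ -x "$interp" ]; then
        echo "ok: $interp"
        return 0
    fi
    echo "missing interpreter: $interp"
    return 1
}

# Only inspect cephadm when it is actually installed at the assumed path.
script=/usr/local/sbin/cephadm
if [ -f "$script" ]; then
    check_interpreter "$script" || echo "create the interpreter path (or edit the shebang) before bootstrap"
fi
```

Editing the shebang to point at /usr/bin/python3 would be an alternative to the symlink; the symlink has the advantage of leaving the cephadm file untouched.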

Bootstrap again

cephadm --image 10.2.1.176:5000/quay.io/ceph/ceph:v18.2.0 bootstrap --registry-url=10.2.1.176:5000 --registry-username=x --registry-password=x --mon-ip 10.2.1.176 --log-to-file

Failure: /usr/libexec/podman/catatonit: no such file or directory

GitHub only provides an x86 build.

Build catatonit for aarch64 from source

git clone https://github.com/openSUSE/catatonit

cd catatonit

bash autogen.sh

./configure

make

make install

This installs the binary to the default path, /usr/local/bin/catatonit.

Create a symlink

ln -sv /usr/local/bin/catatonit /usr/libexec/podman/catatonit

Bootstrap a third time

cephadm --image 10.2.1.176:5000/quay.io/ceph/ceph:v18.2.0 bootstrap --registry-url=10.2.1.176:5000 --registry-username=x --registry-password=x --mon-ip 10.2.1.176 --log-to-file

Log

[root@10-2-1-176 ~]# cephadm --image 10.2.1.176:5000/quay.io/ceph/ceph:v18.2.0 bootstrap --registry-url=10.2.1.176:5000 --registry-username=x --registry-password=x --mon-ip 10.2.1.176 --log-to-file

Verifying podman|docker is present...

Verifying lvm2 is present...

Verifying time synchronization is in place...

Unit ntpd.service is enabled and running

Repeating the final host check...

podman (/usr/bin/podman) version 3.4.4 is present

systemctl is present

lvcreate is present

Unit ntpd.service is enabled and running

Host looks OK

Cluster fsid: ae4a6358-933b-11ee-8079-faa4b605ed00

Verifying IP 10.2.1.176 port 3300 ...

Verifying IP 10.2.1.176 port 6789 ...

Mon IP `10.2.1.176` is in CIDR network `10.2.1.0/24`

Mon IP `10.2.1.176` is in CIDR network `10.2.1.0/24`

Internal network (--cluster-network) has not been provided, OSD replication will default to the public_network

Logging into custom registry.

Pulling container image 10.2.1.176:5000/quay.io/ceph/ceph:v18.2.0...

Ceph version: ceph version 18.2.0 (5dd24139a1eada541a3bc16b6941c5dde975e26d) reef (stable)

Extracting ceph user uid/gid from container image...

Creating initial keys...

Creating initial monmap...

Creating mon...

firewalld ready

Enabling firewalld service ceph-mon in current zone...

Waiting for mon to start...

Waiting for mon...

mon is available

Assimilating anything we can from ceph.conf...

Generating new minimal ceph.conf...

Restarting the monitor...

Setting mon public_network to 10.2.1.0/24

Wrote config to /etc/ceph/ceph.conf

Wrote keyring to /etc/ceph/ceph.client.admin.keyring

Creating mgr...

Verifying port 9283 ...

Verifying port 8765 ...

Verifying port 8443 ...

firewalld ready

Enabling firewalld service ceph in current zone...

firewalld ready

Enabling firewalld port 9283/tcp in current zone...

Enabling firewalld port 8765/tcp in current zone...

Enabling firewalld port 8443/tcp in current zone...

Waiting for mgr to start...

Waiting for mgr...

mgr not available, waiting (1/15)...

mgr not available, waiting (2/15)...

mgr not available, waiting (3/15)...

mgr is available

Enabling cephadm module...

Waiting for the mgr to restart...

Waiting for mgr epoch 5...

mgr epoch 5 is available

Setting orchestrator backend to cephadm...

Generating ssh key...

Wrote public SSH key to /etc/ceph/ceph.pub

Adding key to root@localhost authorized_keys...

Adding host 10-2-1-176...

Deploying mon service with default placement...

Deploying mgr service with default placement...

Deploying crash service with default placement...

Deploying ceph-exporter service with default placement...

Deploying prometheus service with default placement...

Deploying grafana service with default placement...

Deploying node-exporter service with default placement...

Deploying alertmanager service with default placement...

Enabling the dashboard module...

Waiting for the mgr to restart...

Waiting for mgr epoch 9...

mgr epoch 9 is available

Generating a dashboard self-signed certificate...

Creating initial admin user...

Fetching dashboard port number...

firewalld ready

Ceph Dashboard is now available at:

URL: https://10-2-1-176:8443/

User: admin

Password: un0sk94e9f

Enabling client.admin keyring and conf on hosts with "admin" label

Saving cluster configuration to /var/lib/ceph/ae4a6358-933b-11ee-8079-faa4b605ed00/config directory

Enabling autotune for osd_memory_target

You can access the Ceph CLI as following in case of multi-cluster or non-default config:

sudo /usr/local/sbin/cephadm shell --fsid ae4a6358-933b-11ee-8079-faa4b605ed00 -c /etc/ceph/ceph.conf -k /etc/ceph/ceph.client.admin.keyring

Or, if you are only running a single cluster on this host:

sudo /usr/local/sbin/cephadm shell

Please consider enabling telemetry to help improve Ceph:

ceph telemetry on

For more information see:

https://docs.ceph.com/en/latest/mgr/telemetry/

Bootstrap complete.

Point the dependency images at the private registry

List all container image options

[ceph: root@10-2-1-176 /]# ceph config ls | grep container_image

container_image

mgr/cephadm/container_image_alertmanager

mgr/cephadm/container_image_base

mgr/cephadm/container_image_elasticsearch

mgr/cephadm/container_image_grafana

mgr/cephadm/container_image_haproxy

mgr/cephadm/container_image_jaeger_agent

mgr/cephadm/container_image_jaeger_collector

mgr/cephadm/container_image_jaeger_query

mgr/cephadm/container_image_keepalived

mgr/cephadm/container_image_loki

mgr/cephadm/container_image_node_exporter

mgr/cephadm/container_image_prometheus

mgr/cephadm/container_image_promtail

mgr/cephadm/container_image_snmp_gateway

Set every container image to the address of the internal registry server

ceph config set mgr mgr/cephadm/container_image_alertmanager 10.2.1.176:5000/quay.io/prometheus/alertmanager:v0.25.0

ceph config set mgr mgr/cephadm/container_image_base 10.2.1.176:5000/quay.io/ceph/ceph:v18.2.0

ceph config set mgr mgr/cephadm/container_image_elasticsearch 10.2.1.176:5000/quay.io/omrizeneva/elasticsearch:6.8.23

ceph config set mgr mgr/cephadm/container_image_grafana 10.2.1.176:5000/quay.io/ceph/ceph-grafana:9.4.7

ceph config set mgr mgr/cephadm/container_image_haproxy 10.2.1.176:5000/quay.io/ceph/haproxy:2.3

ceph config set mgr mgr/cephadm/container_image_jaeger_agent 10.2.1.176:5000/quay.io/jaegertracing/jaeger-agent:1.29

ceph config set mgr mgr/cephadm/container_image_jaeger_collector 10.2.1.176:5000/quay.io/jaegertracing/jaeger-collector:1.29

ceph config set mgr mgr/cephadm/container_image_jaeger_query 10.2.1.176:5000/quay.io/jaegertracing/jaeger-query:1.29

ceph config set mgr mgr/cephadm/container_image_keepalived 10.2.1.176:5000/quay.io/ceph/keepalived:2.2.4

ceph config set mgr mgr/cephadm/container_image_loki 10.2.1.176:5000/docker.io/grafana/loki:2.4.0

ceph config set mgr mgr/cephadm/container_image_node_exporter 10.2.1.176:5000/quay.io/prometheus/node-exporter:v1.5.0

ceph config set mgr mgr/cephadm/container_image_prometheus 10.2.1.176:5000/quay.io/prometheus/prometheus:v2.43.0

ceph config set mgr mgr/cephadm/container_image_promtail 10.2.1.176:5000/docker.io/grafana/promtail:2.4.0

# ceph config set mgr mgr/cephadm/container_image_snmp_gateway # no image information found in cephadm, skipped for now
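
Every value above is the upstream image reference prefixed with the private registry address, so the commands can be generated from a short list. This is a minimal sketch: `mirror_ref` and `print_image_overrides` are hypothetical names, only four of the images are listed for brevity, and the commands are printed rather than executed so they can be reviewed first.

```shell
REGISTRY=10.2.1.176:5000

# Prefix an upstream image reference ($2) with the private registry ($1).
mirror_ref() {
    printf '%s/%s\n' "$1" "$2"
}

# Read "option upstream-image" pairs on stdin and print the matching
# ceph config set command for each; $1 is the private registry address.
print_image_overrides() {
    while read -r option upstream; do
        [ -n "$option" ] || continue   # skip blank lines
        printf 'ceph config set mgr mgr/cephadm/container_image_%s %s\n' \
            "$option" "$(mirror_ref "$1" "$upstream")"
    done
}

print_image_overrides "$REGISTRY" <<'EOF'
prometheus quay.io/prometheus/prometheus:v2.43.0
grafana quay.io/ceph/ceph-grafana:9.4.7
alertmanager quay.io/prometheus/alertmanager:v0.25.0
node_exporter quay.io/prometheus/node-exporter:v1.5.0
EOF
```

Piping the printed lines to `sh` inside the cephadm shell would apply them; keeping the list in one place makes it easier to stay in sync with what has actually been pushed to the registry.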

Redeploy the containers

ceph orch redeploy prometheus

ceph orch redeploy grafana

ceph orch redeploy alertmanager

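ceph orch redeploy node-exporter

The redeploy reports an error.

Failure: cannot log in to the registry (failed to login to 10.2.1.176:5000 as x with given password)

Logging in to the registry fails, and the behavior is different from what happens on x86_64.

Testing the registry login by hand: it hangs!

A test image pull hangs as well.

This is probably a network forwarding fault. Check which ports are open, then restart the registry.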

[root@10-2-1-176 ~]# firewall-cmd --list-port

123/udp 5000/tcp 9283/tcp 8765/tcp 8443/tcp

It is a firewall problem

systemctl restart firewalld

podman restart registry

After the iptables rules have been regenerated, a manual podman login succeeds.
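
The diagnosis above can be sketched as two checks: is the registry port in the firewalld port list, and does the registry answer within a few seconds. The function names are hypothetical. The probe assumes the registry is served over plain HTTP and that curl is installed; neither is stated in this post. `/v2/` is the API root of a Docker Registry v2 server.

```shell
# Return 0 if port $2 (for example 5000/tcp) appears in the space-separated
# list $1, which has the same shape as firewall-cmd --list-port output.
port_listed() {
    for p in $1; do
        [ "$p" = "$2" ] && return 0
    done
    return 1
}

# Probe the registry API root with a hard 5 second limit, so that a hang
# shows up as a failure instead of blocking the terminal.
# Assumption: the registry speaks plain HTTP.
registry_reachable() {
    curl --silent --output /dev/null --max-time 5 "http://$1/v2/"
}

# Port list copied from the firewall-cmd output earlier in this post.
ports="123/udp 5000/tcp 9283/tcp 8765/tcp 8443/tcp"
if port_listed "$ports" 5000/tcp; then
    echo "5000/tcp is open in firewalld; if the registry still hangs, suspect stale forwarding rules"
fi
```

On the host, `registry_reachable 10.2.1.176:5000 || echo "registry not answering"` can be run before and after the two restarts to confirm that they made the difference.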

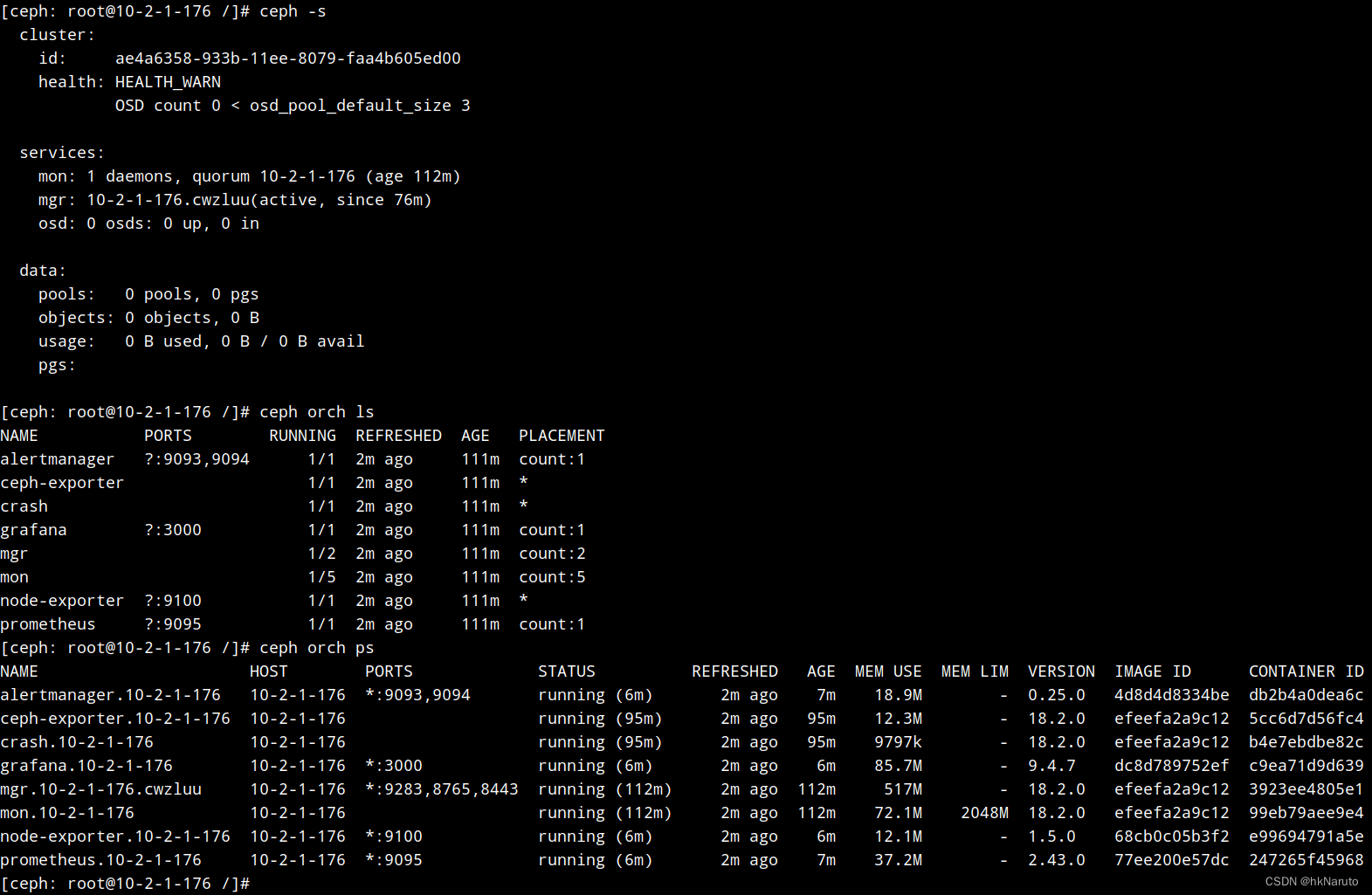

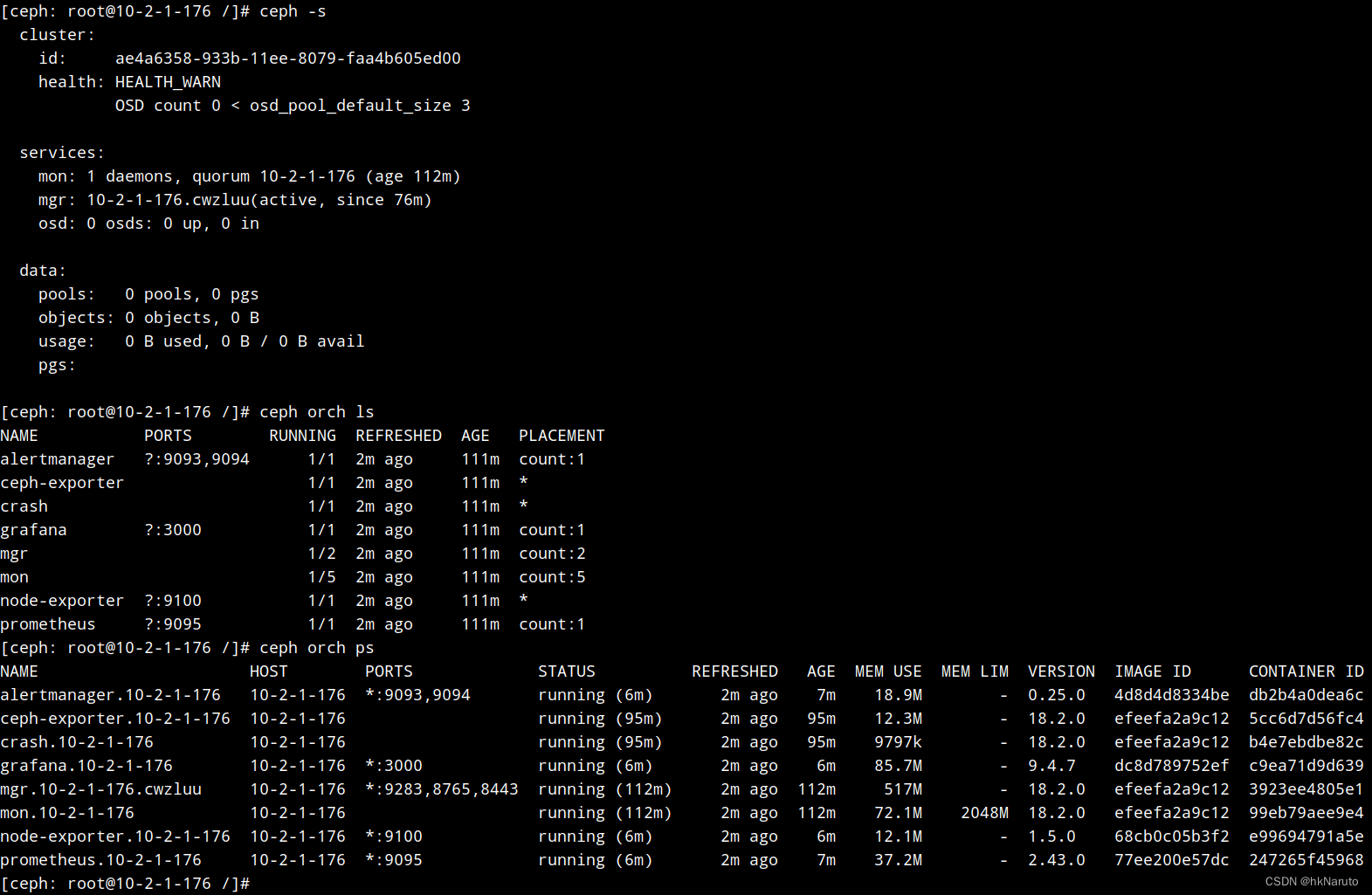

Wait a while, then check the Ceph status again.

It works.
