A LLaMA that reaches 90% of ChatGPT's quality: a full walkthrough of merging and using Chinese-Alpaca-Plus-13B

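There are many open-source projects built on LLaMA. The one tested here continues training LLaMA-7B. It open-sources Chinese LLaMA models and instruction-tuned Alpaca models. These models extend the original LLaMA vocabulary with Chinese tokens and are further pre-trained on Chinese data, which improves their basic Chinese semantic understanding. The Chinese Alpaca models are then fine-tuned on Chinese instruction data, which significantly improves their ability to understand and follow instructions.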


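The first release used 20 GB of Chinese text for pre-training. LLaMA itself is thoroughly pre-trained and has some cross-lingual ability, but 20 GB of Chinese pre-training still appears to be far from sufficient.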

The project therefore made the following improvements and released them as the Plus version:

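1. More training data: the pre-training corpus was expanded to 120 GB of text and the instruction fine-tuning data to 4M instructions (with a focus on additional STEM data), to cover some specialized domain knowledge.

2. Pre-training and fine-tuning now use two separate LoRAs, with ranks 8 and 64 respectively. The authors expect the larger parameter count to hold more knowledge and capability.

3. Better evaluation: the test set grew from 160 to 200 samples (20 per task); the evaluated models switched from Q4 to Q8 quantization, which is closer to the original model and therefore a more meaningful reference; and some prompts were revised to make the instruction intent clearer.

4. Richer documentation: the model-merging workflow was updated and simplified, vocabulary-merging and pre-training code was released, and the help documents were expanded.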
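To put the rank change in item 2 into numbers: a LoRA adapter on a `d_out × d_in` weight adds two trainable matrices, `A` (`r × d_in`) and `B` (`d_out × r`), so its size grows linearly with the rank `r`. The sketch below counts that for a single 4096 × 4096 projection (LLaMA-7B's hidden size); which modules the project actually adapts is not stated here, so treat this as a per-matrix illustration only. `lora_param_count` is a hypothetical helper.

```python
def lora_param_count(d_in, d_out, r):
    """Trainable params LoRA adds to one d_out x d_in weight: A is r x d_in, B is d_out x r."""
    return r * d_in + d_out * r


dim = 4096          # LLaMA-7B hidden size
full = dim * dim    # params in the frozen 4096 x 4096 weight itself

for r in (8, 64):
    n = lora_param_count(dim, dim, r)
    print(f"rank {r:>2}: {n:,} trainable params ({n / full:.2%} of {full:,})")
```

Going from rank 8 to rank 64 gives each adapted matrix 8× the trainable parameters, while still being a small fraction of the frozen weight.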

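The project has two parts:

Chinese LLaMA models

The Chinese LLaMA models extend the original vocabulary with Chinese tokens and are further pre-trained on general-purpose Chinese plain text.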

| Model | Training data | Base model for reconstruction[1] | Size[2] |
|---|---|---|---|
| Chinese-LLaMA-7B | General text, 20 GB | Original LLaMA-7B | 770M |
| Chinese-LLaMA-Plus-7B ⭐️ | General text, 120 GB | Original LLaMA-7B | 790M |
| Chinese-LLaMA-13B | General text, 20 GB | Original LLaMA-13B | 1G |
| Chinese-LLaMA-Plus-13B ⭐️ | General text, 120 GB | Original LLaMA-13B | 1G |
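The vocabulary extension described above can be pictured as appending new pieces to the original vocabulary without disturbing existing ids. The toy sketch below uses plain Python lists; the project's released vocabulary-merging code works on SentencePiece models, so `merge_vocab` and the toy vocabularies are hypothetical illustrations, not that code.

```python
def merge_vocab(base_pieces, extra_pieces):
    """Append pieces from extra_pieces that base_pieces lacks.

    Existing pieces keep their original ids (list positions), so the base
    model's embedding rows stay valid; new pieces get ids after them.
    """
    merged = list(base_pieces)
    seen = set(merged)
    for piece in extra_pieces:
        if piece not in seen:
            merged.append(piece)
            seen.add(piece)
    return merged


# Toy vocabularies (hypothetical), not the real LLaMA / Chinese SentencePiece models
llama_vocab = ["<unk>", "<s>", "</s>", "▁the", "▁model", "中"]
chinese_vocab = ["中", "中文", "模型", "▁the"]

merged = merge_vocab(llama_vocab, chinese_vocab)
print(merged)  # ['<unk>', '<s>', '</s>', '▁the', '▁model', '中', '中文', '模型']
```

Keeping the old ids fixed is what makes `resize_token_embeddings` work. The merge script calls it when the tokenizer is larger than the model's embedding matrix. It keeps the existing embedding rows and only appends rows for the new ids.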

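Chinese Alpaca models

The Chinese Alpaca models are built on the Chinese LLaMA models above and further fine-tuned on instruction data. If you want a ChatGPT-style conversational experience, use an Alpaca model rather than a LLaMA model.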

| Model | Training data | Base model for reconstruction[1] | Size[2] |
|---|---|---|---|
| Chinese-Alpaca-7B | 2M instructions | Original LLaMA-7B | 790M |
| Chinese-Alpaca-Plus-7B ⭐️ | 4M instructions | Original LLaMA-7B & Chinese-LLaMA-Plus-7B[4] | 1.1G |
| Chinese-Alpaca-13B | 3M instructions | Original LLaMA-13B | 1.1G |
| Chinese-Alpaca-Plus-13B ⭐️ | 4.3M instructions | Original LLaMA-13B & Chinese-LLaMA-Plus-13B[4] | 1.3G |

The project provides both 7B and 13B models. My hardware is limited, so I went with 7B.

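First, note that the project trains in two stages. Stage one continues pre-training LLaMA on Chinese text, producing chinese-llama and chinese-llama-plus. Stage two fine-tunes on top of these with instruction data. For a ChatGPT-style chat experience you want the Alpaca model, not the LLaMA model. That means downloading several models and merging them. The example below uses chinese-alpaca-plus-7b.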
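What "merging" means numerically: in its manual branch (used when PEFT lacks `merge_and_unload`), the merge script folds each LoRA into the base weights as `W += transpose(B @ A, fan_in_fan_out) * (lora_alpha / r)`. It processes the LoRAs in the order given to `--lora_model`. The sketch below reproduces that update on small random tensors. The adapters, sizes and `lora_alpha` values are made up for illustration. It checks that the merged weight gives the same output as running the base layer plus both adapters separately.

```python
import torch


def merge_lora(weight, lora_a, lora_b, lora_alpha, r, fan_in_fan_out=False):
    """Fold one LoRA adapter into a weight: W + transpose(B @ A) * (lora_alpha / r)."""
    delta = lora_b @ lora_a            # (d_out, r) @ (r, d_in) -> (d_out, d_in)
    if fan_in_fan_out:                 # weights stored as (d_in, d_out)
        delta = delta.T
    return weight + delta * (lora_alpha / r)


torch.manual_seed(0)
d_out, d_in = 6, 4
w0 = torch.randn(d_out, d_in, dtype=torch.float64)  # stand-in for a base projection weight

# Two hypothetical adapters, "stage 1" (rank 2) and "stage 2" (rank 3), both with scaling 2.0
a1, b1 = torch.randn(2, d_in, dtype=torch.float64), torch.randn(d_out, 2, dtype=torch.float64)
a2, b2 = torch.randn(3, d_in, dtype=torch.float64), torch.randn(d_out, 3, dtype=torch.float64)

w_merged = merge_lora(merge_lora(w0, a1, b1, lora_alpha=4, r=2), a2, b2, lora_alpha=6, r=3)

# Un-merged forward pass: base output plus each adapter's scaled low-rank path
# (nn.Linear computes x @ W.T)
x = torch.randn(5, d_in, dtype=torch.float64)
y_adapters = x @ w0.T + 2.0 * (x @ a1.T) @ b1.T + 2.0 * (x @ a2.T) @ b2.T

print(torch.allclose(x @ w_merged.T, y_adapters))
```

The LoRA deltas themselves are plain additions, so their order does not change the result. In the script, the order still matters for two reasons. Each LoRA's tokenizer triggers an embedding resize. Keys without `lora_` (for example full embedding matrices, when present) are copied over the base weights with `copy_`, so the later adapter's copy wins. This is why chinese-llama-plus is listed before chinese-alpaca-plus.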

Model download

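Three models are needed: (1) the original LLaMA model, (2) chinese-llama-plus-7B, and (3) chinese-alpaca-plus-7B.

The GitHub instructions say to download the original LLaMA weights, convert them to Hugging Face (HF) format, and then merge. I found an HF-format copy directly on Hugging Face, which saves a step (ha). Link:

https://huggingface.co/decapoda-research/llama-7b-hf

Next, download chinese-llama-plus-7B:

https://pan.baidu.com/s/1zvyX9FN-WSRDdrtMARxxfw?pwd=2gtr

Finally, download chinese-alpaca-plus-7B:

https://pan.baidu.com/s/12tjjxmDWwLBM8Tj_7FAjHg?pwd=32hc

Model merging code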

```python
# merge_llama_with_chinese_lora.py
import argparse
import json
import os
import gc
import torch
import peft
from peft import PeftModel
from transformers import LlamaForCausalLM, LlamaTokenizer
from huggingface_hub import hf_hub_download

parser = argparse.ArgumentParser()
parser.add_argument('--base_model', default=None, required=True,
                    type=str, help="Please specify a base_model")
parser.add_argument('--lora_model', default=None, required=True,
                    type=str, help="Please specify LoRA models to be merged (ordered); use commas to separate multiple LoRA models.")
parser.add_argument('--offload_dir', default=None, type=str,
                    help="(Optional) Please specify a temp folder for offloading (useful for low-RAM machines). Default None (disable offload).")
parser.add_argument('--output_type', default='pth', choices=['pth', 'huggingface'], type=str,
                    help="save the merged model in pth or huggingface format.")
parser.add_argument('--output_dir', default='./', type=str)

emb_to_model_size = {
    4096: '7B',
    5120: '13B',
    6656: '30B',
    8192: '65B',
}
num_shards_of_models = {'7B': 1, '13B': 2}
params_of_models = {
    '7B': {
        "dim": 4096,
        "multiple_of": 256,
        "n_heads": 32,
        "n_layers": 32,
        "norm_eps": 1e-06,
        "vocab_size": -1,
    },
    '13B': {
        "dim": 5120,
        "multiple_of": 256,
        "n_heads": 40,
        "n_layers": 40,
        "norm_eps": 1e-06,
        "vocab_size": -1,
    },
}


def transpose(weight, fan_in_fan_out):
    return weight.T if fan_in_fan_out else weight


# Borrowed and modified from https://github.com/tloen/alpaca-lora
def translate_state_dict_key(k):
    k = k.replace("base_model.model.", "")
    if k == "model.embed_tokens.weight":
        return "tok_embeddings.weight"
    elif k == "model.norm.weight":
        return "norm.weight"
    elif k == "lm_head.weight":
        return "output.weight"
    elif k.startswith("model.layers."):
        layer = k.split(".")[2]
        if k.endswith(".self_attn.q_proj.weight"):
            return f"layers.{layer}.attention.wq.weight"
        elif k.endswith(".self_attn.k_proj.weight"):
            return f"layers.{layer}.attention.wk.weight"
        elif k.endswith(".self_attn.v_proj.weight"):
            return f"layers.{layer}.attention.wv.weight"
        elif k.endswith(".self_attn.o_proj.weight"):
            return f"layers.{layer}.attention.wo.weight"
        elif k.endswith(".mlp.gate_proj.weight"):
            return f"layers.{layer}.feed_forward.w1.weight"
        elif k.endswith(".mlp.down_proj.weight"):
            return f"layers.{layer}.feed_forward.w2.weight"
        elif k.endswith(".mlp.up_proj.weight"):
            return f"layers.{layer}.feed_forward.w3.weight"
        elif k.endswith(".input_layernorm.weight"):
            return f"layers.{layer}.attention_norm.weight"
        elif k.endswith(".post_attention_layernorm.weight"):
            return f"layers.{layer}.ffn_norm.weight"
        elif k.endswith("rotary_emb.inv_freq") or "lora" in k:
            return None
        else:
            print(layer, k)
            raise NotImplementedError
    else:
        print(k)
        raise NotImplementedError


def unpermute(w):
    return (
        w.view(n_heads, 2, dim // n_heads // 2, dim).transpose(1, 2).reshape(dim, dim)
    )


def save_shards(model_sd, num_shards: int):
    # Add the no_grad context manager
    with torch.no_grad():
        if num_shards == 1:
            new_state_dict = {}
            for k, v in model_sd.items():
                new_k = translate_state_dict_key(k)
                if new_k is not None:
                    if "wq" in new_k or "wk" in new_k:
                        new_state_dict[new_k] = unpermute(v)
                    else:
                        new_state_dict[new_k] = v

            os.makedirs(output_dir, exist_ok=True)
            print(f"Saving shard 1 of {num_shards} into {output_dir}/consolidated.00.pth")
            torch.save(new_state_dict, output_dir + "/consolidated.00.pth")
            with open(output_dir + "/params.json", "w") as f:
                json.dump(params, f)
        else:
            new_state_dicts = [dict() for _ in range(num_shards)]
            for k in list(model_sd.keys()):
                v = model_sd[k]
                new_k = translate_state_dict_key(k)
                if new_k is not None:
                    if new_k == 'tok_embeddings.weight':
                        print(f"Processing {new_k}")
                        assert v.size(1) % num_shards == 0
                        splits = v.split(v.size(1) // num_shards, dim=1)
                    elif new_k == 'output.weight':
                        print(f"Processing {new_k}")
                        if v.size(0) % num_shards == 0:
                            splits = v.split(v.size(0) // num_shards, dim=0)
                        else:
                            size_list = [v.size(0) // num_shards] * num_shards
                            size_list[-1] += v.size(0) % num_shards
                            splits = v.split(size_list, dim=0)  # 13B: size_list == [24976,24977]
                    elif new_k == 'norm.weight':
                        print(f"Processing {new_k}")
                        splits = [v] * num_shards
                    elif 'ffn_norm.weight' in new_k:
                        print(f"Processing {new_k}")
                        splits = [v] * num_shards
                    elif 'attention_norm.weight' in new_k:
                        print(f"Processing {new_k}")
                        splits = [v] * num_shards
                    elif 'w1.weight' in new_k:
                        print(f"Processing {new_k}")
                        splits = v.split(v.size(0) // num_shards, dim=0)
                    elif 'w2.weight' in new_k:
                        print(f"Processing {new_k}")
                        splits = v.split(v.size(1) // num_shards, dim=1)
                    elif 'w3.weight' in new_k:
                        print(f"Processing {new_k}")
                        splits = v.split(v.size(0) // num_shards, dim=0)
                    elif 'wo.weight' in new_k:
                        print(f"Processing {new_k}")
                        splits = v.split(v.size(1) // num_shards, dim=1)
                    elif 'wv.weight' in new_k:
                        print(f"Processing {new_k}")
                        splits = v.split(v.size(0) // num_shards, dim=0)
                    elif "wq.weight" in new_k or "wk.weight" in new_k:
                        print(f"Processing {new_k}")
                        v = unpermute(v)
                        splits = v.split(v.size(0) // num_shards, dim=0)
                    else:
                        print(f"Unexpected key {new_k}")
                        raise ValueError
                    for sd, split in zip(new_state_dicts, splits):
                        sd[new_k] = split.clone()
                        del split
                    del splits
                del model_sd[k], v
                gc.collect()  # Effectively enforce garbage collection

            os.makedirs(output_dir, exist_ok=True)
            for i, new_state_dict in enumerate(new_state_dicts):
                print(f"Saving shard {i+1} of {num_shards} into {output_dir}/consolidated.0{i}.pth")
                torch.save(new_state_dict, output_dir + f"/consolidated.0{i}.pth")
            with open(output_dir + "/params.json", "w") as f:
                print(f"Saving params.json into {output_dir}/params.json")
                json.dump(params, f)


if __name__ == '__main__':

    args = parser.parse_args()
    base_model_path = args.base_model
    lora_model_paths = [s.strip() for s in args.lora_model.split(',') if len(s.strip()) != 0]
    output_dir = args.output_dir
    output_type = args.output_type
    offload_dir = args.offload_dir

    print(f"Base model: {base_model_path}")
    print(f"LoRA model(s) {lora_model_paths}:")

    if offload_dir is not None:
        # Load with offloading, which is useful for low-RAM machines.
        # Note that if you have enough RAM, please use original method instead, as it is faster.
        base_model = LlamaForCausalLM.from_pretrained(
            base_model_path,
            load_in_8bit=False,
            torch_dtype=torch.float16,
            offload_folder=offload_dir,
            offload_state_dict=True,
            low_cpu_mem_usage=True,
            device_map={"": "cpu"},
        )
    else:
        # Original method without offloading
        base_model = LlamaForCausalLM.from_pretrained(
            base_model_path,
            load_in_8bit=False,
            torch_dtype=torch.float16,
            device_map={"": "cpu"},
        )

    ## infer the model size from the checkpoint
    embedding_size = base_model.get_input_embeddings().weight.size(1)
    model_size = emb_to_model_size[embedding_size]
    print(f"Peft version: {peft.__version__}")
    print(f"Loading LoRA for {model_size} model")

    lora_model = None
    lora_model_sd = None
    for lora_index, lora_model_path in enumerate(lora_model_paths):
        print(f"Loading LoRA {lora_model_path}...")
        tokenizer = LlamaTokenizer.from_pretrained(lora_model_path)
        print(f"base_model vocab size: {base_model.get_input_embeddings().weight.size(0)}")
        print(f"tokenizer vocab size: {len(tokenizer)}")

        model_vocab_size = base_model.get_input_embeddings().weight.size(0)
        assert len(tokenizer) >= model_vocab_size, \
            (f"The vocab size of the tokenizer {len(tokenizer)} is smaller than the vocab size of the base model {model_vocab_size}\n"
             "This is not the intended use. Please check your model and tokenizer.")
        if model_vocab_size != len(tokenizer):
            base_model.resize_token_embeddings(len(tokenizer))
            print(f"Extended vocabulary size to {len(tokenizer)}")

        first_weight = base_model.model.layers[0].self_attn.q_proj.weight
        first_weight_old = first_weight.clone()

        print(f"Loading LoRA weights")
        if hasattr(peft.LoraModel, 'merge_and_unload'):
            try:
                lora_model = PeftModel.from_pretrained(
                    base_model,
                    lora_model_path,
                    device_map={"": "cpu"},
                    torch_dtype=torch.float16,
                )
            except RuntimeError as e:
                if '[49953, 4096]' in str(e):
                    print("The vocab size of the tokenizer does not match the vocab size of the LoRA weight. \n"
                          "Did you misuse the LLaMA tokenizer with the Alpaca-LoRA weight?\n"
                          "Make sure that you use LLaMA tokenizer with the LLaMA-LoRA weight and Alpaca tokenizer with the Alpaca-LoRA weight!")
                raise e
            assert torch.allclose(first_weight_old, first_weight)
            print(f"Merging with merge_and_unload...")
            base_model = lora_model.merge_and_unload()
        else:
            base_model_sd = base_model.state_dict()
            try:
                lora_model_sd = torch.load(os.path.join(lora_model_path, 'adapter_model.bin'), map_location='cpu')
            except FileNotFoundError:
                print("Cannot find lora model on the disk. Downloading lora model from hub...")
                filename = hf_hub_download(repo_id=lora_model_path, filename='adapter_model.bin')
                lora_model_sd = torch.load(filename, map_location='cpu')
            if 'base_model.model.model.embed_tokens.weight' in lora_model_sd:
                assert lora_model_sd['base_model.model.model.embed_tokens.weight'].shape[0] == len(tokenizer), \
                    ("The vocab size of the tokenizer does not match the vocab size of the LoRA weight. \n"
                     "Did you misuse the LLaMA tokenizer with the Alpaca-LoRA weight?\n"
                     "Make sure that you use LLaMA tokenizer with the LLaMA-LoRA weight and Alpaca tokenizer with the Alpaca-LoRA weight!")

            lora_config = peft.LoraConfig.from_pretrained(lora_model_path)
            lora_scaling = lora_config.lora_alpha / lora_config.r
            fan_in_fan_out = lora_config.fan_in_fan_out
            lora_keys = [k for k in lora_model_sd if 'lora_A' in k]
            non_lora_keys = [k for k in lora_model_sd if not 'lora_' in k]

            for k in non_lora_keys:
                print(f"merging {k}")
                original_k = k.replace('base_model.model.', '')
                base_model_sd[original_k].copy_(lora_model_sd[k])

            for k in lora_keys:
                print(f"merging {k}")
                original_key = k.replace('.lora_A', '').replace('base_model.model.', '')
                assert original_key in base_model_sd
                lora_a_key = k
                lora_b_key = k.replace('lora_A', 'lora_B')
                base_model_sd[original_key] += (
                    transpose(lora_model_sd[lora_b_key].float() @ lora_model_sd[lora_a_key].float(), fan_in_fan_out) * lora_scaling
                )
                assert base_model_sd[original_key].dtype == torch.float16

        # did we do anything?
        assert not torch.allclose(first_weight_old, first_weight)

    tokenizer.save_pretrained(output_dir)

    if output_type == 'huggingface':
        print("Saving to Hugging Face format...")
        LlamaForCausalLM.save_pretrained(base_model, output_dir)  #, state_dict=deloreanized_sd)
    else:  # output_type=='pth'
        print("Saving to pth format...")

        base_model_sd = base_model.state_dict()
        del lora_model, base_model, lora_model_sd

        params = params_of_models[model_size]
        num_shards = num_shards_of_models[model_size]
        n_layers = params["n_layers"]
        n_heads = params["n_heads"]
        dim = params["dim"]
        dims_per_head = dim // n_heads
        base = 10000.0
        inv_freq = 1.0 / (base ** (torch.arange(0, dims_per_head, 2).float() / dims_per_head))

        save_shards(model_sd=base_model_sd, num_shards=num_shards)
```
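One detail in the `pth` output path is worth spelling out: the script calls `unpermute` on the `wq`/`wk` weights. Hugging Face's LLaMA implementation applies rotary embeddings to split halves of each head, while Meta's original code uses interleaved pairs. HF's conversion therefore reorders the rows of `q_proj`/`k_proj`, and converting back to `.pth` has to undo that. The sketch below assumes `permute` mirrors the reordering in transformers' `convert_llama_weights_to_hf.py`. It checks on toy sizes that the merge script's `unpermute` expression inverts it. This only matters for `--output_type pth`; the command used in this post writes Hugging Face format.

```python
import torch


def permute(w, n_heads, dim):
    # Assumed to mirror the q/k row reordering in transformers' convert_llama_weights_to_hf.py
    return w.view(n_heads, dim // n_heads // 2, 2, dim).transpose(1, 2).reshape(dim, dim)


def unpermute(w, n_heads, dim):
    # Same expression as unpermute() in the merge script, with n_heads/dim passed explicitly
    return w.view(n_heads, 2, dim // n_heads // 2, dim).transpose(1, 2).reshape(dim, dim)


n_heads, dim = 2, 8  # toy sizes; LLaMA-7B uses n_heads=32, dim=4096
w = torch.arange(dim * dim, dtype=torch.float32).reshape(dim, dim)

hf_layout = permute(w, n_heads, dim)
restored = unpermute(hf_layout, n_heads, dim)

print(hf_layout[:4, 0])           # first head's rows come out in order 0, 2, 1, 3
print(torch.equal(restored, w))   # round trip recovers the original layout
```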

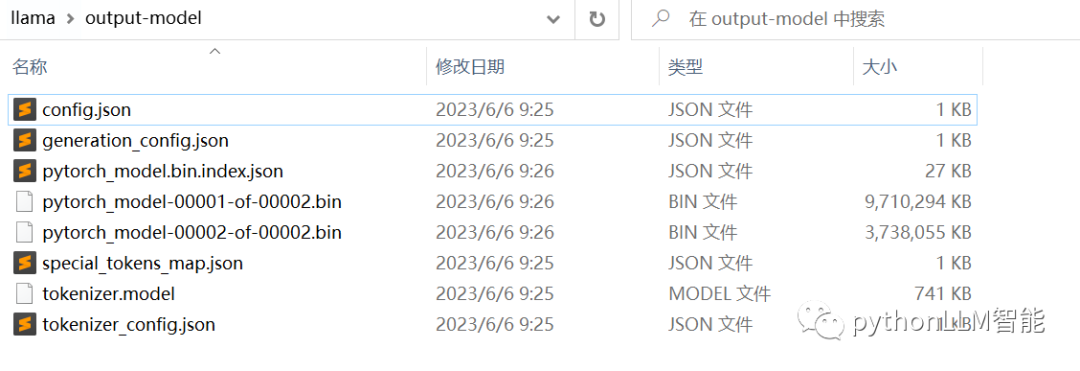

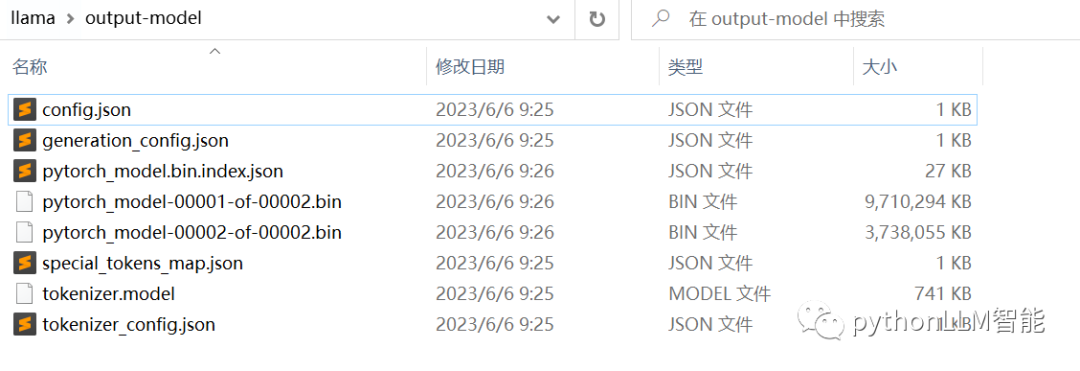

Remember to create a new directory for the merged model; mine is called output-model.
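Before a long merge run, a quick pre-flight check can catch a wrong or incomplete download early. The merge script reads `tokenizer.model` (via `LlamaTokenizer`) and `adapter_config.json` (via `LoraConfig`) from each LoRA directory. Its manual branch also loads `adapter_model.bin`. The sketch below checks for those three files and creates the output directory. `preflight` is a hypothetical helper. Newer PEFT releases may save `adapter_model.safetensors` instead of `.bin`, so adjust the file list if your downloads differ.

```python
from pathlib import Path

# Files the merge script reads from each LoRA directory (see lead-in; adjust if your downloads differ)
REQUIRED_LORA_FILES = ("adapter_config.json", "adapter_model.bin", "tokenizer.model")


def preflight(lora_dirs, output_dir):
    """Return {lora_dir: [missing files]} and make sure output_dir exists."""
    missing = {}
    for d in lora_dirs:
        absent = [name for name in REQUIRED_LORA_FILES if not (Path(d) / name).is_file()]
        if absent:
            missing[str(d)] = absent
    Path(output_dir).mkdir(parents=True, exist_ok=True)
    return missing
```

For this post's layout, `preflight(["chinese_llama_plus_lora_7b", "chinese_alpaca_plus_lora_7b"], "output-model")` should return an empty dict when everything is in place.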

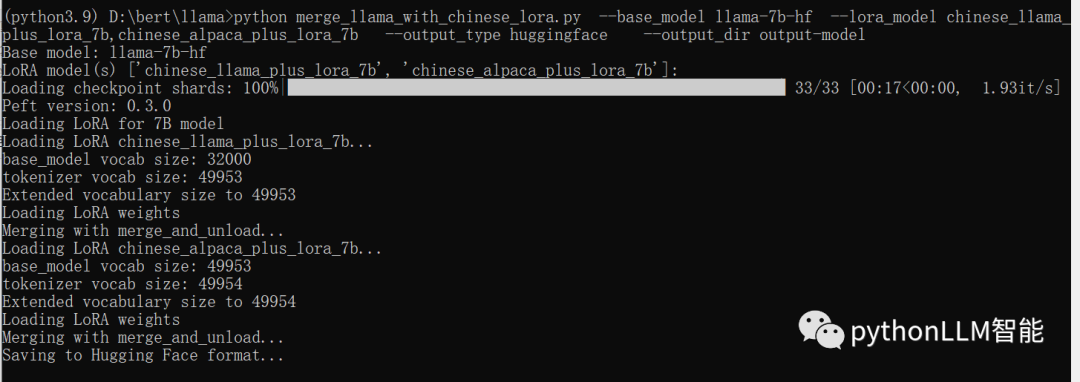

Run the script with the following command:

```
python merge_llama_with_chinese_lora.py \
    --base_model llama-7b-hf \
    --lora_model chinese_llama_plus_lora_7b,chinese_alpaca_plus_lora_7b \
    --output_type huggingface \
    --output_dir output-model
```

Execution result

The final merged model is saved in output-model, as shown in the figure.

Loading and testing in a web UI

Gradio again, ha.

```python
# gradio_demo.py
import sys
import gradio as gr
import argparse
import os
import mdtex2html

parser = argparse.ArgumentParser()
parser.add_argument('--base_model', default=None, type=str, required=True)
parser.add_argument('--lora_model', default=None, type=str, help="If None, perform inference on the base model")
parser.add_argument('--tokenizer_path', default=None, type=str)
parser.add_argument('--gpus', default="0", type=str)
parser.add_argument('--only_cpu', action='store_true', help='only use CPU for inference')
args = parser.parse_args()
if args.only_cpu is True:
    args.gpus = ""
# Must be set before torch is imported so CUDA only sees the selected GPUs
os.environ["CUDA_VISIBLE_DEVICES"] = args.gpus

import torch
from transformers import LlamaForCausalLM, LlamaTokenizer, GenerationConfig
from peft import PeftModel


def postprocess(self, y):
    if y is None:
        return []
    for i, (message, response) in enumerate(y):
        y[i] = (
            None if message is None else mdtex2html.convert((message)),
            None if response is None else mdtex2html.convert(response),
        )
    return y


gr.Chatbot.postprocess = postprocess

generation_config = dict(
    temperature=0.2,
    top_k=40,
    top_p=0.9,
    do_sample=True,
    num_beams=1,
    repetition_penalty=1.1,
    max_new_tokens=400
)

load_type = torch.float16
if torch.cuda.is_available():
    device = torch.device(0)
else:
    device = torch.device('cpu')
# Fall back to the LoRA directory's tokenizer, or the base model's if no LoRA is given
if args.tokenizer_path is None:
    args.tokenizer_path = args.lora_model
    if args.lora_model is None:
        args.tokenizer_path = args.base_model
tokenizer = LlamaTokenizer.from_pretrained(args.tokenizer_path)

base_model = LlamaForCausalLM.from_pretrained(
    args.base_model,
    load_in_8bit=False,
    offload_folder="offload",
    torch_dtype=load_type,
    low_cpu_mem_usage=True,
    device_map='auto',
)

model_vocab_size = base_model.get_input_embeddings().weight.size(0)
tokenzier_vocab_size = len(tokenizer)
print(f"Vocab of the base model: {model_vocab_size}")
print(f"Vocab of the tokenizer: {tokenzier_vocab_size}")
if model_vocab_size != tokenzier_vocab_size:
    assert tokenzier_vocab_size > model_vocab_size
    print("Resize model embeddings to fit tokenizer")
    base_model.resize_token_embeddings(tokenzier_vocab_size)
if args.lora_model is not None:
    print("loading peft model")
    model = PeftModel.from_pretrained(
        base_model,
        args.lora_model,
        torch_dtype=load_type,
        device_map='auto',
    )
else:
    model = base_model

if device == torch.device('cpu'):
    model.float()

model.eval()


def reset_user_input():
    return gr.update(value='')


def reset_state():
    return [], []


def generate_prompt(instruction):
    return f"""Below is an instruction that describes a task. Write a response that appropriately completes the request.

### Instruction:
{instruction}

### Response: """


if torch.__version__ >= "2" and sys.platform != "win32":
    model = torch.compile(model)


def predict(
    input,
    chatbot,
    history,
    max_new_tokens=128,
    top_p=0.75,
    temperature=0.1,
    top_k=40,
    num_beams=4,
    repetition_penalty=1.0,
    max_memory=256,
    **kwargs,
):
    now_input = input
    chatbot.append((input, ""))
    history = history or []
    if len(history) != 0:
        # Serialize earlier turns, then drop the leading marker that generate_prompt adds back
        input = "".join(["### Instruction:\n" + i[0] + "\n\n" + "### Response: " + i[1] + "\n\n" for i in history]) + \
            "### Instruction:\n" + input
        input = input[len("### Instruction:\n"):]
        if len(input) > max_memory:
            input = input[-max_memory:]
    prompt = generate_prompt(input)
    inputs = tokenizer(prompt, return_tensors="pt")
    input_ids = inputs["input_ids"].to(device)
    generation_config = GenerationConfig(
        temperature=temperature,
        top_p=top_p,
        top_k=top_k,
        num_beams=num_beams,
        **kwargs,
    )
    with torch.no_grad():
        generation_output = model.generate(
            input_ids=input_ids,
            generation_config=generation_config,
            return_dict_in_generate=True,
            output_scores=False,
            max_new_tokens=int(max_new_tokens),  # the Gradio slider may deliver a float
            repetition_penalty=float(repetition_penalty),
        )
    s = generation_output.sequences[0]
    output = tokenizer.decode(s, skip_special_tokens=True)
    output = output.split("### Response:")[-1].strip()
    history.append((now_input, output))
    chatbot[-1] = (now_input, output)
    return chatbot, history


with gr.Blocks() as demo:
    gr.HTML("""<h1 align="center">Chinese LLaMA & Alpaca LLM</h1>""")
    current_file_path = os.path.abspath(os.path.dirname(__file__))
    gr.Image(f'banner.png', label='Chinese LLaMA & Alpaca LLM')
    gr.Markdown(
        "> To promote open research on large models in the Chinese NLP community, this project "
        "open-sources Chinese LLaMA models and instruction-tuned Alpaca models. These models extend "
        "the original LLaMA vocabulary with Chinese tokens and are further pre-trained on Chinese data, "
        "improving basic Chinese semantic understanding. The Chinese Alpaca models are additionally "
        "fine-tuned on Chinese instruction data, significantly improving their ability to understand "
        "and follow instructions."
    )
    chatbot = gr.Chatbot()
    with gr.Row():
        with gr.Column(scale=4):
            with gr.Column(scale=12):
                user_input = gr.Textbox(show_label=False, placeholder="Input...", lines=10).style(container=False)
            with gr.Column(min_width=32, scale=1):
                submitBtn = gr.Button("Submit", variant="primary")
        with gr.Column(scale=1):
            emptyBtn = gr.Button("Clear History")
            max_length = gr.Slider(0, 4096, value=128, step=1.0, label="Maximum length", interactive=True)
            top_p = gr.Slider(0, 1, value=0.8, step=0.01, label="Top P", interactive=True)
            temperature = gr.Slider(0, 1, value=0.7, step=0.01, label="Temperature", interactive=True)

    history = gr.State([])  # (message, bot_message)

    submitBtn.click(predict, [user_input, chatbot, history, max_length, top_p, temperature], [chatbot, history],
                    show_progress=True)
    submitBtn.click(reset_user_input, [], [user_input])

    emptyBtn.click(reset_state, outputs=[chatbot, history], show_progress=True)

demo.queue().launch(share=False, inbrowser=True, server_name='0.0.0.0', server_port=19324)
```
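Multi-turn chat in the demo works by string concatenation, not by any model-side memory. Each earlier (question, answer) pair is serialized with the same `### Instruction:` / `### Response:` markers and wrapped in the Alpaca template. The sketch below pulls that logic out of `predict` into a pure function so it can be inspected without loading a model. It reflects my reading of the flattened original: truncation happens only when there is history. The template's line breaks, which were lost in the flattened code, are assumed to follow the standard Alpaca layout. `build_prompt` and `PREAMBLE` are hypothetical names.

```python
PREAMBLE = (
    "Below is an instruction that describes a task. "
    "Write a response that appropriately completes the request.\n\n"
)


def generate_prompt(instruction):
    # Alpaca-style single-turn template (line breaks assumed, see lead-in)
    return PREAMBLE + "### Instruction:\n" + instruction + "\n\n### Response: "


def build_prompt(history, user_input, max_memory=256):
    """Fold (question, answer) pairs plus the new input into one prompt string."""
    text = user_input
    if history:
        text = "".join(
            "### Instruction:\n" + q + "\n\n" + "### Response: " + a + "\n\n"
            for q, a in history
        ) + "### Instruction:\n" + user_input
        # generate_prompt adds the first "### Instruction:\n" back, so strip it here
        text = text[len("### Instruction:\n"):]
        # Keep only the last max_memory characters (characters, not tokens)
        if len(text) > max_memory:
            text = text[-max_memory:]
    return generate_prompt(text)


print(build_prompt([("Hello", "Hi! How can I help?")], "Tell me about Beijing"))
```

Because the budget is counted in characters, a long history can be cut in the middle of a turn, leaving a fragment of a marker at the start of the prompt. A token-based budget (via the tokenizer) would be a natural refinement.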

You can run it on the CPU, though a GPU is of course better.
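A rough sense of the memory involved helps when choosing CPU or GPU. The demo loads weights in fp16, but on CPU it calls `model.float()`, which doubles the footprint. The sketch below estimates the weight count from the same hyperparameters as `params_of_models` in the merge script (`dim`, `n_layers`, `multiple_of`). It assumes LLaMA's feed-forward sizing rule and an untied output head. It counts weights only; activations and the KV cache come on top. For the Chinese models, pass `len(tokenizer)` as `vocab_size` to include the extended embedding rows.

```python
def ffn_hidden_dim(dim, multiple_of):
    # LLaMA's feed-forward size: 2/3 of 4*dim, rounded up to a multiple of `multiple_of`
    hidden = int(2 * (4 * dim) / 3)
    return multiple_of * ((hidden + multiple_of - 1) // multiple_of)


def llama_param_count(dim, n_layers, multiple_of, vocab_size):
    hidden = ffn_hidden_dim(dim, multiple_of)
    per_layer = (
        4 * dim * dim          # attention wq, wk, wv, wo
        + 3 * dim * hidden     # feed-forward w1, w2, w3
        + 2 * dim              # attention_norm and ffn_norm weights
    )
    # token embeddings + separate output head + final norm
    return 2 * vocab_size * dim + n_layers * per_layer + dim


def weight_gib(n_params, bytes_per_param):
    return n_params * bytes_per_param / 2**30


n = llama_param_count(dim=4096, n_layers=32, multiple_of=256, vocab_size=32000)  # original LLaMA-7B
print(f"{n:,} params: fp16 ~{weight_gib(n, 2):.1f} GiB, fp32 ~{weight_gib(n, 4):.1f} GiB")
```

With the original 32,000-token vocabulary this reproduces LLaMA-7B's 6,738,415,616 parameters. That is roughly 12.6 GiB in fp16 and 25.1 GiB in fp32. This is why CPU-only inference with `model.float()` needs a lot of RAM.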

Command to launch the web UI:

```
python gradio_demo.py --base_model output-model --only_cpu
```

Test results

According to the official evaluation, Plus-7B is not far behind Plus-13B (overall average 78.2 vs 80.8):

| Task | Samples | Alpaca-13B | Alpaca-Plus-7B | Alpaca-Plus-13B |
|---|---|---|---|---|
| 💯 Overall average | 200 | 74.3 | 78.2 | 👍🏻80.8 |
| Knowledge QA | 20 | 70 | 74 | 👍🏻79 |
| Open-ended QA | 20 | 77 | 77 | 77 |
| Numerical computation & reasoning | 20 | 61 | 61 | 60 |
| Poetry, literature & philosophy | 20 | 65 | 👍🏻76 | 👍🏻76 |
| Music, sports & entertainment | 20 | 68 | 73 | 👍🏻80 |
| Letter & article writing | 20 | 83 | 82 | 👍🏻87 |
| Text translation | 20 | 84 | 87 | 👍🏻90 |
| Multi-turn dialogue | 20 | 88 | 89 | 89 |
| Coding | 20 | 65 | 64 | 👍🏻70 |
| Ethics & refusals | 20 | 82 | 👍🏻99 | 👍🏻100 |
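Every task in the table has the same 20 samples, so the overall average should simply be the unweighted mean of the ten task scores. The quick check below uses the per-task scores copied from the table, in row order. It confirms that, and computes the gaps quoted in the text.

```python
# Per-task scores from the table above, in row order (Knowledge QA ... Ethics & refusals)
scores = {
    "Alpaca-13B":      [70, 77, 61, 65, 68, 83, 84, 88, 65, 82],
    "Alpaca-Plus-7B":  [74, 77, 61, 76, 73, 82, 87, 89, 64, 99],
    "Alpaca-Plus-13B": [79, 77, 60, 76, 80, 87, 90, 89, 70, 100],
}

averages = {name: sum(s) / len(s) for name, s in scores.items()}
for name, avg in averages.items():
    print(f"{name}: {avg:.1f}")

print(f"Plus-13B vs Plus-7B: +{averages['Alpaca-Plus-13B'] - averages['Alpaca-Plus-7B']:.1f}")
print(f"Plus-7B vs Alpaca-13B: +{averages['Alpaca-Plus-7B'] - averages['Alpaca-13B']:.1f}")
```

The recomputed means match the reported 74.3, 78.2 and 80.8. The smaller Plus-7B model scores about 3.9 points above the older Alpaca-13B, and about 2.6 points below Plus-13B.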

The rest is up to you. Go test it, ha...

The project also provides fine-tuning scripts if you need them.

GitHub: https://github.com/ymcui/Chinese-LLaMA-Alpaca
