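YOLOv5s Pruning + Quantization + Android Deployment Study Notes: Model Pruning

Trying out model pruning, quantization, and Android deployment with YOLOv5s.

I have recently been taking a course on embedded AI systems and became interested in model pruning and quantization, so I worked through a number of online tutorials. These notes record the steps I followed and my understanding of the code.

I. Model Pruning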

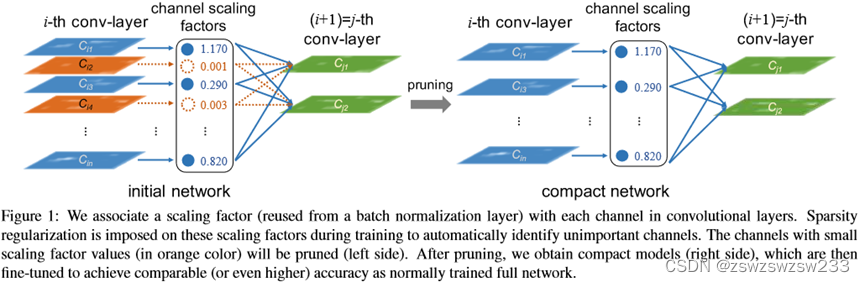

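The base model is YOLOv5s. The pruning method comes from the paper Learning Efficient Convolutional Networks through Network Slimming, which adds an L1 regularization term on the scaling factors of the BN layers to the loss function. This pushes the scaling factors toward sparsity, so the important channels are identified automatically. The scaling factor acts as a "proxy for channel selection". After sparsity training, the scaling factors of unimportant channels are close to 0, and those channels can be removed with little effect on inference results.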
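For reference, the training objective from the Network Slimming paper can be written as below. Reading $\lambda$ as the `sr` option used later in this post is my interpretation of the code, not something the repository states.

```latex
L = \sum_{(x,\,y)} l\big(f(x, W),\, y\big) \;+\; \lambda \sum_{\gamma \in \Gamma} g(\gamma),
\qquad g(\gamma) = |\gamma|
```

Since $|\gamma|$ is not differentiable at 0, the subgradient $\lambda\,\mathrm{sign}(\gamma)$ is added to the gradient of each scaling factor, which is what the `torch.sign` term in the training code does.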

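1. Sparsity training

The code is based on midasklr/yolov5prune on GitHub (the v6.0 branch). The following snippet from train_sparsity.py finds the BN layers that qualify and adds L1 regularization to gamma and beta (weight and bias in PyTorch).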

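```python
# Excerpt from the training loop in train_sparsity.py (runs after loss.backward())
srtmp = opt.sr * (1 - 0.9 * epoch / epochs)  # L1 coefficient for gamma, decays with the epoch
if opt.st:
    ignore_bn_list = []
    for k, m in model.named_modules():
        if isinstance(m, Bottleneck):
            if m.add:  # Bottleneck with a shortcut addition: its BN layers must not be pruned
                ignore_bn_list.append(k.rsplit(".", 2)[0] + ".cv1.bn")
                ignore_bn_list.append(k + '.cv1.bn')
                ignore_bn_list.append(k + '.cv2.bn')
        if isinstance(m, nn.BatchNorm2d) and (k not in ignore_bn_list):
            m.weight.grad.data.add_(srtmp * torch.sign(m.weight.data))  # L1 on gamma
            m.bias.grad.data.add_(opt.sr * 10 * torch.sign(m.bias.data))  # L1 on beta
```

Some Bottleneck structures inside the C3 modules of YOLOv5s have a skip connection followed by an element-wise addition, so the two branches being added must have the same number of channels. The BN layers in Bottlenecks that involve this addition therefore cannot be pruned. The code uses m.add to check whether the Bottleneck currently being visited performs the addition. The definition of the Bottleneck class shows that the addition only happens when the input channel count equals the output channel count and the shortcut parameter of the C3 module is True:

```python
self.add = shortcut and c1 == c2
```

For such a Bottleneck, its two BN layers and the BN layer of the Conv module directly above it must all go into the do-not-prune list (the three sit in series, and if any of them changed its channel count the addition would no longer line up). Taking the first C3 module in the backbone as an example, the pruning situation is shown in the figure below: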

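ignore_bn_list receives model.2.cv1.bn, model.2.m.0.cv1.bn, and model.2.m.0.cv2.bn. Note that the Conv module on the left (model.2.cv2) is still pruned, because what follows it is a concat rather than an addition.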
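The three names can be derived from the Bottleneck's module name with the same `rsplit` call used in the training snippet. A minimal sketch in plain Python (the helper name `bn_names_to_skip` is mine, not from the repository):

```python
def bn_names_to_skip(bottleneck_name):
    """Return the three BN layer names tied to one shortcut Bottleneck."""
    # "model.2.m.0" -> ["model.2", "m", "0"]; the first piece is the parent C3 module
    c3_name = bottleneck_name.rsplit(".", 2)[0]
    return [
        c3_name + ".cv1.bn",          # Conv that feeds the Bottleneck chain
        bottleneck_name + ".cv1.bn",  # first Conv inside the Bottleneck
        bottleneck_name + ".cv2.bn",  # second Conv inside the Bottleneck
    ]


names = bn_names_to_skip("model.2.m.0")
print(names)
```

For a C3 module with several Bottlenecks, each one contributes the same parent `cv1.bn` name again, so the list may contain duplicates; that is harmless because it is only used for membership checks.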

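The gamma term is multiplied by a coefficient, srtmp, that shrinks as training progresses. The opt.sr parameter is critical: if it is too large, gamma approaches 0 quickly but mAP may drop badly; if it is too small, the model may still not be sparse enough when training ends. Expect to train several times and tune it carefully.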
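To see how quickly the coefficient decays, the srtmp formula can be evaluated on its own. This is a small sketch; the function name `decayed_sr` is mine, and the values 0.0025 and 70 are the settings of the final run described later in this post.

```python
def decayed_sr(sr, epoch, epochs):
    """L1 coefficient applied to BN gamma at a given epoch (same formula as srtmp)."""
    return sr * (1 - 0.9 * epoch / epochs)


sr, epochs = 0.0025, 70
for epoch in (0, 35, 69):
    print(epoch, decayed_sr(sr, epoch, epochs))
```

The coefficient falls linearly from sr toward 0.1 * sr. The beta term in the training snippet uses a fixed opt.sr * 10 and is not decayed.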

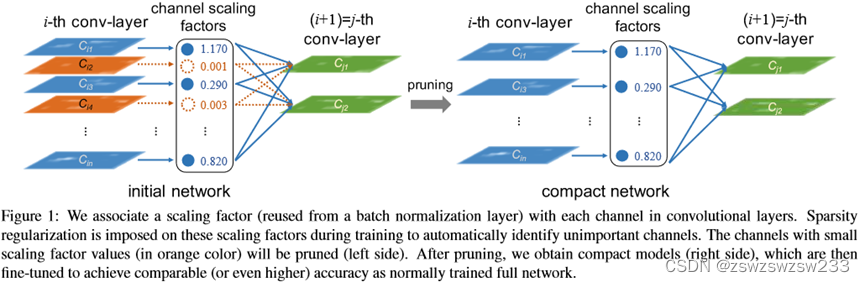

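The dataset is a traffic-light dataset of just over 3,000 images that I found online (time was limited), with four classes: red, green, yellow, and off. Starting from the bundled pretrained weights, I trained for 50 epochs. Results on the validation set are shown below; mAP_0.5 is 0.949.

The resulting last.pt serves as the baseline; the model size is 13.7 MB.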

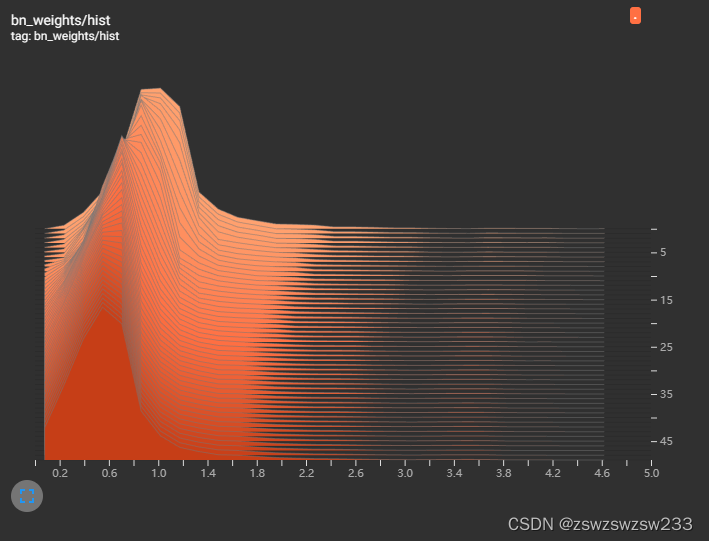

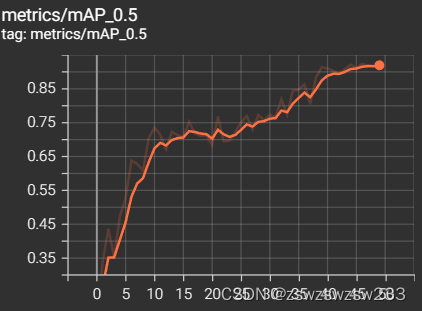

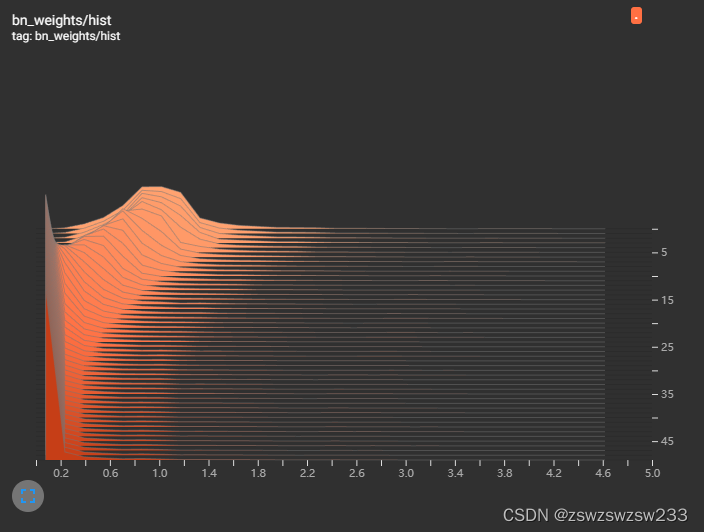

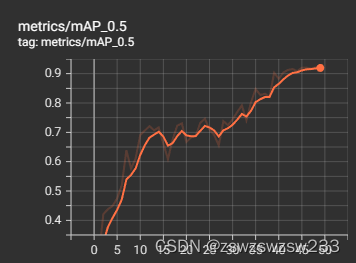

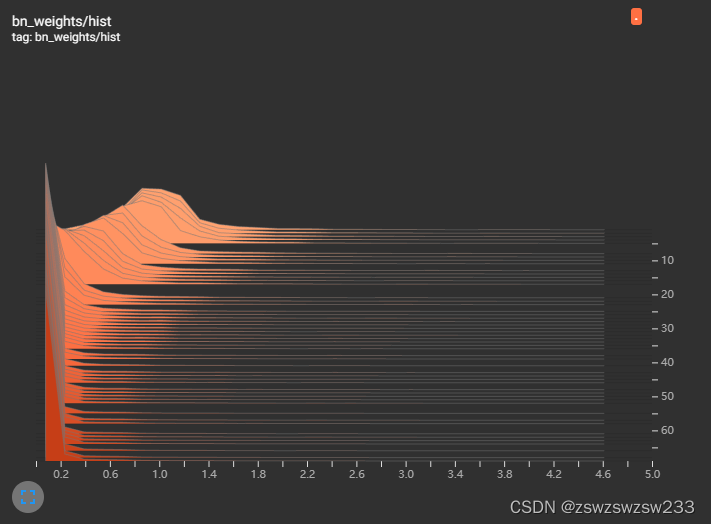

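Now for the actual sparsity training. I first set sr to 0.001 and batch_size to 16 (kept the same in all later runs) and ran 50 epochs as a trial, watching the results live in TensorBoard. In the figure below the vertical axis is the training epoch and the horizontal axis is the gamma value. By the end of training the overall distribution has shifted slightly toward 0, but nowhere near enough.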

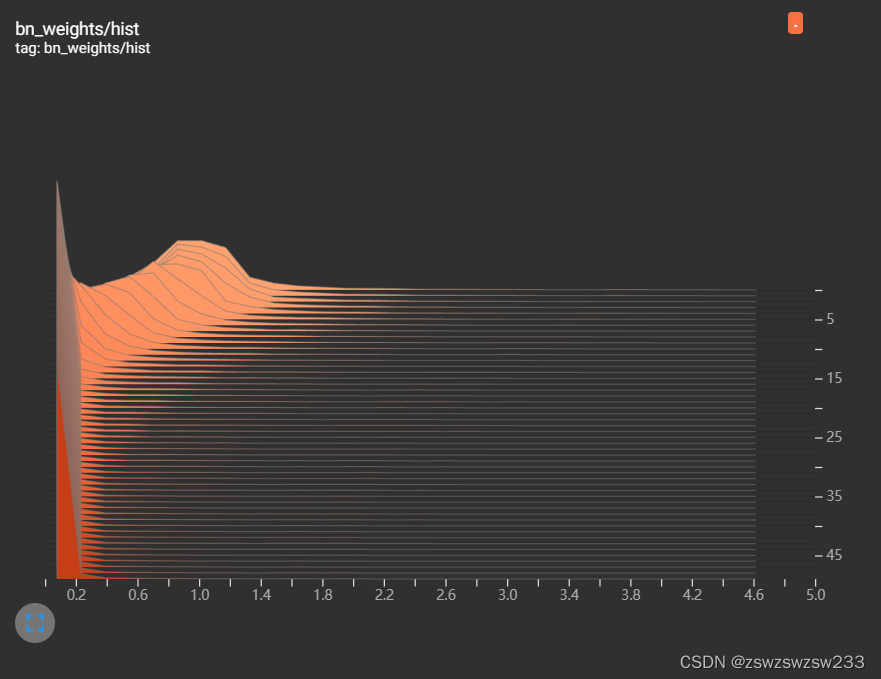

For the second run I set sr to 0.005. Gamma moves toward 0 very quickly, but mAP drops badly and is only a little over 0.6 when training ends.

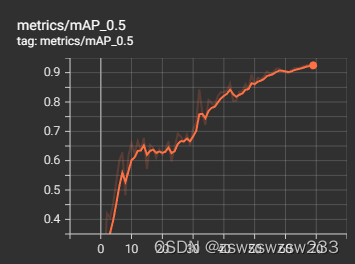

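I then tried sr = 0.002. By the end of training gamma had not moved far enough toward 0 and mAP was still trending upward, so the options were to raise sr or to train longer. I tried 0.003, which felt too aggressive again: mAP did not recover enough toward the end.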

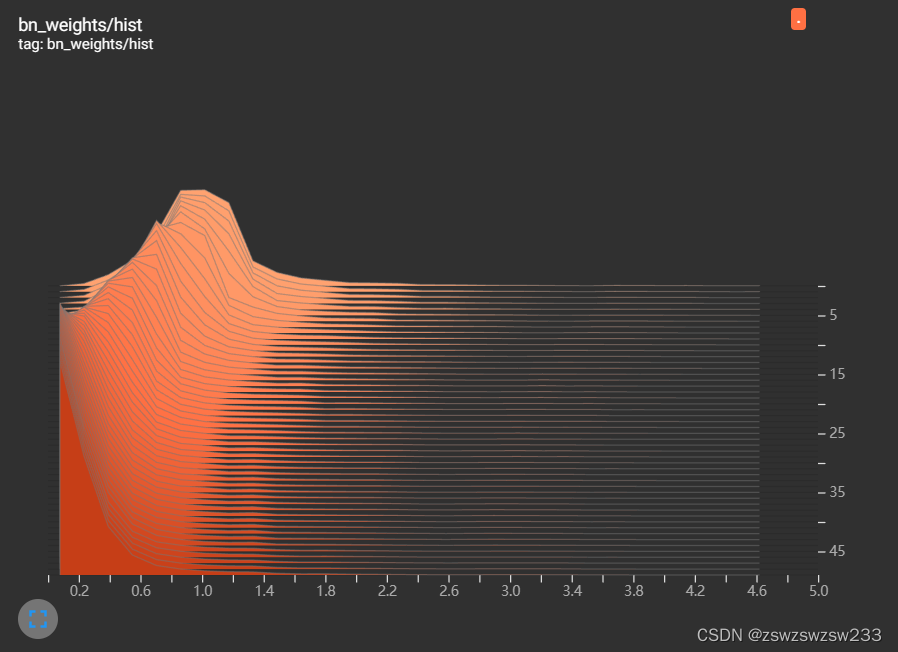

Next I tried sr = 0.0025. This time mAP finally exceeded 0.9 by the end of training, although gamma could still move a little closer to 0.

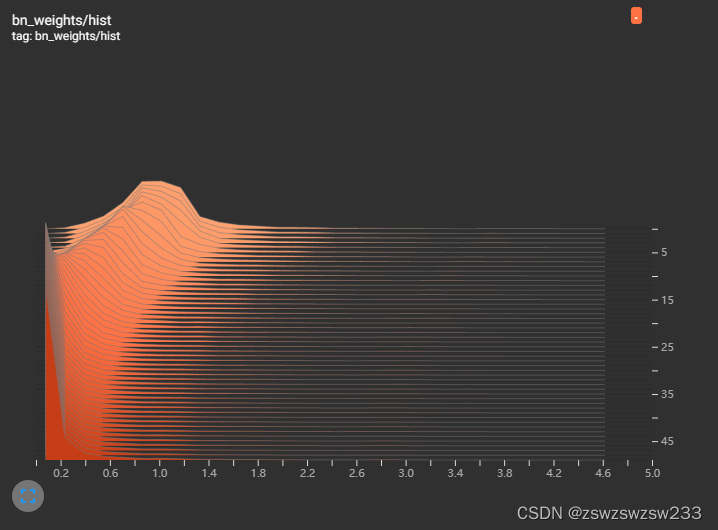

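This time I kept sr unchanged and raised the number of epochs to 70. The final result is shown below. mAP dropped slightly, which fine-tuning can recover.

That concludes sparsity training. The pruning below is applied to the model trained with sr=0.0025 and epoch=70.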
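In these runs I judged "close enough to 0" by eye from the TensorBoard histogram. One way to put a number on it would be the share of gamma values below a small cutoff. The helper below is a sketch under that assumption; the name `fraction_below`, the cutoff, and the sample values are all made up for illustration and are not taken from the trained model.

```python
def fraction_below(gammas, eps=1e-2):
    """Share of BN scaling factors whose magnitude is under eps."""
    gammas = list(gammas)
    if not gammas:
        raise ValueError("gammas must not be empty")
    return sum(1 for g in gammas if abs(g) < eps) / len(gammas)


# Made-up gamma values, only to show the call.
example = [0.0003, -0.004, 0.8, 0.0009, 1.1, 0.02, -0.0001, 0.5]
print(fraction_below(example))
```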

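2. Pruning

The pruning code is in prune.py. The following section computes the maximum pruning ratio and derives the threshold that corresponds to the pruning ratio we pass in: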

```python
# =========================================== prune model ====================================#
# Excerpt from prune.py; model, opt, Bottleneck and gather_bn_weights come from the script.
model_list = {}
ignore_bn_list = []

for i, layer in model.named_modules():
    if isinstance(layer, Bottleneck):
        if layer.add:
            ignore_bn_list.append(i.rsplit(".", 2)[0] + ".cv1.bn")
            ignore_bn_list.append(i + '.cv1.bn')
            ignore_bn_list.append(i + '.cv2.bn')
    if isinstance(layer, torch.nn.BatchNorm2d):
        if i not in ignore_bn_list:
            model_list[i] = layer

# Second pass: drop BN layers that were collected before their Bottleneck was visited
model_list = {k: v for k, v in model_list.items() if k not in ignore_bn_list}
prune_conv_list = [layer.replace("bn", "conv") for layer in model_list.keys()]
bn_weights = gather_bn_weights(model_list)
sorted_bn = torch.sort(bn_weights)[0]

# Highest threshold that avoids pruning every channel of some layer
# (the minimum over all BN layers of each layer's maximum gamma is the upper bound)
highest_thre = []
for bnlayer in model_list.values():
    highest_thre.append(bnlayer.weight.data.abs().max().item())
highest_thre = min(highest_thre)

# Position of highest_thre in sorted_bn, expressed as a fraction of all gamma values
percent_limit = (sorted_bn == highest_thre).nonzero()[0, 0].item() / len(bn_weights)

print(f'Suggested Gamma threshold should be less than {highest_thre:.4f}.')
print(f'The corresponding prune ratio is {percent_limit:.3f}.')
# assert opt.percent < percent_limit, f"Prune ratio should less than {percent_limit}, otherwise it may cause error!!!"

# model_copy = deepcopy(model)
thre_index = int(len(sorted_bn) * opt.percent)
thre = sorted_bn[thre_index]
print(f'Gamma value that less than {thre:.4f} are set to zero!')
print("=" * 94)
print(f"|\t{'layer name':<25}{'|':<10}{'origin channels':<20}{'|':<10}{'remaining channels':<20}|")
remain_num = 0
modelstate = model.state_dict()
```

As before, the first step is to find the BN layers that qualify. Note that the second if temporarily lets the BN layer of the Conv above a Bottleneck into the pruning list by mistake (it is visited before the Bottleneck that would exclude it), so the model_list line has to filter it out again. model_list then holds every qualifying BN layer, and replacing bn with conv in each name gives every convolution that can be pruned. The gather_bn_weights function collects all gamma values of those BN layers into bn_weights.

```python
import torch


def gather_bn_weights(module_list):
    """Concatenate |gamma| of every BN layer in module_list into one 1-D tensor."""
    size_list = [idx.weight.data.shape[0] for idx in module_list.values()]
    bn_weights = torch.zeros(sum(size_list))
    index = 0
    for i, idx in enumerate(module_list.values()):
        size = size_list[i]
        bn_weights[index:(index + size)] = idx.weight.data.abs().clone()
        index += size
    return bn_weights
```
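The reason model_list needs a second filtering pass is the traversal order: the parent Conv's BN layer is visited before the Bottleneck that adds it to ignore_bn_list. The sketch below replays that order on hand-written names for the first C3 block. The `"bn"` and `"bottleneck"` tags stand in for the isinstance checks, and the list order is my assumption based on the order in which the layers appear in the channel table printed by prune.py.

```python
# (name, kind) pairs in the assumed visiting order for the first C3 block.
modules = [
    ("model.2.cv1.bn", "bn"),
    ("model.2.cv2.bn", "bn"),
    ("model.2.cv3.bn", "bn"),
    ("model.2.m.0", "bottleneck"),  # shortcut Bottleneck (add is True)
    ("model.2.m.0.cv1.bn", "bn"),
    ("model.2.m.0.cv2.bn", "bn"),
]

ignore, first_pass = [], []
for name, kind in modules:
    if kind == "bottleneck":
        ignore.append(name.rsplit(".", 2)[0] + ".cv1.bn")
        ignore.append(name + ".cv1.bn")
        ignore.append(name + ".cv2.bn")
    elif name not in ignore:
        first_pass.append(name)

# Second pass, mirroring the dict comprehension on model_list.
second_pass = [name for name in first_pass if name not in ignore]
print(first_pass)
print(second_pass)
```

After the first pass model.2.cv1.bn is still in the candidate list; only the second pass removes it. By the same logic, the training snippet shown earlier has no second pass, so it appears to apply the L1 term to model.2.cv1.bn as well; I have not checked this against the repository.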

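Next, bn_weights is sorted in ascending order into sorted_bn. We take the largest gamma value in each BN layer, and the smallest of these per-layer maxima becomes the upper bound for the pruning threshold. Looking up the index of that bound in sorted_bn and dividing by the total number of gamma values across all BN layers gives the maximum pruning ratio. The opt.percent we pass in must not exceed this ratio; otherwise some BN layer would lose all of its channels, which trips the assertion on remaining channels later in the script. opt.percent * len(sorted_bn) gives the index of the threshold for our chosen pruning ratio, and the value at that index in sorted_bn is named thre.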
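The same three quantities can be worked through on a toy example. This is a plain-Python sketch of the steps just described, not the repository code; the function name `prune_thresholds` and the gamma values are made up.

```python
def prune_thresholds(gammas_per_layer, percent):
    """Return (highest_thre, percent_limit, thre) following the steps in prune.py."""
    all_gammas = sorted(abs(g) for layer in gammas_per_layer for g in layer)
    # Largest |gamma| of each layer; the smallest of those is the upper bound,
    # because a threshold above it would empty at least one layer.
    highest_thre = min(max(abs(g) for g in layer) for layer in gammas_per_layer)
    percent_limit = all_gammas.index(highest_thre) / len(all_gammas)
    thre = all_gammas[int(len(all_gammas) * percent)]
    return highest_thre, percent_limit, thre


layers = [[0.9, 0.01, 0.5], [0.02, 0.3, 0.001], [0.7, 0.05, 0.6, 0.002]]
highest, limit, thre = prune_thresholds(layers, percent=0.4)
print(highest, limit, thre)
```

Here the second layer has the smallest maximum (0.3), which sits at index 5 of the 10 sorted values, so the limit is 0.5. Asking for 40% picks the value at index 4 as the threshold.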

The next section rebuilds the model yaml, replacing the original C3 and SPPF with C3Pruned and SPPFPruned.

```python
# ============================== save pruned model config yaml =================================#
pruned_yaml = {}
nc = model.model[-1].nc
with open(cfg, encoding='ascii', errors='ignore') as f:
    model_yamls = yaml.safe_load(f)  # model dict

# Define model
pruned_yaml["nc"] = model.model[-1].nc
pruned_yaml["depth_multiple"] = model_yamls["depth_multiple"]
pruned_yaml["width_multiple"] = model_yamls["width_multiple"]
pruned_yaml["anchors"] = model_yamls["anchors"]
anchors = model_yamls["anchors"]
pruned_yaml["backbone"] = [
    [-1, 1, Conv, [64, 6, 2, 2]],  # 0-P1/2
    [-1, 1, Conv, [128, 3, 2]],  # 1-P2/4
    [-1, 3, C3Pruned, [128]],
    [-1, 1, Conv, [256, 3, 2]],  # 3-P3/8
    [-1, 6, C3Pruned, [256]],
    [-1, 1, Conv, [512, 3, 2]],  # 5-P4/16
    [-1, 9, C3Pruned, [512]],
    [-1, 1, Conv, [1024, 3, 2]],  # 7-P5/32
    [-1, 3, C3Pruned, [1024]],
    [-1, 1, SPPFPruned, [1024, 5]],  # 9
]
pruned_yaml["head"] = [
    [-1, 1, Conv, [512, 1, 1]],
    [-1, 1, nn.Upsample, [None, 2, 'nearest']],
    [[-1, 6], 1, Concat, [1]],  # cat backbone P4
    [-1, 3, C3Pruned, [512, False]],  # 13

    [-1, 1, Conv, [256, 1, 1]],
    [-1, 1, nn.Upsample, [None, 2, 'nearest']],
    [[-1, 4], 1, Concat, [1]],  # cat backbone P3
    [-1, 3, C3Pruned, [256, False]],  # 17 (P3/8-small)

    [-1, 1, Conv, [256, 3, 2]],
    [[-1, 14], 1, Concat, [1]],  # cat head P4
    [-1, 3, C3Pruned, [512, False]],  # 20 (P4/16-medium)

    [-1, 1, Conv, [512, 3, 2]],
    [[-1, 10], 1, Concat, [1]],  # cat head P5
    [-1, 3, C3Pruned, [1024, False]],  # 23 (P5/32-large)

    [[17, 20, 23], 1, Detect, [nc, anchors]],  # Detect(P3, P4, P5)
]
# ============================================================================== #
```

Comparing the two, C3Pruned takes some extra parameters relative to the original C3: cv1out, cv2out, and bottle_args. These are the output channel counts of cv1 and cv2 in the C3 module, and the input, intermediate, and output channel counts of each Bottleneck. SPPFPruned adds cv1out, the output channel count of cv1 in the SPPF module.

```python
import warnings

import torch
import torch.nn as nn

# Conv and BottleneckPruned are defined elsewhere in the repository.


class C3Pruned(nn.Module):
    # CSP Bottleneck with 3 convolutions
    def __init__(self, cv1in, cv1out, cv2out, cv3out, bottle_args, n=1, shortcut=True, g=1):
        super(C3Pruned, self).__init__()
        cv3in = bottle_args[-1][-1]  # output channels of the last Bottleneck
        self.cv1 = Conv(cv1in, cv1out, 1, 1)
        self.cv2 = Conv(cv1in, cv2out, 1, 1)
        self.cv3 = Conv(cv3in + cv2out, cv3out, 1)  # input is the concat of both branches
        self.m = nn.Sequential(*[BottleneckPruned(*bottle_args[k], shortcut, g) for k in range(n)])

    def forward(self, x):
        return self.cv3(torch.cat((self.m(self.cv1(x)), self.cv2(x)), dim=1))


class SPPFPruned(nn.Module):
    # Spatial pyramid pooling layer used in YOLOv3-SPP
    def __init__(self, cv1in, cv1out, cv2out, k=5):
        super(SPPFPruned, self).__init__()
        self.cv1 = Conv(cv1in, cv1out, 1, 1)
        self.cv2 = Conv(cv1out * 4, cv2out, 1, 1)
        self.m = nn.MaxPool2d(kernel_size=k, stride=1, padding=k // 2)

    def forward(self, x):
        x = self.cv1(x)
        with warnings.catch_warnings():
            warnings.simplefilter('ignore')  # suppress torch 1.9.0 max_pool2d() warning
            y1 = self.m(x)
            y2 = self.m(y1)
            return self.cv2(torch.cat([x, y1, y2, self.m(y2)], 1))
```

Back in prune.py, the following section iterates over the BN layers in the model and uses obtain_bn_mask to create a pruning mask for the gamma values of each layer: 1 where gamma is greater than the thre computed earlier, 0 otherwise. The masks are stored in the dictionary maskbndict. Multiplying gamma and beta by this mask completes the pruning step (any gamma below thre and its corresponding beta are set to 0, and those above the threshold are kept).
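Before the repository code, here is the masking rule on plain Python lists. It is a sketch, not obtain_bn_mask itself, whose body is not shown in this post. I keep values equal to the threshold, which matches the printed message "Gamma value that less than ... are set to zero"; the exact comparison in the repository is an assumption on my part. The names `bn_mask` and `apply_mask` and the sample values are made up, and 0.0007 is the threshold printed for my model later in this post.

```python
def bn_mask(gammas, thre):
    """1.0 where |gamma| >= thre (channel kept), 0.0 otherwise (channel pruned)."""
    return [1.0 if abs(g) >= thre else 0.0 for g in gammas]


def apply_mask(values, mask):
    """Element-wise product, the list equivalent of tensor.mul_(mask)."""
    return [v * m for v, m in zip(values, mask)]


gamma = [0.9, 0.0004, -0.3, 0.0001]
beta = [0.1, 0.2, -0.05, 0.4]
mask = bn_mask(gamma, thre=0.0007)
print(mask, apply_mask(gamma, mask), apply_mask(beta, mask))
print("remaining channels:", int(sum(mask)))
```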

```python
maskbndict = {}

for bnname, bnlayer in model.named_modules():
    if isinstance(bnlayer, nn.BatchNorm2d):
        bn_module = bnlayer
        mask = obtain_bn_mask(bn_module, thre)  # pruning mask for this layer
        if bnname in ignore_bn_list:
            mask = torch.ones(bnlayer.weight.data.size()).cuda()  # keep every channel
        maskbndict[bnname] = mask
        remain_num += int(mask.sum())
        bn_module.weight.data.mul_(mask)
        bn_module.bias.data.mul_(mask)
        print(f"|\t{bnname:<25}{'|':<10}{bn_module.weight.data.size()[0]:<20}{'|':<10}{int(mask.sum()):<20}|")
        assert int(mask.sum()) > 0, "Number of remaining channels must greater than 0! please set lower prune percent."
print("=" * 94)
```

Next the ModelPruned class is built. Inside parse_pruned_model the network is assembled according to the modified yaml. Compared with the original parse_model in YOLOv5, it returns one extra item, the self.from_to_map dictionary, which records how all BN layers in the network are connected.

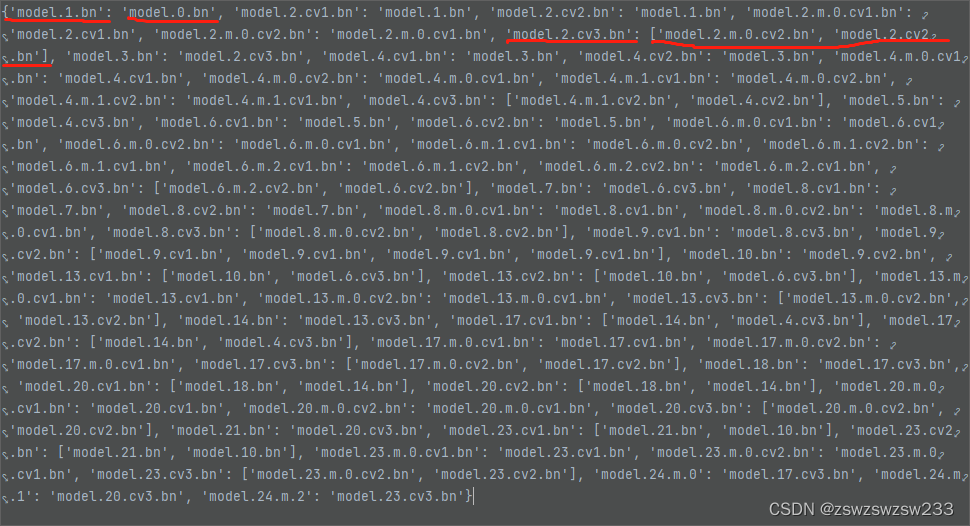

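```python
self.model, self.save, self.from_to_map = parse_pruned_model(self.maskbndict, deepcopy(self.yaml), ch=[ch])  # model, savelist
```

The contents of the from_to_map dictionary are shown in the figure. Take the two connections underlined in red as examples: model.0.bn is the first BN layer in the network, and the BN layer after it is model.1.bn. For model.2.cv3.bn in the first C3, there is a concat above it, so its two preceding BN layers are model.2.m.0.cv2.bn and model.2.cv2.bn.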

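The long block of code below walks through the model before and after pruning in parallel, copies over the gamma and beta values of the BN channels that remain after pruning, and slices the convolution weights to match. The core is using np.argwhere to find the positions of the 1s in a mask and turn them into index values, which then locate the retained gamma and beta entries. The output-side indices are out_idx and the input-side indices are in_idx. Note the two cases if isinstance(former, str) and if isinstance(former, list), which correspond to the two from_to_map examples above: when a BN layer has only one BN layer before it, former is a string (str), and when it has several, former is a list. When the loop reaches model.24, the final Detect layer, only in_idx is needed.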
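The index bookkeeping for the two cases can be sketched without tensors. In the list case the inputs come from a concat, so the kept indices of each later branch are shifted by the original channel counts of the branches before it. The helper names, the toy masks, and the layer names `a.bn` to `d.bn` below are hypothetical.

```python
def kept_indices(mask):
    """Positions where the mask is 1 (always a list, even for a single channel)."""
    return [i for i, m in enumerate(mask) if m == 1]


def input_indices(former, masks):
    """Input-channel indices a conv must keep, given its predecessor BN layer(s)."""
    if isinstance(former, str):
        return kept_indices(masks[former])
    indices, offset = [], 0
    for name in former:  # concat case: shift each branch by the channels before it
        indices.extend(i + offset for i in kept_indices(masks[name]))
        offset += len(masks[name])
    return indices


masks = {"a.bn": [1, 0, 1], "b.bn": [0, 1, 1, 0]}
from_to_map = {"c.bn": "a.bn", "d.bn": ["a.bn", "b.bn"]}
single = input_indices(from_to_map["c.bn"], masks)
concat = input_indices(from_to_map["d.bn"], masks)
print(single, concat)
```

Returning a list from `kept_indices` sidesteps the single-channel case that the repository code handles with the `len(w.shape) == 3` check after `np.squeeze`.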

Finally, both the original and the pruned models are saved.

```python
# ======================================================================================= #
# Excerpt from prune.py; model, pruned_model, from_to_map, maskbndict, modelstate and
# pruned_model_state are defined earlier in the script (not all shown here).
changed_state = []
for ((layername, layer), (pruned_layername, pruned_layer)) in zip(model.named_modules(), pruned_model.named_modules()):
    assert layername == pruned_layername
    if isinstance(layer, nn.Conv2d) and not layername.startswith("model.24"):
        convname = layername[:-4] + "bn"
        if convname in from_to_map.keys():
            former = from_to_map[convname]
            if isinstance(former, str):  # exactly one preceding BN layer
                out_idx = np.squeeze(np.argwhere(np.asarray(maskbndict[layername[:-4] + "bn"].cpu().numpy())))
                in_idx = np.squeeze(np.argwhere(np.asarray(maskbndict[former].cpu().numpy())))
                w = layer.weight.data[:, in_idx, :, :].clone()

                if len(w.shape) == 3:  # remain only 1 channel.
                    w = w.unsqueeze(1)
                w = w[out_idx, :, :, :].clone()

                pruned_layer.weight.data = w.clone()
                changed_state.append(layername + ".weight")
            if isinstance(former, list):  # several preceding BN layers joined by a concat
                orignin = [modelstate[i + ".weight"].shape[0] for i in former]
                formerin = []
                for it in range(len(former)):
                    name = former[it]
                    tmp = [i for i in range(maskbndict[name].shape[0]) if maskbndict[name][i] == 1]
                    if it > 0:
                        tmp = [k + sum(orignin[:it]) for k in tmp]
                    formerin.extend(tmp)
                out_idx = np.squeeze(np.argwhere(np.asarray(maskbndict[layername[:-4] + "bn"].cpu().numpy())))
                w = layer.weight.data[out_idx, :, :, :].clone()
                pruned_layer.weight.data = w[:, formerin, :, :].clone()
                changed_state.append(layername + ".weight")
        else:  # no preceding BN layer: only the output channels are sliced
            out_idx = np.squeeze(np.argwhere(np.asarray(maskbndict[layername[:-4] + "bn"].cpu().numpy())))
            w = layer.weight.data[out_idx, :, :, :].clone()
            assert len(w.shape) == 4
            pruned_layer.weight.data = w.clone()
            changed_state.append(layername + ".weight")

    if isinstance(layer, nn.BatchNorm2d):
        out_idx = np.squeeze(np.argwhere(np.asarray(maskbndict[layername].cpu().numpy())))
        pruned_layer.weight.data = layer.weight.data[out_idx].clone()
        pruned_layer.bias.data = layer.bias.data[out_idx].clone()
        pruned_layer.running_mean = layer.running_mean[out_idx].clone()
        pruned_layer.running_var = layer.running_var[out_idx].clone()
        changed_state.append(layername + ".weight")
        changed_state.append(layername + ".bias")
        changed_state.append(layername + ".running_mean")
        changed_state.append(layername + ".running_var")
        changed_state.append(layername + ".num_batches_tracked")

    if isinstance(layer, nn.Conv2d) and layername.startswith("model.24"):  # Detect layer
        former = from_to_map[layername]
        in_idx = np.squeeze(np.argwhere(np.asarray(maskbndict[former].cpu().numpy())))
        pruned_layer.weight.data = layer.weight.data[:, in_idx, :, :]
        pruned_layer.bias.data = layer.bias.data
        changed_state.append(layername + ".weight")
        changed_state.append(layername + ".bias")

missing = [i for i in pruned_model_state.keys() if i not in changed_state]

pruned_model.eval()
pruned_model.names = model.names
# =============================================================================================== #
torch.save({"model": model}, "original_model.pt")
model = pruned_model
torch.save({"model": model}, "pruned_model.pt")
model.cuda().eval()
```

For my model after sparsity training, running prune.py gives the following suggested pruning ratio and threshold:

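```text
Suggested Gamma threshold should be less than 0.0208.
The corresponding prune ratio is 0.831.
Gamma value that less than 0.0007 are set to zero!
```

The reported maximum pruning ratio is 0.831, so opt.percent can be set to 0.8. Printing the channel counts before and after pruning shows that the deeper layers are pruned more heavily.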

```text
==============================================================================================
| layer name           | origin channels | remaining channels |
| model.0.bn           | 32              | 30                 |
| model.1.bn           | 64              | 64                 |
| model.2.cv1.bn       | 32              | 32                 |
| model.2.cv2.bn       | 32              | 17                 |
| model.2.cv3.bn       | 64              | 55                 |
| model.2.m.0.cv1.bn   | 32              | 32                 |
| model.2.m.0.cv2.bn   | 32              | 32                 |
| model.3.bn           | 128             | 86                 |
| model.4.cv1.bn       | 64              | 64                 |
| model.4.cv2.bn       | 64              | 3                  |
| model.4.cv3.bn       | 128             | 67                 |
| model.4.m.0.cv1.bn   | 64              | 64                 |
| model.4.m.0.cv2.bn   | 64              | 64                 |
| model.4.m.1.cv1.bn   | 64              | 64                 |
| model.4.m.1.cv2.bn   | 64              | 64                 |
| model.5.bn           | 256             | 34                 |
| model.6.cv1.bn       | 128             | 128                |
| model.6.cv2.bn       | 128             | 1                  |
| model.6.cv3.bn       | 256             | 39                 |
| model.6.m.0.cv1.bn   | 128             | 128                |
| model.6.m.0.cv2.bn   | 128             | 128                |
| model.6.m.1.cv1.bn   | 128             | 128                |
| model.6.m.1.cv2.bn   | 128             | 128                |
| model.6.m.2.cv1.bn   | 128             | 128                |
| model.6.m.2.cv2.bn   | 128             | 128                |
| model.7.bn           | 512             | 10                 |
| model.8.cv1.bn       | 256             | 256                |
| model.8.cv2.bn       | 256             | 1                  |
| model.8.cv3.bn       | 512             | 13                 |
| model.8.m.0.cv1.bn   | 256             | 256                |
| model.8.m.0.cv2.bn   | 256             | 256                |
| model.9.cv1.bn       | 256             | 8                  |
| model.9.cv2.bn       | 512             | 7                  |
| model.10.bn          | 256             | 6                  |
| model.13.cv1.bn      | 128             | 3                  |
| model.13.cv2.bn      | 128             | 8                  |
| model.13.cv3.bn      | 256             | 11                 |
| model.13.m.0.cv1.bn  | 128             | 3                  |
| model.13.m.0.cv2.bn  | 128             | 5                  |
| model.14.bn          | 128             | 14                 |
| model.17.cv1.bn      | 64              | 28                 |
| model.17.cv2.bn      | 64              | 11                 |
| model.17.cv3.bn      | 128             | 113                |
| model.17.m.0.cv1.bn  | 64              | 29                 |
| model.17.m.0.cv2.bn  | 64              | 54                 |
| model.18.bn          | 128             | 43                 |
| model.20.cv1.bn      | 128             | 25                 |
| model.20.cv2.bn      | 128             | 25                 |
| model.20.cv3.bn      | 256             | 165                |
| model.20.m.0.cv1.bn  | 128             | 25                 |
| model.20.m.0.cv2.bn  | 128             | 57                 |
| model.21.bn          | 256             | 44                 |
| model.23.cv1.bn      | 256             | 12                 |
| model.23.cv2.bn      | 256             | 17                 |
| model.23.cv3.bn      | 512             | 266                |
| model.23.m.0.cv1.bn  | 256             | 40                 |
| model.23.m.0.cv2.bn  | 256             | 46                 |
==============================================================================================
```

The network structure and per-layer parameter counts after pruning are as follows:

```text
     from          n  params  module                                  arguments
 0   -1            1    3300  models.common.Conv                      [3, 30, 6, 2, 2]
 1   -1            1   17408  models.common.Conv                      [30, 64, 3, 2]
 2   -1            1   16407  models.pruned_common.C3Pruned           [64, 32, 17, 55, [[32, 32, 32]], 1, 128]
 3   -1            1   42742  models.common.Conv                      [55, 86, 3, 2]
 4   -1            2   92951  models.pruned_common.C3Pruned           [86, 64, 3, 67, [[64, 64, 64], [64, 64, 64]], 2, 256]
 5   -1            1   20570  models.common.Conv                      [67, 34, 3, 2]
 6   -1            3  502809  models.pruned_common.C3Pruned           [34, 128, 1, 39, [[128, 128, 128], [128, 128, 128], [128, 128, 128]], 3, 512]
 7   -1            1    3530  models.common.Conv                      [39, 10, 3, 2]
 8   -1            1  662835  models.pruned_common.C3Pruned           [10, 256, 1, 13, [[256, 256, 256]], 1, 1024]
 9   -1            1     358  models.pruned_common.SPPFPruned         [13, 8, 7, 5]
10   -1            1      54  models.common.Conv                      [7, 6, 1, 1]
11   -1            1       0  torch.nn.modules.upsampling.Upsample    [None, 2, 'nearest']
12   [-1, 6]       1       0  models.common.Concat                    [1]
13   -1            1     842  models.pruned_common.C3Pruned           [45, 3, 8, 11, [[3, 3, 5]], 1, False]
14   -1            1     182  models.common.Conv                      [11, 14, 1, 1]
15   -1            1       0  torch.nn.modules.upsampling.Upsample    [None, 2, 'nearest']
16   [-1, 4]       1       0  models.common.Concat                    [1]
17   -1            1   25880  models.pruned_common.C3Pruned           [81, 28, 11, 113, [[28, 29, 54]], 1, False]
18   -1            1   43817  models.common.Conv                      [113, 43, 3, 2]
19   [-1, 14]      1       0  models.common.Concat                    [1]
20   -1            1   30424  models.pruned_common.C3Pruned           [57, 25, 25, 165, [[25, 25, 57]], 1, False]
21   -1            1   65428  models.common.Conv                      [165, 44, 3, 2]
22   [-1, 10]      1       0  models.common.Concat                    [1]
23   -1            1   36010  models.pruned_common.C3Pruned           [50, 12, 17, 266, [[12, 40, 46]], 1, False]
24   [17, 20, 23]  1   14769  models.yolo.Detect                      [4, [[10, 13, 16, 30, 33, 23], [30, 61, 62, 45, 59, 119], [116, 90, 156, 198, 373, 326]], [113, 165, 266]]
```

The pruned model, pruned_model_0.8.pt, is 6.25 MB.
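The parameter counts in the summary can be reproduced by hand, which is a useful check on how the C3Pruned and SPPFPruned arguments are interpreted. The sketch below assumes each Conv block is a bias-free convolution followed by BatchNorm (gamma and beta), and that each Bottleneck is a 1x1 Conv followed by a 3x3 Conv; the function names are mine.

```python
def conv_params(c_in, c_out, k=1):
    """Parameters of one Conv block: bias-free k x k convolution plus BN gamma and beta."""
    return c_in * c_out * k * k + 2 * c_out


def c3_pruned_params(cv1in, cv1out, cv2out, cv3out, bottle_args):
    total = conv_params(cv1in, cv1out) + conv_params(cv1in, cv2out)
    for b_in, b_mid, b_out in bottle_args:
        total += conv_params(b_in, b_mid, 1) + conv_params(b_mid, b_out, 3)
    cv3in = bottle_args[-1][-1] + cv2out  # concat of the Bottleneck branch and cv2
    return total + conv_params(cv3in, cv3out)


def sppf_pruned_params(cv1in, cv1out, cv2out):
    return conv_params(cv1in, cv1out) + conv_params(cv1out * 4, cv2out)


print(conv_params(3, 30, 6))                              # layer 0  -> 3300
print(c3_pruned_params(64, 32, 17, 55, [[32, 32, 32]]))   # layer 2  -> 16407
print(sppf_pruned_params(13, 8, 7))                       # layer 9  -> 358
print(c3_pruned_params(45, 3, 8, 11, [[3, 3, 5]]))        # layer 13 -> 842
```

The results match the summary. The same arithmetic shows where the remaining weight sits: layers 6 and 8 account for 1,165,644 of the 1,580,316 parameters listed, almost all of it in the shortcut Bottlenecks that are excluded from pruning.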

3. Fine-tuning

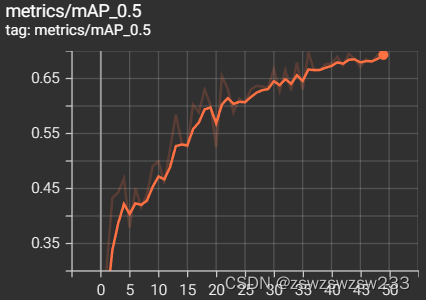

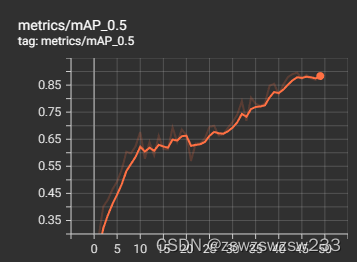

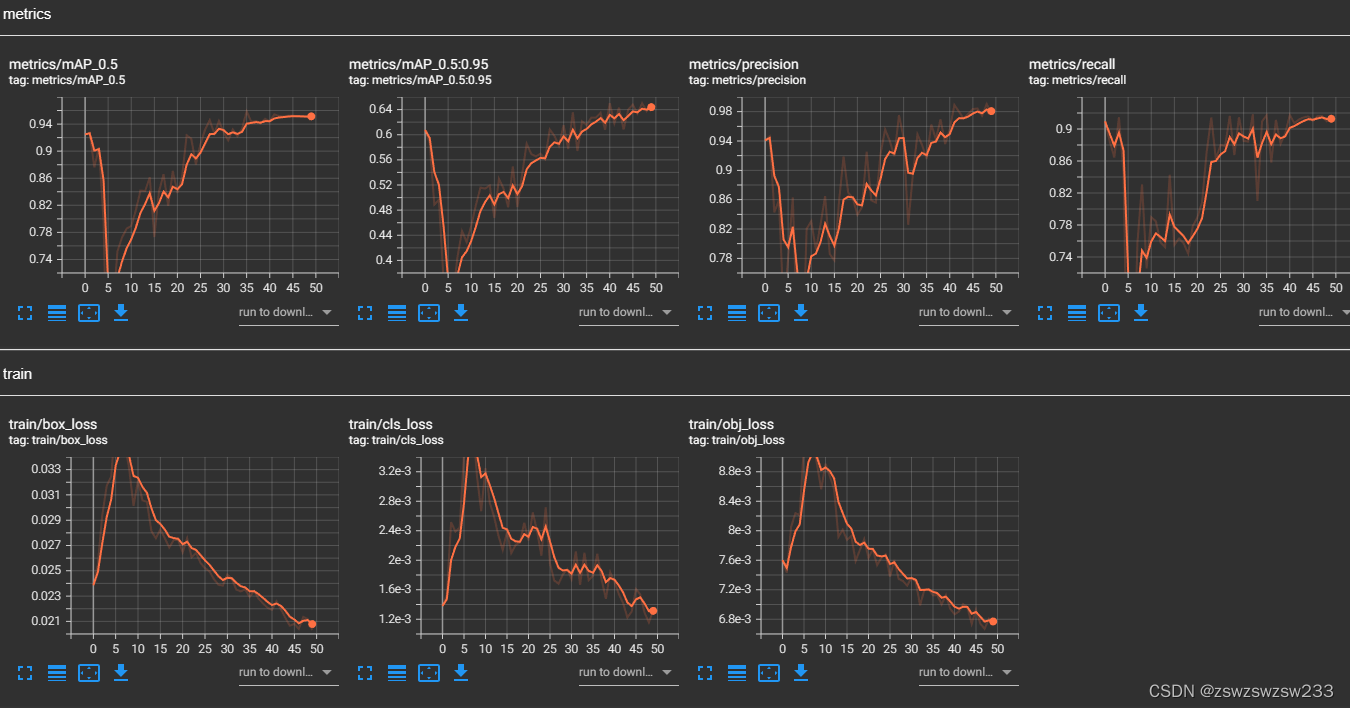

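The pruned model pruned_model_0.8.pt is trained on the dataset for another 50 epochs as fine-tuning. The metrics during fine-tuning are shown below. mAP drops sharply at the start and recovers after about 5 epochs. The drop is probably caused by the change in network structure; since pruning removed the redundant structure and the retained weights are sufficient for inference, mAP climbs back quickly. This suggests that the pruning was effective.

The final results on the validation set are shown below. They are slightly better than the baseline, with mAP_0.5 reaching 0.951.

The resulting last.pt is 3.37 MB, only 24.6% of the baseline.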

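The inference speed of the final model, however, seems to be slower than the baseline. From what I could find, possible reasons are:

1. The channel counts are no longer powers of two (2^n), which is unfavorable for parallel acceleration.

2. Most of what was pruned lies in the deeper layers. These hold many parameters but operate on small feature maps, so removing them reduces FLOPs much less than it reduces the parameter count.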
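The second point can be made concrete by comparing one shallow and one deep convolution of the unpruned network. The sketch assumes a 640x640 input (not stated in this post) and uses the unpruned channel counts from the channel table above; `conv_cost` is a name I made up.

```python
def conv_cost(c_in, c_out, k, h_out, w_out):
    """Return (weights, multiply-accumulates) for one bias-free k x k convolution."""
    weights = c_in * c_out * k * k
    macs = weights * h_out * w_out  # every weight is used once per output position
    return weights, macs


shallow = conv_cost(32, 64, 3, 160, 160)   # model.1, output stride 4
deep = conv_cost(256, 512, 3, 20, 20)      # model.7, output stride 32
print(shallow, deep)
```

Under these assumptions model.7 holds 64 times as many weights as model.1 but costs the same number of multiply-accumulates, so pruning it heavily shrinks the file far more than it shrinks the compute.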

References:

1.https://blog.csdn.net/qq_42835363/article/details/129125376?spm=1001.2014.3001.5506

2.https://blog.csdn.net/litt1e/article/details/125818244?spm=1001.2014.3001.5506

3.https://blog.csdn.net/m0_46093829/article/details/128157589?spm=1001.2014.3001.5506

4.https://blog.csdn.net/m0_37264397/article/details/126292621?spm=1001.2014.3001.5506
