Building a k8s-1.20.13 cluster with kubeadm


I. Preparing the installation environment

1. Machine list (set the hostnames)

| Hostname | IP | OS | Role | Installed software |
|---|---|---|---|---|
| k8s-master | 172.19.58.188 | CentOS 7.9 | Master (control-plane) node | docker, kube-apiserver, kube-scheduler, kube-controller-manager, kubelet, etcd, kube-proxy, calico |
| k8s-node1 | 172.19.58.189 | CentOS 7.9 | Worker node | docker, kubelet, kube-proxy, calico |
| k8s-node2 | 172.19.58.190 | CentOS 7.9 | Worker node | docker, kubelet, kube-proxy, calico |
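The heading asks for hostnames, and 2.2 later mentions hosts resolution, but neither step is shown. Here is a minimal sketch that assumes the nodes resolve each other through /etc/hosts, using the names and IPs from the table. The helper name `add_cluster_hosts` is hypothetical.

```shell
# Hypothetical helper: append the name/IP pairs from the table above to a
# hosts file (default /etc/hosts). Names that are already present are skipped,
# so running it twice does not create duplicates.
add_cluster_hosts() {
  local hosts_file="${1:-/etc/hosts}"
  local entry name
  for entry in "172.19.58.188 k8s-master" \
               "172.19.58.189 k8s-node1" \
               "172.19.58.190 k8s-node2"; do
    name="${entry#* }"   # drop the IP, keep the hostname
    # -w: whole-word match, so k8s-node1 does not match k8s-node10
    if ! grep -qw -- "$name" "$hosts_file" 2>/dev/null; then
      echo "$entry" >> "$hosts_file"
    fi
  done
}
```

On each machine, first set that machine's own name, for example with `hostnamectl set-hostname k8s-master` (or `k8s-node1`, `k8s-node2`). Then run `add_cluster_hosts` as root.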

2. Environment initialization (run on all machines)

2.1 Disable the firewall and SELinux, raise the open-file (handle) limit

```shell
[root@k8s-master k8s]# echo "* soft nofile 65536" >> /etc/security/limits.conf
[root@k8s-master k8s]# echo "* hard nofile 65536" >> /etc/security/limits.conf
[root@k8s-master k8s]# systemctl stop firewalld && systemctl disable firewalld
```
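The heading also mentions SELinux, but the commands above only handle the firewall. A common approach, sketched here as an assumption, has two parts. First, switch to permissive mode immediately with `setenforce 0`. Second, make the change permanent in /etc/selinux/config. The helper name is hypothetical.

```shell
# Hypothetical helper: make the SELinux change permanent by rewriting the
# SELINUX= line (SELINUXTYPE= is left alone). Takes effect after a reboot.
disable_selinux_config() {
  local cfg="${1:-/etc/selinux/config}"
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$cfg"
}
```

Run `setenforce 0` and then `disable_selinux_config` on every machine. The reboot recommended at the end of 2.2 then applies the config-file change.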

2.2 Disable swap

```shell
[root@k8s-master log]# swapoff -a
[root@k8s-master log]# sed -i '/swap/s/^/#/' /etc/fstab
```

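By default, kubelet refuses to run on a host that has swap enabled. When planning new machines, consider not creating a swap partition when the OS is installed. On hosts that already have swap, you can tell kubelet to ignore the swap restriction; otherwise kubelet will not start. In most cases simply disabling swap, as above, is enough, and this extra step is not needed.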
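For the alternative the paragraph mentions (keeping swap), here is a sketch. It assumes the kubelet RPM's environment file /etc/sysconfig/kubelet, whose `KUBELET_EXTRA_ARGS` the kubeadm systemd drop-in passes to kubelet. kubeadm's own preflight check still has to be skipped with `--ignore-preflight-errors=Swap`, as in the init command later. The helper name is hypothetical.

```shell
# Hypothetical helper, only for hosts that keep swap: set kubelet's
# --fail-swap-on=false in the RPM's environment file. Note that this
# replaces whatever KUBELET_EXTRA_ARGS already contains.
allow_kubelet_swap() {
  local env_file="${1:-/etc/sysconfig/kubelet}"
  local line='KUBELET_EXTRA_ARGS="--fail-swap-on=false"'
  if grep -q '^KUBELET_EXTRA_ARGS=' "$env_file" 2>/dev/null; then
    sed -i "s|^KUBELET_EXTRA_ARGS=.*|${line}|" "$env_file"
  else
    echo "$line" >> "$env_file"
  fi
}
```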

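After changing the hostname, open-file limits, swap and hosts resolution, reboot the servers. If possible, also set up SSH trust (passwordless login) from the master to the other nodes; this makes later operations easier.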

2.3 Add the yum repositories

docker-ce repository

```shell
wget -O /etc/yum.repos.d/docker-ce.repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
```

Kubernetes repository

```shell
[root@k8s-master ~]# vim /etc/yum.repos.d/kubernetes.repo
```

```ini
[kubernetes]
name=Kubernetes Repo
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
enabled=1
```

2.4 Install Docker and kubeadm

By default the latest version is installed. You can also pin versions explicitly, e.g. kubelet-1.20.13:

```shell
yum install docker-ce-cli-19.03.15 docker-ce-19.03.15 kubelet-1.20.13 kubeadm-1.20.13 kubectl-1.20.13 -y
```

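If you need custom settings, you can edit the corresponding configuration files once the base services are in place. The control-plane components' static Pod manifests live in /etc/kubernetes/manifests; the files there are generated by kubeadm init.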

2.5 Change Docker's cgroup driver

```shell
[root@k8s-master log]# vim /etc/docker/daemon.json
```

```json
{
  "exec-opts": ["native.cgroupdriver=systemd"]
}
```
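Before Docker has started for the first time, /etc/docker may not exist yet, and editing with vim also needs that directory. Here is a non-interactive sketch with a hypothetical helper name. It overwrites any existing daemon.json.

```shell
# Hypothetical helper: write daemon.json with the systemd cgroup driver,
# creating the directory first if the docker-ce package has not created it.
# Overwrites any existing daemon.json.
write_docker_daemon_json() {
  local dir="${1:-/etc/docker}"
  mkdir -p "$dir"
  cat > "$dir/daemon.json" <<'EOF'
{
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF
}
```

If Docker is already running when the file is written, apply it with `systemctl daemon-reload && systemctl restart docker`. After that, `docker info --format '{{.CgroupDriver}}'` should print `systemd`. If this step has not taken effect, kubeadm init prints the `IsDockerSystemdCheck` warning during preflight.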

2.6 Start Docker and kubelet

```shell
systemctl start docker && systemctl enable docker
systemctl start kubelet && systemctl enable kubelet
```

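Note: kubelet cannot run properly at this point. It keeps restarting, and the errors are visible in /var/log/messages. It runs normally once kubeadm has set up the node: after kubeadm init on the master, and after kubeadm join on the workers.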
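The systemd journal is an alternative to /var/log/messages for these errors. Below is a small sketch with a hypothetical helper name. The usual cause before init or join is the missing /var/lib/kubelet/config.yaml, which the `[kubelet-start]` phase creates.

```shell
# Hypothetical helper: show kubelet's unit status and its most recent log lines.
show_kubelet_errors() {
  local lines="${1:-30}"
  systemctl status kubelet --no-pager
  journalctl -u kubelet --no-pager -n "$lines"
}
```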

2.7 Pull the required images

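```shell
[root@k8s-master ~]# vim k8s-image-download.sh
```

```shell
#!/bin/bash
# Download the Kubernetes 1.20.13 images from the Aliyun mirror.
# Image list comes from: kubeadm config images list --kubernetes-version=v1.20.13
# (alternative mirror mapping: gcr.azk8s.cn/google-containers == k8s.gcr.io)

if [ $# -ne 1 ]; then
    echo "USAGE: bash $(basename "$0") KUBERNETES-VERSION"
    exit 1
fi

version=$1

# Strip the registry prefix: k8s.gcr.io/kube-apiserver:v1.20.13 -> kube-apiserver:v1.20.13
images=$(kubeadm config images list --kubernetes-version="${version}" | awk -F'/' '{print $2}')

# ${images} is deliberately unquoted so it splits into one image name per word.
# No retag to k8s.gcr.io is needed: kubeadm init below uses
# --image-repository registry.aliyuncs.com/google_containers.
for imageName in ${images}; do
    docker pull "registry.aliyuncs.com/google_containers/${imageName}"
    # docker pull gcr.azk8s.cn/google-containers/$imageName
    # docker tag gcr.azk8s.cn/google-containers/$imageName k8s.gcr.io/$imageName
    # docker rmi gcr.azk8s.cn/google-containers/$imageName
done
```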

```text
[root@k8s-master k8s]# sh k8s-image-download.sh 1.20.13
v1.20.13: Pulling from google_containers/kube-apiserver
0d7d70899875: Pull complete
d373bafe570e: Pull complete
05c76ed817d7: Pull complete
Digest: sha256:4b4ce4f923b9949893e51d7ddd4b70a279bd15c85604896c740534a3984b5dc4
Status: Downloaded newer image for registry.aliyuncs.com/google_containers/kube-apiserver:v1.20.13
registry.aliyuncs.com/google_containers/kube-apiserver:v1.20.13
v1.20.13: Pulling from google_containers/kube-controller-manager
0d7d70899875: Already exists
d373bafe570e: Already exists
fb4f67eb9ac0: Pull complete
Digest: sha256:677a2eaa379fe1316be1ed8b8d9cac0505a9474d59f2641b55bff4c065851dfe
Status: Downloaded newer image for registry.aliyuncs.com/google_containers/kube-controller-manager:v1.20.13
registry.aliyuncs.com/google_containers/kube-controller-manager:v1.20.13
v1.20.13: Pulling from google_containers/kube-scheduler
0d7d70899875: Already exists
d373bafe570e: Already exists
8f6ac6b96c59: Pull complete
Digest: sha256:f47e67e53dca3c2a715a85617cbea768a7c69ebbd41556c0b228ce13434c5fc0
Status: Downloaded newer image for registry.aliyuncs.com/google_containers/kube-scheduler:v1.20.13
registry.aliyuncs.com/google_containers/kube-scheduler:v1.20.13
v1.20.13: Pulling from google_containers/kube-proxy
20b09fbd3037: Pull complete
b63d7623b1ee: Pull complete
Digest: sha256:df40eaf6eaa87aa748974e102fad6865bfaa01747561e4d01a701ae69e7c785d
Status: Downloaded newer image for registry.aliyuncs.com/google_containers/kube-proxy:v1.20.13
registry.aliyuncs.com/google_containers/kube-proxy:v1.20.13
3.2: Pulling from google_containers/pause
c74f8866df09: Pull complete
Digest: sha256:927d98197ec1141a368550822d18fa1c60bdae27b78b0c004f705f548c07814f
Status: Downloaded newer image for registry.aliyuncs.com/google_containers/pause:3.2
registry.aliyuncs.com/google_containers/pause:3.2
3.4.13-0: Pulling from google_containers/etcd
4000adbbc3eb: Pull complete
d72167780652: Pull complete
d60490a768b5: Pull complete
4a4b5535d134: Pull complete
0dac37e8b31a: Pull complete
Digest: sha256:4ad90a11b55313b182afc186b9876c8e891531b8db4c9bf1541953021618d0e2
Status: Downloaded newer image for registry.aliyuncs.com/google_containers/etcd:3.4.13-0
registry.aliyuncs.com/google_containers/etcd:3.4.13-0
1.7.0: Pulling from google_containers/coredns
c6568d217a00: Pull complete
6937ebe10f02: Pull complete
Digest: sha256:73ca82b4ce829766d4f1f10947c3a338888f876fbed0540dc849c89ff256e90c
Status: Downloaded newer image for registry.aliyuncs.com/google_containers/coredns:1.7.0
registry.aliyuncs.com/google_containers/coredns:1.7.0
```
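kubeadm can also pull the same images itself; its init output even points to `kubeadm config images pull`. Here is a sketch, assuming the same Aliyun mirror as the script. The helper name is hypothetical.

```shell
# Hypothetical helper: let kubeadm pull every image it needs for the given
# version from the Aliyun mirror, under the names kubeadm init will look for.
pull_k8s_images() {
  local version="${1:?usage: pull_k8s_images v1.20.13}"
  kubeadm config images pull \
    --image-repository registry.aliyuncs.com/google_containers \
    --kubernetes-version "$version"
}
```

Usage: `pull_k8s_images v1.20.13`. This avoids parsing `kubeadm config images list` output with awk.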

II. Cluster setup (for Calico, step 3 is the preferred method)

1. Run on the master node

It is a good idea to keep a copy of the initialization command (or configuration) for later reference.

```shell
kubeadm init --kubernetes-version=v1.20.13 \
  --pod-network-cidr=10.244.0.0/16 \
  --service-cidr=10.96.0.0/12 \
  --apiserver-advertise-address=172.19.58.188 \
  --ignore-preflight-errors=Swap \
  --ignore-preflight-errors=NumCPU \
  --image-repository registry.aliyuncs.com/google_containers
```

Parameter notes:

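- `--kubernetes-version=v1.20.13`: the Kubernetes version to install.
- `--apiserver-advertise-address`: which of the master's IP addresses is used to communicate with the other nodes in the cluster.
- `--service-cidr`: the Service network range, i.e. the address range used for Service virtual IPs (load-balancing VIPs).
- `--pod-network-cidr`: the Pod network range, i.e. the address range Pod IPs are taken from. It must match the CIDR configured in Calico later (10.244.0.0/16 here).
- `--ignore-preflight-errors`: ignore specific preflight-check errors. For example, if the run reports `[ERROR NumCPU]` (fewer than 2 CPUs) and `[ERROR Swap]`, add `--ignore-preflight-errors=NumCPU` and `--ignore-preflight-errors=Swap`.
- `--image-repository`: the default Kubernetes registry is k8s.gcr.io, which usually cannot be reached from mainland China. Point this flag at the Aliyun mirror instead: registry.aliyuncs.com/google_containers.

If the host has several network interfaces, it is best to set `--apiserver-advertise-address` explicitly.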
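One way to save the init configuration is to keep these flags in a kubeadm config file. Here is a sketch, assuming the `kubeadm.k8s.io/v1beta2` config API used by kubeadm 1.20. The file name `kubeadm-init.yaml` and the helper name are hypothetical. `kubeadm config print init-defaults` prints a full template with every field.

```shell
# Hypothetical helper: write the same settings as the kubeadm init flags above
# into a config file (kubeadm.k8s.io/v1beta2 is the config API of kubeadm 1.20).
write_kubeadm_config() {
  local out="${1:-kubeadm-init.yaml}"
  cat > "$out" <<'EOF'
apiVersion: kubeadm.k8s.io/v1beta2
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 172.19.58.188
  bindPort: 6443
---
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.20.13
imageRepository: registry.aliyuncs.com/google_containers
networking:
  podSubnet: 10.244.0.0/16
  serviceSubnet: 10.96.0.0/12
EOF
}
```

Usage: run `write_kubeadm_config`, then `kubeadm init --config kubeadm-init.yaml --ignore-preflight-errors=Swap,NumCPU`. The file doubles as a record of how the cluster was created.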

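The output looks like this. The two preflight warnings show that the host in this capture was actually running Docker 20.10.12 with the cgroupfs driver, rather than the pinned 19.03.15 with the systemd driver from 2.4 and 2.5. kubeadm reports both only as warnings.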

```text
[init] Using Kubernetes version: v1.20.13
[preflight] Running pre-flight checks
[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/
[WARNING SystemVerification]: this Docker version is not on the list of validated versions: 20.10.12. Latest validated version: 19.03
[preflight] Pulling images required for setting up a Kubernetes cluster
[preflight] This might take a minute or two, depending on the speed of your internet connection
[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'
[certs] Using certificateDir folder "/etc/kubernetes/pki"
[certs] Generating "ca" certificate and key
[certs] Generating "apiserver" certificate and key
[certs] apiserver serving cert is signed for DNS names [k8s-master kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 172.19.58.188]
[certs] Generating "apiserver-kubelet-client" certificate and key
[certs] Generating "front-proxy-ca" certificate and key
[certs] Generating "front-proxy-client" certificate and key
[certs] Generating "etcd/ca" certificate and key
[certs] Generating "etcd/server" certificate and key
[certs] etcd/server serving cert is signed for DNS names [k8s-master localhost] and IPs [172.19.58.188 127.0.0.1 ::1]
[certs] Generating "etcd/peer" certificate and key
[certs] etcd/peer serving cert is signed for DNS names [k8s-master localhost] and IPs [172.19.58.188 127.0.0.1 ::1]
[certs] Generating "etcd/healthcheck-client" certificate and key
[certs] Generating "apiserver-etcd-client" certificate and key
[certs] Generating "sa" key and public key
[kubeconfig] Using kubeconfig folder "/etc/kubernetes"
[kubeconfig] Writing "admin.conf" kubeconfig file
[kubeconfig] Writing "kubelet.conf" kubeconfig file
[kubeconfig] Writing "controller-manager.conf" kubeconfig file
[kubeconfig] Writing "scheduler.conf" kubeconfig file
[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"
[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
[kubelet-start] Starting the kubelet
[control-plane] Using manifest folder "/etc/kubernetes/manifests"
[control-plane] Creating static Pod manifest for "kube-apiserver"
[control-plane] Creating static Pod manifest for "kube-controller-manager"
[control-plane] Creating static Pod manifest for "kube-scheduler"
[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"
[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s
[apiclient] All control plane components are healthy after 13.002119 seconds
[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace
[kubelet] Creating a ConfigMap "kubelet-config-1.20" in namespace kube-system with the configuration for the kubelets in the cluster
[upload-certs] Skipping phase. Please see --upload-certs
[mark-control-plane] Marking the node k8s-master as control-plane by adding the labels "node-role.kubernetes.io/master=''" and "node-role.kubernetes.io/control-plane='' (deprecated)"
[mark-control-plane] Marking the node k8s-master as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]
[bootstrap-token] Using token: q1flre.6kx5j2p2zx5c11l9
[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles
[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to get nodes
[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials
[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token
[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster
[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace
[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key
[addons] Applied essential addon: CoreDNS
[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!   ## initialization is complete at this point

To start using your cluster, you need to run the following as a regular user:

  mkdir -p $HOME/.kube   ## puts a kubeconfig in the home directory, effectively granting this user (root here) access to the cluster
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

  export KUBECONFIG=/etc/kubernetes/admin.conf   ## not needed if you did the previous step; choose one of the two

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 172.19.58.188:6443 --token q1flre.6kx5j2p2zx5c11l9 \
    --discovery-token-ca-cert-hash sha256:6ebfd7bf6ff846f7bb405f5f63d0d7febaa7d645816a994465994ab186ec8203
```

Join the worker nodes only after Calico has been installed.

Initialization goes through the following 15 phases. Each line of output starts with the phase name in square brackets:

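- `[init]`: start initialization for the specified version.
- `[preflight]`: run pre-initialization checks and pull the required Docker images.
- `[kubelet-start]`: generate the kubelet configuration file /var/lib/kubelet/config.yaml. kubelet cannot start without this file, which is why it kept failing before initialization.
- `[certs]`: generate the certificates Kubernetes uses, stored in /etc/kubernetes/pki.
- `[kubeconfig]`: generate the kubeconfig files in /etc/kubernetes; the components use them to communicate with the API server.
- `[control-plane]`: write the static Pod manifests for the master components into /etc/kubernetes/manifests; kubelet then starts those components.
- `[etcd]`: write /etc/kubernetes/manifests/etcd.yaml so that etcd runs as a static Pod.
- `[wait-control-plane]`: wait for the control-plane components to start.
- `[apiclient]`: check the health of the master components.
- `[upload-config]`: store the configuration used in the kubeadm-config ConfigMap.
- `[kubelet]`: configure the kubelets through a ConfigMap (kubelet-config-1.20).
- `[patchnode]`: record the node's CRI socket on the Node object as an annotation (not printed in the 1.20 output above).
- `[mark-control-plane]`: label the current node with the master role and add a NoSchedule taint, so by default no Pods are scheduled on the master.
- `[bootstrap-token]`: generate the bootstrap token; note it down, because kubeadm join needs it when adding nodes.
- `[addons]`: install the CoreDNS and kube-proxy add-ons.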

PS: If the installation fails, run `kubeadm reset` to restore the host, then run `kubeadm init` again.
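kubeadm reset does not remove everything. Its own output says it leaves the CNI configuration in /etc/cni/net.d, the kubeconfig files, and the iptables/IPVS rules for you to clean up. Below is a sketch of a fuller cleanup, assuming the node is being rebuilt from scratch. It flushes all iptables rules on the host, and the helper name is hypothetical.

```shell
# Hypothetical helper: reset a node and remove what kubeadm reset reports it
# does not clean up (CNI config, kubeconfig, iptables and IPVS rules).
reset_k8s_node() {
  kubeadm reset -f
  rm -rf /etc/cni/net.d "$HOME/.kube/config"
  iptables -F && iptables -t nat -F && iptables -t mangle -F && iptables -X
  if command -v ipvsadm >/dev/null 2>&1; then
    ipvsadm --clear
  fi
  # Flushing iptables also removes Docker's chains; restarting Docker recreates them
  systemctl restart docker
}
```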

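By default, kubectl looks for a config file in the .kube directory under the current user's home directory. The commands above copy admin.conf, generated in the `[kubeconfig]` phase, to .kube/config.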

2. Install the Calico network plugin

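(Installed following the steps on the official Calico site. I compared calico.yaml with tigera-operator.yaml and found their contents broadly the same. Either way, remember to change the CIDR.)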

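Install the Tigera Calico operator and its custom resource definitions. Here the manifests are downloaded first and then created, which makes later maintenance easier.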

```shell
wget https://docs.projectcalico.org/manifests/tigera-operator.yaml
wget https://docs.projectcalico.org/manifests/custom-resources.yaml
```

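Change the cidr in the configuration file so that it matches `--pod-network-cidr`. If you choose Calico's IPIP mode, be sure to switch kube-proxy to ipvs mode in the kube-proxy configuration, and install IPVS on all hosts. The file below uses VXLANCrossSubnet encapsulation.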

```shell
[root@k8s-master calico]# vim custom-resources.yaml
```

```yaml
# This section includes base Calico installation configuration.
# For more information, see: https://docs.projectcalico.org/v3.21/reference/installation/api#operator.tigera.io/v1.Installation
apiVersion: operator.tigera.io/v1
kind: Installation
metadata:
  name: default
spec:
  # Configures Calico networking.
  calicoNetwork:
    # Note: The ipPools section cannot be modified post-install.
    ipPools:
    - blockSize: 26
      cidr: 10.244.0.0/16
      encapsulation: VXLANCrossSubnet
      natOutgoing: Enabled
      nodeSelector: all()
---
# This section configures the Calico API server.
# For more information, see: https://docs.projectcalico.org/v3.21/reference/installation/api#operator.tigera.io/v1.APIServer
apiVersion: operator.tigera.io/v1
kind: APIServer
metadata:
  name: default
spec: {}
```

Create Calico

```text
[root@k8s-master calico]# kubectl create -f tigera-operator.yaml
customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/caliconodestatuses.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/ipreservations.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/kubecontrollersconfigurations.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/apiservers.operator.tigera.io created
customresourcedefinition.apiextensions.k8s.io/imagesets.operator.tigera.io created
customresourcedefinition.apiextensions.k8s.io/installations.operator.tigera.io created
customresourcedefinition.apiextensions.k8s.io/tigerastatuses.operator.tigera.io created
namespace/tigera-operator created
podsecuritypolicy.policy/tigera-operator created
serviceaccount/tigera-operator created
clusterrole.rbac.authorization.k8s.io/tigera-operator created
clusterrolebinding.rbac.authorization.k8s.io/tigera-operator created
deployment.apps/tigera-operator created
[root@k8s-master calico]# kubectl create -f custom-resources.yaml
installation.operator.tigera.io/default created
apiserver.operator.tigera.io/default created
```
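Once both manifests are created, the operator needs a little time to roll Calico out. Here is a small sketch for following its progress. It assumes the operator's default `calico-system` namespace and the `tigerastatus` resource whose CRD appears in the output above. The helper name is hypothetical.

```shell
# Hypothetical helper: follow the operator-based install. The operator reports
# per-component status through TigeraStatus objects and runs the Calico Pods in
# the calico-system namespace.
check_calico_operator() {
  kubectl get tigerastatus
  kubectl get pods -n calico-system -o wide
}
```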

3. Build the Calico network with calico.yaml (preferred)

Download the manifest

```shell
wget https://docs.projectcalico.org/v3.20/manifests/calico.yaml --no-check-certificate
```

Each Calico release supports only certain Kubernetes versions; check the requirements page of the Calico version you use, e.g.: https://docs.tigera.io/archive/v3.20/getting-started/kubernetes/requirements

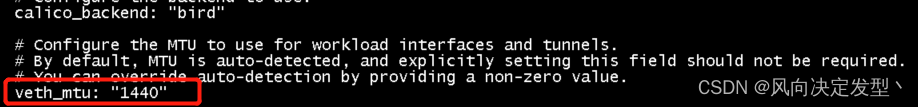

Modify the configuration
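The change itself is not shown. The earlier note says the CIDR has to be changed in either manifest, so it is most likely the `CALICO_IPV4POOL_CIDR` environment variable of the calico-node container. calico.yaml ships this variable commented out with a default of 192.168.0.0/16, and it should match `--pod-network-cidr` (10.244.0.0/16). Below is a sketch that uncomments and sets it with sed. The helper name is hypothetical, and it assumes the two lines look as they do in the stock manifest.

```shell
# Hypothetical helper: uncomment CALICO_IPV4POOL_CIDR in calico.yaml and set it
# to the Pod CIDR passed to kubeadm init. Assumes the stock commented lines:
#   # - name: CALICO_IPV4POOL_CIDR
#   #   value: "192.168.0.0/16"
set_calico_pool_cidr() {
  local manifest="${1:-calico.yaml}"
  local cidr="${2:-10.244.0.0/16}"
  sed -i \
    -e 's|# - name: CALICO_IPV4POOL_CIDR|- name: CALICO_IPV4POOL_CIDR|' \
    -e "/- name: CALICO_IPV4POOL_CIDR/{n;s|# *value: .*|  value: \"${cidr}\"|;}" \
    "$manifest"
}
```

Usage: `set_calico_pool_cidr calico.yaml 10.244.0.0/16`, then inspect the result with `grep -A1 CALICO_IPV4POOL_CIDR calico.yaml`.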

Create it

```shell
kubectl apply -f calico.yaml
```

Check the Calico status
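The status check is not shown either. Here is a sketch that assumes the object names from the stock calico.yaml: the `calico-node` DaemonSet and the `calico-kube-controllers` Deployment, both in kube-system. The helper name is hypothetical.

```shell
# Hypothetical helper: wait until calico-node is ready on every node, then list
# the Calico Pods. Nodes switch to Ready once calico-node is running on them.
check_calico() {
  kubectl -n kube-system rollout status daemonset/calico-node --timeout=300s
  kubectl -n kube-system get pods -l k8s-app=calico-node -o wide
  kubectl -n kube-system get pods -l k8s-app=calico-kube-controllers
}
```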

4. Join the worker nodes to the cluster (run on each worker node)

```shell
kubeadm join 172.19.58.188:6443 --token q1flre.6kx5j2p2zx5c11l9 \
    --discovery-token-ca-cert-hash sha256:6ebfd7bf6ff846f7bb405f5f63d0d7febaa7d645816a994465994ab186ec8203
```
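Bootstrap tokens are valid for 24 hours by default, so the join command printed by kubeadm init stops working after that. Below is a sketch of how to regenerate it on the master. The helper names are hypothetical; the commands are standard kubeadm and openssl usage.

```shell
# Hypothetical helper: print a complete, fresh kubeadm join command
# (creates a new bootstrap token). Run on the master.
print_join_command() {
  kubeadm token create --print-join-command
}

# Hypothetical helper: recompute the --discovery-token-ca-cert-hash value,
# i.e. the SHA-256 of the cluster CA's public key in DER form.
ca_cert_hash() {
  local ca="${1:-/etc/kubernetes/pki/ca.crt}"
  openssl x509 -pubkey -in "$ca" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}
```

The hash is passed as `sha256:<value>`.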

5. The cluster setup is now complete

```text
[root@k8s-master calico]# kubectl get nodes -o wide
NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME
k8s-master Ready control-plane,master 7m24s v1.20.13 172.19.58.188 <none> CentOS Linux 7 (Core) 3.10.0-1160.45.1.el7.x86_64 docker://20.10.12
k8s-node1 Ready <none> 2m52s v1.20.13 172.19.58.189 <none> CentOS Linux 7 (Core) 3.10.0-1160.45.1.el7.x86_64 docker://20.10.12
k8s-node2 Ready <none> 2m48s v1.20.13 172.19.58.190 <none> CentOS Linux 7 (Core) 3.10.0-1160.45.1.el7.x86_64 docker://20.10.12
```

6. Check the cluster component status

```text
[root@k8s-master ~]# kubectl get cs
Warning: v1 ComponentStatus is deprecated in v1.19+
NAME STATUS MESSAGE ERROR
controller-manager Unhealthy Get "http://127.0.0.1:10252/healthz": dial tcp 127.0.0.1:10252: connect: connection refused
scheduler Unhealthy Get "http://127.0.0.1:10251/healthz": dial tcp 127.0.0.1:10251: connect: connection refused
etcd-0 Healthy {"health":"true"}
```

The health checks for controller-manager and scheduler cannot be reached (connection refused), so their configuration files need to be changed.

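7. Search for `--port=0` and comment that line out. kubeadm 1.20 starts kube-scheduler and kube-controller-manager with `--port=0`, which disables the insecure health ports 10251/10252 that `kubectl get cs` probes. Both run as static Pods, so editing their manifests is enough: kubelet recreates the Pods by itself, and there is no need to restart kubelet.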

```text
[root@k8s-master ~]# vim /etc/kubernetes/manifests/kube-scheduler.yaml
[root@k8s-master ~]# vim /etc/kubernetes/manifests/kube-controller-manager.yaml
# In both files, find the --port=0 line and comment it out:  # - --port=0
[root@k8s-master ~]# kubectl get cs
Warning: v1 ComponentStatus is deprecated in v1.19+
NAME STATUS MESSAGE ERROR
scheduler Healthy ok
etcd-0 Healthy {"health":"true"}
controller-manager Healthy ok
```
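The same edit can be done without an editor. Here is a sketch with a hypothetical helper name. `sed -i` without a suffix keeps no backup, which is intentional: it is safer not to leave backup copies such as `*.bak` in /etc/kubernetes/manifests, because kubelet may try to run them as additional static Pods.

```shell
# Hypothetical helper: comment out "- --port=0" in the given static Pod
# manifests, keeping the original indentation. Re-running it is harmless.
comment_out_port_zero() {
  local f
  for f in "$@"; do
    sed -i 's/^\(\s*\)- --port=0/\1# - --port=0/' "$f"
  done
}
```

Usage: `comment_out_port_zero /etc/kubernetes/manifests/kube-scheduler.yaml /etc/kubernetes/manifests/kube-controller-manager.yaml`.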

8. Switch kube-proxy to ipvs mode

Check whether the ipvs kernel modules are loaded

```text
[root@iZ8vb05skym8uiglcfnnslZ ~]# lsmod | grep ip_vs
ip_vs_sh 12688 0
ip_vs_wrr 12697 0
ip_vs_rr 12600 0
ip_vs 145458 6 ip_vs_rr,ip_vs_sh,ip_vs_wrr
nf_conntrack 139264 10 ip_vs,nf_nat,nf_nat_ipv4,nf_nat_ipv6,xt_conntrack,nf_nat_masquerade_ipv4,nf_nat_masquerade_ipv6,nf_conntrack_netlink,nf_conntrack_ipv4,nf_conntrack_ipv6
libcrc32c 12644 3 ip_vs,nf_nat,nf_conntrack
```
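The output above assumes the modules are already loaded, and the Calico section notes that IPVS has to be set up on every host. Below is a sketch of one way to load the modules at every boot through systemd's modules-load.d. The helper name is hypothetical. `nf_conntrack_ipv4` is the module name on the CentOS 7 3.10 kernel; kernels 4.19 and later use `nf_conntrack` instead.

```shell
# Hypothetical helper: list the IPVS modules in a modules-load.d file so
# systemd loads them at every boot.
write_ipvs_modules_conf() {
  local conf="${1:-/etc/modules-load.d/ipvs.conf}"
  mkdir -p "$(dirname "$conf")"
  printf '%s\n' ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack_ipv4 > "$conf"
}
```

On every node, run `yum -y install ipset ipvsadm` and `write_ipvs_modules_conf`. Then load the modules right away with `modprobe -a ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack_ipv4` and repeat the lsmod check.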

Edit the ConfigMap

```shell
[root@k8s-master ~]# kubectl edit configmaps kube-proxy -n kube-system
```

```yaml
...
detectLocalMode: ""
enableProfiling: false
healthzBindAddress: ""
hostnameOverride: ""
iptables:
  masqueradeAll: false
  masqueradeBit: null
  minSyncPeriod: 0s
  syncPeriod: 0s
ipvs:
  excludeCIDRs: null
  minSyncPeriod: 0s
  scheduler: ""
  strictARP: false
  syncPeriod: 0s
  tcpFinTimeout: 0s
  tcpTimeout: 0s
  udpTimeout: 0s
kind: KubeProxyConfiguration
metricsBindAddress: ""
mode: "ipvs"   ## empty by default; change it to ipvs
...
```

Restart kube-proxy (delete its Pods; the DaemonSet recreates them with the new configuration)

```shell
[root@k8s-master ~]# kubectl delete pods -n kube-system -l k8s-app=kube-proxy
```
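The ConfigMap edit and restart can also be scripted. Here is a sketch with a hypothetical helper name. It rewrites the empty `mode: ""` line in the ConfigMap and restarts the DaemonSet, which has the same effect as deleting the Pods. The first `kubectl apply` on this ConfigMap may warn about a missing last-applied-configuration annotation; the change is still applied.

```shell
# Hypothetical helper: set kube-proxy's mode to ipvs without an editor,
# then restart the kube-proxy DaemonSet so the new mode takes effect.
set_kube_proxy_ipvs() {
  kubectl get configmap kube-proxy -n kube-system -o yaml \
    | sed -e 's/mode: ""/mode: "ipvs"/' \
    | kubectl apply -f -
  kubectl -n kube-system rollout restart daemonset/kube-proxy
}
```

To confirm, `curl -s 127.0.0.1:10249/proxyMode` on any node should print `ipvs`. Port 10249 is kube-proxy's default metrics port, which applies here because `metricsBindAddress` is empty.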

Install ipvsadm to manage and inspect the ipvs rules

```text
[root@k8s-master test-namespace]# yum -y install ipvsadm
[root@k8s-master test-namespace]# ipvsadm -L -n --stats
TCP 10.96.240.115:8090 2 8 4 432 224
  -> 10.244.36.79:80 1 4 2 216 112
  -> 10.244.36.80:80 1 4 2 216 112
```

III. Install calicoctl (using the Kubernetes datastore by default)

1. Installation

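```shell
[root@k8s-master ~]# wget https://docs.projectcalico.org/v3.20/manifests/calicoctl.yaml
[root@k8s-master ~]# kubectl apply -f calicoctl.yaml
[root@k8s-master ~]# wget https://github.com/projectcalico/calicoctl/releases/download/v3.21.2/calicoctl -O /usr/bin/calicoctl
[root@k8s-master ~]# chmod +x /usr/bin/calicoctl
[root@k8s-master ~]# mkdir /etc/calico
[root@k8s-master ~]# vim /etc/calico/calicoctl.cfg
```

```yaml
apiVersion: projectcalico.org/v3
kind: CalicoAPIConfig
metadata:
spec:
  datastoreType: "kubernetes"
  kubeconfig: "/root/.kube/config"
```

calicoctl.yaml runs calicoctl as a Pod inside the cluster, while the binary download installs it directly on the master; either one is enough. Calico's documentation recommends using a calicoctl version that matches the Calico version running in the cluster. With the v3.20 manifests from step 3, a v3.20.x calicoctl is therefore the safer choice than v3.21.2.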
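calicoctl can also read its datastore settings from environment variables instead of /etc/calico/calicoctl.cfg. Here is a sketch of a wrapper that assumes the same Kubernetes datastore and kubeconfig as the file above. The wrapper name is hypothetical.

```shell
# Hypothetical wrapper: run calicoctl against the Kubernetes datastore using
# environment variables instead of /etc/calico/calicoctl.cfg.
calicoctl_k8s() {
  DATASTORE_TYPE=kubernetes \
  KUBECONFIG="${KUBECONFIG:-$HOME/.kube/config}" \
    calicoctl "$@"
}
```

For example, `calicoctl_k8s get ippool -o wide` should list the pool with CIDR 10.244.0.0/16. `calicoctl version` prints both the client version and the cluster's Calico version, which is a quick way to check that the two match.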

2. Verification

```text
[root@k8s-master calico]# calicoctl node status
Calico process is running.

IPv4 BGP status
+---------------+-------------------+-------+----------+-------------+
| PEER ADDRESS  |     PEER TYPE     | STATE |  SINCE   |    INFO     |
+---------------+-------------------+-------+----------+-------------+
| 172.19.58.189 | node-to-node mesh | up    | 06:52:11 | Established |
| 172.19.58.190 | node-to-node mesh | up    | 06:52:11 | Established |
+---------------+-------------------+-------+----------+-------------+

IPv6 BGP status
No IPv6 peers found.
```
