k8s service deployment + log collection + monitoring system + CI/CD automation


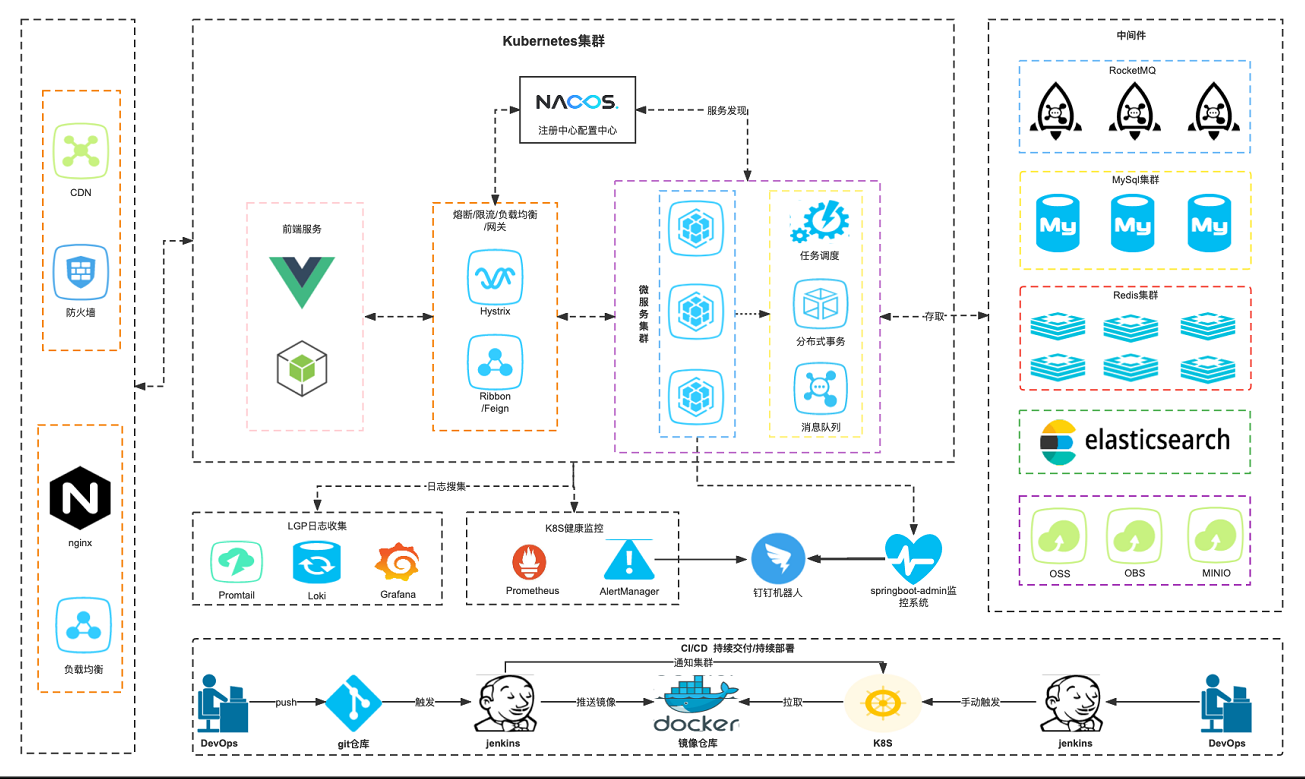

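Company service architecture diagram

Kubernetes cluster component functions

High-availability Keepalived component functions

Overall architecture diagram; you are welcome to use it or draw your own:

https://www.processon.com/i/63252cbf76213167e4824185

This is the Kubernetes cluster we deployed; you can use our deployment method as a reference.

Deploy log collection: Loki + Grafana + Promtail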

Create the namespace

```shell
kubectl create namespace logging
```

Create Grafana

Write `grafana.yml`:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: grafana
  labels:
    app: grafana
  namespace: logging
spec:
  replicas: 1
  selector:
    matchLabels:
      app: grafana
  template:
    metadata:
      labels:
        app: grafana
    spec:
      containers:
        - name: grafana
          image: grafana/grafana:8.4.7
          imagePullPolicy: IfNotPresent
          env:
            - name: GF_AUTH_BASIC_ENABLED
              value: "true"
            - name: GF_AUTH_ANONYMOUS_ENABLED
              value: "false"
          resources:
            requests:
              cpu: 100m
              memory: 200Mi
            limits:
              cpu: '1'
              memory: 2Gi
          readinessProbe:
            httpGet:
              path: /login
              port: 3000
          volumeMounts:
            - name: storage
              mountPath: /var/lib/grafana
      volumes:
        - name: storage
          hostPath:
            path: /data/grafana
---
apiVersion: v1
kind: Service
metadata:
  name: grafana
  labels:
    app: grafana
  namespace: logging
spec:
  type: NodePort
  ports:
    - port: 3000
      targetPort: 3000
      nodePort: 30030
  selector:
    app: grafana
```

Create Loki

Write `loki-statefulset.yaml` (on our master node `k8s-master1` it is kept in `/lgp/monitor-master/loki/`):

```yaml
apiVersion: v1
kind: Service
metadata:
  name: loki
  namespace: logging
  labels:
    app: loki
spec:
  type: NodePort
  ports:
    - port: 3100
      protocol: TCP
      name: http-metrics
      targetPort: http-metrics
      nodePort: 30100
  selector:
    app: loki
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: loki
  namespace: logging
  labels:
    app: loki
spec:
  podManagementPolicy: OrderedReady
  replicas: 1
  selector:
    matchLabels:
      app: loki
  serviceName: loki
  updateStrategy:
    type: RollingUpdate
  template:
    metadata:
      labels:
        app: loki
    spec:
      serviceAccountName: loki
      initContainers:
        - name: chmod-data
          image: busybox:1.28.4
          imagePullPolicy: IfNotPresent
          command: ["chmod", "-R", "777", "/loki/data"]
          volumeMounts:
            - name: storage
              mountPath: /loki/data
      containers:
        - name: loki
          image: grafana/loki:2.3.0
          imagePullPolicy: IfNotPresent
          args:
            - -config.file=/etc/loki/loki.yaml
          volumeMounts:
            - name: config
              mountPath: /etc/loki
            - name: storage
              mountPath: /data
          ports:
            - name: http-metrics
              containerPort: 3100
              protocol: TCP
          livenessProbe:
            httpGet:
              path: /ready
              port: http-metrics
              scheme: HTTP
            initialDelaySeconds: 45
          readinessProbe:
            httpGet:
              path: /ready
              port: http-metrics
              scheme: HTTP
            initialDelaySeconds: 45
          securityContext:
            readOnlyRootFilesystem: true
      terminationGracePeriodSeconds: 4800
      volumes:
        - name: config
          configMap:
            name: loki
        - name: storage
          hostPath:
            path: /data/loki
```

Write `loki-configmap.yaml` (also in `/lgp/monitor-master/loki/`):

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: loki
  namespace: logging
  labels:
    app: loki
data:
  loki.yaml: |
    auth_enabled: false
    ingester:
      chunk_idle_period: 3m
      chunk_block_size: 262144
      chunk_retain_period: 1m
      max_transfer_retries: 0
      lifecycler:
        ring:
          kvstore:
            store: inmemory
          replication_factor: 1
    limits_config:
      enforce_metric_name: false
      reject_old_samples: true
      reject_old_samples_max_age: 168h
    schema_config:
      configs:
        - from: "2022-05-15"
          store: boltdb-shipper
          object_store: filesystem
          schema: v11
          index:
            prefix: index_
            period: 24h
    server:
      http_listen_port: 3100
    storage_config:
      boltdb_shipper:
        active_index_directory: /data/loki/boltdb-shipper-active
        cache_location: /data/loki/boltdb-shipper-cache
        cache_ttl: 24h
        shared_store: filesystem
      filesystem:
        directory: /data/loki/chunks
    chunk_store_config:
      max_look_back_period: 0s
    table_manager:
      retention_deletes_enabled: true
      retention_period: 48h
    compactor:
      working_directory: /data/loki/boltdb-shipper-compactor
      shared_store: filesystem
```
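The StatefulSet mounts the node directory `/data/loki` at `/data` inside the container, while `loki.yaml` stores everything under `/data/loki/...`. On the node the files therefore end up one level deeper, for example in `/data/loki/loki/chunks`. A small sketch, assuming only the `storage` hostPath mount from `loki-statefulset.yaml`, that translates container paths into node paths:

```python
from pathlib import PurePosixPath

# (node path, container mount path) for the "storage" hostPath volume in
# loki-statefulset.yaml; the "config" ConfigMap mount is left out on purpose.
MOUNTS = [
    (PurePosixPath("/data/loki"), PurePosixPath("/data")),
]


def host_path_for(container_path: str) -> str:
    """Return the node path backing a path seen inside the Loki container."""
    path = PurePosixPath(container_path)
    for host, mount in MOUNTS:
        if path == mount or mount in path.parents:
            # Re-root the part below the mount point onto the node directory
            return str(host / path.relative_to(mount))
    raise ValueError(f"{container_path} is not on a hostPath volume")


if __name__ == "__main__":
    # Directories configured in loki.yaml
    for p in (
        "/data/loki/chunks",
        "/data/loki/boltdb-shipper-active",
        "/data/loki/boltdb-shipper-cache",
        "/data/loki/boltdb-shipper-compactor",
    ):
        print(f"{p} -> {host_path_for(p)}")
```

`PurePosixPath` is used so the sketch behaves the same regardless of the OS it runs on; it only does string-level path arithmetic and never touches the filesystem.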

Write `loki-rbac.yaml` (also in `/lgp/monitor-master/loki/`):

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: loki
  namespace: logging
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: loki
  namespace: logging
rules:
  - apiGroups: ["extensions"]
    resources: ["podsecuritypolicies"]
    verbs: ["use"]
    resourceNames: [loki]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: loki
  namespace: logging
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: loki
subjects:
  - kind: ServiceAccount
    name: loki
```

Create Promtail

Write `promtail-daemonset.yaml` (in our setup it is kept in `/lgp/monitor-master/promtail/`):

```yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: loki-promtail
  namespace: logging
  labels:
    app: promtail
spec:
  selector:
    matchLabels:
      app: promtail
  updateStrategy:
    rollingUpdate:
      maxUnavailable: 1
    type: RollingUpdate
  template:
    metadata:
      labels:
        app: promtail
    spec:
      serviceAccountName: loki-promtail
      containers:
        - name: promtail
          image: grafana/promtail:2.3.0
          imagePullPolicy: IfNotPresent
          args:
            - -config.file=/etc/promtail/promtail.yaml
            - -client.url=http://192.168.2.215:30100/loki/api/v1/push
          env:
            - name: HOSTNAME
              valueFrom:
                fieldRef:
                  apiVersion: v1
                  fieldPath: spec.nodeName
          volumeMounts:
            - mountPath: /etc/promtail
              name: config
            - mountPath: /run/promtail
              name: run
            - mountPath: /var/lib/docker/containers
              name: docker
              readOnly: true
            - mountPath: /var/log/pods
              name: pods
              readOnly: true
          ports:
            - containerPort: 3101
              name: http-metrics
              protocol: TCP
          securityContext:
            readOnlyRootFilesystem: true
            runAsGroup: 0
            runAsUser: 0
          readinessProbe:
            failureThreshold: 5
            httpGet:
              path: /ready
              port: http-metrics
              scheme: HTTP
            initialDelaySeconds: 10
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 1
      tolerations:
        - effect: NoSchedule
          key: node-role.kubernetes.io/master
          operator: Exists
      volumes:
        - name: config
          configMap:
            name: loki-promtail
        - name: run
          hostPath:
            path: /run/promtail
            type: ""
        - name: docker
          hostPath:
            path: /var/lib/docker/containers
        - name: pods
          hostPath:
            path: /var/log/pods
```
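Promtail's `-client.url` points at Loki's push endpoint, `/loki/api/v1/push`, exposed on NodePort 30100. To check that Loki accepts data independently of Promtail, you can push a line by hand. A minimal sketch using the JSON form of the push API; the URL is copied from the DaemonSet args, while the `job` label and log text are made-up test values:

```python
import json
import time
import urllib.request
from typing import Dict, List, Optional

# Same endpoint as -client.url in promtail-daemonset.yaml
LOKI_PUSH_URL = "http://192.168.2.215:30100/loki/api/v1/push"


def build_push_body(labels: Dict[str, str], lines: List[str],
                    ts_ns: Optional[int] = None) -> bytes:
    """Encode log lines as a single Loki stream.

    Timestamps are Unix epoch nanoseconds sent as strings; each line gets a
    timestamp one nanosecond after the previous one to keep them ordered.
    """
    if ts_ns is None:
        ts_ns = time.time_ns()
    values = [[str(ts_ns + i), line] for i, line in enumerate(lines)]
    body = {"streams": [{"stream": labels, "values": values}]}
    return json.dumps(body).encode("utf-8")


def push(labels: Dict[str, str], lines: List[str], url: str = LOKI_PUSH_URL) -> int:
    """POST the lines to Loki and return the HTTP status code."""
    req = urllib.request.Request(
        url,
        data=build_push_body(labels, lines),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=10) as resp:
        return resp.status


if __name__ == "__main__":
    # Print the body instead of sending it; call push(...) to actually send
    print(build_push_body({"job": "manual-test"}, ["hello loki"]).decode())
```

Calling `push({"job": "manual-test"}, ["hello loki"])` from a machine that can reach the node and then querying `{job="manual-test"}` in Grafana confirms the Loki side works; Loki normally answers a successful push with `204 No Content`.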

Write `promtail-configmap.yaml` (also in `/lgp/monitor-master/promtail/`):

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: loki-promtail
  namespace: logging
  labels:
    app: promtail
data:
  promtail.yaml: |
    client:
      backoff_config:
        max_period: 5m
        max_retries: 10
        min_period: 500ms
      batchsize: 1048576
      batchwait: 1s
      external_labels: {}
      timeout: 10s
    positions:
      filename: /run/promtail/positions.yaml
    server:
      http_listen_port: 3101
    target_config:
      sync_period: 10s
    scrape_configs:
      - job_name: kubernetes-pods-name
        pipeline_stages:
          - docker: {}
        kubernetes_sd_configs:
          - role: pod
        relabel_configs:
          - source_labels:
              - __meta_kubernetes_pod_label_name
            target_label: __service__
          - source_labels:
              - __meta_kubernetes_pod_node_name
            target_label: __host__
          - action: drop
            regex: ''
            source_labels:
              - __service__
          - action: labelmap
            regex: __meta_kubernetes_pod_label_(.+)
          - action: replace
            replacement: $1
            separator: /
            source_labels:
              - __meta_kubernetes_namespace
              - __service__
            target_label: job
          - action: replace
            source_labels:
              - __meta_kubernetes_namespace
            target_label: namespace
          - action: replace
            source_labels:
              - __meta_kubernetes_pod_name
            target_label: pod
          - action: replace
            source_labels:
              - __meta_kubernetes_pod_container_name
            target_label: container
          - replacement: /var/log/pods/*$1/*.log
            separator: /
            source_labels:
              - __meta_kubernetes_pod_uid
              - __meta_kubernetes_pod_container_name
            target_label: __path__
      - job_name: kubernetes-pods-app
        pipeline_stages:
          - docker: {}
        kubernetes_sd_configs:
          - role: pod
        relabel_configs:
          - action: drop
            regex: .+
            source_labels:
              - __meta_kubernetes_pod_label_name
          - source_labels:
              - __meta_kubernetes_pod_label_app
            target_label: __service__
          - source_labels:
              - __meta_kubernetes_pod_node_name
            target_label: __host__
          - action: drop
            regex: ''
            source_labels:
              - __service__
          - action: labelmap
            regex: __meta_kubernetes_pod_label_(.+)
          - action: replace
            replacement: $1
            separator: /
            source_labels:
              - __meta_kubernetes_namespace
              - __service__
            target_label: job
          - action: replace
            source_labels:
              - __meta_kubernetes_namespace
            target_label: namespace
          - action: replace
            source_labels:
              - __meta_kubernetes_pod_name
            target_label: pod
          - action: replace
            source_labels:
              - __meta_kubernetes_pod_container_name
            target_label: container
          - replacement: /var/log/pods/*$1/*.log
            separator: /
            source_labels:
              - __meta_kubernetes_pod_uid
              - __meta_kubernetes_pod_container_name
            target_label: __path__
      - job_name: kubernetes-pods-direct-controllers
        pipeline_stages:
          - docker: {}
        kubernetes_sd_configs:
          - role: pod
        relabel_configs:
          - action: drop
            regex: .+
            separator: ''
            source_labels:
              - __meta_kubernetes_pod_label_name
              - __meta_kubernetes_pod_label_app
          - action: drop
            regex: '[0-9a-z-.]+-[0-9a-f]{8,10}'
            source_labels:
              - __meta_kubernetes_pod_controller_name
          - source_labels:
              - __meta_kubernetes_pod_controller_name
            target_label: __service__
          - source_labels:
              - __meta_kubernetes_pod_node_name
            target_label: __host__
          - action: drop
            regex: ''
            source_labels:
              - __service__
          - action: labelmap
            regex: __meta_kubernetes_pod_label_(.+)
          - action: replace
            replacement: $1
            separator: /
            source_labels:
              - __meta_kubernetes_namespace
              - __service__
            target_label: job
          - action: replace
            source_labels:
              - __meta_kubernetes_namespace
            target_label: namespace
          - action: replace
            source_labels:
              - __meta_kubernetes_pod_name
            target_label: pod
          - action: replace
            source_labels:
              - __meta_kubernetes_pod_container_name
            target_label: container
          - replacement: /var/log/pods/*$1/*.log
            separator: /
            source_labels:
              - __meta_kubernetes_pod_uid
              - __meta_kubernetes_pod_container_name
            target_label: __path__
      - job_name: kubernetes-pods-indirect-controller
        pipeline_stages:
          - docker: {}
        kubernetes_sd_configs:
          - role: pod
        relabel_configs:
          - action: drop
            regex: .+
            separator: ''
            source_labels:
              - __meta_kubernetes_pod_label_name
              - __meta_kubernetes_pod_label_app
          - action: keep
            regex: '[0-9a-z-.]+-[0-9a-f]{8,10}'
            source_labels:
              - __meta_kubernetes_pod_controller_name
          - action: replace
            regex: '([0-9a-z-.]+)-[0-9a-f]{8,10}'
            source_labels:
              - __meta_kubernetes_pod_controller_name
            target_label: __service__
          - source_labels:
              - __meta_kubernetes_pod_node_name
            target_label: __host__
          - action: drop
            regex: ''
            source_labels:
              - __service__
          - action: labelmap
            regex: __meta_kubernetes_pod_label_(.+)
          - action: replace
            replacement: $1
            separator: /
            source_labels:
              - __meta_kubernetes_namespace
              - __service__
            target_label: job
          - action: replace
            source_labels:
              - __meta_kubernetes_namespace
            target_label: namespace
          - action: replace
            source_labels:
              - __meta_kubernetes_pod_name
            target_label: pod
          - action: replace
            source_labels:
              - __meta_kubernetes_pod_container_name
            target_label: container
          - replacement: /var/log/pods/*$1/*.log
            separator: /
            source_labels:
              - __meta_kubernetes_pod_uid
              - __meta_kubernetes_pod_container_name
            target_label: __path__
      - job_name: kubernetes-pods-static
        pipeline_stages:
          - docker: {}
        kubernetes_sd_configs:
          - role: pod
        relabel_configs:
          - action: drop
            regex: ''
            source_labels:
              - __meta_kubernetes_pod_annotation_kubernetes_io_config_mirror
          - action: replace
            source_labels:
              - __meta_kubernetes_pod_label_component
            target_label: __service__
          - source_labels:
              - __meta_kubernetes_pod_node_name
            target_label: __host__
          - action: drop
            regex: ''
            source_labels:
              - __service__
          - action: labelmap
            regex: __meta_kubernetes_pod_label_(.+)
          - action: replace
            replacement: $1
            separator: /
            source_labels:
              - __meta_kubernetes_namespace
              - __service__
            target_label: job
          - action: replace
            source_labels:
              - __meta_kubernetes_namespace
            target_label: namespace
          - action: replace
            source_labels:
              - __meta_kubernetes_pod_name
            target_label: pod
          - action: replace
            source_labels:
              - __meta_kubernetes_pod_container_name
            target_label: container
          - replacement: /var/log/pods/*$1/*.log
            separator: /
            source_labels:
              - __meta_kubernetes_pod_annotation_kubernetes_io_config_mirror
              - __meta_kubernetes_pod_container_name
            target_label: __path__
```
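The five jobs split pods by where their service name comes from: a `name` label, an `app` label, the controller name, the controller name minus a ReplicaSet hash, or the `component` label of static pods. The rules follow Prometheus relabeling semantics: `source_labels` values are joined with `separator` (default `;`), `regex` must match the whole joined string, and `replacement` defaults to `$1`. The sketch below replays only the `kubernetes-pods-indirect-controller` job (labelmap and `__host__` omitted), using invented pod metadata. The leading `*` in `__path__` matches the `<namespace>_<pod-name>_` prefix the kubelet puts before the pod UID in `/var/log/pods`. On newer Kubernetes versions the `pod-template-hash` suffix is not restricted to hex digits, so it is worth checking whether your ReplicaSet names actually match this regex.

```python
import re
from typing import Dict, Optional

# Regex from the indirect-controller job: <deployment>-<pod-template-hash>
INDIRECT = re.compile(r"([0-9a-z-.]+)-[0-9a-f]{8,10}")


def service_for(controller_name: str) -> Optional[str]:
    """__service__ for a ReplicaSet-owned pod, or None if the 'keep' rule fails."""
    m = INDIRECT.fullmatch(controller_name)  # relabel regexes are fully anchored
    return m.group(1) if m else None


def relabel(meta: Dict[str, str]) -> Optional[Dict[str, str]]:
    """Return the final labels, or None when this job drops the target."""
    # drop: name and app labels joined with separator '' match '.+'
    joined = (meta.get("__meta_kubernetes_pod_label_name", "")
              + meta.get("__meta_kubernetes_pod_label_app", ""))
    if joined:  # such pods are handled by the name/app jobs instead
        return None
    service = service_for(meta.get("__meta_kubernetes_pod_controller_name", ""))
    if not service:  # keep failed, or __service__ ended up empty
        return None
    ns = meta["__meta_kubernetes_namespace"]
    uid = meta["__meta_kubernetes_pod_uid"]
    container = meta["__meta_kubernetes_pod_container_name"]
    return {
        "job": f"{ns}/{service}",  # namespace and __service__ joined by '/'
        "namespace": ns,
        "pod": meta["__meta_kubernetes_pod_name"],
        "container": container,
        # uid and container joined by '/' become $1 in /var/log/pods/*$1/*.log
        "__path__": f"/var/log/pods/*{uid}/{container}/*.log",
    }


if __name__ == "__main__":
    sample = {  # hypothetical pod without name/app labels
        "__meta_kubernetes_namespace": "bimuyu",
        "__meta_kubernetes_pod_name": "keeper-provider-7d9f8c6b5d-x2k9p",
        "__meta_kubernetes_pod_controller_name": "keeper-provider-7d9f8c6b5d",
        "__meta_kubernetes_pod_uid": "0f1e2d3c-aaaa-bbbb-cccc-123456789abc",
        "__meta_kubernetes_pod_container_name": "keeper-provider",
    }
    print(relabel(sample))
```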

Write `promtail-rbac.yaml` (also in `/lgp/monitor-master/promtail/`):

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: loki-promtail
  labels:
    app: promtail
  namespace: logging
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    app: promtail
  name: promtail-clusterrole
  namespace: logging
rules:
  - apiGroups: [""]
    resources: ["nodes", "nodes/proxy", "services", "endpoints", "pods"]
    verbs: ["get", "watch", "list"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: promtail-clusterrolebinding
  labels:
    app: promtail
  namespace: logging
subjects:
  - kind: ServiceAccount
    name: loki-promtail
    namespace: logging
roleRef:
  kind: ClusterRole
  name: promtail-clusterrole
  apiGroup: rbac.authorization.k8s.io
```

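Important: create the following two directories on every node. They back the `run` and `pods` hostPath volumes of the Promtail DaemonSet.

```shell
mkdir -p /run/promtail
mkdir -p /var/log/pods
```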

Access Grafana
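Before logs show up in Grafana, Loki has to be added as a data source. This is normally done in the UI; as a scripted alternative, here is a sketch against Grafana's HTTP API (`POST /api/datasources`). Assumptions: Grafana is reached on NodePort 30030 of `192.168.2.215` (the node IP Promtail already uses for Loki), and you supply the admin credentials. `GF_AUTH_BASIC_ENABLED=true` in `grafana.yml` is what allows basic auth against the API. Grafana reaches Loki through the in-cluster name of the `loki` Service in the `logging` namespace:

```python
import base64
import json
import urllib.request

GRAFANA_URL = "http://192.168.2.215:30030"               # assumed node IP + NodePort
LOKI_URL = "http://loki.logging.svc.cluster.local:3100"  # Service "loki" in "logging"


def datasource_request(user: str, password: str) -> urllib.request.Request:
    """Build (but do not send) the request that registers Loki in Grafana."""
    payload = {
        "name": "Loki",
        "type": "loki",
        "url": LOKI_URL,
        "access": "proxy",  # Grafana's backend talks to Loki, not the browser
    }
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return urllib.request.Request(
        f"{GRAFANA_URL}/api/datasources",
        data=json.dumps(payload).encode(),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Basic {token}",  # basic auth, enabled in grafana.yml
        },
        method="POST",
    )
```

Sending it is `urllib.request.urlopen(datasource_request("admin", "<password>"), timeout=10)`; Grafana replies with JSON describing the new data source. The `access: proxy` choice matters here because the in-cluster Service name only resolves from inside the cluster, not from a user's browser.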

Choose to view Pod logs

View the results
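The same Pod logs can be fetched outside Grafana. Because the Promtail relabel rules attach `namespace`, `pod` and `container` labels, a LogQL stream selector such as `{namespace="logging", pod="loki-0"}` picks out a single Pod; it can be typed into Grafana Explore or sent to Loki's `query_range` API. A sketch that builds such a request; the address is the Loki NodePort used for Promtail, and `loki-0` is simply the Pod name the one-replica StatefulSet produces:

```python
import time
from typing import Dict, Optional, Union
from urllib.parse import urlencode

LOKI = "http://192.168.2.215:30100"  # NodePort of the loki Service


def pod_logs_params(namespace: str, pod: str, minutes: int = 15, limit: int = 100,
                    end_ns: Optional[int] = None) -> Dict[str, Union[str, int]]:
    """Query parameters for the last `minutes` of one Pod's logs."""
    if end_ns is None:
        end_ns = time.time_ns()
    return {
        # LogQL stream selector built from labels attached by Promtail
        "query": f'{{namespace="{namespace}", pod="{pod}"}}',
        "start": end_ns - minutes * 60 * 1_000_000_000,  # nanoseconds
        "end": end_ns,
        "limit": limit,
        "direction": "backward",  # newest lines first
    }


def pod_logs_url(namespace: str, pod: str, **kwargs) -> str:
    """Full query_range URL; extra keyword args go to pod_logs_params."""
    params = pod_logs_params(namespace, pod, **kwargs)
    return f"{LOKI}/loki/api/v1/query_range?{urlencode(params)}"
```

Opening `pod_logs_url("logging", "loki-0")` with `urllib.request.urlopen` returns JSON whose `data.result` list holds one entry per matching stream.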

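Deploy the Prometheus monitoring system

Here I created it directly through the Kuboard web UI.

Select the cluster suite

View the suite information

Installation script

Alert delivery configuration

Alert rules

Alert events

Verify

Pick any Pod to inspect

View the workloads

View the network load

Select this one

You can choose the resource

Open it to view the details

View the nodes

Select a node

View the resources of the k8s cluster

Resource monitoring for the whole cluster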

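Deploy the CI/CD Jenkins pipeline

With our services running on the k8s cluster, we want new code pushed by developers to be built and deployed automatically, so we configure an automated build. When developers commit code, they must create a tag. Tag, tag, tag! The pipeline builds whatever tag is passed to it.

Code repository configuration

Write the Dockerfile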

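```dockerfile
# The service is packaged as a jar, so our Dockerfile looks like this
FROM openjdk:8u312-jdk-oracle
# MAINTAINER is deprecated; a LABEL records the same information
LABEL maintainer="yangyu"
ENV JAVA_OPTS='-Xmx512m -Xms256m'
# COPY is preferred over ADD for plain local files
COPY ./keeper-provider/target/keeper-provider.jar /opt/app.jar
EXPOSE 8080
# Shell form so ${JAVA_OPTS} is expanded; exec makes java PID 1 so it receives SIGTERM
ENTRYPOINT exec java ${JAVA_OPTS} -Djava.security.egd=file:/dev/./urandom -Duser.timezone=Asia/Shanghai -Dfile.encoding=UTF-8 -jar /opt/app.jar
```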

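Jenkins configuration

Create the project

Write the configuration

Pipeline syntax

The last stage uses Kuboard: once the Jenkins build finishes, it notifies Kuboard, which updates the Deployment's image tag so the Pods are recreated with the latest image.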

```groovy
pipeline {
    agent any

    stages {
        stage('Pull code') {
            steps {
                script {
                    // Check out the tag the developer pushed; ${tag} is a build parameter
                    checkout([$class: 'GitSCM', branches: [[name: '${tag}']], extensions: [], userRemoteConfigs: [[credentialsId: '100b584e-7f67-466f-8b93-9b9038e117a0', url: 'xxxxxxx']]])
                }
            }
        }

        stage('Compile and package') {
            steps {
                // Every sh step starts in the job workspace (/root/.jenkins/workspace/k8s-pipeline-keeper);
                // a separate sh "cd ..." would not carry over to the next step anyway.
                // "install" already runs the compile and package phases.
                sh "mvn clean install -Dmaven.test.skip=true -Dprofiles.active=stage"
            }
        }

        stage('Build image') {
            steps {
                sh "docker build -t keeper-provider:${tag} ." // the Dockerfile is at the workspace root
            }
        }

        stage('Push image to Harbor') {
            steps {
                sh "docker login --username=xxxx --password=xxx HARBOR_REGISTRY_ADDRESS"
                sh "docker tag keeper-provider:${tag} IMAGE_REPO_ADDRESS:${tag}"
                sh "docker push IMAGE_REPO_ADDRESS:${tag} && docker rmi IMAGE_REPO_ADDRESS:${tag} keeper-provider:${tag}"
            }
        }

        stage('kuboard') {
            steps {
                // Ask Kuboard to switch the Deployment to the new image tag
                sh '''curl -X PUT \\
                    -H "content-type: application/json" \\
                    -H "Cookie: KuboardUsername=admin; KuboardAccessKey=8rex2s2yhmdn.76bwpcmtrypjcwczw58msiiwar3b8364" \\
                    -d \'{"kind":"deployments","namespace":"bimuyu","name":"keeper-provider","images":{"IMAGE_REPO_ADDRESS":"IMAGE_REPO_ADDRESS:\'${tag}\'"}}\' \\
                    "http://192.168.2.216/kuboard-api/cluster/bimuyu/kind/CICDApi/admin/resource/updateImageTag"'''
            }
        }
    }
}
```

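Problem encountered in Jenkins

This form is wrong:

```shell
images":{"IMAGE_REPO_ADDRESS":"IMAGE_REPO_ADDRESS:${tag}"}}\'
```

This escaped form works:

```shell
images":{"IMAGE_REPO_ADDRESS":"IMAGE_REPO_ADDRESS:\'${tag}\'"}}\'
```

The whole `-d` payload is wrapped in single quotes, and the shell never expands `${tag}` inside single quotes, so Kuboard would receive the literal text `${tag}`. Closing the quote just before `${tag}` and reopening it right after lets the shell substitute the tag, which Jenkins passes in as an environment variable.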
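Hand-quoting JSON inside a shell string is easy to get wrong. An alternative sketch, not what our pipeline does: generate the request body with a JSON serializer and let `shlex.quote` produce a safely quoted `-d` argument. `IMAGE_REPO_ADDRESS` is a placeholder for your image repository address; the key/value layout of `images` (image without tag mapped to image with the new tag) is copied from the original `curl` call, as are the namespace and Deployment name:

```python
import json
import shlex

IMAGE_REPO = "IMAGE_REPO_ADDRESS"  # placeholder: your Harbor image repository


def update_image_body(tag: str, namespace: str = "bimuyu",
                      name: str = "keeper-provider") -> str:
    """JSON body for Kuboard's updateImageTag call, same fields as the curl command."""
    body = {
        "kind": "deployments",
        "namespace": namespace,
        "name": name,
        # key: image without tag; value: the same image with the new tag
        "images": {IMAGE_REPO: f"{IMAGE_REPO}:{tag}"},
    }
    return json.dumps(body, ensure_ascii=False)


def curl_data_arg(tag: str) -> str:
    """'-d' plus the body, quoted so /bin/sh passes it through unchanged."""
    return "-d " + shlex.quote(update_image_body(tag))


if __name__ == "__main__":
    print(curl_data_arg("v1.0.3"))
```

The design point is that the tag is inserted before any quoting happens, so even a tag containing a quote character cannot break the shell command.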

Finally, run the build.

Check whether the Pod has changed or been restarted.
