Kubernetes Monitoring and Logging

K8s monitoring and inspection commands


## Viewing cluster resource status


View the status of the master components: kubectl get cs

[root@k8s-master ~]# kubectl get cs

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME STATUS MESSAGE ERROR

scheduler Unhealthy Get "http://127.0.0.1:10251/healthz": dial tcp 127.0.0.1:10251: connect: connection refused

controller-manager Unhealthy Get "http://127.0.0.1:10252/healthz": dial tcp 127.0.0.1:10252: connect: connection refused

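etcd-0 Healthy {"health":"true"}

Note: as the warning says, ComponentStatus is deprecated in v1.19+. The Unhealthy status of scheduler and controller-manager here most likely does not mean they are down: in kubeadm-installed clusters of this version both components run with --port=0, which disables the insecure health ports 10251/10252 that kubectl get cs probes. Their Pods are still Running in the kube-system listing further down.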
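To turn this check into a script, a minimal Python sketch could parse the plain-text table and report every component whose STATUS is not Healthy. It assumes the whitespace-separated layout shown above; unhealthy_components is a hypothetical helper name, and the sample is the output from this cluster:

```python
def unhealthy_components(cs_output: str) -> list[str]:
    """Return the NAME of every row whose STATUS column is not 'Healthy'.

    Assumes the plain-text table printed by `kubectl get cs`.
    """
    names = []
    for line in cs_output.splitlines():
        fields = line.split()
        # Skip blank lines, the header row and the deprecation warning line.
        if len(fields) < 2 or fields[0] in ("NAME", "Warning:"):
            continue
        if fields[1] != "Healthy":
            names.append(fields[0])
    return names


sample = '''Warning: v1 ComponentStatus is deprecated in v1.19+
NAME STATUS MESSAGE ERROR
scheduler Unhealthy Get "http://127.0.0.1:10251/healthz": dial tcp 127.0.0.1:10251: connect: connection refused
controller-manager Unhealthy Get "http://127.0.0.1:10252/healthz": dial tcp 127.0.0.1:10252: connect: connection refused
etcd-0 Healthy {"health":"true"}'''

print(unhealthy_components(sample))  # ['scheduler', 'controller-manager']
```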

View node status: kubectl get node

[root@k8s-master ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-master Ready control-plane,master 130d v1.21.0

k8s-node1 Ready <none> 130d v1.21.0

k8s-node2 Ready <none> 130d v1.21.0
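The text table is easy to read but brittle to parse. A sketch that instead reads the output of kubectl get node -o json and checks each node's Ready condition might look like this; not_ready_nodes is a hypothetical name, and the sample is a made-up, heavily trimmed JSON document (k8s-node2 is marked Unknown only for illustration):

```python
import json


def not_ready_nodes(nodes_json: str) -> list[str]:
    """Return names of nodes whose Ready condition is not "True".

    Expects the JSON printed by `kubectl get node -o json`: a List object
    whose items are Node objects carrying status.conditions.
    """
    names = []
    for node in json.loads(nodes_json).get("items", []):
        conditions = node.get("status", {}).get("conditions", [])
        # A node without any Ready condition is treated as "Unknown".
        ready = next((c.get("status") for c in conditions if c.get("type") == "Ready"), "Unknown")
        if ready != "True":
            names.append(node["metadata"]["name"])
    return names


# Hypothetical, trimmed input for illustration only.
sample = json.dumps({"items": [
    {"metadata": {"name": "k8s-node1"},
     "status": {"conditions": [{"type": "Ready", "status": "True"}]}},
    {"metadata": {"name": "k8s-node2"},
     "status": {"conditions": [{"type": "Ready", "status": "Unknown"}]}},
]})
print(not_ready_nodes(sample))  # ['k8s-node2']
```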

View detailed information about a resource: `kubectl describe <resource-type> <resource-name>`

[root@k8s-master ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

deployment-probe-55bb6bb858-49ftf 1/1 Running 0 6d4h

deployment-probe-55bb6bb858-4kv4d 1/1 Running 0 6d4h

deployment-probe-55bb6bb858-c6gqm 1/1 Running 0 6d4h

nginx-6799fc88d8-vrjdr 1/1 Running 0 6d4h

pod-123-k8s-master 1/1 Running 5 97d

pod-abc-k8s-node1 1/1 Running 4 97d

web-tomcat-69bd46bc65-8qnxn 1/1 Running 0 33m

[root@k8s-master ~]# kubectl describe pod web-tomcat-69bd46bc65-8qnxn

[root@k8s-master ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-master Ready control-plane,master 130d v1.21.0

k8s-node1 Ready <none> 130d v1.21.0

k8s-node2 Ready <none> 130d v1.21.0

[root@k8s-master ~]#

[root@k8s-master ~]# kubectl describe node k8s-master

View resource information: `kubectl get <resource-type> <resource-name>` (add -o wide or -o yaml for more detail)

[root@k8s-master ~]# kubectl get pod    # view Pods

NAME READY STATUS RESTARTS AGE

deployment-probe-55bb6bb858-49ftf 1/1 Running 0 6d4h

deployment-probe-55bb6bb858-4kv4d 1/1 Running 0 6d4h

deployment-probe-55bb6bb858-c6gqm 1/1 Running 0 6d4h

[root@k8s-master ~]# kubectl get pod -o wide    # view Pods with more detail (IP, node)

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

deployment-probe-55bb6bb858-49ftf 1/1 Running 0 6d4h 10.244.36.70 k8s-node1 <none> <none>

[root@k8s-master ~]# kubectl get pod web-tomcat-69bd46bc65-8qnxn -o yaml    # view the Pod's full YAML definition
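The -o wide and -o yaml views expose the same underlying Pod fields. As a sketch, assuming you capture kubectl get pod -o json (the JSON form of -o yaml) as a string, the node, Pod IP and restart count can be pulled out like this; pod_placement is a hypothetical helper, and the sample reuses the values of the -o wide row above with a hypothetical container name:

```python
import json


def pod_placement(pods_json: str) -> list[tuple[str, str, str, int]]:
    """Return (pod name, node, pod IP, total restarts) for each Pod.

    Reads the JSON printed by `kubectl get pod -o json`.
    """
    rows = []
    for pod in json.loads(pods_json).get("items", []):
        spec = pod.get("spec", {})
        status = pod.get("status", {})
        # Sum the restart counts of all containers in the Pod.
        restarts = sum(c.get("restartCount", 0) for c in status.get("containerStatuses", []))
        rows.append((pod["metadata"]["name"],
                     spec.get("nodeName", "<none>"),
                     status.get("podIP", "<none>"),
                     restarts))
    return rows


sample = json.dumps({"items": [{
    "metadata": {"name": "deployment-probe-55bb6bb858-49ftf"},
    "spec": {"nodeName": "k8s-node1"},
    "status": {"podIP": "10.244.36.70",
               "containerStatuses": [{"name": "app", "restartCount": 0}]},  # "app" is hypothetical
}]})
print(pod_placement(sample))
# [('deployment-probe-55bb6bb858-49ftf', 'k8s-node1', '10.244.36.70', 0)]
```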

View Node resource usage: kubectl top node

[root@k8s-master ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-master Ready control-plane,master 130d v1.21.0

k8s-node1 Ready <none> 130d v1.21.0

k8s-node2 Ready <none> 130d v1.21.0

[root@k8s-master ~]# kubectl top node k8s-node1

W0705 06:30:50.192939 60099 top_node.go:119] Using json format to get metrics. Next release will switch to protocol-buffers, switch early by passing --use-protocol-buffers flag

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

k8s-node1 71m 1% 524Mi 27%
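kubectl top node reports CPU in millicores (71m is 0.071 of a core) and memory in binary units (524Mi is 524 × 2^20 bytes). A minimal sketch for turning such rows into plain numbers, assuming only the suffixes handled in the code occur; the helper names are hypothetical:

```python
import re

# Kubernetes quantity suffixes for memory: binary (Ki, Mi, ...) and decimal (k, M, ...).
_BINARY = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}
_DECIMAL = {"k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12}


def memory_bytes(quantity: str) -> int:
    """'524Mi' -> 549453824; integer quantities only."""
    m = re.fullmatch(r"(\d+)([KMGT]i|[kMGT])?", quantity)
    if m is None:
        raise ValueError(f"unsupported memory quantity: {quantity!r}")
    number, suffix = m.groups()
    factor = _BINARY.get(suffix) or _DECIMAL.get(suffix) or 1
    return int(number) * factor


def cpu_millicores(quantity: str) -> int:
    """'71m' -> 71, '2' -> 2000 (1 core = 1000 millicores)."""
    if quantity.endswith("m"):
        return int(quantity[:-1])
    return round(float(quantity) * 1000)


def parse_top_node(output: str) -> dict[str, dict[str, int]]:
    """Parse rows of: NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%."""
    nodes = {}
    for line in output.splitlines():
        fields = line.split()
        # Data rows have exactly 5 columns; this skips the header and warning lines.
        if len(fields) != 5 or fields[0] == "NAME":
            continue
        name, cpu, cpu_pct, mem, mem_pct = fields
        nodes[name] = {
            "cpu_millicores": cpu_millicores(cpu),
            "cpu_percent": int(cpu_pct.rstrip("%")),
            "memory_bytes": memory_bytes(mem),
            "memory_percent": int(mem_pct.rstrip("%")),
        }
    return nodes


sample = """NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%
k8s-node1 71m 1% 524Mi 27%"""
print(parse_top_node(sample))
# {'k8s-node1': {'cpu_millicores': 71, 'cpu_percent': 1, 'memory_bytes': 549453824, 'memory_percent': 27}}
```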

View Pod resource usage: kubectl top pod

[root@k8s-master ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

deployment-probe-55bb6bb858-49ftf 1/1 Running 0 6d4h

deployment-probe-55bb6bb858-4kv4d 1/1 Running 0 6d4h

deployment-probe-55bb6bb858-c6gqm 1/1 Running 0 6d4h

nginx-6799fc88d8-vrjdr 1/1 Running 0 6d4h

pod-123-k8s-master 1/1 Running 5 97d

pod-abc-k8s-node1 1/1 Running 4 97d

web-tomcat-69bd46bc65-8qnxn 1/1 Running 0 40m

[root@k8s-master ~]# kubectl top pod web-tomcat-69bd46bc65-8qnxn

W0705 06:32:51.306247 62211 top_pod.go:140] Using json format to get metrics. Next release will switch to protocol-buffers, switch early by passing --use-protocol-buffers flag

NAME CPU(cores) MEMORY(bytes)

web-tomcat-69bd46bc65-8qnxn 1m 57Mi
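Along the same lines, a short sketch that ranks kubectl top pod rows by memory. It assumes memory values use Ki, Mi or Gi (or plain bytes); pods_by_memory is a hypothetical name, and the second sample row is invented. Recent kubectl versions can also sort directly with kubectl top pod --sort-by=memory.

```python
def pods_by_memory(top_pod_output: str) -> list[tuple[str, int]]:
    """Return (pod name, memory in bytes) from `kubectl top pod` output, largest first."""
    units = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30}
    rows = []
    for line in top_pod_output.splitlines():
        fields = line.split()
        # Data rows are: NAME CPU(cores) MEMORY(bytes); skip the header and warnings.
        if len(fields) != 3 or fields[0] == "NAME":
            continue
        name, _cpu, mem = fields
        suffix = mem[-2:]
        mem_bytes = int(mem[:-2]) * units[suffix] if suffix in units else int(mem)
        rows.append((name, mem_bytes))
    return sorted(rows, key=lambda row: row[1], reverse=True)


# First data row from the output above; the second row is hypothetical.
sample = """NAME CPU(cores) MEMORY(bytes)
web-tomcat-69bd46bc65-8qnxn 1m 57Mi
example-pod 2m 120Mi"""
print(pods_by_memory(sample))
# [('example-pod', 125829120), ('web-tomcat-69bd46bc65-8qnxn', 59768832)]
```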

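If Metrics Server has not been deployed, these commands fail with: error: Metrics API not available. kubectl top gets its data from the metrics-server service, which is not installed by default and has to be deployed manually.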

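Metrics Server is a cluster-wide aggregator of resource usage data, deployed in the cluster as an application. It collects metrics from the Kubelet API on each node and is registered with the Master APIServer through the Kubernetes aggregation layer, providing resource utilization metrics for Nodes and Pods. Project: https://github.com/kubernetes-sigs/metrics-server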

Deploying Metrics Server:

wget https://github.com/kubernetes-sigs/metrics-server/releases/download/v0.3.7/components.yaml

# vi components.yaml
...
containers:
- args:
  - --cert-dir=/tmp
  - --secure-port=4443
  - --kubelet-preferred-address-types=InternalIP
  - --kubelet-use-node-status-port
  - --kubelet-insecure-tls
  image: lizhenliang/metrics-server:v0.4.1

Add the --kubelet-insecure-tls argument, which tells metrics-server not to verify the HTTPS certificates served by the kubelets.

Check whether the deployment succeeded:

kubectl get apiservices |grep metrics

kubectl get --raw /apis/metrics.k8s.io/v1beta1/nodes

If the APIService status is True and the raw query returns data, Metrics Server is working correctly.
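The raw query returns a NodeMetricsList JSON document, which is what kubectl top node formats for you. A sketch that converts it into numbers, assuming the usage values carry the n/u/m CPU suffixes or Ki/Mi/Gi memory suffixes handled below; node_usage and the sample response are hypothetical:

```python
import json


def cpu_millicores(quantity: str) -> int:
    """Convert a CPU quantity such as '71000000n', '250u', '71m' or '2' to millicores."""
    if quantity.endswith("n"):  # nanocores
        return int(quantity[:-1]) // 1_000_000
    if quantity.endswith("u"):  # microcores
        return int(quantity[:-1]) // 1_000
    if quantity.endswith("m"):  # millicores
        return int(quantity[:-1])
    return round(float(quantity) * 1000)  # whole cores


def memory_bytes(quantity: str) -> int:
    """Convert '536576Ki', '524Mi', '1Gi' or a plain byte count to bytes."""
    for suffix, factor in (("Ki", 2**10), ("Mi", 2**20), ("Gi", 2**30)):
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    return int(quantity)


def node_usage(raw: str) -> dict[str, tuple[int, int]]:
    """Map node name -> (CPU millicores, memory bytes) from a NodeMetricsList."""
    return {
        item["metadata"]["name"]: (cpu_millicores(item["usage"]["cpu"]),
                                   memory_bytes(item["usage"]["memory"]))
        for item in json.loads(raw).get("items", [])
    }


# Hypothetical, trimmed response of:
#   kubectl get --raw /apis/metrics.k8s.io/v1beta1/nodes
sample = json.dumps({
    "kind": "NodeMetricsList",
    "apiVersion": "metrics.k8s.io/v1beta1",
    "items": [{"metadata": {"name": "k8s-node1"},
               "usage": {"cpu": "71000000n", "memory": "536576Ki"}}],
})
print(node_usage(sample))  # {'k8s-node1': (71, 549453824)}
```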

## Managing K8s component logs

Components managed by systemd (such as the kubelet):

journalctl -u kubelet.service
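journalctl can also print one JSON object per entry with -o json, for example journalctl -u kubelet.service -o json. A sketch that keeps only warnings and errors, assuming the standard journald fields MESSAGE, PRIORITY and __REALTIME_TIMESTAMP; parse_journal and the two sample entries are hypothetical:

```python
import json
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def parse_journal(lines, max_priority: int = 7):
    """Yield (UTC time, priority, message) from `journalctl -o json` lines.

    Only entries with PRIORITY <= max_priority are kept
    (syslog levels: 3 = err, 4 = warning, 6 = info).
    """
    for line in lines:
        if not line.strip():
            continue
        record = json.loads(line)
        # Assumption: treat a missing PRIORITY as 6 (info).
        priority = int(record.get("PRIORITY", 6))
        if priority > max_priority:
            continue
        message = record.get("MESSAGE", "")
        if isinstance(message, list):  # non-UTF-8 messages are exported as byte arrays
            message = bytes(message).decode("utf-8", errors="replace")
        # __REALTIME_TIMESTAMP is microseconds since the Unix epoch, as a string.
        when = EPOCH + timedelta(microseconds=int(record["__REALTIME_TIMESTAMP"]))
        yield when, priority, message


sample = [
    '{"__REALTIME_TIMESTAMP": "1656979200000000", "PRIORITY": "6", '
    '"_SYSTEMD_UNIT": "kubelet.service", "MESSAGE": "example info line"}',
    '{"__REALTIME_TIMESTAMP": "1656979201000000", "PRIORITY": "3", '
    '"_SYSTEMD_UNIT": "kubelet.service", "MESSAGE": "example error line"}',
]
for when, prio, msg in parse_journal(sample, max_priority=4):
    print(when.isoformat(), prio, msg)
# 2022-07-05T00:00:01+00:00 3 example error line
```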

Components deployed as Pods: kubectl logs kube-proxy-btz4p -n kube-system

[root@k8s-master ~]# kubectl get pod -n kube-system    # -n selects the namespace

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-8db96c76-g4km5 1/1 Running 12 130d

calico-node-4fm62 1/1 Running 11 130d

calico-node-9d44m 1/1 Running 12 130d

calico-node-h44pz 1/1 Running 12 130d

coredns-545d6fc579-cmdzs 1/1 Running 1 6d4h

coredns-545d6fc579-h7fjt 1/1 Running 1 6d4h

etcd-k8s-master 1/1 Running 13 130d

kube-apiserver-k8s-master 1/1 Running 18 130d

kube-controller-manager-k8s-master 1/1 Running 13 130d

kube-proxy-bplr9 1/1 Running 11 130d

kube-proxy-bsghd 1/1 Running 12 130d

kube-proxy-smx8q 1/1 Running 12 130d

kube-scheduler-k8s-master 1/1 Running 14 130d

metrics-server-84f9866fdf-8pglt 1/1 Running 0 6d4h

[root@k8s-master ~]# kubectl logs kube-proxy-bplr9 -n kube-system    # view the logs of a Pod in the specified namespace

I0520 13:10:53.075763 1 node.go:172] Successfully retrieved node IP: 192.168.246.113

I0520 13:10:53.075856 1 server_others.go:140] Detected node IP 192.168.246.113

W0520 13:10:53.075912 1 server_others.go:592] Unknown proxy mode "", assuming iptables proxy

I0520 13:10:53.137692 1 server_others.go:206] kube-proxy running in dual-stack mode, IPv4-primary

I0520 13:10:53.137744 1 server_others.go:212] Using iptables Proxier.

I0520 13:10:53.137755 1 server_others.go:219] creating dualStackProxier for iptables.

W0520 13:10:53.137783 1 server_others.go:506] detect-local-mode set to ClusterCIDR, but no IPv6 cluster CIDR defined, , defaulting to no-op detect-local for IPv6
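These lines use the klog format: a severity letter (I, W, E or F), the month and day, the time, a thread ID, then source file:line] and the message. As a sketch, picking out only the warnings and errors could look like this; klog_problems is a hypothetical name and the sample lines are taken from the output above:

```python
import re

# klog header: Lmmdd hh:mm:ss.uuuuuu threadid file:line] message
KLOG = re.compile(
    r"^(?P<level>[IWEF])(?P<month>\d{2})(?P<day>\d{2})\s+"
    r"(?P<time>\d{2}:\d{2}:\d{2}\.\d{6})\s+(?P<thread>\d+)\s+"
    r"(?P<source>[^\]\s]+)\]\s(?P<message>.*)$"
)


def klog_problems(lines, levels: str = "WEF"):
    """Return (level, source, message) for klog lines at the given severities."""
    found = []
    for line in lines:
        m = KLOG.match(line)
        if m and m["level"] in levels:
            found.append((m["level"], m["source"], m["message"]))
    return found


sample = [
    "I0520 13:10:53.075763 1 node.go:172] Successfully retrieved node IP: 192.168.246.113",
    'W0520 13:10:53.075912 1 server_others.go:592] Unknown proxy mode "", assuming iptables proxy',
    "I0520 13:10:53.137744 1 server_others.go:212] Using iptables Proxier.",
]
for level, source, message in klog_problems(sample):
    print(level, source, message)
# W server_others.go:592 Unknown proxy mode "", assuming iptables proxy
```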

System log: /var/log/messages

## Managing K8s application logs

View a container's standard output log: `kubectl logs <pod-name>`

`kubectl logs -f <pod-name>`    # -f follows the log and keeps printing new lines

[root@k8s-master ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

deployment-probe-55bb6bb858-49ftf 1/1 Running 0 6d4h

deployment-probe-55bb6bb858-4kv4d 1/1 Running 0 6d4h

deployment-probe-55bb6bb858-c6gqm 1/1 Running 0 6d4h

nginx-6799fc88d8-vrjdr 1/1 Running 0 6d4h

pod-123-k8s-master 1/1 Running 5 97d

pod-abc-k8s-node1 1/1 Running 4 97d

web-tomcat-69bd46bc65-8qnxn 1/1 Running 0 51m

[root@k8s-master ~]# kubectl logs web-tomcat-69bd46bc65-8qnxn

[root@k8s-master ~]# kubectl logs -f web-tomcat-69bd46bc65-8qnxn

Location of the standard output logs on the host: /var/lib/docker/containers//-json.log

[root@k8s-master ~]# cd /var/lib/docker/containers/74ef260c6ff15bb3245c9629446453e91a487bf990af29a4f90ead081ce1b98b/

checkpoints/ mounts/

[root@k8s-master ~]# cd /var/lib/docker/containers/

[root@k8s-master containers]# ls

01d0421aaed57e5bc3e3e17389a7536914386d388bc97da407899c297a964515

107b5f4467be48e01e2e6f68183ab8902549387120b0e873161659efc0562d84

11c90a440fd53a62f90548e929fadbbb03baffbdb6a3c4c8b423561de3585139

11dfb1afebebbabe59bdfd2b3ca762a2b8fa2257a85f57f3950bb1460a0862a3

14ad5b67259a3024c5e8c49704bbff08e0d0555f049f8bd025597b73f4cc45a6

18dde659445074d64be0e7d69bc2392618c6a44a7196ba09c169a01e5cb9aab7

1baeecee157378939e9bc03b3bca8ccb88433916225a289da3f4866e4ed82c5f

1bd6fac24a30b76801a78e3d2169fc453f8f4c7728b86a958104e4c154757489
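Assuming Docker's default json-file logging driver, each line of a container's *-json.log file is a JSON object with the keys log, stream and time. A minimal reader sketch (read_docker_json_log and the sample lines are hypothetical):

```python
import json


def read_docker_json_log(lines, stream=None):
    """Yield (time, stream, text) from a Docker json-file log.

    Each line is a JSON object with "log" (raw output, usually ending in a
    newline), "stream" ("stdout" or "stderr") and "time" (RFC 3339).
    Pass stream="stdout" or "stderr" to keep only one stream.
    """
    for line in lines:
        if not line.strip():
            continue
        entry = json.loads(line)
        if stream is not None and entry.get("stream") != stream:
            continue
        yield entry.get("time", ""), entry.get("stream", ""), entry.get("log", "").rstrip("\n")


# Hypothetical log lines in the json-file format.
sample = [
    '{"log":"example stderr line\\n","stream":"stderr","time":"2022-07-05T06:30:50.192939123Z"}',
    '{"log":"hello from stdout\\n","stream":"stdout","time":"2022-07-05T06:30:51.000000000Z"}',
]
for t, s, text in read_docker_json_log(sample, stream="stdout"):
    print(t, s, text)
# 2022-07-05T06:30:51.000000000Z stdout hello from stdout
```

To read a real file, pass an open file object instead of the list, e.g. with open(path) as f, where path (hypothetical) points at the log file inside one of the container directories listed above.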

For log files written inside a container, open a shell in the container and inspect its log directory: kubectl exec -it -- bash

[root@k8s-master ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

deployment-probe-55bb6bb858-49ftf 1/1 Running 0 6d4h

deployment-probe-55bb6bb858-4kv4d 1/1 Running 0 6d4h

deployment-probe-55bb6bb858-c6gqm 1/1 Running 0 6d4h

nginx-6799fc88d8-vrjdr 1/1 Running 0 6d4h

pod-123-k8s-master 1/1 Running 5 97d

pod-abc-k8s-node1 1/1 Running 4 97d

web-tomcat-69bd46bc65-8qnxn 1/1 Running 0 56m

[root@k8s-master ~]# kubectl exec -it web-tomcat-69bd46bc65-8qnxn bash

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

root@web-tomcat-69bd46bc65-8qnxn:/usr/local/tomcat# cd

root@web-tomcat-69bd46bc65-8qnxn:~# ls

root@web-tomcat-69bd46bc65-8qnxn:~# cd /

root@web-tomcat-69bd46bc65-8qnxn:/# ls

bin boot dev etc home lib lib64 media mnt opt proc root run sbin srv sys tmp usr var

## Collecting K8s logs

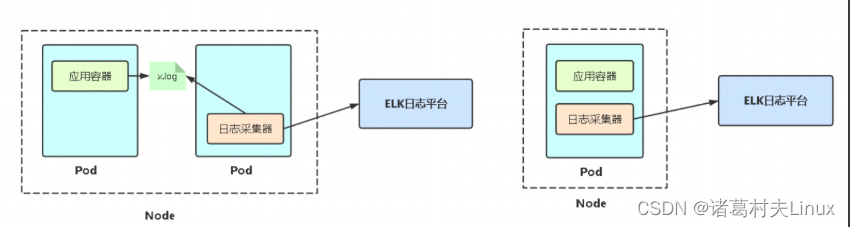

For standard output: deploy a log collector on every Node as a DaemonSet, collecting the logs of all containers under /var/lib/docker/containers/.
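A sketch of such a DaemonSet, generated as JSON from Python so it can be piped into kubectl apply -f -. The name log-collector, the placeholder image example/log-collector:1.0 and the master toleration are assumptions; a real collector also needs its own configuration:

```python
import json


def log_collector_daemonset(image: str, namespace: str = "kube-system") -> dict:
    """Build a DaemonSet that runs one log-collector Pod on every node.

    The host directory /var/lib/docker/containers is mounted read-only so the
    collector can read every container's stdout/stderr log file.
    """
    labels = {"app": "log-collector"}
    return {
        "apiVersion": "apps/v1",
        "kind": "DaemonSet",
        "metadata": {"name": "log-collector", "namespace": namespace},
        "spec": {
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    # Assumption: tolerate the master taint (kubeadm clusters of
                    # this version taint the master NoSchedule) so the master is covered too.
                    "tolerations": [{"key": "node-role.kubernetes.io/master",
                                     "operator": "Exists", "effect": "NoSchedule"}],
                    "containers": [{
                        "name": "collector",
                        "image": image,
                        "volumeMounts": [{"name": "docker-containers",
                                          "mountPath": "/var/lib/docker/containers",
                                          "readOnly": True}],
                    }],
                    "volumes": [{"name": "docker-containers",
                                 "hostPath": {"path": "/var/lib/docker/containers"}}],
                },
            },
        },
    }


# "example/log-collector:1.0" is a placeholder image name.
manifest = log_collector_daemonset("example/log-collector:1.0")
print(json.dumps(manifest, indent=2))
```

Usage, with a hypothetical file name: python3 log_daemonset.py | kubectl apply -f -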

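For log files inside containers: add a container running a log collector to the Pod, and share the log directory through an emptyDir volume so that the collector can read the log files.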
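A sketch of this sidecar pattern as a Pod manifest built in Python: both containers mount the same emptyDir volume, the application read-write and the collector read-only. The Pod name, the placeholder collector image and the choice of /usr/local/tomcat/logs (Tomcat's default log directory, under the tomcat working directory seen earlier) are assumptions:

```python
import json


def pod_with_log_sidecar(app_image: str, collector_image: str, log_dir: str) -> dict:
    """Build a Pod whose app container and log-collector container share log_dir.

    The emptyDir volume lives as long as the Pod: the app writes log files
    into it and the collector mounts the same volume read-only to read them.
    """
    shared = {"name": "app-logs", "mountPath": log_dir}
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": "web-with-log-collector"},
        "spec": {
            "containers": [
                {"name": "app", "image": app_image, "volumeMounts": [shared]},
                {"name": "log-collector", "image": collector_image,
                 "volumeMounts": [dict(shared, readOnly=True)]},
            ],
            "volumes": [{"name": "app-logs", "emptyDir": {}}],
        },
    }


# The collector image is a placeholder.
pod = pod_with_log_sidecar("tomcat", "example/log-collector:1.0", "/usr/local/tomcat/logs")
print(json.dumps(pod, indent=2))
```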
