[PySpark] Converting a list to a DataFrame fails with TypeError: not supported type: <class 'numpy.float64'>


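DataFrames are used constantly in PySpark. This post covers a data type problem that can come up when converting a list to a DataFrame.

Suppose we have the following list:

[(22.31670676205784, 15.00427254361571, 14.274554462639939, -48.011495169271186)]

Normally you would write: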

    #!/usr/bin/python
    # -*- coding: utf-8 -*-
    import numpy as np
    from pyspark.sql import SparkSession

    spark = SparkSession \
        .builder \
        .master("yarn") \
        .appName('create_df_test2') \
        .enableHiveSupport() \
        .getOrCreate()

    # Assumption: the values are wrapped in np.float64 here to reproduce the
    # error from the title. In practice they typically come out of a NumPy
    # computation and can print like ordinary floats.
    re = [tuple(np.float64(v) for v in
                (22.31670676205784, 15.00427254361571, 14.274554462639939, -48.011495169271186))]
    print(re)
    print(type(re))  # the container is a plain list; the elements are the problem

    # Fails here with: TypeError: not supported type: <class 'numpy.float64'>
    df_re = spark.createDataFrame(re, ['r1', 'r2', 'r3', 'r'])

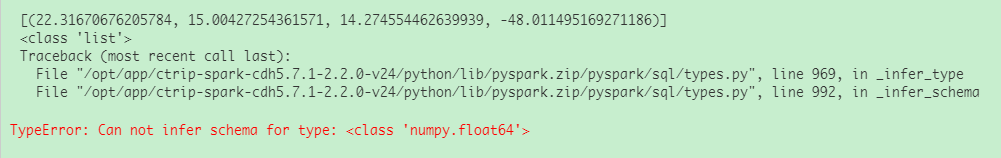

由于re中的数据,其实都是float类型的,直接这样写会报错,如下:
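Because printing the list may not reveal the element types, it can help to inspect them explicitly before calling createDataFrame. The helper below is a minimal sketch; the name `field_types` is hypothetical and not part of PySpark or NumPy.

```python
def field_types(rows):
    """Return the sorted, fully qualified type names of all fields in the rows."""
    return sorted(
        {f"{type(v).__module__}.{type(v).__qualname__}" for row in rows for v in row}
    )


# Built-in values report the "builtins" module.
print(field_types([(22.31670676205784, 15)]))
```

A field that came from NumPy would be listed with the `numpy` module instead of `builtins` (for example `numpy.float64`), which is a hint that it should be converted before the list is handed to Spark.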

The fix is to convert every value to a built-in Python float before creating the DataFrame:

    spark = SparkSession \
        .builder \
        .master("yarn") \
        .appName('create_df_test2') \
        .enableHiveSupport() \
        .getOrCreate()

    # Assumption: np.float64 values stand in for data produced by NumPy.
    re = [tuple(np.float64(v) for v in
                (22.31670676205784, 15.00427254361571, 14.274554462639939, -48.011495169271186))]
    print(re)
    print(type(re))

    # float() turns each numpy.float64 into a built-in float that Spark can infer.
    df_re = spark.createDataFrame(
        [(float(tup[0]), float(tup[1]), float(tup[2]), float(tup[3])) for tup in re],
        ['r1', 'r2', 'r3', 'r'])

With this conversion the DataFrame is created as expected.
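When rows have many columns or mix floats and integers, writing float(tup[i]) for each field gets tedious. The sketch below generalizes the same idea, assuming the offending values are NumPy scalars, which expose an `.item()` method that returns the equivalent built-in Python object. The names `to_native` and `to_native_rows` are hypothetical helpers, not PySpark or NumPy APIs.

```python
def to_native(value):
    """Return a built-in Python scalar for NumPy scalars, else the value unchanged."""
    # NumPy scalars (numpy.float64, numpy.int64, ...) provide .item();
    # built-in int, float and str do not, so they pass through untouched.
    item = getattr(value, "item", None)
    return item() if callable(item) else value


def to_native_rows(rows):
    """Apply to_native to every field of every row and return a list of tuples."""
    return [tuple(to_native(v) for v in row) for row in rows]
```

The converted rows can then be passed on in place of the original list, for example `spark.createDataFrame(to_native_rows(re), ['r1', 'r2', 'r3', 'r'])`. The check is duck-typed, so any other object with a callable `item` attribute would also be converted; a stricter variant could test `isinstance(value, numpy.generic)` instead.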
