Containerized deployment of OpenStack Train with Kolla, using a Ceph backend


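Introduction to Kolla

OpenStack has long been criticized for being hard to deploy and hard to upgrade, and a simple, efficient way to do both is a common need of all OpenStack users (cloud service providers, customers, and developers). The Kolla project was created to meet that need: building on community best practices, it provides a reliable, scalable, production-grade scheme for deploying OpenStack services in containers.

In short, Kolla is a DevOps tool that takes full advantage of containers to deploy OpenStack services.

Containers are well known for making application deployment agile and scalable, and Docker and Kubernetes are the most popular choices and the main standards for building containerized applications. To cover different scenarios, the community split the Kolla project into three components: Kolla continues to build the container images, while Kolla-ansible and Kolla-kubernetes handle automated deployment and management of the containers. The three cooperate but also stand on their own, and this loose coupling makes it easier to cover a wide range of user needs.

Kolla: container image building

Kolla-ansible: container deployment

Kolla-kubernetes: container deployment and management

Kolla's most notable features are "works out of the box" and "easy upgrades". The former is backed by the automation of the orchestration tools; the latter comes entirely from container technology. Kolla aims to build a container image for every OpenStack service, which reduces the granularity of upgrade and rollback (the isolated set of dependencies) to the service level and makes each operation atomic. A version upgrade takes only three steps:

Pull New Docker Image

Stop Old Docker Container

Start New Docker Container

If the upgrade fails, rolling back is simply a matter of starting the old Docker container again.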
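The three upgrade steps and the rollback path can be sketched in shell. This is only an illustration under stated assumptions, not how Kolla-ansible implements upgrades (it drives them through Ansible playbooks). The function name `upgrade_container`, the `_new` suffix, and the `DOCKER` override variable are hypothetical names introduced here.

```shell
#!/usr/bin/env bash
# Hypothetical helper, not part of Kolla: upgrade one container with rollback.
# DOCKER can be overridden (DOCKER=echo) to print the commands instead of running them.
DOCKER="${DOCKER:-docker}"

upgrade_container() {
    local name="$1" new_image="$2"
    shift 2                                   # remaining args are passed to `docker run`

    $DOCKER pull "$new_image" || return 1     # 1. pull the new image
    $DOCKER stop "$name" || return 1          # 2. stop the old container (kept for rollback)

    # 3. start the new container under a temporary name
    if $DOCKER run -d --name "${name}_new" "$@" "$new_image"; then
        $DOCKER rm "$name"                    # success: drop the old container
        $DOCKER rename "${name}_new" "$name"  # and take over its name
    else
        $DOCKER rm -f "${name}_new" 2>/dev/null
        $DOCKER start "$name"                 # rollback: start the old container again
        return 2
    fi
}
```

Setting `DOCKER=echo` before calling `upgrade_container web new:2 -p 80:80` gives a dry run that only prints the Docker commands in order.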

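As the latest Kolla Queens release shows, Kolla has been working toward the goal of moving "from upgrades to zero-downtime upgrades": it now supports minimal-downtime upgrades for the Cinder and Keystone projects, and this is being extended to other projects.

In my view, the release of zero-downtime upgrades is a milestone for Kolla. Previously, the upgrades Kolla offered still involved downtime, so they never really addressed users' pain point and only scratched an itch (making deployment and upgrades more convenient). The real pain point is how to upgrade the infrastructure management platform in production while keeping user workloads continuous and available. Solving it would strengthen the bond between users and the OpenStack community, letting users keep pace with the community and continuously adopt new features. Kolla may now not be far from that goal.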

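Planning

Host planning

Prepare the 10 hosts listed below, each with 4 CPU cores, 8 GB RAM, and a 50 GB disk; the OpenStack nodes also need 3 NICs. The image registry host needs an extra 30 GB or more for image storage, and each Ceph node gets three additional 50 GB disks for Ceph storage.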

| Hostname | Address | OS | Role | Spec |
|---|---|---|---|---|
| registry | 172.30.82.129 | centos7.7 | Docker image registry | 4C + 8G + 50G + 30G disk |
| kolla-deploy | 172.30.82.130 | centos7.7 | Kolla deployment host + Ceph deployment host | 4C + 8G + 50G |
| c1 | 172.30.82.131 | centos7.7 | OpenStack control node 1 | 4C + 8G + 50G + 3 NICs |
| c2 | 172.30.82.132 | centos7.7 | Control node 2 | 4C + 8G + 50G + 3 NICs |
| c3 | 172.30.82.133 | centos7.7 | Control node 3 | 4C + 8G + 50G + 3 NICs |
| n1 | 172.30.82.134 | centos7.7 | Network node 1 | 4C + 8G + 50G + 3 NICs |
| n2 | 172.30.82.135 | centos7.7 | Network node 2 | 4C + 8G + 50G + 3 NICs |
| s1 | 172.30.82.136 | centos7.7 | Compute node 1, storage node 1 | 4C + 8G + 50G + 3 NICs + 50G x 3 |
| s2 | 172.30.82.137 | centos7.7 | Compute node 2, storage node 2 | 4C + 8G + 50G + 3 NICs + 50G x 3 |
| s3 | 172.30.82.138 | centos7.7 | Compute node 3, storage node 3 | 4C + 8G + 50G + 3 NICs + 50G x 3 |
| v | 172.30.82.139 | centos7.7 | OpenStack high-availability virtual IP | |

Network planning

| Hostname | NIC | IP address | Purpose |
|---|---|---|---|
| c1 | ens192 | 172.30.82.131 | Management network |
| c1 | ens224 | (none) | Business network |
| c1 | ens256 | 192.168.255.11 | Storage network |
| c2 | ens192 | 172.30.82.132 | Management network |
| c2 | ens224 | (none) | Business network |
| c2 | ens256 | 192.168.255.12 | Storage network |
| c3 | ens192 | 172.30.82.133 | Management network |
| c3 | ens224 | (none) | Business network |
| c3 | ens256 | 192.168.255.13 | Storage network |
| n1 | ens192 | 172.30.82.134 | Management network |
| n1 | ens224 | (none) | Business network |
| n1 | ens256 | 192.168.255.21 | Storage network |
| n2 | ens192 | 172.30.82.135 | Management network |
| n2 | ens224 | (none) | Business network |
| n2 | ens256 | 192.168.255.22 | Storage network |
| s1 | ens192 | 172.30.82.136 | Management network |
| s1 | ens224 | (none) | Business network |
| s1 | ens256 | 192.168.255.1 | Storage network |
| s2 | ens192 | 172.30.82.137 | Management network |
| s2 | ens224 | (none) | Business network |
| s2 | ens256 | 192.168.255.2 | Storage network |
| s3 | ens192 | 172.30.82.138 | Management network |
| s3 | ens224 | (none) | Business network |
| s3 | ens256 | 192.168.255.3 | Storage network |

环境准备

1. 设置IP地址及主机名

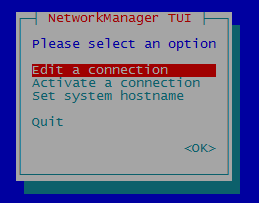

使用nmtui配置工具设置网卡地址及主机名

第一块网卡修改profile name和ip地址

第二块网卡修改profile name,不配置地址

2. 设置主机名

hostnamectl set-hostname --static server-xx

3. 配置 host 解析

cat << EOF >> /etc/hosts

172.30.82.129 dev.registry.io

172.30.82.130 deploy

172.30.82.131 c1

172.30.82.132 c2

172.30.82.133 c3
172.30.82.134 n1
172.30.82.135 n2

172.30.82.136 s1

172.30.82.137 s2

172.30.82.138 s3

EOF

for line in `cat /etc/hosts | sed 1,2d | awk '{print $2}' `

do

echo $line "scp /etc/hosts"

scp /etc/hosts $line:/etc/

done
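The loops in this article pick host names with `sed 1,2d`, which assumes the first two lines of /etc/hosts are exactly the two localhost entries. A slightly more defensive variant, shown as a sketch, filters by content instead of by line position. The function name `list_hosts` is a hypothetical helper introduced here.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the host names (second column) of a hosts file,
# skipping blank lines, comment lines, and the loopback entries.
list_hosts() {
    awk 'NF >= 2 && $1 !~ /^#/ && $1 != "127.0.0.1" && $1 != "::1" { print $2 }' \
        "${1:-/etc/hosts}"
}

# Possible use, mirroring the loop above (left commented out on purpose):
# for host in $(list_hosts); do
#     scp /etc/hosts "$host":/etc/
# done
```

Because the filter does not depend on line numbers, extra comments or blank lines at the top of the file do not shift the result.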

4. SSH key login

Generate a key pair

[root@localhost ~]# ssh-keygen

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:0vbfeOZHrZphilfPCZ2uhivIySkvVQkUJIoBXTc5A70 root@localhost.localdomain

The key's randomart image is:

+---[RSA 2048]----+

|+. .+=*o |

| o.. o*. |

|. . = . |

| E.o |

| ..S . ..|

| .o . o o o|

| + + .oo= + |

| o * ..o+.=O .|

| +. .ooo**o. |

+----[SHA256]-----+

[root@localhost .ssh]# cd ~/.ssh

[root@localhost .ssh]# pwd

/root/.ssh

[root@localhost .ssh]# ls -al

total 8

drwxr-xr-x. 2 root root 38 Apr 19 23:40 .

dr-xr-x---. 5 root root 194 Apr 19 23:40 ..

-rw------- 1 root root 1679 Apr 19 23:37 id_rsa

-rw-r--r-- 1 root root 408 Apr 19 23:37 id_rsa.pub

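Copy the public key to each host

ssh-copy-id c1

With many machines a script is more convenient; change the password in it to your own. The heredoc delimiter is quoted so that the shell does not expand $f and $ip while writing the file.

cat << 'EOF' > ~/auto_ssh.exp
#!/usr/bin/expect
# Run ssh-keygen and accept the defaults; comment this block out if a key already exists
spawn ssh-keygen
expect "id_rsa"
send "\r"
expect "phrase"
send "\r"
expect "again"
send "\r"
expect eof
# Set up trust with every host listed in the file auto_ssh_host_ip (one per line); the password is assumed to be "123123"
set f [open auto_ssh_host_ip r]
while { [gets $f ip] >= 0 } {
    spawn ssh-copy-id $ip
    expect {
        "*yes/no" {send "yes\r"; exp_continue}
        "*password:" {send "123123\r"; exp_continue}
    }
}
close $f
EOF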

5. Switch the default yum repositories

Within China, the Aliyun mirrors are faster.

for line in `cat /etc/hosts | sed 1,2d | awk '{print $2}' `

do

echo $line

ssh $line "yum install -y wget"

ssh $line "mv /etc/yum.repos.d/CentOS-Base.repo /etc/yum.repos.d/CentOS-Base.repo.backup"

ssh $line "wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo"

ssh $line "wget -P /etc/yum.repos.d/ https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo"

ssh $line "wget -O /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo"

ssh $line 'cat << EOF > /etc/yum.repos.d/ceph.repo

[Ceph]

name=Ceph packages for \$basearch

baseurl=https://mirrors.aliyun.com/ceph/rpm-mimic/el7/\$basearch

enabled=1

gpgcheck=1

type=rpm-md

gpgkey=https://mirrors.aliyun.com/ceph/keys/release.asc

priority=1

[Ceph-noarch]

name=Ceph noarch packages

baseurl=https://mirrors.aliyun.com/ceph/rpm-mimic/el7/noarch

enabled=1

gpgcheck=1

type=rpm-md

gpgkey=https://mirrors.aliyun.com/ceph/keys/release.asc

priority=1

[ceph-source]

name=Ceph source packages

baseurl=https://mirrors.aliyun.com/ceph/rpm-mimic/el7/SRPMS

enabled=1

gpgcheck=1

type=rpm-md

gpgkey=https://mirrors.aliyun.com/ceph/keys/release.asc

priority=1

EOF

'

ssh $line "yum clean all"

ssh $line "yum makecache"

done

6. Set the time zone

for line in `cat /etc/hosts | sed 1,2d | awk '{print $2}' `

do

echo $line "ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime"

ssh $line "ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime"

done

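7. Configure NTP

My environment runs on virtual machines whose clocks are synchronized with the host, so this step is skipped here.

8. Disable SELinux and the firewall

# The libvirtd package conflicts with the software to be installed, so stop it first.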

for line in `cat /etc/hosts | sed 1,2d | awk '{print $2}' `

do

echo $line

ssh $line "setenforce 0"

ssh $line "sed -i 's/^SELINUX=.*$/SELINUX=disabled/' /etc/selinux/config"

ssh $line "systemctl stop firewalld && systemctl disable firewalld"

ssh $line "systemctl stop libvirtd.service && systemctl disable libvirtd.service"

done

9. Switch the pip index

for line in `cat /etc/hosts | sed 1,2d | awk '{print $2}' `

do

echo $line

ssh $line "mkdir -p ~/.pip"

ssh $line "cat << EOF > ~/.pip/pip.conf

[global]

index-url =https://mirrors.aliyun.com/pypi/simple

EOF"

done

10. Switch the Python version on the deploy host (deploy host only)

Check the current Python version on deploy

python -V

Install Python 3

yum install -y python3 python3-devel

Replace the default python

rm /usr/bin/python -f

ln -s /usr/bin/python3 /usr/bin/python

sed -i '1s/python/python2/' /usr/bin/yum

sed -i '1s/python/python2/' /usr/libexec/urlgrabber-ext-down

11. Install Docker

Install Docker and configure shared mounts for Docker

for line in `cat /etc/hosts | sed 1,2d | awk '{print $2}' `

do

echo $line

ssh $line "yum install docker-ce -y"

ssh $line "mkdir -p /etc/systemd/system/docker.service.d"

ssh $line "tee /etc/systemd/system/docker.service.d/kolla.conf << 'EOF'

[Service]

MountFlags=shared

EOF"

done

Setting up a private Docker image registry (run on the registry host)

- mount -t ceph 172.30.82.100,172.30.82.101,172.30.82.48,172.30.82.49:6789:/registrydata/docker /var/lib/docker/volumes/registry/_data/docker/ -o name=docker-io,secret=AQB1PrFdXYJgJhAAm8wi6hVhZqLFeJm4qY+n6A==,mds_namespace=k8sio

1. Create the CA certificate

[root@registry ~]#yum install -y openssl

[root@registry ~]#mkdir -p ~/certs && openssl req -newkey rsa:4096 -nodes -sha256 -keyout ~/certs/domain.key -x509 -days 365 -out ~/certs/domain.crt

Generating a 4096 bit RSA private key

.......................................................................................++

......++

writing new private key to '/root/certs/domain.key'

-----

You are about to be asked to enter information that will be incorporated

into your certificate request.

What you are about to enter is what is called a Distinguished Name or a DN.

There are quite a few fields but you can leave some blank

For some fields there will be a default value,

If you enter '.', the field will be left blank.

-----

Country Name (2 letter code) [XX]:

State or Province Name (full name) []:

Locality Name (eg, city) [Default City]:

Organization Name (eg, company) [Default Company Ltd]:

Organizational Unit Name (eg, section) []:

Common Name (eg, your name or your server's hostname) []:dev.registry.io

Email Address []:

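Note that the Common Name field must be set to the domain name of the private registry; using a domain name rather than an IP address is recommended.

2. Pull the image and extract its configuration file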

docker run -it --rm --entrypoint cat registry:2 /etc/docker/registry/config.yml > ~/config.yml

3. Edit the config.yml configuration file

version: 0.1
log:
  fields:
    service: registry
storage:
  cache:
    blobdescriptor: inmemory
  filesystem:
    rootdirectory: /var/lib/registry
http:
  addr: :5000
  headers:
    X-Content-Type-Options: [nosniff]
  tls:
    certificate: /var/lib/registry/domain.crt
    key: /var/lib/registry/domain.key
health:
  storagedriver:
    enabled: true
    interval: 10s
    threshold: 3
proxy:
  remoteurl: https://iw3lcsa3.mirror.aliyuncs.com

4. Copy the CA certificate and the configuration file

Copy the configuration file to the directory mapped on the host

mkdir -p /var/lib/docker/volumes/registry/_data/

cp ~/certs/domain.* ~/config.yml /var/lib/docker/volumes/registry/_data/

mkdir -p /etc/docker/certs.d/dev.registry.io

cp ~/certs/domain.crt /etc/docker/certs.d/dev.registry.io/ca.crt

5. Mount the image storage disk

[root@registry ~]# fdisk -l

Disk /dev/sda: 53.7 GB, 53687091200 bytes, 104857600 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk label type: dos

Disk identifier: 0x00006a44

Device Boot Start End Blocks Id System

/dev/sda1 * 2048 2099199 1048576 83 Linux

/dev/sda2 2099200 104857599 51379200 8e Linux LVM

Disk /dev/sdb: 214.7 GB, 214748364800 bytes, 419430400 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Here I added a new 200 GB disk, sdb, as the backing storage.

[root@registry ~]# pvcreate /dev/sdb

Physical volume "/dev/sdb" successfully created.

[root@registry ~]# vgcreate registry_vg /dev/sdb

Volume group "registry_vg" successfully created

[root@registry ~]# lvcreate -L 100G -n registry_data registry_vg

Logical volume "registry_data" created.

[root@registry ~]# mkfs.xfs /dev/mapper/registry_vg-registry_data

meta-data=/dev/mapper/registry_vg-registry_data isize=512 agcount=4, agsize=6553600 blks

= sectsz=512 attr=2, projid32bit=1

= crc=1 finobt=0, sparse=0

data = bsize=4096 blocks=26214400, imaxpct=25

= sunit=0 swidth=0 blks

naming =version 2 bsize=4096 ascii-ci=0 ftype=1

log =internal log bsize=4096 blocks=12800, version=2

= sectsz=512 sunit=0 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

[root@registry ~]# blkid

/dev/sda1: UUID="ee37bacd-39f1-4ee6-bbf7-0d7f76736c8a" TYPE="xfs"

/dev/sda2: UUID="2EKK8t-ciDH-cxcz-rloF-F7Eh-ynyD-r5Xxmy" TYPE="LVM2_member"

/dev/sr0: UUID="2019-09-09-19-08-41-00" LABEL="CentOS 7 x86_64" TYPE="iso9660" PTTYPE="dos"

/dev/sdb: UUID="0n3cJT-ci8Y-x0qW-zflf-Os2x-OKCf-oFY2e0" TYPE="LVM2_member"

/dev/mapper/centos-root: UUID="eadccf3c-7cd5-4036-aae9-bc36782e1a7e" TYPE="xfs"

/dev/mapper/centos-swap: UUID="87ff1029-dece-4845-8c91-4f5e80e13caa" TYPE="swap"

/dev/mapper/registry_vg-registry_data: UUID="c8af7978-1e84-45ff-bac6-1abeda020ccf" TYPE="xfs"

[root@registry ~]#mkdir -p /var/lib/docker/volumes/registry/_data/docker

[root@registry ~]#vi /etc/fstab

#

# /etc/fstab

# Created by anaconda on Thu Apr 9 05:26:59 2020

#

# Accessible filesystems, by reference, are maintained under '/dev/disk'

# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info

#

/dev/mapper/centos-root / xfs defaults 0 0

UUID=ee37bacd-39f1-4ee6-bbf7-0d7f76736c8a /boot xfs defaults 0 0

/dev/mapper/centos-swap swap swap defaults 0 0

UUID=c8af7978-1e84-45ff-bac6-1abeda020ccf /var/lib/docker/volumes/registry/_data/docker xfs defaults 0 0

[root@registry ~]#mount -a

6. Run the registry container

docker run -d --restart=always -p 443:5000 --name registry -v registry:/var/lib/registry registry:2 /var/lib/registry/config.yml

7. Verify access

[root@registry ~]# curl -Ik https://dev.registry.io

HTTP/2 200

cache-control: no-cache

date: Thu, 25 Apr 2019 11:03:53 GMT

Distribute the CA certificate file

for line in `cat /etc/hosts | sed 1,2d | awk '{print $2}' `

do

echo $line

ssh $line "mkdir -p /etc/docker/certs.d/dev.registry.io"

scp /etc/docker/certs.d/dev.registry.io/ca.crt $line:/etc/docker/certs.d/dev.registry.io/

done

Verification

The expected result is that images can be pulled normally:

[root@c1 ~]# docker pull dev.registry.io/library/ubuntu

Using default tag: latest

latest: Pulling from library/ubuntu

5bed26d33875: Pull complete

f11b29a9c730: Pull complete

930bda195c84: Pull complete

78bf9a5ad49e: Pull complete

Digest: sha256:bec5a2727be7fff3d308193cfde3491f8fba1a2ba392b7546b43a051853a341d

Status: Downloaded newer image for dev.registry.io/library/ubuntu:latest

dev.registry.io/library/ubuntu:latest

[root@c1 ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

dev.registry.io/library/ubuntu latest 4e5021d210f6 4 weeks ago 64.2MB

Deploying Ceph

1. Reload the Docker service

for line in s1 s2 s3

do

echo $line

ssh $line "systemctl daemon-reload && systemctl enable docker && systemctl restart docker"

done

2. Pull the Docker image

for line in s1 s2 s3

do

echo $line

ssh $line "docker pull dev.registry.io/ceph/daemon:latest-mimic"

done

3. Create the directories

for line in s1 s2 s3

do

echo $line

ssh $line "mkdir -p /etc/ceph"

ssh $line "mkdir -p /var/lib/ceph/"

ssh $line "mkdir -p /var/log/ceph"

ssh $line "chmod -R 777 /var/log/ceph"

done

4. Start the first Ceph monitor (mon)

docker run -d --net=host --name=mon \
    -v /etc/ceph:/etc/ceph \
    -v /var/lib/ceph:/var/lib/ceph \
    -v /var/log/ceph:/var/log/ceph \
    --privileged=true \
    -e MON_IP=172.30.82.136 \
    -e CEPH_CLUSTER_NETWORK=192.168.255.0/24 \
    -e CEPH_PUBLIC_NETWORK=172.30.82.0/24 \
    dev.registry.io/ceph/daemon:latest-mimic mon

This generates the initial Ceph files in the /etc/ceph directory:

[root@s1 ceph]# ll /etc/ceph

total 12

-rw------- 1 167 167 151 Apr 24 17:00 ceph.client.admin.keyring

-rw-r--r-- 1 root root 195 Apr 24 17:00 ceph.conf

-rw------- 1 167 167 228 Apr 24 17:00 ceph.mon.keyring

Copy these files to s2 and s3.

5. Start mgr

Start the mgr service on the first node

docker run -d --net=host --name=mgr \
    -v /etc/ceph:/etc/ceph \
    -v /var/lib/ceph:/var/lib/ceph \
    -v /var/log/ceph:/var/log/ceph \
    --privileged=true \
    dev.registry.io/ceph/daemon:latest-mimic mgr

6. Start the mon and mgr processes on s2 and s3

S2:

docker run -d --net=host --name=mon \
    -v /etc/ceph:/etc/ceph \
    -v /var/lib/ceph:/var/lib/ceph \
    -v /var/log/ceph:/var/log/ceph \
    --privileged=true \
    -e MON_IP=172.30.82.137 \
    -e CEPH_CLUSTER_NETWORK=192.168.255.0/24 \
    -e CEPH_PUBLIC_NETWORK=172.30.82.0/24 \
    dev.registry.io/ceph/daemon:latest-mimic mon

docker run -d --net=host --name=mgr \
    -v /etc/ceph:/etc/ceph \
    -v /var/lib/ceph:/var/lib/ceph \
    -v /var/log/ceph:/var/log/ceph \
    --privileged=true \
    dev.registry.io/ceph/daemon:latest-mimic mgr

S3:

docker run -d --net=host --name=mon \
    -v /etc/ceph:/etc/ceph \
    -v /var/lib/ceph:/var/lib/ceph \
    -v /var/log/ceph:/var/log/ceph \
    --privileged=true \
    -e MON_IP=172.30.82.138 \
    -e CEPH_CLUSTER_NETWORK=192.168.255.0/24 \
    -e CEPH_PUBLIC_NETWORK=172.30.82.0/24 \
    dev.registry.io/ceph/daemon:latest-mimic mon

docker run -d --net=host --name=mgr \
    -v /etc/ceph:/etc/ceph \
    -v /var/lib/ceph:/var/lib/ceph \
    -v /var/log/ceph:/var/log/ceph \
    --privileged=true \
    dev.registry.io/ceph/daemon:latest-mimic mgr

7. Start the OSDs

S1:

List the local disks

[root@s1 ceph]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

fd0 2:0 1 4K 0 disk

sda 8:0 0 100G 0 disk

├─sda1 8:1 0 1G 0 part /boot

└─sda2 8:2 0 99G 0 part

├─centos-root 253:0 0 50G 0 lvm /

├─centos-swap 253:1 0 7.9G 0 lvm [SWAP]

└─centos-home 253:2 0 41.1G 0 lvm /home

sdb 8:16 0 50G 0 disk

sdc 8:32 0 50G 0 disk

sdd 8:48 0 50G 0 disk

sr0 11:0 1 1024M 0 rom

If the disks have been used before, it is best to wipe them first.

yum install -y gdisk
sgdisk -Z -o -g /dev/sdb

Start an OSD backed by the local disk sdb

docker run -d --net=host --name=osdsdb \
    -v /etc/ceph:/etc/ceph \
    -v /var/lib/ceph:/var/lib/ceph \
    -v /var/log/ceph:/var/log/ceph \
    -v /dev/:/dev/ \
    --pid=host \
    --privileged=true \
    -e OSD_DEVICE=/dev/sdb \
    -e OSD_TYPE=disk \
    -v /run/udev:/run/udev/ \
    dev.registry.io/ceph/daemon:latest-mimic osd

Start an OSD backed by the local disk sdc

docker run -d --net=host --name=osdsdc \
    -v /etc/ceph:/etc/ceph \
    -v /var/lib/ceph:/var/lib/ceph \
    -v /var/log/ceph:/var/log/ceph \
    -v /dev/:/dev/ \
    --pid=host \
    --privileged=true \
    -e OSD_DEVICE=/dev/sdc \
    -e OSD_TYPE=disk \
    -v /run/udev:/run/udev/ \
    dev.registry.io/ceph/daemon:latest-mimic osd

Start an OSD backed by the local disk sdd

docker run -d --net=host --name=osdsdd \
    -v /etc/ceph:/etc/ceph \
    -v /var/lib/ceph:/var/lib/ceph \
    -v /var/log/ceph:/var/log/ceph \
    -v /dev/:/dev/ \
    --pid=host \
    --privileged=true \
    -e OSD_DEVICE=/dev/sdd \
    -e OSD_TYPE=disk \
    -v /run/udev:/run/udev/ \
    dev.registry.io/ceph/daemon:latest-mimic osd

S2:

Disk layout:

[root@s2 ceph]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

fd0 2:0 1 4K 0 disk

sda 8:0 0 100G 0 disk

├─sda1 8:1 0 1G 0 part /boot

└─sda2 8:2 0 99G 0 part

├─centos-root 253:0 0 50G 0 lvm /

├─centos-swap 253:1 0 7.9G 0 lvm [SWAP]

└─centos-home 253:2 0 41.1G 0 lvm /home

sdb 8:16 0 48G 0 disk

├─sdb1 8:17 0 100M 0 part

└─sdb2 8:18 0 47.9G 0 part

sdc 8:32 0 46G 0 disk

sdd 8:48 0 46G 0 disk

sr0 11:0 1 1024M 0 rom

Start an OSD backed by the local disk sdb

docker run -d --net=host --name=osdsdb \
    -v /etc/ceph:/etc/ceph \
    -v /var/lib/ceph:/var/lib/ceph \
    -v /var/log/ceph:/var/log/ceph \
    -v /dev/:/dev/ \
    --pid=host \
    --privileged=true \
    -e OSD_DEVICE=/dev/sdb \
    -e OSD_TYPE=disk \
    -v /run/udev:/run/udev/ \
    dev.registry.io/ceph/daemon:latest-mimic osd

Pitfall: the OSD on s2 may fail to start

[root@s3 bootstrap-osd]# docker logs osdsdb

2020-04-25 02:10:27 /opt/ceph-container/bin/entrypoint.sh: static: does not generate config

[errno 1] error connecting to the cluster

2020-04-25 02:10:28 /opt/ceph-container/bin/entrypoint.sh: Timed out while trying to reach out to the Ceph Monitor(s).

2020-04-25 02:10:28 /opt/ceph-container/bin/entrypoint.sh: Make sure your Ceph monitors are up and running in quorum.

2020-04-25 02:10:28 /opt/ceph-container/bin/entrypoint.sh: Also verify the validity of client.bootstrap-osd keyring.

The cause is that the key in /var/lib/ceph/bootstrap-osd/ceph.keyring on s2 may be incorrect; copying the file over from s1 fixes it.

docker run -d --net=host --name=osdsdc \
    -v /etc/ceph:/etc/ceph \
    -v /var/lib/ceph:/var/lib/ceph \
    -v /var/log/ceph:/var/log/ceph \
    -v /dev/:/dev/ \
    --pid=host \
    --privileged=true \
    -e OSD_DEVICE=/dev/sdc \
    -e OSD_TYPE=disk \
    -v /run/udev:/run/udev/ \
    dev.registry.io/ceph/daemon:latest-mimic osd

docker run -d --net=host --name=osdsdd \
    -v /etc/ceph:/etc/ceph \
    -v /var/lib/ceph:/var/lib/ceph \
    -v /var/log/ceph:/var/log/ceph \
    -v /dev/:/dev/ \
    --pid=host \
    --privileged=true \
    -e OSD_DEVICE=/dev/sdd \
    -e OSD_TYPE=disk \
    -v /run/udev:/run/udev/ \
    dev.registry.io/ceph/daemon:latest-mimic osd

S3:

Disk layout:

[root@s3 bootstrap-osd]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

fd0 2:0 1 4K 0 disk

sda 8:0 0 100G 0 disk

├─sda1 8:1 0 1G 0 part /boot

└─sda2 8:2 0 99G 0 part

├─centos-root 253:0 0 50G 0 lvm /

├─centos-swap 253:1 0 7.9G 0 lvm [SWAP]

└─centos-home 253:2 0 41.1G 0 lvm /home

sdb 8:16 0 50G 0 disk

sdc 8:32 0 46G 0 disk

sdd 8:48 0 46G 0 disk

sr0 11:0 1 1024M 0 rom

Start an OSD backed by the local disk sdb

docker run -d --net=host --name=osdsdb \
    -v /etc/ceph:/etc/ceph \
    -v /var/lib/ceph:/var/lib/ceph \
    -v /var/log/ceph:/var/log/ceph \
    -v /dev/:/dev/ \
    --pid=host \
    --privileged=true \
    -e OSD_DEVICE=/dev/sdb \
    -e OSD_TYPE=disk \
    -v /run/udev:/run/udev/ \
    dev.registry.io/ceph/daemon:latest-mimic osd

docker run -d --net=host --name=osdsdc \
    -v /etc/ceph:/etc/ceph \
    -v /var/lib/ceph:/var/lib/ceph \
    -v /var/log/ceph:/var/log/ceph \
    -v /dev/:/dev/ \
    --pid=host \
    --privileged=true \
    -e OSD_DEVICE=/dev/sdc \
    -e OSD_TYPE=disk \
    -v /run/udev:/run/udev/ \
    dev.registry.io/ceph/daemon:latest-mimic osd

docker run -d --net=host --name=osdsdd \
    -v /etc/ceph:/etc/ceph \
    -v /var/lib/ceph:/var/lib/ceph \
    -v /var/log/ceph:/var/log/ceph \
    -v /dev/:/dev/ \
    --pid=host \
    --privileged=true \
    -e OSD_DEVICE=/dev/sdd \
    -e OSD_TYPE=disk \
    -v /run/udev:/run/udev/ \
    dev.registry.io/ceph/daemon:latest-mimic osd
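The nine OSD commands above differ only in the device name, so they can be generated rather than typed. The following is a sketch under that assumption; `osd_run_cmd` is a hypothetical helper name, and it only prints the command so it can be reviewed before anything is executed.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the `docker run` command that starts one OSD
# container for the given disk, using the same options as the commands above.
osd_run_cmd() {
    local dev="$1"                                   # e.g. /dev/sdb
    local image="${2:-dev.registry.io/ceph/daemon:latest-mimic}"
    local name="osd${dev##*/}"                       # /dev/sdb -> osdsdb
    printf 'docker run -d --net=host --name=%s' "$name"
    printf ' -v /etc/ceph:/etc/ceph -v /var/lib/ceph:/var/lib/ceph'
    printf ' -v /var/log/ceph:/var/log/ceph -v /dev/:/dev/ -v /run/udev:/run/udev/'
    printf ' --pid=host --privileged=true'
    printf ' -e OSD_DEVICE=%s -e OSD_TYPE=disk %s osd\n' "$dev" "$image"
}

# Dry run for one node (prints three commands):
#   for d in sdb sdc sdd; do osd_run_cmd "/dev/$d"; done
# Execution over SSH (assumes the key-based login set up earlier):
#   for h in s1 s2 s3; do for d in sdb sdc sdd; do ssh "$h" "$(osd_run_cmd "/dev/$d")"; done; done
```

Printing first and executing second keeps a typo in a device name from reaching a disk that holds data.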

8. Check the Ceph cluster status

[root@s1 bootstrap-osd]# docker exec mon ceph -s

cluster:

id: 94b05757-7745-49c9-8483-90c3e19ec2a2

health: HEALTH_OK

services:

mon: 3 daemons, quorum s1,s2,s3

mgr: s2(active), standbys: s3, s1

osd: 9 osds: 9 up, 9 in

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0 B

usage: 9.0 GiB used, 422 GiB / 431 GiB avail

pgs:

[root@s1 bootstrap-osd]#

Ceph deployment is now complete.

9. Initialize the storage pools for OpenStack

# Create the pools

docker exec mon ceph osd pool create volumes 128

docker exec mon ceph osd pool create images 128

docker exec mon ceph osd pool create vms 128

# Initialize the pools for RBD

docker exec mon rbd pool init volumes

docker exec mon rbd pool init images

docker exec mon rbd pool init vms
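The value 128 used for each pool is consistent with a commonly cited sizing rule of thumb: roughly 100 placement groups per OSD, divided by the replica count and the number of pools, rounded up to a power of two. The sketch below assumes that rule and a replica count of 3 (the source does not state the pool size); `suggest_pg_num` is a hypothetical helper, not a Ceph command.

```shell
#!/usr/bin/env bash
# Hypothetical helper: suggest a per-pool pg_num using the rule of thumb
#   (OSDs * target PGs per OSD) / replicas / pools, rounded up to a power of two.
suggest_pg_num() {
    local osds="$1" replicas="$2" pools="$3" target="${4:-100}"
    local raw=$(( osds * target / replicas / pools ))
    local pg=1
    while [ "$pg" -lt "$raw" ]; do
        pg=$(( pg * 2 ))                 # next power of two
    done
    echo "$pg"
}

# 9 OSDs, 3 replicas (assumed), 3 pools (volumes, images, vms)
suggest_pg_num 9 3 3
```

For this cluster the raw value is 9 * 100 / 3 / 3 = 100, which rounds up to 128.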

# Create the glance user and its permissions

docker exec mon ceph auth get-or-create client.glance mon 'allow r' osd 'allow class-read object_prefix rbd_children, allow rwx pool=images' -o /etc/ceph/ceph.client.glance.keyring

# Create the cinder user and its permissions

docker exec mon ceph auth get-or-create client.cinder mon 'allow r' osd 'allow class-read object_prefix rbd_children, allow rwx pool=volumes, allow rwx pool=vms ,allow rx pool=images' -o /etc/ceph/ceph.client.cinder.keyring

# Create the nova user and its permissions

docker exec mon ceph auth get-or-create client.nova mon 'allow r' osd 'allow class-read object_prefix rbd_children, allow rwx pool=volumes, allow rwx pool=vms ,allow rx pool=images' -o /etc/ceph/ceph.client.nova.keyring

# After the users are created, the following three keyring files appear in /etc/ceph

[root@s1 ceph]# ls -al

total 48

drwxr-xr-x 2 root root 202 Apr 25 13:07 .

drwxr-xr-x. 82 root root 8192 Apr 25 09:08 ..

-rw------- 1 root root 161 Apr 25 2020 ceph.client.admin.keyring

-rw-r--r-- 1 root root 64 Apr 25 13:07 ceph.client.cinder.keyring # generated

-rw-r--r-- 1 root root 64 Apr 25 12:56 ceph.client.glance.keyring # generated

-rw-r--r-- 1 root root 62 Apr 25 13:07 ceph.client.nova.keyring # generated

-rw-r--r-- 1 root root 346 Apr 25 13:03 ceph.conf

-rw-r--r-- 1 root root 12288 Apr 25 01:40 .ceph.conf.swp

-rw------- 1 167 167 690 Apr 25 2020 ceph.mon.keyring

[root@s1 ceph]#

Deploying OpenStack

1. Install pip

# Install the dependencies

yum install -y python3-devel libffi-devel gcc openssl-devel libselinux-python

# Install pip

yum install -y python3-pip

ln -s /usr/bin/pip3 /usr/bin/pip

pip install --upgrade pip

2. Install the docker Python module

pip install docker

3. Install Ansible

yum install -y ansible

pip install -U ansible

4. Install kolla and kolla-ansible

pip install kolla kolla-ansible

5. Copy the configuration templates

cp -r /usr/local/share/kolla-ansible/etc_examples/kolla /etc/

cp /usr/local/share/kolla-ansible/ansible/inventory/* /home/

6. Generate the passwords

[root@deploy ~]# kolla-genpwd

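The generated passwords are stored in /etc/kolla/passwords.yml, and you can edit them as needed. Commonly used entries:

OpenStack login password: keystone_admin_password

MySQL database password: database_password

7. Edit the deployment configuration file

The configuration file is /etc/kolla/globals.yml

- Configuration file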

[root@deploy kolla]# grep -Ev "^$|^[#;]" /etc/kolla/globals.yml

---

config_strategy: "COPY_ONCE"

kolla_base_distro: "centos"

kolla_install_type: "source"

openstack_release: "train"

kolla_internal_vip_address: "172.30.82.139"

docker_registry: "dev.registry.io"

docker_namespace: "kolla"

network_interface: "ens192"

api_interface: "{{ network_interface }}"

storage_interface: "ens256"

neutron_external_interface: "ens224"

neutron_plugin_agent: "openvswitch"

enable_haproxy: "yes"

enable_ceph: "no"

enable_cinder: "yes"

enable_cinder_backup: "yes"

enable_heat: "yes"

glance_backend_ceph: "yes"

glance_enable_rolling_upgrade: "no"

cinder_backend_ceph: "yes"

nova_backend_ceph: "yes"

8. Edit the inventory file

The file is /home/multinode

[root@deploy ~]# grep -Ev "^$|^[#;]" /home/multinode

[control]

c1

c2

c3

[network]

n1

n2

[compute]

s1

s2

s3

[monitoring]

deploy

[storage]

[deployment]

localhost ansible_connection=local

[baremetal:children]

control

network

compute

storage

monitoring

………………

9. Configure the RBD backend for OpenStack

# Create the directories and files

mkdir -p /etc/kolla/config/glance

mkdir -p /etc/kolla/config/cinder

mkdir -p /etc/kolla/config/nova

cat << EOF > /etc/kolla/config/glance/glance-api.conf

[glance_store]

stores = rbd

default_store = rbd

rbd_store_pool = images

rbd_store_user = glance

rbd_store_ceph_conf = /etc/ceph/ceph.conf

EOF

cat << EOF > /etc/kolla/config/cinder/cinder-volume.conf

[DEFAULT]

enabled_backends = ceph

[ceph]

rbd_ceph_conf = /etc/ceph/ceph.conf

rbd_user = cinder

rbd_pool = volumes

volume_backend_name = ceph

volume_driver = cinder.volume.drivers.rbd.RBDDriver

rbd_secret_uuid = {{ cinder_rbd_secret_uuid }}

#glance_api_version = 2

EOF

cat << EOF > /etc/kolla/config/nova/nova-compute.conf

[libvirt]

images_rbd_pool = vms

images_type = rbd

images_rbd_ceph_conf = /etc/ceph/ceph.conf

rbd_user = nova

rbd_secret_uuid = {{ cinder_rbd_secret_uuid }}

EOF

cp /etc/ceph/ceph.conf /etc/kolla/config/glance/

cp /etc/ceph/ceph.conf /etc/kolla/config/cinder/

cp /etc/ceph/ceph.conf /etc/kolla/config/nova/

cp /etc/ceph/ceph.client.cinder.keyring /etc/kolla/config/nova/
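The copy commands above place only one keyring, while the glance, cinder, and nova services each need the keyring of the user they connect as. The sketch below stages all three; the target layout (`glance/`, `cinder/cinder-volume/`, `nova/`) is an assumption based on the Kolla-Ansible external Ceph guide as I recall it, so check it against the guide for your release. It also assumes the keyrings generated on s1 have already been copied to /etc/ceph on the deploy host. `stage_keyrings` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: copy the Ceph client keyrings into the Kolla config tree.
# Directory layout is an assumption; verify it against the external Ceph guide.
stage_keyrings() {
    local src="${1:-/etc/ceph}" dst="${2:-/etc/kolla/config}"
    mkdir -p "$dst/glance" "$dst/cinder/cinder-volume" "$dst/nova"
    # glance connects as client.glance
    cp "$src/ceph.client.glance.keyring" "$dst/glance/"
    # cinder-volume connects as client.cinder
    cp "$src/ceph.client.cinder.keyring" "$dst/cinder/cinder-volume/"
    # nova uses its own key plus the cinder key for attaching volumes
    cp "$src/ceph.client.nova.keyring" "$src/ceph.client.cinder.keyring" "$dst/nova/"
}

# Possible use on the deploy host (left commented out on purpose):
# stage_keyrings /etc/ceph /etc/kolla/config
```

Both directories are parameters so the function can be tried against a scratch directory before touching /etc/kolla.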

- Copy the cinder keyring file into the container

docker cp /etc/ceph/ceph.client.admin.keyring cinder_volume:/etc/ceph/

6 Re-run the deployment

kolla-ansible -i all-in-one deploy

10. Start the deployment

kolla-ansible -i /home/multinode bootstrap-servers

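# If an error occurs, fix it and run the command again until it passes completely.

# The deploy host reports a Python version error, because the Python version was changed earlier.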

kolla-ansible -i /home/multinode pull

kolla-ansible -i /home/multinode prechecks

kolla-ansible -i /home/multinode deploy
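Since each stage should pass before the next one starts, the four commands can be wrapped so that the run stops at the first failure. This is a sketch that keeps the order used above; `run_deploy` and the `KOLLA` override variable are hypothetical names introduced here.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper: run the kolla-ansible stages in order and stop at the
# first one that fails. KOLLA can be overridden for a dry run (KOLLA=echo).
KOLLA="${KOLLA:-kolla-ansible}"

run_deploy() {
    local inventory="$1" stage
    for stage in bootstrap-servers pull prechecks deploy; do
        echo ">>> $stage"
        if ! $KOLLA -i "$inventory" "$stage"; then
            echo "stage '$stage' failed; fix the error and re-run" >&2
            return 1
        fi
    done
}

# Possible use (left commented out on purpose):
# run_deploy /home/multinode
```

Re-running the wrapper after a fix repeats the earlier stages, which matches the advice above to run a command again until it passes.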

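## A pitfall during deploy:

When HAProxy is deployed it may wait indefinitely and fail to start, because the Kolla scripts did not map the configuration files into the container.

On every node that runs haproxy, copy the files with docker cp /etc/kolla/haproxy/services.d/ haproxy:/etc/haproxy/services.d/, then restart the container with docker restart haproxy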
