[K8S集群:初始化异常]:kubelet cgroup driver: \“systemd\“ is different from docker cgroup driver: \“cgroupfs\

[K8S集群:初始化异常]:kubelet cgroup driver: \"systemd\" is different from docker cgroup driver: \"cgroupfs\

·

文章目录

零:场景复现:pod:calico-node异常

主节点:安装coredns -> init初始化 主节点(此时还没有安装calico)

从节点:基于主节点生成join命令加入集群

主节点:安装calico:apply 生成pod,此时没有调整yaml网卡

coredns 和calico pod 运行成功

但是 calico-node-cl8f2 运行失败

一:查看异常日志:主从节点[主要从节点]:journalctl -f -u kubelet

1.1:主节点:journalctl -f -u kubelet

[root@sv-master ~]# journalctl -f -u kubelet

-- Logs begin at 四 2024-04-11 15:17:47 CST. --

4月 11 15:30:47 sv-master kubelet[12537]: I0411 15:30:47.319143 12537 scope.go:110] "RemoveContainer" containerID="b10912f438c5592e65aed26059c91724957660371ca43ee49327ccee20173363"

4月 11 15:30:47 sv-master kubelet[12537]: E0411 15:30:47.319537 12537 remote_runtime.go:572] "ContainerStatus from runtime service failed" err="rpc error: code = Unknown desc = Error: No such container: b10912f438c5592e65aed26059c91724957660371ca43ee49327ccee20173363" containerID="b10912f438c5592e65aed26059c91724957660371ca43ee49327ccee20173363"

4月 11 15:30:47 sv-master kubelet[12537]: I0411 15:30:47.319603 12537 pod_container_deletor.go:52] "DeleteContainer returned error" containerID={Type:docker ID:b10912f438c5592e65aed26059c91724957660371ca43ee49327ccee20173363} err="failed to get container status \"b10912f438c5592e65aed26059c91724957660371ca43ee49327ccee20173363\": rpc error: code = Unknown desc = Error: No such container: b10912f438c5592e65aed26059c91724957660371ca43ee49327ccee20173363"

4月 11 15:30:47 sv-master kubelet[12537]: E0411 15:30:47.555224 12537 remote_runtime.go:479] "StopContainer from runtime service failed" err="rpc error: code = Unknown desc = Error response from daemon: No such container: e560e71cb9fa3911d73ac67fa1a8497d6793542860730be4749d794f9707ed26" containerID="e560e71cb9fa3911d73ac67fa1a8497d6793542860730be4749d794f9707ed26"

1.2:从节点:journalctl -f -u kubelet

[root@sv-slaver-on ~]# journalctl -f -u kubelet

4月 11 15:06:21 sv-slaver-one kubelet[5050]: E0411 15:06:21.113081 5050 server.go:302] "Failed to run kubelet" err="failed to run Kubelet: misconfiguration: kubelet cgroup driver: \"systemd\" is different from docker cgroup driver: \"cgroupfs\""

4月 11 15:06:21 sv-slaver-one systemd[1]: kubelet.service: main process exited, code=exited, status=1/FAILURE

4月 11 15:06:21 sv-slaver-one systemd[1]: Unit kubelet.service entered failed state.

4月 11 15:06:21 sv-slaver-one systemd[1]: kubelet.service failed.

三:解决方式,主要是在主节点重启docker和k8s服务

3.1:执行命令

[root@10 docker]# systemctl restart docker

[root@10 docker]# systemctl daemon-reload

[root@10 docker]# systemctl restart kubelet

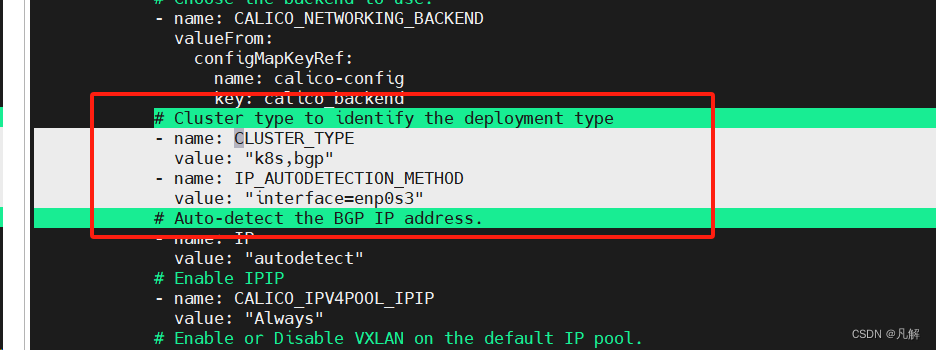

3.2:编辑calicoyaml文件网卡

# Cluster type to identify the deployment type

- name: CLUSTER_TYPE

value: "k8s,bgp"

# 主要添加下面两行

- name: IP_AUTODETECTION_METHOD

value: "interface=enp0s3"

3.3:再次查看nodes和pod

sv-slaver-one为主节点,配反了

[root@sv-slaver-one ~]# kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-kube-controllers-64cc74d646-4z8zf 1/1 Running 2 (9h ago) 9h

kube-system calico-node-cl8f2 1/1 Running 0 9h

kube-system calico-node-pfxnd 1/1 Running 0 9h

kube-system coredns-6d8c4cb4d-8q7tb 1/1 Running 2 (9h ago) 9h

kube-system coredns-6d8c4cb4d-m2gz2 1/1 Running 2 (9h ago) 9h

kube-system etcd-sv-slaver-one 1/1 Running 3 (9h ago) 9h

kube-system kube-apiserver-sv-slaver-one 1/1 Running 3 (9h ago) 9h

kube-system kube-controller-manager-sv-slaver-one 1/1 Running 4 (3h59m ago) 9h

kube-system kube-proxy-6kfnf 1/1 Running 2 (9h ago) 9h

kube-system kube-proxy-s9pzm 1/1 Running 3 (9h ago) 9h

kube-system kube-scheduler-sv-slaver-one 1/1 Running 4 (3h59m ago) 9h

[root@sv-slaver-one ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

sv-master Ready <none> 9h v1.23.0

sv-slaver-one Ready control-plane,master 9h v1.23.0

[root@sv-slaver-one ~]#

更多推荐

已为社区贡献11条内容

已为社区贡献11条内容

所有评论(0)