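Atguigu (尚硅谷) Study Notes: Installing a K8s Cluster with kubeadm

K8s Cluster Installation

I. Installation Requirements

Two clean CentOS virtual machines, version 7.x or later

At least 2 CPU cores and 2 GB of RAM on each of the two machines

Network connectivity between the servers

Swap disabled

SELinux disabled

Firewall turned off

Time synchronized

II. Environment Preparation

1. Disable the firewall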

systemctl stop firewalld

systemctl disable firewalld

2. Disable SELinux

sed -i 's/enforcing/disabled/' /etc/selinux/config

setenforce 0

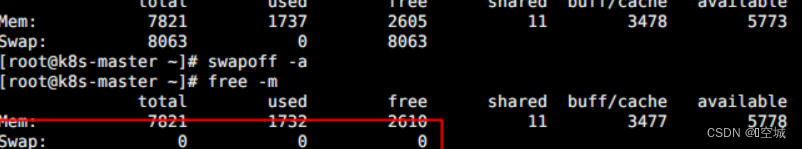

3. Disable swap

Temporarily:

swapoff -a

Permanently:

sed -ri 's/.*swap.*/#&/' /etc/fstab

After disabling swap, you can check the result with the free -m command; the Swap row should show 0.

![image](https://i-blog.csdnimg.cn/blog_migrate/4c859c3b09b787d59d528dd930723a91.png)
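To double-check the permanent change, a small helper can count the swap entries in /etc/fstab that are still active. This is only a sketch: the function name active_swap_entries is made up for illustration, and it assumes the usual fstab layout in which the third field is the filesystem type.

```shell
# Hypothetical helper: count fstab lines that still mount swap.
# Assumes the third whitespace-separated field is the filesystem type
# and that commented-out lines start with '#'.
active_swap_entries() {
    fstab_file="${1:-/etc/fstab}"
    awk '$1 !~ /^#/ && $3 == "swap" { n++ } END { print n + 0 }' "$fstab_file"
}

# Usage: prints 0 once every swap line has been commented out.
# active_swap_entries /etc/fstab
```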

4. Server planning (append to /etc/hosts so that the existing localhost entries are kept)

cat >> /etc/hosts << EOF

10.10.10.112 k8s-master

10.10.10.113 k8s-node

EOF
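If this step may be run more than once, a helper that only adds missing entries keeps /etc/hosts clean. A minimal sketch, with add_host_entry as a made-up name and the addresses taken from the plan above:

```shell
# Hypothetical helper: append "IP NAME" to a hosts file only if NAME is not
# already mapped there, so the step can be re-run safely.
add_host_entry() {
    ip="$1"; name="$2"; hosts_file="${3:-/etc/hosts}"
    # Look for NAME in any non-comment line (fields 2..NF are host names).
    if awk -v n="$name" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' "$hosts_file"; then
        return 0   # already present, nothing to do
    fi
    printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
}

# Usage:
# add_host_entry 10.10.10.112 k8s-master
# add_host_entry 10.10.10.113 k8s-node
```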

5. Set the hostname:

hostnamectl set-hostname k8s-master

6. Time synchronization

yum install -y ntpdate

ntpdate time.windows.com

7. Enable forwarding of bridged traffic to iptables

cat > /etc/sysctl.d/k8s.conf << EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

sysctl --system
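The net.bridge.* keys only exist once the br_netfilter kernel module is loaded, so on a fresh machine sysctl --system can report them as missing. A minimal sketch of loading the module and reading the values back; the function name load_bridge_netfilter is made up for illustration, and it needs to run as root:

```shell
# Hypothetical helper: load the bridge netfilter module so that the
# net.bridge.* keys exist, then print the two settings for confirmation.
load_bridge_netfilter() {
    modprobe br_netfilter &&
        sysctl net.bridge.bridge-nf-call-iptables net.bridge.bridge-nf-call-ip6tables
}

# Usage (as root, on every node), followed by re-applying the file above:
# load_bridge_netfilter && sysctl --system
```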

8. Periodic time synchronization (cron)

echo '*/5 * * * * /usr/sbin/ntpdate -u ntp.api.bz' >>/var/spool/cron/root

systemctl restart crond.service

crontab -l

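Note: everything above can be copied, pasted and run as-is, except the hostname, which must be changed for each machine.

III. Docker Installation

1. Configure Docker's cgroup driver [all nodes]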

sudo tee /etc/docker/daemon.json <<-'EOF'

{

"exec-opts": ["native.cgroupdriver=systemd"]

}

EOF

Reload the systemd unit configuration

sudo systemctl daemon-reload

Restart Docker

sudo systemctl restart docker

Enable Docker at boot

systemctl enable docker.service
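After the restart it is worth confirming that Docker actually picked up the systemd cgroup driver, since the kubelet is expected to use the same driver. A small sketch built on docker info; the function name docker_uses_systemd_cgroup is an illustrative assumption:

```shell
# Hypothetical check: succeed only if Docker reports the systemd cgroup driver.
docker_uses_systemd_cgroup() {
    driver=$(docker info --format '{{.CgroupDriver}}' 2>/dev/null)
    [ "$driver" = "systemd" ]
}

# Usage:
# docker_uses_systemd_cgroup && echo "cgroup driver OK" || echo "check /etc/docker/daemon.json"
```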

2. Configure the Kubernetes yum repository [all nodes]

cat > /etc/yum.repos.d/kubernetes.repo << EOF

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

3. Install kubeadm, kubelet and kubectl [all nodes]

yum install -y kubelet-1.18.0 kubeadm-1.18.0 kubectl-1.18.0

systemctl enable kubelet

4. Deploy the Kubernetes master [master node]

kubeadm init \

--apiserver-advertise-address=<MASTER_NODE_IP> \

--image-repository registry.aliyuncs.com/google_containers \

--kubernetes-version v1.18.0 \

--service-cidr=10.1.0.0/16 \

--pod-network-cidr=10.244.0.0/16

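Parameter notes:

--apiserver-advertise-address=10.10.10.112

The IP address of the master host.

--image-repository=registry.aliyuncs.com/google_containers

The image registry. The default registry hosted abroad is not reachable, so the Alibaba Cloud mirror registry.aliyuncs.com/google_containers is used instead.

--kubernetes-version=v1.18.0

The Kubernetes version to install.

--service-cidr=10.1.0.0/16

The Service (svc) network. Simply use 10.1.0.0/16 here and in later installations; there is no need to change it.

--pod-network-cidr=10.244.0.0/16

The IP range available to pods inside the cluster. It must not be the same as service-cidr. If you are unsure, use 10.244.0.0/16, which is also the Network value in the kube-flannel.yaml used in step 8.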
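The rule that the pod and Service ranges must differ can be checked mechanically before running kubeadm init. The following is a sketch only: ip_to_int and cidr_overlap are made-up helper names, and it handles plain IPv4 CIDR notation without input validation.

```shell
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
    IFS=. read -r a b c d <<EOF
$1
EOF
    echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Return 0 (true) if two IPv4 CIDR blocks overlap, 1 otherwise.
cidr_overlap() {
    ip1=$(ip_to_int "${1%/*}"); len1=${1#*/}
    ip2=$(ip_to_int "${2%/*}"); len2=${2#*/}
    # Two blocks overlap exactly when they agree under the shorter prefix.
    len=$(( len1 < len2 ? len1 : len2 ))
    mask=$(( len == 0 ? 0 : (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
    [ $(( ip1 & mask )) -eq $(( ip2 & mask )) ]
}

# Usage with the values from this guide:
# cidr_overlap 10.1.0.0/16 10.244.0.0/16 && echo "overlap" || echo "no overlap"
```

The same check can be run against the subnet the nodes themselves live in, which should not overlap either range.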

After a successful deployment, the end of the output shows the following. Be sure to save the command in the red box; it is needed in step 7.

![image](https://i-blog.csdnimg.cn/blog_migrate/863cc86cfc4216977d6f68ae7f41283f.png)

5. Configure the kubectl command-line tool [master]

# Create the directory

mkdir -p $HOME/.kube

# Copy the file

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

# Set ownership

sudo chown $(id -u):$(id -g) $HOME/.kube/config

6. Run the authorization steps on both the master and the node; otherwise kubectl reports the following error:

[root@k8s-node1 ~]# kubectl get nodes

error: no configuration has been provided, try setting KUBERNETES_MASTER environment variable

Copy the file from the master to the same path on the node, so that the authorization steps below find it [node]

scp root@10.10.10.112:/etc/kubernetes/admin.conf /etc/kubernetes/admin.conf

This command prompts for the password.

![image](https://i-blog.csdnimg.cn/blog_migrate/4df25e79589808ee8dc1b3806828520f.png)

Authorization steps:

# Create the directory

mkdir -p $HOME/.kube

# Copy the file

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

# Set ownership

sudo chown $(id -u):$(id -g) $HOME/.kube/config

7. Join the node to the cluster [node]

kubeadm join 10.10.10.112:6443 --token vrqnfd.pwj0171i425mm16g \

--discovery-token-ca-cert-hash sha256:52a1210bdc976ecd7a60e7719d47fa79bba00b1e55f2dc676ecc617689e99f23

If the following message appears, the token has expired and a new one must be created.

![image](https://i-blog.csdnimg.cn/blog_migrate/921b0abc5dbe3906e9fce48e89fa5b0f.png)

Solution:

1. On the master, list the tokens and check whether the token is still valid

kubeadm token list

2. Generate a new token together with the join command, then run that command on the node

kubeadm token create --print-join-command

3. Check the node status with the following command

kubectl get nodes

8. Install the network plugin [master]

8.1 [all nodes]:

docker pull lizhenliang/flannel:v0.11.0-amd64

8.2 [on the master]

Upload kube-flannel.yaml and run:

kubectl apply -f kube-flannel.yaml

Note: every pod must reach the Running state, otherwise the cluster will not work properly. Check with the following command:

kubectl get pods -n kube-system

![image](https://i-blog.csdnimg.cn/blog_migrate/edaf073937222889769061084b1186e3.png)
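With many pods in the list, it can be easier to print only the ones that are not healthy yet. A small sketch that filters the kubectl output; not_running_pods is a made-up name, and it assumes the default column layout in which STATUS is the third column:

```shell
# Hypothetical helper: read `kubectl get pods` output on stdin and print the
# name and status of every pod that is neither Running nor Completed.
not_running_pods() {
    awk 'NR > 1 && $3 != "Running" && $3 != "Completed" { print $1, $3 }'
}

# Usage on the master; no output means every pod is up:
# kubectl get pods -n kube-system | not_running_pods
```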

Problems

- If the CoreDNS pods stay in the Pending state, run the following command on the master node:

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

![image](https://i-blog.csdnimg.cn/blog_migrate/f62da487dd498f3faf5a987dc500a68d.png)

- If the ROLES column of the node shows <none>, fix it as follows.

Run the following command (replace k8s-node1 with the actual node name):

kubectl label no k8s-node1 kubernetes.io/role=node

The contents of kube-flannel.yaml are as follows:

---
apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: psp.flannel.unprivileged
  annotations:
    seccomp.security.alpha.kubernetes.io/allowedProfileNames: docker/default
    seccomp.security.alpha.kubernetes.io/defaultProfileName: docker/default
    apparmor.security.beta.kubernetes.io/allowedProfileNames: runtime/default
    apparmor.security.beta.kubernetes.io/defaultProfileName: runtime/default
spec:
  privileged: false
  volumes:
    - configMap
    - secret
    - emptyDir
    - hostPath
  allowedHostPaths:
    - pathPrefix: "/etc/cni/net.d"
    - pathPrefix: "/etc/kube-flannel"
    - pathPrefix: "/run/flannel"
  readOnlyRootFilesystem: false
  # Users and groups
  runAsUser:
    rule: RunAsAny
  supplementalGroups:
    rule: RunAsAny
  fsGroup:
    rule: RunAsAny
  # Privilege Escalation
  allowPrivilegeEscalation: false
  defaultAllowPrivilegeEscalation: false
  # Capabilities
  allowedCapabilities: ['NET_ADMIN']
  defaultAddCapabilities: []
  requiredDropCapabilities: []
  # Host namespaces
  hostPID: false
  hostIPC: false
  hostNetwork: true
  hostPorts:
    - min: 0
      max: 65535
  # SELinux
  seLinux:
    # SELinux is unused in CaaSP
    rule: 'RunAsAny'

---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: flannel
rules:
  - apiGroups: ['extensions']
    resources: ['podsecuritypolicies']
    verbs: ['use']
    resourceNames: ['psp.flannel.unprivileged']
  - apiGroups:
      - ""
    resources:
      - pods
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - nodes
    verbs:
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - nodes/status
    verbs:
      - patch

---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
  - kind: ServiceAccount
    name: flannel
    namespace: kube-system

---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: flannel
  namespace: kube-system

---
kind: ConfigMap
apiVersion: v1
metadata:
  name: kube-flannel-cfg
  namespace: kube-system
  labels:
    tier: node
    app: flannel
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan",
        "DirectRouting": true
      }
    }

---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-amd64
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: beta.kubernetes.io/os
                    operator: In
                    values:
                      - linux
                  - key: beta.kubernetes.io/arch
                    operator: In
                    values:
                      - amd64
      hostNetwork: true
      tolerations:
        - operator: Exists
          effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
        - name: install-cni
          image: quay.io/coreos/flannel:v0.11.0-amd64
          command:
            - cp
          args:
            - -f
            - /etc/kube-flannel/cni-conf.json
            - /etc/cni/net.d/10-flannel.conflist
          volumeMounts:
            - name: cni
              mountPath: /etc/cni/net.d
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
      containers:
        - name: kube-flannel
          image: quay.io/coreos/flannel:v0.11.0-amd64
          command:
            - /opt/bin/flanneld
          args:
            - --ip-masq
            - --kube-subnet-mgr
          resources:
            requests:
              cpu: "100m"
              memory: "50Mi"
            limits:
              cpu: "100m"
              memory: "50Mi"
          securityContext:
            privileged: false
            capabilities:
              add: ["NET_ADMIN"]
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
          volumeMounts:
            - name: run
              mountPath: /run/flannel
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg

---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-arm64
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: beta.kubernetes.io/os
                    operator: In
                    values:
                      - linux
                  - key: beta.kubernetes.io/arch
                    operator: In
                    values:
                      - arm64
      hostNetwork: true
      tolerations:
        - operator: Exists
          effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
        - name: install-cni
          image: quay.io/coreos/flannel:v0.11.0-arm64
          command:
            - cp
          args:
            - -f
            - /etc/kube-flannel/cni-conf.json
            - /etc/cni/net.d/10-flannel.conflist
          volumeMounts:
            - name: cni
              mountPath: /etc/cni/net.d
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
      containers:
        - name: kube-flannel
          image: quay.io/coreos/flannel:v0.11.0-arm64
          command:
            - /opt/bin/flanneld
          args:
            - --ip-masq
            - --kube-subnet-mgr
          resources:
            requests:
              cpu: "100m"
              memory: "50Mi"
            limits:
              cpu: "100m"
              memory: "50Mi"
          securityContext:
            privileged: false
            capabilities:
              add: ["NET_ADMIN"]
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
          volumeMounts:
            - name: run
              mountPath: /run/flannel
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg

---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-arm
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: beta.kubernetes.io/os
                    operator: In
                    values:
                      - linux
                  - key: beta.kubernetes.io/arch
                    operator: In
                    values:
                      - arm
      hostNetwork: true
      tolerations:
        - operator: Exists
          effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
        - name: install-cni
          image: quay.io/coreos/flannel:v0.11.0-arm
          command:
            - cp
          args:
            - -f
            - /etc/kube-flannel/cni-conf.json
            - /etc/cni/net.d/10-flannel.conflist
          volumeMounts:
            - name: cni
              mountPath: /etc/cni/net.d
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
      containers:
        - name: kube-flannel
          image: quay.io/coreos/flannel:v0.11.0-arm
          command:
            - /opt/bin/flanneld
          args:
            - --ip-masq
            - --kube-subnet-mgr
          resources:
            requests:
              cpu: "100m"
              memory: "50Mi"
            limits:
              cpu: "100m"
              memory: "50Mi"
          securityContext:
            privileged: false
            capabilities:
              add: ["NET_ADMIN"]
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
          volumeMounts:
            - name: run
              mountPath: /run/flannel
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg

---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-ppc64le
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: beta.kubernetes.io/os
                    operator: In
                    values:
                      - linux
                  - key: beta.kubernetes.io/arch
                    operator: In
                    values:
                      - ppc64le
      hostNetwork: true
      tolerations:
        - operator: Exists
          effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
        - name: install-cni
          image: quay.io/coreos/flannel:v0.11.0-ppc64le
          command:
            - cp
          args:
            - -f
            - /etc/kube-flannel/cni-conf.json
            - /etc/cni/net.d/10-flannel.conflist
          volumeMounts:
            - name: cni
              mountPath: /etc/cni/net.d
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
      containers:
        - name: kube-flannel
          image: quay.io/coreos/flannel:v0.11.0-ppc64le
          command:
            - /opt/bin/flanneld
          args:
            - --ip-masq
            - --kube-subnet-mgr
          resources:
            requests:
              cpu: "100m"
              memory: "50Mi"
            limits:
              cpu: "100m"
              memory: "50Mi"
          securityContext:
            privileged: false
            capabilities:
              add: ["NET_ADMIN"]
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
          volumeMounts:
            - name: run
              mountPath: /run/flannel
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg

---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-s390x
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: beta.kubernetes.io/os
                    operator: In
                    values:
                      - linux
                  - key: beta.kubernetes.io/arch
                    operator: In
                    values:
                      - s390x
      hostNetwork: true
      tolerations:
        - operator: Exists
          effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
        - name: install-cni
          image: quay.io/coreos/flannel:v0.11.0-s390x
          command:
            - cp
          args:
            - -f
            - /etc/kube-flannel/cni-conf.json
            - /etc/cni/net.d/10-flannel.conflist
          volumeMounts:
            - name: cni
              mountPath: /etc/cni/net.d
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
      containers:
        - name: kube-flannel
          image: quay.io/coreos/flannel:v0.11.0-s390x
          command:
            - /opt/bin/flanneld
          args:
            - --ip-masq
            - --kube-subnet-mgr
          resources:
            requests:
              cpu: "100m"
              memory: "50Mi"
            limits:
              cpu: "100m"
              memory: "50Mi"
          securityContext:
            privileged: false
            capabilities:
              add: ["NET_ADMIN"]
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
          volumeMounts:
            - name: run
              mountPath: /run/flannel
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg

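At this point the K8s cluster is deployed. Next, verify it.

IV. Verifying Application Deployment and Querying Logs

1. Verify application deployment

1.1 Create an nginx application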

kubectl create deployment k8s-status-checke --image=nginx

kubectl get deployment

![image](https://i-blog.csdnimg.cn/blog_migrate/fc52306288ebe3de53e2dc78dbda2233.png)

1.2 Expose port 80 (generate a Service with a command)

kubectl expose deployment k8s-status-checke --port=80 --target-port=80 --type=NodePort

1.3 Confirm that the deployment is ready

kubectl get deployment

![image](https://i-blog.csdnimg.cn/blog_migrate/f2c3f6caba70f2c4bf72f9c0f01ca199.png)

1.4 Access the application

curl http://10.244.1.3

The result is as follows:

[root@k8s-master offline-install]# curl http://10.244.1.3

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>
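Because the Service was created with --type=NodePort, the application should also be reachable through a node's own IP on the port Kubernetes assigned. A sketch, assuming the Service carries the same name as the deployment (the default for kubectl expose); service_node_port is an illustrative helper name:

```shell
# Hypothetical helper: print the NodePort assigned to a Service.
service_node_port() {
    kubectl get service "$1" -o jsonpath='{.spec.ports[0].nodePort}'
}

# Usage (node IP taken from the server plan above):
# port=$(service_node_port k8s-status-checke)
# curl -I "http://10.10.10.113:${port}"
```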

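1.5 Delete the deployment (do this only after finishing the log and network checks below, which use its pod)

kubectl delete deployment k8s-status-checke

2. Query logs:

kubectl logs -f k8s-status-checke-5cb6756ddb-6r5qj

3. Verify that the cluster network works

3.1 Run the following command to get the application's address: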

kubectl get pods -o wide

[root@k8s-master1 ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

k8s-status-checke-5cb6756ddb-6r5qj 1/1 Running 0 8m25s 10.244.1.3 k8s-node1 <none> <none>
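kubectl create deployment labels its pods with app=<deployment name>, so the pod IP can also be read directly instead of being copied from the table. An illustrative helper (first_pod_ip is a made-up name, and it only looks at the first matching pod):

```shell
# Hypothetical helper: print the IP of the first pod that belongs to a
# deployment created with `kubectl create deployment NAME`.
first_pod_ip() {
    kubectl get pods -l "app=$1" -o jsonpath='{.items[0].status.podIP}'
}

# Usage:
# ip=$(first_pod_ip k8s-status-checke)
# ping -c 3 "$ip"
```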

3.2 Ping the application IP from any node

ping 10.244.1.3

[root@k8s-node1 etc]# ping 10.244.1.3

PING 10.244.1.3 (10.244.1.3) 56(84) bytes of data.

64 bytes from 10.244.1.3: icmp_seq=1 ttl=64 time=0.226 ms

64 bytes from 10.244.1.3: icmp_seq=2 ttl=64 time=0.105 ms

64 bytes from 10.244.1.3: icmp_seq=3 ttl=64 time=0.097 ms

64 bytes from 10.244.1.3: icmp_seq=4 ttl=64 time=0.106 ms

64 bytes from 10.244.1.3: icmp_seq=5 ttl=64 time=0.101 ms

64 bytes from 10.244.1.3: icmp_seq=6 ttl=64 time=0.082 ms

64 bytes from 10.244.1.3: icmp_seq=7 ttl=64 time=0.102 ms

3.3 Access the application over HTTP

curl -I 10.244.1.3

[root@k8s-master offline-install]# curl -I 10.244.1.3

HTTP/1.1 200 OK

Server: nginx/1.23.2

Date: Fri, 18 Nov 2022 02:56:00 GMT

Content-Type: text/html

Content-Length: 615

Last-Modified: Wed, 19 Oct 2022 07:56:21 GMT

Connection: keep-alive

ETag: "634fada5-267"

Accept-Ranges: bytes

3.4 Query logs

kubectl logs -f k8s-status-checke-5cb6756ddb-6r5qj

[root@k8s-master offline-install]# kubectl logs -f k8s-status-checke-5cb6756ddb-6r5qj

/docker-entrypoint.sh: /docker-entrypoint.d/ is not empty, will attempt to perform configuration

/docker-entrypoint.sh: Looking for shell scripts in /docker-entrypoint.d/

/docker-entrypoint.sh: Launching /docker-entrypoint.d/10-listen-on-ipv6-by-default.sh

10-listen-on-ipv6-by-default.sh: info: Getting the checksum of /etc/nginx/conf.d/default.conf

10-listen-on-ipv6-by-default.sh: info: Enabled listen on IPv6 in /etc/nginx/conf.d/default.conf

/docker-entrypoint.sh: Launching /docker-entrypoint.d/20-envsubst-on-templates.sh

/docker-entrypoint.sh: Launching /docker-entrypoint.d/30-tune-worker-processes.sh

/docker-entrypoint.sh: Configuration complete; ready for start up

2022/11/18 02:47:35 [notice] 1#1: using the "epoll" event method

2022/11/18 02:47:35 [notice] 1#1: nginx/1.23.2

2022/11/18 02:47:35 [notice] 1#1: built by gcc 10.2.1 20210110 (Debian 10.2.1-6)

2022/11/18 02:47:35 [notice] 1#1: OS: Linux 3.10.0-1160.el7.x86_64

2022/11/18 02:47:35 [notice] 1#1: getrlimit(RLIMIT_NOFILE): 1048576:1048576

2022/11/18 02:47:35 [notice] 1#1: start worker processes

2022/11/18 02:47:35 [notice] 1#1: start worker process 28

2022/11/18 02:47:35 [notice] 1#1: start worker process 29

2022/11/18 02:47:35 [notice] 1#1: start worker process 30

2022/11/18 02:47:35 [notice] 1#1: start worker process 31
