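Installing Kubernetes with a package manager

Quickly set up a k8s cluster using software packages

Software packages for Kubernetes are available on most operating system platforms. Installing from packages is one of the simplest installation methods, and it lets beginners bring up a working Kubernetes environment quickly.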

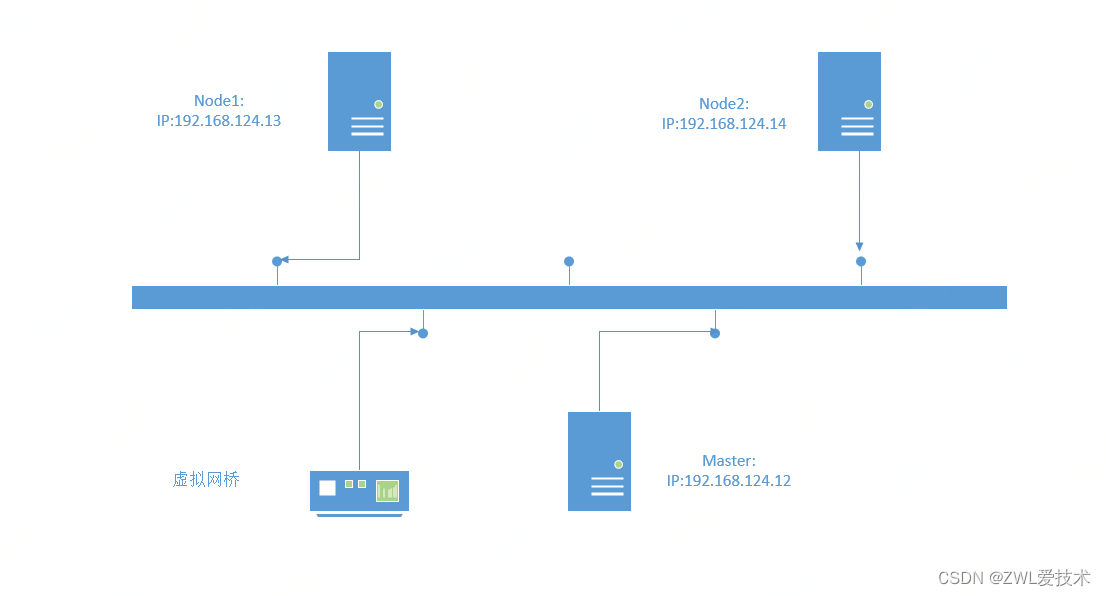

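1. Node planning

This deployment uses three hosts: one Master node (192.168.124.12) and two Node nodes (Node1 at 192.168.124.13 and Node2 at 192.168.124.14). The network topology is shown above.

The Master node has the kubernetes-master and etcd packages installed. The Node nodes have kubernetes-node, etcd, flannel, and docker. The kubernetes-master package contains kube-apiserver, kube-controller-manager, kube-scheduler, and their management tools. The kubernetes-node package contains kubelet and kube-proxy together with their management tools. Because etcd is also installed on both Node nodes, the three hosts form a single etcd cluster. flannel is the network component and docker is the container runtime.

2. Preparation before installation

Before installing the packages, configure and update the software environment on all nodes.

2.1. Disable SELinux

SELinux is the mandatory access control system provided by the Linux kernel since version 2.6. Under it, a process can only access the files its policy explicitly allows. SELinux strengthens Linux security considerably, but it interferes with some Kubernetes components, so it is disabled here. The command is: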

[root@localhost ~]# setenforce 0

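The command above only turns enforcement off temporarily (it switches SELinux to permissive mode); SELinux takes effect again after a reboot. To disable it permanently, modify the configuration file /etc/selinux/config: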

[root@localhost ~]# sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config
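The sed expression above only matches a file that currently says SELINUX=enforcing. As a minimal sketch, assuming GNU sed and a hypothetical helper name `disable_selinux_in`, the following variant rewrites the SELINUX= line whatever its current value (for example permissive) and leaves the SELINUXTYPE= line untouched:

```shell
# Hypothetical helper (not part of any package): set SELINUX=disabled in the
# given config file, regardless of the value currently configured.
disable_selinux_in() {
    # $1: path to an SELinux config file, e.g. /etc/selinux/config
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$1"
}

# Usage on a real host (requires root):
#   disable_selinux_in /etc/selinux/config
```

Anchoring the pattern at the start of the line keeps commented-out lines and SELINUXTYPE= unchanged.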

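2.2. Disable firewalld

firewalld is the firewall system adopted starting with CentOS 7, replacing the earlier iptables service. It lets users harden the system by closing or opening specific ports. firewalld interferes with Docker networking, so it must be disabled before deployment.

The commands are: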

[root@localhost selinux]# systemctl stop firewalld

[root@localhost selinux]# systemctl disable firewalld

2.3. Update software packages

Before installing and deploying Kubernetes, update the system so that all packages are at their latest versions:

[root@localhost selinux]# yum -y update

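2.4. Synchronize system time with NTP

The three machines must first be time-synchronized via NTP; otherwise later steps may report errors at run time. The commands are: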

[root@localhost selinux]# yum -y install ntp

[root@localhost selinux]# ntpdate -u cn.pool.ntp.org

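3. etcd cluster configuration

As described earlier, etcd is a highly available distributed key-value store, and Kubernetes uses it to store cluster data. To improve availability, etcd is deployed on all three machines here, forming a three-member cluster.

On the Master node, run: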

[root@localhost selinux]# yum -y install kubernetes-master etcd

Then edit the etcd configuration file /etc/etcd/etcd.conf so that it reads as follows:

[root@localhost etcd]# cat /etc/etcd/etcd.conf

#[Member]

#ETCD_CORS=""

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

#ETCD_WAL_DIR=""

ETCD_LISTEN_PEER_URLS="http://192.168.124.12:2380"

ETCD_LISTEN_CLIENT_URLS="http://192.168.124.12:2379,http://127.0.0.1:2379"

#ETCD_MAX_SNAPSHOTS="5"

#ETCD_MAX_WALS="5"

ETCD_NAME="etcd1"

#ETCD_SNAPSHOT_COUNT="100000"

#ETCD_HEARTBEAT_INTERVAL="100"

#ETCD_ELECTION_TIMEOUT="1000"

#ETCD_QUOTA_BACKEND_BYTES="0"

#ETCD_MAX_REQUEST_BYTES="1572864"

#ETCD_GRPC_KEEPALIVE_MIN_TIME="5s"

#ETCD_GRPC_KEEPALIVE_INTERVAL="2h0m0s"

#ETCD_GRPC_KEEPALIVE_TIMEOUT="20s"

#

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="http://192.168.124.12:2380"

ETCD_ADVERTISE_CLIENT_URLS="http://192.168.124.12:2379"

#ETCD_DISCOVERY=""

#ETCD_DISCOVERY_FALLBACK="proxy"

#ETCD_DISCOVERY_PROXY=""

#ETCD_DISCOVERY_SRV=""

ETCD_INITIAL_CLUSTER="etcd1=http://192.168.124.12:2380,etcd2=http://192.168.124.13:2380,etcd3=http://192.168.124.14:2380"

#ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

#ETCD_INITIAL_CLUSTER_STATE="new"

#ETCD_STRICT_RECONFIG_CHECK="true"

#ETCD_ENABLE_V2="true"

#

#[Proxy]

#ETCD_PROXY="off"

#ETCD_PROXY_FAILURE_WAIT="5000"

#ETCD_PROXY_REFRESH_INTERVAL="30000"

#ETCD_PROXY_DIAL_TIMEOUT="1000"

#ETCD_PROXY_WRITE_TIMEOUT="5000"

#ETCD_PROXY_READ_TIMEOUT="0"

#

#[Security]

#ETCD_CERT_FILE=""

#ETCD_KEY_FILE=""

#ETCD_CLIENT_CERT_AUTH="false"

#ETCD_TRUSTED_CA_FILE=""

#ETCD_AUTO_TLS="false"

#ETCD_PEER_CERT_FILE=""

#ETCD_PEER_KEY_FILE=""

#ETCD_PEER_CLIENT_CERT_AUTH="false"

#ETCD_PEER_TRUSTED_CA_FILE=""

#ETCD_PEER_AUTO_TLS="false"

#

#[Logging]

#ETCD_DEBUG="false"

#ETCD_LOG_PACKAGE_LEVELS=""

#ETCD_LOG_OUTPUT="default"

#

#[Unsafe]

#ETCD_FORCE_NEW_CLUSTER="false"

#

#[Version]

#ETCD_VERSION="false"

#ETCD_AUTO_COMPACTION_RETENTION="0"

#

#[Profiling]

#ETCD_ENABLE_PPROF="false"

#ETCD_METRICS="basic"

#

#[Auth]

#ETCD_AUTH_TOKEN="simple"

The options that need to be changed in the configuration file above are:

3.1 ETCD_LISTEN_PEER_URLS

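This option specifies the URLs on which the etcd member listens for traffic from the other etcd members. This peer traffic carries inter-member communication, leader election, and data synchronization. Each URL combines a scheme, an IP address, and a port, in the form

http://ip:port

or

https://ip:port

In this setup the node's IP address is 192.168.124.12 and the default peer port is 2380. Multiple URLs can be given, separated by commas.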

3.2 ETCD_LISTEN_CLIENT_URLS

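This option specifies the addresses on which etcd serves client requests, that is, the address of the etcd API. etcd clients reach the server through these URLs. The option uses the same scheme, IP, and port form, and the default port is 2379.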

3.3 ETCD_NAME

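Specifies the name of the etcd member, which identifies this member within the cluster. The name must match this member's entry in ETCD_INITIAL_CLUSTER.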

3.4 ETCD_INITIAL_ADVERTISE_PEER_URLS

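This option specifies this member's peer URLs as advertised to the other etcd members in the cluster. The other members use these addresses to exchange data with this member.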

3.5 ETCD_ADVERTISE_CLIENT_URLS

This option specifies this member's client URLs as advertised to the rest of the cluster.

3.6 ETCD_INITIAL_CLUSTER

This option lists the peer addresses of all etcd members in the cluster, as comma-separated name=URL pairs.
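Only the six options described above (plus ETCD_DATA_DIR) are uncommented in the configuration files in this article, and only the member name and IP address differ between nodes. As a sketch, the following hypothetical function `render_etcd_conf` prints those active lines for a given member; the function name is an assumption made for illustration, while the cluster list and ports are the ones used in this article:

```shell
# Hypothetical helper: print the active etcd.conf settings for one member.
# $1: member name (etcd1, etcd2 or etcd3), $2: this node's IP address
render_etcd_conf() {
    name="$1"
    ip="$2"
    # Same member list on every node, as in this article.
    cluster="etcd1=http://192.168.124.12:2380,etcd2=http://192.168.124.13:2380,etcd3=http://192.168.124.14:2380"
    cat <<EOF
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="http://${ip}:2380"
ETCD_LISTEN_CLIENT_URLS="http://${ip}:2379,http://127.0.0.1:2379"
ETCD_NAME="${name}"
ETCD_INITIAL_ADVERTISE_PEER_URLS="http://${ip}:2380"
ETCD_ADVERTISE_CLIENT_URLS="http://${ip}:2379"
ETCD_INITIAL_CLUSTER="${cluster}"
EOF
}

# Example: show what Node1 (etcd2) needs.
render_etcd_conf etcd2 192.168.124.13
```

The output is meant for comparison with the edited file rather than as a replacement for it, since the packaged file also carries the commented-out defaults.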

On Node1, run the following command to install the Kubernetes node components, etcd, flannel, and docker:

[root@localhost ~]# yum -y install kubernetes-node etcd flannel docker

After installation, edit the configuration file /etc/etcd/etcd.conf and change it as follows:

#[Member]

#ETCD_CORS=""

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

#ETCD_WAL_DIR=""

ETCD_LISTEN_PEER_URLS="http://192.168.124.13:2380"

ETCD_LISTEN_CLIENT_URLS="http://192.168.124.13:2379,http://127.0.0.1:2379"

#ETCD_MAX_SNAPSHOTS="5"

#ETCD_MAX_WALS="5"

ETCD_NAME="etcd2"

#ETCD_SNAPSHOT_COUNT="100000"

#ETCD_HEARTBEAT_INTERVAL="100"

#ETCD_ELECTION_TIMEOUT="1000"

#ETCD_QUOTA_BACKEND_BYTES="0"

#ETCD_MAX_REQUEST_BYTES="1572864"

#ETCD_GRPC_KEEPALIVE_MIN_TIME="5s"

#ETCD_GRPC_KEEPALIVE_INTERVAL="2h0m0s"

#ETCD_GRPC_KEEPALIVE_TIMEOUT="20s"

#

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="http://192.168.124.13:2380"

ETCD_ADVERTISE_CLIENT_URLS="http://192.168.124.13:2379"

#ETCD_DISCOVERY=""

#ETCD_DISCOVERY_FALLBACK="proxy"

#ETCD_DISCOVERY_PROXY=""

#ETCD_DISCOVERY_SRV=""

ETCD_INITIAL_CLUSTER="etcd1=http://192.168.124.12:2380,etcd2=http://192.168.124.13:2380,etcd3=http://192.168.124.14:2380"

#ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

#ETCD_INITIAL_CLUSTER_STATE="new"

#ETCD_STRICT_RECONFIG_CHECK="true"

#ETCD_ENABLE_V2="true"

#

#[Proxy]

#ETCD_PROXY="off"

#ETCD_PROXY_FAILURE_WAIT="5000"

#ETCD_PROXY_REFRESH_INTERVAL="30000"

#ETCD_PROXY_DIAL_TIMEOUT="1000"

#ETCD_PROXY_WRITE_TIMEOUT="5000"

#ETCD_PROXY_READ_TIMEOUT="0"

#

#[Security]

#ETCD_CERT_FILE=""

#ETCD_KEY_FILE=""

#ETCD_CLIENT_CERT_AUTH="false"

#ETCD_TRUSTED_CA_FILE=""

#ETCD_AUTO_TLS="false"

#ETCD_PEER_CERT_FILE=""

#ETCD_PEER_KEY_FILE=""

#ETCD_PEER_CLIENT_CERT_AUTH="false"

#ETCD_PEER_TRUSTED_CA_FILE=""

#ETCD_PEER_AUTO_TLS="false"

#

#[Logging]

#ETCD_DEBUG="false"

#ETCD_LOG_PACKAGE_LEVELS=""

#ETCD_LOG_OUTPUT="default"

#

#[Unsafe]

#ETCD_FORCE_NEW_CLUSTER="false"

#

#[Version]

#ETCD_VERSION="false"

#ETCD_AUTO_COMPACTION_RETENTION="0"

#

#[Profiling]

#ETCD_ENABLE_PPROF="false"

#ETCD_METRICS="basic"

#

#[Auth]

#ETCD_AUTH_TOKEN="simple"

On Node2, run the same command to install the same components, then edit /etc/etcd/etcd.conf as follows:

#[Member]

#ETCD_CORS=""

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

#ETCD_WAL_DIR=""

ETCD_LISTEN_PEER_URLS="http://192.168.124.14:2380"

ETCD_LISTEN_CLIENT_URLS="http://192.168.124.14:2379,http://127.0.0.1:2379"

#ETCD_MAX_SNAPSHOTS="5"

#ETCD_MAX_WALS="5"

ETCD_NAME="etcd3"

#ETCD_SNAPSHOT_COUNT="100000"

#ETCD_HEARTBEAT_INTERVAL="100"

#ETCD_ELECTION_TIMEOUT="1000"

#ETCD_QUOTA_BACKEND_BYTES="0"

#ETCD_MAX_REQUEST_BYTES="1572864"

#ETCD_GRPC_KEEPALIVE_MIN_TIME="5s"

#ETCD_GRPC_KEEPALIVE_INTERVAL="2h0m0s"

#ETCD_GRPC_KEEPALIVE_TIMEOUT="20s"

#

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="http://192.168.124.14:2380"

ETCD_ADVERTISE_CLIENT_URLS="http://192.168.124.14:2379"

#ETCD_DISCOVERY=""

#ETCD_DISCOVERY_FALLBACK="proxy"

#ETCD_DISCOVERY_PROXY=""

#ETCD_DISCOVERY_SRV=""

ETCD_INITIAL_CLUSTER="etcd1=http://192.168.124.12:2380,etcd2=http://192.168.124.13:2380,etcd3=http://192.168.124.14:2380"

#ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

#ETCD_INITIAL_CLUSTER_STATE="new"

#ETCD_STRICT_RECONFIG_CHECK="true"

#ETCD_ENABLE_V2="true"

#

#[Proxy]

#ETCD_PROXY="off"

#ETCD_PROXY_FAILURE_WAIT="5000"

#ETCD_PROXY_REFRESH_INTERVAL="30000"

#ETCD_PROXY_DIAL_TIMEOUT="1000"

#ETCD_PROXY_WRITE_TIMEOUT="5000"

#ETCD_PROXY_READ_TIMEOUT="0"

#

#[Security]

#ETCD_CERT_FILE=""

#ETCD_KEY_FILE=""

#ETCD_CLIENT_CERT_AUTH="false"

#ETCD_TRUSTED_CA_FILE=""

#ETCD_AUTO_TLS="false"

#ETCD_PEER_CERT_FILE=""

#ETCD_PEER_KEY_FILE=""

#ETCD_PEER_CLIENT_CERT_AUTH="false"

#ETCD_PEER_TRUSTED_CA_FILE=""

#ETCD_PEER_AUTO_TLS="false"

#

#[Logging]

#ETCD_DEBUG="false"

#ETCD_LOG_PACKAGE_LEVELS=""

#ETCD_LOG_OUTPUT="default"

#

#[Unsafe]

#ETCD_FORCE_NEW_CLUSTER="false"

#

#[Version]

#ETCD_VERSION="false"

#ETCD_AUTO_COMPACTION_RETENTION="0"

#

#[Profiling]

#ETCD_ENABLE_PPROF="false"

#ETCD_METRICS="basic"

#

#[Auth]

#ETCD_AUTH_TOKEN="simple"

Once configuration is complete, run the following commands on each of the three nodes to enable and start the etcd service:

[root@localhost ~]# systemctl enable etcd

Created symlink from /etc/systemd/system/multi-user.target.wants/etcd.service to /usr/lib/systemd/system/etcd.service.

[root@localhost ~]# systemctl start etcd

[root@localhost ~]# systemctl status etcd

● etcd.service - Etcd Server

Loaded: loaded (/usr/lib/systemd/system/etcd.service; enabled; vendor preset: disabled)

Active: active (running) since Tue 2023-03-21 05:32:41 CST; 43s ago

Main PID: 55890 (etcd)

Memory: 13.0M

CGroup: /system.slice/etcd.service

└─55890 /usr/bin/etcd --name=etcd3 --data-dir=/var/lib/etcd/default.etcd --listen-client-urls=http://192.168.124.14:2379,http://127.0.0.1:2379

Mar 21 05:32:43 localhost.localdomain etcd[55890]: updated the cluster version from 3.0 to 3.3

Mar 21 05:32:43 localhost.localdomain etcd[55890]: enabled capabilities for version 3.3

Mar 21 05:32:46 localhost.localdomain etcd[55890]: the clock difference against peer 724a73845f48dda is too high [7h59m57.028466127s > 1s] (prober "ROUND_TRIPPER_SNAPSHOT")

Mar 21 05:32:46 localhost.localdomain etcd[55890]: the clock difference against peer 724a73845f48dda is too high [7h59m57.028178071s > 1s] (prober "ROUND_TRIPPER_RAFT_MESSAGE")

Mar 21 05:32:46 localhost.localdomain etcd[55890]: the clock difference against peer 799668c7ae21b36b is too high [8h0m9.998876839s > 1s] (prober "ROUND_TRIPPER_SNAPSHOT")

Mar 21 05:32:46 localhost.localdomain etcd[55890]: the clock difference against peer 799668c7ae21b36b is too high [8h0m9.998737668s > 1s] (prober "ROUND_TRIPPER_RAFT_MESSAGE")

Mar 21 05:33:16 localhost.localdomain etcd[55890]: the clock difference against peer 799668c7ae21b36b is too high [8h0m9.998549295s > 1s] (prober "ROUND_TRIPPER_RAFT_MESSAGE")

Mar 21 05:33:16 localhost.localdomain etcd[55890]: the clock difference against peer 724a73845f48dda is too high [7h59m57.028037351s > 1s] (prober "ROUND_TRIPPER_SNAPSHOT")

Mar 21 05:33:16 localhost.localdomain etcd[55890]: the clock difference against peer 724a73845f48dda is too high [7h59m57.02760354s > 1s] (prober "ROUND_TRIPPER_RAFT_MESSAGE")

Mar 21 05:33:16 localhost.localdomain etcd[55890]: the clock difference against peer 799668c7ae21b36b is too high [8h0m9.998649751s > 1s] (prober "ROUND_TRIPPER_SNAPSHOT")
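The status output above contains repeated "clock difference against peer ... is too high" warnings, which suggests that the clocks of the three hosts were not yet synchronized when this output was captured (see the NTP step in section 2). As a small sketch, the hypothetical function below counts such warnings in log text read from standard input, so that the check can be repeated after running ntpdate again:

```shell
# Hypothetical helper: count etcd clock-difference warnings read from stdin.
# Prints 0 when there are none.
count_clock_warnings() {
    grep -c 'clock difference against peer' || true
}

# Usage on a node (reads the etcd unit's journal):
#   journalctl -u etcd --no-pager | count_clock_warnings
```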

etcd provides the etcdctl command, which can report the health of the etcd cluster:

[root@localhost ~]# etcdctl cluster-health

member 724a73845f48dda is healthy: got healthy result from http://192.168.124.13:2379

member 799668c7ae21b36b is healthy: got healthy result from http://192.168.124.12:2379

member b12ef3122d7a31df is healthy: got healthy result from http://192.168.124.14:2379

cluster is healthy
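For scripted checks, the output format shown above can be tested mechanically. The following sketch, with a hypothetical function name `etcd_cluster_ok`, succeeds only when the output contains the final "cluster is healthy" line and the expected number of healthy members. It assumes the output format of the etcdctl version used in this article:

```shell
# Hypothetical helper: read `etcdctl cluster-health` output from stdin and
# succeed only if the cluster and the expected number of members are healthy.
etcd_cluster_ok() {
    # $1: expected member count (3 in this article)
    awk -v want="$1" '
        /^member .* is healthy/ { healthy++ }
        /^cluster is healthy$/  { ok = 1 }
        END { exit (ok && healthy == want) ? 0 : 1 }
    '
}

# Usage:
#   etcdctl cluster-health | etcd_cluster_ok 3 && echo "etcd OK"
```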

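4. Master node configuration

Next, configure the Kubernetes Master node. As described earlier, the Master runs the main processes kube-apiserver, kube-controller-manager, and kube-scheduler. Their configuration files are all located in the /etc/kubernetes directory. The one that usually needs editing is the API server's, /etc/kubernetes/apiserver. Change its contents as follows: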

###

# kubernetes system config

#

# The following values are used to configure the kube-apiserver

#

# The address on the local server to listen to.

#KUBE_API_ADDRESS="--insecure-bind-address=127.0.0.1"

KUBE_API_ADDRESS="--address=0.0.0.0"

# The port on the local server to listen on.

KUBE_API_PORT="--port=8080"

# Port minions listen on

KUBELET_PORT="--kubelet-port=10250"

# Comma separated list of nodes in the etcd cluster

KUBE_ETCD_SERVERS="--etcd-servers=http://192.168.124.12:2379,http://192.168.124.13:2379,http://192.168.124.14:2379"

# Address range to use for services

KUBE_SERVICE_ADDRESSES="--service-cluster-ip-range=10.254.0.0/16"

# default admission control policies

KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,NamespaceExists,LimitRanger,SecurityContextDeny,ServiceAccount,ResourceQuota"

# Add your own!

KUBE_API_ARGS=""

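KUBE_API_ADDRESS sets the IP address the API server binds to; in this example it is changed to "--address=0.0.0.0", which binds all IP addresses of the host. KUBE_API_PORT sets the port the API server listens on. KUBELET_PORT is the port on which the kubelets listen. KUBE_ETCD_SERVERS lists the address of each member of the etcd cluster. KUBE_SERVICE_ADDRESSES sets the IP address range used for Kubernetes services. By default, KUBE_ADMISSION_CONTROL includes SecurityContextDeny and ServiceAccount; both relate to permissions and can be removed for testing.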
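If the two permission-related plugins are to be removed for testing, the list can be edited by hand or filtered. A minimal sketch, using a hypothetical function name `strip_admission_plugins`, that removes SecurityContextDeny and ServiceAccount from a comma-separated plugin list:

```shell
# Hypothetical helper: drop the two permission-related admission plugins
# from a comma-separated list and print the remaining plugins.
strip_admission_plugins() {
    # $1: comma-separated plugin list
    printf '%s\n' "$1" | tr ',' '\n' \
        | grep -vx -e 'SecurityContextDeny' -e 'ServiceAccount' \
        | paste -sd, -
}

strip_admission_plugins "NamespaceLifecycle,NamespaceExists,LimitRanger,SecurityContextDeny,ServiceAccount,ResourceQuota"
# prints: NamespaceLifecycle,NamespaceExists,LimitRanger,ResourceQuota
```

The result would then be placed after --admission-control= in KUBE_ADMISSION_CONTROL. This is suitable for a test cluster only, since it removes permission checks.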

Once configured, start the services on the Master node and enable them at boot with the following commands:

[root@localhost kubernetes]# systemctl start kube-apiserver

[root@localhost kubernetes]# systemctl start kube-controller-manager

[root@localhost kubernetes]# systemctl start kube-scheduler

[root@localhost ~]# systemctl enable kube-apiserver

[root@localhost ~]# systemctl enable kube-controller-manager

[root@localhost ~]# systemctl enable kube-scheduler
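The six commands above can also be written as a loop. The sketch below is one possible way to do that; the function name `start_master_services` and the `SYSTEMCTL` variable are assumptions introduced here so that the loop can be dry-run (for example with SYSTEMCTL=echo) before being run for real:

```shell
# Command used to manage services; override with SYSTEMCTL=echo for a dry run.
SYSTEMCTL="${SYSTEMCTL:-systemctl}"

# Hypothetical helper: enable and start the three Master services in order,
# stopping at the first failure.
start_master_services() {
    for svc in kube-apiserver kube-controller-manager kube-scheduler; do
        "$SYSTEMCTL" enable "$svc" && "$SYSTEMCTL" start "$svc" || return 1
    done
}

# Usage on the Master (requires root):
#   start_master_services
```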

All interfaces of the Kubernetes API server are RESTful. Users can open port 8080 of the Master node in a browser, and the API server returns the paths of the available APIs as a JSON object.
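The same check can be made from a terminal with curl instead of a browser. The sketch below only builds the URLs; the helper name `api_url` and the `MASTER_URL` variable are assumptions for illustration, and the address is the insecure port configured in this article:

```shell
# Base URL of the API server's insecure port as configured in this article.
MASTER_URL="${MASTER_URL:-http://192.168.124.12:8080}"

# Hypothetical helper: print the full URL for an API path such as /healthz.
api_url() {
    printf '%s/%s\n' "${MASTER_URL%/}" "${1#/}"
}

# Usage (requires curl and network access to the Master):
#   curl -s "$(api_url /)"          # JSON object listing the API paths
#   curl -s "$(api_url /healthz)"   # health check
#   curl -s "$(api_url /version)"   # build information as JSON
```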

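5. Node configuration

The Node nodes mainly run the kube-proxy and kubelet processes. The configuration files to edit are /etc/kubernetes/config, /etc/kubernetes/proxy, and /etc/kubernetes/kubelet, which are respectively the global Kubernetes configuration, the kube-proxy configuration, and the kubelet configuration. These files are nearly identical on all Node nodes; the main difference is each node's IP address. The following uses Node1 as the example.

First edit /etc/kubernetes/config, mainly the KUBE_MASTER option, which specifies the address of the API server: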

###

# kubernetes system config

#

# The following values are used to configure various aspects of all

# kubernetes services, including

#

# kube-apiserver.service

# kube-controller-manager.service

# kube-scheduler.service

# kubelet.service

# kube-proxy.service

# logging to stderr means we get it in the systemd journal

KUBE_LOGTOSTDERR="--logtostderr=true"

# journal message level, 0 is debug

KUBE_LOG_LEVEL="--v=0"

# Should this cluster be allowed to run privileged docker containers

KUBE_ALLOW_PRIV="--allow-privileged=false"

# How the controller-manager, scheduler, and proxy find the apiserver

KUBE_MASTER="--master=http://192.168.124.12:8080"

Then edit the /etc/kubernetes/kubelet file as follows:

[root@localhost ~]# cat /etc/kubernetes/kubelet

###

# kubernetes kubelet (minion) config

# The address for the info server to serve on (set to 0.0.0.0 or "" for all interfaces)

KUBELET_ADDRESS="--address=127.0.0.1"

# The port for the info server to serve on

KUBELET_PORT="--port=10250"

# You may leave this blank to use the actual hostname

KUBELET_HOSTNAME="--hostname-override=192.168.124.13"

# location of the api-server

KUBELET_API_SERVER="--api-servers=http://192.168.124.12:8080"

# pod infrastructure container

KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=registry.access.redhat.com/rhel7/pod-infrastructure:latest"

# Add your own!

KUBELET_ARGS=""

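Here, KUBELET_ADDRESS sets the IP address kubelet binds to; to bind all network interfaces of the host, set it to 0.0.0.0. KUBELET_PORT sets the port kubelet listens on. KUBELET_HOSTNAME sets the name of this node, which can be either a hostname or the node's IP address; in this example it is Node1's IP address, 192.168.124.13. KUBELET_API_SERVER specifies the address of the API server.

Finally, edit the /etc/kubernetes/proxy file so that it reads: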

[root@localhost ~]# cat /etc/kubernetes/proxy

###

# kubernetes proxy config

# default config should be adequate

# Add your own!

KUBE_PROXY_ARGS="--bind-address=0.0.0.0"

Once configured, run the following commands to enable and start the services:

[root@localhost ~]# systemctl enable kube-proxy

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-proxy.service to /usr/lib/systemd/system/kube-proxy.service.

[root@localhost ~]# systemctl enable kubelet

Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.

[root@localhost ~]# systemctl start kubelet

[root@localhost ~]# systemctl start kube-proxy

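Following the configuration above, copy the files to Node2. Only the KUBELET_HOSTNAME value in the kubelet configuration needs to be changed, to Node2's address (192.168.124.14).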
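The one-line change on Node2 can be made with sed. A minimal sketch, assuming GNU sed and a hypothetical helper name `set_kubelet_hostname`:

```shell
# Hypothetical helper: rewrite the KUBELET_HOSTNAME line of a kubelet config.
set_kubelet_hostname() {
    # $1: kubelet config file, $2: this node's IP address or hostname
    sed -i "s|^KUBELET_HOSTNAME=.*|KUBELET_HOSTNAME=\"--hostname-override=$2\"|" "$1"
}

# Usage on Node2 (requires root):
#   set_kubelet_hostname /etc/kubernetes/kubelet 192.168.124.14
```

After the change, kubelet has to be restarted on that node for the new value to take effect.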

Once configured, test from the Master whether the cluster is working:

[root@localhost ~]# kubectl get nodes

NAME STATUS AGE

192.168.124.13 Ready 5m

192.168.124.14 Ready 47s

If the command lists every Node and each has the status Ready, the cluster is working normally.
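The Ready check can be scripted against the column layout shown above (NAME, STATUS, AGE). The following sketch uses a hypothetical function name `all_nodes_ready` and assumes that STATUS is the second column, as in this article's output:

```shell
# Hypothetical helper: read `kubectl get nodes` output from stdin and succeed
# only if at least one node is listed and every node's STATUS is Ready.
all_nodes_ready() {
    awk '
        NR > 1 { nodes++; if ($2 != "Ready") bad++ }
        END    { exit (nodes > 0 && bad == 0) ? 0 : 1 }
    '
}

# Usage on the Master:
#   kubectl get nodes | all_nodes_ready && echo "all nodes Ready"
```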

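6. Network configuration

flannel is a network tool commonly used with Kubernetes to set up the layer 3 (network layer) fabric. It runs an agent called flanneld on every host in the cluster, which allocates a subnet to each host from a preconfigured address space. flannel stores the network configuration, the allocated subnets, and any auxiliary data either through the Kubernetes API or directly in etcd.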

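Before configuring flannel, set the address range to be handed out to the Docker networks. On the Master node, run the following command to create a key named /atomic.io/network/config in etcd; its value defines the network and the subnet range available to Docker containers. The command prints the stored value. (If etcdctl mk is run without a value argument, it appears to hang because it is waiting for the value on standard input; in that case, type the JSON by hand.)

[root@localhost ~]# etcdctl mk /atomic.io/network/config '{"Network":"172.17.0.0/16","SubnetMin":"172.17.1.0","SubnetMax":"172.17.254.0"}'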

{"Network":"172.17.0.0/16","SubnetMin":"172.17.1.0","SubnetMax":"172.17.254.0"}
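The SubnetMin and SubnetMax values bound the per-host subnets that flannel may hand out. As a worked example, and assuming each host receives a /24 (which matches the FLANNEL_SUBNET value shown later in this article), the number of available subnets can be computed from the third octet of the two bounds. The function name `flannel_subnet_count` is hypothetical:

```shell
# Hypothetical helper: count the /24 subnets between SubnetMin and SubnetMax,
# inclusive. Assumes both bounds are /24-aligned and lie in the same /16.
flannel_subnet_count() {
    # $1: SubnetMin, $2: SubnetMax
    min3=$(printf '%s' "$1" | cut -d. -f3)
    max3=$(printf '%s' "$2" | cut -d. -f3)
    echo $(( max3 - min3 + 1 ))
}

flannel_subnet_count 172.17.1.0 172.17.254.0   # prints 254
```

With two Node nodes, this range is far larger than needed, so the bounds mainly serve to keep 172.17.0.0/24 and 172.17.255.0/24 out of the pool.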

Then, on the Node nodes, edit the configuration file /etc/sysconfig/flanneld as follows:

[root@localhost ~]# cat /etc/sysconfig/flanneld

# Flanneld configuration options

# etcd url location. Point this to the server where etcd runs

FLANNEL_ETCD_ENDPOINTS="http://192.168.124.12:2379,http://192.168.124.13:2379,http://192.168.124.14:2379"

# etcd config key. This is the configuration key that flannel queries

# For address range assignment

FLANNEL_ETCD_PREFIX="/atomic.io/network"

# Any additional options that you want to pass

FLANNEL_OPTIONS="--iface=ens33"

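Here, FLANNEL_ETCD_ENDPOINTS lists the addresses of the etcd cluster members. FLANNEL_ETCD_PREFIX is the etcd key prefix that holds the network configuration; it must match the key created earlier, that is, /atomic.io/network/config without the trailing /config. The --iface flag in FLANNEL_OPTIONS selects the network interface flannel uses.

Once set, start flanneld on both Node1 and Node2 with the following commands: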

[root@localhost ~]# systemctl enable flanneld

Created symlink from /etc/systemd/system/multi-user.target.wants/flanneld.service to /usr/lib/systemd/system/flanneld.service.

Created symlink from /etc/systemd/system/docker.service.wants/flanneld.service to /usr/lib/systemd/system/flanneld.service.

[root@localhost ~]# systemctl start flanneld

Listing the network interfaces with the ip command now shows an additional interface named flannel0:

[root@localhost ~]# ip a |grep flannel

4: flannel0: <POINTOPOINT,MULTICAST,NOARP,UP,LOWER_UP> mtu 1472 qdisc pfifo_fast state UNKNOWN group default qlen 500

inet 172.17.37.0/16 scope global flannel0

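As the output shows, the virtual interface flannel0 has the address 172.17.37.0, taken from the 172.17.0.0/16 network specified earlier.

flannel also generates two configuration files, /run/flannel/subnet.env and /run/flannel/docker.

Their contents are: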

[root@localhost ~]# cat /run/flannel/subnet.env

FLANNEL_NETWORK=172.17.0.0/16

FLANNEL_SUBNET=172.17.37.1/24

FLANNEL_MTU=1472

FLANNEL_IPMASQ=false

[root@localhost ~]# cat /run/flannel/docker

DOCKER_OPT_BIP="--bip=172.17.37.1/24"

DOCKER_OPT_IPMASQ="--ip-masq=true"

DOCKER_OPT_MTU="--mtu=1472"

DOCKER_NETWORK_OPTIONS=" --bip=172.17.37.1/24 --ip-masq=true --mtu=1472"
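The two files are related: the Docker options are derived from the values in subnet.env. The sketch below reproduces that mapping for the values shown above. It is not flannel's own script; the function name `docker_opts_from_subnet_env` is hypothetical, and the inversion of the masquerade flag is inferred from the two files in this article (FLANNEL_IPMASQ=false alongside --ip-masq=true):

```shell
# Hypothetical helper: print Docker network options derived from a file in
# subnet.env format. Runs in a subshell so the sourced variables do not leak.
docker_opts_from_subnet_env() {
    # $1: path to the file, e.g. /run/flannel/subnet.env
    (
        . "$1"
        # Docker masquerades only when flannel does not, as in the files above.
        if [ "$FLANNEL_IPMASQ" = "true" ]; then masq=false; else masq=true; fi
        printf '%s\n' "--bip=$FLANNEL_SUBNET --ip-masq=$masq --mtu=$FLANNEL_MTU"
    )
}

# Usage on a Node:
#   docker_opts_from_subnet_env /run/flannel/subnet.env
```

Comparing this output with DOCKER_NETWORK_OPTIONS is a quick way to confirm that Docker will start its bridge inside the subnet flannel assigned to the host.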
