Kube-OVN default VPC

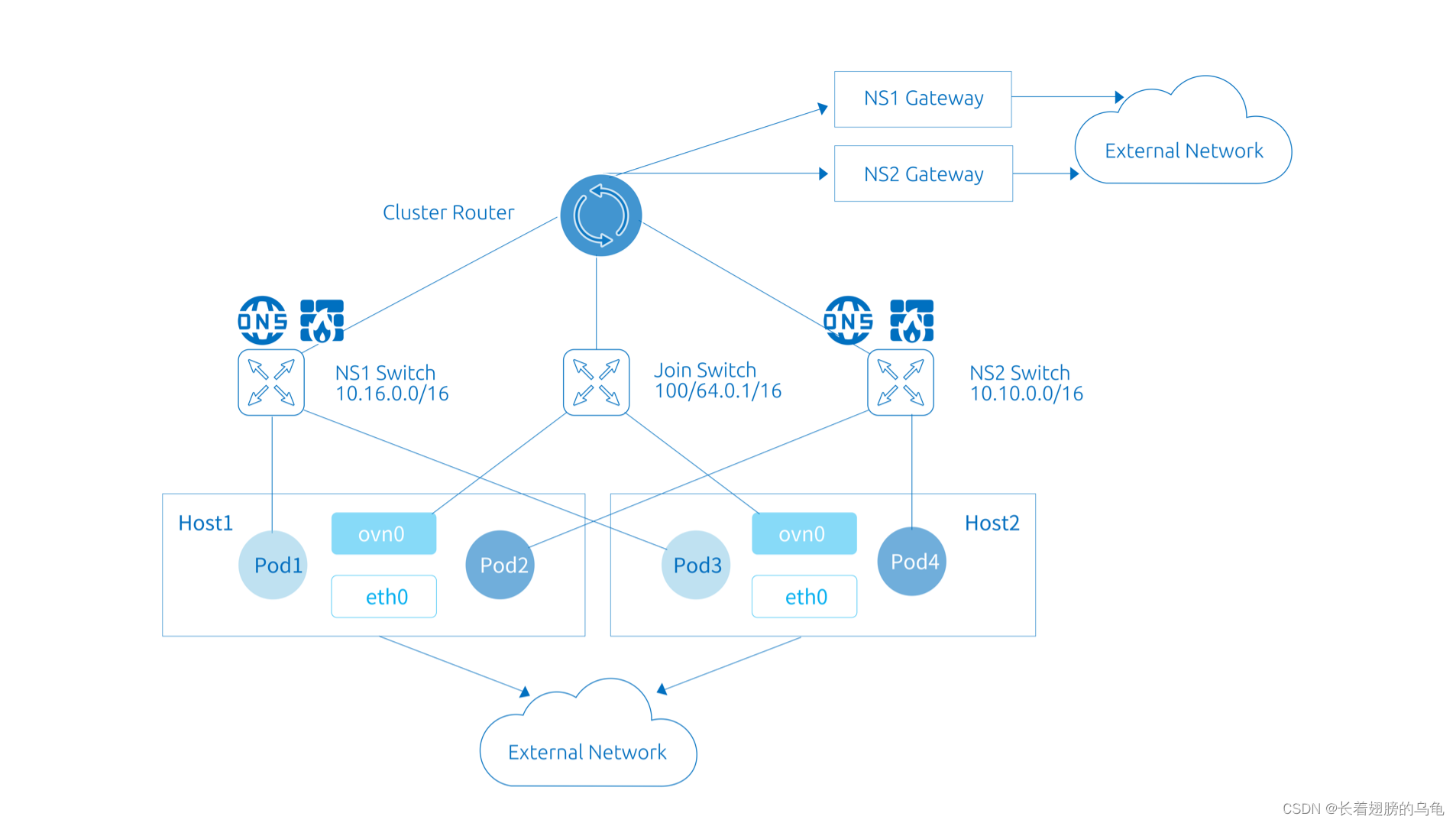


The figure above shows the topology of the Kube-OVN default VPC.

Default VPC

After installation, Kube-OVN comes with a default VPC named ovn-cluster, and it creates two subnets in this VPC: ovn-default and join.

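Default subnet

ovn-default is the default subnet of ovn-cluster: a pod that does not specify a subnet gets its IP from it. The CIDR of ovn-default is 10.16.0.0/16, which can be changed by modifying the install.sh script when installing Kube-OVN.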
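To make the selection rule concrete, here is a minimal Go sketch of how a pod's subnet could be chosen. It assumes that only the pod annotation `ovn.kubernetes.io/logical_switch` is consulted; real Kube-OVN also considers subnets bound to the pod's namespace, so `chooseSubnet` and `inDefaultCIDR` are hypothetical helpers, not Kube-OVN functions.

```go
package main

import (
	"fmt"
	"net/netip"
)

// logicalSwitchAnnotation is the pod annotation used to request a specific subnet.
const logicalSwitchAnnotation = "ovn.kubernetes.io/logical_switch"

// Install-time defaults described in the text.
const (
	defaultSubnet = "ovn-default"
	defaultCIDR   = "10.16.0.0/16"
)

// chooseSubnet is a simplified, hypothetical selection rule: use the
// annotation if present, otherwise fall back to ovn-default.
// (Namespace-bound subnets are deliberately ignored in this sketch.)
func chooseSubnet(podAnnotations map[string]string) string {
	if ls, ok := podAnnotations[logicalSwitchAnnotation]; ok && ls != "" {
		return ls
	}
	return defaultSubnet
}

// inDefaultCIDR reports whether ip falls inside the default subnet's CIDR.
func inDefaultCIDR(ip string) bool {
	addr, err := netip.ParseAddr(ip)
	if err != nil {
		return false
	}
	return netip.MustParsePrefix(defaultCIDR).Contains(addr)
}

func main() {
	fmt.Println(chooseSubnet(nil))
	fmt.Println(chooseSubnet(map[string]string{logicalSwitchAnnotation: "ls1"}))
	fmt.Println(inDefaultCIDR("10.16.0.5"), inDefaultCIDR("10.17.0.5"))
}
```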

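Join subnet

join is the subnet the default VPC uses to manage nodes. On every node of the Kubernetes cluster, Kube-OVN creates an ovn0 interface on br-int; ovn0 provides connectivity between pods in the default VPC and the nodes, and also acts as the gateway of the default VPC. The CIDR of the join subnet is 100.64.0.0/16, which can be changed by modifying install.sh.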

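Simulating the distributed gateway of the Kube-OVN default VPC by configuring OVN by hand

Physical environment

First, build the VPC network topology in OVN.

Steps: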

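- Create the logical router lr1
- Create the logical router port lr1-ls1 on lr1
- Create the logical router port lr1-ls2 on lr1
- Create the logical switch ls1
- Create the logical switch ls2
- Create the logical switch port ls1-lr1 on ls1 and connect it to lr1
- Create the logical switch port ls2-lr1 on ls2 and connect it to lr1
- Create two ports on ls1
- Create two ports on ls2
- On master, create vm1 and vm2 (attached to ls1) and connect them to br-int
- On node1, create vm1 and vm2 (attached to ls2) and connect them to br-int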
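Connecting a switch to the router follows the same five-command pattern every time. The hypothetical Go helper below only renders those `ovn-nbctl` commands for a given router, switch, MAC and CIDR, which makes the naming convention explicit (`lr1-ls1` on the router side, `ls1-lr1` on the switch side); it is an illustrative sketch, not part of Kube-OVN.

```go
package main

import (
	"fmt"
	"strings"
)

// connectSwitchToRouter returns the ovn-nbctl commands that attach logical
// switch ls to logical router lr through a router port with the given MAC/CIDR.
func connectSwitchToRouter(lr, ls, mac, cidr string) []string {
	lrp := lr + "-" + ls // router-side port, e.g. lr1-ls1
	lsp := ls + "-" + lr // switch-side port, e.g. ls1-lr1
	return []string{
		fmt.Sprintf("ovn-nbctl lrp-add %s %s %s %s", lr, lrp, mac, cidr),
		fmt.Sprintf("ovn-nbctl lsp-add %s %s", ls, lsp),
		// A "router" type switch port is the peer of a logical router port.
		fmt.Sprintf("ovn-nbctl lsp-set-type %s router", lsp),
		fmt.Sprintf("ovn-nbctl lsp-set-addresses %s %s", lsp, mac),
		fmt.Sprintf("ovn-nbctl lsp-set-options %s router-port=%s", lsp, lrp),
	}
}

func main() {
	cmds := []string{"ovn-nbctl lr-add lr1", "ovn-nbctl ls-add ls1", "ovn-nbctl ls-add ls2"}
	cmds = append(cmds, connectSwitchToRouter("lr1", "ls1", "00:00:00:00:00:01", "10.10.10.1/24")...)
	cmds = append(cmds, connectSwitchToRouter("lr1", "ls2", "00:00:00:00:00:02", "10.10.20.1/24")...)
	fmt.Println(strings.Join(cmds, "\n"))
}
```

The same helper would also produce the `lr1-join`/`join-lr1` pair used later for the join subnet.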

On master:

```shell
ovn-nbctl ls-add ls1
ovn-nbctl ls-add ls2
ovn-nbctl lr-add lr1
ovn-nbctl lrp-add lr1 lr1-ls1 00:00:00:00:00:01 10.10.10.1/24
ovn-nbctl lsp-add ls1 ls1-lr1
ovn-nbctl lsp-set-type ls1-lr1 router
ovn-nbctl lsp-set-addresses ls1-lr1 00:00:00:00:00:01
ovn-nbctl lsp-set-options ls1-lr1 router-port=lr1-ls1
ovn-nbctl lrp-add lr1 lr1-ls2 00:00:00:00:00:02 10.10.20.1/24
ovn-nbctl lsp-add ls2 ls2-lr1
ovn-nbctl lsp-set-type ls2-lr1 router
ovn-nbctl lsp-set-addresses ls2-lr1 00:00:00:00:00:02
ovn-nbctl lsp-set-options ls2-lr1 router-port=lr1-ls2
ovn-nbctl lsp-add ls1 ls1-vm1
ovn-nbctl lsp-set-addresses ls1-vm1 "00:00:00:00:00:03 10.10.10.2"
ovn-nbctl lsp-set-port-security ls1-vm1 "00:00:00:00:00:03 10.10.10.2"
ovn-nbctl lsp-add ls1 ls1-vm2
ovn-nbctl lsp-set-addresses ls1-vm2 "00:00:00:00:00:04 10.10.10.3"
ovn-nbctl lsp-set-port-security ls1-vm2 "00:00:00:00:00:04 10.10.10.3"
ovn-nbctl lsp-add ls2 ls2-vm1
ovn-nbctl lsp-set-addresses ls2-vm1 "00:00:00:00:00:03 10.10.20.2"
ovn-nbctl lsp-set-port-security ls2-vm1 "00:00:00:00:00:03 10.10.20.2"
ovn-nbctl lsp-add ls2 ls2-vm2
ovn-nbctl lsp-set-addresses ls2-vm2 "00:00:00:00:00:04 10.10.20.3"
ovn-nbctl lsp-set-port-security ls2-vm2 "00:00:00:00:00:04 10.10.20.3"

ip netns add vm1
ovs-vsctl add-port br-int vm1 -- set interface vm1 type=internal
ip link set vm1 netns vm1
ip netns exec vm1 ip link set vm1 address 00:00:00:00:00:03
ip netns exec vm1 ip addr add 10.10.10.2/24 dev vm1
ip netns exec vm1 ip link set vm1 up
ip netns exec vm1 ip route add default via 10.10.10.1 dev vm1
ovs-vsctl set Interface vm1 external_ids:iface-id=ls1-vm1

ip netns add vm2
ovs-vsctl add-port br-int vm2 -- set interface vm2 type=internal
ip link set vm2 netns vm2
ip netns exec vm2 ip link set vm2 address 00:00:00:00:00:04
ip netns exec vm2 ip addr add 10.10.10.3/24 dev vm2
ip netns exec vm2 ip link set vm2 up
ip netns exec vm2 ip route add default via 10.10.10.1 dev vm2
ovs-vsctl set Interface vm2 external_ids:iface-id=ls1-vm2
```
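Each simulated VM is a network namespace plus an OVS internal port, and `external_ids:iface-id` is what binds the OVS interface to its logical switch port. A hypothetical Go helper that renders the eight commands per VM might look like this. Note that the MAC and IP must match the values passed to `lsp-set-addresses` and `lsp-set-port-security`, otherwise port security drops the VM's traffic.

```go
package main

import (
	"fmt"
	"strings"
)

// vmAttachCommands renders the commands that simulate one VM: a netns, an OVS
// internal port moved into it, L2/L3 config, a default route to the logical
// router, and the iface-id binding to logical switch port lsp.
func vmAttachCommands(name, mac, cidr, gateway, lsp string) []string {
	inNS := "ip netns exec " + name + " "
	return []string{
		"ip netns add " + name,
		fmt.Sprintf("ovs-vsctl add-port br-int %s -- set interface %s type=internal", name, name),
		fmt.Sprintf("ip link set %s netns %s", name, name),
		inNS + fmt.Sprintf("ip link set %s address %s", name, mac),
		inNS + fmt.Sprintf("ip addr add %s dev %s", cidr, name),
		inNS + fmt.Sprintf("ip link set %s up", name),
		inNS + fmt.Sprintf("ip route add default via %s dev %s", gateway, name),
		fmt.Sprintf("ovs-vsctl set Interface %s external_ids:iface-id=%s", name, lsp),
	}
}

func main() {
	cmds := vmAttachCommands("vm1", "00:00:00:00:00:03", "10.10.10.2/24", "10.10.10.1", "ls1-vm1")
	fmt.Println(strings.Join(cmds, "\n"))
}
```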

Cleaning up on master:

```shell
ovs-vsctl del-port br-int vm1
ovs-vsctl del-port br-int vm2
ip netns del vm1
ip netns del vm2
ovn-nbctl ls-del ls1
ovn-nbctl ls-del ls2
ovn-nbctl lr-del lr1
```

On node1:

```shell
ip netns add vm1
ovs-vsctl add-port br-int vm1 -- set interface vm1 type=internal
ip link set vm1 netns vm1
ip netns exec vm1 ip link set vm1 address 00:00:00:00:00:03
ip netns exec vm1 ip addr add 10.10.20.2/24 dev vm1
ip netns exec vm1 ip link set vm1 up
ip netns exec vm1 ip route add default via 10.10.20.1 dev vm1
ovs-vsctl set Interface vm1 external_ids:iface-id=ls2-vm1

ip netns add vm2
ovs-vsctl add-port br-int vm2 -- set interface vm2 type=internal
ip link set vm2 netns vm2
ip netns exec vm2 ip link set vm2 address 00:00:00:00:00:04
ip netns exec vm2 ip addr add 10.10.20.3/24 dev vm2
ip netns exec vm2 ip link set vm2 up
ip netns exec vm2 ip route add default via 10.10.20.1 dev vm2
ovs-vsctl set Interface vm2 external_ids:iface-id=ls2-vm2
```

Cleaning up on node1:

```shell
ovs-vsctl del-port br-int vm1
ovs-vsctl del-port br-int vm2
ip netns del vm1
ip netns del vm2
```

Creating the join subnet

On master:

```shell
# Create the logical switch join
ovn-nbctl ls-add join
# Connect it to the logical router lr1
ovn-nbctl lrp-add lr1 lr1-join 04:ac:10:ff:35:02 10.10.30.1/24
ovn-nbctl lsp-add join join-lr1
ovn-nbctl lsp-set-type join-lr1 router
ovn-nbctl lsp-set-addresses join-lr1 04:ac:10:ff:35:02
ovn-nbctl lsp-set-options join-lr1 router-port=lr1-join
# One logical switch port per node, standing for that node's ovn0
ovn-nbctl lsp-add join master
ovn-nbctl lsp-set-addresses master "04:ac:10:ff:35:04 10.10.30.2"
ovn-nbctl lsp-add join node1
ovn-nbctl lsp-set-addresses node1 "04:ac:10:ff:35:05 10.10.30.3"
# Create the ovn0 interface on master
ovs-vsctl add-port br-int ovn0 -- set Interface ovn0 type=internal
ovs-vsctl set Interface ovn0 external_ids:iface-id=master
ifconfig ovn0 10.10.30.2 netmask 255.255.255.0
ifconfig ovn0 hw ether 04:ac:10:ff:35:04
```

On node1:

```shell
# Create the ovn0 interface on node1
ovs-vsctl add-port br-int ovn0 -- set Interface ovn0 type=internal
ovs-vsctl set Interface ovn0 external_ids:iface-id=node1
ifconfig ovn0 10.10.30.3 netmask 255.255.255.0
ifconfig ovn0 hw ether 04:ac:10:ff:35:05
```

Adding the default OVN static route

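On master:

```shell
# Once this route is added, the VMs can ping the hosts: lr1 sends traffic
# through the specified next hop on its join-side port
ovn-nbctl lr-route-add lr1 0.0.0.0/0 10.10.30.1
```

This adds a route entry to the logical router of the default VPC that sets the next hop of all packets to the join subnet's router port (10.10.30.1).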

Adding host routes and SNAT rules on the nodes

On master:

```shell
# Steer traffic for the OVN subnets into ovn0
route add -net 10.10.10.0/24 gw 10.10.30.1
route add -net 10.10.20.0/24 gw 10.10.30.1
# SNAT (masquerade) traffic leaving through the uplink ens37
iptables -t nat -A POSTROUTING -s 10.10.10.0/24 -o ens37 -j MASQUERADE
iptables -t nat -A POSTROUTING -s 10.10.20.0/24 -o ens37 -j MASQUERADE
```

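On node1:

```shell
route add -net 10.10.10.0/24 gw 10.10.30.1
route add -net 10.10.20.0/24 gw 10.10.30.1
iptables -t nat -A POSTROUTING -s 10.10.10.0/24 -o ens37 -j MASQUERADE
iptables -t nat -A POSTROUTING -s 10.10.20.0/24 -o ens37 -j MASQUERADE
```

Routes and SNAT rules are added on both master and node1. The routes steer packets from outside that are destined for the OVN networks into the join subnet. The SNAT rules translate packets that leave OVN for the outside world; without SNAT the replies would never come back, because external hosts have no route back to 10.10.10.0/24 and 10.10.20.0/24.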
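Why the reply is lost without SNAT can be seen in a toy model of MASQUERADE with connection tracking. This Go sketch is a deliberate simplification (no port rewriting, no timeouts) and is not how iptables is implemented; it assumes that master's uplink `ens37` holds 192.168.100.100, the master address that appears in the policy routes later in this article.

```go
package main

import "fmt"

// flow identifies one direction of a TCP/UDP conversation.
type flow struct {
	srcIP, dstIP     string
	srcPort, dstPort int
}

// masq models MASQUERADE plus conntrack: outbound packets get the host IP as
// source, and the expected reply tuple is remembered for reverse translation.
type masq struct {
	hostIP string
	table  map[flow]flow // reply as seen on the wire -> reply as delivered to the VM
}

func newMasq(hostIP string) *masq { return &masq{hostIP: hostIP, table: map[flow]flow{}} }

// outbound rewrites the source address of a packet leaving via the uplink.
func (m *masq) outbound(f flow) flow {
	reply := flow{f.dstIP, m.hostIP, f.dstPort, f.srcPort}
	m.table[reply] = flow{f.dstIP, f.srcIP, f.dstPort, f.srcPort}
	return flow{m.hostIP, f.dstIP, f.srcPort, f.dstPort}
}

// inbound maps a reply back to the VM; false means no matching connection.
func (m *masq) inbound(f flow) (flow, bool) {
	orig, ok := m.table[f]
	return orig, ok
}

func main() {
	m := newMasq("192.168.100.100")
	fmt.Println("on the wire:", m.outbound(flow{"10.10.10.2", "8.8.8.8", 40000, 53}))
	back, ok := m.inbound(flow{"8.8.8.8", "192.168.100.100", 53, 40000})
	fmt.Println("delivered to VM:", back, ok)
}
```

Without the rewrite, the external host would address its reply to 10.10.10.2, which it has no route to.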

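Adding port groups and OVN policy routes

Each port group contains the ports allocated on one node, which is what makes the gateway distributed.

```shell
# Traffic to the VPC subnets and the join subnet is routed normally
ovn-nbctl lr-policy-add lr1 31000 ip4.dst==10.10.10.0/24 allow
ovn-nbctl lr-policy-add lr1 31000 ip4.dst==10.10.20.0/24 allow
ovn-nbctl lr-policy-add lr1 31000 ip4.dst==10.10.30.0/24 allow
# Reroute traffic destined for master and node1 to their ovn0
ovn-nbctl lr-policy-add lr1 30000 ip4.dst==192.168.100.100 reroute 10.10.30.2
ovn-nbctl lr-policy-add lr1 30000 ip4.dst==192.168.100.200 reroute 10.10.30.3
# Reroute traffic according to the port group its source belongs to
ovn-nbctl lr-policy-add lr1 29000 "ip4.src == \$ls1.master_ip4" reroute 10.10.30.2
ovn-nbctl lr-policy-add lr1 29000 "ip4.src == \$ls1.node1_ip4" reroute 10.10.30.3
ovn-nbctl lr-policy-add lr1 29000 "ip4.src == \$ls2.master_ip4" reroute 10.10.30.2
ovn-nbctl lr-policy-add lr1 29000 "ip4.src == \$ls2.node1_ip4" reroute 10.10.30.3
# Create port group ls1.master containing ls1-vm2 and ls1-vm1
ovn-nbctl pg-add ls1.master ls1-vm2 ls1-vm1
# Create port group ls2.node1 containing ls2-vm2 and ls2-vm1
ovn-nbctl pg-add ls2.node1 ls2-vm2 ls2-vm1
```

Kube-OVN creates port groups named after the subnet and the node. Our topology has two nodes, master and node1, and two logical switches, ls1 and ls2, so four port groups would exist in OVN: ls1.master, ls2.master, ls1.node1 and ls2.node1 (in this demo only ls1.master and ls2.node1 have members, so only those two are created). A port created for a given node and subnet is added to the matching port group. For every port group OVN automatically maintains an address set named after it with the suffix `_ip4` (e.g. `$ls1.master_ip4`) holding the IPv4 addresses of its ports, so the policy routes can match a packet's source IP against that set to decide which node's ovn0 the packet is sent to. This is how the distributed gateway is implemented.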
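The decision lr1 makes can be modeled as first-match evaluation in descending priority order. The Go sketch below is a toy model under that assumption: it supports only the three kinds of matches used here (destination CIDR, destination IP, source in a port group's `_ip4` address set) and hard-codes the port group membership of this demo.

```go
package main

import (
	"fmt"
	"net/netip"
	"sort"
)

// policy is a simplified logical router policy; empty conditions are ignored.
type policy struct {
	priority int
	dstNet   string // CIDR matched against ip4.dst
	dstIP    string // exact ip4.dst
	srcGroup string // port group whose _ip4 address set is matched against ip4.src
	action   string // "allow" or "reroute"
	nextHop  string
}

// decide tries policies from highest to lowest priority; the first match wins.
// "route" means no policy matched and normal routing applies.
func decide(policies []policy, groups map[string][]string, src, dst string) (string, string) {
	sorted := append([]policy(nil), policies...)
	sort.SliceStable(sorted, func(i, j int) bool { return sorted[i].priority > sorted[j].priority })
	d := netip.MustParseAddr(dst)
	for _, p := range sorted {
		if p.dstNet != "" && !netip.MustParsePrefix(p.dstNet).Contains(d) {
			continue
		}
		if p.dstIP != "" && p.dstIP != dst {
			continue
		}
		if p.srcGroup != "" && !contains(groups[p.srcGroup], src) {
			continue
		}
		return p.action, p.nextHop
	}
	return "route", ""
}

func contains(list []string, s string) bool {
	for _, v := range list {
		if v == s {
			return true
		}
	}
	return false
}

func main() {
	policies := []policy{
		{priority: 31000, dstNet: "10.10.10.0/24", action: "allow"},
		{priority: 31000, dstNet: "10.10.20.0/24", action: "allow"},
		{priority: 31000, dstNet: "10.10.30.0/24", action: "allow"},
		{priority: 30000, dstIP: "192.168.100.100", action: "reroute", nextHop: "10.10.30.2"},
		{priority: 30000, dstIP: "192.168.100.200", action: "reroute", nextHop: "10.10.30.3"},
		{priority: 29000, srcGroup: "ls1.master", action: "reroute", nextHop: "10.10.30.2"},
		{priority: 29000, srcGroup: "ls2.node1", action: "reroute", nextHop: "10.10.30.3"},
	}
	// IPv4 addresses OVN would place in each port group's _ip4 address set.
	groups := map[string][]string{
		"ls1.master": {"10.10.10.2", "10.10.10.3"},
		"ls2.node1":  {"10.10.20.2", "10.10.20.3"},
	}
	fmt.Println(decide(policies, groups, "10.10.10.2", "10.10.20.3"))      // east-west: allowed
	fmt.Println(decide(policies, groups, "10.10.20.2", "192.168.100.100")) // to master's IP: master's ovn0
	fmt.Println(decide(policies, groups, "10.10.20.2", "8.8.8.8"))         // external: the VM's own node
}
```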

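Source code analysis 1

The following is the flow for initializing and building the default VPC topology.

The kube-ovn-controller performs initialization at startup. The main steps are:

c.InitOVN()

This function creates the default VPC and the join and ovn-default subnets in it: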

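- Create the OVN logical router of the VPC
- Create the join subnet of the default VPC, which assigns one IP to each node of the Kubernetes cluster
- Create the ovn-default subnet of the default VPC, from which pods that do not specify a subnet get their IPs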

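c.InitDefaultVpc()

This function updates the status of the default VPC.

c.InitIPAM()

This function initializes the IPAM module of kube-ovn-controller: it records all defined subnets and all IP addresses already in use, so that IPs can later be allocated to new pods without conflicts.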

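- Iterate over Subnet resources and store the subnet information in IPAM
- Iterate over IPPool resources and store the IP pool information in IPAM
- Iterate over IPs objects and record the IPs in use in IPAM (an IPs object is created after an IP is allocated to a pod)
- Iterate over pods and record the IPs already allocated to them in IPAM
- Iterate over VIP objects and record their IPs in IPAM
- Iterate over EIP objects and record their IPs in IPAM
- Iterate over nodes and record their IPs in IPAM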
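All existing objects are replayed into IPAM so that no address is handed out twice after a controller restart. A toy Go allocator (a lowest-free-address strategy assumed for illustration; Kube-OVN's real IPAM is more elaborate) shows the effect on the join subnet. `subnetIPAM`, `markUsed` and `allocate` are hypothetical names.

```go
package main

import (
	"errors"
	"fmt"
	"net/netip"
)

// subnetIPAM tracks used addresses of one IPv4 subnet.
type subnetIPAM struct {
	prefix  netip.Prefix
	gateway netip.Addr
	used    map[netip.Addr]string // address -> owner (pod, node, vip, eip, ...)
}

func newSubnetIPAM(cidr, gateway string) *subnetIPAM {
	return &subnetIPAM{
		prefix:  netip.MustParsePrefix(cidr).Masked(),
		gateway: netip.MustParseAddr(gateway),
		used:    map[netip.Addr]string{},
	}
}

// markUsed records an address that is already allocated (the replay step).
func (s *subnetIPAM) markUsed(ip, owner string) {
	s.used[netip.MustParseAddr(ip)] = owner
}

// allocate returns the lowest free address, skipping the network address,
// the gateway, used addresses and the broadcast address.
func (s *subnetIPAM) allocate(owner string) (netip.Addr, error) {
	// The loop stops before the last address of the prefix (broadcast).
	for a := s.prefix.Addr().Next(); s.prefix.Contains(a.Next()); a = a.Next() {
		if a == s.gateway {
			continue
		}
		if _, taken := s.used[a]; taken {
			continue
		}
		s.used[a] = owner
		return a, nil
	}
	return netip.Addr{}, errors.New("subnet exhausted")
}

func main() {
	join := newSubnetIPAM("100.64.0.0/16", "100.64.0.1")
	join.markUsed("100.64.0.2", "node-node1") // replayed from an existing node
	ip, _ := join.allocate("node-master")
	fmt.Println(ip)
}
```

Without the `markUsed` replay, the allocation would return 100.64.0.2 and collide with the address already held by node1.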

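initNodeRoutes()

This function initializes OVN policy routes for traffic from pods to the cluster nodes.

In my Kubernetes cluster it added the following two policies, where 192.168.40.199 and 192.168.40.201 are the IPs of the master and node, and 100.64.0.3 and 100.64.0.2 are the IPs of ovn0 on the master and node respectively:

```
30000 ip4.dst == 192.168.40.199 reroute 100.64.0.3
30000 ip4.dst == 192.168.40.201 reroute 100.64.0.2
```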

- Get all nodes, along with each node's IP and the IP of its ovn0
- Call migrateNodeRoute to add the OVN policy routes
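The policies added here follow a fixed shape. A hypothetical Go helper that renders them with the match format used in the source (`ip%d.dst == %s`) could look like this; the `nodeInfo` type and the hard-coded priority 30000 are assumptions taken from the example policies above.

```go
package main

import (
	"fmt"
	"net/netip"
)

// nodeInfo pairs a node's InternalIP with the IP of its ovn0 on the join subnet.
type nodeInfo struct {
	name       string
	internalIP string
	ovn0IP     string
}

// nodeRoutePolicies renders one priority-30000 reroute policy per node:
// traffic destined for the node's InternalIP goes to that node's ovn0.
func nodeRoutePolicies(nodes []nodeInfo) []string {
	var out []string
	for _, n := range nodes {
		af := 4
		if netip.MustParseAddr(n.internalIP).Is6() {
			af = 6
		}
		out = append(out, fmt.Sprintf("30000 ip%d.dst == %s reroute %s", af, n.internalIP, n.ovn0IP))
	}
	return out
}

func main() {
	for _, p := range nodeRoutePolicies([]nodeInfo{
		{"master", "192.168.40.199", "100.64.0.3"},
		{"node", "192.168.40.201", "100.64.0.2"},
	}) {
		fmt.Println(p)
	}
}
```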

c.addNodeGwStaticRoute()

This function adds static routes to the logical router of the default VPC:

- Add the static route `0.0.0.0/0 100.64.0.1 dst-ip` to the logical router of the default VPC

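Source code analysis 2

At startup, the kube-ovn-controller receives node add events through the Kubernetes list-watch mechanism and runs the node add handler. The node add flow is: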

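- Allocate an IP and a MAC address from the join subnet for the new node
- Add a port on the join logical switch that represents the new node's ovn0
- Iterate over all subnets and create a port group named `<subnet>.<node>` for each; OVN derives the address set `<subnet>.<node>_ip4` from it
- Add a policy route, e.g. `29000 ip4.src == $ovn.default.master_ip4 reroute 100.64.0.2`
- The two steps above implement the distributed gateway of the default VPC; SNAT is still required afterwards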
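The port group name and its policy can be derived from the subnet and node names. The sketch below assumes the rule `<subnet>.<node>` with every `-` replaced by `.`, which is consistent with the `$ovn.default.master_ip4` example and with the `strings.ReplaceAll` call used for node port groups in handleAddNode (OVN address set names cannot contain `-`). `portGroupName` and `distributedGatewayPolicy` are hypothetical helpers, not Kube-OVN functions.

```go
package main

import (
	"fmt"
	"strings"
)

// portGroupName builds "<subnet>.<node>" and replaces '-' with '.'.
func portGroupName(subnet, node string) string {
	return strings.ReplaceAll(subnet+"."+node, "-", ".")
}

// distributedGatewayPolicy renders the priority-29000 policy that reroutes
// traffic from the subnet's pods on a node to that node's ovn0 IP. The
// "_ip4" suffix refers to the IPv4 address set OVN derives from the port group.
func distributedGatewayPolicy(subnet, node, ovn0IP string) string {
	return fmt.Sprintf("29000 ip4.src == $%s_ip4 reroute %s", portGroupName(subnet, node), ovn0IP)
}

func main() {
	fmt.Println(distributedGatewayPolicy("ovn-default", "master", "100.64.0.2"))
}
```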

```go
func (c *Controller) handleAddNode(key string) error {
	c.nodeKeyMutex.LockKey(key)
	defer func() { _ = c.nodeKeyMutex.UnlockKey(key) }()

	cachedNode, err := c.nodesLister.Get(key)
	if err != nil {
		if k8serrors.IsNotFound(err) {
			return nil
		}
		return err
	}
	node := cachedNode.DeepCopy()
	klog.Infof("handle add node %s", node.Name)

	subnets, err := c.subnetsLister.List(labels.Everything())
	if err != nil {
		klog.Errorf("failed to list subnets: %v", err)
		return err
	}

	// Get the node's InternalIP, i.e. the address Kubernetes uses to reach the node
	nodeIPv4, nodeIPv6 := util.GetNodeInternalIP(*node)
	// Check whether the node IP falls inside the CIDR of a default-VPC subnet;
	// on conflict, emit a warning event
	for _, subnet := range subnets {
		// Only subnets of the default VPC matter here
		if subnet.Spec.Vpc != c.config.ClusterRouter {
			continue
		}
		v4, v6 := util.SplitStringIP(subnet.Spec.CIDRBlock)
		if subnet.Spec.Vlan == "" && (util.CIDRContainIP(v4, nodeIPv4) || util.CIDRContainIP(v6, nodeIPv6)) {
			msg := fmt.Sprintf("internal IP address of node %s is in CIDR of subnet %s, this may result in network issues", node.Name, subnet.Name)
			klog.Warning(msg)
			c.recorder.Eventf(&v1.Node{ObjectMeta: metav1.ObjectMeta{Name: node.Name, UID: types.UID(node.Name)}}, v1.EventTypeWarning, "NodeAddressConflictWithSubnet", msg)
			break
		}
	}

	// Provider networks (used by underlay/VLAN subnets); not analyzed in this article
	if err = c.handleNodeAnnotationsForProviderNetworks(node); err != nil {
		klog.Errorf("failed to handle annotations of node %s for provider networks: %v", node.Name, err)
		return err
	}

	// Get the join subnet
	subnet, err := c.subnetsLister.Get(c.config.NodeSwitch)
	if err != nil {
		klog.Errorf("failed to get node subnet: %v", err)
		return err
	}

	// Obtain the IP and MAC of the node's ovn0: reuse the addresses recorded in the
	// node annotations if already allocated, otherwise allocate new ones from join
	var v4IP, v6IP, mac string
	portName := fmt.Sprintf("node-%s", key)
	if node.Annotations[util.AllocatedAnnotation] == "true" && node.Annotations[util.IPAddressAnnotation] != "" && node.Annotations[util.MacAddressAnnotation] != "" {
		macStr := node.Annotations[util.MacAddressAnnotation]
		v4IP, v6IP, mac, err = c.ipam.GetStaticAddress(portName, portName, node.Annotations[util.IPAddressAnnotation],
			&macStr, node.Annotations[util.LogicalSwitchAnnotation], true)
		if err != nil {
			klog.Errorf("failed to alloc static ip addrs for node %v: %v", node.Name, err)
			return err
		}
	} else {
		v4IP, v6IP, mac, err = c.ipam.GetRandomAddress(portName, portName, nil, c.config.NodeSwitch, "", nil, true)
		if err != nil {
			klog.Errorf("failed to alloc random ip addrs for node %v: %v", node.Name, err)
			return err
		}
	}

	ipStr := util.GetStringIP(v4IP, v6IP)
	// Create the node's logical switch port on the join logical switch
	if err := c.OVNNbClient.CreateBareLogicalSwitchPort(c.config.NodeSwitch, portName, ipStr, mac); err != nil {
		return err
	}

	// Add policy routes; ip is the node's address on the join subnet (its ovn0 IP)
	for _, ip := range strings.Split(ipStr, ",") {
		if ip == "" {
			continue
		}

		nodeIP, af := nodeIPv4, 4
		if util.CheckProtocol(ip) == kubeovnv1.ProtocolIPv6 {
			nodeIP, af = nodeIPv6, 6
		}
		// Produces a policy such as: 30000 ip4.dst == 192.168.40.199 reroute 100.64.0.3
		if nodeIP != "" {
			// Traffic destined for the node's InternalIP is rerouted to the node's ovn0
			var (
				match       = fmt.Sprintf("ip%d.dst == %s", af, nodeIP)
				action      = kubeovnv1.PolicyRouteActionReroute
				externalIDs = map[string]string{
					"vendor":         util.CniTypeName,
					"node":           node.Name,
					"address-family": strconv.Itoa(af),
				}
			)
			klog.Infof("add policy route for router: %s, match %s, action %s, nexthop %s, externalID %v", c.config.ClusterRouter, match, action, ip, externalIDs)
			if err = c.addPolicyRouteToVpc(
				c.config.ClusterRouter,
				// Match: destination is the node's InternalIP; action: reroute;
				// next hop: the IP of ovn0 on that node
				&kubeovnv1.PolicyRoute{
					Priority:  util.NodeRouterPolicyPriority,
					Match:     match,
					Action:    action,
					NextHopIP: ip,
				},
				externalIDs,
			); err != nil {
				klog.Errorf("failed to add logical router policy for node %s: %v", node.Name, err)
				return err
			}

			if err = c.deletePolicyRouteForLocalDNSCacheOnNode(node.Name, af); err != nil {
				return err
			}
			if c.config.NodeLocalDNSIP != "" {
				if err = c.addPolicyRouteForLocalDNSCacheOnNode(portName, ip, node.Name, af); err != nil {
					return err
				}
			}
		}
	}

	// Add the static route 0.0.0.0/0 whose next hop is the join subnet gateway
	if err := c.addNodeGwStaticRoute(); err != nil {
		klog.Errorf("failed to add static route for node gw: %v", err)
		return err
	}

	patchPayloadTemplate := `[{
		"op": "%s",
		"path": "/metadata/annotations",
		"value": %s
	}]`
	op := "replace"
	if len(node.Annotations) == 0 {
		node.Annotations = map[string]string{}
		op = "add"
	}

	// Record the node's join-subnet address, MAC, CIDR and gateway in its annotations
	node.Annotations[util.IPAddressAnnotation] = ipStr
	node.Annotations[util.MacAddressAnnotation] = mac
	node.Annotations[util.CidrAnnotation] = subnet.Spec.CIDRBlock
	node.Annotations[util.GatewayAnnotation] = subnet.Spec.Gateway
	node.Annotations[util.LogicalSwitchAnnotation] = c.config.NodeSwitch
	node.Annotations[util.AllocatedAnnotation] = "true"
	node.Annotations[util.PortNameAnnotation] = fmt.Sprintf("node-%s", key)
	raw, _ := json.Marshal(node.Annotations)
	// Build a JSON patch and update the node annotations
	patchPayload := fmt.Sprintf(patchPayloadTemplate, op, raw)
	_, err = c.config.KubeClient.CoreV1().Nodes().Patch(context.Background(), key, types.JSONPatchType, []byte(patchPayload), metav1.PatchOptions{}, "")
	if err != nil {
		klog.Errorf("patch node %s failed: %v", key, err)
		return err
	}

	// Create the IPs CR for the node's ovn0
	if err := c.createOrUpdateCrdIPs("", ipStr, mac, c.config.NodeSwitch, "", node.Name, "", ""); err != nil {
		klog.Errorf("failed to create or update IPs node-%s: %v", key, err)
		return err
	}

	// For every distributed-gateway subnet in the default VPC, add a policy such as
	//   29000 ip4.src == $ovn.default.master_ip4 reroute 100.64.0.2
	// At first I did not understand $ovn.default.master_ip4 (a policy I configured by
	// hand did not take effect). From the code, ovn.default.master is a port group
	// name and $ovn.default.master_ip4 is its IPv4 address set. Whenever a pod is
	// created, its port is added to the port group of its subnet and the node it is
	// scheduled on; matching that port group decides which node's ovn0 to reroute to,
	// which is how the distributed gateway is implemented.
	for _, subnet := range subnets {
		// Skip underlay subnets without a logical gateway, subnets outside the default
		// VPC, the join subnet, and subnets whose gateway type is not distributed
		if (subnet.Spec.Vlan != "" && !subnet.Spec.LogicalGateway) || subnet.Spec.Vpc != c.config.ClusterRouter || subnet.Name == c.config.NodeSwitch || subnet.Spec.GatewayType != kubeovnv1.GWDistributedType {
			continue
		}
		if err = c.createPortGroupForDistributedSubnet(node, subnet); err != nil {
			klog.Errorf("failed to create port group for node %s and subnet %s: %v", node.Name, subnet.Name, err)
			return err
		}
		if err = c.addPolicyRouteForDistributedSubnet(subnet, node.Name, v4IP, v6IP); err != nil {
			klog.Errorf("failed to add policy router for node %s and subnet %s: %v", node.Name, subnet.Name, err)
			return err
		}
		// policy route for overlay distributed subnet should be reconciled when node ip changed
		c.addOrUpdateSubnetQueue.Add(subnet.Name)
	}

	// ovn acl doesn't support address_set name with '-', so replace '-' by '.'
	pgName := strings.ReplaceAll(node.Annotations[util.PortNameAnnotation], "-", ".")
	if err = c.OVNNbClient.CreatePortGroup(pgName, map[string]string{networkPolicyKey: "node" + "/" + key}); err != nil {
		klog.Errorf("create port group %s for node %s: %v", pgName, key, err)
		return err
	}

	// The loop above only covers distributed-gateway subnets; this call adds the
	// policy routes for centralized-gateway subnets on this node
	if err := c.addPolicyRouteForCentralizedSubnetOnNode(node.Name, ipStr); err != nil {
		klog.Errorf("failed to add policy route for node %s, %v", key, err)
		return err
	}

	if err := c.UpdateChassisTag(node); err != nil {
		return err
	}

	return c.retryDelDupChassis(util.ChasRetryTime, util.ChasRetryIntev+2, c.cleanDuplicatedChassis, node)
}
```
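handleAddNode chooses `add` or `replace` for its JSON patch depending on whether the node already has annotations: per RFC 6902, `replace` requires the target path to exist, while `add` also works when it does not. A standalone sketch of the same patch construction with `encoding/json` follows; the `jsonPatchOp` type and `annotationsPatch` function are hypothetical, and the annotation key in `main` is used only for illustration.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// jsonPatchOp is a single RFC 6902 operation carrying an annotations map.
type jsonPatchOp struct {
	Op    string            `json:"op"`
	Path  string            `json:"path"`
	Value map[string]string `json:"value"`
}

// annotationsPatch sets the whole annotations map: "add" when the node has no
// annotations yet, "replace" otherwise; updates override existing keys.
func annotationsPatch(existing, updates map[string]string) ([]byte, error) {
	op := "replace"
	if len(existing) == 0 {
		op = "add"
	}
	merged := map[string]string{}
	for k, v := range existing {
		merged[k] = v
	}
	for k, v := range updates {
		merged[k] = v
	}
	return json.Marshal([]jsonPatchOp{{Op: op, Path: "/metadata/annotations", Value: merged}})
}

func main() {
	p, err := annotationsPatch(nil, map[string]string{"ovn.kubernetes.io/allocated": "true"})
	if err != nil {
		panic(err)
	}
	fmt.Println(string(p))
}
```

Building the patch from typed structs avoids the hand-written JSON template, at the cost of a little more code.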
