Installing a Nacos Cluster on Kubernetes: A Practical Walkthrough

Overview

This article explains and walks through installing a Nacos cluster on Kubernetes. Versions used: Kubernetes 1.27.x and Nacos 2.0.3. The hosts run CentOS 7.x.

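Walkthrough

Online installation: follow the installation guide on the official Nacos website.

Offline installation: work from the online installation steps, collect the images they reference, download them, and push them to your private registry. Adjust the image addresses and the NFS settings; everything else can stay at its defaults.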
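The offline step above boils down to re-tagging each public image for the private registry. A minimal sketch, assuming a machine that can reach both Docker Hub and the registry; the helper name mirror_image is hypothetical, and the upstream image names in the example are assumptions based on the image paths used in the manifests later in this article.

```shell
#!/bin/sh
# Hypothetical helper: re-tag a public image for a private registry and push it.
# Usage: mirror_image <source_image> <registry_prefix>
mirror_image() {
    src=$1
    prefix=$2
    # The target keeps the original repository path and tag under the prefix.
    target="$prefix/$src"
    docker pull "$src" || return 1
    docker tag "$src" "$target" || return 1
    docker push "$target" || return 1
    echo "$target"
}

# Example, using the registry prefix that appears in this article's manifests:
#   mirror_image nacos/nacos-mysql:5.7 harbor.easzlab.io.local:8443/library
#   mirror_image nacos/nacos-peer-finder-plugin:1.1 harbor.easzlab.io.local:8443/library
```

On a fully air-gapped network, the pull would instead be replaced by loading a saved image archive before tagging and pushing.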

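Ready-made downloads: nfs-subdir-external-provisioner-4.0.2; the MySQL offline image package (version 5.7.26); the YAML configuration files for the Nacos cluster installation (nacos-2.0.3, k8s-1.27.x); and the nacos-peer-finder-plugin image required by the Nacos cluster installation.

nacos-server offline image package: https://pan.baidu.com/s/1uQ07yzIkXyQcu2wUPCHDqQ?pwd=4utl

Extraction code: 4utl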


nfs

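Installation

Installing NFS on CentOS 7.x

# Offline: on a machine with internet access, download nfs-utils and rpcbind together with their dependencies
repotrack -p nfs nfs-utils
repotrack -p nfs rpcbind
zip -r nfs.zip ./nfs

# Offline: on the target host, after copying and unpacking nfs.zip, install the downloaded RPMs
rpm -ivh --replacefiles --replacepkgs --force --nodeps *.rpm

# Online: install the nfs and rpc packages
yum install -y nfs-utils rpcbind

# Start the nfs and rpcbind services
systemctl start nfs
systemctl start rpcbind

# Enable them at boot
systemctl enable nfs
systemctl enable rpcbind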

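Usage

# Create the directory to export and open up its permissions
[root@hadoop02 ~]# mkdir -p /data/nfs
[root@hadoop02 data]# chmod 777 ./nfs

# Check the current exports file, then edit it
ls /etc/exports
cat /etc/exports
vi /etc/exports

# Line to add to /etc/exports
/data/nfs *(rw,sync,no_root_squash)

# Restart the services so the export takes effect
systemctl restart nfs
systemctl restart rpcbind

# Verify that the export is visible
showmount -e 10.xx.xx.143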
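The mysql-nfs.yaml manifest later in this article mounts /data/nfs/nacos/mysql directly as an NFS volume. Unlike the PVC-backed directories that the provisioner creates on demand, a directly mounted NFS path must already exist on the server, otherwise the mount fails. A minimal sketch for preparing it on the NFS server; the helper name prepare_nfs_dir is hypothetical, and the permissive mode simply mirrors the chmod 777 used above.

```shell
#!/bin/sh
# Hypothetical helper: create a subdirectory under an NFS export root.
# Usage: prepare_nfs_dir <export_root> <relative_subdir>
prepare_nfs_dir() {
    export_root=$1
    subdir=$2
    # Refuse to run if the export root itself is missing.
    [ -d "$export_root" ] || { echo "missing export root: $export_root" >&2; return 1; }
    mkdir -p "$export_root/$subdir" || return 1
    # Same permissive mode as the export root in this article; tighten for production.
    chmod 777 "$export_root/$subdir"
    echo "$export_root/$subdir"
}

# Example (run on the NFS server):
#   prepare_nfs_dir /data/nfs nacos/mysql
```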

Kubernetes persistence

The Nacos cluster installation uses two persistence approaches. MySQL mounts an NFS volume directly, while Nacos uses PVCs.

mysql-nfs.yaml

apiVersion: v1
kind: ReplicationController
metadata:
  namespace: default
  name: mysql
  labels:
    name: mysql
spec:
  replicas: 1
  selector:
    name: mysql
  template:
    metadata:
      labels:
        name: mysql
    spec:
      containers:
        - name: mysql
          image: harbor.easzlab.io.local:8443/library/nacos/nacos-mysql:5.7
          ports:
            - containerPort: 3306
          volumeMounts:
            - name: mysql-data
              mountPath: /var/lib/mysql
          env:
            - name: MYSQL_ROOT_PASSWORD
              value: "root"
            - name: MYSQL_DATABASE
              value: "nacos_devtest"
            - name: MYSQL_USER
              value: "nacos"
            - name: MYSQL_PASSWORD
              value: "nacos"
      volumes:
        - name: mysql-data
          nfs:
            server: 10.xx.xx.143
            path: /data/nfs/nacos/mysql
---
apiVersion: v1
kind: Service
metadata:
  name: mysql
  labels:
    name: mysql
spec:
  ports:
    - port: 3306
      targetPort: 3306
  selector:
    name: mysql

nacos-pvc-nfs.yaml

Part of the file is shown below.

# Please read the wiki article

# https://github.com/nacos-group/nacos-k8s/wiki/%E4%BD%BF%E7%94%A8peerfinder%E6%89%A9%E5%AE%B9%E6%8F%92%E4%BB%B6

---
apiVersion: v1
kind: Service
metadata:
  namespace: default
  name: nacos-headless
  labels:
    app: nacos
spec:
  publishNotReadyAddresses: true
  ports:
    - port: 8848
      name: server
      targetPort: 8848
    - port: 9848
      name: client-rpc
      targetPort: 9848
    - port: 9849
      name: raft-rpc
      targetPort: 9849
    ## Election port kept for compatibility with 1.4.x
    - port: 7848
      name: old-raft-rpc
      targetPort: 7848
  clusterIP: None
  selector:
    app: nacos
---

apiVersion: v1
kind: ConfigMap
metadata:
  namespace: default
  name: nacos-cm
data:
  mysql.host: "mysql"
  mysql.db.name: "nacos_devtest"
  mysql.port: "3306"
  mysql.user: "nacos"
  mysql.password: "nacos"
---

apiVersion: apps/v1
kind: StatefulSet
metadata:
  namespace: default
  name: nacos
spec:
  podManagementPolicy: Parallel
  serviceName: nacos-headless
  replicas: 2
  template:
    metadata:
      labels:
        app: nacos
      annotations:
        pod.alpha.kubernetes.io/initialized: "true"
    spec:
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            - labelSelector:
                matchExpressions:
                  - key: "app"
                    operator: In
                    values:
                      - nacos
              topologyKey: "kubernetes.io/hostname"
      serviceAccountName: nfs-client-provisioner
      initContainers:
        - name: peer-finder-plugin-install
          image: harbor.easzlab.io.local:8443/library/nacos/nacos-peer-finder-plugin:1.1
          imagePullPolicy: Always
          volumeMounts:
            - mountPath: /home/nacos/plugins/peer-finder
              name: data
              subPath: peer-finder
      containers:
        - name: nacos
          imagePullPolicy: Always
          image: harbor.easzlab.io.local:8443/library/nacos/nacos-server:latest
          resources:
            requests:
              memory: "2Gi"
              cpu: "500m"
          ports:
            - containerPort: 8848
              name: client-port
            - containerPort: 9848
              name: client-rpc
            - containerPort: 9849
              name: raft-rpc
            - containerPort: 7848
              name: old-raft-rpc
          env:
            - name: NACOS_REPLICAS
              value: "2"
            - name: SERVICE_NAME
              value: "nacos-headless"
            - name: DOMAIN_NAME
              value: "cluster.local"
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  apiVersion: v1
                  fieldPath: metadata.namespace
            - name: MYSQL_SERVICE_HOST
              valueFrom:
                configMapKeyRef:
                  name: nacos-cm
                  key: mysql.host
            - name: MYSQL_SERVICE_DB_NAME
              valueFrom:
                configMapKeyRef:
                  name: nacos-cm
                  key: mysql.db.name
            - name: MYSQL_SERVICE_PORT
              valueFrom:
                configMapKeyRef:
                  name: nacos-cm
                  key: mysql.port
            - name: MYSQL_SERVICE_USER
              valueFrom:
                configMapKeyRef:
                  name: nacos-cm
                  key: mysql.user
            - name: MYSQL_SERVICE_PASSWORD
              valueFrom:
                configMapKeyRef:
                  name: nacos-cm
                  key: mysql.password
            - name: SPRING_DATASOURCE_PLATFORM
              value: "mysql"
            - name: NACOS_SERVER_PORT
              value: "8848"
            - name: NACOS_APPLICATION_PORT
              value: "8848"
            - name: PREFER_HOST_MODE
              value: "hostname"
          volumeMounts:
            - name: data
              mountPath: /home/nacos/plugins/peer-finder
              subPath: peer-finder
            - name: data
              mountPath: /home/nacos/data
              subPath: data
            - name: data
              mountPath: /home/nacos/logs
              subPath: logs
  volumeClaimTemplates:
    - metadata:
        name: data
        annotations:
          volume.beta.kubernetes.io/storage-class: "managed-nfs-storage"
      spec:
        accessModes: [ "ReadWriteMany" ]
        resources:
          requests:
            storage: 2Gi
  selector:
    matchLabels:
      app: nacos
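Because the manifest above combines a headless Service with PREFER_HOST_MODE set to hostname, each Nacos pod is addressed by its stable StatefulSet DNS name of the form pod.service.namespace.svc.domain. The sketch below prints the names that correspond to the values in the manifest (2 replicas, nacos-headless, default, cluster.local). The helper name nacos_peer_addresses is hypothetical; it only illustrates the naming scheme and is not how the peer-finder plugin itself is implemented.

```shell
#!/bin/sh
# Hypothetical helper: list the expected DNS names of the Nacos StatefulSet pods.
# Usage: nacos_peer_addresses <replicas> [statefulset] [service] [namespace] [domain] [port]
nacos_peer_addresses() {
    replicas=$1
    sts=${2:-nacos}
    svc=${3:-nacos-headless}
    ns=${4:-default}
    domain=${5:-cluster.local}
    port=${6:-8848}
    i=0
    while [ "$i" -lt "$replicas" ]; do
        # StatefulSet pods are named <statefulset>-<ordinal>, starting at 0.
        echo "$sts-$i.$svc.$ns.svc.$domain:$port"
        i=$((i + 1))
    done
}

# Prints:
#   nacos-0.nacos-headless.default.svc.cluster.local:8848
#   nacos-1.nacos-headless.default.svc.cluster.local:8848
nacos_peer_addresses 2
```

If the replica count is changed, NACOS_REPLICAS and spec.replicas in the manifest need to be changed together.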

Installing Nacos

Create the RBAC roles, the NFS provisioner, and the storage class

[root@hadoop01 nacos-k8s]# kubectl create -f deploy/nfs/rbac.yaml
clusterrole.rbac.authorization.k8s.io/nfs-client-provisioner-runner created
clusterrolebinding.rbac.authorization.k8s.io/run-nfs-client-provisioner created
role.rbac.authorization.k8s.io/leader-locking-nfs-client-provisioner created
rolebinding.rbac.authorization.k8s.io/leader-locking-nfs-client-provisioner created

[root@hadoop01 nacos-k8s]# kubectl create -f deploy/nfs/deployment.yaml
serviceaccount/nfs-client-provisioner created
deployment.apps/nfs-client-provisioner created

[root@hadoop01 nacos-k8s]# kubectl create -f deploy/nfs/class.yaml
storageclass.storage.k8s.io/managed-nfs-storage created

[root@hadoop01 nacos-k8s]# kubectl get pod -l app=nfs-client-provisioner
NAME                                      READY   STATUS    RESTARTS   AGE
nfs-client-provisioner-7ddb7b97b6-n4vh2   1/1     Running   0          24s

Deploy the database

[root@hadoop01 nacos-k8s]# kubectl create -f deploy/mysql/mysql-nfs.yaml
replicationcontroller/mysql created
service/mysql created

[root@hadoop01 nacos-k8s]# kubectl get pod
NAME                                      READY   STATUS    RESTARTS   AGE
mysql-dl99b                               1/1     Running   0          15s
nfs-client-provisioner-7ddb7b97b6-n4vh2   1/1     Running   0          2m23s

# Either run the mysql client directly in the pod
kubectl exec -it mysql-dl99b -- mysql -u nacos -p

# or open a shell in the pod first and start the client from there
kubectl exec -it mysql-dl99b -- sh
# mysql -h localhost -u nacos -p
Enter password:

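Running the database initialization statements

This step is not needed here: the tables already exist, as the listing below shows.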

mysql> show tables;
+-------------------------+
| Tables_in_nacos_devtest |
+-------------------------+
| config_info             |
| config_info_aggr        |
| config_info_beta        |
| config_info_tag         |
| config_tags_relation    |
| group_capacity          |
| his_config_info         |
| permissions             |
| roles                   |
| tenant_capacity         |
| tenant_info             |
| users                   |
+-------------------------+
12 rows in set (0.00 sec)

mysql> select * from uses;
ERROR 1146 (42S02): Table 'nacos_devtest.uses' doesn't exist

mysql> select * from users;
+----------+--------------------------------------------------------------+---------+
| username | password                                                     | enabled |
+----------+--------------------------------------------------------------+---------+
| nacos    | $2a$10$EuWPZHzz32dJN7jexM34MOeYirDdFAZm2kuWj7VEOJhhZkDrxfvUu |       1 |
+----------+--------------------------------------------------------------+---------+
1 row in set (0.00 sec)

mysql>

Deploy Nacos

kubectl create -f deploy/nacos/nacos-pvc-nfs.yaml
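After the create command it is worth confirming that both replicas actually came up. A minimal sketch, assuming the StatefulSet name nacos and the label app=nacos from nacos-pvc-nfs.yaml; the helper name verify_nacos and the 5-minute timeout are illustrative choices. Note that the manifest's required pod anti-affinity on kubernetes.io/hostname means the two replicas need two schedulable nodes, otherwise one pod stays Pending.

```shell
#!/bin/sh
# Hypothetical helper: wait for the Nacos StatefulSet and show what it created.
# Usage: verify_nacos [namespace]
verify_nacos() {
    ns=${1:-default}
    # Block until all replicas are ready, or give up after 5 minutes.
    kubectl rollout status statefulset/nacos -n "$ns" --timeout=300s || return 1
    # One pod and one PVC (data-nacos-<ordinal>) are expected per replica.
    kubectl get pod -n "$ns" -l app=nacos -o wide
    kubectl get pvc -n "$ns"
}

# Example:
#   verify_nacos default
```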

ingress

ingress-nacos.yaml

If anything is unclear, see the companion article on installing ingress-nginx offline on k8s.

#ingress
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-nacos
  namespace: default
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /
    # kubernetes.io/ingress.class: nginx
spec:
  ingressClassName: nginx
  rules:
    - host: "nacos.fun.com"
      http:
        paths:
          - pathType: Prefix
            path: /
            backend:
              service:
                name: nacos-headless
                port:
                  number: 8848

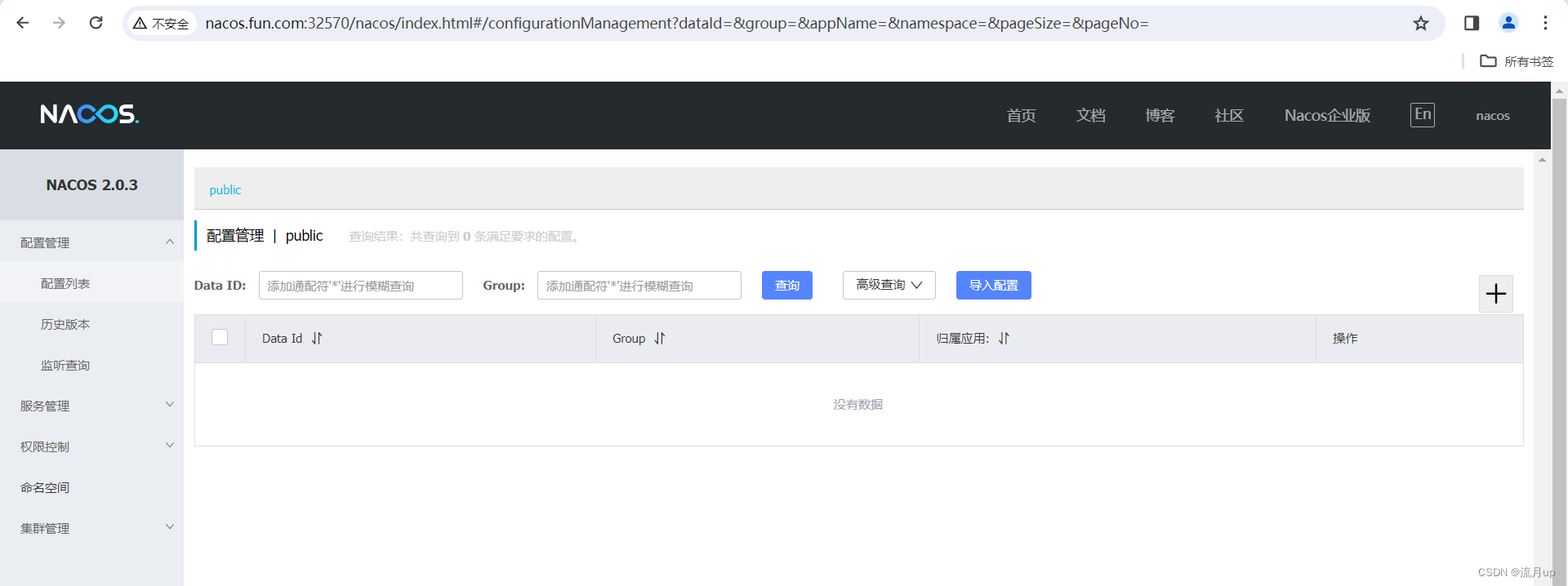

Result

Access URL: http://nacos.fun.com:32570/nacos/index.html

Accessing http://nacos.fun.com directly, without the port, does not work.
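Since the console only answers on port 32570, the ingress controller is presumably exposed through a NodePort Service on that port; this is an assumption, as the article does not show the controller's Service. Under that assumption, the route can be checked from any machine without touching DNS by sending the Ingress host name explicitly. The helper name check_nacos_console is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: request the Nacos console through the ingress controller's
# NodePort, sending the Ingress host name explicitly instead of relying on DNS.
# Usage: check_nacos_console <node_ip> [node_port] [host]
check_nacos_console() {
    node_ip=$1
    node_port=${2:-32570}
    host=${3:-nacos.fun.com}
    # Print only the HTTP status code of the response.
    curl -s -o /dev/null -w '%{http_code}\n' \
        -H "Host: $host" \
        "http://$node_ip:$node_port/nacos/index.html"
}

# Example (replace the address with one of your node IPs):
#   check_nacos_console 10.xx.xx.143
```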

Fixing the problem

The goal is to fix the access problem seen with the port-qualified URL http://nacos.fun.com:32570/nacos/index.html

#ingress
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-nacos
  namespace: default
  annotations:
    # nginx.ingress.kubernetes.io/rewrite-target: /
    # kubernetes.io/ingress.class: nginx
spec:
  ingressClassName: nginx
  rules:
    - host: "nacos.fun.com"
      http:
        paths:
          - pathType: Prefix
            path: /
            backend:
              service:
                name: nacos-headless
                port:
                  number: 8848

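The only difference from the first manifest is that the nginx.ingress.kubernetes.io/rewrite-target annotation is commented out (the part underlined in red in the original screenshot). With that change, the port access problem was resolved. A plausible explanation is that, combined with path / and pathType Prefix, the annotation rewrites every request path to /, so a request for /nacos/index.html never reaches the Nacos console.

Conclusion

That concludes this walkthrough of installing a Nacos cluster on Kubernetes.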
