Quick k8s deployment, with scripts

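The YAML manifests are pasted into this article so that a failed download does not block you; feel free to skip over them, since the article itself is short. The content is meant for learning and for getting a basic feel for deploying and using a k8s cluster. Some of the reference documents contain pitfalls; with the notes collected here you can fully deploy either of two stacks: [k8s 1.18.8 cluster][metrics server][kuboard] or [k8s 1.26.1 cluster][metrics server][kuboard v3].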


(1) Asset information

| Item | Value |
|---|---|
| CPU | >=2 |

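(2) Script contents

Note: in the init command inside the script, change --apiserver-advertise-address=172.16.0.1 to the real IP address of your own master.

Note: the script also works for version 1.26, but the containerd configuration must be changed before running the init (see the k8s 1.26.1 section later in this article).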

#!/bin/bash

#yum update -y

yum -y install ipvsadm && ipvsadm -C && iptables -F && iptables -t nat -F && iptables -t mangle -F && iptables -X

# Disable the firewall

systemctl stop firewalld && systemctl disable firewalld && iptables -F

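# Disable SELinux (the config change applies after a reboot; setenforce 0 switches to permissive right away)

sed -i 's/^SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config && setenforce 0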

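# Disable swap now, and comment out swap entries in /etc/fstab so that it stays off after a reboot

swapoff -a

sed -ri 's/^([^#].*\sswap\s.*)$/#\1/' /etc/fstab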

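# Load kernel modules

modprobe overlay

modprobe br_netfilter

modprobe ip_vs

modprobe ip_vs_rr

modprobe ip_vs_wrr

modprobe ip_vs_sh

# On kernels 4.19 and later nf_conntrack_ipv4 was merged into nf_conntrack, so fall back to that name

modprobe nf_conntrack_ipv4 || modprobe nf_conntrack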

# Create /etc/modules-load.d/containerd.conf so that the modules are loaded at boot

cat >/etc/modules-load.d/containerd.conf<<-EOF

overlay

br_netfilter

EOF

# Set kernel parameters

cat >/etc/sysctl.d/k8s.conf<<-EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

EOF

sysctl -p /etc/sysctl.d/k8s.conf

# Docker daemon configuration file

mkdir -p /etc/docker/

cat >/etc/docker/daemon.json<<-EOF

{

"registry-mirrors": ["https://gqs7xcfd.mirror.aliyuncs.com","https://hub-mirror.c.163.com"],

"exec-opts": ["native.cgroupdriver=systemd"],

"log-driver": "json-file",

"log-opts": {"max-size": "100m"},

"storage-driver": "overlay2"

}

EOF

# Download the Docker repo file

wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo

yum makecache -y

yum -y install docker-ce-19.03.9-3.el7 docker-ce-cli-19.03.9-3.el7

#yum -y install docker-ce-20.10.18-3.el7 docker-ce-cli-20.10.18-3.el7

# Start Docker

systemctl daemon-reload && systemctl enable docker && systemctl start docker

# Install kubeadm, kubelet and kubectl

cat >/etc/yum.repos.d/kubernetes.repo<<-EOF

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

yum makecache -y

yum install -y kubelet-1.18.8 kubeadm-1.18.8 kubectl-1.18.8

#yum install -y kubelet-1.26.1 kubeadm-1.26.1 kubectl-1.26.1

# Enable kubelet at boot

systemctl enable kubelet

echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> /etc/profile

source /etc/profile

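##################

# !!! On worker nodes, comment out everything below this line !!!

##################

# To use a domain name as the control-plane-endpoint, make sure it already resolves to the master's address before running init. A domain name makes it easier to add more masters later; a plain IP address also works.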

kubeadm init --kubernetes-version 1.18.8 --apiserver-advertise-address=172.16.0.1 --control-plane-endpoint=k8s.cluster.com --apiserver-cert-extra-sans=k8s.cluster.com,172.16.0.1 --service-cidr=10.96.0.0/16 --pod-network-cidr=10.245.0.0/16 --image-repository registry.aliyuncs.com/google_containers && mkdir -p $HOME/.kube && cp -i /etc/kubernetes/admin.conf $HOME/.kube/config && chown $(id -u):$(id -g) $HOME/.kube/config

#kubeadm init --kubernetes-version 1.18.8 --apiserver-advertise-address=172.16.0.1 --service-cidr=10.96.0.0/16 --pod-network-cidr=10.245.0.0/16 --image-repository registry.aliyuncs.com/google_containers && mkdir -p $HOME/.kube && cp -i /etc/kubernetes/admin.conf $HOME/.kube/config && chown $(id -u):$(id -g) $HOME/.kube/config

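# Master node init. The version must match the kubelet, kubeadm and kubectl installed above.

# --kubernetes-version 1.18.8: the Kubernetes version to install

# --apiserver-advertise-address: the IP advertised to the other components, normally the master node's own IP

# --service-cidr: the Service network; it must not overlap with the node network

# --pod-network-cidr: the Pod network; it must not overlap with the node network or the Service network

# --image-repository registry.aliyuncs.com/google_containers: the image source. The default registry k8s.gcr.io is not reachable from mainland China, so the Aliyun mirror is used here.

# --control-plane-endpoint: a virtual IP (keepalived) or a name bound in hosts, so that more masters can be added. It should be set to the address or DNS name of the load balancer, with an optional port.

# For very new k8s versions the Aliyun mirror may not have the images yet, and you will need to get them from somewhere else.

# Fetch the network plugin, then change the Network setting in kube-flannel.yml so that it matches pod-network-cidr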

wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml
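The script comments require that the Service network, the Pod network and the node network do not overlap. Below is a minimal sketch of checking that before running kubeadm init. The helper names (ip_to_int, cidr_range, cidr_overlap, check_pair) and the node network 172.16.0.0/24 are illustrative assumptions and are not part of the original script.

```bash
#!/bin/bash
# Convert a dotted IPv4 address to a 32-bit integer.
ip_to_int() {
  local old_ifs="$IFS"
  IFS=.
  set -- $1          # split the address on dots into $1..$4
  IFS="$old_ifs"
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Print "first last" (as integers) for a CIDR such as 10.96.0.0/16.
cidr_range() {
  local ip="${1%/*}" bits="${1#*/}" base mask
  base=$(ip_to_int "$ip")
  mask=$(( (4294967295 << (32 - bits)) & 4294967295 ))
  echo "$(( base & mask )) $(( (base & mask) | (~mask & 4294967295) ))"
}

# Return 0 (true) when the two CIDRs share at least one address.
cidr_overlap() {
  set -- $(cidr_range "$1") $(cidr_range "$2")   # $1=s1 $2=e1 $3=s2 $4=e2
  [ "$1" -le "$4" ] && [ "$3" -le "$2" ]
}

# Report whether a pair of networks is safe to use together.
check_pair() {
  if cidr_overlap "$1" "$2"; then
    echo "overlap: $1 and $2"
  else
    echo "ok: $1 and $2"
  fi
}

NODE_NET="172.16.0.0/24"     # assumption: replace with the real node network
SERVICE_CIDR="10.96.0.0/16"  # value passed to --service-cidr
POD_CIDR="10.245.0.0/16"     # value passed to --pod-network-cidr

check_pair "$NODE_NET" "$SERVICE_CIDR"
check_pair "$NODE_NET" "$POD_CIDR"
check_pair "$SERVICE_CIDR" "$POD_CIDR"
```

The check works on plain integer ranges, so it needs no extra tools on the node; it only handles IPv4.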

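(3) Network plugin: flannel

1. Using the flannel network plugin

1. Change the Network setting in kube-flannel.yml so that it matches the pod-network-cidr used at init time, then apply the file with kubectl apply -f kube-flannel.yml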

vim kube-flannel.yml
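The same edit can be scripted instead of done by hand in vim. A minimal sketch, assuming the manifest contains a line of the form "Network": "10.244.0.0/16" inside net-conf.json (the usual flannel default); the function name set_flannel_network is hypothetical.

```bash
#!/bin/bash
# Rewrite the "Network" value in a flannel manifest to the given CIDR.
set_flannel_network() {
  local file="$1" cidr="$2"
  sed -i "s#\"Network\": *\"[0-9./]*\"#\"Network\": \"${cidr}\"#" "$file"
}

POD_CIDR="10.245.0.0/16"   # must equal --pod-network-cidr from kubeadm init

if [ -f kube-flannel.yml ]; then
  set_flannel_network kube-flannel.yml "$POD_CIDR"
  grep '"Network"' kube-flannel.yml   # show the result for a quick visual check
fi
```

After the change, the manifest still has to be applied with kubectl apply -f kube-flannel.yml.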

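2. Switch the kube-proxy mode to ipvs (this fixes Services that cannot be pinged)

1. Edit the ConfigMap: change mode: "" to mode: "ipvs", then save and quit with :wq!

kubectl edit -n kube-system cm kube-proxy

2. List the kube-proxy pods in the kube-system namespace and delete them, so that they are recreated with the new mode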

kubectl get pod -n kube-system |grep kube-proxy |awk '{system("kubectl delete pod "$1" -n kube-system")}'

(4) Basic structure of YAML manifests

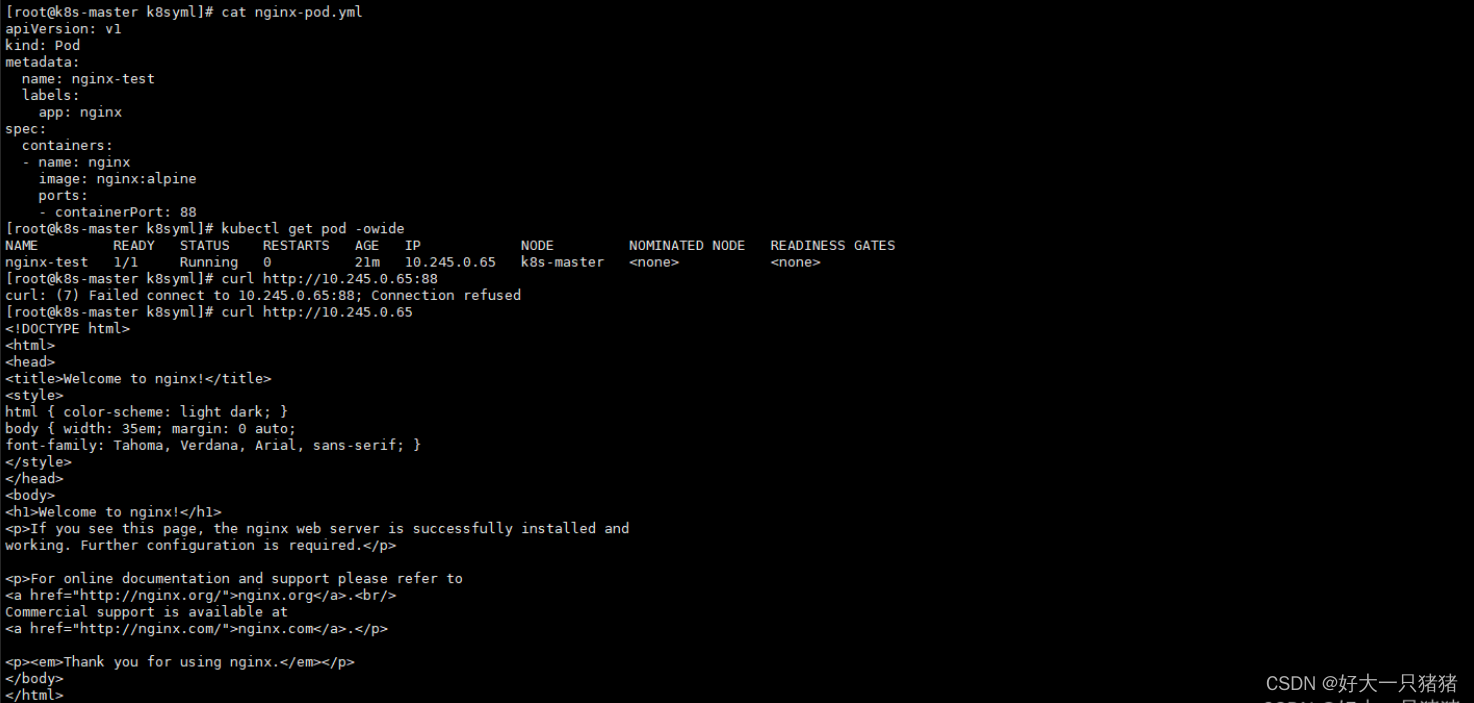

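1. Writing a Pod file

apiVersion: v1            # Pod belongs to the core v1 API
kind: Pod                 # resource type
metadata:                 # metadata
  name: nginx             # Pod name
  labels:                 # labels
    app: nginx            # label key and value
spec:                     # specification
  containers:             # list of containers
  - name: nginx           # container name
    image: nginx:alpine   # container image
    ports:                # ports
    - containerPort: 80   # port the container listens on; should match the application's listen port

One point of confusion: with containerPort: 88 in the Pod file, port 80 was still reachable. This is expected. containerPort is mainly informational, and leaving a port out (or listing a different one) does not stop the port the application really listens on from being reachable on the Pod IP.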

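2. Writing a Deployment file

ReplicaSet (rs) and ReplicationController (rc) are also worth a look. This example shows one Pod with multiple containers.

apiVersion: apps/v1        # Deployment belongs to the apps/v1 API group
kind: Deployment           # resource type
metadata:                  # metadata
  name: nginx-deployment   # name
  namespace: taoxu         # namespace; defaults to default when omitted
spec:                      # Deployment spec
  selector:                # label selector (read it backwards: it matches the labels defined in the template below)
    matchLabels:           # labels to match
      app: nginx           # label defined in the template
  replicas: 5              # number of pods to create
  template:                # pod template
    metadata:              # pod metadata
      labels:              # labels
        app: nginx         # label value; view it with --show-labels
    spec:                  # pod spec
      containers:          # both containers go into this one list; a second containers key would be invalid YAML
      - image: nginx:alpine             # image
        imagePullPolicy: IfNotPresent   # pull policy: use the local image when it exists
        name: nginx                     # container name; select it with -c when entering the container
        ports:                          # ports
        - containerPort: 80             # port the container listens on; containers share the Pod's network, so it is reachable on the Pod IP
      - image: bitnami/php-fpm          # image
        imagePullPolicy: IfNotPresent
        name: php-fpm                   # container name; select it with -c when entering the container
        ports:                          # ports
        - containerPort: 9000           # port the container listens on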

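3. Writing a Service file

NodePort provides external access. kube-proxy writes every Service that is created into the IPVS rules; inspect them with ipvsadm -Ln.

apiVersion: v1            # Service belongs to the core v1 API
kind: Service             # resource type
metadata:                 # metadata
  name: nginx-service     # name
  namespace: taoxu        # namespace; a Service only selects pods in its own namespace, so this matches the Deployment above
spec:                     # spec
  selector:               # label selector
    app: nginx            # traffic is routed to pods carrying the label app=nginx
  ports:                  # ports
  - name: port1           # port name
    protocol: TCP         # TCP
    port: 80              # Service port
    targetPort: 80        # container application port
    nodePort: 30080       # port opened on the nodes
  - name: port2           # port name
    protocol: TCP         # TCP
    port: 9000            # Service port
    targetPort: 9000      # container application port
    nodePort: 30900       # port opened on the nodes; must lie within the default NodePort range 30000-32767
  type: NodePort          # Service type; when omitted the default is ClusterIP, reachable only inside the cluster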
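A nodePort value is only accepted when it lies inside the API server's service-node-port-range, which defaults to 30000-32767. A minimal sketch of checking a value before writing it into a manifest; the function name valid_nodeport is hypothetical, and the range arguments are assumptions to adjust if your cluster was started with a different range.

```bash
#!/bin/bash
# Return 0 when the port is numeric and inside [min, max] (defaults: 30000-32767).
valid_nodeport() {
  local port="$1" min="${2:-30000}" max="${3:-32767}"
  case "$port" in
    ''|*[!0-9]*) return 1 ;;   # reject empty or non-numeric input
  esac
  [ "$port" -ge "$min" ] && [ "$port" -le "$max" ]
}

for p in 30080 30900 39000; do
  if valid_nodeport "$p"; then
    echo "$p: inside the default NodePort range"
  else
    echo "$p: outside the default NodePort range"
  fi
done
```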

(5) Deploying ingress-nginx

(6) How microservices reach each other inside k8s

External access: NodePort, ClusterIP + Ingress, LoadBalancer

Internal access: http://service.namespace:port

Within the same namespace: http://service:port
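The short forms above are resolved by cluster DNS; the fully qualified form is service.namespace.svc.cluster-domain. A minimal sketch that builds such a URL, assuming the default cluster domain cluster.local; the function name svc_url is hypothetical.

```bash
#!/bin/bash
# Build the fully qualified in-cluster URL of a Service.
# Arguments: service name, namespace (default: default), port (default: 80),
# cluster domain (default: cluster.local, an assumption that holds for default kubeadm installs).
svc_url() {
  local svc="$1" ns="${2:-default}" port="${3:-80}" domain="${4:-cluster.local}"
  echo "http://${svc}.${ns}.svc.${domain}:${port}"
}

svc_url nginx-service taoxu 80
```

From a pod in another namespace, the output of this function and the shorter http://nginx-service.taoxu:80 point at the same Service.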

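(7) Common commands

1. Joining nodes

Note: the init script already uses all of the parameters below; this part is only meant to explain what they do.

1. Declare the control-plane address

Note: if --control-plane-endpoint was given at init time, it does not need to be set again here.

kubectl -n kube-system edit cm kubeadm-config

controlPlaneEndpoint: 172.16.0.1:6443

2. Upload the certificates and generate the key (--certificate-key)

Note: if the --certificate-key flag is not passed to kubeadm init or kubeadm init phase upload-certs, a new key is generated automatically.

kubeadm init phase upload-certs --upload-certs --certificate-key=SOME_VALUE --config=SOME_YAML_FILE

3. Joining with --control-plane (a joining master needs this parameter); the cluster must have been initialized with a control-plane endpoint

kubeadm init --control-plane-endpoint=ip/domain --apiserver-cert-extra-sans=ip/domain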
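The join itself is done with kubeadm join. On the master, kubeadm token create --print-join-command prints a ready-made command for worker nodes; a master additionally needs --control-plane and the certificate key from the upload-certs step. A minimal sketch that assembles the command line from its parts; the function name build_join_cmd and every value in the usage lines are placeholders, not real credentials.

```bash
#!/bin/bash
# Assemble a kubeadm join command.
# Arguments: endpoint (host:port), token, CA cert hash, optional certificate key.
# When a certificate key is given, the node joins as an additional control-plane node.
build_join_cmd() {
  local endpoint="$1" token="$2" ca_hash="$3" cert_key="${4:-}"
  local cmd="kubeadm join ${endpoint} --token ${token} --discovery-token-ca-cert-hash ${ca_hash}"
  if [ -n "$cert_key" ]; then
    cmd="${cmd} --control-plane --certificate-key ${cert_key}"
  fi
  echo "$cmd"
}

# Worker node (placeholder values):
build_join_cmd k8s.cluster.com:6443 TOKEN sha256:HASH
# Additional master (placeholder values):
build_join_cmd k8s.cluster.com:6443 TOKEN sha256:HASH CERT_KEY
```

The function only prints the command so it can be reviewed before it is run on the joining node.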

2. Removing a node

1. Evict the pods on the node

Note: this deletes local (emptyDir) data; migrate any locally persisted data by hand first. On kubectl 1.20 and later, --delete-local-data is deprecated in favor of --delete-emptydir-data.

kubectl drain k8s-master2 --force --ignore-daemonsets --delete-local-data

2. Delete the node

kubectl delete node k8s-master2

3. Re-initializing with reset

Note: kubeadm reset -f is equivalent to steps 1 and 2 below.

1. Delete local state

rm -rf /root/.kube

rm -rf /etc/cni/net.d

rm -rf /var/lib/etcd

2. Run reset

kubeadm reset

3. Initialize again

yum install -y ipvsadm && ipvsadm -C && iptables -F && iptables -t nat -F && iptables -t mangle -F && iptables -X

kubeadm init --kubernetes-version 1.18.8 --apiserver-advertise-address=172.16.0.1 --control-plane-endpoint=k8s.cluster.com --apiserver-cert-extra-sans=k8s.cluster.com,172.16.0.1 --service-cidr=10.96.0.0/16 --pod-network-cidr=10.245.0.0/16 --image-repository registry.aliyuncs.com/google_containers && mkdir -p $HOME/.kube && cp -i /etc/kubernetes/admin.conf $HOME/.kube/config && chown $(id -u):$(id -g) $HOME/.kube/config

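4. Controlling node scheduling with taints

NoSchedule: new pods are never scheduled onto the node

PreferNoSchedule: the scheduler tries to avoid the node

NoExecute: no new pods are scheduled, and pods already on the node are evicted

1. View taints

kubectl describe node master1 |grep -i taint

2. Add a taint

Note: the format is key=value:effect. With an empty value the taint is written with =: and the colon must not be dropped. On 1.26 the control-plane taint key is node-role.kubernetes.io/control-plane rather than node-role.kubernetes.io/master.

kubectl taint nodes master1 node-role.kubernetes.io/master=:NoSchedule

3. Remove a taint

kubectl taint nodes master1 node-role.kubernetes.io/master-

5. Controlling node scheduling, method 2

cordon, drain, uncordon: commands added in the 1.2 release; used together they support node maintenance. cordon marks a node unschedulable, uncordon makes it schedulable again.

1. Mark the node unschedulable

kubectl cordon xxx-node-01

2. Evict the pods on the node

Gracefully move the pods running on xxx-node-01 to other nodes

kubectl drain xxx-node-01 --force --ignore-daemonsets

3. Make the node schedulable again

kubectl uncordon xxx-node-01

6. Recommended k8s command reference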

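(8) Deploying Metrics Server

1. What the Metrics API does

- Its main job is to supply resource usage metrics to the k8s autoscaler components.

- The Metrics API provides information about the resource usage of nodes and Pods, including CPU and memory metrics.

- Through the Metrics API you can see the CPU and memory usage of every node and Pod in the cluster.

- Once the Metrics API is deployed in the cluster, clients of the Kubernetes API can query this information, and access can be controlled with the usual Kubernetes access control mechanisms.

- Make sure metrics-server is installed before installing Kuboard (the web management UI).

2. Deploying Metrics Server from a YAML file

1. Download the YAML manifest

- GitHub is sometimes unreachable, so the YAML content is pasted further down.

wget https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml

- The image in the official manifest defaults to k8s.gcr.io/metrics-server/metrics-server. Google's registry is not reachable from mainland China, so find the matching version on Docker Hub and substitute it.

- The default image tag in the manifest is v0.6.1, while the replacement image uses the tag 0.6.1 (no v prefix).

- By default Metrics Server verifies the kubelet's serving certificate when it scrapes the kubelets. Passing --kubelet-insecure-tls to Metrics Server disables that verification.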

2. Edit components.yaml

- k8s.gcr.io/metrics-server/metrics-server:v0.6.1 ==> bitnami/metrics-server:0.6.1

- Add - --kubelet-insecure-tls to args

vim components.yaml

- --kubelet-insecure-tls

image: bitnami/metrics-server:0.6.1

3. components.yaml after the changes

apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    k8s-app: metrics-server
    rbac.authorization.k8s.io/aggregate-to-admin: "true"
    rbac.authorization.k8s.io/aggregate-to-edit: "true"
    rbac.authorization.k8s.io/aggregate-to-view: "true"
  name: system:aggregated-metrics-reader
rules:
- apiGroups:
  - metrics.k8s.io
  resources:
  - pods
  - nodes
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    k8s-app: metrics-server
  name: system:metrics-server
rules:
- apiGroups:
  - ""
  resources:
  - nodes/metrics
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - pods
  - nodes
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server-auth-reader
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: extension-apiserver-authentication-reader
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server:system:auth-delegator
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:auth-delegator
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: system:metrics-server
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:metrics-server
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: v1
kind: Service
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
spec:
  ports:
  - name: https
    port: 443
    protocol: TCP
    targetPort: https
  selector:
    k8s-app: metrics-server
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
spec:
  selector:
    matchLabels:
      k8s-app: metrics-server
  strategy:
    rollingUpdate:
      maxUnavailable: 0
  template:
    metadata:
      labels:
        k8s-app: metrics-server
    spec:
      containers:
      - args:
        - --cert-dir=/tmp
        - --secure-port=4443
        - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
        - --kubelet-use-node-status-port
        - --metric-resolution=15s
        - --kubelet-insecure-tls
        #image: k8s.gcr.io/metrics-server/metrics-server:v0.6.2
        image: bitnami/metrics-server:0.6.1
        imagePullPolicy: IfNotPresent
        livenessProbe:
          failureThreshold: 3
          httpGet:
            path: /livez
            port: https
            scheme: HTTPS
          periodSeconds: 10
        name: metrics-server
        ports:
        - containerPort: 4443
          name: https
          protocol: TCP
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /readyz
            port: https
            scheme: HTTPS
          initialDelaySeconds: 20
          periodSeconds: 10
        resources:
          requests:
            cpu: 100m
            memory: 200Mi
        securityContext:
          allowPrivilegeEscalation: false
          readOnlyRootFilesystem: true
          runAsNonRoot: true
          runAsUser: 1000
        volumeMounts:
        - mountPath: /tmp
          name: tmp-dir
      nodeSelector:
        kubernetes.io/os: linux
      priorityClassName: system-cluster-critical
      serviceAccountName: metrics-server
      volumes:
      - emptyDir: {}
        name: tmp-dir
---
apiVersion: apiregistration.k8s.io/v1
kind: APIService
metadata:
  labels:
    k8s-app: metrics-server
  name: v1beta1.metrics.k8s.io
spec:
  group: metrics.k8s.io
  groupPriorityMinimum: 100
  insecureSkipTLSVerify: true
  service:
    name: metrics-server
    namespace: kube-system
  version: v1beta1
  versionPriority: 100

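4. Deploy metrics-server

kubectl apply -f components.yaml

5. Reference documentation for deploying metrics-server

6. Pods stuck in the Pending state

Explanation: after apply, the pod may stay Pending. Use describe to see the details; the usual cause is a taint on the node. Remove the taint and the pod gets scheduled.

Reference document on the causes of and fixes for the Pending state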

(9) Deploying the Kuboard web UI

1. For version 1.18.8

1. Download the YAML manifest

wget https://kuboard.cn/install-script/kuboard.yaml

2. Pull the image eipwork/kuboard:latest on every node

docker pull eipwork/kuboard:latest

3. Edit kuboard.yaml and set the image pull policy to IfNotPresent

vim kuboard.yaml

4. Contents of kuboard.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: kuboard
  namespace: kube-system
  annotations:
    k8s.kuboard.cn/displayName: kuboard
    k8s.kuboard.cn/ingress: "true"
    k8s.kuboard.cn/service: NodePort
    k8s.kuboard.cn/workload: kuboard
  labels:
    k8s.kuboard.cn/layer: monitor
    k8s.kuboard.cn/name: kuboard
spec:
  replicas: 1
  selector:
    matchLabels:
      k8s.kuboard.cn/layer: monitor
      k8s.kuboard.cn/name: kuboard
  template:
    metadata:
      labels:
        k8s.kuboard.cn/layer: monitor
        k8s.kuboard.cn/name: kuboard
    spec:
      containers:
      - name: kuboard
        image: eipwork/kuboard:latest
        imagePullPolicy: IfNotPresent
      tolerations:
      - key: node-role.kubernetes.io/master
        effect: NoSchedule
        operator: Exists
---
apiVersion: v1
kind: Service
metadata:
  name: kuboard
  namespace: kube-system
spec:
  type: NodePort
  ports:
  - name: http
    port: 80
    targetPort: 80
    nodePort: 32567
  selector:
    k8s.kuboard.cn/layer: monitor
    k8s.kuboard.cn/name: kuboard
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: kuboard-user
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kuboard-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: kuboard-user
  namespace: kube-system
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: kuboard-viewer
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kuboard-viewer
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: view
subjects:
- kind: ServiceAccount
  name: kuboard-viewer
  namespace: kube-system
# ---
# apiVersion: extensions/v1beta1
# kind: Ingress
# metadata:
#   name: kuboard
#   namespace: kube-system
#   annotations:
#     k8s.kuboard.cn/displayName: kuboard
#     k8s.kuboard.cn/workload: kuboard
#     nginx.org/websocket-services: "kuboard"
#     nginx.com/sticky-cookie-services: "serviceName=kuboard srv_id expires=1h path=/"
# spec:
#   rules:
#   - host: kuboard.yourdomain.com
#     http:
#       paths:
#       - path: /
#         backend:
#           serviceName: kuboard
#           servicePort: http

5. Deploy Kuboard

kubectl apply -f kuboard.yaml

6. Check the pod status

kubectl get pods -n kube-system

7. Get the login token

echo $(kubectl -n kube-system get secret $(kubectl -n kube-system get secret | grep kuboard-user | awk '{print $1}') -o go-template='{{.data.token}}' | base64 -d)

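8. Log in at http://ip:32567

Log in with the token obtained from the command above

9. Kuboard reference documentation for version 1.18.8

2. For version 1.26.1

1. Deploy Kuboard v3 from the YAML manifest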

kubectl apply -f https://addons.kuboard.cn/kuboard/kuboard-v3.yaml

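The command below can be used instead; the only difference is that it distributes the images Kuboard needs from Huawei Cloud's image registry rather than from Docker Hub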

kubectl apply -f https://addons.kuboard.cn/kuboard/kuboard-v3-swr.yaml

2. Contents of kuboard-v3.yaml

---
apiVersion: v1
kind: Namespace
metadata:
  name: kuboard
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: kuboard-v3-config
  namespace: kuboard
data:
  # For an explanation of the parameters below, see https://kuboard.cn/install/v3/install-built-in.html
  # [common]
  KUBOARD_SERVER_NODE_PORT: '30080'
  KUBOARD_AGENT_SERVER_UDP_PORT: '30081'
  KUBOARD_AGENT_SERVER_TCP_PORT: '30081'
  KUBOARD_SERVER_LOGRUS_LEVEL: info  # error / debug / trace
  # KUBOARD_AGENT_KEY is the key the Agent uses to talk to Kuboard. Change it to any 32-character string of letters and digits. After changing the key, delete the Kuboard Agent and import it again.
  KUBOARD_AGENT_KEY: 32b7d6572c6255211b4eec9009e4a816
  KUBOARD_AGENT_IMAG: eipwork/kuboard-agent
  KUBOARD_QUESTDB_IMAGE: questdb/questdb:6.0.5
  KUBOARD_DISABLE_AUDIT: 'false'  # To disable Kuboard auditing, set this to 'true'; the quotes are required.

  # For an explanation of the parameters below, see https://kuboard.cn/install/v3/install-gitlab.html
  # [gitlab login]
  # KUBOARD_LOGIN_TYPE: "gitlab"
  # KUBOARD_ROOT_USER: "your-user-name-in-gitlab"
  # GITLAB_BASE_URL: "http://gitlab.mycompany.com"
  # GITLAB_APPLICATION_ID: "7c10882aa46810a0402d17c66103894ac5e43d6130b81c17f7f2d8ae182040b5"
  # GITLAB_CLIENT_SECRET: "77c149bd3a4b6870bffa1a1afaf37cba28a1817f4cf518699065f5a8fe958889"

  # For an explanation of the parameters below, see https://kuboard.cn/install/v3/install-github.html
  # [github login]
  # KUBOARD_LOGIN_TYPE: "github"
  # KUBOARD_ROOT_USER: "your-user-name-in-github"
  # GITHUB_CLIENT_ID: "17577d45e4de7dad88e0"
  # GITHUB_CLIENT_SECRET: "ff738553a8c7e9ad39569c8d02c1d85ec19115a7"

  # For an explanation of the parameters below, see https://kuboard.cn/install/v3/install-ldap.html
  # [ldap login]
  # KUBOARD_LOGIN_TYPE: "ldap"
  # KUBOARD_ROOT_USER: "your-user-name-in-ldap"
  # LDAP_HOST: "ldap-ip-address:389"
  # LDAP_BIND_DN: "cn=admin,dc=example,dc=org"
  # LDAP_BIND_PASSWORD: "admin"
  # LDAP_BASE_DN: "dc=example,dc=org"
  # LDAP_FILTER: "(objectClass=posixAccount)"
  # LDAP_ID_ATTRIBUTE: "uid"
  # LDAP_USER_NAME_ATTRIBUTE: "uid"
  # LDAP_EMAIL_ATTRIBUTE: "mail"
  # LDAP_DISPLAY_NAME_ATTRIBUTE: "cn"
  # LDAP_GROUP_SEARCH_BASE_DN: "dc=example,dc=org"
  # LDAP_GROUP_SEARCH_FILTER: "(objectClass=posixGroup)"
  # LDAP_USER_MACHER_USER_ATTRIBUTE: "gidNumber"
  # LDAP_USER_MACHER_GROUP_ATTRIBUTE: "gidNumber"
  # LDAP_GROUP_NAME_ATTRIBUTE: "cn"
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: kuboard-boostrap
  namespace: kuboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kuboard-boostrap-crb
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: kuboard-boostrap
  namespace: kuboard
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  labels:
    k8s.kuboard.cn/name: kuboard-etcd
  name: kuboard-etcd
  namespace: kuboard
spec:
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s.kuboard.cn/name: kuboard-etcd
  template:
    metadata:
      labels:
        k8s.kuboard.cn/name: kuboard-etcd
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: node-role.kubernetes.io/master
                operator: Exists
            - matchExpressions:
              - key: node-role.kubernetes.io/control-plane
                operator: Exists
            - matchExpressions:
              - key: k8s.kuboard.cn/role
                operator: In
                values:
                - etcd
      containers:
      - env:
        - name: HOSTNAME
          valueFrom:
            fieldRef:
              apiVersion: v1
              fieldPath: spec.nodeName
        - name: HOSTIP
          valueFrom:
            fieldRef:
              apiVersion: v1
              fieldPath: status.hostIP
        image: 'eipwork/etcd-host:3.4.16-2'
        imagePullPolicy: Always
        name: etcd
        ports:
        - containerPort: 2381
          hostPort: 2381
          name: server
          protocol: TCP
        - containerPort: 2382
          hostPort: 2382
          name: peer
          protocol: TCP
        livenessProbe:
          failureThreshold: 3
          httpGet:
            path: /health
            port: 2381
            scheme: HTTP
          initialDelaySeconds: 30
          periodSeconds: 10
          successThreshold: 1
          timeoutSeconds: 1
        volumeMounts:
        - mountPath: /data
          name: data
      dnsPolicy: ClusterFirst
      hostNetwork: true
      restartPolicy: Always
      serviceAccount: kuboard-boostrap
      serviceAccountName: kuboard-boostrap
      tolerations:
      - key: node-role.kubernetes.io/master
        operator: Exists
      - key: node-role.kubernetes.io/control-plane
        operator: Exists
      volumes:
      - hostPath:
          path: /usr/share/kuboard/etcd
        name: data
  updateStrategy:
    rollingUpdate:
      maxUnavailable: 1
    type: RollingUpdate
---
apiVersion: apps/v1
kind: Deployment
metadata:
  annotations: {}
  labels:
    k8s.kuboard.cn/name: kuboard-v3
  name: kuboard-v3
  namespace: kuboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s.kuboard.cn/name: kuboard-v3
  template:
    metadata:
      labels:
        k8s.kuboard.cn/name: kuboard-v3
    spec:
      affinity:
        nodeAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
          - preference:
              matchExpressions:
              - key: node-role.kubernetes.io/master
                operator: Exists
            weight: 100
          - preference:
              matchExpressions:
              - key: node-role.kubernetes.io/control-plane
                operator: Exists
            weight: 100
      containers:
      - env:
        - name: HOSTIP
          valueFrom:
            fieldRef:
              apiVersion: v1
              fieldPath: status.hostIP
        - name: HOSTNAME
          valueFrom:
            fieldRef:
              apiVersion: v1
              fieldPath: spec.nodeName
        envFrom:
        - configMapRef:
            name: kuboard-v3-config
        image: 'eipwork/kuboard:v3'
        imagePullPolicy: Always
        livenessProbe:
          failureThreshold: 3
          httpGet:
            path: /kuboard-resources/version.json
            port: 80
            scheme: HTTP
          initialDelaySeconds: 30
          periodSeconds: 10
          successThreshold: 1
          timeoutSeconds: 1
        name: kuboard
        ports:
        - containerPort: 80
          name: web
          protocol: TCP
        - containerPort: 443
          name: https
          protocol: TCP
        - containerPort: 10081
          name: peer
          protocol: TCP
        - containerPort: 10081
          name: peer-u
          protocol: UDP
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /kuboard-resources/version.json
            port: 80
            scheme: HTTP
          initialDelaySeconds: 30
          periodSeconds: 10
          successThreshold: 1
          timeoutSeconds: 1
        resources: {}
        # startupProbe:
        #   failureThreshold: 20
        #   httpGet:
        #     path: /kuboard-resources/version.json
        #     port: 80
        #     scheme: HTTP
        #   initialDelaySeconds: 5
        #   periodSeconds: 10
        #   successThreshold: 1
        #   timeoutSeconds: 1
      dnsPolicy: ClusterFirst
      restartPolicy: Always
      serviceAccount: kuboard-boostrap
      serviceAccountName: kuboard-boostrap
      tolerations:
      - key: node-role.kubernetes.io/master
        operator: Exists
---
apiVersion: v1
kind: Service
metadata:
  annotations: {}
  labels:
    k8s.kuboard.cn/name: kuboard-v3
  name: kuboard-v3
  namespace: kuboard
spec:
  ports:
  - name: web
    nodePort: 30080
    port: 80
    protocol: TCP
    targetPort: 80
  - name: tcp
    nodePort: 30081
    port: 10081
    protocol: TCP
    targetPort: 10081
  - name: udp
    nodePort: 30081
    port: 10081
    protocol: UDP
    targetPort: 10081
  selector:
    k8s.kuboard.cn/name: kuboard-v3
  sessionAffinity: None
  type: NodePort

3. Accessing Kuboard v3

- All of the values below can be changed in the YAML file

- Open http://your-node-ip-address:30080 in a browser

- Username: admin

- Password: Kuboard123

4. Kuboard v3 reference documentation for version 1.26.1

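(10) Using volumes in pods

1. Reference documentation

- Rather than write this up again, see the reference document on using volumes in pods.

- The points below come from the author's somewhat hazy memory and can be skipped.

- One related topic is credentials (Secrets); search for material on deploying applications in k8s from a private Docker registry.

- hostPath, subPath (with a ConfigMap), and NFS mounts.

2. A simple volume example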

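# Create the Service that exposes the port
apiVersion: v1
kind: Service
metadata:
  name: nginx-service   # Service name
  namespace: dev        # namespace; defaults to default
spec:
  selector:
    app: nginx          # label selector (label of the pods to expose through this Service)
  ports:
  - name: p1            # name of the port exposed by this Service
    protocol: TCP       # TCP protocol
    port: 80            # port exposed by the Service
    targetPort: 80      # container port the Service forwards to
    nodePort: 30080     # port opened on the nodes for external access
  type: NodePort        # NodePort type; the default is ClusterIP (reachable only inside the cluster)
---
# Create the Deployment (the desired state, e.g. how many pods to run; it manages the pods through a ReplicaSet)
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deploy    # Deployment name
  namespace: dev        # namespace; defaults to default
spec:
  selector:
    matchLabels:
      app: nginx        # label selector (label of the pods this Deployment manages)
  replicas: 2           # number of replicas (pods to start)
  template:             # pod template
    metadata:           # metadata
      labels:
        app: nginx      # pod label, defined here and selected by the Service and Deployment above
    spec:
      imagePullSecrets:           # private registry, so credentials are needed
      - name: docker-reg-secret   # Secret generated from the private registry's user credentials
      containers:                 # containers
      - image: 8.134.99.24/pro/nginx:alpine_0202   # image
        imagePullPolicy: IfNotPresent              # pull policy: use the local image when it exists
        name: nginx                                # container name
        volumeMounts:                              # mounts inside the container
        - name: nginx-conf                         # mount 1
          mountPath: /etc/nginx/nginx.conf         # file path inside the container
          subPath: nginx.conf                      # mount only this key of the volume as a single file
        - name: html                               # mount 2
          mountPath: /usr/share/nginx/html         # directory path inside the container
        - name: conf                               # mount 3
          mountPath: /etc/nginx/conf.d             # directory path inside the container
        ports:
        - containerPort: 80                        # port exposed by the container
      volumes:                    # volumes that back the mounts above
      - name: nginx-conf          # matched to the mount above by name
        configMap:                # ConfigMap-backed volume
          name: nginx-conf        # ConfigMap name
      - name: html
        hostPath:                 # directory path on the host
          path: /data/nginx/html
      - name: conf
        hostPath:
          path: /data/nginx/conf.d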

3. A simple ephemeral volume example

apiVersion: apps/v1
kind: Deployment
metadata:
  name: jenkins
spec:
  replicas: 1
  selector:
    matchLabels:
      app: jenkins
  template:
    metadata:
      labels:
        app: jenkins
    spec:
      containers:
      - name: jenkins
        image: jenkins/jenkins:lts-jdk11
        ports:
        - containerPort: 8080
        volumeMounts:
        - name: jenkins-home
          mountPath: /var/jenkins_home
      volumes:
      - name: jenkins-home
        emptyDir: {}

(11) Deploying k8s 1.26.1

1. Install the matching docker-ce version

Install docker-ce-20.10.18-3.el7 and docker-ce-cli-20.10.18-3.el7

yum -y install docker-ce-20.10.18-3.el7 docker-ce-cli-20.10.18-3.el7

2. Export the containerd configuration file and edit it

Note: do not overwrite with ">"; append with ">>". Keep the disabled_plugins = ["cri"] line that is already in the file, but comment it out. Every node needs this change, otherwise image pulls time out and CRI errors appear. Networking still uses flannel: edit the yml as described earlier and create/apply it. If you want calico instead, look up the documentation yourself.

containerd config default >>/etc/containerd/config.toml

vim /etc/containerd/config.toml

# Comment out disabled_plugins = ["cri"]

#disabled_plugins = ["cri"]

# Replace sandbox_image with the following

sandbox_image = "registry.aliyuncs.com/google_containers/pause:3.8"

# Change SystemdCgroup from false to true. CentOS boots with systemd (the systemctl we use; before CentOS 7 it was /etc/rc.d/init.d), which creates a cgroup manager to manage cgroupfs. If containerd manages cgroupfs directly, a second cgroup manager appears, and a system with two cgroup managers is unstable. So containerd is configured to manage cgroups through systemd.

SystemdCgroup = true

# Under [plugins."io.containerd.grpc.v1.cri".registry.mirrors] add the lines below, keeping the indentation aligned.

[plugins."io.containerd.grpc.v1.cri".registry.mirrors."docker.io"]

#endpoint = ["https://registry-1.docker.io"]

# Comment out the line above and add the three lines below

endpoint = ["https://docker.mirrors.ustc.edu.cn"]

[plugins."io.containerd.grpc.v1.cri".registry.mirrors."k8s.gcr.io"]

endpoint = ["https://registry.aliyuncs.com/google_containers"]

systemctl restart containerd
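Three of the manual edits above (commenting out disabled_plugins, setting sandbox_image, and enabling SystemdCgroup) can be scripted with sed. A minimal sketch, assuming the file contains those settings in the form shown in this section; the function name patch_containerd_config is hypothetical, and the registry mirror entries are left for manual editing because they depend on the layout of the generated file.

```bash
#!/bin/bash
# Apply the simple one-line changes to a containerd config file.
patch_containerd_config() {
  local file="$1"
  sed -i \
    -e 's/^disabled_plugins = \["cri"]/#&/' \
    -e 's/SystemdCgroup = false/SystemdCgroup = true/' \
    -e 's#sandbox_image = ".*"#sandbox_image = "registry.aliyuncs.com/google_containers/pause:3.8"#' \
    "$file"
}

CONFIG=/etc/containerd/config.toml
if [ -f "$CONFIG" ]; then
  cp "$CONFIG" "$CONFIG.bak"          # keep a backup before editing
  patch_containerd_config "$CONFIG"
  # Show the lines that matter so the result can be checked by eye.
  grep -nE 'disabled_plugins|sandbox_image|SystemdCgroup' "$CONFIG"
fi
```

Restart containerd afterwards so that the changes take effect.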

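3. Edit the script

Use the same script, replacing the k8s and docker-ce versions with the lines below. Remember to change the version given in the init command as well. If init runs into problems, just run kubeadm reset -f.

vim k8s-init.sh

yum -y install docker-ce-20.10.18-3.el7 docker-ce-cli-20.10.18-3.el7

yum install -y kubelet-1.26.1 kubeadm-1.26.1 kubectl-1.26.1

4. Run the script

chmod +x k8s-init.sh

./k8s-init.sh

5. Offline fix for the runc vulnerability (CVE-2021-30465)

Reference: offline fix for the runc vulnerability (CVE-2021-30465)

Note: Component: runc

Vulnerability name: runc path traversal vulnerability / Docker runc container escape vulnerability

CVE ID: CVE-2021-30465

Fix: upgrade runc to 1.0.0-rc95 or later

The runc version used in this chapter is newer than 1.0.0-rc95, so this can be ignored.

6. containerd troubleshooting references

Reference tutorial for container errors reported by kubeadm init

A complete walkthrough of the pitfalls in configuring and installing a containerd-based cluster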

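(12) Material left unorganized

Reference documentation for deploying k8s 1.26 with kubeadm

Reference documentation for deploying ingress-nginx

Conclusion

- The YAML manifests are pasted into this article so that a failed download does not block you; feel free to skip over them, since the article itself is short.

- The content is meant for learning and for getting a basic feel for deploying and using a k8s cluster.

- Some of the reference documents contain pitfalls. With the notes collected here you can fully deploy either of two stacks.

- [k8s 1.18.8 cluster] + [metrics server] + [kuboard or kuboard v3]

- [k8s 1.26.1 cluster] + [metrics server] + [kuboard v3]

Note: when the original Kuboard is deployed on 1.26.1, no credential for kuboard-user can be found (the author could not find one, hence Kuboard v3). This matches the behavior of Kubernetes 1.24 and later, which no longer creates a token Secret automatically for each ServiceAccount; a token has to be requested explicitly, for example with kubectl create token.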
