The basic machine learning modeling workflow, through one worked example


machine learning for credit scoring

Banks play a crucial role in market economies. They decide who can get finance and on what terms and can make or break investment decisions. For markets and society to function, individuals and companies need access to credit.

Credit scoring algorithms, which make a guess at the probability of default, are the method banks use to determine whether or not a loan should be granted. This competition requires participants to improve on the state of the art in credit scoring, by predicting the probability that somebody will experience financial distress in the next two years.

Dataset

Attribute Information:

| Variable Name | Description | Type |
|---|---|---|
| SeriousDlqin2yrs | Person experienced 90 days past due delinquency or worse | Y/N |
| RevolvingUtilizationOfUnsecuredLines | Total balance on credit divided by the sum of credit limits | percentage |
| age | Age of borrower in years | integer |
| NumberOfTime30-59DaysPastDueNotWorse | Number of times borrower has been 30-59 days past due but no worse | integer |
| DebtRatio | Monthly debt payments, alimony, and living costs divided by monthly gross income | percentage |
| MonthlyIncome | Monthly income | real |
| NumberOfOpenCreditLinesAndLoans | Number of open loans and lines of credit | integer |
| NumberOfTimes90DaysLate | Number of times borrower has been 90 days or more past due | integer |
| NumberRealEstateLoansOrLines | Number of mortgage and real estate loans | integer |
| NumberOfTime60-89DaysPastDueNotWorse | Number of times borrower has been 60-89 days past due but no worse | integer |
| NumberOfDependents | Number of dependents in family | integer |

Load the data into pandas

import zipfile

import pandas as pd

# Show every column when printing wide DataFrames
pd.set_option('display.max_columns', 500)

# Read the CSV straight out of the zip archive; the first column holds the row index
with zipfile.ZipFile('KaggleCredit2.csv.zip', 'r') as z:
    with z.open('KaggleCredit2.csv') as f:
        data = pd.read_csv(f, index_col=0)

data.head()

| | SeriousDlqin2yrs | RevolvingUtilizationOfUnsecuredLines | age | NumberOfTime30-59DaysPastDueNotWorse | DebtRatio | MonthlyIncome | NumberOfOpenCreditLinesAndLoans | NumberOfTimes90DaysLate | NumberRealEstateLoansOrLines | NumberOfTime60-89DaysPastDueNotWorse | NumberOfDependents |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 1 | 0.766127 | 45.0 | 2.0 | 0.802982 | 9120.0 | 13.0 | 0.0 | 6.0 | 0.0 | 2.0 |
| 1 | 0 | 0.957151 | 40.0 | 0.0 | 0.121876 | 2600.0 | 4.0 | 0.0 | 0.0 | 0.0 | 1.0 |
| 2 | 0 | 0.658180 | 38.0 | 1.0 | 0.085113 | 3042.0 | 2.0 | 1.0 | 0.0 | 0.0 | 0.0 |
| 3 | 0 | 0.233810 | 30.0 | 0.0 | 0.036050 | 3300.0 | 5.0 | 0.0 | 0.0 | 0.0 | 0.0 |
| 4 | 0 | 0.907239 | 49.0 | 1.0 | 0.024926 | 63588.0 | 7.0 | 0.0 | 1.0 | 0.0 | 0.0 |

data.shape

(108648, 11)

Drop missing values

data.isnull().sum(axis=0)

SeriousDlqin2yrs 0
RevolvingUtilizationOfUnsecuredLines 0
age 0
NumberOfTime30-59DaysPastDueNotWorse 0
DebtRatio 0
MonthlyIncome 0
NumberOfOpenCreditLinesAndLoans 0
NumberOfTimes90DaysLate 0
NumberRealEstateLoansOrLines 0
NumberOfTime60-89DaysPastDueNotWorse 0
NumberOfDependents 0
dtype: int64

data.dropna(inplace=True)
data.shape

(108648, 11)

The shape does not change because no column contains missing values.

Create X and y

y = data['SeriousDlqin2yrs']

X = data.drop('SeriousDlqin2yrs', axis=1)import seaborn as sns

import matplotlib.pyplot as plt

%matplotlib inline

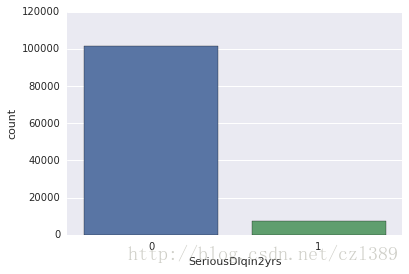

sns.countplot(x='SeriousDlqin2yrs',data=data)<matplotlib.axes._subplots.AxesSubplot at 0x7f0c07606dd0>

Far fewer samples have label 1 than label 0, so the classes are imbalanced.
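Imbalance matters when reading the accuracies that follow. A quick majority-class baseline makes this clear. The sketch below uses the test-set class counts as they appear in the confusion matrices in Exercise 4: 30,424 samples with label 0 and 2,171 with label 1. `baseline_accuracy` is a hypothetical helper written for illustration.

```python
def baseline_accuracy(counts):
    """Accuracy of a classifier that always predicts the most frequent class.

    counts maps each class label to its number of samples.
    """
    total = sum(counts.values())
    return max(counts.values()) / total


# Test-set class counts taken from the confusion matrices in Exercise 4
test_counts = {0: 30424, 1: 2171}

positive_rate = test_counts[1] / sum(test_counts.values())
print('Share of label 1: %.4f' % positive_rate)
print('Always-predict-0 accuracy: %.4f' % baseline_accuracy(test_counts))
```

Every model in this notebook scores around 0.93, which is close to this trivial baseline. Accuracy alone therefore says little about how well defaulters are detected.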

Exercise 1: Preparing the dataset

Split the data into a training set and a test set.
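Label 1 is rare, so a purely random split can leave the training and test sets with slightly different default rates. scikit-learn's `train_test_split` accepts a `stratify` argument (for example `stratify=y`) that keeps the class ratio the same in both parts. The standard-library sketch below illustrates the idea on made-up labels. `stratified_split` is a hypothetical helper, not the scikit-learn implementation.

```python
import random
from collections import defaultdict


def stratified_split(labels, test_size=0.3, seed=0):
    """Return (train_idx, test_idx) with roughly the same class ratio in both.

    Indices are grouped by label, each group is shuffled, and the same
    fraction of every group is moved to the test set.
    """
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for i, label in enumerate(labels):
        by_class[label].append(i)

    train_idx, test_idx = [], []
    for label, idx in by_class.items():
        rng.shuffle(idx)
        n_test = round(len(idx) * test_size)
        test_idx.extend(idx[:n_test])
        train_idx.extend(idx[n_test:])
    return sorted(train_idx), sorted(test_idx)


# Made-up labels with about 7% positives, similar to this dataset
labels = [1 if i % 15 == 0 else 0 for i in range(1500)]
train_idx, test_idx = stratified_split(labels, test_size=0.3)

for name, idx in [('train', train_idx), ('test', test_idx)]:
    rate = sum(labels[i] for i in idx) / len(idx)
    print('%s: %d samples, share of label 1 = %.4f' % (name, len(idx), rate))
```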

Splitting the dataset

# train_test_split moved to sklearn.model_selection in scikit-learn 0.18
try:
    from sklearn.model_selection import train_test_split
except ImportError:  # scikit-learn < 0.18
    from sklearn.cross_validation import train_test_split

# Hold out 30% of the samples as the test set
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0)

Scale the continuous features
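StandardScaler rescales each column to zero mean and unit variance. It computes these statistics on the training set only and transforms the test set with the same statistics. That is why the code calls `fit_transform` on `X_train` but only `transform` on `X_test`. Below is a minimal standard-library sketch of that computation for a single column, using made-up income values. The helper names are hypothetical.

```python
import math


def fit_scaler(column):
    """Learn mean and population standard deviation (ddof=0, as StandardScaler does)."""
    mean = sum(column) / len(column)
    var = sum((x - mean) ** 2 for x in column) / len(column)
    return mean, math.sqrt(var)


def transform(column, mean, std):
    """Apply z = (x - mean) / std with previously learned statistics."""
    return [(x - mean) / std for x in column]


# Made-up monthly incomes
train_income = [2000.0, 4000.0, 6000.0, 8000.0]
test_income = [5000.0, 10000.0]

mean, std = fit_scaler(train_income)          # statistics from training data only
train_z = transform(train_income, mean, std)
test_z = transform(test_income, mean, std)    # reuse the training statistics
print(mean, round(std, 2))
print([round(z, 3) for z in test_z])
```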

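from sklearn.preprocessing import StandardScaler

# Fit the scaler on the training set only, then apply the same transform to the test set
stdsc = StandardScaler()
X_train_std = stdsc.fit_transform(X_train)
X_test_std = stdsc.transform(X_test)

Exercise 2: Modeling with several machine learning methods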

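Build a logistic regression model, print its coefficients, and analyze feature importance.

Classify with other sklearn algorithms such as decision trees, SVM, and KNN. Try to understand what their parameters mean and experiment with different values.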

logistic regression

from sklearn.linear_model import LogisticRegression

# The L1 penalty needs the liblinear (or saga) solver in recent scikit-learn versions
lr = LogisticRegression(penalty='l1', C=1000.0, solver='liblinear', random_state=0)
lr.fit(X_train_std, y_train)
lr

LogisticRegression(C=1000.0, class_weight=None, dual=False,
fit_intercept=True, intercept_scaling=1, max_iter=100,
multi_class='ovr', n_jobs=1, penalty='l1', random_state=0,
solver='liblinear', tol=0.0001, verbose=0, warm_start=False)

print('Training accuracy: %f' % lr.score(X_train_std, y_train))

Training accuracy: 0.933126

Coefficients of the logistic regression model

import numpy as np

# Feature names: every column except the label
feat_labels = data.columns[1:]
coefs = lr.coef_
# Sort features by coefficient value, largest first
indices = np.argsort(coefs[0])[::-1]
for f in range(X_train.shape[1]):
    print('%2d) %-*s %f' % (f, 30, feat_labels[indices[f]], coefs[0, indices[f]]))

0) NumberOfTime30-59DaysPastDueNotWorse 1.728817
1) NumberOfTimes90DaysLate 1.689074
2) DebtRatio 0.312097
3) NumberOfDependents 0.116383
4) RevolvingUtilizationOfUnsecuredLines -0.014289
5) NumberOfOpenCreditLinesAndLoans -0.091914
6) MonthlyIncome -0.115238
7) NumberRealEstateLoansOrLines -0.196420
8) age -0.364304
9) NumberOfTime60-89DaysPastDueNotWorse -3.247956

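My understanding is that features whose weights have a large absolute value are the more important ones. The comparison is meaningful here because all features were standardized to the same scale before fitting. Two caveats apply. First, the loop above sorts by signed value, not by absolute value. Second, the three past-due counts are probably strongly correlated, which can make individual coefficients unstable. Individual signs, such as the large negative weight on NumberOfTime60-89DaysPastDueNotWorse, should therefore be read with caution.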
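Ranking by magnitude is a small change. The sketch below ranks the coefficients printed above by absolute value. With the fitted model you could equally sort `np.abs(lr.coef_[0])`. The dictionary is simply a copy of that output.

```python
# Coefficients copied from the output above (standardized features)
coefs = {
    'NumberOfTime30-59DaysPastDueNotWorse': 1.728817,
    'NumberOfTimes90DaysLate': 1.689074,
    'DebtRatio': 0.312097,
    'NumberOfDependents': 0.116383,
    'RevolvingUtilizationOfUnsecuredLines': -0.014289,
    'NumberOfOpenCreditLinesAndLoans': -0.091914,
    'MonthlyIncome': -0.115238,
    'NumberRealEstateLoansOrLines': -0.196420,
    'age': -0.364304,
    'NumberOfTime60-89DaysPastDueNotWorse': -3.247956,
}

# Rank by magnitude; the sign only gives the direction of the effect on the log-odds of label 1
ranked = sorted(coefs.items(), key=lambda kv: abs(kv[1]), reverse=True)
for rank, (name, w) in enumerate(ranked):
    print('%2d) %-38s %+.6f' % (rank, name, w))
```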

Decision tree

from sklearn.tree import DecisionTreeClassifier

# A shallow tree (depth 3) that chooses splits by information gain (entropy)
tree = DecisionTreeClassifier(criterion='entropy', max_depth=3, random_state=0)
tree.fit(X_train_std, y_train)

DecisionTreeClassifier(class_weight=None, criterion='entropy', max_depth=3,
max_features=None, max_leaf_nodes=None,
min_impurity_split=1e-07, min_samples_leaf=1,
min_samples_split=2, min_weight_fraction_leaf=0.0,
presort=False, random_state=0, splitter='best')

print('Training accuracy: %f' % tree.score(X_train_std, y_train))

Training accuracy: 0.934217
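With `criterion='entropy'`, the tree scores each candidate split by information gain. Information gain is the drop from the parent node's label entropy, H = -sum(p_k * log2(p_k)), to the size-weighted entropy of its children. Below is a standard-library sketch of that score for a single split. The node counts are made up, and `entropy` and `information_gain` are hypothetical helpers.

```python
import math


def entropy(counts):
    """Entropy (in bits) of a node given its class counts, e.g. [n_label0, n_label1]."""
    total = sum(counts)
    return -sum(c / total * math.log2(c / total) for c in counts if c > 0)


def information_gain(parent, left, right):
    """Parent entropy minus the size-weighted entropy of the two child nodes."""
    n = sum(parent)
    weighted = sum(left) / n * entropy(left) + sum(right) / n * entropy(right)
    return entropy(parent) - weighted


# Made-up node: 930 non-defaulters and 70 defaulters, split into two children
parent = [930, 70]
left, right = [880, 20], [50, 50]
print('parent entropy: %.4f' % entropy(parent))
print('information gain: %.4f' % information_gain(parent, left, right))
```

A pure node has entropy 0 and a 50/50 node has entropy 1 bit. The tree greedily picks the split with the largest gain, and `max_depth=3` stops it after three levels.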

SVM (support vector machine)

Training on all features took too long, so only one feature, NumberOfTime60-89DaysPastDueNotWorse, was used.

# Wrap the scaled arrays in DataFrames so that columns can be selected by name
X_train_std = pd.DataFrame(X_train_std, columns=feat_labels)
X_test_std = pd.DataFrame(X_test_std, columns=feat_labels)
X_train_std[['NumberOfTime60-89DaysPastDueNotWorse']].head()

| | NumberOfTime60-89DaysPastDueNotWorse |
|---|---|
| 0 | -0.054381 |
| 1 | -0.054381 |
| 2 | -0.054381 |
| 3 | -0.054381 |
| 4 | -0.054381 |

from sklearn.svm import SVC

# RBF-kernel SVM with default parameters, trained on a single feature
svm = SVC()
svm.fit(X_train_std[['NumberOfTime60-89DaysPastDueNotWorse']], y_train)

SVC(C=1.0, cache_size=200, class_weight=None, coef0=0.0,
decision_function_shape=None, degree=3, gamma='auto', kernel='rbf',
max_iter=-1, probability=False, random_state=None, shrinking=True,
tol=0.001, verbose=False)

print('Training accuracy: %f' % svm.score(X_train_std[['NumberOfTime60-89DaysPastDueNotWorse']], y_train))

Training accuracy: 0.932876

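KNN

from sklearn.neighbors import KNeighborsClassifier

# k = 5 neighbors; the Minkowski distance with p=2 is the Euclidean distance
knn = KNeighborsClassifier(n_neighbors=5, p=2, metric='minkowski')
knn.fit(X_train, y_train)

KNeighborsClassifier(algorithm='auto', leaf_size=30, metric='minkowski',
metric_params=None, n_jobs=1, n_neighbors=5, p=2,
weights='uniform')

Unlike the other models, KNN is fit on the unscaled X_train. KNN relies on distances, so features with large ranges such as MonthlyIncome dominate the result. The standardized X_train_std would usually be the better input.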
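The effect of scale on the distance is easy to check by hand. The sketch below takes rows 1 and 3 of `data.head()`, restricted to age and MonthlyIncome. It measures how much of the squared Euclidean distance comes from income, first unscaled and then after dividing each feature by an assumed spread. The spreads of 15 years and 5,000 are made-up values, not dataset statistics, and `minkowski` is a hypothetical helper.

```python
def minkowski(a, b, p=2):
    """Minkowski distance; p=2 gives the Euclidean distance used by the KNN above."""
    return sum(abs(x - y) ** p for x, y in zip(a, b)) ** (1 / p)


# (age, MonthlyIncome) for rows 1 and 3 of data.head()
row1 = (40.0, 2600.0)
row3 = (30.0, 3300.0)

d = minkowski(row1, row3)
income_share = (row1[1] - row3[1]) ** 2 / d ** 2
print('unscaled distance: %.2f, income share of squared distance: %.4f' % (d, income_share))

# Divide each feature by an assumed spread (made-up values, not dataset statistics)
scales = (15.0, 5000.0)
s1 = [x / s for x, s in zip(row1, scales)]
s3 = [x / s for x, s in zip(row3, scales)]
d_scaled = minkowski(s1, s3)
income_share_scaled = (s1[1] - s3[1]) ** 2 / d_scaled ** 2
print('scaled distance: %.4f, income share: %.4f' % (d_scaled, income_share_scaled))
```

Unscaled, income accounts for almost the whole distance, so the 10-year age gap is effectively ignored when neighbors are chosen.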

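Exercise 3: Predicting on the test set

Predict on the test set and compute the accuracy.

Note: lr, tree, and svm were trained on standardized features. In the code below, however, their predict() calls receive the unscaled X_test (for the SVM, the unscaled DebtRatio column instead of the feature it was trained on), while score() uses X_test_std. The printed error counts, and the confusion matrices built from these predictions in Exercise 4, are therefore inconsistent with the printed accuracies. For logistic regression, 2,171 errors means predicting 0 for all 32,595 test samples, whereas an accuracy of 0.933886 implies about 2,155 errors. Passing X_test_std (restricted to the training feature for the SVM) to predict() makes the two consistent. KNN is unaffected because it is trained and evaluated on unscaled data.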

logistic regression

y_pred_lr = lr.predict(X_test)
print('Misclassified samples: %d' % (y_test != y_pred_lr).sum())
print('Test accuracy: %f' % lr.score(X_test_std, y_test))

Misclassified samples: 2171
Test accuracy: 0.933886

Decision tree

y_pred_tree = tree.predict(X_test)
print('Misclassified samples: %d' % (y_test != y_pred_tree).sum())
print('Test accuracy: %f' % tree.score(X_test_std, y_test))

Misclassified samples: 2498
Test accuracy: 0.935021

SVM

y_pred_svm = svm.predict(X_test[['DebtRatio']])
print('Misclassified samples: %d' % (y_test != y_pred_svm).sum())
print('Test accuracy: %f' % svm.score(X_test_std[['NumberOfTime60-89DaysPastDueNotWorse']], y_test))

Misclassified samples: 4619
Test accuracy: 0.934100

KNN

y_pred_knn = knn.predict(X_test)
print('Misclassified samples: %d' % (y_test != y_pred_knn).sum())
print('Test accuracy: %f' % knn.score(X_test, y_test))

Misclassified samples: 2213
Test accuracy: 0.932106

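Exercise 4: Evaluation metrics

Read the official sklearn documentation on evaluation metrics such as the confusion matrix, and use them to evaluate this example.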

y的类别

class_names=np.unique(data['SeriousDlqin2yrs'].values)

class_namesarray([0, 1])

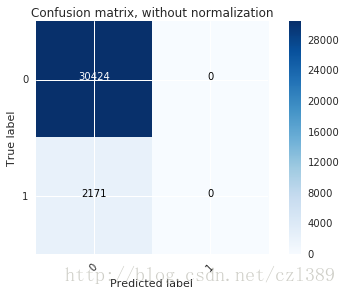

Confusion matrices of the four methods

from sklearn.metrics import confusion_matrix

# Rows are the true labels (0, 1); columns are the predicted labels (0, 1)
cnf_matrix_lr = confusion_matrix(y_test, y_pred_lr)
cnf_matrix_lr

array([[30424,     0],
       [ 2171,     0]])

cnf_matrix_tree = confusion_matrix(y_test, y_pred_tree)
cnf_matrix_tree

array([[29346,  1078],
       [ 1420,   751]])

cnf_matrix_svm = confusion_matrix(y_test, y_pred_svm)
cnf_matrix_svm

array([[27661,  2763],
       [ 1856,   315]])

cnf_matrix_knn = confusion_matrix(y_test, y_pred_knn)
cnf_matrix_knn

array([[30351,    73],
       [ 2140,    31]])
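Accuracy hides how poorly the minority class is handled. Precision, recall, and F1 for label 1 can be read directly off a 2×2 confusion matrix in the layout sklearn returns, [[TN, FP], [FN, TP]]. The standard-library sketch below applies them to the KNN matrix above. KNN was trained and evaluated on the same unscaled features, so its matrix is internally consistent. In scikit-learn, `sklearn.metrics.classification_report(y_test, y_pred_knn)` prints the same quantities. `binary_metrics` is a hypothetical helper.

```python
def binary_metrics(cm):
    """Metrics for the positive class from a matrix laid out as [[TN, FP], [FN, TP]]."""
    (tn, fp), (fn, tp) = cm
    accuracy = (tn + tp) / (tn + fp + fn + tp)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0   # also called sensitivity / true positive rate
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {'accuracy': accuracy, 'precision': precision, 'recall': recall, 'f1': f1}


cnf_matrix_knn = [[30351, 73], [2140, 31]]   # copied from the output above
for name, value in binary_metrics(cnf_matrix_knn).items():
    print('%-9s %.4f' % (name, value))
```

The accuracy reproduces the 0.932106 reported for KNN, while the recall shows that only 31 of the 2,171 defaulters are caught.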

A function for plotting a confusion matrix

import itertools

import numpy as np
import matplotlib.pyplot as plt
from sklearn.metrics import confusion_matrix

def plot_confusion_matrix(cm, classes,
                          normalize=False,
                          title='Confusion matrix',
                          cmap=plt.cm.Blues):
    """
    This function prints and plots the confusion matrix.
    Normalization can be applied by setting `normalize=True`.
    """
    if normalize:
        # Divide each row by its total so that every row sums to 1
        cm = cm.astype('float') / cm.sum(axis=1)[:, np.newaxis]
        print("Normalized confusion matrix")
    else:
        print('Confusion matrix, without normalization')

    print(cm)

    plt.imshow(cm, interpolation='nearest', cmap=cmap)
    plt.title(title)
    plt.colorbar()
    tick_marks = np.arange(len(classes))
    plt.xticks(tick_marks, classes, rotation=45)
    plt.yticks(tick_marks, classes)

    # Write each cell's value: white text on dark cells, black on light ones
    fmt = '.2f' if normalize else 'd'
    thresh = cm.max() / 2.
    for i, j in itertools.product(range(cm.shape[0]), range(cm.shape[1])):
        plt.text(j, i, format(cm[i, j], fmt),
                 horizontalalignment="center",
                 color="white" if cm[i, j] > thresh else "black")

    plt.tight_layout()
    plt.ylabel('True label')
    plt.xlabel('Predicted label')

Visualization

Using logistic regression as an example

np.set_printoptions(precision=2)

# Plot non-normalized confusion matrix

plt.figure()

plot_confusion_matrix(cnf_matrix_lr, classes=class_names,

title='Confusion matrix, without normalization')

# Plot normalized confusion matrix

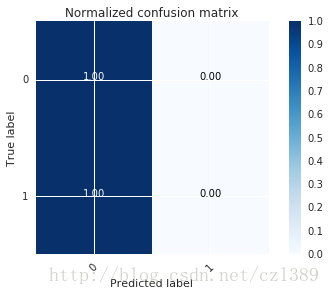

plt.figure()

plot_confusion_matrix(cnf_matrix_lr, classes=class_names, normalize=True,

title='Normalized confusion matrix')

plt.show()Confusion matrix, without normalization

[[30424 0]

[ 2171 0]]

Normalized confusion matrix

[[ 1. 0.]

[ 1. 0.]]

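The model classifies every sample with true label 0 correctly but misclassifies every sample with true label 1. It predicts 0 for all test samples, so its recall for label 1 is zero.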
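The `normalize=True` branch of `plot_confusion_matrix` divides each row by its total, so the diagonal of the normalized matrix is the recall of each class. Below is a standard-library version of that step, applied to the logistic regression matrix above. `normalize_rows` is a hypothetical helper.

```python
def normalize_rows(cm):
    """Divide each row of a confusion matrix by its sum (rows = true labels)."""
    return [[value / sum(row) for value in row] for row in cm]


cnf_matrix_lr = [[30424, 0], [2171, 0]]   # copied from the output above
norm = normalize_rows(cnf_matrix_lr)
per_class_recall = [norm[k][k] for k in range(len(norm))]
print(norm)              # [[1.0, 0.0], [1.0, 0.0]]
print(per_class_recall)  # [1.0, 0.0]
```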

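Exercise 5: Tuning model parameters

Banks usually impose stricter requirements, because the consequences of fraud are typically severe. The model's decision criterion is therefore usually adjusted.

For example, logistic regression normally uses a probability cutoff of 0.5. The threshold can be lowered to raise the model's sensitivity (its recall on label 1). Try setting the threshold to 0.3, then look at the confusion matrix and the other evaluation metrics again.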
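With a fitted scikit-learn model, a custom threshold is applied to the positive-class probabilities returned by `predict_proba`, for example `(lr.predict_proba(X_test_std)[:, 1] >= 0.3).astype(int)`. This replaces `predict()`, which for a binary logistic regression amounts to a 0.5 cutoff. The standard-library sketch below shows the effect on made-up probabilities and labels. The helper names are hypothetical.

```python
def predict_with_threshold(probs, threshold):
    """Label a sample 1 when its predicted probability of default reaches the threshold."""
    return [1 if p >= threshold else 0 for p in probs]


def confusion(y_true, y_pred):
    """2x2 confusion matrix [[TN, FP], [FN, TP]], the same layout as sklearn."""
    cm = [[0, 0], [0, 0]]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    return cm


# Made-up probabilities of default and true labels for eight borrowers
probs = [0.05, 0.10, 0.35, 0.20, 0.45, 0.60, 0.32, 0.08]
y_true = [0, 0, 1, 0, 1, 1, 0, 0]

for threshold in (0.5, 0.3):
    cm = confusion(y_true, predict_with_threshold(probs, threshold))
    recall = cm[1][1] / (cm[1][0] + cm[1][1])
    print(threshold, cm, 'recall = %.2f' % recall)
```

Lowering the cutoff catches more defaulters (recall rises from 1/3 to 1 here) at the cost of more false alarms (one extra false positive).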

from sklearn.linear_model import LogisticRegression

lr = LogisticRegression(penalty='l2', C=1000.0, solver='liblinear', random_state=0,
                        class_weight={1: 0.3, 0: 0.7})
lr.fit(X_train, y_train)
lr

LogisticRegression(C=1000.0, class_weight={0: 0.7, 1: 0.3}, dual=False,
fit_intercept=True, intercept_scaling=1, max_iter=100,
multi_class='ovr', n_jobs=1, penalty='l2', random_state=0,
solver='liblinear', tol=0.0001, verbose=0, warm_start=False)

y_pred_lr = lr.predict(X_test)
print('Misclassified samples: %d' % (y_test != y_pred_lr).sum())
print('Test accuracy: %f' % lr.score(X_test, y_test))

Misclassified samples: 2169
Test accuracy: 0.933456

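from sklearn.metrics import confusion_matrix

cnf_matrix_lr = confusion_matrix(y_test, y_pred_lr)
cnf_matrix_lr

array([[30410,    14],
       [ 2155,    16]])

The code above does not apply the 0.3 threshold; it changes class_weight instead. The setting {1: 0.3, 0: 0.7} gives errors on the rare label 1 less weight than errors on label 0. This pushes the model toward predicting 0 and tends to lower sensitivity rather than raise it. To raise sensitivity, either give label 1 the larger weight (for example class_weight='balanced') or keep the fitted model and compare lr.predict_proba(X_test)[:, 1] with 0.3 instead of calling predict().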
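scikit-learn's `class_weight='balanced'` sets each class weight to n_samples / (n_classes * count_of_class), which gives the rare class the larger weight. The standard-library sketch below applies that formula, for illustration only, to the test-set counts from the confusion matrices. The training-set counts are not printed in this notebook. `balanced_weights` is a hypothetical helper.

```python
def balanced_weights(counts):
    """Weights n_samples / (n_classes * n_c), the formula behind class_weight='balanced'."""
    n_samples = sum(counts.values())
    n_classes = len(counts)
    return {c: n_samples / (n_classes * n) for c, n in counts.items()}


counts = {0: 30424, 1: 2171}          # class counts of the test set
weights = balanced_weights(counts)
print({c: round(w, 3) for c, w in weights.items()})

# After weighting, each class carries the same total weight in the loss
print({c: round(weights[c] * n, 1) for c, n in counts.items()})
```

One way to push the model toward flagging more defaulters is to pass `class_weight='balanced'`, or a dictionary with the larger weight on class 1, to `LogisticRegression`. Comparing the resulting confusion matrix with the one above would show whether recall actually improves.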

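Exercise 6: Feature selection and retraining

Rank the features by importance and keep only a subset of them. Then retrain the model and examine its accuracy and other evaluation metrics.

Feature selection with a random forest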

from sklearn.ensemble import RandomForestClassifier

feat_labels = data.columns[1:]
# 10,000 trees is expensive; n_jobs=-1 uses all CPU cores
forest = RandomForestClassifier(n_estimators=10000, random_state=0, n_jobs=-1)
forest.fit(X_train, y_train)
importances = forest.feature_importances_

import numpy as np

# Rank features by impurity-based importance, largest first
indices = np.argsort(importances)[::-1]
for f in range(X_train.shape[1]):
    print('%2d) %-*s %f' % (f, 30, feat_labels[indices[f]], importances[indices[f]]))

0) NumberOfDependents 0.188808
1) NumberOfTime60-89DaysPastDueNotWorse 0.173198
2) NumberRealEstateLoansOrLines 0.165334
3) NumberOfTimes90DaysLate 0.122311
4) NumberOfOpenCreditLinesAndLoans 0.089278
5) MonthlyIncome 0.087939
6) DebtRatio 0.051493
7) NumberOfTime30-59DaysPastDueNotWorse 0.045888
8) age 0.043824
9) RevolvingUtilizationOfUnsecuredLines 0.031928
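The number of features to keep can be chosen explicitly. One option is to sort by importance and keep the top k. Another is to keep features until they reach a given share of the total importance. The sketch below uses the importances printed above; the 0.6 cumulative cutoff is an arbitrary illustrative choice. scikit-learn also offers `sklearn.feature_selection.SelectFromModel` for threshold-based selection from a fitted model.

```python
# Importances copied from the random forest output above
importances = {
    'NumberOfDependents': 0.188808,
    'NumberOfTime60-89DaysPastDueNotWorse': 0.173198,
    'NumberRealEstateLoansOrLines': 0.165334,
    'NumberOfTimes90DaysLate': 0.122311,
    'NumberOfOpenCreditLinesAndLoans': 0.089278,
    'MonthlyIncome': 0.087939,
    'DebtRatio': 0.051493,
    'NumberOfTime30-59DaysPastDueNotWorse': 0.045888,
    'age': 0.043824,
    'RevolvingUtilizationOfUnsecuredLines': 0.031928,
}


def top_k(imp, k):
    """Names of the k most important features."""
    return sorted(imp, key=imp.get, reverse=True)[:k]


def until_cumulative(imp, share):
    """Smallest set of top features whose importances sum to at least `share` of the total."""
    total = sum(imp.values())
    chosen, running = [], 0.0
    for name in top_k(imp, len(imp)):
        chosen.append(name)
        running += imp[name]
        if running >= share * total:
            break
    return chosen


print(top_k(importances, 4))
print(until_cumulative(importances, 0.6))
```

With these numbers, the four features selected below together account for about 65% of the total importance.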

Build a logistic regression model on the top 4 features

X_train_4feat = X_train_std[['NumberOfDependents', 'NumberOfTime60-89DaysPastDueNotWorse',
                             'NumberRealEstateLoansOrLines', 'NumberOfTimes90DaysLate']]
X_test_4feat = X_test_std[['NumberOfDependents', 'NumberOfTime60-89DaysPastDueNotWorse',
                           'NumberRealEstateLoansOrLines', 'NumberOfTimes90DaysLate']]

from sklearn.linear_model import LogisticRegression

lr = LogisticRegression(penalty='l1', C=1000.0, solver='liblinear', random_state=0)
lr.fit(X_train_4feat, y_train)
lr

LogisticRegression(C=1000.0, class_weight=None, dual=False,
fit_intercept=True, intercept_scaling=1, max_iter=100,
multi_class='ovr', n_jobs=1, penalty='l1', random_state=0,
solver='liblinear', tol=0.0001, verbose=0, warm_start=False)

print('Training accuracy: %f' % lr.score(X_train_4feat, y_train))

Training accuracy: 0.933086

y_pred_lr = lr.predict(X_test_4feat)
print('Misclassified samples: %d' % (y_test != y_pred_lr).sum())
print('Test accuracy: %f' % lr.score(X_test_4feat, y_test))

Misclassified samples: 2161
Test accuracy: 0.933701

cnf_matrix_lr = confusion_matrix(y_test, y_pred_lr)
cnf_matrix_lr

array([[30381,    43],
       [ 2118,    53]])

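The final results show that, despite feature selection and parameter tuning, the confusion-matrix metrics are still poor. The model catches only 53 of the 2,171 actual defaulters in the test set (a recall of about 2.4%), even though its accuracy is about 93%.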
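Accuracy is dominated by the majority class, so credit-scoring models are often compared with a threshold-free metric such as ROC AUC. ROC AUC is the probability that a randomly chosen defaulter receives a higher score than a randomly chosen non-defaulter. With scikit-learn, this would be `roc_auc_score(y_test, lr.predict_proba(X_test_4feat)[:, 1])` from `sklearn.metrics`. The standard-library sketch below computes the same quantity by pairwise comparison on made-up scores. It runs in quadratic time, so it only suits small inputs, and `roc_auc` is a hypothetical helper.

```python
def roc_auc(y_true, scores):
    """ROC AUC as the share of (positive, negative) pairs ranked correctly; ties count 0.5."""
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    if not pos or not neg:
        raise ValueError('need at least one sample of each class')
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Made-up default scores for eight borrowers
y_true = [0, 0, 1, 0, 1, 1, 0, 0]
scores = [0.05, 0.10, 0.35, 0.20, 0.45, 0.60, 0.40, 0.08]
print('AUC = %.3f' % roc_auc(y_true, scores))
```

An AUC of 0.5 corresponds to random ranking and 1.0 to perfect ranking. Unlike accuracy, AUC gives no credit for always predicting the majority class.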
