Kubernetes 1.4 Basics: Learn Kubernetes 1.4 by 6 steps (2): Step 1. Create a Kubernetes cluster

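In this article we will learn how to create a cluster quickly with kubeadm. Unlike the interactive minikube environment offered by Google's official tutorial, we will build a real, close-to-minimal Kubernetes cluster made up of three nodes and one master.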

Kubernetes

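Kubernetes coordinates a highly available cluster of machines so that it serves as a single unit. With Kubernetes you can deploy containerized applications to the cluster without having to decide which specific machine they land on; the precondition is that the application has been containerized. Containerized applications are more flexible than traditional deployment models because they are decoupled from individual hosts. Kubernetes distributes and schedules application containers across the whole cluster automatically and very efficiently. And, as has been repeated many times: Kubernetes is open source (free of charge), and Kubernetes is production-ready (it works well).

Out of respect for what Kubernetes has contributed, we do not mind reciting the textbook once more; we only hope the next version is even easier to use.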

Components

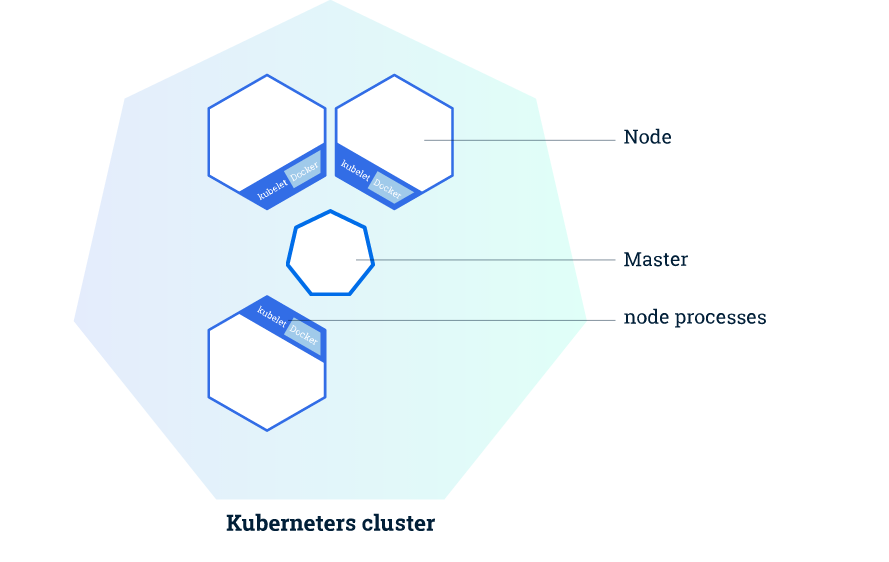

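A Kubernetes cluster consists of the following two types of resources:

| Type | Role |
|---|---|
| Master | Coordinates and controls the cluster |
| Node | The "worker" that actually runs application containers |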

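Cluster composition

Composition details

The Master's main responsibility is to manage the cluster and coordinate all activity in it, for example:

- Scheduling applications

- Maintaining application state

- Scaling applications

- Rolling out application updates, and so on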

Node

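A Node is a VM or physical machine that acts as a worker in a Kubernetes cluster. Every Node runs a kubelet, an agent that manages communication between the node and the Master. The node also needs Docker, or more generally a tool that handles container operations, because Kubernetes is not tied to Docker: rkt, which we introduced earlier, is supported by Kubernetes as well. rkt originated at CoreOS, and its close integration with Kubernetes is easily read as a counterweight to Docker, but whether it wins acceptance in the market is not a purely technical question.

A Kubernetes cluster that is broadly usable in production should have at least three nodes. Kubernetes exists to coordinate and orchestrate nodes; you can of course insist on running it with a single node, but using a dragon-slaying sword just to trim wallpaper does feel like overkill.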

Master

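The Master manages the cluster. When you deploy an application on a Kubernetes cluster, you ask the Master to start the application containers. The Master schedules those containers onto nodes in the cluster, and the nodes communicate with the Master through the Kubernetes API (users can also use the Kubernetes API directly to interact with the cluster).

Creating the Kubernetes cluster

A Kubernetes cluster can be deployed on physical machines or on virtual machines. You could use minikube, but this article uses kubeadm to create a cluster of 3 nodes + 1 Master.

Layout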

| No. | Role | Hostname | IP |
|---|---|---|---|
| No.1 | Master | host31 | 192.168.32.31 |
| No.2 | Node | host32 | 192.168.32.32 |
| No.3 | Node | host33 | 192.168.32.33 |
| No.4 | Node | host34 | 192.168.32.34 |
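
It helps when reading the logs if the four machines can resolve each other by name. The following is a minimal sketch, assuming name resolution is handled through /etc/hosts (the article does not say how the hosts were configured). The function `cluster_hosts` is a hypothetical helper that only prints the entries so they can be reviewed first.

```shell
#!/bin/sh
# Print /etc/hosts-style entries for the four machines in the layout table.
# Assumption: names are resolved via /etc/hosts; the article does not specify this.
cluster_hosts() {
    cat <<'EOF'
192.168.32.31 host31
192.168.32.32 host32
192.168.32.33 host33
192.168.32.34 host34
EOF
}

# Review the output first, then append it as root on each machine, e.g.:
#   cluster_hosts >> /etc/hosts
cluster_hosts
```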

easypack_kubernetes.sh

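When Kubernetes 1.4 was released, it announced to the world that a cluster could be created with two commands. With unrestricted network access (for example through a VPN) that is indeed the case, because 1.4 automatically pulls whatever dependencies it needs. If you cannot pull the google_containers images, however, this otherwise trivial step is exactly where you get stuck. To help myself and others, I wrote a script and put it on GitHub (https://github.com/liumiaocn/easypack/tree/master/k8s). Basic usage is sh easypack_kubernetes.sh MASTER to create the Master, and sh easypack_kubernetes.sh NODE token MASTERIP to join a node. Everything else, such as installing docker and kubelet/kubectl/kubeadm and downloading the containers in advance, is built in. All versions are currently pinned to 1.4.1. The script is extremely simple and does little more than string Google's steps together, so feel free to modify it to suit your needs.

Creating the Master

In Kubernetes 1.4, creating the master takes a single command, kubeadm init. The precondition is network access: kubeadm automatically pulls the image versions it needs, which saves you from checking the version of each image over and over, but it also makes the installation slower. If you look at the Linux system log (/var/log/messages), you will see that during installation an image is pulled only when the correct version cannot be found locally, so pulling everything you need in advance is a good idea.

Commands: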
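
The two invocation forms described above can be summarized in a small argument check. This is only an illustrative sketch and not the content of easypack_kubernetes.sh. The function name `easypack_cmd` is hypothetical, and it prints the command instead of running it.

```shell
#!/bin/sh
# Hypothetical helper: validate the arguments and print the easypack command to run.
# It mirrors the usage described in the text; it is NOT the script's own code.
easypack_cmd() {
    role=$1
    case "$role" in
        MASTER)
            echo "sh easypack_kubernetes.sh MASTER"
            ;;
        NODE)
            # A node needs the token printed by the master plus the master's IP.
            if [ $# -ne 3 ]; then
                echo "usage: easypack_cmd NODE <token> <master-ip>" >&2
                return 1
            fi
            echo "sh easypack_kubernetes.sh NODE $2 $3"
            ;;
        *)
            echo "usage: easypack_cmd MASTER | NODE <token> <master-ip>" >&2
            return 1
            ;;
    esac
}

easypack_cmd MASTER
easypack_cmd NODE 77eddc.77edc19a7ade45d6 192.168.32.31
```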
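
Pulling in advance amounts to fetching each image from a reachable mirror and retagging it under the name kubeadm expects. The following dry-run sketch assumes the mirror copies live under the Docker Hub namespace `liumiaocn` with identical image names and tags (inferred from the script's log output, not verified). It only prints the docker commands so they can be inspected before anything is executed.

```shell
#!/bin/sh
# Dry run: print the docker commands that pull an image from a mirror,
# retag it as gcr.io/google_containers/<image>, and drop the mirror tag.
# Assumption: the mirror namespace "liumiaocn" holds identically named images.
MIRROR=${MIRROR:-liumiaocn}
TARGET=gcr.io/google_containers

retag_plan() {
    for image in "$@"; do
        echo "docker pull $MIRROR/$image"
        echo "docker tag $MIRROR/$image $TARGET/$image"
        echo "docker rmi $MIRROR/$image"
    done
}

# Two of the images that appear in the installation log further down.
retag_plan kube-apiserver-amd64:v1.4.1 pause-amd64:3.0
```

Once the printed plan looks right, it could be executed by piping it to a shell, for example `retag_plan pause-amd64:3.0 | sh`.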

git clone https://github.com/liumiaocn/easypack

cd easypack/k8s

sh easypack_kubernetes.sh MASTER

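> You do not need git set up locally to run this; you can copy the contents of easypack_kubernetes.sh from the GitHub repository above and create the file locally with vi.

Installation time: 10 minutes (almost all of it spent pulling the google_containers images)

Sample output: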

[root@host31 ~]# git clone https://github.com/liumiaocn/easypack

Cloning into 'easypack'...

remote: Counting objects: 67, done.

remote: Compressing objects: 100% (52/52), done.

remote: Total 67 (delta 11), reused 0 (delta 0), pack-reused 15

Unpacking objects: 100% (67/67), done.

[root@host31 ~]# cd easypack/k8s

[root@host31 k8s]# sh easypack_kubernetes.sh MASTER

Wed Nov 9 05:05:53 EST 2016

##INSTALL LOG : /tmp/k8s_install.1456.log

##Step 1: Stop firewall ...

Wed Nov 9 05:05:53 EST 2016

##Step 2: set repository and install kubeadm etc...

install kubectl in Master...

#######Set docker proxy when needed. If ready, press any to continue...

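Note: you need to press Enter here to continue. The pause gives you a chance to configure a Docker proxy by hand after Docker has been installed; if you do not use a proxy, just press Enter.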

Wed Nov 9 05:07:04 EST 2016

##Step 3: pull google containers...

Now begin to pull images from liumiaocn

No.1 : kube-proxy-amd64:v1.4.1 pull begins ...

No.1 : kube-proxy-amd64:v1.4.1 pull ends ...

No.1 : kube-proxy-amd64:v1.4.1 rename ...

No.1 : kube-proxy-amd64:v1.4.1 untag ...

No.2 : kube-discovery-amd64:1.0 pull begins ...

No.2 : kube-discovery-amd64:1.0 pull ends ...

No.2 : kube-discovery-amd64:1.0 rename ...

No.2 : kube-discovery-amd64:1.0 untag ...

No.3 : kube-scheduler-amd64:v1.4.1 pull begins ...

No.3 : kube-scheduler-amd64:v1.4.1 pull ends ...

No.3 : kube-scheduler-amd64:v1.4.1 rename ...

No.3 : kube-scheduler-amd64:v1.4.1 untag ...

No.4 : kube-controller-manager-amd64:v1.4.1 pull begins ...

No.4 : kube-controller-manager-amd64:v1.4.1 pull ends ...

No.4 : kube-controller-manager-amd64:v1.4.1 rename ...

No.4 : kube-controller-manager-amd64:v1.4.1 untag ...

No.5 : kube-apiserver-amd64:v1.4.1 pull begins ...

No.5 : kube-apiserver-amd64:v1.4.1 pull ends ...

No.5 : kube-apiserver-amd64:v1.4.1 rename ...

No.5 : kube-apiserver-amd64:v1.4.1 untag ...

No.6 : pause-amd64:3.0 pull begins ...

No.6 : pause-amd64:3.0 pull ends ...

No.6 : pause-amd64:3.0 rename ...

No.6 : pause-amd64:3.0 untag ...

No.7 : etcd-amd64:2.2.5 pull begins ...

No.7 : etcd-amd64:2.2.5 pull ends ...

No.7 : etcd-amd64:2.2.5 rename ...

No.7 : etcd-amd64:2.2.5 untag ...

No.8 : kubedns-amd64:1.7 pull begins ...

No.8 : kubedns-amd64:1.7 pull ends ...

No.8 : kubedns-amd64:1.7 rename ...

No.8 : kubedns-amd64:1.7 untag ...

No.9 : kube-dnsmasq-amd64:1.3 pull begins ...

No.9 : kube-dnsmasq-amd64:1.3 pull ends ...

No.9 : kube-dnsmasq-amd64:1.3 rename ...

No.9 : kube-dnsmasq-amd64:1.3 untag ...

No.10 : exechealthz-amd64:1.1 pull begins ...

No.10 : exechealthz-amd64:1.1 pull ends ...

No.10 : exechealthz-amd64:1.1 rename ...

No.10 : exechealthz-amd64:1.1 untag ...

No.11 : kubernetes-dashboard-amd64:v1.4.1 pull begins ...

No.11 : kubernetes-dashboard-amd64:v1.4.1 pull ends ...

No.11 : kubernetes-dashboard-amd64:v1.4.1 rename ...

No.11 : kubernetes-dashboard-amd64:v1.4.1 untag ...

All images have been pulled to local as following

gcr.io/google_containers/kube-controller-manager-amd64 v1.4.1 ad7b34f5ecb8 11 days ago 142.4 MB

gcr.io/google_containers/kube-apiserver-amd64 v1.4.1 4a76dd338dfe 4 weeks ago 152.1 MB

gcr.io/google_containers/kube-scheduler-amd64 v1.4.1 f9641959ec72 4 weeks ago 81.67 MB

gcr.io/google_containers/kube-proxy-amd64 v1.4.1 b47199222245 4 weeks ago 202.7 MB

gcr.io/google_containers/kubernetes-dashboard-amd64 v1.4.1 1dda73f463b2 4 weeks ago 86.76 MB

gcr.io/google_containers/kube-discovery-amd64 1.0 c5e0c9a457fc 6 weeks ago 134.2 MB

gcr.io/google_containers/kubedns-amd64 1.7 bec33bc01f03 10 weeks ago 55.06 MB

gcr.io/google_containers/kube-dnsmasq-amd64 1.3 9a15e39d0db8 4 months ago 5.126 MB

gcr.io/google_containers/pause-amd64 3.0 99e59f495ffa 6 months ago 746.9 kB

Wed Nov 9 05:14:18 EST 2016

Wed Nov 9 05:14:18 EST 2016

##Step 4: kubeadm init

Running pre-flight checks

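Note: if the process stalls here, the host does not meet the installation requirements, and resolving the reported problems is usually enough. Typical causes are that /etc/kubernetes already contains files or that some required ports are already in use.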
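
One of the causes just mentioned can be checked before running the script. The following is a small sketch, assuming that an absent or empty /etc/kubernetes is what the pre-flight check expects (the exact rules belong to kubeadm and may differ between versions). The function name `dir_is_clean` is hypothetical.

```shell
#!/bin/sh
# Hypothetical pre-check: succeed if the directory is absent or has no entries.
dir_is_clean() {
    dir=$1
    [ ! -e "$dir" ] && return 0
    # ls -A lists everything except "." and ".."; empty output means an empty dir.
    [ -d "$dir" ] && [ -z "$(ls -A "$dir")" ]
}

if dir_is_clean /etc/kubernetes; then
    echo "/etc/kubernetes is clean"
else
    echo "/etc/kubernetes already has content; clean it up before kubeadm init"
fi
```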

<master/tokens> generated token: "77eddc.77edc19a7ade45d6"

<master/pki> generated Certificate Authority key and certificate:

Issuer: CN=kubernetes | Subject: CN=kubernetes | CA: true

Not before: 2016-11-09 10:14:19 +0000 UTC Not After: 2026-11-07 10:14:19 +0000 UTC

Public: /etc/kubernetes/pki/ca-pub.pem

Private: /etc/kubernetes/pki/ca-key.pem

Cert: /etc/kubernetes/pki/ca.pem

<master/pki> generated API Server key and certificate:

Issuer: CN=kubernetes | Subject: CN=kube-apiserver | CA: false

Not before: 2016-11-09 10:14:19 +0000 UTC Not After: 2017-11-09 10:14:19 +0000 UTC

Alternate Names: [192.168.32.31 10.0.0.1 kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local]

Public: /etc/kubernetes/pki/apiserver-pub.pem

Private: /etc/kubernetes/pki/apiserver-key.pem

Cert: /etc/kubernetes/pki/apiserver.pem

<master/pki> generated Service Account Signing keys:

Public: /etc/kubernetes/pki/sa-pub.pem

Private: /etc/kubernetes/pki/sa-key.pem

<master/pki> created keys and certificates in "/etc/kubernetes/pki"

<util/kubeconfig> created "/etc/kubernetes/kubelet.conf"

<util/kubeconfig> created "/etc/kubernetes/admin.conf"

<master/apiclient> created API client configuration

<master/apiclient> created API client, waiting for the control plane to become ready

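Note: this is where the installation most often hangs. From this point on, the cause is almost always that the google_containers images were not downloaded, or that the wrong versions were downloaded. For details, see the separate article on installing with kubeadm.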
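
Before retrying, it can help to compare what is present locally with what the control plane needs. The following sketch makes two assumptions: the list of required images is taken from this article's log and may not match other versions, and the input is one `repository:tag` per line, such as the output of `docker images --format '{{.Repository}}:{{.Tag}}'`. The function name `missing_images` is hypothetical.

```shell
#!/bin/sh
# Print every required image (given as arguments) that is absent from the
# list read on stdin. stdin format: one "repository:tag" per line.
missing_images() {
    have=$(cat)
    for want in "$@"; do
        # -x: match the whole line, -F: fixed string, -q: no output
        printf '%s\n' "$have" | grep -qxF "$want" || echo "$want"
    done
}

# Example with a captured list instead of a live docker daemon.
printf '%s\n' \
    gcr.io/google_containers/kube-apiserver-amd64:v1.4.1 \
    gcr.io/google_containers/pause-amd64:3.0 |
missing_images \
    gcr.io/google_containers/kube-apiserver-amd64:v1.4.1 \
    gcr.io/google_containers/etcd-amd64:2.2.5
```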

<master/apiclient> all control plane components are healthy after 17.439551 seconds

<master/apiclient> waiting for at least one node to register and become ready

<master/apiclient> first node is ready after 4.004373 seconds

<master/apiclient> attempting a test deployment

<master/apiclient> test deployment succeeded

<master/discovery> created essential addon: kube-discovery, waiting for it to become ready

<master/discovery> kube-discovery is ready after 2.001892 seconds

<master/addons> created essential addon: kube-proxy

<master/addons> created essential addon: kube-dns

Kubernetes master initialised successfully!

You can now join any number of machines by running the following on each node:

kubeadm join --token=77eddc.77edc19a7ade45d6 192.168.32.31

Note: be sure to record this. The token is required when other nodes join the cluster.
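
Because the token is needed later, it is worth capturing the join line as soon as init finishes. The following is a minimal sketch, assuming the output was saved to a file (the script reports an install log under /tmp, but whether that log contains this line is not confirmed here). The function name `join_command` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: print the first "kubeadm join" line found in saved output.
join_command() {
    # Drop leading whitespace and keep only the command, token and master IP.
    sed -n 's/^[[:space:]]*\(kubeadm join --token=[^[:space:]]* [^[:space:]]*\).*$/\1/p' "$1" | head -n 1
}

# Example using a sample file that mimics the output shown above.
sample=$(mktemp)
printf '%s\n' \
    'You can now join any number of machines by running the following on each node:' \
    'kubeadm join --token=77eddc.77edc19a7ade45d6 192.168.32.31' > "$sample"
join_command "$sample"
rm -f "$sample"
```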

Wed Nov 9 05:14:46 EST 2016

##Step 5: taint nodes...

node "host31" tainted

NAME STATUS AGE

host31 Ready 5s

Note: the Master is normally not used as a node for running workloads; the approach taken in this step is a compromise.

Wed Nov 9 05:14:46 EST 2016

##Step 6: confirm version...

Client Version: version.Info{Major:"1", Minor:"4", GitVersion:"v1.4.1", GitCommit:"33cf7b9acbb2cb7c9c72a10d6636321fb180b159", GitTreeState:"clean", BuildDate:"2016-10-10T18:19:49Z", GoVersion:"go1.6.3", Compiler:"gc", Platform:"linux/amd64"}

Server Version: version.Info{Major:"1", Minor:"4", GitVersion:"v1.4.1", GitCommit:"33cf7b9acbb2cb7c9c72a10d6636321fb180b159", GitTreeState:"clean", BuildDate:"2016-10-10T18:13:36Z", GoVersion:"go1.6.3", Compiler:"gc", Platform:"linux/amd64"}

kubeadm version: version.Info{Major:"1", Minor:"5+", GitVersion:"v1.5.0-alpha.1.409+714f816a349e79", GitCommit:"714f816a349e7978bc93b35c67ce7b9851e53a6f", GitTreeState:"clean", BuildDate:"2016-10-17T13:01:29Z", GoVersion:"go1.6.3", Compiler:"gc", Platform:"linux/amd64"}

Wed Nov 9 05:14:47 EST 2016

##Step 7: set weave-kube ...

daemonset "weave-net" created

[root@host31 k8s]#

Adding nodes

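Once the Master has been created, you can add nodes to the cluster, starting with host32.

Command: sh easypack_kubernetes.sh NODE 77eddc.77edc19a7ade45d6 192.168.32.31

Installation time: about 10 minutes (again mostly docker pull time, though it also depends on your network speed and the state of Docker Hub)

Sample installation output: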

[root@host32 k8s]# sh easypack_kubernetes.sh NODE 77eddc.77edc19a7ade45d6 192.168.32.31

Wed Nov 9 05:43:10 EST 2016

##INSTALL LOG : /tmp/k8s_install.10517.log

##Step 1: Stop firewall ...

Wed Nov 9 05:43:10 EST 2016

##Step 2: set repository and install kubeadm etc...

#######Set docker proxy when needed. If ready, press any to continue...

Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /etc/systemd/system/kubelet.service.

Wed Nov 9 05:44:04 EST 2016

##Step 3: pull google containers...

Now begin to pull images from liumiaocn

No.1 : kube-proxy-amd64:v1.4.1 pull begins ...

No.1 : kube-proxy-amd64:v1.4.1 pull ends ...

No.1 : kube-proxy-amd64:v1.4.1 rename ...

No.1 : kube-proxy-amd64:v1.4.1 untag ...

No.2 : kube-discovery-amd64:1.0 pull begins ...

No.2 : kube-discovery-amd64:1.0 pull ends ...

No.2 : kube-discovery-amd64:1.0 rename ...

No.2 : kube-discovery-amd64:1.0 untag ...

No.3 : kube-scheduler-amd64:v1.4.1 pull begins ...

No.3 : kube-scheduler-amd64:v1.4.1 pull ends ...

No.3 : kube-scheduler-amd64:v1.4.1 rename ...

No.3 : kube-scheduler-amd64:v1.4.1 untag ...

No.4 : kube-controller-manager-amd64:v1.4.1 pull begins ...

No.4 : kube-controller-manager-amd64:v1.4.1 pull ends ...

No.4 : kube-controller-manager-amd64:v1.4.1 rename ...

No.4 : kube-controller-manager-amd64:v1.4.1 untag ...

No.5 : kube-apiserver-amd64:v1.4.1 pull begins ...

No.5 : kube-apiserver-amd64:v1.4.1 pull ends ...

No.5 : kube-apiserver-amd64:v1.4.1 rename ...

No.5 : kube-apiserver-amd64:v1.4.1 untag ...

No.6 : pause-amd64:3.0 pull begins ...

No.6 : pause-amd64:3.0 pull ends ...

No.6 : pause-amd64:3.0 rename ...

No.6 : pause-amd64:3.0 untag ...

No.7 : etcd-amd64:2.2.5 pull begins ...

No.7 : etcd-amd64:2.2.5 pull ends ...

No.7 : etcd-amd64:2.2.5 rename ...

No.7 : etcd-amd64:2.2.5 untag ...

No.8 : kubedns-amd64:1.7 pull begins ...

No.8 : kubedns-amd64:1.7 pull ends ...

No.8 : kubedns-amd64:1.7 rename ...

No.8 : kubedns-amd64:1.7 untag ...

No.9 : kube-dnsmasq-amd64:1.3 pull begins ...

No.9 : kube-dnsmasq-amd64:1.3 pull ends ...

No.9 : kube-dnsmasq-amd64:1.3 rename ...

No.9 : kube-dnsmasq-amd64:1.3 untag ...

No.10 : exechealthz-amd64:1.1 pull begins ...

No.10 : exechealthz-amd64:1.1 pull ends ...

No.10 : exechealthz-amd64:1.1 rename ...

No.10 : exechealthz-amd64:1.1 untag ...

No.11 : kubernetes-dashboard-amd64:v1.4.1 pull begins ...

No.11 : kubernetes-dashboard-amd64:v1.4.1 pull ends ...

No.11 : kubernetes-dashboard-amd64:v1.4.1 rename ...

No.11 : kubernetes-dashboard-amd64:v1.4.1 untag ...

All images have been pulled to local as following

gcr.io/google_containers/kube-controller-manager-amd64 v1.4.1 ad7b34f5ecb8 11 days ago 142.4 MB

gcr.io/google_containers/pause-amd64 latest 19047b725e84 3 weeks ago 746.9 kB

gcr.io/google_containers/kube-apiserver-amd64 v1.4.1 4a76dd338dfe 4 weeks ago 152.1 MB

gcr.io/google_containers/kube-scheduler-amd64 v1.4.1 f9641959ec72 4 weeks ago 81.67 MB

gcr.io/google_containers/kube-proxy-amd64 v1.4.1 b47199222245 4 weeks ago 202.7 MB

gcr.io/google_containers/kubernetes-dashboard-amd64 v1.4.1 1dda73f463b2 4 weeks ago 86.76 MB

gcr.io/google_containers/kube-proxy-amd64 v1.4.0 1f6aa6a8c3dc 6 weeks ago 202.8 MB

gcr.io/google_containers/kube-apiserver-amd64 v1.4.0 828dcf2a8776 6 weeks ago 152.3 MB

gcr.io/google_containers/kube-controller-manager-amd64 v1.4.0 b77714f7dc16 6 weeks ago 142.2 MB

gcr.io/google_containers/kube-scheduler-amd64 v1.4.0 9ed70b516ca8 6 weeks ago 81.8 MB

gcr.io/google_containers/kube-discovery-amd64 1.0 c5e0c9a457fc 6 weeks ago 134.2 MB

gcr.io/google_containers/kubedns-amd64 1.7 bec33bc01f03 10 weeks ago 55.06 MB

gcr.io/google_containers/kube-dnsmasq-amd64 1.3 9a15e39d0db8 4 months ago 5.126 MB

gcr.io/google_containers/pause-amd64 3.0 99e59f495ffa 6 months ago 746.9 kB

Wed Nov 9 05:52:49 EST 2016

Wed Nov 9 05:52:49 EST 2016

##Step 4: kubeadm join

Running pre-flight checks

<util/tokens> validating provided token

<node/discovery> created cluster info discovery client, requesting info from "http://192.168.32.31:9898/cluster-info/v1/?token-id=77eddc"

<node/discovery> cluster info object received, verifying signature using given token

<node/discovery> cluster info signature and contents are valid, will use API endpoints [https://192.168.32.31:6443]

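Note: the join can stall here. For example, a mismatch between this host's date and time and the Master's will cause it to hang. For other ways to get stuck, see the notes in the Master section or the summary in the separate article.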
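
A clock mismatch is easy to rule out up front. The following rough sketch assumes you can obtain the Master's epoch time somehow (for instance with `ssh host31 date +%s`, which presumes SSH access that the article does not mention) and that a 60-second tolerance is acceptable. Both are illustrative choices, and the function name `clock_skew_ok` is hypothetical.

```shell
#!/bin/sh
# Succeed if two epoch timestamps differ by at most the given tolerance (seconds).
clock_skew_ok() {
    local_ts=$1
    master_ts=$2
    tolerance=${3:-60}   # illustrative default, not a kubeadm requirement
    diff=$((local_ts - master_ts))
    # Take the absolute value of the difference.
    [ "$diff" -lt 0 ] && diff=$((0 - diff))
    [ "$diff" -le "$tolerance" ]
}

# Example with fixed values; on a real host use "$(date +%s)" for the local time.
if clock_skew_ok 1478686390 1478686400; then
    echo "clocks agree closely enough"
else
    echo "clock skew too large; sync the time before kubeadm join"
fi
```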

<node/bootstrap> trying to connect to endpoint https://192.168.32.31:6443

<node/bootstrap> detected server version v1.4.1

<node/bootstrap> successfully established connection with endpoint https://192.168.32.31:6443

<node/csr> created API client to obtain unique certificate for this node, generating keys and certificate signing request

<node/csr> received signed certificate from the API server:

Issuer: CN=kubernetes | Subject: CN=system:node:host32 | CA: false

Not before: 2016-11-09 10:49:00 +0000 UTC Not After: 2017-11-09 10:49:00 +0000 UTC

<node/csr> generating kubelet configuration

<util/kubeconfig> created "/etc/kubernetes/kubelet.conf"

Node join complete:

* Certificate signing request sent to master and response

received.

* Kubelet informed of new secure connection details.

Run 'kubectl get nodes' on the master to see this machine join.

Wed Nov 9 05:52:55 EST 2016

##Step 5: confirm version...

kubeadm version: version.Info{Major:"1", Minor:"5+", GitVersion:"v1.5.0-alpha.1.409+714f816a349e79", GitCommit:"714f816a349e7978bc93b35c67ce7b9851e53a6f", GitTreeState:"clean", BuildDate:"2016-10-17T13:01:29Z", GoVersion:"go1.6.3", Compiler:"gc", Platform:"linux/amd64"}

[root@host32 k8s]#

Verifying the result

Run kubectl get nodes on the Master to see the composition of the cluster.

[root@host31 k8s]# kubectl get nodes

NAME STATUS AGE

host31 Ready 42m

host32 Ready 17m

host33 Ready 3m

host34 Ready 5m

[root@host31 k8s]#
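
For scripting, the same check can be reduced to counting the nodes that report Ready. The following small sketch assumes the three-column format shown above (NAME STATUS AGE). It reads the text from standard input so it can be tried on saved output, and the function name `ready_count` is hypothetical.

```shell
#!/bin/sh
# Count nodes whose STATUS column is exactly "Ready" in `kubectl get nodes` output.
ready_count() {
    # NR > 1 skips the header line; $2 is the STATUS column.
    awk 'NR > 1 && $2 == "Ready" { n++ } END { print n + 0 }'
}

# Example with the output captured above; on the Master, pipe in the live command:
#   kubectl get nodes | ready_count
ready_count <<'EOF'
NAME STATUS AGE
host31 Ready 42m
host32 Ready 17m
host33 Ready 3m
host34 Ready 5m
EOF
```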
