【K8s Resource Scheduling: Deployment】

Resource scheduling for stateless services

1. Labels and Selectors

1.1 Labels

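- In configuration files: set labels under each resource's `metadata.labels` (for the pods of a Deployment, under `spec.template.metadata.labels`).

- On the command line, create or modify labels with `kubectl label`:

  - Add a label: `kubectl label po <resource-name> app=hello -n <namespace (optional)>`

  - Change an existing label: `kubectl label po <resource-name> app=hello -n <namespace (optional)> --overwrite`

- View and query labels:

  - Find pods by a single label value with a selector: `kubectl get po -A -l app=hello`

  - Show the labels of all pods: `kubectl get po --show-labels`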
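Label keys and values must follow a fixed syntax, which is worth checking before adding labels by hand. The Python sketch below is a simplified pre-check based on the rules in the Kubernetes labels documentation: an optional DNS-subdomain prefix of up to 253 characters followed by `/`, and a name segment and value of at most 63 characters that start and end with an alphanumeric character, with `-`, `_` and `.` allowed in between (values may also be empty). The function names are hypothetical, and the API server remains the final authority.

```python
import re

# Name segment of a key, or a non-empty value: alphanumeric at both ends,
# with '-', '_' and '.' allowed in between.
_NAME_RE = re.compile(r"([A-Za-z0-9][-A-Za-z0-9_.]*)?[A-Za-z0-9]")
# Optional key prefix: a DNS subdomain (lowercase labels separated by dots).
_DNS_SUBDOMAIN_RE = re.compile(r"[a-z0-9]([-a-z0-9]*[a-z0-9])?(\.[a-z0-9]([-a-z0-9]*[a-z0-9])?)*")


def is_valid_label_key(key: str) -> bool:
    """Validate '<prefix>/<name>' or '<name>'."""
    prefix, slash, name = key.rpartition("/")
    if slash and not (0 < len(prefix) <= 253 and _DNS_SUBDOMAIN_RE.fullmatch(prefix)):
        return False
    return 0 < len(name) <= 63 and _NAME_RE.fullmatch(name) is not None


def is_valid_label_value(value: str) -> bool:
    """Validate a label value; the empty string is allowed."""
    return value == "" or (len(value) <= 63 and _NAME_RE.fullmatch(value) is not None)


if __name__ == "__main__":
    for key, value in [("app", "hello"), ("auth", "xiaobai"), ("test", "l.0.0"), ("-bad", "x")]:
        print(f"{key}={value}:", is_valid_label_key(key) and is_valid_label_value(value))
```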

```shell
# Create the pod
[root@k8s-master ~]# kubectl create -f nginx-po.yml
pod/nginx-liveness-po created

# Check the pod status
[root@k8s-master ~]# kubectl get po nginx-liveness-po
NAME                READY   STATUS    RESTARTS   AGE
nginx-liveness-po   1/1     Running   0          51s

# Check the pod status, including its labels
[root@k8s-master ~]# kubectl get po nginx-liveness-po --show-labels
NAME                READY   STATUS    RESTARTS   AGE   LABELS
nginx-liveness-po   1/1     Running   0          68s   test=l.0.0,type=app

# Add a label to the pod on the fly
[root@k8s-master ~]# kubectl label po nginx-liveness-po auth=xiaobai
pod/nginx-liveness-po labeled
[root@k8s-master ~]# kubectl get po nginx-liveness-po --show-labels
NAME                READY   STATUS    RESTARTS   AGE    LABELS
nginx-liveness-po   1/1     Running   0          105s   auth=xiaobai,test=l.0.0,type=app

# Changing an existing label fails without --overwrite; add --overwrite
[root@k8s-master ~]# kubectl label po nginx-liveness-po auth=xiaohong
error: 'auth' already has a value (xiaobai), and --overwrite is false
[root@k8s-master ~]# kubectl label po nginx-liveness-po auth=xiaohong --overwrite
pod/nginx-liveness-po labeled

# The label value has been changed
[root@k8s-master ~]# kubectl get po nginx-liveness-po --show-labels
NAME                READY   STATUS    RESTARTS   AGE    LABELS
nginx-liveness-po   1/1     Running   0          2m3s   auth=xiaohong,test=l.0.0,type=app

# Find pods by a single label
[root@k8s-master ~]# kubectl get po -A -l type=app
NAMESPACE   NAME                READY   STATUS    RESTARTS   AGE
default     nginx-liveness-po   1/1     Running   0          8m27s
```

1.2 Selectors

- In YAML: write selectors in each object's `spec.selector`, or in any other field that accepts a selector.

- On the command line, the syntax is:

  - Match a single value, e.g. pods with app=hello: `kubectl get po -A -l app=hello`

  - Match any of several values: `kubectl get po -A -l 'k8s-app in (metrics-server,kubernetes-dashboard)'`

  - Pods with version!=1 and app=nginx: `kubectl get po -l version!=1,app=nginx`
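To make the matching rules concrete, here is a small cluster-free Python model of the selector syntax above: equality (`=`, `==`, `!=`), set-based (`in`, `notin`), and the existence checks `key` and `!key`. It is a simplified sketch, not kubectl's or the API server's parser, and it does no validation. It reproduces two behaviours visible in the outputs below: `!=` (like `notin`) also matches pods that do not have the key at all, which is why the kube-system pods show up for `app!=flannel`, and comma-separated requirements are combined with AND.

```python
import re
from typing import Callable, Dict, List

Labels = Dict[str, str]
Requirement = Callable[[Labels], bool]

# "key in (v1,v2)" or "key notin (v1,v2)"
_SET_RE = re.compile(r"([^\s!=(),]+)\s+(in|notin)\s+\((.*)\)")


def split_requirements(selector: str) -> List[str]:
    """Split a selector on commas that are not inside parentheses."""
    parts: List[str] = []
    depth, current = 0, ""
    for ch in selector:
        if ch == "(":
            depth += 1
        elif ch == ")":
            depth -= 1
        if ch == "," and depth == 0:
            parts.append(current.strip())
            current = ""
        else:
            current += ch
    if current.strip():
        parts.append(current.strip())
    return parts


def parse_requirement(text: str) -> Requirement:
    """Turn one requirement into a predicate over a pod's labels."""
    m = _SET_RE.fullmatch(text)
    if m:
        key, op, raw = m.groups()
        values = {v.strip() for v in raw.split(",")}
        if op == "in":
            return lambda labels: labels.get(key) in values
        return lambda labels: labels.get(key) not in values  # also true if the key is absent
    if "!=" in text:
        key, value = (s.strip() for s in text.split("!=", 1))
        return lambda labels: labels.get(key) != value  # also true if the key is absent
    if "=" in text:
        key, value = (s.strip() for s in text.replace("==", "=", 1).split("=", 1))
        return lambda labels: labels.get(key) == value
    if text.startswith("!"):
        key = text[1:].strip()
        return lambda labels: key not in labels  # "!key": the key must not exist
    return lambda labels: text in labels  # "key": the key must exist


def matches(selector: str, labels: Labels) -> bool:
    """Comma-separated requirements are ANDed: every one must hold."""
    return all(parse_requirement(r)(labels) for r in split_requirements(selector))


if __name__ == "__main__":
    pod = {"auth": "xiaohong", "test": "l.0.0", "type": "app"}
    coredns = {"k8s-app": "kube-dns", "pod-template-hash": "c676cc86f"}
    for sel in ["type=app", "app!=flannel", "app!=flannel,test=1.0.0", "app!=flannel,type=app"]:
        print(sel, matches(sel, pod), matches(sel, coredns))
```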

```shell
# Single-value match
[root@k8s-master ~]# kubectl get po -A -l type=app --show-labels
NAMESPACE   NAME                READY   STATUS    RESTARTS   AGE   LABELS
default     nginx-liveness-po   1/1     Running   0          18m   auth=xiaohong,test=l.0.0,type=app

# Show the labels of all pods
[root@k8s-master ~]# kubectl get po -A --show-labels
NAMESPACE      NAME                                 READY   STATUS    RESTARTS      AGE     LABELS
default        nginx-liveness-po                    1/1     Running   0             18m     auth=xiaohong,test=l.0.0,type=app
kube-flannel   kube-flannel-ds-glkkb                1/1     Running   4 (24h ago)   3d19h   app=flannel,controller-revision-hash=7cfb6d964b,pod-template-generation=1,tier=node
kube-flannel   kube-flannel-ds-pdmtw                1/1     Running   1 (24h ago)   44h     app=flannel,controller-revision-hash=7cfb6d964b,pod-template-generation=1,tier=node
kube-flannel   kube-flannel-ds-tpm8x                1/1     Running   2 (24h ago)   3d19h   app=flannel,controller-revision-hash=7cfb6d964b,pod-template-generation=1,tier=node
kube-system    coredns-c676cc86f-pdsl6              1/1     Running   3 (24h ago)   2d7h    k8s-app=kube-dns,pod-template-hash=c676cc86f
kube-system    coredns-c676cc86f-q7hcw              1/1     Running   1 (24h ago)   2d7h    k8s-app=kube-dns,pod-template-hash=c676cc86f
kube-system    etcd-k8s-master                      1/1     Running   2 (24h ago)   3d20h   component=etcd,tier=control-plane
kube-system    kube-apiserver-k8s-master            1/1     Running   3 (24h ago)   3d20h   component=kube-apiserver,tier=control-plane
kube-system    kube-controller-manager-k8s-master   1/1     Running   4 (24h ago)   3d20h   component=kube-controller-manager,tier=control-plane
kube-system    kube-proxy-n2w92                     1/1     Running   4 (24h ago)   3d19h   controller-revision-hash=dd4c999cf,k8s-app=kube-proxy,pod-template-generation=1
kube-system    kube-proxy-p8fhs                     1/1     Running   1 (24h ago)   44h     controller-revision-hash=dd4c999cf,k8s-app=kube-proxy,pod-template-generation=1
kube-system    kube-proxy-xtllb                     1/1     Running   2 (24h ago)   3d20h   controller-revision-hash=dd4c999cf,k8s-app=kube-proxy,pod-template-generation=1
kube-system    kube-scheduler-k8s-master            1/1     Running   4 (24h ago)   3d20h   component=kube-scheduler,tier=control-plane

# Single-value inequality match app!=flannel (pods without an app label also match)
[root@k8s-master ~]# kubectl get po -A -l app!=flannel
NAMESPACE      NAME                                 READY   STATUS    RESTARTS      AGE
default        nginx-liveness-po                    1/1     Running   0             19m
kube-system    coredns-c676cc86f-pdsl6              1/1     Running   3 (24h ago)   2d7h
kube-system    coredns-c676cc86f-q7hcw              1/1     Running   1 (24h ago)   2d7h
kube-system    etcd-k8s-master                      1/1     Running   2 (24h ago)   3d20h
kube-system    kube-apiserver-k8s-master            1/1     Running   3 (24h ago)   3d20h
kube-system    kube-controller-manager-k8s-master   1/1     Running   4 (24h ago)   3d20h
kube-system    kube-proxy-n2w92                     1/1     Running   4 (24h ago)   3d19h
kube-system    kube-proxy-p8fhs                     1/1     Running   1 (24h ago)   44h
kube-system    kube-proxy-xtllb                     1/1     Running   2 (24h ago)   3d20h
kube-system    kube-scheduler-k8s-master            1/1     Running   4 (24h ago)   3d20h
[root@k8s-master ~]# kubectl get po -A -l app!=flannel --show-labels
NAMESPACE      NAME                                 READY   STATUS    RESTARTS      AGE     LABELS
default        nginx-liveness-po                    1/1     Running   0             19m     auth=xiaohong,test=l.0.0,type=app
kube-system    coredns-c676cc86f-pdsl6              1/1     Running   3 (24h ago)   2d7h    k8s-app=kube-dns,pod-template-hash=c676cc86f
kube-system    coredns-c676cc86f-q7hcw              1/1     Running   1 (24h ago)   2d7h    k8s-app=kube-dns,pod-template-hash=c676cc86f
kube-system    etcd-k8s-master                      1/1     Running   2 (24h ago)   3d20h   component=etcd,tier=control-plane
kube-system    kube-apiserver-k8s-master            1/1     Running   3 (24h ago)   3d20h   component=kube-apiserver,tier=control-plane
kube-system    kube-controller-manager-k8s-master   1/1     Running   4 (24h ago)   3d20h   component=kube-controller-manager,tier=control-plane
kube-system    kube-proxy-n2w92                     1/1     Running   4 (24h ago)   3d19h   controller-revision-hash=dd4c999cf,k8s-app=kube-proxy,pod-template-generation=1
kube-system    kube-proxy-p8fhs                     1/1     Running   1 (24h ago)   44h     controller-revision-hash=dd4c999cf,k8s-app=kube-proxy,pod-template-generation=1
kube-system    kube-proxy-xtllb                     1/1     Running   2 (24h ago)   3d20h   controller-revision-hash=dd4c999cf,k8s-app=kube-proxy,pod-template-generation=1
kube-system    kube-scheduler-k8s-master            1/1     Running   4 (24h ago)   3d20h   component=kube-scheduler,tier=control-plane

# Multi-value match: nothing matches, because the pod's test label is l.0.0 (letter l), not 1.0.0
[root@k8s-master ~]# kubectl get po -A -l app!=flannel,test=1.0.0 --show-labels
No resources found

# Multi-value match: comma-separated requirements are combined with AND, not OR
[root@k8s-master ~]# kubectl get po -A -l app!=flannel,type=app --show-labels
NAMESPACE   NAME                READY   STATUS    RESTARTS   AGE   LABELS
default     nginx-liveness-po   1/1     Running   0          20m   auth=xiaohong,test=l.0.0,type=app
```

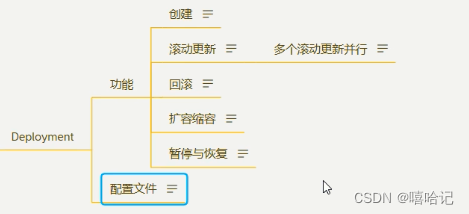

2. Deployment (stateless applications)

2.1 Creating a Deployment

2.1.1 Creating a Deployment from the command line

```shell
# Create a Deployment
[root@k8s-master ~]# kubectl create deploy nginx-deploy --image=nginx:1.20
deployment.apps/nginx-deploy created
```

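There are two ways to create a Deployment:

- From the command line: `kubectl create deploy nginx-deploy --image=nginx:1.20`

- From a manifest: `kubectl create -f xxx.yaml --record`

`--record` stores the command that created or updated the resource in the `kubernetes.io/change-cause` annotation, so you can later see which change produced each revision (the CHANGE-CAUSE column of `kubectl rollout history`). Newer kubectl versions mark `--record` as deprecated; setting that annotation yourself has the same effect.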

2.1.2 Viewing the Deployment

With `kubectl get`, you can use either the full resource name `deployments` or the short name `deploy`:

```shell
[root@k8s-master ~]# kubectl get deployments
NAME           READY   UP-TO-DATE   AVAILABLE   AGE
nginx-deploy   1/1     1            1           10s
[root@k8s-master ~]# kubectl get deploy
NAME           READY   UP-TO-DATE   AVAILABLE   AGE
nginx-deploy   1/1     1            1           14s
```

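2.1.3 Viewing the ReplicaSet

A Deployment does not manage pods directly: it creates a ReplicaSet, which keeps the desired number of pods running and is scaled up or down by the Deployment. The ReplicaSet name contains the Deployment name: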

```shell
[root@k8s-master ~]# kubectl get rs
NAME                      DESIRED   CURRENT   READY   AGE
nginx-deploy-86b7d8c46d   1         1         1       18s
[root@k8s-master ~]# kubectl get replicasets.apps
NAME                      DESIRED   CURRENT   READY   AGE
nginx-deploy-86b7d8c46d   1         1         1       38s
```

2.1.4 Viewing the pods

The names of the pods created by the Deployment contain the ReplicaSet name:

```shell
[root@k8s-master ~]# kubectl get po
NAME                            READY   STATUS    RESTARTS   AGE
nginx-deploy-86b7d8c46d-78rj9   1/1     Running   0          46s
```

2.1.5 Generating YAML from the Deployment created on the command line

```shell
# Generate YAML from the existing Deployment
[root@k8s-master ~]# kubectl get deploy nginx-deploy -o yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  annotations:
    deployment.kubernetes.io/revision: "1"
  creationTimestamp: "2024-02-23T10:59:04Z"
  generation: 1
  labels:
    app: nginx-deploy
  name: nginx-deploy
  namespace: default
  resourceVersion: "235341"
  uid: def47aae-13f7-415a-a9d1-18ef72e5a925
spec:
  progressDeadlineSeconds: 600
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      app: nginx-deploy
  strategy:
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
    type: RollingUpdate
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: nginx-deploy
    spec:
      containers:
      - image: nginx:1.20
        imagePullPolicy: IfNotPresent
        name: nginx
        resources: {}
        terminationMessagePath: /dev/termination-log
        terminationMessagePolicy: File
      dnsPolicy: ClusterFirst
      restartPolicy: Always
      schedulerName: default-scheduler
      securityContext: {}
      terminationGracePeriodSeconds: 30
status:
  availableReplicas: 1
  conditions:
  - lastTransitionTime: "2024-02-23T10:59:05Z"
    lastUpdateTime: "2024-02-23T10:59:05Z"
    message: Deployment has minimum availability.
    reason: MinimumReplicasAvailable
    status: "True"
    type: Available
  - lastTransitionTime: "2024-02-23T10:59:04Z"
    lastUpdateTime: "2024-02-23T10:59:05Z"
    message: ReplicaSet "nginx-deploy-86b7d8c46d" has successfully progressed.
    reason: NewReplicaSetAvailable
    status: "True"
    type: Progressing
  observedGeneration: 1
  readyReplicas: 1
  replicas: 1
  updatedReplicas: 1
```

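2.1.6 Viewing the labels of the pod, ReplicaSet, and Deployment

The output below shows that:

- the Deployment, ReplicaSet, and pod all carry the same label, `app=nginx-deploy`;

- the ReplicaSet and pod additionally share the label `pod-template-hash=86b7d8c46d`. The Deployment controller derives this value from a hash of the pod template and adds it to the ReplicaSet's selector and to its pods, so the pods of different ReplicaSets belonging to the same Deployment never overlap.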

```shell
[root@k8s-master ~]# kubectl get po,rs,deploy --show-labels
NAME                                READY   STATUS    RESTARTS   AGE   LABELS
pod/nginx-deploy-86b7d8c46d-78rj9   1/1     Running   0          30m   app=nginx-deploy,pod-template-hash=86b7d8c46d

NAME                                      DESIRED   CURRENT   READY   AGE   LABELS
replicaset.apps/nginx-deploy-86b7d8c46d   1         1         1       30m   app=nginx-deploy,pod-template-hash=86b7d8c46d

NAME                           READY   UP-TO-DATE   AVAILABLE   AGE   LABELS
deployment.apps/nginx-deploy   1/1     1            1           30m   app=nginx-deploy
```

2.1.7 Writing a YAML file based on the output of 2.1.5

Starting from the YAML generated for the command-line Deployment, keep only the fields we need:

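```yaml
apiVersion: apps/v1          # Deployment API version
kind: Deployment             # resource type: Deployment
metadata:                    # metadata
  labels:                    # labels
    app: nginx-deploy        # the label app=nginx-deploy, written as key: value
  name: nginx-deploy         # name of the Deployment
  namespace: default         # namespace it lives in
spec:
  replicas: 1                # desired number of replicas
  revisionHistoryLimit: 10   # number of old revisions (ReplicaSets) kept for rollback
  selector:                  # label selector for the pods (and ReplicaSets) this Deployment manages
    matchLabels:             # match by labels
      app: nginx-deploy      # label to match
  strategy:                  # update strategy
    rollingUpdate:           # rolling update settings
      maxSurge: 25%          # max pods allowed above the desired count during an update (number or percentage)
      maxUnavailable: 25%    # max pods that may be unavailable during an update (number or percentage)
    type: RollingUpdate      # update type: rolling update
  template:                  # pod template
    metadata:                # pod template metadata
      labels:                # pod template labels
        app: nginx-deploy    # must be matched by spec.selector
    spec:                    # pod spec
      containers:            # containers in the pod
      - image: nginx:1.20    # image
        imagePullPolicy: IfNotPresent  # image pull policy
        name: nginx          # container name
      restartPolicy: Always  # pod restart policy
      terminationGracePeriodSeconds: 30  # seconds allowed for graceful shutdown before the containers are killed
```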
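The comment on `app: nginx-deploy` in the template matters: the API server rejects a Deployment whose `spec.selector` does not select the labels in `spec.template.metadata.labels`, and in `apps/v1` the selector cannot be changed after creation. A minimal Python sketch of that first check, assuming only `matchLabels` is used (no `matchExpressions`); the function name is hypothetical:

```python
from typing import Dict


def selector_selects_template(match_labels: Dict[str, str], template_labels: Dict[str, str]) -> bool:
    """True if every matchLabels entry appears, with the same value, in the pod template labels."""
    if not match_labels:
        # An empty selector is not accepted for an apps/v1 Deployment.
        return False
    return all(template_labels.get(key) == value for key, value in match_labels.items())


if __name__ == "__main__":
    selector = {"app": "nginx-deploy"}
    print(selector_selects_template(selector, {"app": "nginx-deploy"}))            # matching labels
    print(selector_selects_template(selector, {"app": "nginx-deploy", "v": "1"}))  # extra template labels are fine
    print(selector_selects_template(selector, {"app": "nginx"}))                   # mismatch would be rejected
```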

2.2 Rolling updates

A rolling update is triggered only when a field inside the Deployment's pod template (`spec.template`) changes.
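The reason is that the Deployment controller identifies each ReplicaSet by a hash of the pod template: the `pod-template-hash` label, which is also the suffix of the ReplicaSet name (e.g. `nginx-deploy-86b7d8c46d`). The Python sketch below models that idea. It uses SHA-256 over sorted JSON purely for illustration, which is not the hash Kubernetes computes, and `template_hash`/`needs_rollout` are hypothetical names.

```python
import copy
import hashlib
import json
from typing import Any, Dict


def template_hash(template: Dict[str, Any]) -> str:
    """Stable short hash of a pod template (illustrative, not Kubernetes' algorithm)."""
    canonical = json.dumps(template, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode()).hexdigest()[:10]


def needs_rollout(old: Dict[str, Any], new: Dict[str, Any]) -> bool:
    """Only a change under spec.template yields a different hash, i.e. a new ReplicaSet."""
    return template_hash(old["spec"]["template"]) != template_hash(new["spec"]["template"])


deploy = {
    "metadata": {"name": "nginx-deploy", "labels": {"app": "nginx-deploy"}},
    "spec": {
        "replicas": 1,
        "template": {
            "metadata": {"labels": {"app": "nginx-deploy"}},
            "spec": {"containers": [{"name": "nginx", "image": "nginx:1.20"}]},
        },
    },
}

relabeled = copy.deepcopy(deploy)
relabeled["metadata"]["labels"]["new-version"] = "test"  # like 2.2.1: Deployment label only

scaled = copy.deepcopy(deploy)
scaled["spec"]["replicas"] = 3  # like 2.2.2: replica count only

new_image = copy.deepcopy(deploy)
new_image["spec"]["template"]["spec"]["containers"][0]["image"] = "nginx:1.21"  # template change

if __name__ == "__main__":
    for name, candidate in [("relabeled", relabeled), ("scaled", scaled), ("new_image", new_image)]:
        print(name, needs_rollout(deploy, candidate))
```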

2.2.1 Changing other Deployment fields: are the pods updated?

```shell
[root@k8s-master ~]# kubectl get deployments --show-labels
NAME           READY   UP-TO-DATE   AVAILABLE   AGE   LABELS
nginx-deploy   1/1     1            1           44m   app=nginx-deploy
[root@k8s-master ~]# kubectl edit deployments nginx-deploy
deployment.apps/nginx-deploy edited
```

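The output below shows that this edit, which added the label `new-version=test` to the Deployment's own metadata, did not update the pods: the new label appears only on the Deployment.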

```shell
[root@k8s-master ~]# kubectl get deployments --show-labels
NAME           READY   UP-TO-DATE   AVAILABLE   AGE   LABELS
nginx-deploy   1/1     1            1           49m   app=nginx-deploy,new-version=test

# The ReplicaSet and pod do not have this label
[root@k8s-master ~]# kubectl get deployments,rs,pod --show-labels
NAME                           READY   UP-TO-DATE   AVAILABLE   AGE   LABELS
deployment.apps/nginx-deploy   1/1     1            1           51m   app=nginx-deploy,new-version=test

NAME                                      DESIRED   CURRENT   READY   AGE   LABELS
replicaset.apps/nginx-deploy-86b7d8c46d   1         1         1       51m   app=nginx-deploy,pod-template-hash=86b7d8c46d

NAME                                READY   STATUS    RESTARTS   AGE   LABELS
pod/nginx-deploy-86b7d8c46d-78rj9   1/1     Running   0          51m   app=nginx-deploy,pod-template-hash=86b7d8c46d
```

2.2.2 Changing the Deployment's replica count

```shell
[root@k8s-master ~]# kubectl get deploy nginx-deploy --show-labels
NAME           READY   UP-TO-DATE   AVAILABLE   AGE   LABELS
nginx-deploy   3/3     3            3           55m   app=nginx-deploy,new-version=test
[root@k8s-master ~]# kubectl describe deployments nginx-deploy
Name:                   nginx-deploy
Namespace:              default
CreationTimestamp:      Fri, 23 Feb 2024 18:59:04 +0800
Labels:                 app=nginx-deploy
                        new-version=test
Annotations:            deployment.kubernetes.io/revision: 1
Selector:               app=nginx-deploy
Replicas:               3 desired | 3 updated | 3 total | 3 available | 0 unavailable
StrategyType:           RollingUpdate
MinReadySeconds:        0
RollingUpdateStrategy:  25% max unavailable, 25% max surge
Pod Template:
  Labels:  app=nginx-deploy
  Containers:
   nginx:
    Image:        nginx:1.20
    Port:         <none>
    Host Port:    <none>
    Environment:  <none>
    Mounts:       <none>
  Volumes:        <none>
Conditions:
  Type           Status  Reason
  ----           ------  ------
  Progressing    True    NewReplicaSetAvailable
  Available      True    MinimumReplicasAvailable
OldReplicaSets:  <none>
NewReplicaSet:   nginx-deploy-86b7d8c46d (3/3 replicas created)
Events:
  Type    Reason             Age   From                   Message
  ----    ------             ----  ----                   -------
  Normal  ScalingReplicaSet  55m   deployment-controller  Scaled up replica set nginx-deploy-86b7d8c46d to 1
  Normal  ScalingReplicaSet  27s   deployment-controller  Scaled up replica set nginx-deploy-86b7d8c46d to 3 from 1
```

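After the replica count is raised to 3, the Deployment reports 3/3 ready, there is still a single ReplicaSet (now with 3 replicas), and there are 3 pods, all created from the same pod template (same `pod-template-hash`).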

```shell
[root@k8s-master ~]# kubectl get deployments,rs,po --show-labels
NAME                           READY   UP-TO-DATE   AVAILABLE   AGE   LABELS
deployment.apps/nginx-deploy   3/3     3            3           57m   app=nginx-deploy,new-version=test

NAME                                      DESIRED   CURRENT   READY   AGE   LABELS
replicaset.apps/nginx-deploy-86b7d8c46d   3         3         3       57m   app=nginx-deploy,pod-template-hash=86b7d8c46d

NAME                                READY   STATUS    RESTARTS   AGE   LABELS
pod/nginx-deploy-86b7d8c46d-6cf95   1/1     Running   0          93s   app=nginx-deploy,pod-template-hash=86b7d8c46d
pod/nginx-deploy-86b7d8c46d-78rj9   1/1     Running   0          57m   app=nginx-deploy,pod-template-hash=86b7d8c46d
pod/nginx-deploy-86b7d8c46d-vjncw   1/1     Running   0          93s   app=nginx-deploy,pod-template-hash=86b7d8c46d
```

2.2.3 Editing a template field with edit: what changes?

Watching the Deployment in real time shows how it changes during the update:

```shell
[root@k8s-master ~]# kubectl get deployments.apps nginx-deploy -w
NAME           READY   UP-TO-DATE   AVAILABLE   AGE
nginx-deploy   3/3     3            3           61m
nginx-deploy   3/3     3            3           61m
nginx-deploy   3/3     3            3           61m
nginx-deploy   3/3     0            3           61m
nginx-deploy   3/3     1            3           61m
nginx-deploy   4/3     1            4           62m
nginx-deploy   3/3     1            3           62m
nginx-deploy   3/3     2            3           62m
nginx-deploy   4/3     2            4           62m
nginx-deploy   3/3     2            3           62m
nginx-deploy   3/3     3            3           62m
nginx-deploy   4/3     3            4           62m
nginx-deploy   3/3     3            3           62m

# This command shows that the rollout succeeded
[root@k8s-master ~]# kubectl rollout status deployment nginx-deploy
deployment "nginx-deploy" successfully rolled out
```
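The watch output follows from the strategy settings: with 3 replicas and `25%` for both values, the surge rounds up to 1 pod and the allowed unavailability rounds down to 0 pods. So the controller may run at most 4 pods and must keep at least 3 available, which is why READY briefly reads 4/3 and AVAILABLE never drops below 3. A Python sketch of that arithmetic, mirroring (as we understand it) the Deployment controller's rounding, including its rule that if both values resolve to 0, maxUnavailable is treated as 1; the function names are hypothetical:

```python
from typing import Tuple, Union

IntOrPercent = Union[int, str]  # e.g. 1 or "25%"


def scaled_value(value: IntOrPercent, desired: int, round_up: bool) -> int:
    """Convert an absolute number or a percentage of `desired` into a pod count."""
    if isinstance(value, int):
        return value
    product = int(value.rstrip("%")) * desired
    # Integer ceil/floor of product / 100 avoids floating-point surprises.
    return -(-product // 100) if round_up else product // 100


def resolve_fenceposts(max_surge: IntOrPercent, max_unavailable: IntOrPercent, desired: int) -> Tuple[int, int]:
    """Return (surge, unavailable): maxSurge rounds up, maxUnavailable rounds down."""
    surge = scaled_value(max_surge, desired, round_up=True)
    unavailable = scaled_value(max_unavailable, desired, round_up=False)
    if surge == 0 and unavailable == 0:
        # With both at 0 no pod could ever be replaced, so allow one unavailable pod.
        unavailable = 1
    return surge, unavailable


def rollout_bounds(max_surge: IntOrPercent, max_unavailable: IntOrPercent, desired: int) -> Tuple[int, int]:
    """Return (maximum total pods, minimum available pods) during a rolling update."""
    surge, unavailable = resolve_fenceposts(max_surge, max_unavailable, desired)
    return desired + surge, desired - unavailable


if __name__ == "__main__":
    for replicas in (1, 3, 4, 10):
        print(replicas, resolve_fenceposts("25%", "25%", replicas), rollout_bounds("25%", "25%", replicas))
```

With the 3 replicas used here, `rollout_bounds("25%", "25%", 3)` gives a ceiling of 4 pods and a floor of 3 available pods, matching the 4/3 rows above.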

2.2.4 Changing the image again with set image and observing the update

```shell
[root@k8s-master ~]# kubectl set image deployment/nginx-deploy nginx=nginx:1.20
deployment.apps/nginx-deploy image updated
[root@k8s-master ~]# kubectl get deployments.apps nginx-deploy -w
NAME           READY   UP-TO-DATE   AVAILABLE   AGE
nginx-deploy   3/3     3            3           72m
nginx-deploy   3/3     3            3           72m
nginx-deploy   3/3     3            3           72m
nginx-deploy   3/3     0            3           72m
nginx-deploy   3/3     1            3           72m
nginx-deploy   4/3     1            4           72m
nginx-deploy   3/3     1            3           72m
nginx-deploy   3/3     2            3           72m
nginx-deploy   4/3     2            4           72m
nginx-deploy   3/3     2            3           72m
nginx-deploy   3/3     3            3           72m
nginx-deploy   4/3     3            4           72m
nginx-deploy   3/3     3            3           72m
```

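The ReplicaSets and pods below show that this update moved back onto the original ReplicaSet, nginx-deploy-86b7d8c46d: the pod template is again identical to the original one, so the Deployment reuses that ReplicaSet and scales it back up instead of creating a new one.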

```shell
[root@k8s-master ~]# kubectl get rs
NAME                      DESIRED   CURRENT   READY   AGE
nginx-deploy-6bf65c4445   0         0         0       17m
nginx-deploy-86b7d8c46d   3         3         3       79m
[root@k8s-master ~]# kubectl get pod
NAME                            READY   STATUS    RESTARTS   AGE
nginx-deploy-86b7d8c46d-4s98c   1/1     Running   0          7m17s
nginx-deploy-86b7d8c46d-7s9gz   1/1     Running   0          7m19s
nginx-deploy-86b7d8c46d-w8ljk   1/1     Running   0          7m15s
```

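2.3 Rollback

Sometimes you may want to roll back a Deployment, for example when it is unstable, such as when it keeps crash looping.

By default, Kubernetes keeps the Deployment's rollout history so that you can roll back at any time. The number of old revisions kept is set by `spec.revisionHistoryLimit`, which defaults to 10.

2.3.1 Setting an invalid image with set image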

```shell
[root@k8s-master ~]# kubectl set image deployment/nginx-deploy nginx=nginx:1.200
deployment.apps/nginx-deploy image updated

# The new pod (image nginx:1.200 cannot be pulled) is stuck in ImagePullBackOff.
# Because it never becomes ready, the rollout does not progress and the 3 old pods keep running.
[root@k8s-master ~]# kubectl get pod -w
NAME                            READY   STATUS             RESTARTS   AGE
nginx-deploy-85bc5c8fdb-h4rmn   0/1     ImagePullBackOff   0          83s
nginx-deploy-86b7d8c46d-4s98c   1/1     Running            0          25m
nginx-deploy-86b7d8c46d-7s9gz   1/1     Running            0          25m
nginx-deploy-86b7d8c46d-w8ljk   1/1     Running            0          25m
```

Run `kubectl describe pod nginx-deploy-85bc5c8fdb-h4rmn` to see the details of the new pod.

2.3.2 Viewing the Deployment with edit

2.3.3 Viewing the revision history

```shell
# --revision=0 (the default) lists all revisions
[root@k8s-master ~]# kubectl rollout history deployment/nginx-deploy --revision=0
deployment.apps/nginx-deploy
REVISION  CHANGE-CAUSE
2         <none>
3         <none>
4         <none>
```

2.3.4 Viewing the details of a revision

```shell
[root@k8s-master ~]# kubectl rollout history deployment/nginx-deploy --revision=4
deployment.apps/nginx-deploy with revision #4
Pod Template:
  Labels:       app=nginx-deploy
                pod-template-hash=85bc5c8fdb
  Containers:
   nginx:
    Image:        nginx:1.200
    Port:         <none>
    Host Port:    <none>
    Environment:  <none>
    Mounts:       <none>
  Volumes:        <none>

[root@k8s-master ~]# kubectl rollout history deployment/nginx-deploy --revision=3
deployment.apps/nginx-deploy with revision #3
Pod Template:
  Labels:       app=nginx-deploy
                pod-template-hash=86b7d8c46d
  Containers:
   nginx:
    Image:        nginx:1.20
    Port:         <none>
    Host Port:    <none>
    Environment:  <none>
    Mounts:       <none>
  Volumes:        <none>

[root@k8s-master ~]# kubectl rollout history deployment/nginx-deploy --revision=2
deployment.apps/nginx-deploy with revision #2
Pod Template:
  Labels:       app=nginx-deploy
                pod-template-hash=6bf65c4445
  Containers:
   nginx:
    Image:        nginx:1.21
    Port:         <none>
    Host Port:    <none>
    Environment:  <none>
    Mounts:       <none>
  Volumes:        <none>
```

2.3.5 Rolling back to revision 3

```shell
[root@k8s-master ~]# kubectl rollout undo deployment/nginx-deploy --to-revision=3
deployment.apps/nginx-deploy rolled back
[root@k8s-master ~]# kubectl rollout status deployments.apps nginx-deploy
deployment "nginx-deploy" successfully rolled out
```

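The Deployment now runs the revision 3 template again. Note that the rollback is recorded as a new revision, 5 (see the `deployment.kubernetes.io/revision` annotation), and the ReplicaSet nginx-deploy-86b7d8c46d is in use again: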

```shell
[root@k8s-master ~]# kubectl describe deployments nginx-deploy
Name:                   nginx-deploy
Namespace:              default
CreationTimestamp:      Fri, 23 Feb 2024 18:59:04 +0800
Labels:                 app=nginx-deploy
                        new-version=test
Annotations:            deployment.kubernetes.io/revision: 5
Selector:               app=nginx-deploy
Replicas:               3 desired | 3 updated | 3 total | 3 available | 0 unavailable
StrategyType:           RollingUpdate
MinReadySeconds:        0
RollingUpdateStrategy:  25% max unavailable, 25% max surge
Pod Template:
  Labels:  app=nginx-deploy
  Containers:
   nginx:
    Image:        nginx:1.20
    Port:         <none>
    Host Port:    <none>
    Environment:  <none>
    Mounts:       <none>
  Volumes:        <none>
Conditions:
  Type           Status  Reason
  ----           ------  ------
  Available      True    MinimumReplicasAvailable
  Progressing    True    NewReplicaSetAvailable
OldReplicaSets:  <none>
NewReplicaSet:   nginx-deploy-86b7d8c46d (3/3 replicas created)
Events:
  Type    Reason             Age                From                   Message
  ----    ------             ----               ----                   -------
  Normal  ScalingReplicaSet  57m                deployment-controller  Scaled up replica set nginx-deploy-86b7d8c46d to 3 from 1
  Normal  ScalingReplicaSet  51m                deployment-controller  Scaled up replica set nginx-deploy-6bf65c4445 to 1
  Normal  ScalingReplicaSet  50m                deployment-controller  Scaled down replica set nginx-deploy-86b7d8c46d to 2 from 3
  Normal  ScalingReplicaSet  50m                deployment-controller  Scaled up replica set nginx-deploy-6bf65c4445 to 2 from 1
  Normal  ScalingReplicaSet  49m                deployment-controller  Scaled down replica set nginx-deploy-86b7d8c46d to 1 from 2
  Normal  ScalingReplicaSet  49m                deployment-controller  Scaled up replica set nginx-deploy-6bf65c4445 to 3 from 2
  Normal  ScalingReplicaSet  49m                deployment-controller  Scaled down replica set nginx-deploy-86b7d8c46d to 0 from 1
  Normal  ScalingReplicaSet  40m                deployment-controller  Scaled up replica set nginx-deploy-86b7d8c46d to 1 from 0
  Normal  ScalingReplicaSet  40m                deployment-controller  Scaled down replica set nginx-deploy-6bf65c4445 to 2 from 3
  Normal  ScalingReplicaSet  40m (x4 over 40m)  deployment-controller  (combined from similar events): Scaled down replica set nginx-deploy-6bf65c4445 to 0 from 1
  Normal  ScalingReplicaSet  16m                deployment-controller  Scaled up replica set nginx-deploy-85bc5c8fdb to 1
  Normal  ScalingReplicaSet  116s               deployment-controller  Scaled down replica set nginx-deploy-85bc5c8fdb to 0 from 1
```

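2.3.6 Why can we roll back to revision 3?

Because old revisions are kept: `spec.revisionHistoryLimit` sets how many old revisions (old ReplicaSets) the Deployment retains. If `revisionHistoryLimit` is set to 0, the Deployment can no longer be rolled back.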
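The revision numbers in this section can be reproduced with a toy model. Each distinct pod template corresponds to one ReplicaSet; re-applying a template that already exists (as `set image ... nginx:1.20` in 2.2.4 and `rollout undo --to-revision=3` in 2.3.5 do) reuses that ReplicaSet and gives it the next revision number, so its old number disappears from the history; and old revisions beyond `revisionHistoryLimit` are pruned. This Python sketch is a simplification (for example, it ignores that only ReplicaSets already scaled to 0 are cleaned up), the class is hypothetical, and templates are reduced to plain strings.

```python
from typing import Dict, List


class RevisionHistory:
    """Toy model of Deployment revision numbering (one ReplicaSet per distinct template)."""

    def __init__(self, revision_history_limit: int = 10) -> None:
        self.limit = revision_history_limit   # models spec.revisionHistoryLimit
        self.templates: Dict[int, str] = {}   # revision number -> pod template

    @property
    def current(self) -> int:
        return max(self.templates, default=0)

    def apply(self, template: str) -> int:
        """Roll out `template`, reusing (and renumbering) an identical older revision."""
        if self.templates and self.templates[self.current] == template:
            return self.current  # unchanged template: no new revision
        new_revision = self.current + 1
        for revision in [r for r, t in self.templates.items() if t == template]:
            del self.templates[revision]  # the old ReplicaSet becomes the newest revision
        self.templates[new_revision] = template
        old = sorted(r for r in self.templates if r != new_revision)
        for revision in old[: max(0, len(old) - self.limit)]:
            del self.templates[revision]  # prune the oldest revisions beyond the limit
        return new_revision

    def undo(self, to_revision: int) -> int:
        """Like `kubectl rollout undo --to-revision=N`: re-apply revision N's template."""
        if to_revision not in self.templates:
            raise KeyError(f"revision {to_revision} not found")
        return self.apply(self.templates[to_revision])

    def history(self) -> List[int]:
        return sorted(self.templates)


if __name__ == "__main__":
    h = RevisionHistory()
    h.apply("nginx:1.20")    # revision 1: initial Deployment
    h.apply("nginx:1.21")    # revision 2: image shown for revision 2 in 2.3.4
    h.apply("nginx:1.20")    # revision 3: back on the first ReplicaSet (2.2.4)
    h.apply("nginx:1.200")   # revision 4: invalid image (2.3.1)
    print(h.history())
    h.undo(3)                # revision 5: rollback (2.3.5)
    print(h.history())
```

Replaying the steps of this article yields the same revision lists that `kubectl rollout history` printed: 2, 3, 4 before the rollback and 2, 4, 5 after it.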

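2.4 Scaling up and down

- Scaling command: `kubectl scale --replicas=6 deployment nginx-deploy`

- Scaling down works the same way; just set the target count with `--replicas`. Scaling only changes `spec.replicas`, not the pod template, so it does not trigger a rolling update or create a new revision.

2.4.1 Scaling up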

```shell
# Currently there are 3 pods; scale up to 6
[root@k8s-master ~]# kubectl scale --replicas=6 deployment nginx-deploy
deployment.apps/nginx-deploy scaled
[root@k8s-master ~]# kubectl get deployments nginx-deploy
NAME           READY   UP-TO-DATE   AVAILABLE   AGE
nginx-deploy   6/6     6            6           5h43m
[root@k8s-master ~]# kubectl get rs
NAME                      DESIRED   CURRENT   READY   AGE
nginx-deploy-6bf65c4445   0         0         0       4h42m
nginx-deploy-85bc5c8fdb   0         0         0       4h7m
nginx-deploy-86b7d8c46d   6         6         6       5h43m
[root@k8s-master ~]# kubectl get pod
NAME                            READY   STATUS    RESTARTS   AGE
nginx-deploy-86b7d8c46d-4s98c   1/1     Running   0          4h31m
nginx-deploy-86b7d8c46d-7s9gz   1/1     Running   0          4h31m
nginx-deploy-86b7d8c46d-n2m6j   1/1     Running   0          33s
nginx-deploy-86b7d8c46d-w8ljk   1/1     Running   0          4h31m
nginx-deploy-86b7d8c46d-wgdnv   1/1     Running   0          33s
nginx-deploy-86b7d8c46d-z86rx   1/1     Running   0          33s
```

2.4.2 Scaling down

```shell
# Scale down from 6 pods to 4
[root@k8s-master ~]# kubectl scale --replicas=4 deployment nginx-deploy
deployment.apps/nginx-deploy scaled
[root@k8s-master ~]# kubectl get deployments nginx-deploy
NAME           READY   UP-TO-DATE   AVAILABLE   AGE
nginx-deploy   4/4     4            4           5h45m
[root@k8s-master ~]# kubectl get rs
NAME                      DESIRED   CURRENT   READY   AGE
nginx-deploy-6bf65c4445   0         0         0       4h44m
nginx-deploy-85bc5c8fdb   0         0         0       4h9m
nginx-deploy-86b7d8c46d   4         4         4       5h45m
[root@k8s-master ~]# kubectl get pod -o wide
NAME                            READY   STATUS    RESTARTS   AGE     IP          NODE          NOMINATED NODE   READINESS GATES
nginx-deploy-86b7d8c46d-4s98c   1/1     Running   0          4h33m   10.2.2.12   k8s-node-01   <none>           <none>
nginx-deploy-86b7d8c46d-7s9gz   1/1     Running   0          4h33m   10.2.1.28   k8s-node-02   <none>           <none>
nginx-deploy-86b7d8c46d-w8ljk   1/1     Running   0          4h33m   10.2.1.29   k8s-node-02   <none>           <none>
nginx-deploy-86b7d8c46d-z86rx   1/1     Running   0          2m33s   10.2.2.14   k8s-node-01   <none>           <none>
```

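2.5 Pausing and resuming

Every change to the pod template triggers a Deployment rollout. If you modify the template several times in a row, each change causes its own update, even though only the final state matters. In that case you can pause the Deployment's rollout, make all the changes, and then resume it.

2.5.1 Adding CPU and memory requests to the template (cpu 100m, memory 128Mi, as revision 6 in 2.5.4 shows)

2.5.2 Pausing Deployment updates

Run `kubectl rollout pause deployment <name>` to pause; no rolling update happens until you resume the Deployment.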
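Conceptually, while a Deployment is paused the controller ignores pod-template changes; on resume it rolls out whatever the template looks like at that moment, so several edits cost only one rollout. A hypothetical Python sketch of that behaviour (it does not model scaling, which still takes effect while a Deployment is paused):

```python
from typing import Dict, List

Template = Dict[str, str]


class PausableDeployment:
    """Toy model: template edits are rolled out only while the Deployment is not paused."""

    def __init__(self, template: Template) -> None:
        self.template = dict(template)                     # spec.template, changed by kubectl edit
        self.rollouts: List[Template] = [dict(template)]   # one entry per rolled-out revision
        self.paused = False

    def edit(self, **changes: str) -> None:
        self.template.update(changes)
        self._sync()

    def pause(self) -> None:
        self.paused = True   # like kubectl rollout pause

    def resume(self) -> None:
        self.paused = False  # like kubectl rollout resume
        self._sync()

    def _sync(self) -> None:
        # Start a new rollout only when not paused and the template differs from the last one.
        if not self.paused and self.template != self.rollouts[-1]:
            self.rollouts.append(dict(self.template))


if __name__ == "__main__":
    d = PausableDeployment({"image": "nginx:1.20"})
    d.edit(requests="cpu=100m,memory=128Mi")   # rolled out immediately (like 2.5.1)
    d.pause()
    d.edit(limits="cpu=500m")                  # held while paused
    d.edit(limits="cpu=500m,memory=512Mi")     # still held
    d.resume()                                 # one rollout with the final template
    print(len(d.rollouts), d.rollouts[-1])
```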

```shell
# Pause Deployment updates
[root@k8s-master ~]# kubectl rollout pause deployment nginx-deploy
deployment.apps/nginx-deploy paused
```

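2.5.3 Updating the template again to add CPU and memory limits

After this edit, neither the ReplicaSets nor the pods change, because the Deployment's rollout is paused. (The ReplicaSet nginx-deploy-6dc7697cfb, created about 8 minutes earlier, comes from the requests change in 2.5.1.)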

```shell
[root@k8s-master ~]# kubectl edit deploy nginx-deploy
deployment.apps/nginx-deploy edited
[root@k8s-master ~]# kubectl get rs
NAME                      DESIRED   CURRENT   READY   AGE
nginx-deploy-6bf65c4445   0         0         0       5h7m
nginx-deploy-6dc7697cfb   3         3         3       8m33s
nginx-deploy-85bc5c8fdb   0         0         0       4h32m
nginx-deploy-86b7d8c46d   0         0         0       6h8m
[root@k8s-master ~]# kubectl get pod
NAME                            READY   STATUS    RESTARTS   AGE
nginx-deploy-6dc7697cfb-6p9mj   1/1     Running   0          8m38s
nginx-deploy-6dc7697cfb-lc472   1/1     Running   0          8m37s
nginx-deploy-6dc7697cfb-w295s   1/1     Running   0          8m35s
```

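2.5.4 Viewing the rollout history

The history below does not yet contain the limits added while paused: the latest revision, 6, has only the requests from 2.5.1, which confirms that the paused Deployment has not rolled out the new change.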

```shell
[root@k8s-master ~]# kubectl rollout history deployment nginx-deploy --revision=0
deployment.apps/nginx-deploy
REVISION  CHANGE-CAUSE
2         <none>
4         <none>
5         <none>
6         <none>

[root@k8s-master ~]# kubectl rollout history deployment nginx-deploy --revision=6
deployment.apps/nginx-deploy with revision #6
Pod Template:
  Labels:       app=nginx-deploy
                pod-template-hash=6dc7697cfb
  Containers:
   nginx:
    Image:        nginx:1.20
    Port:         <none>
    Host Port:    <none>
    Requests:
      cpu:        100m
      memory:     128Mi
    Environment:  <none>
    Mounts:       <none>
  Volumes:        <none>
```

2.5.5 Resuming Deployment updates

Resume the rollout:

```shell
[root@k8s-master ~]# kubectl rollout resume deployment nginx-deploy
deployment.apps/nginx-deploy resumed
```

The ReplicaSets have now changed: a new ReplicaSet, nginx-deploy-57bf686b9d, runs all 3 replicas.

```shell
[root@k8s-master ~]# kubectl get rs
NAME                      DESIRED   CURRENT   READY   AGE
nginx-deploy-57bf686b9d   3         3         3       12s
nginx-deploy-6bf65c4445   0         0         0       5h15m
nginx-deploy-6dc7697cfb   0         0         0       17m
nginx-deploy-85bc5c8fdb   0         0         0       4h41m
nginx-deploy-86b7d8c46d   0         0         0       6h17m
```

The history previously listed 4 revisions; now there is one more:

```shell
[root@k8s-master ~]# kubectl rollout history deployment nginx-deploy --revision=0
deployment.apps/nginx-deploy
REVISION  CHANGE-CAUSE
2         <none>
4         <none>
5         <none>
6         <none>
7         <none>
```

The details of the new revision show that the CPU and memory limits added while paused are now in effect:

```shell
[root@k8s-master ~]# kubectl rollout history deployment nginx-deploy --revision=7
deployment.apps/nginx-deploy with revision #7
Pod Template:
  Labels:       app=nginx-deploy
                pod-template-hash=57bf686b9d
  Containers:
   nginx:
    Image:        nginx:1.20
    Port:         <none>
    Host Port:    <none>
    Limits:
      cpu:        500m
      memory:     512Mi
    Requests:
      cpu:        100m
      memory:     128Mi
    Environment:  <none>
    Mounts:       <none>
  Volumes:        <none>
```

2.6 Configuration file (YAML for deploying the stateless nginx service)

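```shell
[root@k8s-master ~]# cat nginx-deploy.yaml
apiVersion: apps/v1          # Deployment API version
kind: Deployment             # resource type: Deployment
metadata:                    # metadata
  labels:                    # labels
    app: nginx-deploy        # the label app=nginx-deploy, written as key: value
  name: nginx-deploy         # name of the Deployment
  namespace: default         # namespace it lives in
spec:
  replicas: 1                # desired number of replicas
  revisionHistoryLimit: 10   # number of old revisions (ReplicaSets) kept for rollback
  selector:                  # label selector for the pods (and ReplicaSets) this Deployment manages
    matchLabels:             # match by labels
      app: nginx-deploy      # label to match
  strategy:                  # update strategy
    rollingUpdate:           # rolling update settings
      maxSurge: 25%          # max pods allowed above the desired count during an update (number or percentage)
      maxUnavailable: 25%    # max pods that may be unavailable during an update (number or percentage)
    type: RollingUpdate      # update type: rolling update
  template:                  # pod template
    metadata:                # pod template metadata
      labels:                # pod template labels
        app: nginx-deploy    # must be matched by spec.selector
    spec:                    # pod spec
      containers:            # containers in the pod
      - image: nginx:1.20    # image
        imagePullPolicy: IfNotPresent  # image pull policy
        name: nginx          # container name
      restartPolicy: Always  # pod restart policy
      terminationGracePeriodSeconds: 30  # seconds allowed for graceful shutdown before the containers are killed
```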
