k8s Cluster Setup (Binary Deployment)


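Overview

This guide builds the cluster by deploying each Kubernetes component from its binary. It also works for machines that are not on the same LAN, such as cloud servers reachable only over the public network. The cluster has a single master.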

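1. Environment planning

1.1. Machine preparation

| IP | Spec | Hostname | Type |
|---|---|---|---|
| 1.15.124.83 | 2 vCPU, 2 GB RAM, 50 GB disk, 1 Mbps | k8-master | cloud server |
| 1.15.129.142 | 2 vCPU, 2 GB RAM, 50 GB disk, 1 Mbps | k8-node1 | cloud server |
| 1.15.170.180 | 2 vCPU, 2 GB RAM, 50 GB disk, 1 Mbps | k8-node2 | cloud server |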

1.1.1. Set the hostnames

```shell
# on 1.15.124.83
hostnamectl set-hostname --static k8-master
# on 1.15.129.142
hostnamectl set-hostname --static k8-node1
# on 1.15.170.180
hostnamectl set-hostname --static k8-node2
```

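1.1.2. /etc/hosts and passwordless SSH

```shell
# run on every node
cat >> /etc/hosts << EOF
1.15.124.83 k8-master etcd-1
1.15.129.142 k8-node1 etcd-2
1.15.170.180 k8-node2 etcd-3
EOF

# passwordless root SSH between the nodes (the scp commands later rely on it)
ssh-keygen
ssh-copy-id -i /root/.ssh/id_rsa.pub root@k8-master
ssh-copy-id -i /root/.ssh/id_rsa.pub root@k8-node1
ssh-copy-id -i /root/.ssh/id_rsa.pub root@k8-node2

# vim /root/.ssh/known_hosts   (remove stale host keys if ssh complains)
```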

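1.1.3. Configure bridge netfilter, disable the firewall, SELinux and swap

```shell
cat >> /etc/sysctl.conf << EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF
# the net.bridge.* keys only exist once br_netfilter is loaded
modprobe br_netfilter
sysctl -p

systemctl stop firewalld && systemctl disable firewalld
systemctl status firewalld

# temporary, check with getenforce; set SELINUX=disabled in /etc/selinux/config to persist
setenforce 0
# temporary; comment out the swap entry in /etc/fstab to persist
swapoff -a
```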
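`swapoff -a` and `setenforce 0` only last until the next reboot. kubelet also refuses to start while swap is on, because its default is `--fail-swap-on=true`. The pre-flight check below is a hedged sketch; the helper name `swap_is_off` is hypothetical and not part of any tool. It reads `/proc/swaps`, which always has one header line followed by one line per active swap area:

```shell
#!/usr/bin/env bash
# Hypothetical helper: exit 0 when no swap area is active.
# /proc/swaps has a header line; every further non-empty line is an active swap area.
swap_is_off() {
  local swaps_file="${1:-/proc/swaps}"   # overridable path, handy for testing
  local active
  # grep -c prints 0 (and exits 1) when nothing matches, hence "|| true"
  active=$(tail -n +2 "$swaps_file" | grep -c . || true)
  [ "$active" -eq 0 ]
}
```

Example: `swap_is_off || echo "swap is still active"`.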

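1.1.4. Environment variables

Run this on every node. The heredocs in later steps expand these variables when the files are written.

```shell
mkdir -p /mnt/disk/kube && cd /mnt/disk/kube
cat > env.conf << EOF
etcd_1=1.15.124.83
etcd_2=1.15.129.142
etcd_3=1.15.170.180
k8_master=1.15.124.83
k8_node1=1.15.129.142
k8_node2=1.15.170.180
# cluster Service virtual IP range (network address, used with a /24 suffix)
service_cidr=10.0.0.0
# first IP of the Service range, used by the "kubernetes" Service
service_cidr_gw=10.0.0.1
# Pod network (network address, used with a /16 suffix)
cluster_cidr=10.244.0.0
EOF
echo 'source /mnt/disk/kube/env.conf' >> /etc/profile && source /etc/profile
```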
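Every later heredoc expands these variables when it writes its file. An unset variable therefore becomes an empty string without any warning, producing values such as `https://:2379`. The minimal check below assumes a hypothetical `check_env_vars` helper built on bash indirect expansion:

```shell
#!/usr/bin/env bash
# Hypothetical helper: report which of the given variable names are unset or empty.
# Returns 0 when all are set, 1 otherwise.
check_env_vars() {
  local name missing=0
  for name in "$@"; do
    # ${!name:-} is indirect expansion: the value of the variable whose name is in $name
    if [ -z "${!name:-}" ]; then
      echo "missing: $name" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

Example: `source /mnt/disk/kube/env.conf && check_env_vars etcd_1 etcd_2 etcd_3 k8_master k8_node1 k8_node2 service_cidr service_cidr_gw cluster_cidr`.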

2. Component installation

Master components: etcd, kube-apiserver, kube-controller-manager, kube-scheduler, kubectl

Node components: docker, kubelet, kube-proxy, flannel

2.1. etcd

2.1.1. Certificates

Certificates are issued for etcd and for the apiserver. Both sets are signed by the same self-signed CA.

- Install the cfssl certificate tools

```shell
# official release
wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64
wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64
wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64
# or the mirror instead
# wget http://124.223.59.209:7070/chfs/shared/linux/devops/k8s/rpm/cfssl/cfssl_linux-amd64
# wget http://124.223.59.209:7070/chfs/shared/linux/devops/k8s/rpm/cfssl/cfssljson_linux-amd64
# wget http://124.223.59.209:7070/chfs/shared/linux/devops/k8s/rpm/cfssl/cfssl-certinfo_linux-amd64
chmod +x cfssl_linux-amd64 cfssljson_linux-amd64 cfssl-certinfo_linux-amd64
mv cfssl_linux-amd64 /usr/local/bin/cfssl
mv cfssljson_linux-amd64 /usr/local/bin/cfssljson
mv cfssl-certinfo_linux-amd64 /usr/bin/cfssl-certinfo
```

- Create the working directories

```shell
mkdir -pv /mnt/disk/kube/ssl/{etcd,k8s}
cd /mnt/disk/kube/ssl/etcd
```

- Self-signed CA (87600h is a 10-year validity)

```shell
cat > ca-config.json << EOF
{
  "signing": {
    "default": { "expiry": "87600h" },
    "profiles": {
      "kubernetes": {
        "expiry": "87600h",
        "usages": ["signing", "key encipherment", "server auth", "client auth"]
      }
    }
  }
}
EOF
cat > ca-csr.json << EOF
{
  "CN": "kubernetes",
  "key": { "algo": "rsa", "size": 2048 },
  "names": [
    { "C": "CN", "L": "Beijing", "ST": "Beijing", "O": "k8s", "OU": "System" }
  ]
}
EOF
```

- Generate the CA certificate (produces ca.pem and ca-key.pem)

```shell
cfssl gencert -initca ca-csr.json | cfssljson -bare ca -
```

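- Use the self-signed CA to issue etcd's HTTPS certificate

- Create the certificate signing request. `hosts` must list the IP and name of every etcd member; the `${etcd_*}` values come from env.conf.

```shell
cat > server-csr.json << EOF
{
  "CN": "etcd",
  "hosts": [
    "${etcd_1}", "${etcd_2}", "${etcd_3}",
    "etcd-1", "etcd-2", "etcd-3",
    "127.0.0.1"
  ],
  "key": { "algo": "rsa", "size": 2048 },
  "names": [
    { "C": "CN", "ST": "Beijing", "L": "Beijing", "O": "k8s", "OU": "System" }
  ]
}
EOF
```

- Generate the HTTPS certificate (server.pem and server-key.pem)

```shell
cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes server-csr.json | cfssljson -bare server
```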
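The sketch below confirms that the issued certificate covers every member. It uses `openssl x509 -text`; the helper names `san_list` and `cert_covers` are hypothetical. `cfssl-certinfo -cert server.pem` shows the same SAN list in JSON.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print each Subject Alternative Name entry of a PEM certificate,
# one per line, without the "DNS:" / "IP Address:" prefixes.
san_list() {
  openssl x509 -in "$1" -noout -text \
    | grep -A1 'X509v3 Subject Alternative Name' | tail -n 1 \
    | tr ',' '\n' \
    | sed -e 's/^ *//' -e 's/^DNS://' -e 's/^IP Address://'
}

# Hypothetical helper: succeed if host/IP $2 is one of the SANs of certificate $1.
# -x (whole line) and -F (fixed string) keep 10.0.0.1 from matching 10.0.0.10.
cert_covers() {
  san_list "$1" | grep -qxF -- "$2"
}
```

Example: `cert_covers server.pem "$etcd_2" && echo covered`.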

apiserver certificates

- Self-signed certificate authority (CA)

- Enter the working directory

```shell
cd /mnt/disk/kube/ssl/k8s/
```

- Reuse etcd's self-signed CA

```shell
cp /mnt/disk/kube/ssl/etcd/ca*.pem ./
cp /mnt/disk/kube/ssl/etcd/ca-config.json ./
```

- Create the certificate signing requests. One is a client certificate for kube-proxy. The other is the apiserver's server certificate, whose `hosts` must cover every name and IP that clients use to reach the apiserver.

```shell
cat > kube-proxy-csr.json << EOF
{
  "CN": "system:kube-proxy",
  "hosts": [],
  "key": { "algo": "rsa", "size": 2048 },
  "names": [
    { "C": "CN", "L": "BeiJing", "ST": "BeiJing", "O": "k8s", "OU": "System" }
  ]
}
EOF
cat > server-csr.json << EOF
{
  "CN": "kubernetes",
  "hosts": [
    "127.0.0.1",
    "kubernetes",
    "kubernetes.default",
    "kubernetes.default.svc",
    "kubernetes.default.svc.cluster",
    "kubernetes.default.svc.cluster.local",
    "${service_cidr}", "${service_cidr_gw}", "${cluster_cidr}",
    "${k8_master}", "${k8_node1}", "${k8_node2}",
    "k8-master", "k8-node1", "k8-node2"
  ],
  "key": { "algo": "rsa", "size": 2048 },
  "names": [
    { "C": "CN", "L": "BeiJing", "ST": "BeiJing", "O": "k8s", "OU": "System" }
  ]
}
EOF
```
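The apiserver assigns the first address of `--service-cluster-ip-range` to the `kubernetes` Service in the `default` namespace. In-cluster clients reach the apiserver through that IP, which is why `${service_cidr_gw}` (10.0.0.1) appears in `hosts`. The arithmetic can be sketched with a hypothetical `first_ip` helper that uses bash integer math and handles IPv4 only:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the first usable IPv4 address of a CIDR,
# i.e. (network address) + 1. Example: 10.0.0.0/24 -> 10.0.0.1
first_ip() {
  local ip=${1%/*} prefix=${1#*/}
  local a b c d
  IFS=. read -r a b c d <<< "$ip"
  local n=$(( (a << 24) | (b << 16) | (c << 8) | d ))
  # bash arithmetic is 64-bit, so mask back to 32 bits after shifting
  local mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  n=$(( (n & mask) + 1 ))
  echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}
```

Example: `first_ip "${service_cidr}/24"` prints the value that `service_cidr_gw` should hold.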

- Generate the certificates

```shell
cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes server-csr.json | cfssljson -bare server
cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy
```

2.1.2. etcd

- Download the etcd binaries

```shell
cd /mnt/disk/kube
# official release
wget https://github.com/etcd-io/etcd/releases/download/v3.4.9/etcd-v3.4.9-linux-amd64.tar.gz
# or the mirror instead
# wget http://124.223.59.209:7070/chfs/shared/linux/devops/k8s/rpm/etcd-v3.4.9-linux-amd64.tar.gz
mkdir -pv /etc/etcd/{bin,cfg,ssl}
tar -zxvf etcd-v3.4.9-linux-amd64.tar.gz
mv etcd-v3.4.9-linux-amd64/{etcd,etcdctl} /etc/etcd/bin/
cp /mnt/disk/kube/ssl/etcd/*.pem /etc/etcd/ssl/
cd /etc/etcd/ && ll bin && ll ssl && ll cfg
```

- Create the etcd configuration file (on etcd-1)

```shell
cat > /etc/etcd/cfg/etcd.conf << EOF
#[Member]
ETCD_NAME="etcd-1"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://0.0.0.0:2380"
ETCD_LISTEN_CLIENT_URLS="https://0.0.0.0:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://${etcd_1}:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://${etcd_1}:2379"
ETCD_INITIAL_CLUSTER="etcd-1=https://${etcd_1}:2380,etcd-2=https://${etcd_2}:2380,etcd-3=https://${etcd_3}:2380"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
#[Security]
ETCD_CERT_FILE="/etc/etcd/ssl/server.pem"
ETCD_KEY_FILE="/etc/etcd/ssl/server-key.pem"
ETCD_TRUSTED_CA_FILE="/etc/etcd/ssl/ca.pem"
ETCD_CLIENT_CERT_AUTH="true"
ETCD_PEER_CERT_FILE="/etc/etcd/ssl/server.pem"
ETCD_PEER_KEY_FILE="/etc/etcd/ssl/server-key.pem"
ETCD_PEER_TRUSTED_CA_FILE="/etc/etcd/ssl/ca.pem"
ETCD_PEER_CLIENT_CERT_AUTH="true"
EOF
```

- ETCD_NAME: node name.
- ETCD_DATA_DIR: data directory.
- ETCD_LISTEN_PEER_URLS: listen address for peer (cluster) traffic.
- ETCD_LISTEN_CLIENT_URLS: listen address for client traffic.
- ETCD_INITIAL_ADVERTISE_PEER_URLS: peer address advertised to the cluster.
- ETCD_ADVERTISE_CLIENT_URLS: client address advertised to clients.
- ETCD_INITIAL_CLUSTER: addresses of all cluster members.
- ETCD_INITIAL_CLUSTER_TOKEN: cluster token.
- ETCD_INITIAL_CLUSTER_STATE: state when joining; `new` for a new cluster, `existing` to join an existing one.
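The `ETCD_INITIAL_CLUSTER` value is just `name=peer-URL` pairs joined by commas. The hypothetical helper below builds it from `name=ip` arguments and assumes the same `https://<ip>:2380` peer URLs as the file above:

```shell
#!/usr/bin/env bash
# Hypothetical helper: build an ETCD_INITIAL_CLUSTER value from name=ip pairs.
#   build_initial_cluster etcd-1=1.1.1.1 etcd-2=2.2.2.2
build_initial_cluster() {
  local pair out=""
  for pair in "$@"; do
    # ${out:+,} adds a comma separator only after the first entry
    out+="${out:+,}${pair%%=*}=https://${pair#*=}:2380"
  done
  echo "$out"
}
```

Example: `build_initial_cluster etcd-1=$etcd_1 etcd-2=$etcd_2 etcd-3=$etcd_3`.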

- Create the etcd systemd service

```shell
cat > /usr/lib/systemd/system/etcd.service << EOF
[Unit]
Description=Etcd Server
After=network.target
After=network-online.target
Wants=network-online.target

[Service]
Type=notify
WorkingDirectory=/data/etcd/
EnvironmentFile=/etc/etcd/cfg/etcd.conf
# --enable-v2: flannel v0.7.1 (section 2.3.5) talks to etcd through the v2 API
ExecStart=/etc/etcd/bin/etcd --enable-v2
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF
```

- Distribute to the other nodes

The `sed` commands only change the quoted `ETCD_NAME` and the quoted advertise URLs. `ETCD_INITIAL_CLUSTER` keeps all three members.

```shell
# run on etcd-2
scp -r etcd-1:/etc/etcd/ /etc/
scp etcd-1:/usr/lib/systemd/system/etcd.service /usr/lib/systemd/system/
sed -i 's/"etcd-1"/"etcd-2"/g' /etc/etcd/cfg/etcd.conf
sed -i "s/\"https:\/\/${etcd_1}:2380\"/\"https:\/\/${etcd_2}:2380\"/g" /etc/etcd/cfg/etcd.conf
sed -i "s/\"https:\/\/${etcd_1}:2379\"/\"https:\/\/${etcd_2}:2379\"/g" /etc/etcd/cfg/etcd.conf

# run on etcd-3
scp -r etcd-1:/etc/etcd/ /etc/
scp etcd-1:/usr/lib/systemd/system/etcd.service /usr/lib/systemd/system/
sed -i 's/"etcd-1"/"etcd-3"/g' /etc/etcd/cfg/etcd.conf
sed -i "s/\"https:\/\/${etcd_1}:2380\"/\"https:\/\/${etcd_3}:2380\"/g" /etc/etcd/cfg/etcd.conf
sed -i "s/\"https:\/\/${etcd_1}:2379\"/\"https:\/\/${etcd_3}:2379\"/g" /etc/etcd/cfg/etcd.conf
```
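The three `sed` edits above can be folded into one function. This sketch uses a hypothetical name and assumes GNU `sed -i` and the exact quoting that the etcd.conf heredoc produces. It also escapes the dots of the old IP so they match literally. In the original commands an unescaped `.` matches any character, which is harmless in practice.

```shell
#!/usr/bin/env bash
# Hypothetical helper: localize a copied etcd.conf for another member.
#   localize_etcd_conf <conf-file> <new-name> <new-ip> <old-ip>
localize_etcd_conf() {
  local conf=$1 name=$2 new_ip=$3 old_ip=$4
  local old_re
  old_re=$(printf '%s' "$old_ip" | sed 's/\./\\./g')   # 1.2.3.4 -> 1\.2\.3\.4
  # Only the quoted advertise URLs change; in ETCD_INITIAL_CLUSTER the URLs
  # are not directly surrounded by quotes, so they are left untouched.
  sed -i \
    -e "s/^ETCD_NAME=\".*\"/ETCD_NAME=\"${name}\"/" \
    -e "s#\"https://${old_re}:2380\"#\"https://${new_ip}:2380\"#" \
    -e "s#\"https://${old_re}:2379\"#\"https://${new_ip}:2379\"#" \
    "$conf"
}
```

Example on etcd-2: `localize_etcd_conf /etc/etcd/cfg/etcd.conf etcd-2 "$etcd_2" "$etcd_1"`.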

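- Start etcd on all three nodes. The first member may appear to hang until enough peers are up to form a quorum, so start them at roughly the same time.

```shell
mkdir -p /data/etcd/
systemctl daemon-reload
systemctl restart etcd
systemctl enable etcd
systemctl status etcd.service
```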
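Instead of re-running `systemctl status` by hand, you can poll until the cluster is healthy with a generic retry loop. A minimal sketch with a hypothetical `retry` helper:

```shell
#!/usr/bin/env bash
# Hypothetical helper: run a command up to <tries> times, sleeping <delay> seconds
# between attempts; returns 0 on the first success, 1 if every attempt fails.
#   retry <tries> <delay> <command...>
retry() {
  local tries=$1 delay=$2 i
  shift 2
  for (( i = 1; i <= tries; i++ )); do
    "$@" && return 0
    (( i < tries )) && sleep "$delay"
  done
  return 1
}
```

Example: `retry 30 2 /etc/etcd/bin/etcdctl --cert=/etc/etcd/ssl/server.pem --key=/etc/etcd/ssl/server-key.pem --cacert=/etc/etcd/ssl/ca.pem --endpoints=https://etcd-1:2379,https://etcd-2:2379,https://etcd-3:2379 endpoint health`.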

Troubleshooting

- Reset (wipes this member's data)

```shell
systemctl stop etcd.service
rm -rf /var/lib/etcd/default.etcd
systemctl daemon-reload
systemctl start etcd
systemctl enable etcd
systemctl status etcd.service
# then restart the components that depend on etcd
systemctl restart kube-apiserver kube-controller-manager kube-scheduler   # master
systemctl restart kubelet kube-proxy                                      # nodes
```

- Add or replace a member, back up and restore

```shell
# health and member list
/etc/etcd/bin/etcdctl --cert=/etc/etcd/ssl/server.pem --key=/etc/etcd/ssl/server-key.pem --cacert=/etc/etcd/ssl/ca.pem \
  --endpoints=https://etcd-1:2379,https://etcd-2:2379,https://etcd-3:2379 \
  endpoint health
/etc/etcd/bin/etcdctl --cert=/etc/etcd/ssl/server.pem --key=/etc/etcd/ssl/server-key.pem --cacert=/etc/etcd/ssl/ca.pem \
  --endpoints=https://etcd-1:2379,https://etcd-2:2379,https://etcd-3:2379 \
  member list -w table
# remove the broken member by the ID shown in "member list"
/etc/etcd/bin/etcdctl --cert=/etc/etcd/ssl/server.pem --key=/etc/etcd/ssl/server-key.pem --cacert=/etc/etcd/ssl/ca.pem \
  --endpoints=https://etcd-1:2379,https://etcd-2:2379,https://etcd-3:2379 \
  member remove <etcd-2-member-ID>
# re-add it; on etcd-2, clear the data dir and set ETCD_INITIAL_CLUSTER_STATE="existing" before starting
/etc/etcd/bin/etcdctl --cert=/etc/etcd/ssl/server.pem --key=/etc/etcd/ssl/server-key.pem --cacert=/etc/etcd/ssl/ca.pem \
  --endpoints=https://etcd-1:2379,https://etcd-2:2379,https://etcd-3:2379 \
  member add etcd-2 --peer-urls=https://${etcd_2}:2380
# snapshot backup
/etc/etcd/bin/etcdctl --cert=/etc/etcd/ssl/server.pem --key=/etc/etcd/ssl/server-key.pem --cacert=/etc/etcd/ssl/ca.pem \
  --endpoints=https://etcd-1:2379 snapshot save /etc/etcd/cfg/backup
# restore is a local operation (no --endpoints); the target --data-dir must not exist yet.
# For a multi-member cluster also pass --name, --initial-cluster, --initial-cluster-token
# and --initial-advertise-peer-urls on each member.
/etc/etcd/bin/etcdctl snapshot restore /etc/etcd/cfg/backup --data-dir /var/lib/etcd/default.etcd
```


- etcd operations

```shell
/etc/etcd/bin/etcdctl --cert=/etc/etcd/ssl/server.pem --key=/etc/etcd/ssl/server-key.pem --cacert=/etc/etcd/ssl/ca.pem \
  --endpoints=https://etcd-1:2379,https://etcd-2:2379,https://etcd-3:2379 \
  endpoint health
/etc/etcd/bin/etcdctl --cert=/etc/etcd/ssl/server.pem --key=/etc/etcd/ssl/server-key.pem --cacert=/etc/etcd/ssl/ca.pem \
  --endpoints=https://etcd-1:2379,https://etcd-2:2379,https://etcd-3:2379 \
  get / --prefix --keys-only
/etc/etcd/bin/etcdctl --cert=/etc/etcd/ssl/server.pem --key=/etc/etcd/ssl/server-key.pem --cacert=/etc/etcd/ssl/ca.pem \
  --endpoints=https://etcd-1:2379,https://etcd-2:2379,https://etcd-3:2379 \
  member list -w table
# without the TLS flags this fails, because the cluster requires client certificates
/etc/etcd/bin/etcdctl --endpoints=https://etcd-1:2379,https://etcd-2:2379,https://etcd-3:2379 \
  member list -w table
```
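The TLS flags are identical in every command above. This convenience sketch wraps them once. The function name and the `ETCDCTL_BIN`, `ETCD_SSL_DIR` and `ETCD_ENDPOINTS` variables are assumptions, not etcd features. etcdctl v3 itself can also read these settings from the `ETCDCTL_CACERT`, `ETCDCTL_CERT`, `ETCDCTL_KEY` and `ETCDCTL_ENDPOINTS` environment variables.

```shell
#!/usr/bin/env bash
# Hypothetical settings; override them in the environment if your paths differ.
ETCDCTL_BIN=${ETCDCTL_BIN:-/etc/etcd/bin/etcdctl}
ETCD_SSL_DIR=${ETCD_SSL_DIR:-/etc/etcd/ssl}
ETCD_ENDPOINTS=${ETCD_ENDPOINTS:-https://etcd-1:2379,https://etcd-2:2379,https://etcd-3:2379}

# Hypothetical wrapper: etcdctl with this guide's TLS flags prepended.
etcdctl_tls() {
  "$ETCDCTL_BIN" \
    --cert="$ETCD_SSL_DIR/server.pem" \
    --key="$ETCD_SSL_DIR/server-key.pem" \
    --cacert="$ETCD_SSL_DIR/ca.pem" \
    --endpoints="$ETCD_ENDPOINTS" \
    "$@"
}
```

Example: `etcdctl_tls member list -w table` or `etcdctl_tls get / --prefix --keys-only`.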

2.2. Deploying the k8s master components

Components: etcd, kube-apiserver, kube-controller-manager, kube-scheduler, kubectl

2.2.1. Download the binary package

- Download the binary package, then copy the executables and certificates.

```shell
mkdir -pv /etc/kubernetes/{bin,cfg,ssl,logs}
mkdir -p /mnt/disk/kube/ && cd /mnt/disk/kube/
wget https://storage.googleapis.com/kubernetes-release/release/v1.9.0/kubernetes-server-linux-amd64.tar.gz
tar -zxvf kubernetes-server-linux-amd64.tar.gz
cd kubernetes/server/bin
cp kube-apiserver kube-scheduler kube-controller-manager /etc/kubernetes/bin
cp kubectl /usr/bin/
cp /mnt/disk/kube/ssl/k8s/*.pem /etc/kubernetes/ssl/
```

2.2.2. apiserver

- Create the configuration file

```shell
cat > /etc/kubernetes/cfg/kube-apiserver.conf << EOF
KUBE_APISERVER_OPTS="--logtostderr=false \\
--v=2 \\
--log-dir=/etc/kubernetes/logs \\
--etcd-servers=https://${etcd_1}:2379,https://${etcd_2}:2379,https://${etcd_3}:2379 \\
--bind-address=0.0.0.0 \\
--secure-port=6443 \\
--advertise-address=${k8_master} \\
--allow-privileged=true \\
--service-cluster-ip-range=${service_cidr}/24 \\
--admission-control=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction \\
--authorization-mode=RBAC,Node \\
--enable-bootstrap-token-auth=true \\
--token-auth-file=/etc/kubernetes/cfg/token.csv \\
--service-node-port-range=1-65535 \\
--anonymous-auth=false \\
--kubelet-client-certificate=/etc/kubernetes/ssl/server.pem \\
--kubelet-client-key=/etc/kubernetes/ssl/server-key.pem \\
--tls-cert-file=/etc/kubernetes/ssl/server.pem \\
--tls-private-key-file=/etc/kubernetes/ssl/server-key.pem \\
--client-ca-file=/etc/kubernetes/ssl/ca.pem \\
--service-account-key-file=/etc/kubernetes/ssl/ca-key.pem \\
--etcd-cafile=/etc/etcd/ssl/ca.pem \\
--etcd-certfile=/etc/etcd/ssl/server.pem \\
--etcd-keyfile=/etc/etcd/ssl/server-key.pem \\
--audit-log-maxage=30 \\
--audit-log-maxbackup=3 \\
--audit-log-maxsize=100 \\
--audit-log-path=/etc/kubernetes/logs/k8s-audit.log"
EOF
```

- --logtostderr: `false` writes logs to files under --log-dir instead of stderr.
- --v: log verbosity.
- --log-dir: log directory.
- --etcd-servers: etcd cluster endpoints.
- --bind-address: listen address.
- --secure-port: HTTPS port.
- --advertise-address: address advertised to the rest of the cluster.
- --allow-privileged: allow privileged containers.
- --service-cluster-ip-range: Service virtual IP range.
- --admission-control: admission control plugins (later releases replace it with --enable-admission-plugins).
- --authorization-mode: authorization modes; enables RBAC and Node authorization.
- --enable-bootstrap-token-auth: enable bootstrap token authentication for TLS bootstrapping.
- --token-auth-file: bootstrap token file.
- --service-node-port-range: port range allocated to NodePort Services.
- --kubelet-client-xxx: client certificate the apiserver uses to connect to kubelets.
- --tls-xxx-file: apiserver HTTPS certificate.
- --etcd-xxxfile: certificates for connecting to the etcd cluster.
- --audit-log-xxx: audit log settings.
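The configuration and unit files in this guide are written through an unquoted heredoc (`<< EOF`), so bash processes them before writing. It expands `${etcd_1}`. It turns `\\` into one literal backslash, which stays in the file as systemd's line continuation. It turns `\$` into a literal `$`, as in the unit files' `\$KUBE_APISERVER_OPTS`. A single backslash right before the newline instead joins the two lines. A small demonstration, with the function name chosen only for illustration:

```shell
#!/usr/bin/env bash
# Demonstrates how an unquoted heredoc treats \\ , \$ and a trailing \ .
demo_heredoc() {
  local etcd_1=1.15.124.83
  cat << EOF
A="--etcd-servers=https://${etcd_1}:2379 \\
  --v=2"
B=\$KUBE_APISERVER_OPTS
C=one \
two
EOF
}
demo_heredoc
```

It prints `A="--etcd-servers=https://1.15.124.83:2379 \` followed by `  --v=2"`, then `B=$KUBE_APISERVER_OPTS`, then `C=one two`.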

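- Enable TLS Bootstrapping

Once TLS authentication is enabled on the master's apiserver, the kubelet and kube-proxy on each node need a valid CA-signed certificate to talk to kube-apiserver. Issuing these client certificates by hand is a lot of work when there are many nodes, and it makes scaling the cluster harder. To simplify this, Kubernetes provides TLS Bootstrapping to issue client certificates automatically. kubelet authenticates to the apiserver as a low-privilege user and submits a certificate signing request. Once the request is approved, kube-controller-manager signs it with the CA configured by --cluster-signing-cert-file.

- Generate a token

```shell
head -c 16 /dev/urandom | od -An -t x | tr -d ' '
```

- Create the token file. The format is `token,user,uid,"group"`. Replace the token with the one you generated; the same value is used as BOOTSTRAP_TOKEN in 2.2.6.

```shell
cat > /etc/kubernetes/cfg/token.csv << EOF
6af18570857ee397452e2a4c2bc99830,kubelet-bootstrap,10001,"system:node-bootstrapper"
EOF
```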
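The token-generation pipeline and the token.csv line can be combined. This sketch uses hypothetical helper names. `od -An -t x` prints the 16 random bytes as four zero-padded 8-digit hex words, so stripping spaces and the newline leaves exactly 32 hex characters.

```shell
#!/usr/bin/env bash
# Hypothetical helper: 16 random bytes as 32 lowercase hex characters.
gen_bootstrap_token() {
  head -c 16 /dev/urandom | od -An -t x | tr -d ' \n'
}

# Hypothetical helper: format a token.csv line (token,user,uid,"group").
token_csv_line() {
  printf '%s,kubelet-bootstrap,10001,"system:node-bootstrapper"\n' "$1"
}
```

Example: `TOKEN=$(gen_bootstrap_token); token_csv_line "$TOKEN" > /etc/kubernetes/cfg/token.csv`. Reuse the same `$TOKEN` for BOOTSTRAP_TOKEN when generating bootstrap.kubeconfig.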

- Create the apiserver service

```shell
cat > /usr/lib/systemd/system/kube-apiserver.service << EOF
[Unit]
Description=Kubernetes API Server
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=/etc/kubernetes/cfg/kube-apiserver.conf
ExecStart=/etc/kubernetes/bin/kube-apiserver \$KUBE_APISERVER_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF
```

- Start the apiserver

```shell
systemctl daemon-reload
systemctl start kube-apiserver
systemctl enable kube-apiserver
systemctl status kube-apiserver
```

2.2.3. Deploying kube-controller-manager

- Create the configuration file

```shell
cat > /etc/kubernetes/cfg/kube-controller-manager.conf << EOF
KUBE_CONTROLLER_MANAGER_OPTS="--logtostderr=false \\
--v=2 \\
--log-dir=/etc/kubernetes/logs \\
--leader-elect=true \\
--master=127.0.0.1:8080 \\
--address=127.0.0.1 \\
--allocate-node-cidrs=true \\
--cluster-cidr=${cluster_cidr}/16 \\
--service-cluster-ip-range=${service_cidr}/24 \\
--cluster-signing-cert-file=/etc/kubernetes/ssl/ca.pem \\
--cluster-signing-key-file=/etc/kubernetes/ssl/ca-key.pem \\
--root-ca-file=/etc/kubernetes/ssl/ca.pem \\
--service-account-private-key-file=/etc/kubernetes/ssl/ca-key.pem \\
--experimental-cluster-signing-duration=87600h0m0s"
EOF
```

- --master: connect to the apiserver through the local insecure port 8080.
- --leader-elect: elect a leader automatically when several instances run.
- --cluster-signing-cert-file and --cluster-signing-key-file: the CA used to issue kubelet certificates automatically; it must be the same CA as the apiserver's.

- Create the kube-controller-manager service

```shell
cat > /usr/lib/systemd/system/kube-controller-manager.service << EOF
[Unit]
Description=Kubernetes Controller Manager
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=/etc/kubernetes/cfg/kube-controller-manager.conf
ExecStart=/etc/kubernetes/bin/kube-controller-manager \$KUBE_CONTROLLER_MANAGER_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF
```

- Start the service

```shell
systemctl daemon-reload
systemctl start kube-controller-manager
systemctl enable kube-controller-manager
systemctl status kube-controller-manager
```

2.2.4. Deploying kube-scheduler

- Create the configuration file. The original had `\\\` after `--leader-elect=true`, which makes the heredoc join that line with the next. It must be `\\`.

```shell
cat > /etc/kubernetes/cfg/kube-scheduler.conf << EOF
KUBE_SCHEDULER_OPTS="--logtostderr=false \\
--v=2 \\
--log-dir=/etc/kubernetes/logs \\
--leader-elect=true \\
--master=127.0.0.1:8080 \\
--address=127.0.0.1"
EOF
```

- --master: connect to the apiserver through the local insecure port 8080.
- --leader-elect: elect a leader automatically when several instances run (HA).

- Create the kube-scheduler service

```shell
cat > /usr/lib/systemd/system/kube-scheduler.service << EOF
[Unit]
Description=Kubernetes Scheduler
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=/etc/kubernetes/cfg/kube-scheduler.conf
ExecStart=/etc/kubernetes/bin/kube-scheduler \$KUBE_SCHEDULER_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF
```

- Start the service

```shell
systemctl daemon-reload
systemctl start kube-scheduler
systemctl enable kube-scheduler
systemctl status kube-scheduler
```

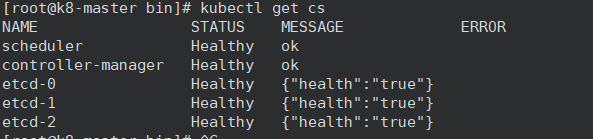

2.2.5. Checking the cluster status

- Once all components have started successfully, check the component status with kubectl:

```shell
kubectl get cs
```
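With three etcd members plus the scheduler and controller-manager, it is easy to miss one bad row. A hedged filter with a hypothetical name prints the components whose STATUS column (the second column of `kubectl get cs`) is not `Healthy`:

```shell
#!/usr/bin/env bash
# Hypothetical filter: read "kubectl get cs" output on stdin and print the
# NAME of every component whose STATUS is not "Healthy" (header line skipped).
unhealthy_components() {
  awk 'NR > 1 && $2 != "Healthy" { print $1 }'
}
```

Example: `kubectl get cs | unhealthy_components` prints nothing when everything is healthy.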

2.2.6. Generating bootstrap.kubeconfig and kube-proxy.kubeconfig on the master

- Create the script. Change the token to the one in /etc/kubernetes/cfg/token.csv!

```shell
cat > ~/configure.sh << EOF
#!/bin/bash
# create TLS Bootstrapping Token
#----------------
# create the kubelet bootstrapping kubeconfig
export PATH=$PATH:/etc/kubernetes/bin
export KUBE_APISERVER="https://${k8_master}:6443"
# change this token
export BOOTSTRAP_TOKEN="6af18570857ee397452e2a4c2bc99830"
# bind the bootstrap user to the node-bootstrapper role
kubectl create clusterrolebinding kubelet-bootstrap \
  --clusterrole=system:node-bootstrapper \
  --user=kubelet-bootstrap
# set the cluster parameters
kubectl config set-cluster kubernetes \
  --certificate-authority=/etc/kubernetes/ssl/ca.pem \
  --embed-certs=true \
  --server=\${KUBE_APISERVER} \
  --kubeconfig=bootstrap.kubeconfig
# set the client authentication parameters
kubectl config set-credentials kubelet-bootstrap \
  --token=\${BOOTSTRAP_TOKEN} \
  --kubeconfig=bootstrap.kubeconfig
# set the context
kubectl config set-context default \
  --cluster=kubernetes \
  --user=kubelet-bootstrap \
  --kubeconfig=bootstrap.kubeconfig
kubectl config use-context default --kubeconfig=bootstrap.kubeconfig
#-------------
# create the kube-proxy kubeconfig
# set the cluster parameters
kubectl config set-cluster kubernetes \
  --certificate-authority=/etc/kubernetes/ssl/ca.pem \
  --embed-certs=true \
  --server=\${KUBE_APISERVER} \
  --kubeconfig=kube-proxy.kubeconfig
kubectl config set-credentials kube-proxy \
  --client-certificate=/etc/kubernetes/ssl/kube-proxy.pem \
  --client-key=/etc/kubernetes/ssl/kube-proxy-key.pem \
  --embed-certs=true \
  --kubeconfig=kube-proxy.kubeconfig
kubectl config set-context default \
  --cluster=kubernetes \
  --user=kube-proxy \
  --kubeconfig=kube-proxy.kubeconfig
kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig
EOF
```

- Run the script, then copy bootstrap.kubeconfig and kube-proxy.kubeconfig to every node

```shell
# on the master
cd ~
chmod +x configure.sh
./configure.sh
# on each node
scp etcd-1:/root/{bootstrap.kubeconfig,kube-proxy.kubeconfig} /etc/kubernetes/cfg/
```
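`${k8_master}` is expanded when configure.sh is written. If env.conf was not sourced at that moment, the kubeconfigs point at `https://:6443`. A quick hedged check with a hypothetical helper prints the first `server:` entry of a kubeconfig. `kubectl config view --kubeconfig=<file> -o jsonpath='{.clusters[0].cluster.server}'` is the kubectl-native alternative.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the first "server:" URL found in a kubeconfig file.
kubeconfig_server() {
  awk '$1 == "server:" { print $2; exit }' "$1"
}
```

Example: `kubeconfig_server /etc/kubernetes/cfg/bootstrap.kubeconfig` should print `https://1.15.124.83:6443` in this setup.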

2.3. Nodes

Components: docker, kubelet, kube-proxy, flannel

2.3.1. Installing Docker

- Install with yum (docker 1.13.1)

```shell
yum install -y docker
systemctl start docker
systemctl enable docker
systemctl status docker
```

- Configure the registry mirror and the private Harbor registry

```shell
sudo tee /etc/docker/daemon.json <<-'EOF'
{
  "insecure-registries": ["124.223.59.209"],
  "exec-opts": ["native.cgroupdriver=cgroupfs"],
  "registry-mirrors": ["https://iybn9a8d.mirror.aliyuncs.com"]
}
EOF
# If docker then fails with:
#   unable to configure the Docker daemon with file /etc/docker/daemon.json:
#   the following directives are specified both as a flag and in the configuration file:
#   exec-opts: (from flag: [native.cgroupdriver=systemd], from file: ['native.cgroupdriver=cgroupfs'])
# remove "--exec-opt native.cgroupdriver=systemd" from ExecStart in docker.service
vim /usr/lib/systemd/system/docker.service
systemctl daemon-reload
systemctl restart docker
# copy the Harbor certificate for docker
mkdir -p /etc/docker/certs.d
scp -rp 1.15.170.180:/etc/docker/certs.d/124.223.59.209/ /etc/docker/certs.d/
docker login -u admin -p Harbor12345 124.223.59.209
```
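kubelet and Docker must use the same cgroup driver. kubelet in this release defaults to `--cgroup-driver=cgroupfs`, which is why daemon.json pins `cgroupfs`. The hedged sketch below uses a hypothetical helper that extracts the driver from `docker info` output for comparison:

```shell
#!/usr/bin/env bash
# Hypothetical filter: read "docker info" output on stdin and print the cgroup driver.
docker_cgroup_driver() {
  awk -F': ' '/Cgroup Driver/ { print $2; exit }'
}
```

Example: `docker info 2>/dev/null | docker_cgroup_driver` should print `cgroupfs` here.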

2.3.2. Download the binary package

- Download the binary package and copy the certificates.

```shell
mkdir -pv /etc/kubernetes/{bin,cfg,ssl,logs}
mkdir -p /mnt/disk/kube/ && cd /mnt/disk/kube/
wget https://storage.googleapis.com/kubernetes-release/release/v1.9.0/kubernetes-server-linux-amd64.tar.gz
tar -zxvf kubernetes-server-linux-amd64.tar.gz
cd kubernetes/server/bin
cp kubelet kube-proxy /etc/kubernetes/bin
# copy the k8s certificates and the bootstrap kubeconfigs from the master
scp -rp 'k8-master:/etc/kubernetes/ssl/*.pem' /etc/kubernetes/ssl/
scp 'k8-master:/root/*.kubeconfig' /etc/kubernetes/cfg/
```

2.3.3. Deploying kubelet

- Create the configuration file

```shell
# on k8-node1
cat > /etc/kubernetes/cfg/kubelet.conf << EOF
KUBELET_OPTS="--logtostderr=false \\
--v=2 \\
--log-dir=/etc/kubernetes/logs \\
--hostname-override=k8-node1 \\
--kubeconfig=/etc/kubernetes/cfg/kubelet.kubeconfig \\
--bootstrap-kubeconfig=/etc/kubernetes/cfg/bootstrap.kubeconfig \\
--cert-dir=/etc/kubernetes/ssl \\
--allow-privileged=true \\
--pod-infra-container-image=124.223.59.209/k8s/pause-amd64:3.0"
EOF

# on k8-node2
cat > /etc/kubernetes/cfg/kubelet.conf << EOF
KUBELET_OPTS="--logtostderr=false \\
--v=2 \\
--log-dir=/etc/kubernetes/logs \\
--hostname-override=k8-node2 \\
--kubeconfig=/etc/kubernetes/cfg/kubelet.kubeconfig \\
--bootstrap-kubeconfig=/etc/kubernetes/cfg/bootstrap.kubeconfig \\
--cert-dir=/etc/kubernetes/ssl \\
--allow-privileged=true \\
--pod-infra-container-image=124.223.59.209/k8s/pause-amd64:3.0"
EOF
```

- --hostname-override: node name shown in the cluster; must be unique.
- --network-plugin: enables a CNI network plugin (not set here; pods use Docker's bridge, which flannel configures).
- --kubeconfig: kubeconfig used to connect to the apiserver; kubelet writes it after bootstrapping.
- --cert-dir: directory where kubelet's issued certificates are stored.
- --pod-infra-container-image: image of the pause container that holds each Pod's network namespace.
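The two kubelet.conf variants differ only in `--hostname-override`. The sketch below renders the file from a node name, using a hypothetical `render_kubelet_conf` helper. It assumes the hostnames set in 1.1.1 (k8-node1, k8-node2) are the names you want in the cluster.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print kubelet.conf for the given node name.
# \\ in this unquoted heredoc writes a single backslash (systemd line continuation).
render_kubelet_conf() {
  local node_name=$1
  cat << EOF
KUBELET_OPTS="--logtostderr=false \\
--v=2 \\
--log-dir=/etc/kubernetes/logs \\
--hostname-override=${node_name} \\
--kubeconfig=/etc/kubernetes/cfg/kubelet.kubeconfig \\
--bootstrap-kubeconfig=/etc/kubernetes/cfg/bootstrap.kubeconfig \\
--cert-dir=/etc/kubernetes/ssl \\
--allow-privileged=true \\
--pod-infra-container-image=124.223.59.209/k8s/pause-amd64:3.0"
EOF
}
```

Example on each node: `render_kubelet_conf "$(hostname)" > /etc/kubernetes/cfg/kubelet.conf`.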

- Create the kubelet service

```shell
cat > /usr/lib/systemd/system/kubelet.service << EOF
[Unit]
Description=Kubernetes Kubelet
After=docker.service

[Service]
EnvironmentFile=/etc/kubernetes/cfg/kubelet.conf
ExecStart=/etc/kubernetes/bin/kubelet \$KUBELET_OPTS
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF
```

- Start the kubelet service

```shell
systemctl daemon-reload
systemctl restart kubelet
systemctl enable kubelet
systemctl status kubelet
```

- Approve the bootstrap certificate request on the master

```shell
kubectl get csr
# approve (or deny) the node's request
kubectl certificate approve node-csr-QHw17qwEvu2Iig3QDImTOkM2FC94b0Kn3Pp7cgdQj3M
kubectl get node
```
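With several nodes, the CSR names can be picked out of `kubectl get csr` instead of copied by hand. The hypothetical filter below prints the names whose last column (CONDITION) is `Pending`. Only approve requests you expect: this approves every pending one.

```shell
#!/usr/bin/env bash
# Hypothetical filter: read "kubectl get csr" output on stdin and print the NAME
# of every request whose CONDITION (last column) is "Pending".
pending_csrs() {
  awk 'NR > 1 && $NF == "Pending" { print $1 }'
}
```

Example: `kubectl get csr | pending_csrs | xargs -r kubectl certificate approve`.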

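What kubelet needs to connect to the apiserver:

- --bootstrap-kubeconfig (Bootstrap Token authentication): the token's RBAC permissions let kubelet request a certificate, which is then issued to it. This is what distributes certificates automatically.
- --kubeconfig must be specified; otherwise the node does not appear in `kubectl get node`.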

2.3.4. Deploying kube-proxy

- Create the configuration file

```shell
# on k8-node1
cat > /etc/kubernetes/cfg/kube-proxy.conf << EOF
KUBE_PROXY_OPTS="--logtostderr=false \\
--v=2 \\
--log-dir=/etc/kubernetes/logs \\
--kubeconfig=/etc/kubernetes/cfg/kube-proxy.kubeconfig \\
--hostname-override=k8-node1"
EOF

# on k8-node2
cat > /etc/kubernetes/cfg/kube-proxy.conf << EOF
KUBE_PROXY_OPTS="--logtostderr=false \\
--v=2 \\
--log-dir=/etc/kubernetes/logs \\
--kubeconfig=/etc/kubernetes/cfg/kube-proxy.kubeconfig \\
--hostname-override=k8-node2"
EOF
```

- Create the kube-proxy service

```shell
cat > /usr/lib/systemd/system/kube-proxy.service << EOF
[Unit]
Description=Kubernetes Proxy
After=network.target

[Service]
EnvironmentFile=/etc/kubernetes/cfg/kube-proxy.conf
ExecStart=/etc/kubernetes/bin/kube-proxy \$KUBE_PROXY_OPTS
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF
```

- Start the service

```shell
systemctl daemon-reload
systemctl start kube-proxy
systemctl enable kube-proxy
systemctl status kube-proxy
```

Troubleshooting

- `Failed to execute iptables-restore: exit status 1` (do not fix this with ~~yum remove -y iptables~~)

```shell
# Downgrade iptables to 1.4.21-24 (el7); do not uninstall iptables
cd /mnt/disk/kube/
wget http://124.223.59.209:7070/chfs/shared/linux/devops/k8s/rpm/iptables/iptables-1.4.21-24.el7.x86_64.rpm
rpm --force -ivh iptables-1.4.21-24.el7.x86_64.rpm
rpm -qa | grep iptables
# remove the versions that are no longer needed
rpm -e iptables-1.4.21-35.el7.x86_64
rpm -e iptables-devel-1.4.21-35.el7.x86_64
```
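The failure above occurred with iptables-1.4.21-35 and was fixed by going back to 1.4.21-24. The comparison below decides whether a node's installed version is newer than that. It is a hedged sketch with a hypothetical `version_lt` helper built on GNU `sort -V`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if version $1 sorts strictly before version $2 (GNU sort -V).
version_lt() {
  [ "$1" != "$2" ] && [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$1" ]
}
```

Example: `installed=$(rpm -q --qf '%{VERSION}-%{RELEASE}\n' iptables | head -n 1); version_lt 1.4.21-24.el7 "$installed" && echo "newer than 1.4.21-24"`.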


2.3.5. Deploying the flannel network

- Download the binary package

```shell
cd /mnt/disk/kube/
wget https://github.com/flannel-io/flannel/releases/download/v0.7.1/flannel-v0.7.1-linux-amd64.tar.gz
# or the mirror instead
# wget http://124.223.59.209:7070/chfs/shared/linux/devops/k8s/rpm/flannel-v0.7.1-linux-amd64.tar.gz
tar -zxvf flannel-v0.7.1-linux-amd64.tar.gz
mkdir -pv /etc/flannel/{bin,cfg}
mv flanneld mk-docker-opts.sh /etc/flannel/bin/
```

- Create the configuration file

```shell
# on k8-node1
cat > /etc/flannel/cfg/flannel.conf << EOF
#[flannel config]
FLANNELD_PUBLIC_IP="${k8_node1}"
FLANNELD_IFACE="eth0"
#[etcd]
FLANNELD_ETCD_ENDPOINTS="https://${etcd_1}:2379,https://${etcd_2}:2379,https://${etcd_3}:2379"
FLANNEL_ETCD_PREFIX="/flannel/network"
FLANNELD_ETCD_KEYFILE="/etc/etcd/ssl/server-key.pem"
FLANNELD_ETCD_CERTFILE="/etc/etcd/ssl/server.pem"
FLANNELD_ETCD_CAFILE="/etc/etcd/ssl/ca.pem"
FLANNELD_IP_MASQ=true
FLANNEL_OPTIONS=" --etcd-prefix /flannel/network "
EOF

# on k8-node2
cat > /etc/flannel/cfg/flannel.conf << EOF
#[flannel config]
FLANNELD_PUBLIC_IP="${k8_node2}"
FLANNELD_IFACE="eth0"
#[etcd]
FLANNELD_ETCD_ENDPOINTS="https://${etcd_1}:2379,https://${etcd_2}:2379,https://${etcd_3}:2379"
FLANNEL_ETCD_PREFIX="/flannel/network"
FLANNELD_ETCD_KEYFILE="/etc/etcd/ssl/server-key.pem"
FLANNELD_ETCD_CERTFILE="/etc/etcd/ssl/server.pem"
FLANNELD_ETCD_CAFILE="/etc/etcd/ssl/ca.pem"
FLANNELD_IP_MASQ=true
FLANNEL_OPTIONS=" --etcd-prefix /flannel/network "
EOF
```

- Create the flannel service

```shell
cat > /usr/lib/systemd/system/flanneld.service << EOF
[Unit]
Description=Flanneld overlay address etcd agent
After=network.target
After=network-online.target
Wants=network-online.target
After=etcd.service
Before=docker.service

[Service]
Type=notify
EnvironmentFile=/etc/flannel/cfg/flannel.conf
EnvironmentFile=-/etc/sysconfig/docker-network
ExecStart=/etc/flannel/bin/flanneld \$FLANNEL_OPTIONS
ExecStartPost=/etc/flannel/bin/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/docker
Restart=on-failure

[Install]
WantedBy=multi-user.target
WantedBy=docker.service
EOF
```

- Write the network configuration to etcd

```shell
### Note: FLANNEL_ETCD_PREFIX (without the "D") is not read by flanneld. The prefix actually
### comes from "--etcd-prefix /flannel/network" in FLANNEL_OPTIONS, so the network config
### must be written to /flannel/network/config (flanneld's default is /coreos.com/network/config).
### "Network" must match cluster_cidr (10.244.0.0/16). flannel v0.7.1 uses the etcd v2 API.
ETCDCTL_API=2 /etc/etcd/bin/etcdctl --cert-file=/etc/etcd/ssl/server.pem --key-file=/etc/etcd/ssl/server-key.pem --ca-file=/etc/etcd/ssl/ca.pem \
  --endpoints=https://etcd-1:2379,https://etcd-2:2379,https://etcd-3:2379 \
  mk /flannel/network/config '{"Network":"10.244.0.0/16","Backend":{"Type":"vxlan"}}'
ETCDCTL_API=2 /etc/etcd/bin/etcdctl --cert-file=/etc/etcd/ssl/server.pem --key-file=/etc/etcd/ssl/server-key.pem --ca-file=/etc/etcd/ssl/ca.pem \
  --endpoints=https://etcd-1:2379,https://etcd-2:2379,https://etcd-3:2379 \
  get /flannel/network/config
### ETCDCTL_API=2 commands: mk / get / rm
### ETCDCTL_API=3 commands: put / get / del
```
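flanneld reads its flags from environment variables named `FLANNELD_<FLAG>`; for example, `FLANNELD_ETCD_ENDPOINTS` maps to `-etcd-endpoints`. `FLANNEL_OPTIONS` is consumed by `ExecStart` in the unit file. flanneld silently ignores any other variable, such as the `FLANNEL_ETCD_PREFIX` above. The lint below is a hedged sketch with a hypothetical name that lists such variables:

```shell
#!/usr/bin/env bash
# Hypothetical lint: print variable names in a flannel env file that flanneld will
# not pick up, i.e. anything other than FLANNELD_* or FLANNEL_OPTIONS.
unread_flannel_vars() {
  grep -oE '^[A-Z_]+=' "$1" | tr -d '=' | grep -vE '^(FLANNELD_[A-Z_]+|FLANNEL_OPTIONS)$'
}
```

Example: `unread_flannel_vars /etc/flannel/cfg/flannel.conf` prints `FLANNEL_ETCD_PREFIX` for the file above.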

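- Start the service

```shell
systemctl daemon-reload
systemctl start flanneld
systemctl enable flanneld
systemctl status flanneld
# restart docker so it picks up the flannel subnet
systemctl daemon-reload
systemctl restart docker.service
systemctl status docker
```

Troubleshooting

- Reset the iptables rules

```shell
iptables -P INPUT ACCEPT
iptables -P FORWARD ACCEPT
iptables -F
iptables -L -n
```

- `The endpoint is probably not valid etcd cluster endpoint`

flanneld cannot fetch the network configuration ("client: response is invalid json. The endpoint is probably not valid etcd cluster endpoint"). Start etcd with `--enable-v2`:

```shell
# in /usr/lib/systemd/system/etcd.service
# ExecStart=/etc/etcd/bin/etcd --enable-v2
```

- Docker's subnet does not change

```shell
# flannel writes the docker options to /run/flannel/docker (see flanneld.service):
#   mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/docker
# docker must load this file to get $DOCKER_NETWORK_OPTIONS. Its default env file is
# /etc/sysconfig/docker-network; either remove that line or add this one below it:
vim /usr/lib/systemd/system/docker.service
#   EnvironmentFile=-/run/flannel/docker
# check that dockerd was started with the flannel options, e.g.
#   --bip=10.244.9.1/24 --ip-masq=false --mtu=1450
ps -ef | grep docker
```

- Pods cannot ping each other; the flannel interface may have picked up several IPs.

```shell
ip a    # check flannel.1
systemctl stop flanneld
ip link delete flannel.1
systemctl start flanneld
```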
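To check that Docker really uses the flannel subnet, compare `docker0`'s address (`ip -4 addr show docker0`) with the `--bip` that flannel wrote. If `docker0` still shows Docker's default `172.17.0.1/16`, the options were not picked up. The hypothetical helper below extracts that value; it assumes the `DOCKER_NETWORK_OPTIONS=" --bip=... "` line format shown above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the --bip value from flannel's docker options file.
flannel_bip() {
  grep -oE -- '--bip=[0-9./]+' "${1:-/run/flannel/docker}" | head -n 1 | cut -d= -f2
}
```

Example: `flannel_bip` prints something like `10.244.9.1/24`, which should equal `docker0`'s address.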


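3. Deploying applications

3.1. Deploying the dashboard

- Working directory

```shell
mkdir -p /mnt/disk/kube/yaml && cd /mnt/disk/kube/yaml
yum -y install lrzsz   # rz/sz for uploading and downloading files
```

- Prepare the YAML file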

```shell
cat > dashbord.yaml << EOF
# Copyright 2017 The Kubernetes Authors.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

apiVersion: v1
kind: Namespace
metadata:
  name: kubernetes-dashboard
---
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
---
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  ports:
    - port: 80
      targetPort: 9090
      nodePort: 9090
  selector:
    k8s-app: kubernetes-dashboard
  type: NodePort
---
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-certs
  namespace: kubernetes-dashboard
type: Opaque
---
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-csrf
  namespace: kubernetes-dashboard
type: Opaque
data:
  csrf: ""
---
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-key-holder
  namespace: kubernetes-dashboard
type: Opaque
---
kind: ConfigMap
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-settings
  namespace: kubernetes-dashboard
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
rules:
  # Allow Dashboard to get, update and delete Dashboard exclusive secrets.
  - apiGroups: [""]
    resources: ["secrets"]
    resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs", "kubernetes-dashboard-csrf"]
    verbs: ["get", "update", "delete"]
  # Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.
  - apiGroups: [""]
    resources: ["configmaps"]
    resourceNames: ["kubernetes-dashboard-settings"]
    verbs: ["get", "update"]
  # Allow Dashboard to get metrics.
  - apiGroups: [""]
    resources: ["services"]
    resourceNames: ["heapster", "dashboard-metrics-scraper"]
    verbs: ["proxy"]
  - apiGroups: [""]
    resources: ["services/proxy"]
    resourceNames: ["heapster", "http:heapster:", "https:heapster:", "dashboard-metrics-scraper", "http:dashboard-metrics-scraper"]
    verbs: ["get"]
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
rules:
  # Allow Metrics Scraper to get metrics from the Metrics server
  - apiGroups: ["metrics.k8s.io"]
    resources: ["pods", "nodes", "nodes/stats", "nodes/stats"]
    verbs: ["get", "list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard
---
kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
    spec:
      containers:
        - name: kubernetes-dashboard
          image: 124.223.59.209/k8s/dashboard:v2.0.0-beta4
          ports:
            - containerPort: 9090
              protocol: TCP
          args:
            - --namespace=kubernetes-dashboard
            # (the 2 lines above were added by sskcal)
            # Uncomment the following line to manually specify Kubernetes API server Host
            # If not specified, Dashboard will attempt to auto discover the API server and connect
            # to it. Uncomment only if the default does not work.
            # - --apiserver-host=http://1.15.124.83:8080
          volumeMounts:
            # Create on-disk volume to store exec logs
            - mountPath: /tmp
              name: tmp-volume
          livenessProbe:
            httpGet:
              path: /
              port: 9090
            initialDelaySeconds: 30
            timeoutSeconds: 30
          securityContext:
            allowPrivilegeEscalation: false
            readOnlyRootFilesystem: true
      volumes:
        - name: tmp-volume
          emptyDir: {}
      serviceAccountName: kubernetes-dashboard
      # Comment the following tolerations if Dashboard must not be deployed on master
      # tolerations:
      #   - key: node-role.kubernetes.io/master
      #     effect: NoSchedule
---
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: dashboard-metrics-scraper
  name: dashboard-metrics-scraper
  namespace: kubernetes-dashboard
spec:
  ports:
    - port: 8000
      targetPort: 8000
  selector:
    k8s-app: dashboard-metrics-scraper
---
kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: dashboard-metrics-scraper
  name: dashboard-metrics-scraper
  namespace: kubernetes-dashboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: dashboard-metrics-scraper
  template:
    metadata:
      labels:
        k8s-app: dashboard-metrics-scraper
    spec:
      containers:
        - name: dashboard-metrics-scraper
          image: 124.223.59.209/k8s/metrics-scraper:v1.0.1
          ports:
            - containerPort: 8000
              protocol: TCP
          livenessProbe:
            httpGet:
              scheme: HTTP
              path: /
              port: 8000
            initialDelaySeconds: 30
            timeoutSeconds: 30
          volumeMounts:
            - mountPath: /tmp
              name: tmp-volume
      serviceAccountName: kubernetes-dashboard
      # Comment the following tolerations if Dashboard must not be deployed on master
      # tolerations:
      #   - key: node-role.kubernetes.io/master
      #     effect: NoSchedule
      volumes:
        - name: tmp-volume
          emptyDir: {}
EOF
```
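The dashboard Service uses `nodePort: 9090`. The apiserver's default `--service-node-port-range` is 30000-32767, so this port is only accepted because the apiserver above sets `--service-node-port-range=1-65535`. The hedged sketch below uses a hypothetical helper to check a port against such a range string before applying a manifest:

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if port $1 lies within the inclusive range "$2" (e.g. "30000-32767").
port_in_range() {
  local port=$1 lo=${2%-*} hi=${2#*-}
  [ "$port" -ge "$lo" ] && [ "$port" -le "$hi" ]
}
```

Example: `port_in_range 9090 1-65535 && kubectl apply -f dashbord.yaml`. The dashboard should then be reachable at `http://<node-ip>:9090/`.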


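k8s demo clusters (no longer available)

Cluster built with this guide: http://106.13.215.188:9090/

k8s cluster without certificates: http://1.15.170.180:9090/

Jenkins for automated deployment: http://124.223.59.209:8080/jenkins/view/k8/ admin/admin

Private Harbor registry: https://124.223.59.209/harbor admin/Harbor12345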
