Kubernetes (K8s) Node Selection: nodeName, nodeSelector, nodeAffinity, podAffinity, Taints, and Tolerations

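Thanks to the following articles:

Container Orchestration System K8s: Pod Affinity - Linux-1874 - cnblogs (博客园)

Container Orchestration System K8s: Node Taints and Pod Tolerations - Linux-1874 - cnblogs (博客园)

Kubernetes K8S: Fixed-Node Scheduling with nodeName and nodeSelector Explained - 技术颜良 - cnblogs (博客园)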

Introduction:

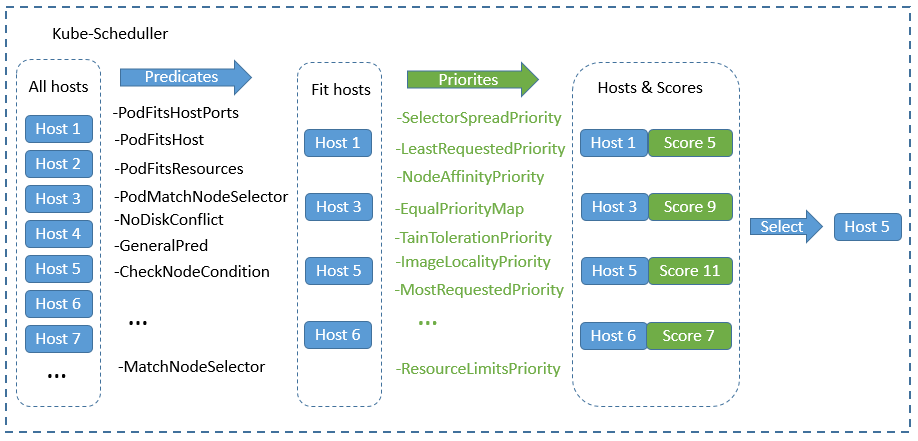

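kube-scheduler is one of the most important components in Kubernetes. It watches the apiserver for Pods whose nodeName field is empty; an empty field means the Pod has not been scheduled yet. For each such Pod, kube-scheduler picks the most suitable node in the cluster based on the attributes defined in the Pod spec, writes that node's name into the Pod's nodeName field, and saves the Pod back to the apiserver. The kubelet on the chosen node then learns from the apiserver that a Pod has been bound to it, reads the Pod definition, and calls the local container runtime (Docker in this environment) to start the Pod according to the manifest. It reports the Pod status back to the apiserver, which persists it in etcd. Throughout this process, kube-scheduler's job is to schedule the Pod and report the decision to the apiserver. So the question is: how does kube-scheduler decide which of the many nodes is the best fit for a given Pod?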

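The scheduler places Pods according to a scheduling algorithm; different algorithms use different criteria and can produce different results. When the scheduler finds an unscheduled Pod on the apiserver, it runs every node in the cluster through the predicate functions one by one and eliminates the nodes that cannot run the Pod. This is the filtering phase (Predicate). The nodes that survive enter the next phase, scoring (Priority): each priority function scores each remaining node, the scores are summed per node, and the node with the highest total wins. If several nodes tie for the highest score, the scheduler picks one of them at random as the node that will run the Pod; this is the selection phase (Select). In short, scheduling goes through three phases: filtering, which eliminates nodes that cannot run the Pod; scoring, which rates the remaining nodes with the priority functions; and selection, which picks one node at random from those sharing the top score. The overall process is roughly as shown in the figure.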

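Note: filtering works as a one-vote veto. If a node fails any single predicate function, it is eliminated immediately. The nodes that pass filtering enter the scoring phase, where each priority function scores every node and a total is computed per node. The scheduler then picks the node with the highest total as the scheduling result; if several nodes share the highest total, it picks one of them at random and reports the result to the apiserver.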

Factors that influence scheduling: nodeName, nodeSelector, node affinity, pod affinity, taints, and tolerations

1. nodeName

Host configuration plan

| Server name (hostname) | OS version | Specs | Internal IP | External IP (simulated) |
|---|---|---|---|---|
| k8s-master | CentOS7.7 | 2C/4G/20G | 172.16.1.110 | 10.0.0.110 |
| k8s-node01 | CentOS7.7 | 2C/4G/20G | 172.16.1.111 | 10.0.0.111 |
| k8s-node02 | CentOS7.7 | 2C/4G/20G | 172.16.1.112 | 10.0.0.112 |

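nodeName is the simplest form of node selection constraint, but because of its limitations it is rarely used. nodeName is a field of PodSpec.

pod.spec.nodeName places the Pod directly on the specified node and [bypasses the scheduler's policies]; the match is [mandatory]. Because the scheduler is not involved, the Pod can be placed on a node even if that node carries taints that would otherwise block scheduling.

Some limitations of using nodeName to select nodes:

- If the named node does not exist, the Pod will not run, and in some cases may be automatically deleted.

- If the named node does not have enough resources to accommodate the Pod, the Pod fails and the reason indicates why, for example OutOfmemory or OutOfcpu.

- Node names in cloud environments are not always predictable or stable.

nodeName example

Get the current node information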

[root@k8s-master ~]# kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

k8s-master Ready master 42d v1.17.4 172.16.1.110 <none> CentOS Linux 7 (Core) 3.10.0-1062.el7.x86_64 docker://19.3.8

k8s-node01 Ready <none> 42d v1.17.4 172.16.1.111 <none> CentOS Linux 7 (Core) 3.10.0-1062.el7.x86_64 docker://19.3.8

k8s-node02 Ready <none> 42d v1.17.4 172.16.1.112 <none> CentOS Linux 7 (Core) 3.10.0-1062.el7.x86_64 docker://19.3.8

1.1. When the node specified by nodeName exists

[root@k8s-master ~]# vi scheduler_nodeName.yaml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: scheduler-nodename-deploy
      labels:
        app: nodename-deploy
    spec:
      replicas: 5
      selector:
        matchLabels:
          app: myapp
      template:
        metadata:
          labels:
            app: myapp
        spec:
          containers:
          - name: myapp-pod
            image: registry.cn-beijing.aliyuncs.com/google_registry/myapp:v1
            imagePullPolicy: IfNotPresent
            ports:
            - containerPort: 80
          # Run on the specified node
          nodeName: k8s-master

nodeName is defined under pod.spec to pin the Pod to a node; its value is the name of the node.

[root@k8s-master ~]# kubectl apply -f scheduler_nodeName.yaml

deployment.apps/scheduler-nodename-deploy created

[root@k8s-master ~]# kubectl get deploy -o wide

NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

scheduler-nodename-deploy 0/5 5 0 6s myapp-pod registry.cn-beijing.aliyuncs.com/google_registry/myapp:v1 app=myapp

[root@k8s-master ~]# kubectl get rs -o wide

NAME DESIRED CURRENT READY AGE CONTAINERS IMAGES SELECTOR

scheduler-nodename-deploy-d5c9574bd 5 5 5 15s myapp-pod registry.cn-beijing.aliyuncs.com/google_registry/myapp:v1 app=myapp,pod-template-hash=d5c9574bd

[root@k8s-master ~]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

scheduler-nodename-deploy-d5c9574bd-6l9d8 1/1 Running 0 23s 10.244.0.123 k8s-master <none> <none>

scheduler-nodename-deploy-d5c9574bd-c82cc 1/1 Running 0 23s 10.244.0.119 k8s-master <none> <none>

scheduler-nodename-deploy-d5c9574bd-dkkjg 1/1 Running 0 23s 10.244.0.122 k8s-master <none> <none>

scheduler-nodename-deploy-d5c9574bd-hcn77 1/1 Running 0 23s 10.244.0.121 k8s-master <none> <none>

scheduler-nodename-deploy-d5c9574bd-zstjx 1/1 Running 0 23s 10.244.0.120 k8s-master <none> <none>

As shown above, nodeName: k8s-master in the YAML file took effect, and all Pods were placed on the k8s-master node. Had it been nodeName: k8s-node02, they would have gone straight to k8s-node02.

1.2. When the node specified by nodeName does not exist

[root@k8s-master ~]# vi scheduler_nodeName_02.yaml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: scheduler-nodename-deploy
      labels:
        app: nodename-deploy
    spec:
      replicas: 5
      selector:
        matchLabels:
          app: myapp
      template:
        metadata:
          labels:
            app: myapp
        spec:
          containers:
          - name: myapp-pod
            image: registry.cn-beijing.aliyuncs.com/google_registry/myapp:v1
            imagePullPolicy: IfNotPresent
            ports:
            - containerPort: 80
          # Run on the specified node; this node does not exist
          nodeName: k8s-node08

[root@k8s-master ~]# kubectl apply -f scheduler_nodeName_02.yaml

deployment.apps/scheduler-nodename-deploy created

[root@k8s-master ~]# kubectl get deploy -o wide

NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

scheduler-nodename-deploy 0/5 5 0 4s myapp-pod registry.cn-beijing.aliyuncs.com/google_registry/myapp:v1 app=myapp

[root@k8s-master ~]# kubectl get rs -o wide

NAME DESIRED CURRENT READY AGE CONTAINERS IMAGES SELECTOR

scheduler-nodename-deploy-75944bdc5d 5 5 0 9s myapp-pod registry.cn-beijing.aliyuncs.com/google_registry/myapp:v1 app=myapp,pod-template-hash=75944bdc5d

[root@k8s-master ~]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

scheduler-nodename-deploy-75944bdc5d-c8f5d 0/1 Pending 0 13s <none> k8s-node08 <none> <none>

scheduler-nodename-deploy-75944bdc5d-hfdlv 0/1 Pending 0 13s <none> k8s-node08 <none> <none>

scheduler-nodename-deploy-75944bdc5d-q9qgt 0/1 Pending 0 13s <none> k8s-node08 <none> <none>

scheduler-nodename-deploy-75944bdc5d-q9zl7 0/1 Pending 0 13s <none> k8s-node08 <none> <none>

scheduler-nodename-deploy-75944bdc5d-wxsnv 0/1 Pending 0 13s <none> k8s-node08 <none> <none>

As shown above, if the specified node does not exist, the containers never run and the Pods stay in the Pending state.

2. nodeSelector scheduling

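nodeSelector is the simplest recommended form of node selection constraint. nodeSelector is a field of PodSpec that specifies a map of key-value pairs.

pod.spec.nodeSelector uses the Kubernetes label-selector mechanism to choose which nodes a Pod may be scheduled to. You label a group of nodes, the scheduler matches the nodes carrying those labels, and the Pod is scheduled onto one of them. The match is a [mandatory] constraint. Because placement goes through the scheduler, nodeSelector cannot bypass taints.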
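Because nodeSelector goes through the scheduler, a Pod that should land on a tainted node needs a matching toleration in addition to the selector. The manifest below is a minimal sketch of that combination, not something taken from the original walkthrough. It assumes the master node carries the taint node-role.kubernetes.io/master:NoSchedule (the usual kubeadm default for clusters of this vintage) and the label node-role.kubernetes.io/master with an empty value; the Pod name is hypothetical.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nodeselector-with-toleration   # hypothetical name
spec:
  containers:
  - name: myapp-pod
    image: registry.cn-beijing.aliyuncs.com/google_registry/myapp:v1
    imagePullPolicy: IfNotPresent
  # Select the node by label; the label value is the empty string
  nodeSelector:
    node-role.kubernetes.io/master: ""
  # Assumed taint on the node: node-role.kubernetes.io/master:NoSchedule
  tolerations:
  - key: node-role.kubernetes.io/master
    operator: Exists
    effect: NoSchedule
```

Under these assumptions, leaving out the tolerations block would keep the Pod in the Pending state: the selector only matches the master node, and the taint filters that node out.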

nodeSelector example

[root@k8s-master ~]# kubectl get node -o wide --show-labels

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME LABELS

k8s-master Ready master 42d v1.17.4 172.16.1.110 <none> CentOS Linux 7 (Core) 3.10.0-1062.el7.x86_64 docker://19.3.8 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=k8s-master,kubernetes.io/os=linux,node-role.kubernetes.io/master=

k8s-node01 Ready <none> 42d v1.17.4 172.16.1.111 <none> CentOS Linux 7 (Core) 3.10.0-1062.el7.x86_64 docker://19.3.8 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=k8s-node01,kubernetes.io/os=linux

k8s-node02 Ready <none> 42d v1.17.4 172.16.1.112 <none> CentOS Linux 7 (Core) 3.10.0-1062.el7.x86_64 docker://19.3.8 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=k8s-node02,kubernetes.io/os=linux

2.1. When the nodeSelector label exists

Add a label

Run kubectl get nodes to get the names of the cluster nodes, then add labels to the chosen nodes. For example: k8s-node01 has an SSD, so add disk-type=ssd; k8s-node02 has many CPU cores, so add cpu-type=high; a web machine gets service-type=web. How you label nodes depends on your own planning.

Add the label to k8s-node01:

    [root@k8s-master ~]# kubectl label nodes k8s-node01 disk-type=ssd
    node/k8s-node01 labeled

View the labels:

    [root@k8s-master ~]# kubectl get node --show-labels
    NAME         STATUS   ROLES    AGE   VERSION   LABELS
    k8s-master   Ready    master   42d   v1.17.4   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=k8s-master,kubernetes.io/os=linux,node-role.kubernetes.io/master=
    k8s-node01   Ready    <none>   42d   v1.17.4   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,disk-type=ssd,kubernetes.io/arch=amd64,kubernetes.io/hostname=k8s-node01,kubernetes.io/os=linux
    k8s-node02   Ready    <none>   42d   v1.17.4   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=k8s-node02,kubernetes.io/os=linux

To remove the label:

    kubectl label nodes k8s-node01 disk-type-

As shown above, the disk-type=ssd label has been added to the k8s-node01 node.

Write the manifest

[root@k8s-master ~]# vi scheduler_nodeSelector.yaml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: scheduler-nodeselector-deploy
      labels:
        app: nodeselector-deploy
    spec:
      replicas: 5
      selector:
        matchLabels:
          app: myapp
      template:
        metadata:
          labels:
            app: myapp
        spec:
          containers:
          - name: myapp-pod
            image: registry.cn-beijing.aliyuncs.com/google_registry/myapp:v1
            imagePullPolicy: IfNotPresent
            ports:
            - containerPort: 80
          # Select nodes by label; the label exists
          nodeSelector:
            disk-type: ssd

nodeSelector is defined under pod.spec; its value is a map of labels that the target node must carry (not a node name, as with nodeName).

[root@k8s-master ~]# kubectl apply -f scheduler_nodeSelector.yaml

deployment.apps/scheduler-nodeselector-deploy created

[root@k8s-master ~]# kubectl get deploy -o wide

NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

scheduler-nodeselector-deploy 5/5 5 5 10s myapp-pod registry.cn-beijing.aliyuncs.com/google_registry/myapp:v1 app=myapp

[root@k8s-master ~]# kubectl get rs -o wide

NAME DESIRED CURRENT READY AGE CONTAINERS IMAGES SELECTOR

scheduler-nodeselector-deploy-79455db454 5 5 5 14s myapp-pod registry.cn-beijing.aliyuncs.com/google_registry/myapp:v1 app=myapp,pod-template-hash=79455db454

[root@k8s-master ~]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

scheduler-nodeselector-deploy-79455db454-745ph 1/1 Running 0 19s 10.244.4.154 k8s-node01 <none> <none>

scheduler-nodeselector-deploy-79455db454-bmjvd 1/1 Running 0 19s 10.244.4.151 k8s-node01 <none> <none>

scheduler-nodeselector-deploy-79455db454-g5cg2 1/1 Running 0 19s 10.244.4.153 k8s-node01 <none> <none>

scheduler-nodeselector-deploy-79455db454-hw8jv 1/1 Running 0 19s 10.244.4.152 k8s-node01 <none> <none>

scheduler-nodeselector-deploy-79455db454-zrt8d 1/1 Running 0 19s 10.244.4.155 k8s-node01 <none> <none>

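[root@k8s-master ~]# kubectl describe pod test-busybox # The Events section of describe also shows the scheduling result

As shown above, all Pods were scheduled to the k8s-node01 node. If other nodes also carried the disk-type=ssd label, Pods would be scheduled to those nodes as well.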

2.2. When the nodeSelector label does not exist

[root@k8s-master ~]# vi scheduler_nodeSelector_02.yaml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: scheduler-nodeselector-deploy
      labels:
        app: nodeselector-deploy
    spec:
      replicas: 5
      selector:
        matchLabels:
          app: myapp
      template:
        metadata:
          labels:
            app: myapp
        spec:
          containers:
          - name: myapp-pod
            image: registry.cn-beijing.aliyuncs.com/google_registry/myapp:v1
            imagePullPolicy: IfNotPresent
            ports:
            - containerPort: 80
          # Select nodes by label; no node carries this label
          nodeSelector:
            service-type: web

[root@k8s-master ~]# kubectl apply -f scheduler_nodeSelector_02.yaml

deployment.apps/scheduler-nodeselector-deploy created

[root@k8s-master ~]# kubectl get deploy -o wide

NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

scheduler-nodeselector-deploy 0/5 5 0 26s myapp-pod registry.cn-beijing.aliyuncs.com/google_registry/myapp:v1 app=myapp

[root@k8s-master ~]# kubectl get rs -o wide

NAME DESIRED CURRENT READY AGE CONTAINERS IMAGES SELECTOR

scheduler-nodeselector-deploy-799d748db6 5 5 0 30s myapp-pod registry.cn-beijing.aliyuncs.com/google_registry/myapp:v1 app=myapp,pod-template-hash=799d748db6

[root@k8s-master ~]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

scheduler-nodeselector-deploy-799d748db6-92mqj 0/1 Pending 0 40s <none> <none> <none> <none>

scheduler-nodeselector-deploy-799d748db6-c2w25 0/1 Pending 0 40s <none> <none> <none> <none>

scheduler-nodeselector-deploy-799d748db6-c8tlx 0/1 Pending 0 40s <none> <none> <none> <none>

scheduler-nodeselector-deploy-799d748db6-tc5n7 0/1 Pending 0 40s <none> <none> <none> <none>

scheduler-nodeselector-deploy-799d748db6-z8c57 0/1 Pending 0 40s <none> <none> <none> <none>

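[root@k8s-master ~]# kubectl describe pod test-busybox # The Events section of describe also shows the scheduling result

As shown above, if no node carries the label that nodeSelector asks for, the containers never run and the Pods stay in the Pending state.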

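3. nodeAffinity

The examples above show that nodeSelector is intuitive, but it is not flexible enough and its granularity is coarse. A more flexible mechanism is nodeAffinity.

nodeAffinity means node affinity; its counterpart is anti-affinity, that is, mutual repulsion. The two terms are quite vivid: a Pod choosing a node can be compared to magnets attracting and repelling each other, except that, beyond simple positive and negative poles, the attraction and repulsion between Pods and nodes can be configured flexibly.

Note that nodeAffinity has no separate anti-affinity field, because the NotIn and DoesNotExist operators in nodeAffinity provide the equivalent behavior.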
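To make the "repulsion" case concrete, the manifest below is a small sketch of node anti-affinity expressed through the NotIn operator. It reuses the disk-type=ssd label from the nodeSelector section; the Pod name is hypothetical and the rule itself is only an illustration.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: avoid-ssd-nodes   # hypothetical name
spec:
  containers:
  - name: myapp-pod
    image: registry.cn-beijing.aliyuncs.com/google_registry/myapp:v1
    imagePullPolicy: IfNotPresent
  affinity:
    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
        - matchExpressions:
          # Repel: reject any node whose disk-type label is "ssd"
          - key: disk-type
            operator: NotIn
            values:
            - ssd
```

NotIn also matches nodes that do not carry the disk-type label at all, so this Pod may run on any node except those labeled disk-type=ssd.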

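This approach is more flexible than nodeSelector: on top of label selection it supports simple logical combinations rather than plain equality matching. Scheduling rules come in two kinds, soft policies and hard policies.

Hard policy: the Pod declares a rule with conditions, such as node labels. After the Pod is submitted to the cluster, the scheduler looks for nodes that satisfy the rule and places the Pod on one of them. If no node satisfies the rule, the scheduler keeps retrying and the Pod stays pending until a matching node appears. Hard policies suit Pods that must run on a particular kind of node or they will malfunction, for example when the cluster has nodes of different architectures and the service depends on features of one of them.

Soft policy: like a hard policy, the Pod declares a rule with conditions such as labels. If some node satisfies the conditions, the Pod is preferentially placed there; if no node does, the rule is ignored and the Pod is placed by the scheduler's default algorithm. In other words, a match is nice to have but not required. Soft policies suit services that would ideally run in a certain zone, for example to reduce network traffic. Which kind to use is dictated by the user's needs, not by any hard technical dependency.

nodeAffinity rules come in two forms:

- preferredDuringScheduling(IgnoredDuringExecution / RequiredDuringExecution): soft policy

- requiredDuringScheduling(IgnoredDuringExecution / RequiredDuringExecution): hard policy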

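3.1. Using node affinity in detail

requiredDuringSchedulingIgnoredDuringExecution

The Pod must be placed on a node that satisfies the conditions; if no node does, the scheduler keeps retrying. IgnoredDuringExecution means that if the node's labels change while the Pod is running and no longer satisfy the Pod's conditions, the Pod keeps running until its lifecycle ends.

requiredDuringSchedulingRequiredDuringExecution

The Pod must be placed on a node that satisfies the conditions; if no node does, the scheduler keeps retrying. RequiredDuringExecution means that if the node's labels change while the Pod is running and no longer satisfy the Pod's conditions, the Pod is stopped and a node that satisfies them is selected again.

preferredDuringSchedulingIgnoredDuringExecution

The Pod is preferentially placed on a node that satisfies the conditions; if no node does, the conditions are ignored and the Pod is placed by the normal logic. IgnoredDuringExecution means that if the node's labels change while the Pod is running and no longer satisfy the Pod's conditions, the Pod keeps running until its lifecycle ends.

preferredDuringSchedulingRequiredDuringExecution

The Pod is preferentially placed on a node that satisfies the conditions; if no node does, the conditions are ignored and the Pod is placed by the normal logic. RequiredDuringExecution means that if node labels later change so that the conditions are satisfied, the Pod is stopped and rescheduled onto a matching node.

Note: the Kubernetes API exposes only the two IgnoredDuringExecution fields. The RequiredDuringExecution variants describe intended semantics and cannot be used in a manifest.

Summary:

- requiredDuringScheduling: the Pod declares labels in its spec and can only be scheduled to a node whose labels match; if none matches, the Pod waits until a matching node appears.

- preferredDuringScheduling: the Pod declares labels in its spec and prefers nodes whose labels match; if none matches, it does not wait and is placed on another node by the default algorithm.

- IgnoredDuringExecution: if the node's labels change after the Pod has been placed there, the running Pod keeps running until its lifecycle ends.

- RequiredDuringExecution: if the node's labels change after the Pod has been placed there, the running Pod is stopped immediately and rescheduled to a node that satisfies the conditions.

If a Pod declares both hard and soft policies, both apply, with the hard policy being the stricter one: nodes must first satisfy the hard policy, and among those the scheduler prefers nodes that also satisfy the soft policy. If none of them satisfies the soft policy, the soft rule is ignored and the default algorithm chooses among the nodes that satisfy the hard policy.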

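3.2. How to write a node affinity manifest

    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: kubernetes.io/e2e-az-name
                operator: In
                values:
                - e2e-az1
                - e2e-az2

Node affinity is defined at pod.spec.affinity.nodeAffinity and supports two kinds of constraints, hard and soft. A hard constraint is defined with the requiredDuringSchedulingIgnoredDuringExecution field, an object whose only field is nodeSelectorTerms. nodeSelectorTerms is a list; each entry can use matchExpressions to define expressions that match node labels. The operators available in an expression are In, NotIn, Exists, DoesNotExist, Gt, and Lt: Gt and Lt compare the label value as an integer, Exists and DoesNotExist test whether the label key is present, and In and NotIn test whether the label value belongs to a given set.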

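- In: the label's value is in the given list

- NotIn: the label's value is not in the given list

- Exists: the label exists

- DoesNotExist: the label does not exist

- Gt: the label's value is greater than the given value (integer comparison)

- Lt: the label's value is less than the given value (integer comparison)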
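The Gt and Lt operators are the least obvious of the six, so here is a small fragment (placed under pod.spec) that sketches their use. The cpu-cores label is hypothetical and would have to be added to the nodes first; nothing in the cluster above defines it.

```yaml
affinity:
  nodeAffinity:
    requiredDuringSchedulingIgnoredDuringExecution:
      nodeSelectorTerms:
      - matchExpressions:
        # Hypothetical label, e.g. set with: kubectl label nodes k8s-node02 cpu-cores=8
        - key: cpu-cores
          operator: Gt
          values:
          - "4"   # exactly one value, written as a string, compared as an integer
```

With Gt and Lt the values list must hold a single element, and a node is matched only if its label value parses as an integer that is greater (or less) than that element.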

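You can also use the matchFields field to match node fields instead of labels. A hard constraint means the node must satisfy the declared label expressions or field selectors before the Pod can be scheduled onto it; if no node satisfies them, the Pod stays pending.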
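As an illustration of matchFields, the fragment below (again placed under pod.spec) selects a node by its object name rather than by a label. It is a sketch only: it assumes metadata.name is an accepted field key, which to my knowledge is the one field the scheduler supports here, and it borrows the node name k8s-node01 from the host plan.

```yaml
affinity:
  nodeAffinity:
    requiredDuringSchedulingIgnoredDuringExecution:
      nodeSelectorTerms:
      - matchFields:
        # Match on the node object's name rather than on a label
        - key: metadata.name
          operator: In
          values:
          - k8s-node01
```

Unlike nodeName, this still goes through the scheduler, so taints and resource checks continue to apply.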

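    preferredDuringSchedulingIgnoredDuringExecution:
    - weight: 1
      preference:
        matchExpressions:
        - key: another-node-label-key
          operator: In
          values:
          - another-node-label-value

The second kind is the soft constraint, defined at pod.spec.affinity.nodeAffinity with the preferredDuringSchedulingIgnoredDuringExecution field. It is a list; each entry has a weight, which the scheduler adds to a node's total score when the node matches, and a preference, which defines the matching conditions. When soft and hard constraints are combined, the soft constraints act as a second pass on top of the hard ones: when several nodes satisfy the hard constraints, nodes that also match the soft constraints are preferred. If the weights and conditions do not single out one highest-scoring node, the default mechanism picks one at random from the top-scoring nodes; if they do, that node is the result. In short, when both are used, soft constraints help the hard constraints choose a node. If only soft constraints are used, the Pod is preferentially scheduled to nodes matching the higher-weighted conditions; with equal weights, the scheduler picks the top-scoring node by its default rules.

Take the manifest in example 1 of section 3.3.1: its hard constraint requires the node to carry a label with key foo or a label with key disktype. If no node satisfies this, the Pod is not scheduled and stays in the Pending state. Among the nodes that do, the soft constraints add 10 to the total of nodes with key foo and 2 to the total of nodes with key disktype, so the Pod favors nodes labeled with foo. Note again that nodeAffinity has no node anti-affinity; to get that effect, use the NotIn or DoesNotExist operator.

If nodeSelectorTerms contains multiple entries, a node only has to satisfy one of them; if a single matchExpressions contains multiple expressions, a node must satisfy all of them to run the Pod.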

3.3. nodeAffinity examples

3.3.1. Node affinity example

Example 1: using the nodeAffinity scheduling policy under affinity

[root@master01 ~]# cat pod-demo-affinity-nodeaffinity.yaml

    apiVersion: v1
    kind: Pod
    metadata:
      name: nginx-pod-nodeaffinity
    spec:
      containers:
      - name: nginx
        image: nginx:1.14-alpine
        imagePullPolicy: IfNotPresent
        ports:
        - name: http
          containerPort: 80
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: foo
                operator: Exists
                values: []
            - matchExpressions:
              - key: disktype
                operator: Exists
                values: []
          preferredDuringSchedulingIgnoredDuringExecution:
          - weight: 10
            preference:
              matchExpressions:
              - key: foo
                operator: Exists
                values: []
          - weight: 2
            preference:
              matchExpressions:
              - key: disktype
                operator: Exists
                values: []

Apply the manifest

    [root@master01 ~]# kubectl get nodes -L foo,disktype
    NAME               STATUS   ROLES                  AGE   VERSION   FOO   DISKTYPE
    master01.k8s.org   Ready    control-plane,master   29d   v1.20.0
    node01.k8s.org     Ready    <none>                 29d   v1.20.0
    node02.k8s.org     Ready    <none>                 29d   v1.20.0         ssd
    node03.k8s.org     Ready    <none>                 29d   v1.20.0
    node04.k8s.org     Ready    <none>                 19d   v1.20.0
    [root@master01 ~]# kubectl apply -f pod-demo-affinity-nodeaffinity.yaml
    pod/nginx-pod-nodeaffinity created
    [root@master01 ~]# kubectl get pods -o wide
    NAME                     READY   STATUS    RESTARTS   AGE    IP            NODE             NOMINATED NODE   READINESS GATES
    nginx-pod                1/1     Running   0          122m   10.244.1.28   node01.k8s.org   <none>           <none>
    nginx-pod-nodeaffinity   1/1     Running   0          7s     10.244.2.22   node02.k8s.org   <none>           <none>
    nginx-pod-nodeselector   1/1     Running   0          113m   10.244.2.18   node02.k8s.org   <none>           <none>
    [root@master01 ~]#

Note: after the manifest was applied, the Pod was scheduled to node02, because that node carries a label with key disktype, which satisfies the Pod's hard constraint.

Verify: delete the Pod and remove the disktype label from node02, then apply the manifest again and see how the Pod is scheduled.

    [root@master01 ~]# kubectl delete -f pod-demo-affinity-nodeaffinity.yaml
    pod "nginx-pod-nodeaffinity" deleted
    [root@master01 ~]# kubectl label node node02.k8s.org disktype-
    node/node02.k8s.org labeled
    [root@master01 ~]# kubectl get pods
    NAME                     READY   STATUS    RESTARTS   AGE
    nginx-pod                1/1     Running   0          127m
    nginx-pod-nodeselector   1/1     Running   0          118m
    [root@master01 ~]# kubectl get node -L foo,disktype
    NAME               STATUS   ROLES                  AGE   VERSION   FOO   DISKTYPE
    master01.k8s.org   Ready    control-plane,master   29d   v1.20.0
    node01.k8s.org     Ready    <none>                 29d   v1.20.0
    node02.k8s.org     Ready    <none>                 29d   v1.20.0
    node03.k8s.org     Ready    <none>                 29d   v1.20.0
    node04.k8s.org     Ready    <none>                 19d   v1.20.0
    [root@master01 ~]# kubectl apply -f pod-demo-affinity-nodeaffinity.yaml
    pod/nginx-pod-nodeaffinity created
    [root@master01 ~]# kubectl get pods -o wide
    NAME                     READY   STATUS    RESTARTS   AGE    IP            NODE             NOMINATED NODE   READINESS GATES
    nginx-pod                1/1     Running   0          128m   10.244.1.28   node01.k8s.org   <none>           <none>
    nginx-pod-nodeaffinity   0/1     Pending   0          9s     <none>        <none>           <none>           <none>
    nginx-pod-nodeselector   1/1     Running   0          118m   10.244.2.18   node02.k8s.org   <none>           <none>
    [root@master01 ~]#

Note: after the original Pod and the label on node02 were removed and the manifest was applied again, the Pod stays in the Pending state. No node in the cluster satisfies the Pod's hard constraint, so the Pod cannot be scheduled.

Verify: delete the Pod, label node01 with key foo and node03 with key disktype, then apply the manifest again and see how the Pod is scheduled.

    [root@master01 ~]# kubectl delete -f pod-demo-affinity-nodeaffinity.yaml
    pod "nginx-pod-nodeaffinity" deleted
    [root@master01 ~]# kubectl label node node01.k8s.org foo=bar
    node/node01.k8s.org labeled
    [root@master01 ~]# kubectl label node node03.k8s.org disktype=ssd
    node/node03.k8s.org labeled
    [root@master01 ~]# kubectl get nodes -L foo,disktype
    NAME               STATUS   ROLES                  AGE   VERSION   FOO   DISKTYPE
    master01.k8s.org   Ready    control-plane,master   29d   v1.20.0
    node01.k8s.org     Ready    <none>                 29d   v1.20.0   bar
    node02.k8s.org     Ready    <none>                 29d   v1.20.0
    node03.k8s.org     Ready    <none>                 29d   v1.20.0         ssd
    node04.k8s.org     Ready    <none>                 19d   v1.20.0
    [root@master01 ~]# kubectl apply -f pod-demo-affinity-nodeaffinity.yaml
    pod/nginx-pod-nodeaffinity created
    [root@master01 ~]# kubectl get pods -o wide
    NAME                     READY   STATUS    RESTARTS   AGE    IP            NODE             NOMINATED NODE   READINESS GATES
    nginx-pod                1/1     Running   0          132m   10.244.1.28   node01.k8s.org   <none>           <none>
    nginx-pod-nodeaffinity   1/1     Running   0          5s     10.244.1.29   node01.k8s.org   <none>           <none>
    nginx-pod-nodeselector   1/1     Running   0          123m   10.244.2.18   node02.k8s.org   <none>           <none>
    [root@master01 ~]#

Note: when several nodes satisfy the hard constraint, the Pod is preferentially scheduled to the node that matches the soft condition with the larger weight. In other words, when the hard constraint alone cannot single out a node, the higher-weighted soft condition takes priority.

Verify: remove the label from node01 and see whether the Pod is evicted or rescheduled to another node.

    [root@master01 ~]# kubectl get nodes -L foo,disktype
    NAME               STATUS   ROLES                  AGE   VERSION   FOO   DISKTYPE
    master01.k8s.org   Ready    control-plane,master   29d   v1.20.0
    node01.k8s.org     Ready    <none>                 29d   v1.20.0   bar
    node02.k8s.org     Ready    <none>                 29d   v1.20.0
    node03.k8s.org     Ready    <none>                 29d   v1.20.0         ssd
    node04.k8s.org     Ready    <none>                 19d   v1.20.0
    [root@master01 ~]# kubectl label node node01.k8s.org foo-
    node/node01.k8s.org labeled
    [root@master01 ~]# kubectl get nodes -L foo,disktype
    NAME               STATUS   ROLES                  AGE   VERSION   FOO   DISKTYPE
    master01.k8s.org   Ready    control-plane,master   29d   v1.20.0
    node01.k8s.org     Ready    <none>                 29d   v1.20.0
    node02.k8s.org     Ready    <none>                 29d   v1.20.0
    node03.k8s.org     Ready    <none>                 29d   v1.20.0         ssd
    node04.k8s.org     Ready    <none>                 19d   v1.20.0
    [root@master01 ~]# kubectl get pods -o wide
    NAME                     READY   STATUS    RESTARTS   AGE    IP            NODE             NOMINATED NODE   READINESS GATES
    nginx-pod                1/1     Running   0          145m   10.244.1.28   node01.k8s.org   <none>           <none>
    nginx-pod-nodeaffinity   1/1     Running   0          12m    10.244.1.29   node01.k8s.org   <none>           <none>
    nginx-pod-nodeselector   1/1     Running   0          135m   10.244.2.18   node02.k8s.org   <none>           <none>
    [root@master01 ~]#

Note: once the Pod is running, it is neither removed nor moved to another node even if its node later stops satisfying the hard constraint. Node affinity takes effect at scheduling time only; after scheduling completes, a node that no longer satisfies the Pod's node affinity does not cause eviction or rescheduling. In short, nodeAffinity cannot reschedule a Pod that has already been placed.

3.3.2. Example: using nodeAffinity together with nodeSelector

When nodeAffinity and nodeSelector are used together, they are ANDed: a node is eligible to run the Pod only if it satisfies both.

[root@master01 ~]# cat pod-demo-affinity-nodesector.yaml

    apiVersion: v1
    kind: Pod
    metadata:
      name: nginx-pod-nodeaffinity-nodeselector
    spec:
      containers:
      - name: nginx
        image: nginx:1.14-alpine
        imagePullPolicy: IfNotPresent
        ports:
        - name: http
          containerPort: 80
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: foo
                operator: Exists
                values: []
      nodeSelector:
        disktype: ssd

[root@master01 ~]#

Note: the manifest above requires the Pod to run on a node that has a label with key foo and also carries the label disktype=ssd.

Apply the manifest

[root@master01 ~]# kubectl get nodes -L foo,disktype

NAME STATUS ROLES AGE VERSION FOO DISKTYPE

master01.k8s.org Ready control-plane,master 29d v1.20.0

node01.k8s.org Ready <none> 29d v1.20.0

node02.k8s.org Ready <none> 29d v1.20.0

node03.k8s.org Ready <none> 29d v1.20.0 ssd

node04.k8s.org Ready <none> 19d v1.20.0

[root@master01 ~]# kubectl apply -f pod-demo-affinity-nodesector.yaml

pod/nginx-pod-nodeaffinity-nodeselector created

[root@master01 ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-pod 1/1 Running 0 168m 10.244.1.28 node01.k8s.org <none> <none>

nginx-pod-nodeaffinity 1/1 Running 0 35m 10.244.1.29 node01.k8s.org <none> <none>

nginx-pod-nodeaffinity-nodeselector 0/1 Pending 0 7s <none> <none> <none> <none>

nginx-pod-nodeselector 1/1 Running 0 159m 10.244.2.18 node02.k8s.org <none> <none>

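[root@master01 ~]#

Note: after creation the Pod stays in the Pending state, because no node has both a label with key foo and the label disktype=ssd, so the Pod cannot be scheduled.

3.3.3. Example: multiple nodeSelectorTerms in one nodeAffinity

When a nodeAffinity rule specifies multiple nodeSelectorTerms, they are ORed; each condition goes in its own matchExpressions list.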

[root@master01 ~]# cat pod-demo-affinity2.yaml

    apiVersion: v1
    kind: Pod
    metadata:
      name: nginx-pod-nodeaffinity2
    spec:
      containers:
      - name: nginx
        image: nginx:1.14-alpine
        imagePullPolicy: IfNotPresent
        ports:
        - name: http
          containerPort: 80
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: foo
                operator: Exists
                values: []
            - matchExpressions:
              - key: disktype
                operator: Exists
                values: []

[root@master01 ~]#

Note: the example above requires the Pod to run on a node that has a label with key foo or a label with key disktype.

Apply the manifest

[root@master01 ~]# kubectl get nodes -L foo,disktype

NAME STATUS ROLES AGE VERSION FOO DISKTYPE

master01.k8s.org Ready control-plane,master 29d v1.20.0

node01.k8s.org Ready <none> 29d v1.20.0

node02.k8s.org Ready <none> 29d v1.20.0

node03.k8s.org Ready <none> 29d v1.20.0 ssd

node04.k8s.org Ready <none> 19d v1.20.0

[root@master01 ~]# kubectl apply -f pod-demo-affinity2.yaml

pod/nginx-pod-nodeaffinity2 created

[root@master01 ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-pod 1/1 Running 0 179m 10.244.1.28 node01.k8s.org <none> <none>

nginx-pod-nodeaffinity 1/1 Running 0 46m 10.244.1.29 node01.k8s.org <none> <none>

nginx-pod-nodeaffinity-nodeselector 0/1 Pending 0 10m <none> <none> <none> <none>

nginx-pod-nodeaffinity2 1/1 Running 0 6s 10.244.3.21 node03.k8s.org <none> <none>

nginx-pod-nodeselector 1/1 Running 0 169m 10.244.2.18 node02.k8s.org <none> <none>

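[root@master01 ~]#

Note: the Pod was scheduled to node03, because node03 satisfies the condition of having a label with key foo or a label with key disktype.

3.3.4. Example: multiple conditions in one matchExpressions are ANDed

Multiple conditions in the same matchExpressions are ANDed; each condition is a separate key entry in the same matchExpressions list.

Example: specifying multiple conditions under one matchExpressions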

[root@master01 ~]# cat pod-demo-affinity3.yaml

    apiVersion: v1
    kind: Pod
    metadata:
      name: nginx-pod-nodeaffinity3
    spec:
      containers:
      - name: nginx
        image: nginx:1.14-alpine
        imagePullPolicy: IfNotPresent
        ports:
        - name: http
          containerPort: 80
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: foo
                operator: Exists
                values: []
              - key: disktype
                operator: Exists
                values: []

[root@master01 ~]#

Note: the manifest above requires the Pod to run on a node that has both a label with key foo and a label with key disktype.

Apply the manifest

[root@master01 ~]# kubectl get nodes -L foo,disktype

NAME STATUS ROLES AGE VERSION FOO DISKTYPE

master01.k8s.org Ready control-plane,master 29d v1.20.0

node01.k8s.org Ready <none> 29d v1.20.0

node02.k8s.org Ready <none> 29d v1.20.0

node03.k8s.org Ready <none> 29d v1.20.0 ssd

node04.k8s.org Ready <none> 19d v1.20.0

[root@master01 ~]# kubectl apply -f pod-demo-affinity3.yaml

pod/nginx-pod-nodeaffinity3 created

[root@master01 ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-pod 1/1 Running 0 3h8m 10.244.1.28 node01.k8s.org <none> <none>

nginx-pod-nodeaffinity 1/1 Running 0 56m 10.244.1.29 node01.k8s.org <none> <none>

nginx-pod-nodeaffinity-nodeselector 0/1 Pending 0 20m <none> <none> <none> <none>

nginx-pod-nodeaffinity2 1/1 Running 0 9m38s 10.244.3.21 node03.k8s.org <none> <none>

nginx-pod-nodeaffinity3 0/1 Pending 0 7s <none> <none> <none> <none>

nginx-pod-nodeselector 1/1 Running 0 179m 10.244.2.18 node02.k8s.org <none> <none>

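[root@master01 ~]#

Note: after creation the Pod stays in the Pending state, because no node carries both a label with key foo and a label with key disktype.

4. podAffinity/podAntiAffinity

4.1 podAffinity

podAffinity defines affinity between Pods, that is, which Pod or Pods a given Pod prefers to be placed with. The opposite also exists: a Pod may prefer not to be placed with certain Pods, which is called pod anti-affinity. "Placed with" means being in the same location as the other Pod, where a location can be delimited by hostname or by zone. How the location is defined therefore matters a great deal when declaring that Pods should or should not be together, and it determines where the Pod can run. Kubernetes introduced podAffinity in version 1.4.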
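Since anti-affinity is only described in words here, the Deployment below sketches what a pod anti-affinity rule can look like. It is an illustration under assumptions rather than part of the original walkthrough: the Deployment name is hypothetical, and the image and app=myapp label are borrowed from the other examples in this article.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: spread-myapp   # hypothetical name
spec:
  replicas: 2
  selector:
    matchLabels:
      app: myapp
  template:
    metadata:
      labels:
        app: myapp
    spec:
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - {key: app, operator: In, values: ["myapp"]}
            # A "location" is one host: no two app=myapp Pods on the same node
            topologyKey: kubernetes.io/hostname
      containers:
      - name: myapp
        image: ikubernetes/myapp:v1
```

Because the rule is a hard constraint, a replica that cannot find a node free of other app=myapp Pods stays Pending; switching to preferredDuringSchedulingIgnoredDuringExecution would make the spreading best-effort instead.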

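podAffinity works and is used much like nodeAffinity; it also has hard and soft constraints that follow the same logic.

Hard affinity: the Pod declares a label selector. After the Pod is submitted to the cluster, the scheduler looks at the Pods already running and checks whether their labels match the selector. If one matches, the new Pod is placed in the same location as that Pod. If no Pod matches, the new Pod is not placed anywhere and stays pending until a matching Pod appears.

Soft affinity: compared with hard affinity, if Pods with matching labels exist in the cluster, the scheduler favors the locations matched by the higher-weighted soft rules and places the new Pod there. If no Pod matches, the rule is dropped and the Pod is placed on some node by the scheduler's default mechanism.

Hard and soft affinity together: the soft rules help the hard rules choose. The hard rules must be satisfied first; among the locations that satisfy them, those that also satisfy the soft rules are preferred; if none satisfies the soft rules, the default scheduling algorithm chooses among the locations that satisfy the hard rules.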
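A combined rule might look like the sketch below. It is illustrative only: the Pod name is hypothetical, and the labels app=nginx and app=db as well as the zone node label are assumptions chosen to resemble the separate examples in this section.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: with-pod-affinity-both   # hypothetical name
spec:
  affinity:
    podAffinity:
      # Hard rule: must share a node with a Pod labeled app=nginx
      requiredDuringSchedulingIgnoredDuringExecution:
      - labelSelector:
          matchExpressions:
          - {key: app, operator: In, values: ["nginx"]}
        topologyKey: kubernetes.io/hostname
      # Soft rule: among those nodes, favor zones that also run app=db
      preferredDuringSchedulingIgnoredDuringExecution:
      - weight: 50
        podAffinityTerm:
          labelSelector:
            matchExpressions:
            - {key: app, operator: In, values: ["db"]}
          topologyKey: zone
  containers:
  - name: myapp
    image: ikubernetes/myapp:v1
```

If no Pod labeled app=nginx is running, the Pod stays Pending regardless of the soft rule; if such Pods exist but no zone runs app=db, the soft rule simply contributes nothing to the scores.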

podAffinity rules come in two forms:

- preferredDuringScheduling(IgnoredDuringExecution / RequiredDuringExecution): soft policy

- requiredDuringScheduling(IgnoredDuringExecution / RequiredDuringExecution): hard policy

podAffinity examples

Example 1: using a hard constraint in podAffinity under affinity

[root@master01 ~]# cat require-podaffinity.yaml

    apiVersion: v1
    kind: Pod
    metadata:
      name: with-pod-affinity-1
    spec:
      affinity:
        podAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - {key: app, operator: In, values: ["nginx"]}
            topologyKey: kubernetes.io/hostname
      containers:
      - name: myapp
        image: ikubernetes/myapp:v1

[root@master01 ~]#

Note: the manifest above shows how to use a hard constraint in podAffinity. podAffinity is defined with the podAffinity field under spec.affinity. requiredDuringSchedulingIgnoredDuringExecution defines the hard constraint; it is a list in which labelSelector selects the Pods to be co-located with, and topologyKey defines what counts as the same location, given as a node label key. This manifest requires the myapp Pod to run on a node that already runs a Pod labeled app=nginx: wherever the app=nginx Pod runs, myapp runs too. If no such Pod exists, this Pod stays in the Pending state.

Apply the manifest

    [root@master01 ~]# kubectl get pods -L app -o wide
    NAME        READY   STATUS    RESTARTS   AGE     IP            NODE             NOMINATED NODE   READINESS GATES   APP
    nginx-pod   1/1     Running   0          8m25s   10.244.4.25   node04.k8s.org   <none>           <none>            nginx
    [root@master01 ~]# kubectl apply -f require-podaffinity.yaml
    pod/with-pod-affinity-1 created
    [root@master01 ~]# kubectl get pods -L app -o wide
    NAME                  READY   STATUS    RESTARTS   AGE     IP            NODE             NOMINATED NODE   READINESS GATES   APP
    nginx-pod             1/1     Running   0          8m43s   10.244.4.25   node04.k8s.org   <none>           <none>            nginx
    with-pod-affinity-1   1/1     Running   0          6s      10.244.4.26   node04.k8s.org   <none>           <none>
    [root@master01 ~]#

Note: the Pod runs on node04, because that node runs a Pod labeled app=nginx, which satisfies the hard podAffinity constraint.

Verify: delete both Pods, then apply the manifest again and see whether the Pod can still run.

    [root@master01 ~]# kubectl delete all --all
    pod "nginx-pod" deleted
    pod "with-pod-affinity-1" deleted
    service "kubernetes" deleted
    [root@master01 ~]# kubectl apply -f require-podaffinity.yaml
    pod/with-pod-affinity-1 created
    [root@master01 ~]# kubectl get pods -o wide
    NAME                  READY   STATUS    RESTARTS   AGE   IP       NODE     NOMINATED NODE   READINESS GATES
    with-pod-affinity-1   0/1     Pending   0          8s    <none>   <none>   <none>           <none>
    [root@master01 ~]#

Note: the Pod is in the Pending state because no node runs a Pod labeled app=nginx, so the hard podAffinity constraint is not satisfied.

Example 2: using soft constraints in podAffinity under affinity

[root@master01 ~]# cat prefernece-podaffinity.yaml

    apiVersion: v1
    kind: Pod
    metadata:
      name: with-pod-affinity-2
    spec:
      affinity:
        podAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
          - weight: 80
            podAffinityTerm:
              labelSelector:
                matchExpressions:
                - {key: app, operator: In, values: ["db"]}
              topologyKey: rack
          - weight: 20
            podAffinityTerm:
              labelSelector:
                matchExpressions:
                - {key: app, operator: In, values: ["db"]}
              topologyKey: zone
      containers:
      - name: myapp
        image: ikubernetes/myapp:v1

Note: soft constraints in podAffinity are defined with preferredDuringSchedulingIgnoredDuringExecution. weight sets the weight of each soft condition: a node that satisfies the condition gets the weight added to its final score. In this manifest, with locations delimited by the node label key rack, a node in the same rack as a Pod labeled app=db gets 80 added to its total; with locations delimited by the node label key zone, a node in the same zone as a Pod labeled app=db gets 20 added. If no node satisfies either condition, the default scheduling rules apply.

Apply the manifest

[root@master01 ~]# kubectl get node -L rack,zone NAME STATUS ROLES AGE VERSION RACK ZONE master01.k8s.org Ready control-plane,master 30d v1.20.0 node01.k8s.org Ready <none> 30d v1.20.0 node02.k8s.org Ready <none> 30d v1.20.0 node03.k8s.org Ready <none> 30d v1.20.0 node04.k8s.org Ready <none> 20d v1.20.0 [root@master01 ~]# kubectl get pods -o wide -L app NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES APP with-pod-affinity-1 0/1 Pending 0 22m <none> <none> <none> <none> [root@master01 ~]# kubectl apply -f prefernece-podaffinity.yaml pod/with-pod-affinity-2 created [root@master01 ~]# kubectl get pods -o wide -L app NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES APP with-pod-affinity-1 0/1 Pending 0 22m <none> <none> <none> <none> with-pod-affinity-2 1/1 Running 0 6s 10.244.4.28 node04.k8s.org <none> <none> [root@master01 ~]#提示:可以看到对应pod正常运行起来,并调度到node04上;从上面的示例来看,对应pod的运行并没有走软限制条件进行调度,而是走默认调度法则;其原因是对应节点没有满足对应软限制中的条件;

Verification: delete the pod, add a rack label to node01 and a zone label to node03, then run the pod again and see how it is scheduled.

[root@master01 ~]# kubectl delete -f prefernece-podaffinity.yaml
pod "with-pod-affinity-2" deleted
[root@master01 ~]# kubectl label node node01.k8s.org rack=group1
node/node01.k8s.org labeled
[root@master01 ~]# kubectl label node node03.k8s.org zone=group2
node/node03.k8s.org labeled
[root@master01 ~]# kubectl get node -L rack,zone
NAME STATUS ROLES AGE VERSION RACK ZONE
master01.k8s.org Ready control-plane,master 30d v1.20.0
node01.k8s.org Ready <none> 30d v1.20.0 group1
node02.k8s.org Ready <none> 30d v1.20.0
node03.k8s.org Ready <none> 30d v1.20.0 group2
node04.k8s.org Ready <none> 20d v1.20.0
[root@master01 ~]# kubectl apply -f prefernece-podaffinity.yaml
pod/with-pod-affinity-2 created
[root@master01 ~]# kubectl get pods -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
with-pod-affinity-1 0/1 Pending 0 27m <none> <none> <none> <none>
with-pod-affinity-2 1/1 Running 0 9s 10.244.4.29 node04.k8s.org <none> <none>
[root@master01 ~]#

Note: the pod is still scheduled to node04. Topology labels on the nodes alone do not change the result, since no pod labeled app=db is running yet.

Verification: delete the pod, create one pod labeled app=db on node01 and another on node03, then apply the manifest again and see how the pod is scheduled.

[root@master01 ~]# kubectl delete -f prefernece-podaffinity.yaml
pod "with-pod-affinity-2" deleted
[root@master01 ~]# cat pod-demo.yaml
apiVersion: v1
kind: Pod
metadata:
  name: redis-pod1
  labels:
    app: db
spec:
  nodeSelector:
    rack: group1
  containers:
  - name: redis
    image: redis:4-alpine
    imagePullPolicy: IfNotPresent
    ports:
    - name: redis
      containerPort: 6379
---
apiVersion: v1
kind: Pod
metadata:
  name: redis-pod2
  labels:
    app: db
spec:
  nodeSelector:
    zone: group2
  containers:
  - name: redis
    image: redis:4-alpine
    imagePullPolicy: IfNotPresent
    ports:
    - name: redis
      containerPort: 6379
[root@master01 ~]# kubectl apply -f pod-demo.yaml
pod/redis-pod1 created
pod/redis-pod2 created
[root@master01 ~]# kubectl get pods -L app -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES APP
redis-pod1 1/1 Running 0 34s 10.244.1.35 node01.k8s.org <none> <none> db
redis-pod2 1/1 Running 0 34s 10.244.3.24 node03.k8s.org <none> <none> db
with-pod-affinity-1 0/1 Pending 0 34m <none> <none> <none> <none>
[root@master01 ~]# kubectl apply -f prefernece-podaffinity.yaml
pod/with-pod-affinity-2 created
[root@master01 ~]# kubectl get pods -L app -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES APP
redis-pod1 1/1 Running 0 52s 10.244.1.35 node01.k8s.org <none> <none> db
redis-pod2 1/1 Running 0 52s 10.244.3.24 node03.k8s.org <none> <none> db
with-pod-affinity-1 0/1 Pending 0 35m <none> <none> <none> <none>
with-pod-affinity-2 1/1 Running 0 9s 10.244.1.36 node01.k8s.org <none> <none>
[root@master01 ~]#

Note: the pod now runs on node01. node01 hosts a pod labeled app=db and carries a node label with key rack, so it satisfies the soft term with weight 80; the pod therefore prefers node01.
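The weighting in this example can be reproduced with a small Python model. It is only a sketch under simplifying assumptions: the class names and the `affinity_score` helper are hypothetical, the label selector is reduced to exact label matches, and the real scheduler additionally normalizes this score and combines it with other scoring rules.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    labels: dict = field(default_factory=dict)


@dataclass
class Pod:
    name: str
    labels: dict
    node: str  # name of the node the pod is running on


@dataclass
class PreferredTerm:
    weight: int
    match_labels: dict  # simplified stand-in for labelSelector
    topology_key: str


def affinity_score(candidate, nodes, pods, terms):
    """Add a term's weight for every matching pod in the candidate's topology domain."""
    by_name = {n.name: n for n in nodes}
    score = 0
    for term in terms:
        domain = candidate.labels.get(term.topology_key)
        if domain is None:
            continue  # the candidate belongs to no domain of this topology key
        for pod in pods:
            host = by_name[pod.node]
            in_domain = host.labels.get(term.topology_key) == domain
            selected = all(pod.labels.get(k) == v for k, v in term.match_labels.items())
            if in_domain and selected:
                score += term.weight
    return score


# Cluster state taken from the session above.
nodes = [
    Node("node01.k8s.org", {"rack": "group1"}),
    Node("node02.k8s.org"),
    Node("node03.k8s.org", {"zone": "group2"}),
    Node("node04.k8s.org"),
]
pods = [
    Pod("redis-pod1", {"app": "db"}, "node01.k8s.org"),
    Pod("redis-pod2", {"app": "db"}, "node03.k8s.org"),
]
terms = [
    PreferredTerm(80, {"app": "db"}, "rack"),
    PreferredTerm(20, {"app": "db"}, "zone"),
]

scores = {n.name: affinity_score(n, nodes, pods, terms) for n in nodes}
best = max(scores, key=scores.get)
print(scores)
print(best)
```

In this model node01 collects the rack weight and node03 only the smaller zone weight, which agrees with the placement observed in the session.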

Example 3: combining the hard and soft constraints of podAffinity

[root@master01 ~]# cat require-preference-podaffinity.yaml
apiVersion: v1
kind: Pod
metadata:
  name: with-pod-affinity-3
spec:
  affinity:
    podAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
      - labelSelector:
          matchExpressions:
          - {key: app, operator: In, values: ["db"]}
        topologyKey: kubernetes.io/hostname
      preferredDuringSchedulingIgnoredDuringExecution:
      - weight: 80
        podAffinityTerm:
          labelSelector:
            matchExpressions:
            - {key: app, operator: In, values: ["db"]}
          topologyKey: rack
      - weight: 20
        podAffinityTerm:
          labelSelector:
            matchExpressions:
            - {key: app, operator: In, values: ["db"]}
          topologyKey: zone
  containers:
  - name: myapp
    image: ikubernetes/myapp:v1
[root@master01 ~]#

Note: this manifest requires the pod to run on a node that already hosts a pod labeled app=db; if no node qualifies, the pod stays Pending. If several nodes qualify, the soft terms rank them: a qualifying node that also satisfies the rack term gains 80 points, and one that satisfies the zone term gains 20.

Apply the manifest

[root@master01 ~]# kubectl get pods -o wide -L app
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES APP
redis-pod1 1/1 Running 0 13m 10.244.1.35 node01.k8s.org <none> <none> db
redis-pod2 1/1 Running 0 13m 10.244.3.24 node03.k8s.org <none> <none> db
with-pod-affinity-1 0/1 Pending 0 48m <none> <none> <none> <none>
with-pod-affinity-2 1/1 Running 0 13m 10.244.1.36 node01.k8s.org <none> <none>
[root@master01 ~]# kubectl apply -f require-preference-podaffinity.yaml
pod/with-pod-affinity-3 created
[root@master01 ~]# kubectl get pods -o wide -L app
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES APP
redis-pod1 1/1 Running 0 14m 10.244.1.35 node01.k8s.org <none> <none> db
redis-pod2 1/1 Running 0 14m 10.244.3.24 node03.k8s.org <none> <none> db
with-pod-affinity-1 0/1 Pending 0 48m <none> <none> <none> <none>
with-pod-affinity-2 1/1 Running 0 13m 10.244.1.36 node01.k8s.org <none> <none>
with-pod-affinity-3 1/1 Running 0 6s 10.244.1.37 node01.k8s.org <none> <none>
[root@master01 ~]#

Note: the pod is scheduled to node01, because that node satisfies the hard constraint and also the soft term with the largest weight.

Verification: delete the pods above and apply the manifest again to see whether the pod still runs.

[root@master01 ~]# kubectl delete all --all
pod "redis-pod1" deleted
pod "redis-pod2" deleted
pod "with-pod-affinity-1" deleted
pod "with-pod-affinity-2" deleted
pod "with-pod-affinity-3" deleted
service "kubernetes" deleted
[root@master01 ~]# kubectl apply -f require-preference-podaffinity.yaml
pod/with-pod-affinity-3 created
[root@master01 ~]# kubectl get pods -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
with-pod-affinity-3 0/1 Pending 0 5s <none> <none> <none> <none>
[root@master01 ~]#

Note: the newly created pod is Pending. No node satisfies its hard constraint, so it cannot be scheduled and has to wait.

4.2 podAntiAffinity

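podAntiAffinity is the opposite of podAffinity: pods that match the selector repel the new pod, which is placed on nodes that do not run such pods. It also comes in a hard and a soft form. With the hard form, the pod is only created on a node that runs no pod matching the declared labels; if every node runs such a pod, the new pod stays Pending and keeps waiting. With the soft form, the scheduler prefers the nodes that the weighted rules penalize least; if every node runs a matching pod, the rule is set aside and the scheduler's normal scoring picks a node.

The only difference from podAffinity is that mutual exclusion is declared with the podAntiAffinity field. In the following example we want with-pod-affinity and busybox-pod to be deployed close together, but not in the same topology domain as node-affinity-pod: (test-pod-affinity.yaml)

podAntiAffinity example

Example: using the podAntiAffinity scheduling policy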

[root@master01 ~]# cat require-preference-podantiaffinity.yaml
apiVersion: v1
kind: Pod
metadata:
  name: with-pod-affinity-4
spec:
  affinity:
    podAntiAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
      - labelSelector:
          matchExpressions:
          - {key: app, operator: In, values: ["db"]}
        topologyKey: kubernetes.io/hostname
      preferredDuringSchedulingIgnoredDuringExecution:
      - weight: 80
        podAffinityTerm:
          labelSelector:
            matchExpressions:
            - {key: app, operator: In, values: ["db"]}
          topologyKey: rack
      - weight: 20
        podAffinityTerm:
          labelSelector:
            matchExpressions:
            - {key: app, operator: In, values: ["db"]}
          topologyKey: zone
  containers:
  - name: myapp
    image: ikubernetes/myapp:v1
[root@master01 ~]#

Note: podAntiAffinity is used the same way as podAffinity, with the logic reversed: podAffinity places the pod where the condition holds, podAntiAffinity keeps it away. In this manifest the hard rule forbids every node that runs a pod labeled app=db. The two soft rules additionally steer the pod away from rack and zone domains that contain such pods, with weights 80 and 20. If every node violates the hard rule, the pod stays Pending. With soft rules only, the pod would still be scheduled, onto the node that the rules penalize least.

Apply the manifest

[root@master01 ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

with-pod-affinity-3 0/1 Pending 0 22m <none> <none> <none> <none>

[root@master01 ~]# kubectl apply -f require-preference-podantiaffinity.yaml

pod/with-pod-affinity-4 created

[root@master01 ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

with-pod-affinity-3 0/1 Pending 0 22m <none> <none> <none> <none>

with-pod-affinity-4 1/1 Running 0 6s 10.244.4.30 node04.k8s.org <none> <none>

[root@master01 ~]# kubectl get node -L rack,zone

NAME STATUS ROLES AGE VERSION RACK ZONE

master01.k8s.org Ready control-plane,master 30d v1.20.0

node01.k8s.org Ready <none> 30d v1.20.0 group1

node02.k8s.org Ready <none> 30d v1.20.0

node03.k8s.org Ready <none> 30d v1.20.0 group2

node04.k8s.org Ready <none> 20d v1.20.0

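[root@master01 ~]#

Note: the pod is scheduled to node04. No pod labeled app=db is running at this point, so node04 violates none of the three rules; node02 would have been an equally valid choice.

Verification: delete the pods above, run one pod labeled app=db on each of the four nodes, apply the manifest again, and see how the pod is scheduled.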

[root@master01 ~]# kubectl delete all --all

pod "with-pod-affinity-3" deleted

pod "with-pod-affinity-4" deleted

service "kubernetes" deleted

[root@master01 ~]# cat pod-demo.yaml

apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: redis-ds
  labels:
    app: db
spec:
  selector:
    matchLabels:
      app: db
  template:
    metadata:
      labels:
        app: db
    spec:
      containers:
      - name: redis
        image: redis:4-alpine
        ports:
        - name: redis
          containerPort: 6379

[root@master01 ~]# kubectl apply -f pod-demo.yaml

daemonset.apps/redis-ds created

[root@master01 ~]# kubectl get pods -L app -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES APP

redis-ds-4bnmv 1/1 Running 0 44s 10.244.2.26 node02.k8s.org <none> <none> db

redis-ds-c2h77 1/1 Running 0 44s 10.244.1.38 node01.k8s.org <none> <none> db

redis-ds-mbxcd 1/1 Running 0 44s 10.244.4.32 node04.k8s.org <none> <none> db

redis-ds-r2kxv 1/1 Running 0 44s 10.244.3.25 node03.k8s.org <none> <none> db

[root@master01 ~]# kubectl apply -f require-preference-podantiaffinity.yaml

pod/with-pod-affinity-5 created

[root@master01 ~]# kubectl get pods -o wide -L app

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES APP

redis-ds-4bnmv 1/1 Running 0 2m29s 10.244.2.26 node02.k8s.org <none> <none> db

redis-ds-c2h77 1/1 Running 0 2m29s 10.244.1.38 node01.k8s.org <none> <none> db

redis-ds-mbxcd 1/1 Running 0 2m29s 10.244.4.32 node04.k8s.org <none> <none> db

redis-ds-r2kxv 1/1 Running 0 2m29s 10.244.3.25 node03.k8s.org <none> <none> db

with-pod-affinity-5 0/1 Pending 0

Note: no node can run the pod, so it stays Pending: every node now hosts a pod labeled app=db and is therefore excluded by the hard anti-affinity rule.
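The filtering effect of the hard anti-affinity rule can be illustrated with a short Python sketch. The function `feasible_nodes` and its argument layout are hypothetical and only model the hard rule with exact label matches; they are not the scheduler's implementation.

```python
HOSTNAME = "kubernetes.io/hostname"


def feasible_nodes(node_labels, running_pods, selector, topology_key=HOSTNAME):
    """Return the nodes that the hard anti-affinity rule does not exclude.

    node_labels:  {node name: {label key: label value}}
    running_pods: list of (pod labels, node name) pairs
    selector:     labels an existing pod must carry to repel the new pod
    """
    # Collect the topology domains that already contain a repelling pod.
    blocked = set()
    for pod_labels, node in running_pods:
        if all(pod_labels.get(k) == v for k, v in selector.items()):
            domain = node_labels[node].get(topology_key)
            if domain is not None:
                blocked.add(domain)
    return [
        name for name, labels in node_labels.items()
        if labels.get(topology_key) not in blocked
    ]


names = [f"node0{i}.k8s.org" for i in range(1, 5)]
node_labels = {name: {HOSTNAME: name} for name in names}
selector = {"app": "db"}

# No app=db pod anywhere: every node remains a candidate.
no_pods = feasible_nodes(node_labels, [], selector)

# One app=db pod per node, as created by the DaemonSet: nothing is left.
daemonset_pods = [({"app": "db"}, name) for name in names]
all_blocked = feasible_nodes(node_labels, daemonset_pods, selector)

# app=db pods only on node01 and node03: node02 and node04 stay eligible.
partial = feasible_nodes(
    node_labels,
    [({"app": "db"}, "node01.k8s.org"), ({"app": "db"}, "node03.k8s.org")],
    selector,
)
print(no_pods, all_blocked, partial)
```

An empty result corresponds to the Pending pod in the session above.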

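The verification above can be summarized as follows. For both pod-to-node and pod-to-pod affinity, once a hard rule is defined the pod runs only on a node that satisfies it; if no node does, the pod stays Pending. With soft rules only, the pod prefers the nodes that match the terms with larger weights; if no node matches, the default scheduling policy picks the highest-scoring node. Anti-affinity follows the same logic, except that a node matching the hard rule is excluded and a node matching a soft rule is avoided. One more caveat: in clusters with many nodes, pod-to-pod affinity rules should not be too fine-grained. Overly detailed rules make node filtering more expensive and can degrade scheduling performance for the whole cluster, so node affinity is the recommended choice in large clusters.

5. Taints and Tolerations

Taints apply to nodes and tolerations apply to pods. Their purpose is to improve how pods are distributed across the cluster: used together, they keep pods from being assigned to unsuitable nodes.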

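5.1 What are Taints and Tolerations

Taint:

- A node taint resembles a label or annotation on the node: all of them are metadata describing the node. A taint is written much like a label or annotation, as a key/value pair, but unlike a label it also carries an effect, which describes what the taint does.

- Affinity scheduling is a property of the pod that declares which kind of node the pod wants to run on. A taint is the reverse: it is a property of the node that lets the node actively repel pods.

Toleration:

- Kubernetes gives pods a matching property, the toleration. As the name suggests, a pod that wants to run on a tainted node must tolerate that node's taints; a pod with a matching toleration may (but is not required to) be scheduled onto nodes carrying the taint. Taints and tolerations are used together to keep pods off unsuitable nodes.

- A toleration matches a taint in one of two ways: equality matching or existence matching. With equality matching (operator Equal), the toleration's key, value and effect must all equal those of the taint. With existence matching (operator Exists), only key and effect are compared and value is ignored: the taint is tolerated as soon as key and effect agree.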
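The two matching modes can be written down as a small Python predicate. This is a sketch of the matching rules described above, not code from Kubernetes; `tolerates` is a hypothetical helper that takes plain dicts using the API field names key, operator, value and effect.

```python
def tolerates(toleration, taint):
    """Return True if a single toleration matches a single taint."""
    # An empty effect in the toleration matches every effect.
    if toleration.get("effect") and toleration["effect"] != taint.get("effect"):
        return False
    # An empty key (only meaningful together with Exists) matches every key.
    if toleration.get("key") and toleration["key"] != taint.get("key"):
        return False
    operator = toleration.get("operator") or "Equal"  # Equal is the default
    if operator == "Exists":
        return True  # the value is not compared
    if operator == "Equal":
        return toleration.get("value", "") == taint.get("value", "")
    return False  # unknown operator


taint = {"key": "test", "value": "test", "effect": "NoSchedule"}

equal_match = tolerates(
    {"key": "test", "operator": "Equal", "value": "test", "effect": "NoSchedule"}, taint
)
equal_wrong_value = tolerates(
    {"key": "test", "operator": "Equal", "value": "other", "effect": "NoSchedule"}, taint
)
exists_match = tolerates({"key": "test", "operator": "Exists", "effect": "NoSchedule"}, taint)
wrong_effect = tolerates({"key": "test", "operator": "Exists", "effect": "NoExecute"}, taint)
print(equal_match, equal_wrong_value, exists_match, wrong_effect)
```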

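Summary of the relationship between taints and tolerations:

Put simply, tainting a node makes every pod treat the node as contaminated, so pods are not scheduled onto it. If a pod declares a toleration whose content matches the taint on that node, the pod tolerates the contaminated node and can still be scheduled onto it.

Note: as the figure above shows, only pods that tolerate a node's taints can be scheduled to that node; pods that do not tolerate them are never scheduled there (unless the taint's effect is PreferNoSchedule).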

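5.2 Taints

The kubectl taint command sets taints on a node. Only the node name and the taint are required; several taints can be given at once, separated by spaces.

kubectl taint node [node] key=value:[effect]
kubectl taint node [node] key_1=value_1:[effect_1] ... key_n=value_n:[effect_n]

[node] is the node name. kubectl can taint any node, so the command does not have to be run on the machine itself.

key is an arbitrary, user-defined name.

[effect] sets what the taint does. Allowed values: [ NoSchedule | PreferNoSchedule | NoExecute ]

● NoSchedule: pods that do not tolerate the taint are never scheduled to the node.

● NoExecute: pods that do not tolerate the taint are not scheduled to the node, and pods already running on the node that do not tolerate it are evicted according to the rules below. This is useful for node failures and upgrades, when pods have to be moved off the node.

● PreferNoSchedule: the scheduler tries not to place pods that do not tolerate the taint on the node, but may still use the node when no better candidate is available.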

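NoExecute in detail

A taint with the NoExecute effect affects pods already running on the node as follows:

● A pod without a matching toleration is evicted immediately.

● A pod with a matching toleration and no tolerationSeconds value stays on the node indefinitely.

● A pod with a matching toleration and a tolerationSeconds value is evicted after the specified time.

Kubernetes 1.6 introduced an alpha feature that represents node problems as taints (currently only node unreachable and node not ready, corresponding to the NodeCondition "Ready" being Unknown and False).

With the TaintBasedEvictions feature enabled (add TaintBasedEvictions=true to the --feature-gates flag), the NodeController sets these taints on nodes automatically, and the normal eviction logic based on the Ready NodeCondition is disabled.

Note that when nodes fail, the system adds the taints in a rate-limited way in order to preserve the existing rate limiting of pod evictions. This prevents massive pod evictions in situations such as the master temporarily losing contact with the nodes. The feature works together with tolerationSeconds, which lets a pod define how long it stays on a failing node before being evicted.

The NoExecute effect is controlled by TaintBasedEvictions, a Beta feature that is enabled by default since Kubernetes 1.13. In short, NoExecute is useful when a node fails. When a node has a problem and must be taken offline for maintenance, first apply a NoExecute taint to evict the pods running on it, so that the scheduler places them on other nodes. Then remove the node from the cluster and repair it. Once the repair is done, add the node back to the cluster, remove the taint, and delete pods from other heavily loaded nodes so that they are rescheduled.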
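The three NoExecute cases listed above can be condensed into a few lines of Python. The helper `eviction_delay` is hypothetical and deliberately simplified: it assumes a single taint and receives the pod's matching toleration (or None) as input instead of computing the match itself.

```python
import math


def eviction_delay(matching_toleration):
    """Seconds a running pod may stay after a NoExecute taint appears.

    matching_toleration is the pod's toleration that matches the taint,
    or None when the pod has no such toleration.
    """
    if matching_toleration is None:
        return 0.0  # not tolerated: evicted right away
    seconds = matching_toleration.get("tolerationSeconds")
    if seconds is None:
        return math.inf  # tolerated without a time limit: never evicted
    return float(seconds)  # tolerated for a bounded time


untolerated = eviction_delay(None)
unbounded = eviction_delay({"key": "key1", "operator": "Exists", "effect": "NoExecute"})
bounded = eviction_delay(
    {"key": "key1", "operator": "Exists", "effect": "NoExecute", "tolerationSeconds": 3600}
)
print(untolerated, unbounded, bounded)
```

If the taint is removed before the delay has elapsed, the pod is not evicted at all; the sketch does not model that timer reset.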

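PreferNoSchedule use case

Use case: keeping workloads off the Kubernetes master nodes

The master nodes of a Kubernetes cluster are critical. A highly available cluster typically has three or more master nodes, and to keep them stable it is generally not recommended to schedule business pods onto them.

The following describes how we use taints and tolerations to ensure that the master nodes do not run workloads.

Our cluster has three master nodes, named k8s-01, k8s-02 and k8s-03.

To keep the cluster stable while still making use of the masters, we taint one node with node-role.kubernetes.io/master:NoSchedule and the other two with node-role.kubernetes.io/master:PreferNoSchedule:

kubectl taint nodes k8s-01 node-role.kubernetes.io/master=:NoSchedule
kubectl taint nodes k8s-02 node-role.kubernetes.io/master=:PreferNoSchedule
kubectl taint nodes k8s-03 node-role.kubernetes.io/master=:PreferNoSchedule

This guarantees that one of the three nodes never runs business pods under any circumstances, while the other two avoid them as long as the cluster has enough resources.

In addition, some non-business components deployed in the cluster, such as Kubernetes Dashboard, jaeger-collector, jaeger-query, Prometheus and kube-state-metrics, are placed on the master nodes by means of tolerations and pod affinity, while business pods run on ordinary worker nodes.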

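5.2.2 Built-in taints in Kubernetes

Since Kubernetes 1.6, the node controller sets taints on nodes automatically according to the state of the node.

The built-in taint keys are:

- node.kubernetes.io/not-ready: the node is not ready

- node.kubernetes.io/unreachable: the node cannot be reached

- node.kubernetes.io/unschedulable: the node is unschedulable

- node.kubernetes.io/out-of-disk: the node is out of disk space

- node.kubernetes.io/memory-pressure: the node is under memory pressure

- node.kubernetes.io/disk-pressure: the node is under disk pressure

- node.kubernetes.io/network-unavailable: the node's network is unavailable

- node.kubernetes.io/pid-pressure: the node is under PID pressure

- node.cloudprovider.kubernetes.io/uninitialized: the cloud node is not initialized

- node.cloudprovider.kubernetes.io/shutdown: the cloud node has been shut down

Through the DefaultTolerationSeconds admission controller, Kubernetes adds two default tolerations to every created pod, for node.kubernetes.io/not-ready and node.kubernetes.io/unreachable, with tolerationSeconds = 300. Users can override this default.

These automatically added tolerations let a pod stay on a node for 5 minutes after the node develops a problem.

The tolerationSeconds value set by the DefaultTolerationSeconds admission controller can also be specified by the user.

For details, see: kubernetes/admission.go at master · kubernetes/kubernetes · GitHub

DaemonSets are another default behavior.

All pods created by a DaemonSet get two tolerations:

node.kubernetes.io/unreachable and node.kubernetes.io/not-ready (named node.alpha.kubernetes.io/unreachable and node.alpha.kubernetes.io/notReady in early releases), with effect = NoExecute and no tolerationSeconds.

This ensures that DaemonSet pods are never evicted when a node becomes unreachable or not ready.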

5.2.3 Tainting example

Add the taint test=test:NoSchedule to node01 (node name node01.k8s.org)

[root@master01 ~]# kubectl taint node node01.k8s.org test=test:NoSchedule

node/node01.k8s.org tainted

[root@master01 ~]#

View the node's taints

[root@master01 ~]# kubectl describe node node01.k8s.org |grep Taint

Taints: test=test:NoSchedule

[root@master01 ~]#

5.2.4 Removing a taint

Remove a taint: kubectl taint node [node] key:[effect]-

[root@master01 ~]# kubectl describe node node01.k8s.org |grep Taint

Taints: test=test:NoSchedule

[root@master01 ~]# kubectl taint node node01.k8s.org test:NoSchedule-

node/node01.k8s.org untainted

[root@master01 ~]# kubectl describe node node01.k8s.org |grep Taint

Taints: <none>

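[root@master01 ~]#

Note: a taint can be removed by giving its key and effect, or by appending "-" directly to the key, which removes every taint with that key. A node cannot carry two taints with the same key and effect but different values, so combinations such as key01=value01:NoSchedule together with key01=value02:NoSchedule do not occur.

5.3 Tolerations

5.3.1 Toleration configuration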

pod.spec.tolerations

Note: a node cannot carry two taints with the same key and effect but different values.

# Tolerates only the taint key1=value1:NoSchedule
tolerations:
- key: "key1"
  operator: "Equal"
  value: "value1"
  effect: "NoSchedule"

# Tolerates only the taint key1=value1:NoExecute, for at most 3600 seconds
tolerations:
- key: "key1"
  operator: "Equal"
  value: "value1"
  effect: "NoExecute"
  tolerationSeconds: 3600

# Tolerates any taint with key key2 and effect NoSchedule, whatever its value (for example key2=value1:NoSchedule or key2=value2:NoSchedule)
# With operator Exists the value is not compared, so it is left out
tolerations:
- key: "key2"
  operator: "Exists"
  effect: "NoSchedule"

● key and effect must match the taint set on the node; whether value must also match depends on operator.

● tolerationSeconds describes how long the pod may keep running on the node once it is due for eviction. It is used together with effect NoExecute; if the taint is removed during the waiting period, the pod is not evicted.

● With operator Equal, the toleration's key must equal the taint's key and its value must equal the taint's value; otherwise the taint is not tolerated. If operator is omitted, it defaults to Equal. With operator Exists the value is not compared and must be left empty: the API server rejects a toleration that combines Exists with a non-empty value.

1. An empty key with operator Exists matches every key and value, so the pod tolerates all taints (and may therefore also be deployed on master nodes):

# No key, value or effect: the toleration matches every taint
tolerations:
- operator: "Exists"

# No key or value, effect set: the toleration matches every taint whose effect is NoSchedule
tolerations:
- operator: "Exists"
  effect: "NoSchedule"

Adding value: "value1" to either of these tolerations is not valid, because Exists requires an empty value.

2. When effect is omitted, the toleration matches every effect. With operator Equal, any taint with the given key=value is tolerated; with operator Exists, any taint with the given key is tolerated.

# Exists with a key and no effect: tolerates every taint whose key is "key", whatever its value or effect
tolerations:
- key: "key"
  operator: "Exists"

# Equal with no effect: tolerates the taint key1=value1 with any effect
tolerations:
- key: "key1"
  operator: "Equal"
  value: "value1"

3. When there are several masters, the following setting (not yet tested) avoids wasting their resources by marking the node Node-Name as one to avoid when possible:

kubectl taint nodes Node-Name node-role.kubernetes.io/master=:PreferNoSchedule

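4. Multiple taints and multiple tolerations

A node can carry several taints, and a pod can declare several tolerations. Their effect on scheduling and execution falls into the following cases:

- If at least one taint with effect == NoSchedule is not tolerated by the pod, the pod is not scheduled to the node.

- If all taints with effect == NoSchedule are tolerated, but at least one taint with effect == PreferNoSchedule is not, Kubernetes tries not to schedule the pod to the node.

- If at least one taint with effect == NoExecute is not tolerated, the pod is not scheduled to the node, and pods already running on the node that do not tolerate the taint are evicted.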
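The three cases can be sketched in Python as a classification of one node for one pod. Everything here is illustrative: `tolerates`, `untolerated` and `placement` are hypothetical helpers, and the result strings are labels chosen for this sketch rather than Kubernetes terminology.

```python
def tolerates(toleration, taint):
    """Compact matching rule: empty key or effect in the toleration matches anything."""
    if toleration.get("effect") and toleration["effect"] != taint["effect"]:
        return False
    if toleration.get("key") and toleration["key"] != taint["key"]:
        return False
    if toleration.get("operator", "Equal") == "Exists":
        return True
    return toleration.get("value", "") == taint.get("value", "")


def untolerated(taints, tolerations):
    """Taints of the node that none of the pod's tolerations match."""
    return [t for t in taints if not any(tolerates(tol, t) for tol in tolerations)]


def placement(taints, tolerations):
    """Classify a node for a pod: 'filtered', 'avoided' or 'allowed'."""
    effects = {t["effect"] for t in untolerated(taints, tolerations)}
    if "NoSchedule" in effects or "NoExecute" in effects:
        return "filtered"  # the node is ruled out during filtering
    if "PreferNoSchedule" in effects:
        return "avoided"  # the node is still possible but ranked lower
    return "allowed"


# A node carrying both test:NoSchedule and test:PreferNoSchedule.
node_taints = [
    {"key": "test", "value": "", "effect": "NoSchedule"},
    {"key": "test", "value": "", "effect": "PreferNoSchedule"},
]

only_prefer = placement(
    node_taints, [{"key": "test", "operator": "Exists", "effect": "PreferNoSchedule"}]
)
only_noschedule = placement(
    node_taints, [{"key": "test", "operator": "Exists", "effect": "NoSchedule"}]
)
everything = placement(node_taints, [{"operator": "Exists"}])
print(only_prefer, only_noschedule, everything)
```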

5.3.2 Examples

Example: create a pod that tolerates the node taint node-role.kubernetes.io/master:NoSchedule

[root@master01 ~]# cat pod-demo-taints.yaml
apiVersion: v1
kind: Pod
metadata:
  name: redis-demo
  labels:
    app: db
spec:
  containers:
  - name: redis
    image: redis:4-alpine
    ports:
    - name: redis
      containerPort: 6379
  tolerations:
  - key: node-role.kubernetes.io/master
    operator: Exists
    effect: NoSchedule
[root@master01 ~]#

Note: a pod's tolerations are declared in the tolerations field, which is a list. key names the taint's key and must equal the key of the node's taint. operator specifies how the toleration matches the taint and has only two values, Equal and Exists. effect names the effect to tolerate, one of NoSchedule, PreferNoSchedule and NoExecute, and must equal the taint's effect. This manifest says that the pod redis-demo tolerates the node taint node-role.kubernetes.io/master:NoSchedule.

Apply the manifest

[root@master01 ~]# kubectl apply -f pod-demo-taints.yaml
pod/redis-demo created
[root@master01 ~]# kubectl get pods -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
redis-demo 1/1 Running 0 7s 10.244.4.35 node04.k8s.org <none> <none>
[root@master01 ~]#

Note: the pod runs on node04. A toleration only means that the pod may run on nodes carrying the taint, not that it must; it can also run on nodes without taints.

Verification: delete the pod, add the taint test:NoSchedule to node01, node02, node03 and node04, apply the manifest again, and see whether the pod still runs.

[root@master01 ~]# kubectl delete -f pod-demo-taints.yaml
pod "redis-demo" deleted
[root@master01 ~]# kubectl taint node node01.k8s.org test:NoSchedule
node/node01.k8s.org tainted
[root@master01 ~]# kubectl taint node node02.k8s.org test:NoSchedule
node/node02.k8s.org tainted
[root@master01 ~]# kubectl taint node node03.k8s.org test:NoSchedule
node/node03.k8s.org tainted
[root@master01 ~]# kubectl taint node node04.k8s.org test:NoSchedule
node/node04.k8s.org tainted
[root@master01 ~]# kubectl describe node node01.k8s.org |grep Taints
Taints: test:NoSchedule
[root@master01 ~]# kubectl describe node node02.k8s.org |grep Taints
Taints: test:NoSchedule
[root@master01 ~]# kubectl describe node node03.k8s.org |grep Taints
Taints: test:NoSchedule
[root@master01 ~]# kubectl describe node node04.k8s.org |grep Taints
Taints: test:NoSchedule
[root@master01 ~]# kubectl apply -f pod-demo-taints.yaml
pod/redis-demo created
[root@master01 ~]# kubectl get pods -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
redis-demo 1/1 Running 0 18s 10.244.0.14 master01.k8s.org <none> <none>
[root@master01 ~]#

Note: the pod is now scheduled to the master node. It tolerates the master's taint but not the taint on the worker nodes, so the master is the only node it can run on.

Remove the toleration from the pod, apply the manifest again, and see whether the pod still runs.

[root@master01 ~]# kubectl delete pod redis-demo
pod "redis-demo" deleted
[root@master01 ~]# cat pod-demo-taints.yaml
apiVersion: v1
kind: Pod
metadata:
  name: redis-demo
  labels:
    app: db
spec:
  containers:
  - name: redis
    image: redis:4-alpine
    ports:
    - name: redis
      containerPort: 6379
[root@master01 ~]# kubectl apply -f pod-demo-taints.yaml
pod/redis-demo created
[root@master01 ~]# kubectl get pods -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
redis-demo 0/1 Pending 0 6s <none> <none> <none> <none>
[root@master01 ~]#

Note: the pod is Pending because it tolerates none of the node taints; every node repels it.

Example: a toleration with equality matching

[root@master01 ~]# cat pod-demo-taints.yaml
apiVersion: v1
kind: Pod
metadata:
  name: redis-demo
  labels:
    app: db
spec:
  containers:
  - name: redis
    image: redis:4-alpine
    ports:
    - name: redis
      containerPort: 6379
  tolerations:
  - key: test
    operator: Equal
    value: test
    effect: NoSchedule
[root@master01 ~]#

Note: a toleration with equality matching must specify the value of the taint.

Delete the existing pod and apply the manifest

[root@master01 ~]# kubectl delete pod redis-demo
pod "redis-demo" deleted
[root@master01 ~]# kubectl apply -f pod-demo-taints.yaml
pod/redis-demo created
[root@master01 ~]# kubectl get pods -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
redis-demo 0/1 Pending 0 4s <none> <none> <none> <none>
[root@master01 ~]#

Note: after the manifest is applied the pod is Pending. No node carries a taint that the toleration matches (the nodes have test:NoSchedule with an empty value, while the toleration requires the value test), so the pod cannot be scheduled.

Verification: change the taint on node01 to test=test:NoSchedule

[root@master01 ~]# kubectl describe node node01.k8s.org |grep Taints
Taints: test:NoSchedule
[root@master01 ~]# kubectl taint node node01.k8s.org test=test:NoSchedule --overwrite
node/node01.k8s.org modified
[root@master01 ~]# kubectl describe node node01.k8s.org |grep Taints
Taints: test=test:NoSchedule
[root@master01 ~]# kubectl get pods -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
redis-demo 1/1 Running 0 4m46s 10.244.1.44 node01.k8s.org <none> <none>
[root@master01 ~]#

Note: once the taint on node01 is changed to test=test:NoSchedule, the pod is scheduled to node01.

Verification: change the taint on node01 back to test:NoSchedule and see whether the pod is evicted.

[root@master01 ~]# kubectl taint node node01.k8s.org test:NoSchedule --overwrite
node/node01.k8s.org modified
[root@master01 ~]# kubectl describe node node01.k8s.org |grep Taints
Taints: test:NoSchedule
[root@master01 ~]# kubectl get pods -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
redis-demo 1/1 Running 0 7m27s 10.244.1.44 node01.k8s.org <none> <none>
[root@master01 ~]#

Note: after the taint is changed to test:NoSchedule the pod is not evicted. A taint with the NoSchedule effect only acts at scheduling time and does not affect pods that are already running.

Example: a pod toleration for test:PreferNoSchedule

[root@master01 ~]# cat pod-demo-taints.yaml
apiVersion: v1
kind: Pod
metadata:
  name: redis-demo1
  labels:
    app: db
spec:
  containers:
  - name: redis
    image: redis:4-alpine
    ports:
    - name: redis
      containerPort: 6379
  tolerations:
  - key: test
    operator: Exists
    effect: PreferNoSchedule
[root@master01 ~]#

Apply the manifest

[root@master01 ~]# kubectl apply -f pod-demo-taints.yaml
pod/redis-demo1 created
[root@master01 ~]# kubectl get pods -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
redis-demo 1/1 Running 0 11m 10.244.1.44 node01.k8s.org <none> <none>
redis-demo1 0/1 Pending 0 6s <none> <none> <none> <none>
[root@master01 ~]#

Note: the pod is Pending. Every worker node carries the taint test:NoSchedule, which a toleration for the PreferNoSchedule effect does not cover, so no node can accept the pod.

Add the taint test:PreferNoSchedule to node02

[root@master01 ~]# kubectl describe node node02.k8s.org |grep Taints
Taints: test:NoSchedule
[root@master01 ~]# kubectl taint node node02.k8s.org test:PreferNoSchedule
node/node02.k8s.org tainted
[root@master01 ~]# kubectl describe node node02.k8s.org |grep -A 1 Taints
Taints: test:NoSchedule
 test:PreferNoSchedule
[root@master01 ~]# kubectl get pods -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
redis-demo 1/1 Running 0 18m 10.244.1.44 node01.k8s.org <none> <none>
redis-demo1 0/1 Pending 0 6m21s <none> <none> <none> <none>
[root@master01 ~]#

Note: node02 now carries two taints, and the pod is still not running. node02 still has the test:NoSchedule taint, which the pod's toleration does not cover.

Verification: change the taints on node01, node03 and node04 to test:PreferNoSchedule, change the pod's toleration to test:NoSchedule, apply the manifest again, and see how the pod is scheduled.

[root@master01 ~]# kubectl taint node node01.k8s.org test:NoSchedule-
node/node01.k8s.org untainted
[root@master01 ~]# kubectl taint node node03.k8s.org test:NoSchedule-
node/node03.k8s.org untainted
[root@master01 ~]# kubectl taint node node04.k8s.org test:NoSchedule-
node/node04.k8s.org untainted
[root@master01 ~]# kubectl taint node node01.k8s.org test:PreferNoSchedule
node/node01.k8s.org tainted
[root@master01 ~]# kubectl taint node node03.k8s.org test:PreferNoSchedule
node/node03.k8s.org tainted
[root@master01 ~]# kubectl taint node node04.k8s.org test:PreferNoSchedule
node/node04.k8s.org tainted
[root@master01 ~]# kubectl describe node node01.k8s.org |grep -A 1 Taints
Taints: test:PreferNoSchedule
Unschedulable: false
[root@master01 ~]# kubectl describe node node02.k8s.org |grep -A 1 Taints
Taints: test:NoSchedule
 test:PreferNoSchedule
[root@master01 ~]# kubectl describe node node03.k8s.org |grep -A 1 Taints
Taints: test:PreferNoSchedule
Unschedulable: false
[root@master01 ~]# kubectl describe node node04.k8s.org |grep -A 1 Taints
Taints: test:PreferNoSchedule
Unschedulable: false
[root@master01 ~]# kubectl get pods -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
redis-demo 1/1 Running 0 31m 10.244.1.44 node01.k8s.org <none> <none>
redis-demo1 1/1 Running 0 19m 10.244.1.45 node01.k8s.org <none> <none>
[root@master01 ~]# kubectl delete pod --all
pod "redis-demo" deleted
pod "redis-demo1" deleted
[root@master01 ~]# cat pod-demo-taints.yaml
apiVersion: v1
kind: Pod
metadata:
  name: redis-demo1
  labels:
    app: db
spec:
  containers:
  - name: redis
    image: redis:4-alpine
    ports:
    - name: redis
      containerPort: 6379
  tolerations:
  - key: test
    operator: Exists
    effect: NoSchedule
[root@master01 ~]# kubectl apply -f pod-demo-taints.yaml
pod/redis-demo1 created
[root@master01 ~]# kubectl get pods -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
redis-demo1 1/1 Running 0 5s 10.244.4.36 node04.k8s.org <none> <none>
[root@master01 ~]#

Note: once the test:NoSchedule taints were removed from node01, node03 and node04, the pending pod redis-demo1 was scheduled to node01, because node01's taint was removed first. After the pod's toleration was changed to test:NoSchedule and the manifest applied again, the pod was scheduled to node04. node01, node03 and node04 now carry only the test:PreferNoSchedule taint, so for these nodes it matters little whether the pod declares tolerations. Other taints aside, the scheduler first prefers nodes without a PreferNoSchedule taint; if every node has one, the default scheduling algorithm decides.

Example: a pod toleration for test:NoExecute

[root@master01 ~]# cat pod-demo-taints.yaml
apiVersion: v1
kind: Pod
metadata:
  name: redis-demo2
  labels:
    app: db
spec:
  containers:
  - name: redis
    image: redis:4-alpine
    ports:
    - name: redis
      containerPort: 6379
  tolerations:
  - key: test
    operator: Exists
    effect: NoExecute
[root@master01 ~]#

Apply the manifest

[root@master01 ~]# kubectl apply -f pod-demo-taints.yaml
pod/redis-demo2 created
[root@master01 ~]# kubectl get pods -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
redis-demo1 1/1 Running 0 35m 10.244.4.36 node04.k8s.org <none> <none>
redis-demo2 1/1 Running 0 5s 10.244.4.38 node04.k8s.org <none> <none>
[root@master01 ~]#

Note: the pod is scheduled to node04. A PreferNoSchedule taint is only a soft restriction, so a pod whose toleration names the NoExecute effect can still be placed on a node tainted with PreferNoSchedule.

Verification: change the taint on all worker nodes to test:NoSchedule, delete the existing pods, apply the manifest again, and see whether the pod still runs.

[root@master01 ~]# kubectl taint node node01.k8s.org test-
node/node01.k8s.org untainted
[root@master01 ~]# kubectl taint node node02.k8s.org test-
node/node02.k8s.org untainted
[root@master01 ~]# kubectl taint node node03.k8s.org test-
node/node03.k8s.org untainted
[root@master01 ~]# kubectl taint node node04.k8s.org test-
node/node04.k8s.org untainted
[root@master01 ~]# kubectl taint node node01.k8s.org test:NoSchedule
node/node01.k8s.org tainted
[root@master01 ~]# kubectl taint node node02.k8s.org test:NoSchedule
node/node02.k8s.org tainted
[root@master01 ~]# kubectl taint node node03.k8s.org test:NoSchedule
node/node03.k8s.org tainted
[root@master01 ~]# kubectl taint node node04.k8s.org test:NoSchedule
node/node04.k8s.org tainted
[root@master01 ~]# kubectl describe node node01.k8s.org |grep -A 1 Taints
Taints: test:NoSchedule
Unschedulable: false
[root@master01 ~]# kubectl describe node node02.k8s.org |grep -A 1 Taints
Taints: test:NoSchedule
Unschedulable: false
[root@master01 ~]# kubectl describe node node03.k8s.org |grep -A 1 Taints
Taints: test:NoSchedule
Unschedulable: false
[root@master01 ~]# kubectl describe node node04.k8s.org |grep -A 1 Taints
Taints: test:NoSchedule
Unschedulable: false
[root@master01 ~]# kubectl delete pod --all
pod "redis-demo1" deleted
pod "redis-demo2" deleted
[root@master01 ~]# kubectl apply -f pod-demo-taints.yaml
pod/redis-demo2 created
[root@master01 ~]# kubectl get pods -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
redis-demo2 0/1 Pending 0 6s <none> <none> <none> <none>
[root@master01 ~]#

Note: the pod is Pending, which shows that a toleration for the NoExecute effect does not cover a taint with the NoSchedule effect.

Delete the pod, change the taint on all nodes to test:NoExecute, change the pod's toleration to NoSchedule, then apply the manifest and see how the pod is scheduled.

[root@master01 ~]# kubectl delete pod --all
pod "redis-demo2" deleted
[root@master01 ~]# kubectl taint node node01.k8s.org test-
node/node01.k8s.org untainted
[root@master01 ~]# kubectl taint node node02.k8s.org test-
node/node02.k8s.org untainted
[root@master01 ~]# kubectl taint node node03.k8s.org test-
node/node03.k8s.org untainted
[root@master01 ~]# kubectl taint node node04.k8s.org test-
node/node04.k8s.org untainted
[root@master01 ~]# kubectl taint node node01.k8s.org test:NoExecute
node/node01.k8s.org tainted
[root@master01 ~]# kubectl taint node node02.k8s.org test:NoExecute
node/node02.k8s.org tainted
[root@master01 ~]# kubectl taint node node03.k8s.org test:NoExecute
node/node03.k8s.org tainted
[root@master01 ~]# kubectl taint node node04.k8s.org test:NoExecute
node/node04.k8s.org tainted
[root@master01 ~]# kubectl describe node node01.k8s.org |grep -A 1 Taints
Taints: test:NoExecute
Unschedulable: false
[root@master01 ~]# kubectl describe node node02.k8s.org |grep -A 1 Taints
Taints: test:NoExecute
Unschedulable: false
[root@master01 ~]# kubectl describe node node03.k8s.org |grep -A 1 Taints
Taints: test:NoExecute
Unschedulable: false
[root@master01 ~]# kubectl describe node node04.k8s.org |grep -A 1 Taints
Taints: test:NoExecute
Unschedulable: false
[root@master01 ~]# cat pod-demo-taints.yaml
apiVersion: v1
kind: Pod
metadata:
  name: redis-demo2
  labels:
    app: db
spec:
  containers:
  - name: redis
    image: redis:4-alpine
    ports:
    - name: redis
      containerPort: 6379
  tolerations:
  - key: test
    operator: Exists
    effect: NoSchedule
[root@master01 ~]# kubectl apply -f pod-demo-taints.yaml
pod/redis-demo2 created
[root@master01 ~]# kubectl get pods -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
redis-demo2 0/1 Pending 0 8s <none> <none> <none> <none>
[root@master01 ~]#

Note: this shows that a toleration for the NoSchedule effect does not cover a taint with the NoExecute effect either.

删除pod,修改对应pod的容忍度为test:NoExecute

[root@master01 ~]# kubectl get pods -o wide NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES redis-demo2 0/1 Pending 0 5m5s <none> <none> <none> <none> [root@master01 ~]# kubectl delete pod --all pod "redis-demo2" deleted [root@master01 ~]# cat pod-demo-taints.yaml apiVersion: v1 kind: Pod metadata: name: redis-demo2 labels: app: db spec: containers: - name: redis image: redis:4-alpine ports: - name: redis containerPort: 6379 tolerations: - key: test operator: Exists effect: NoExecute [root@master01 ~]# kubectl apply -f pod-demo-taints.yaml pod/redis-demo2 created [root@master01 ~]# kubectl get pods -o wide NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES redis-demo2 1/1 Running 0 6s 10.244.4.43 node04.k8s.org <none> <none> [root@master01 ~]#修改node04节点污点为test:NoSchedule,看看对应pod是否可以正常运行?

    [root@master01 ~]# kubectl get pods -o wide
    NAME          READY   STATUS    RESTARTS   AGE     IP            NODE             NOMINATED NODE   READINESS GATES
    redis-demo2   1/1     Running   0          4m38s   10.244.4.43   node04.k8s.org   <none>           <none>
    [root@master01 ~]# kubectl taint node node04.k8s.org test-
    node/node04.k8s.org untainted
    [root@master01 ~]# kubectl get pods -o wide
    NAME          READY   STATUS    RESTARTS   AGE    IP            NODE             NOMINATED NODE   READINESS GATES
    redis-demo2   1/1     Running   0          8m2s   10.244.4.43   node04.k8s.org   <none>           <none>
    [root@master01 ~]# kubectl taint node node04.k8s.org test:NoSchedule
    node/node04.k8s.org tainted
    [root@master01 ~]# kubectl describe node node04.k8s.org |grep -A 1 Taints
    Taints:             test:NoSchedule
    Unschedulable:      false
    [root@master01 ~]# kubectl get pods -o wide
    NAME          READY   STATUS    RESTARTS   AGE     IP            NODE             NOMINATED NODE   READINESS GATES
    redis-demo2   1/1     Running   0          8m25s   10.244.4.43   node04.k8s.org   <none>           <none>
    [root@master01 ~]#

Note: changing the taint effect from NoExecute to NoSchedule does not evict pods that are already running on the node.

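Change the manifest so that it defines two new pods, redis-demo3 and redis-demo4, each with the toleration test:NoSchedule, and apply it again: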

    [root@master01 ~]# cat pod-demo-taints.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: redis-demo3
      labels:
        app: db
    spec:
      containers:
      - name: redis
        image: redis:4-alpine
        ports:
        - name: redis
          containerPort: 6379
      tolerations:
      - key: test
        operator: Exists
        effect: NoSchedule
    ---
    apiVersion: v1
    kind: Pod
    metadata:
      name: redis-demo4
      labels:
        app: db
    spec:
      containers:
      - name: redis
        image: redis:4-alpine
        ports:
        - name: redis
          containerPort: 6379
      tolerations:
      - key: test
        operator: Exists
        effect: NoSchedule
    [root@master01 ~]# kubectl apply -f pod-demo-taints.yaml
    pod/redis-demo3 created
    pod/redis-demo4 created
    [root@master01 ~]# kubectl get pods -o wide
    NAME          READY   STATUS    RESTARTS   AGE   IP            NODE             NOMINATED NODE   READINESS GATES
    redis-demo2   1/1     Running   0          14m   10.244.4.43   node04.k8s.org   <none>           <none>
    redis-demo3   1/1     Running   0          4s    10.244.4.45   node04.k8s.org   <none>           <none>
    redis-demo4   1/1     Running   0          4s    10.244.4.46   node04.k8s.org   <none>           <none>
    [root@master01 ~]#

Note: both new pods were scheduled onto node04. Their toleration test:NoSchedule matches only the test:NoSchedule taint on node04; node01 through node03 still carry test:NoExecute, which these pods do not tolerate.

Change the taint on node04 to NoExecute and check whether the pods are evicted:

    [root@master01 ~]# kubectl get pods -o wide
    NAME          READY   STATUS    RESTARTS   AGE     IP            NODE             NOMINATED NODE   READINESS GATES
    redis-demo2   1/1     Running   0          17m     10.244.4.43   node04.k8s.org   <none>           <none>
    redis-demo3   1/1     Running   0          2m32s   10.244.4.45   node04.k8s.org   <none>           <none>
    redis-demo4   1/1     Running   0          2m32s   10.244.4.46   node04.k8s.org   <none>           <none>
    [root@master01 ~]# kubectl describe node node04.k8s.org |grep -A 1 Taints
    Taints:             test:NoSchedule
    Unschedulable:      false
    [root@master01 ~]# kubectl taint node node04.k8s.org test-
    node/node04.k8s.org untainted
    [root@master01 ~]# kubectl taint node node04.k8s.org test:NoExecute
    node/node04.k8s.org tainted
    [root@master01 ~]# kubectl get pods -o wide
    NAME          READY   STATUS        RESTARTS   AGE     IP            NODE             NOMINATED NODE   READINESS GATES
    redis-demo2   1/1     Running       0          18m     10.244.4.43   node04.k8s.org   <none>           <none>
    redis-demo3   0/1     Terminating   0          3m43s   10.244.4.45   node04.k8s.org   <none>           <none>
    redis-demo4   0/1     Terminating   0          3m43s   10.244.4.46   node04.k8s.org   <none>           <none>
    [root@master01 ~]# kubectl get pods -o wide
    NAME          READY   STATUS    RESTARTS   AGE   IP            NODE             NOMINATED NODE   READINESS GATES
    redis-demo2   1/1     Running   0          18m   10.244.4.43   node04.k8s.org   <none>           <none>
    [root@master01 ~]#

Note: after the taint on node04 was changed to test:NoExecute, the pods whose toleration effect is not NoExecute were evicted. A taint with effect NoExecute evicts every pod on the node that does not tolerate it.
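
The eviction decision in this step can be reproduced with a few lines of Python. This is only a rough model under a stated assumption: every toleration uses operator Exists with an explicit key and effect, as the manifests in this article do. The function name pods_to_evict and the dictionary layout are hypothetical and chosen for illustration.

```python
def pods_to_evict(node_taints, pods):
    """Return names of pods that lack a toleration for some NoExecute taint.

    Assumes every toleration uses operator Exists with an explicit key and
    effect, as in the manifests in this article.
    """
    no_execute_keys = {t["key"] for t in node_taints if t["effect"] == "NoExecute"}
    evicted = []
    for name, tolerations in pods.items():
        tolerated_keys = {
            tol["key"] for tol in tolerations if tol["effect"] == "NoExecute"
        }
        # A pod stays only if every NoExecute taint key is tolerated.
        if not no_execute_keys <= tolerated_keys:
            evicted.append(name)
    return evicted


# State of node04 after the taint was switched to test:NoExecute.
node04_taints = [{"key": "test", "effect": "NoExecute"}]

pods_on_node04 = {
    "redis-demo2": [{"key": "test", "operator": "Exists", "effect": "NoExecute"}],
    "redis-demo3": [{"key": "test", "operator": "Exists", "effect": "NoSchedule"}],
    "redis-demo4": [{"key": "test", "operator": "Exists", "effect": "NoSchedule"}],
}

print(pods_to_evict(node04_taints, pods_on_node04))
# ['redis-demo3', 'redis-demo4']
```

With a NoSchedule taint on the node instead, the same function returns an empty list, which matches the earlier observation that NoSchedule never evicts running pods.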

Create a Deployment whose pod template tolerates test:NoExecute, with the eviction delay (tolerationSeconds) set to 10 seconds:

    [root@master01 ~]# cat deploy-demo-taint.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: deploy-demo
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: redis
      template:
        metadata:
          labels:
            app: redis
        spec:
          containers:
          - name: redis
            image: redis:4-alpine
            ports:
            - name: redis
              containerPort: 6379
          tolerations:
          - key: test
            operator: Exists
            effect: NoExecute
            tolerationSeconds: 10
    [root@master01 ~]#

Note: the tolerationSeconds field sets the grace period before the pod is evicted. It can only be used in a toleration whose effect is NoExecute; it cannot be set for the other effects.
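
How tolerationSeconds turns into an eviction delay can be sketched as follows. This is a simplified model, not the controller's code: the function name eviction_delay is hypothetical, and the caller is assumed to pass only the tolerations that already match the node's NoExecute taints.

```python
from typing import Optional


def eviction_delay(matching_tolerations) -> Optional[int]:
    """Seconds a pod may stay on a node tainted NoExecute, or None for no limit.

    `matching_tolerations` holds the pod's tolerations that match the node's
    NoExecute taints. An empty list means the taint is not tolerated at all.
    """
    if not matching_tolerations:
        return 0  # not tolerated: evict immediately
    limits = [
        tol["tolerationSeconds"]
        for tol in matching_tolerations
        if tol.get("tolerationSeconds") is not None
    ]
    if not limits:
        return None  # no tolerationSeconds set: tolerate indefinitely
    return max(0, min(limits))  # the shortest limit wins; never negative


deploy_demo = [
    {"key": "test", "operator": "Exists", "effect": "NoExecute", "tolerationSeconds": 10}
]
redis_demo2 = [{"key": "test", "operator": "Exists", "effect": "NoExecute"}]

print(eviction_delay(deploy_demo))  # 10
print(eviction_delay(redis_demo2))  # None
```

The None result for redis-demo2 corresponds to a pod that tolerates the taint without a time limit, while the Deployment's pods get 10 seconds on a tainted node.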

Apply the manifest:

    [root@master01 ~]# kubectl apply -f deploy-demo-taint.yaml
    deployment.apps/deploy-demo created
    [root@master01 ~]# kubectl get pods -o wide -w
    NAME                           READY   STATUS              RESTARTS   AGE   IP            NODE             NOMINATED NODE   READINESS GATES
    deploy-demo-79b89f9847-9zk8j   1/1     Running             0          7s    10.244.2.71   node02.k8s.org   <none>           <none>
    deploy-demo-79b89f9847-h8zlc   1/1     Running             0          7s    10.244.3.61   node03.k8s.org   <none>           <none>
    deploy-demo-79b89f9847-shscr   1/1     Running             0          7s    10.244.1.62   node01.k8s.org   <none>           <none>
    redis-demo2                    1/1     Running             0          54m   10.244.4.43   node04.k8s.org   <none>           <none>
    deploy-demo-79b89f9847-h8zlc   1/1     Terminating         0          10s   10.244.3.61   node03.k8s.org   <none>           <none>
    deploy-demo-79b89f9847-shscr   1/1     Terminating         0          10s   10.244.1.62   node01.k8s.org   <none>           <none>
    deploy-demo-79b89f9847-2x8w6   0/1     Pending             0          0s    <none>        <none>           <none>           <none>
    deploy-demo-79b89f9847-2x8w6   0/1     Pending             0          0s    <none>        node03.k8s.org   <none>           <none>
    deploy-demo-79b89f9847-lhltv   0/1     Pending             0          0s    <none>        <none>           <none>           <none>
    deploy-demo-79b89f9847-9zk8j   1/1     Terminating         0          10s   10.244.2.71   node02.k8s.org   <none>           <none>
    deploy-demo-79b89f9847-2x8w6   0/1     ContainerCreating   0          0s    <none>        node03.k8s.org   <none>           <none>
    deploy-demo-79b89f9847-lhltv   0/1     Pending             0          0s    <none>        node02.k8s.org   <none>           <none>
    deploy-demo-79b89f9847-lhltv   0/1     ContainerCreating   0          0s    <none>        node02.k8s.org   <none>           <none>
    deploy-demo-79b89f9847-w8xjw   0/1     Pending             0          0s    <none>        <none>           <none>           <none>
    deploy-demo-79b89f9847-w8xjw   0/1     Pending             0          0s    <none>        node01.k8s.org   <none>           <none>
    deploy-demo-79b89f9847-w8xjw   0/1     ContainerCreating   0          0s    <none>        node01.k8s.org   <none>           <none>
    deploy-demo-79b89f9847-shscr   1/1     Terminating         0          10s   10.244.1.62   node01.k8s.org   <none>           <none>
    deploy-demo-79b89f9847-h8zlc   1/1     Terminating         0          10s   10.244.3.61   node03.k8s.org   <none>           <none>
    deploy-demo-79b89f9847-9zk8j   1/1     Terminating         0          10s   10.244.2.71   node02.k8s.org   <none>           <none>
    deploy-demo-79b89f9847-shscr   0/1     Terminating         0          11s   10.244.1.62   node01.k8s.org   <none>           <none>
    deploy-demo-79b89f9847-2x8w6   0/1     ContainerCreating   0          1s    <none>        node03.k8s.org   <none>           <none>
    deploy-demo-79b89f9847-lhltv   0/1     ContainerCreating   0          1s    <none>        node02.k8s.org   <none>           <none>
    deploy-demo-79b89f9847-w8xjw   0/1     ContainerCreating   0          1s    <none>        node01.k8s.org   <none>           <none>
    deploy-demo-79b89f9847-h8zlc   0/1     Terminating         0          11s   10.244.3.61   node03.k8s.org   <none>           <none>
    deploy-demo-79b89f9847-2x8w6   1/1     Running             0          1s    10.244.3.62   node03.k8s.org   <none>           <none>
    deploy-demo-79b89f9847-9zk8j   0/1     Terminating         0          11s   10.244.2.71   node02.k8s.org   <none>           <none>
    deploy-demo-79b89f9847-lhltv   1/1     Running             0          1s    10.244.2.72   node02.k8s.org   <none>           <none>
    deploy-demo-79b89f9847-w8xjw   1/1     Running             0          2s    10.244.1.63   node01.k8s.org   <none>           <none>
    deploy-demo-79b89f9847-h8zlc   0/1     Terminating         0          15s   10.244.3.61   node03.k8s.org   <none>           <none>
    deploy-demo-79b89f9847-h8zlc   0/1     Terminating         0          15s   10.244.3.61   node03.k8s.org   <none>           <none>
    ^C[root@master01 ~]#

Note: each pod runs on its node for only 10 seconds before it is evicted. Because the pods belong to a Deployment, replacements are created as soon as the evicted pods are terminated, and the cycle repeats.

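Summary: a taint with effect NoSchedule only rejects new pods and does not evict pods that are already running. A pod that tolerates the taint may be scheduled onto the node; a pod that does not tolerate it will never be scheduled there. A taint with effect PreferNoSchedule does not evict existing pods either; the scheduler avoids such a node for pods that do not tolerate the taint, but may still place them there when no other node is suitable. For a taint with effect NoExecute, a matching toleration without tolerationSeconds tolerates the taint indefinitely; if tolerationSeconds is set, the pod is evicted once that period expires. Pods that were scheduled onto the node earlier and do not tolerate NoExecute are evicted immediately when the node's taint effect becomes NoExecute.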

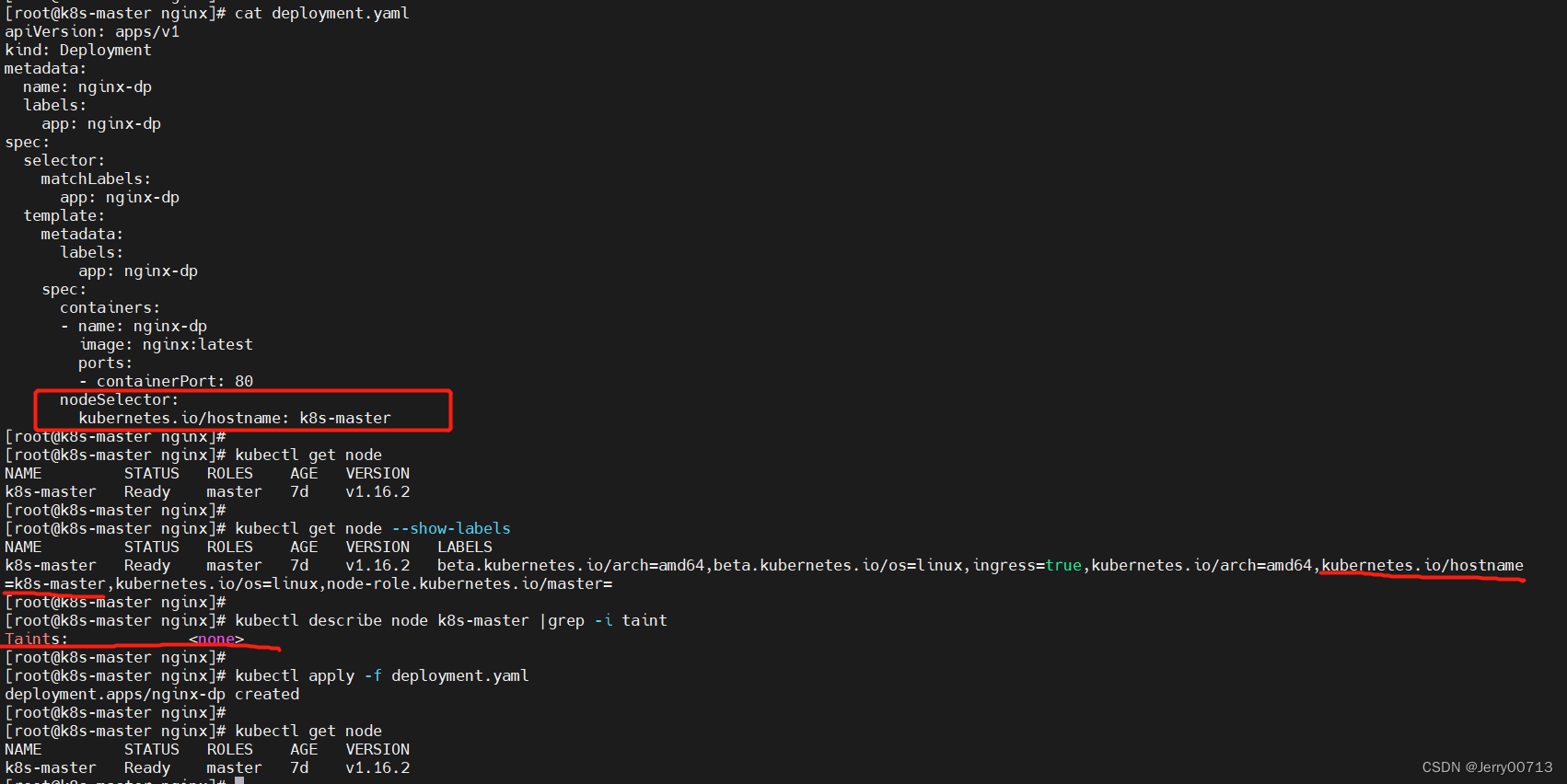

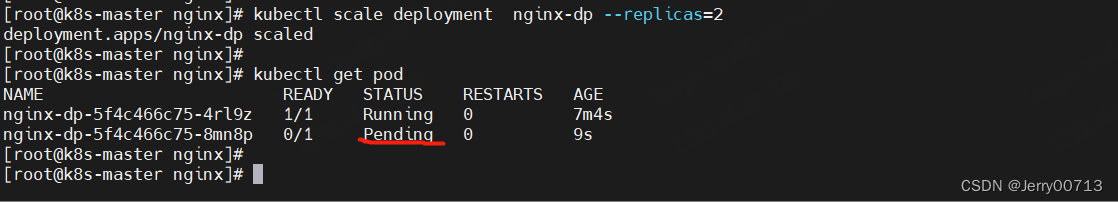

6. Taints and nodeSelector used together

When the node has no taints, the pod can be scheduled onto the node selected by nodeSelector.

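After a taint with effect NoSchedule is added to that node, the pod that is already running is not deleted, because the effect is not NoExecute. After scaling to 2 replicas, however, the new pod stays Pending: the API server has created the pod object, but it is still waiting for the scheduler to find a node for it.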

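Summary: when nodeName, nodeSelector, nodeAffinity, podAffinity and taints are all present, every one of them must be satisfied for the pod to run on a node. One caveat: nodeName bypasses kube-scheduler, so scheduler-side checks such as NoSchedule taints are not applied to a pod that sets it.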
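
The Pending pod in this section can be explained with a small feasibility check that combines nodeSelector and taints. The sketch below is a simplified model, not the scheduler's implementation: tolerations are assumed to use operator Exists with an explicit key and effect, affinity rules are left out, and the function name node_is_feasible as well as the label disktype=ssd are hypothetical values chosen for illustration.

```python
def node_is_feasible(node, pod):
    """Hard-constraint check for one node: nodeSelector and taints must both pass.

    Simplifications: tolerations are assumed to use operator Exists with an
    explicit key and effect; affinity rules are left out.
    """
    labels = node.get("labels", {})
    selector_ok = all(
        labels.get(key) == value
        for key, value in pod.get("nodeSelector", {}).items()
    )

    tolerated = {(t["key"], t["effect"]) for t in pod.get("tolerations", [])}
    taints_ok = all(
        (taint["key"], taint["effect"]) in tolerated
        for taint in node.get("taints", [])
        # PreferNoSchedule is only a preference, so it never blocks a node.
        if taint["effect"] in ("NoSchedule", "NoExecute")
    )
    return selector_ok and taints_ok


# Hypothetical node: carries the label the pod selects and a NoSchedule taint.
node = {
    "labels": {"disktype": "ssd"},
    "taints": [{"key": "test", "effect": "NoSchedule"}],
}

pod_without_toleration = {"nodeSelector": {"disktype": "ssd"}}
pod_with_toleration = {
    "nodeSelector": {"disktype": "ssd"},
    "tolerations": [{"key": "test", "operator": "Exists", "effect": "NoSchedule"}],
}

print(node_is_feasible(node, pod_without_toleration))  # False: the pod stays Pending
print(node_is_feasible(node, pod_with_toleration))     # True: both constraints pass
```

Matching the nodeSelector alone is not enough; the pod also needs a toleration for the node's NoSchedule taint before the node becomes a candidate.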
