K8S 资源控制器

1. 概述kubernetes 控制器会监听资源的 创建/更新/删除 事件,并触发 Reconcile 函数作为响应。整个调整过程被称作 “Reconcile Loop”(调谐循环) 或者 “Sync Loop“(同步循环)Reconcile 是一个使用资源对象的命名空间和资源对象名称来调用的函数,使得资源对象的实际状态与 资源清单中定义的状态保持一致。调用完成后,Reconcile 会将资源对象

1. 概述

kubernetes 控制器会监听资源的 创建/更新/删除 事件,并触发 Reconcile 函数作为响应。整个调整过程被称作 “Reconcile Loop”(调谐循环) 或者 “Sync Loop“(同步循环)

Reconcile 是一个使用资源对象的命名空间和资源对象名称来调用的函数,使得资源对象的实际状态与 资源清单中定义的状态保持一致。调用完成后,Reconcile 会将资源对象的状态更新为当前实际状态。

for {

desired := getDesiredState()

current := getCurrentState()

if current == desired {

// nothing to do

} else {

// change current to desired status

}

}

根据Pod的是否有管理者,分为两类:

- 自主式Pod:Pod退出,不会被再次创建,因为无管理者(资源控制器)。

- 控制器管理的Pod: 在控制器的生命周期里,始终要维持 Pod 的副本数目

2. ReplicaSet

作用:维持一组 Pod 副本的运行,保证一定数量的 Pod 在集群中正常运行,ReplicaSet 控制器会持续监听它说控制的这些 Pod 的运行状态,在 Pod 发送故障数量减少或者增加时会触发调谐过程,始终保持副本数量一定。

关键配置项:

.spec.replicas:pod 副本数量.spec.selector:Label Selector,用来匹配要控制的 Pod 标签,需要和 pod template 中的标签一致.spec.template:pod 模板,将 pod 的定义以模板的形式嵌入 ReplicaSet 中

# nginx-rs.yaml

apiVersion: apps/v1

kind: ReplicaSet

metadata:

name: nginx

spec:

replicas: 3

selector:

matchLabels:

app: web

template:

metadata:

labels:

app: web

spec:

containers:

- name: nginx

image: nginx

ports:

- containerPort: 80

$ kubectl get pod --show-labels

NAME READY STATUS RESTARTS AGE LABELS

nginx-75qzs 1/1 Running 0 13s app=web

nginx-m86nn 1/1 Running 0 13s app=web

nginx-spgp6 1/1 Running 0 13s app=web

$ kubectl label pod nginx-75qzs app=nginx --overwrite=true

pod/nginx-75qzs labeled

$ kubectl get pod --show-labels

NAME READY STATUS RESTARTS AGE LABELS

nginx-75qzs 1/1 Running 0 49s app=nginx # 不再受 rs 管理

nginx-d6brp 1/1 Running 0 8s app=web

nginx-m86nn 1/1 Running 0 49s app=web

nginx-spgp6 1/1 Running 0 49s app=web

# Pod 与 ReplicaSet 的关系: ownerReferences

$ kubectl get pod nginx-d6brp -o yaml

apiVersion: v1

kind: Pod

metadata:

...

name: nginx-d6brp

ownerReferences:

- apiVersion: apps/v1

blockOwnerDeletion: true

controller: true

kind: ReplicaSet

name: nginx

uid: adf0d8a6-282c-4741-9975-2a4310967058

...

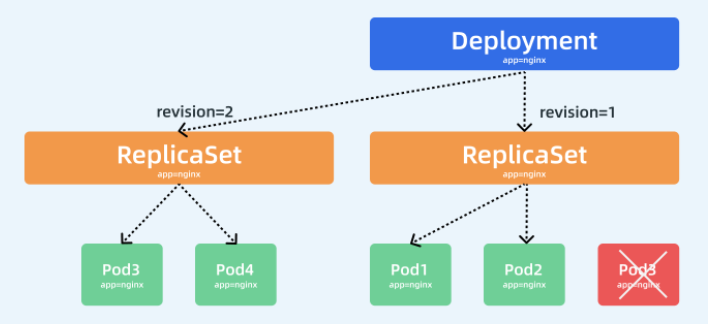

3. Deployment

作用:自主管理ReplicaSet,支持通过 滚动更新(Rolling Update) 方式来升级

- 滚动升级和回滚应用 (创建一个新的RS,新RS中Pod增1,旧RS的Pod减1)

- 扩容和缩容

- 暂停和继续 Deployment

# nginx-deploy.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

name: nginx-deploy

labels:

app: nginx

spec:

replicas: 3

selector:

matchLabels:

app: web

template:

metadata:

labels:

app: web

spec:

containers:

- name: nginx

image: nginx:1.14.2

ports:

- containerPort: 80

3.1 水平伸缩

水平扩展/收缩,通过 ReplicaSet 实现,即调整 replicas 副本数即可

$ kubectl scale deployment nginx-deploy --replicas 10

$ kubectl get rs

NAME DESIRED CURRENT READY AGE

nginx-deploy-f4fd8c4dc 10 10 5 4m57s

3.2 滚动更新

版本更新策略:默认25%替换

清理历史版本:可以通过设置 spec.revisionHistoryLimit 来指定 Deployment 最多保留多少个 revision 历史记录。默认保留所有的revision,如果该项设置为0,Deployment将不能回滚

# nginx-deploy.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

name: nginx-deploy

spec:

replicas: 3

selector:

matchLabels:

app: nginx

minReadySeconds: 5

strategy:

type: RollingUpdate

rollingUpdate:

maxSurge: 1

maxUnavailable: 1

template:

metadata:

labels:

app: nginx

spec:

containers:

- name: nginx

image: nginx:1.14.2

ports:

- containerPort: 80

更新策略:

minReadySeconds: 5 # 5s 后升级

strategy:

type: RollingUpdate # 更新策略:RollingUpdate(滚动更新,默认), Recreate(全部重新创建)

rollingUpdate:

maxSurge: 1 # 升级过程中最多可以比原先设置多1个Pod

maxUnavailable: 1 # 升级过程中最多1个Pod无法提供服务

# --record 记录版本升级操作命令详情

$ kubectl apply -f nginx-deploy.yaml --record

# 升级:镜像更新,会自动创建 RS

$ kubectl set image deployment/nginx-deploy nginx=nginx:1.17.9

# 升级:修改运行资源

$ kubectl set resources deployment/nginx-deploy -c=nginx --limits=cpu=200m,memory=512Mi

# 替换 RS 记录

$ kubectl get rs

NAME DESIRED CURRENT READY AGE

nginx-deploy-66b6c48dd5 0 0 0 7m26s

nginx-deploy-7895d56f4f 0 0 0 3m21s

nginx-deploy-8468f58985 3 3 2 19s

# 升级记录

$ kubectl rollout history deployment/nginx-deploy

deployment.apps/nginx-deploy

REVISION CHANGE-CAUSE

1 kubectl apply --filename=nginx-deploy.yaml --record=true

2 kubectl apply --filename=nginx-deploy.yaml --record=true

3 kubectl apply --filename=nginx-deploy.yaml --record=true

$ kubectl rollout history deployment nginx-deploy --revision=1 # 详细记录

deployment.apps/nginx-deploy with revision #3

Pod Template:

Labels: app=nginx

pod-template-hash=8468f58985

Annotations: kubernetes.io/change-cause: kubectl apply --filename=nginx-deploy.yaml --record=true

Containers:

nginx:

Image: nginx:1.17.9

Port: 80/TCP

Host Port: 0/TCP

Limits:

cpu: 200m

memory: 512Mi

Environment: <none>

Mounts: <none>

Volumes: <none>

# 回滚操作

$ kubectl rollout undo deployment/nginx-deploy # 回滚到最近一次, 即 reversion=2

$ kubectl rollout undo deployment/nginx-deploy --to-revision=3

# 升级/回滚状态

$ kubectl rollout status deployment/nginx-deploy

Waiting for deployment "nginx-deploy" rollout to finish: 2 of 3 updated replicas are available...

deployment "nginx-deploy" successfully rolled out

#####################################################

# 暂停,后续操作将不会立即重建Pod

$ kubectl rollout pause deployment/nginx-deploy

# 不会立即升级

$ kubectl set image deployment/nginx-deploy nginx=nginx:1.19.1

$ kubectl rollout status deployment/nginx-deploy

Waiting for deployment "nginx-deploy" rollout to finish: 0 out of 3 new replicas have been updated...

# 恢复,开始进行升级

$ kubectl rollout resume deployment/nginx-deploy

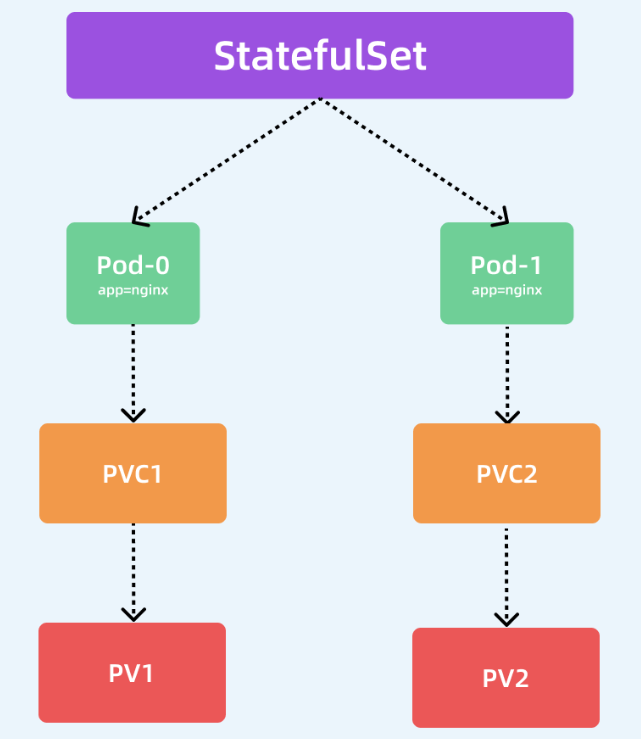

4. StatefulSet

4.1 有状态服务

无状态服务(Stateless Service):该服务运行的实例,不会再本地存储需要持久化的数据,并且多个实例对于同一个请求响应的结果是完全一致的

有状态服务(Stateful Service):该服务运行的实例,需要在本地持久化数据,比如MySQL等,其功能特性如下:

- 稳定的、唯一的网络标识符

- 稳定的、持久化的存储

- 有序的、优雅的部署和伸缩

- 有序的、优雅的删除和终止

- 有序的、自动滚动更新

4.2 无头服务

Headless Service 特性:

- 未配置 ClusterIP,不通过 SVC 分配的 VIP 负载均衡访问 Pod

- 直接以 DNS 记录方式解析到 Pod 对应的IP地址

DNS 记录:为 Pod 分配的一个唯一标识

<pod-name>.<svc-name>.<namespace>.svc.cluster.local

# nginx-headless-svc.yaml

apiVersion: v1

kind: Service

metadata:

name: nginx-headless

labels:

app: nginx

spec:

ports:

- name: http

port: 80

clusterIP: None

selector:

app: nginx

4.3 存储准备

首先在各个节点上创建相应的目录(mkdir -p /mnt/pv{1,2}),当然,可替换成nfs, ceph等网络存储

# nginx-pv.yaml

apiVersion: v1

kind: PersistentVolume

metadata:

name: pv1

spec:

capacity:

storage: 500Mi

accessModes:

- ReadWriteOnce

hostPath:

path: /mnt/pv1

---

apiVersion: v1

kind: PersistentVolume

metadata:

name: pv2

spec:

capacity:

storage: 500Mi

accessModes:

- ReadWriteOnce

hostPath:

path: /mnt/pv2

4.4 StatefulSet

作用:解决有状态服务的问题,可以确保部署和 scale 的顺序

典型的使用场景:

- 稳定的持久化存储,即 Pod 重新调度后,还能够访问到相同的持久化数据,基于PVC来实现

- 稳定的网络标识,即 Pod 重新调度后其 PodName 和 HostName 不变,基于 Headless Service (即没有Cluster IP的Service)来实现

- 有序部署、有序扩展,即Pod是有序的,在部署和扩展时,要按照定义的顺序依次进行 (即从 0 到N - 1, 在下一个Pod 运行前,所有 Pod 必须是 Running 和 Ready 状态),基于 Init Containers 来实现

- 有序收缩、有序删除(即从 N-1 到 0)

管理策略:spec.podManagementPolicy

- OrderedReady:按顺序性就绪,默认

- Parallel:并行就绪/终止

更新策略:.spec.updateStrategy.type

OnDelete: 只有手动删除旧的 Pod 才会创建新的 PodRollingUpdate:自动删除旧的 Pod 并创建新的Pod,如果更新发生了错误,这次“滚动更新”就会停止

部分更新:.spec.updateStrategy.rollingUpdate.partition ,SatefulSet 的 Pod 中序号大于或等于 partition 的 Pod 会在 StatefulSet 的模板更新后进行滚动升级,而其余的 Pod 保持不变,该功能可实现灰度发布

现实问题:实际项目中,很少会去直接通过 StatefulSet 来部署有状态服务,因为管理难度太大。对于某些特定的服务,可能通过更加高级的 Operator 来部署,如 etcd-operator,prometheus-operator 等,它们都能够很好的来管理有状态的服务,因为对有状态的应用的数据恢复、故障转移等有更高级功能

# nginx-sts.yaml

apiVersion: apps/v1

kind: StatefulSet

metadata:

name: web

spec:

serviceName: nginx-headless

replicas: 2

selector:

matchLabels:

app: nginx

template:

metadata:

labels:

app: nginx

spec:

containers:

- name: nginx

image: nginx:1.19.1

ports:

- name: web

containerPort: 80

volumeMounts:

- name: www

mountPath: /usr/share/nginx/html

volumeClaimTemplates:

- metadata:

name: www

spec:

accessModes: [ "ReadWriteOnce" ]

resources:

requests:

storage: 500Mi

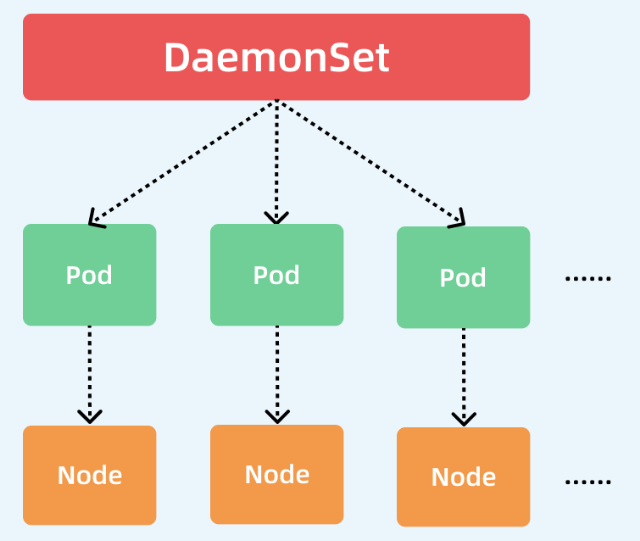

5. DaemonSet

作用:用于部署守护进程,它在每个Node上都运行一个容器副本,典型的应用:

- 日志收集:fluentd,logstash

- 系统监控:Prometheus Node Exporter, collectd

- 系统程序:kube-proxy,kube-dns,ceph,glusterd

更新策略:

- OnDelete:手动删除后更新

- RollingUpdate:滚动更新,默认

# nginx-ds.yaml

apiVersion: apps/v1

kind: DaemonSet

metadata:

name: nginx-ds

spec:

selector:

matchLabels:

app: web

template:

metadata:

labels:

app: web

spec:

containers:

- image: nginx:1.19.1

name: nginx

ports:

- name: http

containerPort: 80

6. Job

6.1 Job

作用:一次性批处理任务,如果执行失败,则会重新创建一个Pod继续执行,直到成功

关键配置项:

.spec.template格式同 Pod.spec.restartPolicy仅支持 Never 或 OnFailurespec.backoffLimit重试次数,重试间隔时间10s、20s、40s….spec.completions标志 Job 结束需要运行的Pod个数,默认为1.spec.parallelism标志并行运行的 Pod 个数,默认为1.spec.activeDeadlineSeconds标志失败 Pod的重试最大时间,超过这个时间将不会再重试

# demo-job.yaml

apiVersion: batch/v1

kind: Job

metadata:

name: demo-job

spec:

template:

metadata:

name: pi

spec:

containers:

- name: pi

image: perl

command: ["perl", "-Mbignum=bpi", "-wle", "print bpi(2000)"]

restartPolicy: Never

6.2 CronJob

作用:周期性定时任务

关键配置项:

-

.spec.schedule:调度时间m h dom mon dow -

.spec.jobTemplate:Pod 模板 -

.spec.startingDeadlineSeconds:可选。启动Job的期限,如果错过了调度时间,会被认为是失败的 -

.spec.concurrencyPolicy:可选。并发策略Allow:默认,允许并发运行 JobForbid:禁止并发,只能顺序执行Replace:用新的Job替换当前正在运行的 Job

-

.spec.suspend:可选,默认false。true表示后续所有执行都会被挂起 -

.spec.successfulJobsHistoryLimit和.spec.failedJobsHistoryLimit: 可选。可以保留多少完成和失败的Job,默认为3和1。如果设置为0,相关类型的Job完成后,将不会保留

# demo-cronjob.yaml

apiVersion: batch/v1

kind: CronJob

metadata:

name: demo-cronjob

spec:

schedule: "*/5 * * * *"

jobTemplate:

spec:

template:

spec:

containers:

- name: busybox

image: busybox

args:

- /bin/sh

- -c

- date '+%Y-%m-%d %H:%M:%S'

restartPolicy: OnFailure

执行时间:

$ kubectl get cj

NAME SCHEDULE SUSPEND ACTIVE LAST SCHEDULE AGE

demo-cronjob */5 * * * * False 0 20s 2m15s

$ kubectl get pod

NAME READY STATUS RESTARTS AGE

demo-cronjob-27428035-4dsfg 0/1 Completed 0 6m27s

demo-cronjob-27428040-fzzgq 0/1 Completed 0 87s

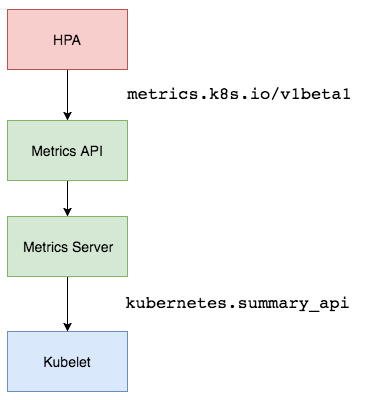

7. HPA

7.1 Metrics Server

Metrics Server 通过标准的 Kubernetes API 把监控数据暴露出来:

https://10.96.0.1/apis/metrics.k8s.io/v1beta1/namespaces/<namespace-name>/pods/<pod-name>

安装:

# 资源文件下载

$ wget https://github.com/kubernetes-sigs/metrics-server/releases/download/v0.6.0/components.yaml

# 修改配置

$ vi components.yaml

...

apiVersion: apps/v1

kind: Deployment

metadata:

labels:

k8s-app: metrics-server

name: metrics-server

namespace: kube-system

spec:

selector:

matchLabels:

k8s-app: metrics-server

strategy:

rollingUpdate:

maxUnavailable: 0

template:

metadata:

labels:

k8s-app: metrics-server

spec:

containers:

- args:

- --cert-dir=/tmp

- --secure-port=4443

- --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname

- --kubelet-use-node-status-port

- --kubelet-insecure-tls # 跳过证书校验

- --metric-resolution=15s

image: registry.aliyuncs.com/google_containers/metrics-server:v0.6.0 # 使用国内镜像

imagePullPolicy: IfNotPresent

...

# 安装

$ kubectl apply -f components.yaml

$ kubectl get pod -l k8s-app=metrics-server -n kube-system

NAME READY STATUS RESTARTS AGE

metrics-server-7d69f8dd8f-zvr6m 1/1 Running 0 5m7s

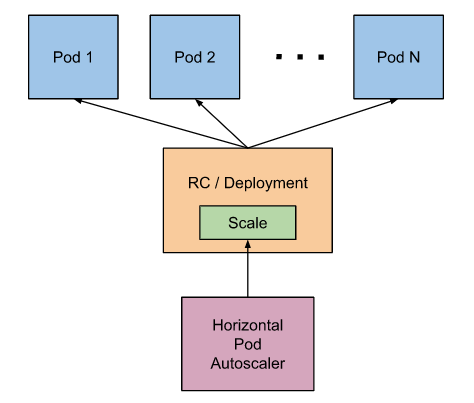

7.2 HPA

Horizontal Pod Autoscaler:Pod 水平自动伸缩,适用于Deployment和ReplicaSet,支持根据Pod的CPU、内存的利用率,用户自定义的metric等,进行自动扩/缩容。负载下降后,controller-manager 默认5分钟过后会进行缩放

# nginx-hpa.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

name: nginx-hpa

spec:

selector:

matchLabels:

app: web

template:

metadata:

labels:

app: web

spec:

containers:

- name: nginx

image: nginx

ports:

- containerPort: 80

resources:

requests: # 需要设置,以便HPA计算

memory: 50Mi

cpu: 50m

---

apiVersion: v1

kind: Service

metadata:

name: nginx

spec:

type: NodePort

selector:

app: web

ports:

- name: http

port: 80

targetPort: 80

nodePort: 8080

执行伸缩:

# 设置自动扩容

$ kubectl autoscale deployment nginx-hpa --cpu-percent=10 --min=1 --max=50

$ kubectl get hpa nginx-hpa

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE

nginx-hpa Deployment/nginx-hpa 0%/10% 1 50 1 12m

# 扩容信息

$ kubectl get hpa nginx-hpa -o yaml

apiVersion: autoscaling/v1

kind: HorizontalPodAutoscaler

metadata:

annotations:

autoscaling.alpha.kubernetes.io/conditions: '[{"type":"AbleToScale","status":"True","lastTransitionTime":"2022-02-24T07:09:20Z","reason":"ReadyForNewScale","message":"recommended

size matches current size"},{"type":"ScalingActive","status":"True","lastTransitionTime":"2022-02-24T07:09:20Z","reason":"ValidMetricFound","message":"the

HPA was able to successfully calculate a replica count from cpu resource utilization

(percentage of request)"},{"type":"ScalingLimited","status":"True","lastTransitionTime":"2022-02-24T07:14:20Z","reason":"TooFewReplicas","message":"the

desired replica count is less than the minimum replica count"}]'

autoscaling.alpha.kubernetes.io/current-metrics: '[{"type":"Resource","resource":{"name":"cpu","currentAverageUtilization":0,"currentAverageValue":"0"}}]'

creationTimestamp: "2022-02-24T07:09:04Z"

name: nginx-hpa

namespace: default

resourceVersion: "537535"

uid: 99ccf472-5429-4813-8b39-67e52bc83a16

spec:

maxReplicas: 50

minReplicas: 1

scaleTargetRef:

apiVersion: apps/v1

kind: Deployment

name: nginx-hpa

targetCPUUtilizationPercentage: 10

status:

currentCPUUtilizationPercentage: 0

currentReplicas: 1

desiredReplicas: 1

$ kubectl describe hpa nginx-hpa

Name: nginx-hpa

Namespace: default

Labels: <none>

Annotations: <none>

CreationTimestamp: Thu, 24 Feb 2022 15:09:04 +0800

Reference: Deployment/nginx-hpa

Metrics: ( current / target )

resource cpu on pods (as a percentage of request): 0% (0) / 10%

Min replicas: 1

Max replicas: 50

Deployment pods: 1 current / 1 desired

Conditions:

Type Status Reason Message

---- ------ ------ -------

AbleToScale True ReadyForNewScale recommended size matches current size

ScalingActive True ValidMetricFound the HPA was able to successfully calculate a replica count from cpu resource utilization (percentage of request)

ScalingLimited True TooFewReplicas the desired replica count is less than the minimum replica count

Events: <none>

触发扩容:

# 下载http测试工具

$ wget https://hey-release.s3.us-east-2.amazonaws.com/hey_linux_amd64 -O hey

$ chmod +x hey

# 执行测试,触发扩容

./hey -z 1s -c 1000 192.168.80.100:30080

# 已进行自动扩容

$ kubectl get pod -l app=web | grep -v NAME | wc -l

50

# 取消自动扩容

$ kubectl delete hpa nginx-hpa

更多推荐

已为社区贡献2条内容

已为社区贡献2条内容

所有评论(0)