Installing K3s and ingress-nginx


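Introduction to K3s

What is K3s?

K3s is a lightweight Kubernetes distribution, highly optimized for edge computing, IoT, and similar scenarios. K3s provides the following enhancements:

Packaged as a single binary.

Uses a lightweight storage backend based on sqlite3 as the default storage mechanism. etcd3, MySQL, and PostgreSQL are also supported.

Wrapped in a simple launcher that handles much of the complexity of TLS and options.

Secure by default, with reasonable defaults for lightweight environments.

Adds simple but powerful "batteries-included" features such as a local storage provider, a service load balancer, a Helm controller, and the Traefik ingress controller.

The operation of all Kubernetes control-plane components is encapsulated in a single binary and process, which lets K3s automate and manage complex cluster operations such as certificate distribution.

External dependencies are kept to a minimum: K3s needs only a kernel and cgroup mounts. K3s packages the required dependencies, including:

containerd

Flannel

CoreDNS

CNI

Host utilities (iptables, socat, etc.)

Ingress controller (Traefik)

Embedded service load balancer

Embedded network policy controller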

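Why is it called K3s?

We wanted an installation of Kubernetes that was half the size in terms of memory footprint. Kubernetes is a 10-letter word stylized as K8s. So something half as big as Kubernetes would be a 5-letter word stylized as K3s. K3s has no long form and no official pronunciation.

Use cases

K3s is suited to the following scenarios:

Edge computing (Edge)

Internet of Things (IoT)

CI

Development

ARM

Embedding K8s

Because K3s needs relatively few resources, it also suits development and testing. When developers or testers need to verify a feature or reproduce a problem, K3s shortens cluster start-up time and reduces the resources the cluster consumes. The Rancher China team has also released AutoK3s, a productivity tool for K3s: a single command creates a K3s cluster and adds the specified number of master and worker nodes. For details, see the AutoK3s feature overview.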

I. Script installation

curl -sfL http://rancher-mirror.cnrancher.com/k3s/k3s-install.sh >install.sh

chmod +x install.sh

./install.sh --docker --disable traefik --write-kubeconfig-mode 644

# Enable kubectl tab completion

echo "source <(kubectl completion bash)" >> ~/.bashrc

source ~/.bashrc

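--docker: use Docker as the container runtime instead of the bundled containerd. Docker must already be installed on the host (see the Docker installation section).

--disable traefik: disable the Traefik ingress controller that ships with K3s, since ingress-nginx is installed instead.

--write-kubeconfig-mode 644: write the kubeconfig file with mode 644 so that non-root users can read it.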
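The effect of --write-kubeconfig-mode 644 can be checked once the installer has finished. The following is a minimal sketch, assuming GNU stat and the default K3s kubeconfig location /etc/rancher/k3s/k3s.yaml; the function name kubeconfig_mode_ok is a hypothetical helper and not part of K3s.

```shell
# Hypothetical helper: report whether a kubeconfig file has mode 644.
# Falls back to the default K3s kubeconfig path when no argument is given.
kubeconfig_mode_ok() {
  path="${1:-/etc/rancher/k3s/k3s.yaml}"
  if [ ! -f "$path" ]; then
    echo "missing"
    return 1
  fi
  # stat -c '%a' prints the octal permission bits (GNU coreutils).
  mode="$(stat -c '%a' "$path")"
  if [ "$mode" = "644" ]; then
    echo "ok"
  else
    echo "mode is $mode"
    return 1
  fi
}
```

Calling kubeconfig_mode_ok with no argument on the K3s host prints ok when the flag took effect.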

II. Installing Docker

wget https://download.docker.com/linux/static/stable/x86_64/docker-19.03.9.tgz

tar zxvf docker-19.03.9.tgz

mv docker/* /usr/bin

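2.1 Managing Docker with systemd

# The delimiter is quoted so that the shell does not expand $MAINPID while writing the file
cat > /lib/systemd/system/docker.service << 'EOF'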

[Unit]

Description=Docker Application Container Engine

Documentation=https://docs.docker.com

After=network-online.target firewalld.service

Wants=network-online.target

[Service]

Type=notify

ExecStart=/usr/bin/dockerd

ExecReload=/bin/kill -s HUP $MAINPID

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

TimeoutStartSec=0

Delegate=yes

KillMode=process

Restart=on-failure

StartLimitBurst=3

StartLimitInterval=60s

[Install]

WantedBy=multi-user.target

EOF

# Create the configuration file

mkdir -p /etc/docker

cat > /etc/docker/daemon.json << EOF

{

"registry-mirrors": ["https://b9pmyelo.mirror.aliyuncs.com"]

}

EOF

# registry-mirrors: Alibaba Cloud registry mirror (pull accelerator)

# Start Docker and enable it at boot

systemctl daemon-reload

systemctl start docker

systemctl enable docker

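2.2 Tab completion for Docker

III. Installing ingress-nginx

Official documentation

We go straight to the bare-metal installation.

It applies to Kubernetes clusters deployed on bare metal with a general-purpose Linux distribution (such as CentOS, Ubuntu ...).

For a fuller introduction, see the official Kubernetes ingress-nginx documentation.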

wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v0.46.0/deploy/static/provider/baremetal/deploy.yaml

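# Replace the controller image in deploy.yaml with:

registry.cn-hongkong.aliyuncs.com/gglnp/ingress-nginx-controller:v0.46.0

# Images on k8s.gcr.io cannot be pulled from mainland China, so a copy hosted on Alibaba Cloud is used instead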
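The image swap can also be scripted rather than done by hand. This is a minimal sketch, assuming GNU sed and assuming the controller image line in deploy.yaml has the form image: k8s.gcr.io/ingress-nginx/controller:<tag>; the function name swap_controller_image is a hypothetical helper.

```shell
# Hypothetical helper: point the controller image in a manifest at a mirror.
# Usage: swap_controller_image <manifest> <new-image>
swap_controller_image() {
  # Keep the leading "image:" part (\1) and replace only the upstream
  # reference, including any tag or digest that follows it.
  sed -i -E "s#^([[:space:]]*image:[[:space:]]*)k8s\.gcr\.io/ingress-nginx/controller:[^[:space:]]+#\1${2}#" "$1"
}
```

For example: swap_controller_image deploy.yaml registry.cn-hongkong.aliyuncs.com/gglnp/ingress-nginx-controller:v0.46.0. Other image lines in the manifest are left untouched.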

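Bare-metal considerations

In traditional cloud environments, where network load balancers are available on demand, a single Kubernetes manifest suffices to provide a single point of contact to the NGINX Ingress controller for external clients and, indirectly, for any application running inside the cluster.

Bare-metal environments lack this commodity and need a slightly different setup to offer the same kind of access to external consumers.

1. Using hostNetwork

We take the simplest route.

# This is done by enabling the hostNetwork option in the Pod spec.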

template:
  spec:
    hostNetwork: true

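Comment out the NodePort type in the Service

so that it falls back to the default ClusterIP.

Otherwise the controller is exposed through a NodePort, and every request by domain name has to include that port, which is unnecessary and defeats the purpose of using a domain name.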

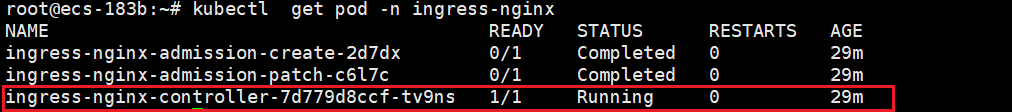

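As long as the ingress-nginx-controller Pod is in the Running state, the setup is working.

2. A pure software solution: MetalLB

MetalLB provides a network load-balancer implementation for Kubernetes clusters that do not run on a supported cloud provider, effectively allowing LoadBalancer Services to be used in any cluster.

This section demonstrates how to use the Layer 2 configuration mode of MetalLB together with the NGINX Ingress controller in a Kubernetes cluster that has publicly accessible nodes. In this mode, one node attracts all the traffic for the ingress-nginx Service IP. See Traffic policies for more details.

MetalLB can be deployed either with a simple Kubernetes manifest or with Helm. The rest of this example assumes MetalLB was deployed following the installation instructions.

MetalLB requires a pool of IP addresses in order to take ownership of the ingress-nginx Service. This pool can be defined in a ConfigMap named config located in the same namespace as the MetalLB controller. The pool must be dedicated to MetalLB: you cannot reuse the Kubernetes node IPs or IPs handed out by a DHCP server.

Summary after reading through the docs:

In the default (cloud) configuration the ingress controller Service is of type LoadBalancer, and the controller Pods behind the Service can be scheduled on any worker node, so a load balancer is needed in front to forward traffic to the ingress controller. In a cloud environment the provider's load-balancing service fills this role and MetalLB is not needed. Alternatively, NodePort mode maps the ports of the ingress controller Service to a port on the nodes, and the controller is then reached as nodeIP:port.

Change NodePort to LoadBalancer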
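Since the pool must not overlap with node IPs, it can help to test an address against the range before applying the ConfigMap. The following is a minimal sketch in plain shell arithmetic, assuming IPv4 addresses without leading zeros and the start-end range notation used in the MetalLB ConfigMap; ip_to_int and ip_in_range are hypothetical helper names.

```shell
# Convert a dotted-quad IPv4 address to an integer.
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1          # split the address on "." into $1..$4
  IFS=$old_ifs
  echo $(( $1 * 16777216 + $2 * 65536 + $3 * 256 + $4 ))
}

# Succeed if <ip> lies inside <start>-<end>.
# Usage: ip_in_range <ip> <start>-<end>
ip_in_range() {
  ip=$(ip_to_int "$1")
  start=$(ip_to_int "${2%-*}")   # part before the dash
  end=$(ip_to_int "${2#*-}")     # part after the dash
  [ "$ip" -ge "$start" ] && [ "$ip" -le "$end" ]
}
```

For example, ip_in_range "$node_ip" 192.168.0.100-192.168.0.101 && echo "node IP overlaps the pool" flags a conflict, where node_ip is whatever address the node actually uses.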

spec:
  type: LoadBalancer  # changed from NodePort

# After the change, re-apply the manifest

kubectl apply -f deploy.yaml

Installing MetalLB

kubectl apply -f https://raw.githubusercontent.com/metallb/metallb/v0.9.6/manifests/namespace.yaml

kubectl apply -f https://raw.githubusercontent.com/metallb/metallb/v0.9.6/manifests/metallb.yaml

# On first install only

kubectl create secret generic -n metallb-system memberlist --from-literal=secretkey="$(openssl rand -base64 128)"

Alternatively, use the consolidated manifest below (saved here as metallb.yaml); remember to change the IP range.

---
apiVersion: v1
kind: Namespace
metadata:
  name: metallb-system
  labels:
    app: metallb
---
apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  labels:
    app: metallb
  name: controller
  namespace: metallb-system
spec:
  allowPrivilegeEscalation: false
  allowedCapabilities: []
  allowedHostPaths: []
  defaultAddCapabilities: []
  defaultAllowPrivilegeEscalation: false
  fsGroup:
    ranges:
    - max: 65535
      min: 1
    rule: MustRunAs
  hostIPC: false
  hostNetwork: false
  hostPID: false
  privileged: false
  readOnlyRootFilesystem: true
  requiredDropCapabilities:
  - ALL
  runAsUser:
    ranges:
    - max: 65535
      min: 1
    rule: MustRunAs
  seLinux:
    rule: RunAsAny
  supplementalGroups:
    ranges:
    - max: 65535
      min: 1
    rule: MustRunAs
  volumes:
  - configMap
  - secret
  - emptyDir

---
apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  labels:
    app: metallb
  name: speaker
  namespace: metallb-system
spec:
  allowPrivilegeEscalation: false
  allowedCapabilities:
  - NET_ADMIN
  - NET_RAW
  - SYS_ADMIN
  allowedHostPaths: []
  defaultAddCapabilities: []
  defaultAllowPrivilegeEscalation: false
  fsGroup:
    rule: RunAsAny
  hostIPC: false
  hostNetwork: true
  hostPID: false
  hostPorts:
  - max: 7472
    min: 7472
  privileged: true
  readOnlyRootFilesystem: true
  requiredDropCapabilities:
  - ALL
  runAsUser:
    rule: RunAsAny
  seLinux:
    rule: RunAsAny
  supplementalGroups:
    rule: RunAsAny
  volumes:
  - configMap
  - secret
  - emptyDir

---
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    app: metallb
  name: controller
  namespace: metallb-system
---
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    app: metallb
  name: speaker
  namespace: metallb-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    app: metallb
  name: metallb-system:controller
rules:
- apiGroups:
  - ''
  resources:
  - services
  verbs:
  - get
  - list
  - watch
  - update
- apiGroups:
  - ''
  resources:
  - services/status
  verbs:
  - update
- apiGroups:
  - ''
  resources:
  - events
  verbs:
  - create
  - patch
- apiGroups:
  - policy
  resourceNames:
  - controller
  resources:
  - podsecuritypolicies
  verbs:
  - use
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    app: metallb
  name: metallb-system:speaker
rules:
- apiGroups:
  - ''
  resources:
  - services
  - endpoints
  - nodes
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ''
  resources:
  - events
  verbs:
  - create
  - patch
- apiGroups:
  - policy
  resourceNames:
  - speaker
  resources:
  - podsecuritypolicies
  verbs:
  - use

---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  labels:
    app: metallb
  name: config-watcher
  namespace: metallb-system
rules:
- apiGroups:
  - ''
  resources:
  - configmaps
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  labels:
    app: metallb
  name: pod-lister
  namespace: metallb-system
rules:
- apiGroups:
  - ''
  resources:
  - pods
  verbs:
  - list
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    app: metallb
  name: metallb-system:controller
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: metallb-system:controller
subjects:
- kind: ServiceAccount
  name: controller
  namespace: metallb-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    app: metallb
  name: metallb-system:speaker
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: metallb-system:speaker
subjects:
- kind: ServiceAccount
  name: speaker
  namespace: metallb-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    app: metallb
  name: config-watcher
  namespace: metallb-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: config-watcher
subjects:
- kind: ServiceAccount
  name: controller
- kind: ServiceAccount
  name: speaker
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    app: metallb
  name: pod-lister
  namespace: metallb-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: pod-lister
subjects:
- kind: ServiceAccount
  name: speaker

---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  labels:
    app: metallb
    component: speaker
  name: speaker
  namespace: metallb-system
spec:
  selector:
    matchLabels:
      app: metallb
      component: speaker
  template:
    metadata:
      annotations:
        prometheus.io/port: '7472'
        prometheus.io/scrape: 'true'
      labels:
        app: metallb
        component: speaker
    spec:
      containers:
      - args:
        - --port=7472
        - --config=config
        env:
        - name: METALLB_NODE_NAME
          valueFrom:
            fieldRef:
              fieldPath: spec.nodeName
        - name: METALLB_HOST
          valueFrom:
            fieldRef:
              fieldPath: status.hostIP
        - name: METALLB_ML_BIND_ADDR
          valueFrom:
            fieldRef:
              fieldPath: status.podIP
        # needed when another software is also using memberlist / port 7946
        #- name: METALLB_ML_BIND_PORT
        #  value: "7946"
        - name: METALLB_ML_LABELS
          value: "app=metallb,component=speaker"
        - name: METALLB_ML_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: METALLB_ML_SECRET_KEY
          valueFrom:
            secretKeyRef:
              name: memberlist
              key: secretkey
        image: metallb/speaker:v0.9.6
        imagePullPolicy: Always
        name: speaker
        ports:
        - containerPort: 7472
          name: monitoring
        resources:
          limits:
            cpu: 100m
            memory: 100Mi
        securityContext:
          allowPrivilegeEscalation: false
          capabilities:
            add:
            - NET_ADMIN
            - NET_RAW
            - SYS_ADMIN
            drop:
            - ALL
          readOnlyRootFilesystem: true
      hostNetwork: true
      nodeSelector:
        kubernetes.io/os: linux
      serviceAccountName: speaker
      terminationGracePeriodSeconds: 2
      tolerations:
      - effect: NoSchedule
        key: node-role.kubernetes.io/master

---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: metallb
    component: controller
  name: controller
  namespace: metallb-system
spec:
  revisionHistoryLimit: 3
  selector:
    matchLabels:
      app: metallb
      component: controller
  template:
    metadata:
      annotations:
        prometheus.io/port: '7472'
        prometheus.io/scrape: 'true'
      labels:
        app: metallb
        component: controller
    spec:
      containers:
      - args:
        - --port=7472
        - --config=config
        image: metallb/controller:v0.9.6
        imagePullPolicy: Always
        name: controller
        ports:
        - containerPort: 7472
          name: monitoring
        resources:
          limits:
            cpu: 100m
            memory: 100Mi
        securityContext:
          allowPrivilegeEscalation: false
          capabilities:
            drop:
            - all
          readOnlyRootFilesystem: true
      nodeSelector:
        kubernetes.io/os: linux
      securityContext:
        runAsNonRoot: true
        runAsUser: 65534
      serviceAccountName: controller
      terminationGracePeriodSeconds: 0

---
# Create the IP pool (change the address range to match your network)
apiVersion: v1
kind: ConfigMap
metadata:
  namespace: metallb-system
  name: config
data:
  config: |
    address-pools:
    - name: default
      protocol: layer2
      addresses:
      - 192.168.0.100-192.168.0.101

Apply the manifest

An error occurs during the apply

Fix: create the memberlist secret

kubectl create secret generic -n metallb-system memberlist --from-literal=secretkey="$(openssl rand -base64 128)"

# Then apply the manifest again

root@ecs-183b:~# kubectl apply -f metallb.yaml

namespace/metallb-system unchanged

podsecuritypolicy.policy/controller configured

podsecuritypolicy.policy/speaker configured

serviceaccount/controller unchanged

serviceaccount/speaker unchanged

clusterrole.rbac.authorization.k8s.io/metallb-system:controller unchanged

clusterrole.rbac.authorization.k8s.io/metallb-system:speaker unchanged

role.rbac.authorization.k8s.io/config-watcher unchanged

role.rbac.authorization.k8s.io/pod-lister unchanged

clusterrolebinding.rbac.authorization.k8s.io/metallb-system:controller unchanged

clusterrolebinding.rbac.authorization.k8s.io/metallb-system:speaker unchanged

rolebinding.rbac.authorization.k8s.io/config-watcher unchanged

rolebinding.rbac.authorization.k8s.io/pod-lister unchanged

daemonset.apps/speaker unchanged

deployment.apps/controller unchanged

configmap/config unchanged

# Check the Pods

root@ecs-183b:~# kubectl get pod -n metallb-system

NAME READY STATUS RESTARTS AGE

controller-64f86798cc-6zr45 1/1 Running 0 4m7s

speaker-hxdms 1/1 Running 0 4m7s

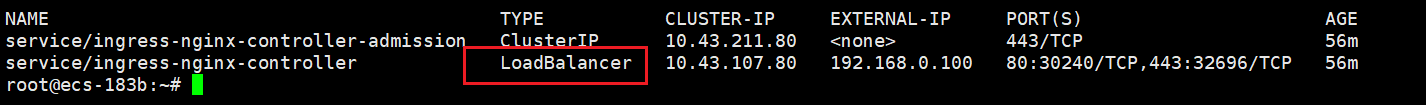

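Be sure to change the ingress-nginx Service type from NodePort to LoadBalancer.

If it is left unchanged, the Service still shows NodePort after MetalLB is deployed.

After changing it to LoadBalancer,

the Service type is shown as LoadBalancer, which is the expected result.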
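The Service type and assigned address can be read from the kubectl get svc table without relying on a screenshot. This is a minimal sketch, assuming the default column order of kubectl get svc (NAME, TYPE, CLUSTER-IP, EXTERNAL-IP, ...) and the Service name ingress-nginx-controller in the ingress-nginx namespace as created by the upstream manifest; svc_type_and_ip is a hypothetical helper.

```shell
# Hypothetical helper: read `kubectl get svc` table output on stdin and print
# "TYPE EXTERNAL-IP" for the Service whose name is given as the first argument.
svc_type_and_ip() {
  # Column 1 is NAME, column 2 is TYPE, column 4 is EXTERNAL-IP.
  awk -v name="$1" '$1 == name { print $2, $4 }'
}
```

Usage: kubectl get svc -n ingress-nginx | svc_type_and_ip ingress-nginx-controller. A type of LoadBalancer together with an address from the MetalLB pool indicates that MetalLB has taken ownership of the Service; an EXTERNAL-IP of <pending> means no address has been assigned yet.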

Testing ingress-nginx

apiVersion: apps/v1
kind: Deployment
metadata:
  creationTimestamp: null
  labels:
    app: web
  name: web
spec:
  replicas: 1
  selector:
    matchLabels:
      app: web
  strategy: {}
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: web
    spec:
      containers:
      - image: httpd:latest
        name: httpd
        ports:
        - containerPort: 80
        resources: {}
status: {}
---
apiVersion: v1
kind: Service
metadata:
  creationTimestamp: null
  labels:
    app: web
  name: web
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: web
  type: ClusterIP
status:
  loadBalancer: {}

# Apply the manifest

kubectl apply -f web.yaml

# Look up the Pod IP and check that it is reachable locally

root@ecs-183b:~# kubectl get pod,svc -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/web-797945c744-vt5gf 1/1 Running 0 4m13s 10.42.0.8 ecs-183b <none> <none>

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/kubernetes ClusterIP 10.43.0.1 <none> 443/TCP 89m <none>

service/web ClusterIP 10.43.155.220 <none> 80/TCP 21s app=web

root@ecs-183b:~# curl 10.42.0.8

<html><body><h1>It works!</h1></body></html>

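Creating the Ingress YAML file

Generate a TLS certificate

mkdir ssl && cd ssl

# Note: this key is not used below; the req command generates its own key via -newkey/-keyout
openssl genrsa -out test-ingress-1.key 2048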

openssl req -x509 -nodes -days 365 -newkey rsa:2048 \

-out aks-ingress-tls.crt \

-keyout aks-ingress-tls.key \

-subj "/CN=www.testingress.com/O=aks-ingress-tls"

# Create the TLS secret

kubectl create secret tls test-ingress-tls \

--key aks-ingress-tls.key \

--cert aks-ingress-tls.crt

Create ingress.yaml with TLS

# networking.k8s.io/v1beta1 was removed in Kubernetes 1.22; this manifest targets older clusters
apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: web-ingress
  annotations:
    kubernetes.io/ingress.class: nginx
    nginx.ingress.kubernetes.io/use-regex: "true"
    # $2 refers to the second capture group of the path; this rule defines no path
    nginx.ingress.kubernetes.io/rewrite-target: /$2
spec:
  tls:
  - hosts:
    - www.testingress.com
    secretName: test-ingress-tls
  rules:
  - host: www.testingress.com
    http:
      paths:
      - backend:
          serviceName: web
          servicePort: 80

Apply it, then test in a browser

echo "94.74.110.156 www.testingress.com" >> /etc/hosts

# Local Windows hosts file

# Path: C:\Windows\System32\drivers\etc\hosts

94.74.110.156 www.testingress.com
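On a Linux client the hosts entry can be added idempotently, so that repeated runs do not pile up duplicate lines. This is a minimal sketch using only grep and printf; add_hosts_entry is a hypothetical helper, and writing to /etc/hosts requires root.

```shell
# Hypothetical helper: append "<ip> <host>" to a hosts file only if that exact
# line is not already present.
# Usage: add_hosts_entry <file> <ip> <host>
add_hosts_entry() {
  # -x matches the whole line, -F treats the pattern as a fixed string.
  if ! grep -qxF "$2 $3" "$1"; then
    printf '%s %s\n' "$2" "$3" >> "$1"
  fi
}
```

For example: add_hosts_entry /etc/hosts 94.74.110.156 www.testingress.com. To test without touching the hosts file at all, curl can pin the name to the address for a single request: curl -k --resolve www.testingress.com:443:94.74.110.156 https://www.testingress.com/ (the -k flag is needed because the certificate is self-signed).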
