Real-Time 3D Face Reconstruction from Monocular Vision

Paper: Real-time Facial Surface Geometry from Monocular Video on Mobile GPUs

GitHub: https://github.com/thepowerfuldeez/facemesh.pytorch

MediaPipe Face Mesh: https://google.github.io/mediapipe/solutions/face_mesh.html

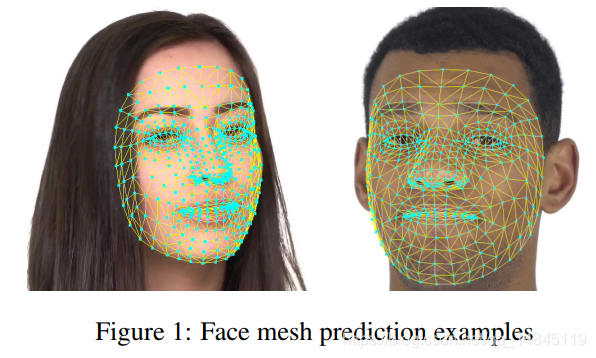

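The paper proposes an end-to-end method for 3D face reconstruction. The resulting 3D mesh consists of 468 3D facial landmarks, and the model is reported to run at 100-1000 FPS on mobile phones.

A residual network regresses the 468 3D face coordinates. Catmull-Clark subdivision is then applied to refine the mesh, which yields a finer and smoother 3D face model.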

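Overall pipeline:

- A lightweight face detector runs first and returns a face box and 5 facial keypoints. The keypoints are used to align the face: every face image is rotated so that the line connecting the two eyes is horizontal.

- The image is cropped using the face box and resized to 256*256 (for the large model) or 128*128 (for the small model). The crop is then fed to the model described here, which outputs the 3D landmark coordinates. The x and y values correspond to the 2D image coordinates, and z is the depth relative to a reference plane through the center of the 3D model.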
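The alignment step above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function name `eye_alignment_matrix` is hypothetical, and it assumes the two eye keypoints are given as (x, y) pixel coordinates, with the "left" eye being the one with the smaller x in the image.

```python
import numpy as np


def eye_alignment_matrix(left_eye, right_eye):
    """Return the roll angle (degrees) of the eye line and a 2x3 affine
    matrix that rotates the image about the eye midpoint so that the
    eye line becomes horizontal. (Hypothetical helper for illustration.)"""
    left_eye = np.asarray(left_eye, dtype=np.float64)
    right_eye = np.asarray(right_eye, dtype=np.float64)

    dx, dy = right_eye - left_eye
    angle = np.arctan2(dy, dx)              # roll of the eye line
    cx, cy = (left_eye + right_eye) / 2.0   # rotation center
    cos_a, sin_a = np.cos(angle), np.sin(angle)

    # Rotate by -angle about (cx, cy): p' = R(-angle) @ (p - c) + c
    matrix = np.array([
        [cos_a, sin_a, cx - cos_a * cx - sin_a * cy],
        [-sin_a, cos_a, cy + sin_a * cx - cos_a * cy],
    ])
    return np.degrees(angle), matrix


angle, matrix = eye_alignment_matrix((60, 80), (120, 110))
eyes = np.array([[60, 80, 1], [120, 110, 1]], dtype=np.float64)
aligned = eyes @ matrix.T   # both eyes now share the same y coordinate
print(angle, aligned)
```

The matrix maps source pixels to aligned pixels, so it should be usable as the transform argument of `cv2.warpAffine`; cropping to the face box and resizing to the model input size would follow.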

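Data, annotation, and training:

The dataset consists of about 30K photos taken with mobile phones. Training-time augmentation includes standard cropping and other image-processing operations, simulated camera sensor noise, and randomized non-linear parametric transformations of the image intensity histogram.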
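As a rough illustration of the last two augmentations, the sketch below applies a random gamma curve (one simple non-linear intensity remapping) followed by additive Gaussian noise. The paper does not give the exact transforms or parameters, so the function name `augment`, the gamma range, and the noise level are all assumptions made for this example.

```python
import numpy as np


def augment(image, rng, noise_std=0.02, gamma_range=(0.7, 1.4)):
    """image: float array in [0, 1] with shape (H, W, 3).
    Returns an augmented array of the same shape, clipped to [0, 1]."""
    # Random non-linear intensity remapping (assumed here to be a gamma curve).
    gamma = rng.uniform(*gamma_range)
    out = np.power(image, gamma)
    # Additive Gaussian noise as a crude stand-in for camera sensor noise.
    out = out + rng.normal(0.0, noise_std, size=image.shape)
    return np.clip(out, 0.0, 1.0)


rng = np.random.default_rng(0)
image = rng.random((192, 192, 3))
augmented = augment(image, rng)
print(augmented.shape, augmented.min(), augmented.max())
```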

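The base model is trained with two sources of supervision:

- On one hand, 3D coordinates are generated with a 3DMM (3D morphable model). On the other hand, a separate branch predicts 2D keypoints along the face contours, and these 2D keypoints constrain the x and y components of the 3D landmarks.

- After this initial training, the predictions on about 30% of the data are good enough to serve as a starting point for refinement. The x and y coordinates are then refined iteratively. For samples with large errors, annotators adjust the 3D landmarks manually.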

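Temporal stabilization filtering for video:

To suppress the landmark jitter caused by running detection independently on every frame, the authors apply a one Euro (1€) filter to the predictions and update the filter parameters according to the rate of change of the face size within a fixed time window. This removes the jitter that would otherwise be visible to the eye.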
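For reference, here is a minimal sketch of the standard scalar one Euro filter. It is not the authors' variant: the paper adapts the filter parameters to the rate of change of the face size, whereas this sketch uses the usual fixed `min_cutoff`, `beta`, and `d_cutoff` parameters, with default values chosen only for illustration. Timestamps are assumed to be strictly increasing.

```python
import math


class OneEuroFilter:
    """Standard one Euro filter for a scalar signal (illustrative sketch)."""

    def __init__(self, min_cutoff=1.0, beta=0.0, d_cutoff=1.0):
        self.min_cutoff = min_cutoff  # cutoff (Hz) used when the signal is still
        self.beta = beta              # how fast the cutoff grows with speed
        self.d_cutoff = d_cutoff      # cutoff (Hz) for the derivative estimate
        self.x_prev = None
        self.dx_prev = 0.0
        self.t_prev = None

    @staticmethod
    def _alpha(cutoff, dt):
        # Smoothing factor of a first-order low-pass filter.
        tau = 1.0 / (2.0 * math.pi * cutoff)
        return 1.0 / (1.0 + tau / dt)

    def __call__(self, t, x):
        if self.x_prev is None:
            self.x_prev, self.t_prev = x, t
            return x
        dt = t - self.t_prev  # must be > 0
        # Low-pass filtered estimate of the signal's speed.
        dx = (x - self.x_prev) / dt
        a_d = self._alpha(self.d_cutoff, dt)
        dx_hat = a_d * dx + (1.0 - a_d) * self.dx_prev
        # Fast motion raises the cutoff (less lag); slow motion lowers it (less jitter).
        cutoff = self.min_cutoff + self.beta * abs(dx_hat)
        a = self._alpha(cutoff, dt)
        x_hat = a * x + (1.0 - a) * self.x_prev
        self.x_prev, self.dx_prev, self.t_prev = x_hat, dx_hat, t
        return x_hat


# Smooth one jittery landmark coordinate sampled at 30 FPS.
smoother = OneEuroFilter(min_cutoff=1.0, beta=0.01)
raw = [10.0 + (0.5 if i % 2 else -0.5) for i in range(60)]
smoothed = [smoother(i / 30.0, value) for i, value in enumerate(raw)]
print(max(smoothed[30:]) - min(smoothed[30:]))
```

In practice one filter instance would be kept per coordinate of each landmark, or the class would be vectorized with NumPy.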

Model architecture:

The model takes an input image of size 192*192*3 and produces two outputs: a confidence score of size 1*1, and the 3D landmarks of size 468*3 = 1*1404.
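The flat 1404-element output can be unpacked as sketched below. Two points are assumptions inferred from the inference code in this post rather than stated by the paper: that the values are interleaved as (x, y, z) per landmark, and that x and y are expressed in pixels of the 192*192 input. The helper name `split_landmarks` is hypothetical.

```python
import numpy as np

NUM_LANDMARKS = 468


def split_landmarks(raw, input_size=192):
    """Reshape a flat (1404,) prediction into (468, 3) points and also
    return x, y normalized to [0, 1] (assuming pixel units) and z."""
    points = np.asarray(raw, dtype=np.float32).reshape(NUM_LANDMARKS, 3)
    xy_normalized = points[:, :2] / float(input_size)
    z = points[:, 2]
    return points, xy_normalized, z


raw = np.arange(NUM_LANDMARKS * 3, dtype=np.float32)  # dummy prediction
points, xy_normalized, z = split_landmarks(raw)
print(points.shape, xy_normalized.shape, z.shape)
```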

Inference code:

facemesh.py

    import numpy as np
    import torch
    import torch.nn as nn
    import torch.nn.functional as F


    class FaceMeshBlock(nn.Module):
        """Main building block of the architecture: a residual block with one
        depthwise conv followed by a 1x1 conv. The shortcut branch applies
        max-pooling when the stride is 2 and zero-pads the channels when the
        input channel count is smaller than the output channel count."""

        def __init__(self, in_channels: int, out_channels: int, kernel_size: int = 3, stride: int = 1):
            super().__init__()
            self.stride = stride
            self.channel_pad = out_channels - in_channels

            # TFLite uses slightly different padding than PyTorch
            # on the depthwise conv layer when the stride is 2.
            if stride == 2:
                self.max_pool = nn.MaxPool2d(kernel_size=stride, stride=stride)
                padding = 0
            else:
                padding = (kernel_size - 1) // 2

            self.convs = nn.Sequential(
                # Depthwise conv (groups == in_channels).
                nn.Conv2d(in_channels=in_channels, out_channels=in_channels,
                          kernel_size=kernel_size, stride=stride, padding=padding,
                          groups=in_channels, bias=True),
                # Pointwise 1x1 conv that changes the channel count.
                nn.Conv2d(in_channels=in_channels, out_channels=out_channels,
                          kernel_size=1, stride=1, padding=0, bias=True),
            )
            self.act = nn.PReLU(out_channels)

        def forward(self, x):
            if self.stride == 2:
                # Pad right and bottom by 2 for the conv branch;
                # downsample the shortcut branch with max-pooling.
                h = F.pad(x, (0, 2, 0, 2), "constant", 0)
                x = self.max_pool(x)
            else:
                h = x

            if self.channel_pad > 0:
                # Zero-pad the channel dimension of the shortcut branch.
                x = F.pad(x, (0, 0, 0, 0, 0, self.channel_pad), "constant", 0)

            return self.act(self.convs(h) + x)


    class FaceMesh(nn.Module):
        """The FaceMesh face landmark model from MediaPipe.

        Because we won't be training this model, it doesn't need to have
        batchnorm layers. These have already been "folded" into the conv
        weights by TFLite.

        The conversion to PyTorch is fairly straightforward, but there are
        some small differences between TFLite and PyTorch in how they handle
        padding on conv layers with stride 2.

        This version works on batches, while the MediaPipe version can only
        handle a single image at a time.
        """

        def __init__(self):
            super().__init__()
            self.num_coords = 468
            self.x_scale = 192.0
            self.y_scale = 192.0
            self.min_score_thresh = 0.75
            self._define_layers()

        def _define_layers(self):
            self.backbone = nn.Sequential(
                nn.Conv2d(in_channels=3, out_channels=16, kernel_size=3, stride=2, padding=0, bias=True),
                nn.PReLU(16),
                FaceMeshBlock(16, 16),
                FaceMeshBlock(16, 16),
                FaceMeshBlock(16, 32, stride=2),
                FaceMeshBlock(32, 32),
                FaceMeshBlock(32, 32),
                FaceMeshBlock(32, 64, stride=2),
                FaceMeshBlock(64, 64),
                FaceMeshBlock(64, 64),
                FaceMeshBlock(64, 128, stride=2),
                FaceMeshBlock(128, 128),
                FaceMeshBlock(128, 128),
                FaceMeshBlock(128, 128, stride=2),
                FaceMeshBlock(128, 128),
                FaceMeshBlock(128, 128),
            )

            self.coord_head = nn.Sequential(
                FaceMeshBlock(128, 128, stride=2),
                FaceMeshBlock(128, 128),
                FaceMeshBlock(128, 128),
                nn.Conv2d(128, 32, 1),
                nn.PReLU(32),
                FaceMeshBlock(32, 32),
                nn.Conv2d(32, 1404, 3),
            )

            self.conf_head = nn.Sequential(
                FaceMeshBlock(128, 128, stride=2),
                nn.Conv2d(128, 32, 1),
                nn.PReLU(32),
                FaceMeshBlock(32, 32),
                nn.Conv2d(32, 1, 3),
            )

        def forward(self, x):
            # TFLite uses slightly different padding on the first conv layer
            # than PyTorch, so do it manually.
            x = F.pad(x, (1, 0, 1, 0), mode="reflect")
            b = x.shape[0]            # batch size, needed for reshaping later

            x = self.backbone(x)      # (b, 128, 6, 6)

            c = self.conf_head(x)     # (b, 1, 1, 1)
            c = c.view(b, -1)         # (b, 1)

            r = self.coord_head(x)    # (b, 1404, 1, 1)
            r = r.reshape(b, -1)      # (b, 1404)

            return [r, c]

        def _device(self):
            """Which device (CPU or GPU) is being used by this model?"""
            return self.conf_head[1].weight.device

        def load_weights(self, path):
            # Load onto the CPU first; load_state_dict copies the values into
            # the parameters on whatever device the model already lives on.
            self.load_state_dict(torch.load(path, map_location="cpu"))
            self.eval()

        def _preprocess(self, x):
            """Converts the image pixels to the range [-1, 1]."""
            return x.float() / 127.5 - 1.0

        def predict_on_image(self, img):
            """Makes a prediction on a single image.

            Arguments:
                img: a NumPy array of shape (H, W, 3) or a PyTorch tensor of
                     shape (3, H, W). The image's height and width should be
                     192 pixels.

            Returns:
                A tensor of shape (468, 3) with the (x, y, z) landmarks.
            """
            if isinstance(img, np.ndarray):
                img = torch.from_numpy(img).permute((2, 0, 1))

            landmarks, _ = self.predict_on_batch(img.unsqueeze(0))
            return landmarks[0]

        def predict_on_batch(self, x):
            """Makes a prediction on a batch of images.

            Arguments:
                x: a NumPy array of shape (b, H, W, 3) or a PyTorch tensor of
                   shape (b, 3, H, W). The height and width should be 192 pixels.

            Returns:
                A tuple (landmarks, confidences): landmarks has shape
                (b, 468, 3) and confidences has shape (b, 1).
            """
            if isinstance(x, np.ndarray):
                x = torch.from_numpy(x).permute((0, 3, 1, 2))

            assert x.shape[1] == 3
            assert x.shape[2] == 192
            assert x.shape[3] == 192

            # 1. Preprocess the images into tensors:
            x = x.to(self._device())
            x = self._preprocess(x)

            # 2. Run the neural network:
            with torch.no_grad():
                out = self(x)

            # 3. Postprocess the raw predictions.
            # The original code multiplied detections[0:-1:3] and
            # detections[1:-1:3] by x_scale / y_scale. Those slices index the
            # batch dimension and are empty for a single image, so they are
            # dropped here. demo.py plots the raw x, y values directly on the
            # 192x192 input, i.e. it treats them as input-pixel coordinates.
            detections, confidences = out
            return detections.view(-1, self.num_coords, 3), confidences

demo.py

    import cv2
    import matplotlib.pyplot as plt
    import numpy as np
    import torch

    from facemesh import FaceMesh

    print("PyTorch version:", torch.__version__)
    print("CUDA version:", torch.version.cuda)
    print("cuDNN version:", torch.backends.cudnn.version())

    device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

    net = FaceMesh().to(device)
    net.load_weights("facemesh.pth")

    img = cv2.imread("test.jpg")
    if img is None:
        raise FileNotFoundError("test.jpg")

    # Pad the bottom with up to 210 black rows (specific to this test image).
    img = np.vstack([img, np.zeros_like(img)[:210, :, :]])
    img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)
    img = cv2.resize(img, (192, 192))

    # Move the result to the CPU before converting it to NumPy.
    detections = net.predict_on_image(img).cpu().numpy()
    print(detections.shape)

    # Draw the x, y landmarks on top of the resized input image.
    plt.imshow(img, zorder=1)
    x, y = detections[:, 0], detections[:, 1]
    plt.scatter(x, y, zorder=2, s=1.0)
    # plt.show()
    plt.savefig("file1.png")

    # Export to ONNX; the dummy input must live on the same device as the model.
    torch.onnx.export(
        net,
        (torch.randn(1, 3, 192, 192, device=device),),
        "facemesh.onnx",
        input_names=("image",),
        output_names=("preds", "conf"),
        opset_version=9,
    )

TFLite code (compares the TFLite output with the PyTorch output):

    import cv2
    import numpy as np
    import tensorflow as tf
    import torch

    from facemesh import FaceMesh

    # Versions used by the author: tf.__version__ == '2.2.1',
    # torch.__version__ == '1.5.1'.

    sample_img = cv2.imread("test.jpg")
    # OpenCV loads BGR; convert to RGB as demo.py does. Both models receive
    # the same input, so the comparison is valid either way.
    sample_img = cv2.cvtColor(sample_img, cv2.COLOR_BGR2RGB)
    sample_img_192 = cv2.resize(sample_img, (192, 192))
    # NHWC float32 input scaled to [-1, 1].
    input_data = np.expand_dims(sample_img_192, axis=0).astype(np.float32) / 127.5 - 1.0

    interpreter = tf.lite.Interpreter(model_path="facemesh-lite.f16.tflite")
    interpreter.allocate_tensors()

    net = FaceMesh()
    net.load_weights("facemesh.pth")

    # Get input and output tensors.
    input_details = interpreter.get_input_details()
    output_details = interpreter.get_output_details()
    input_shape = input_details[0]['shape']
    # input_data = np.array(np.random.random_sample(input_shape), dtype=np.float32)

    # TFLite inference
    interpreter.set_tensor(input_details[0]['index'], input_data)
    interpreter.invoke()
    tf_coord_res = interpreter.get_tensor(output_details[0]['index'])

    # PyTorch inference (NHWC -> NCHW)
    with torch.no_grad():
        torch_output_data = net(torch.from_numpy(input_data.transpose(0, 3, 1, 2)))
    torch_coord_res = torch_output_data[0].numpy()

    print(["torch", torch_coord_res[0]])
    print(["tflite", tf_coord_res[0, 0, 0]])
    print("diff %f" % (np.abs(torch_coord_res[0] - tf_coord_res[0, 0, 0]).mean()))

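TFLite model path: https://github.com/google/mediapipe/blob/master/mediapipe/modules/face_landmark/face_landmark.tflite

Experimental results:

The x and y landmark predictions are evaluated with the mean absolute distance (MAD) normalized by the interocular distance (IOD). The z coordinate is not evaluated. This normalization removes the error introduced by differences in face scale.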
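A minimal sketch of this metric is given below. It assumes the mean is taken over per-landmark Euclidean distances in the x, y plane and that the caller supplies the two ground-truth eye centers; the function name `iod_normalized_mad` and its signature are hypothetical and not taken from the paper's evaluation code.

```python
import numpy as np


def iod_normalized_mad(pred_xy, gt_xy, left_eye_center, right_eye_center):
    """Mean absolute distance between predicted and ground-truth 2D
    landmarks, divided by the interocular distance (IOD)."""
    pred_xy = np.asarray(pred_xy, dtype=np.float64)
    gt_xy = np.asarray(gt_xy, dtype=np.float64)
    iod = np.linalg.norm(
        np.asarray(left_eye_center, dtype=np.float64)
        - np.asarray(right_eye_center, dtype=np.float64)
    )
    per_point = np.linalg.norm(pred_xy - gt_xy, axis=1)
    return per_point.mean() / iod


gt = np.array([[0.0, 0.0], [10.0, 0.0], [5.0, 5.0]])
pred = gt + np.array([1.0, 0.0])   # every landmark is off by 1 pixel
error = iod_normalized_mad(pred, gt, gt[0], gt[1])
print(error)
```

Because both the error and the IOD scale with the face size, multiplying all coordinates by the same factor leaves the metric unchanged.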
