day2----- Deploying the k8s component flannel (2)


Summary

Declarative resource management:

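· Declarative resource management relies on resource manifests (yaml/json).

· View a resource manifest:

~]# kubectl get svc nginx-dp -o yaml -n kube-public

· Explain a resource manifest:

~]# kubectl explain service

· Create a resource manifest:

~]# vi /root/nginx-ds-svc.yaml

· Apply a resource manifest:

~]# kubectl apply -f nginx-ds-svc.yaml

· Modify a resource manifest and apply it:

  · online modification

  · offline modification

· Delete a resource manifest:

  · imperative deletion

  · declarative deletion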
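A minimal sketch of the four modify/delete operations listed above, written as a dry-run script: by default it only prints the kubectl commands, and with `DRY_RUN=0` it runs them. The manifest path comes from the list; the Service name `nginx-ds`, the namespace `kube-public`, the `run`/`manifest_op` helpers, and reading "online modification" as `kubectl edit` are assumptions for illustration.

```shell
#!/usr/bin/env bash
# Sketch of the declarative workflow. DRY_RUN=1 (default) only prints commands.
# Assumptions: Service name "nginx-ds" and namespace "kube-public" are hypothetical.
DRY_RUN=${DRY_RUN:-1}
MANIFEST=/root/nginx-ds-svc.yaml
SVC=nginx-ds
NS=kube-public

# Print the command (prefixed with "+") instead of running it when DRY_RUN=1.
run() {
  if [ "$DRY_RUN" = 1 ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Hypothetical helper: one argument selects the operation.
manifest_op() {
  case "$1" in
    edit-online)          # change the live object; applied when the editor is saved and closed
      run kubectl edit svc "$SVC" -n "$NS" ;;
    edit-offline)         # change the file, then re-apply it
      run vi "$MANIFEST"
      run kubectl apply -f "$MANIFEST" ;;
    delete-imperative)    # name the resource on the command line
      run kubectl delete svc "$SVC" -n "$NS" ;;
    delete-declarative)   # delete whatever the manifest describes
      run kubectl delete -f "$MANIFEST" ;;
    *) echo "unknown operation: $1" >&2; return 1 ;;
  esac
}
```

Usage: `manifest_op edit-offline` prints the `vi` and `kubectl apply` steps. The two deletion variants are alternatives, so only one of them is used for a given resource.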

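YAML and JSON manifests can be converted into each other; the same resource can be printed in either format.

For example:

```
[root@hdss7-21 ~]# kubectl get po -ojson nginx-ds-djjjj
```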

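Summary of declarative resource management:

· Declarative resource management manages resources through unified resource manifest files.

· Resources are first defined in a resource manifest and then applied to the K8S cluster with an imperative command.

· Syntax: kubectl create/apply/delete -f /path/to/yaml

· How to learn resource manifests:

. tip1: Read plenty of manifests written by others (especially the official ones) until you can understand them.

. tip2: Be able to take an existing file and adapt it for your own use.

. tip3: When you hit something you don't understand, look it up with kubectl explain ...

. tip4: As a beginner, don't start by writing manifests from scratch on your own.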

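Installing and deploying the flanneld component

---- solving the problem of containers communicating across hosts

Kubernetes defines a network model but leaves its implementation to network plugins. The main job of a CNI network plugin is to let pod resources communicate across hosts.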

Common CNI network plugins:

. Flannel

. Calico

. Canal

. Contiv

. OpenContrail

. NSX-T

. Kube-router

The k8s CNI network plugin: Flannel

Cluster plan

| Hostname | Role | IP |
|---|---|---|
| HDSS7-21.host.com | flannel | 10.4.7.21 |
| HDSS7-22.host.com | flannel | 10.4.7.22 |

Note: the deployment below uses hdss7-21 as the example; hdss7-22 is deployed the same way.

Download the package, extract it, and create a symlink.

Download URL:

```
wget https://github.com/coreos/flannel/releases/download/v0.11.0/flannel-v0.11.0-linux-amd64.tar.gz
```

```
[root@hdss7-21 ~]# cd /opt/src/
[root@hdss7-21 src]# ll
total 442992
-rw-r--r-- 1 root root 9850227 Aug 25 20:21 etcd-v3.1.20-linux-amd64.tar.gz
-rw-r--r-- 1 root root 443770238 Jun 1 20:56 kubernetes-server-linux-amd64-v1.15.2.tar.gz
[root@hdss7-21 src]# rz -E
rz waiting to receive.
[root@hdss7-21 src]# ll
total 452336
-rw-r--r-- 1 root root 9850227 Aug 25 20:21 etcd-v3.1.20-linux-amd64.tar.gz
-rw-r--r-- 1 root root 9565743 Jan 29 2019 flannel-v0.11.0-linux-amd64.tar.gz
-rw-r--r-- 1 root root 443770238 Jun 1 20:56 kubernetes-server-linux-amd64-v1.15.2.tar.gz
[root@hdss7-21 src]# scp flannel-v0.11.0-linux-amd64.tar.gz 10.4.7.22:/opt/src
The authenticity of host '10.4.7.22 (10.4.7.22)' can't be established.
ECDSA key fingerprint is SHA256:BJaB/WmqJlcDzjRp3SPa+r6RgPzgjeL2XraoDJVgWnQ.
ECDSA key fingerprint is MD5:a1:8b:bb:d6:d7:db:b3:b1:a7:f9:a6:2c:0f:8f:01:26.
Are you sure you want to continue connecting (yes/no)? yes
Warning: Permanently added '10.4.7.22' (ECDSA) to the list of known hosts.
root@10.4.7.22's password:
flannel-v0.11.0-linux-amd64.tar.gz 100% 9342KB 93.8MB/s 00:00
[root@hdss7-21 src]# mkdir /opt/flannel-0.11.0
[root@hdss7-21 src]# tar xf flannel-v0.11.0-linux-amd64.tar.gz -C /opt/flannel-0.11.0/
[root@hdss7-21 src]# ln -s /opt/flannel-0.11.0/ /opt/flannel
[root@hdss7-21 src]# cd ..
[root@hdss7-21 opt]# cd flannel
[root@hdss7-21 flannel]# mkdir cert
[root@hdss7-21 flannel]# cd cert/
[root@hdss7-21 cert]# scp hdss7-200:/opt/certs/ca.pem .
ca.pem 100% 1346 929.0KB/s 00:00
[root@hdss7-21 cert]# scp hdss7-200:/opt/certs/client.pem .
client.pem 100% 1363 1.1MB/s 00:00
[root@hdss7-21 cert]# scp hdss7-200:/opt/certs/client-key.pem .
client-key.pem 100% 1675 1.0MB/s 00:00
[root@hdss7-21 cert]# ll
total 12
-rw-r--r-- 1 root root 1346 Aug 28 15:03 ca.pem
-rw------- 1 root root 1675 Aug 28 15:04 client-key.pem
-rw-r--r-- 1 root root 1363 Aug 28 15:03 client.pem
[root@hdss7-21 cert]# cd ..
[root@hdss7-21 flannel]# ls
cert flanneld mk-docker-opts.sh README.md
```
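The unpack-and-link steps above can be collected into one function. This is a sketch: `install_flannel` and its arguments are hypothetical, and the install prefix is a parameter so the function can be tried in a scratch directory instead of `/opt`. The certificates still have to be copied with `scp` as shown.

```shell
#!/usr/bin/env bash
# Sketch: unpack a flannel tarball into <prefix>/flannel-<version>, point
# <prefix>/flannel at it, and create the cert directory.
# install_flannel is a hypothetical helper, not part of the flannel release.
install_flannel() {
  local tarball=$1 version=$2 prefix=${3:-/opt}
  local dest="$prefix/flannel-$version"
  mkdir -p "$dest" || return 1
  tar xf "$tarball" -C "$dest" || return 1
  # -n: replace an existing symlink instead of descending into it (useful on upgrades)
  ln -sfn "$dest" "$prefix/flannel"
  mkdir -p "$prefix/flannel/cert"
}
```

Usage on a host: `install_flannel /opt/src/flannel-v0.11.0-linux-amd64.tar.gz 0.11.0`. Keeping the versioned directory behind a symlink means an upgrade only has to repoint `/opt/flannel`.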

```
[root@hdss7-21 flannel]# vi subnet.env
[root@hdss7-21 flannel]# cat subnet.env
FLANNEL_NETWORK=172.7.0.0/16
FLANNEL_SUBNET=172.7.21.1/24
FLANNEL_MTU=1500
FLANNEL_IPMASQ=false
```
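In this lab each host's pod subnet follows the last octet of its IP (10.4.7.21 gets 172.7.21.0/24), so only `FLANNEL_SUBNET` differs between hosts. A sketch that generates the file from the host IP, assuming that naming convention holds; `gen_subnet_env` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: generate subnet.env for a host. Assumes the lab's convention that
# host 10.4.7.N gets pod subnet 172.7.N.0/24 inside 172.7.0.0/16.
gen_subnet_env() {
  local host_ip=$1
  local octet=${host_ip##*.}   # last octet, e.g. 21 for 10.4.7.21
  cat <<EOF
FLANNEL_NETWORK=172.7.0.0/16
FLANNEL_SUBNET=172.7.${octet}.1/24
FLANNEL_MTU=1500
FLANNEL_IPMASQ=false
EOF
}
```

Usage on hdss7-22: `gen_subnet_env 10.4.7.22 > /opt/flannel/subnet.env`.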

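```
[root@hdss7-21 flannel]# vi flanneld.sh
[root@hdss7-21 flannel]# cat flanneld.sh
#!/bin/sh
./flanneld \
  --public-ip=10.4.7.21 \
  --etcd-endpoints=https://10.4.7.12:2379,https://10.4.7.21:2379,https://10.4.7.22:2379 \
  --etcd-keyfile=./cert/client-key.pem \
  --etcd-certfile=./cert/client.pem \
  --etcd-cafile=./cert/ca.pem \
  --iface=ens33 \
  --subnet-file=./subnet.env \
  --healthz-port=2401
```

Note: on hdss7-22, change `--public-ip` to 10.4.7.22. Keep such notes out of the script itself: a comment placed after the trailing backslash makes the backslash escape the space instead of the newline, which breaks the line continuation.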
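Since `--public-ip` is the only host-specific flag, the script can be generated per host. A sketch, where `gen_flanneld_sh` and the `@PUBLIC_IP@` placeholder are hypothetical and the remaining flags are copied from the script above. The quoted here-document keeps every trailing backslash literal, so each continuation line ends in a bare backslash with nothing after it.

```shell
#!/usr/bin/env bash
# Sketch: print flanneld.sh for one host; only --public-ip changes.
# Flags are copied from the lab's script; the placeholder name is hypothetical.
gen_flanneld_sh() {
  local public_ip=$1
  # 'EOF' is quoted, so backslashes and $ are kept verbatim in the output
  sed "s/@PUBLIC_IP@/${public_ip}/" <<'EOF'
#!/bin/sh
./flanneld \
  --public-ip=@PUBLIC_IP@ \
  --etcd-endpoints=https://10.4.7.12:2379,https://10.4.7.21:2379,https://10.4.7.22:2379 \
  --etcd-keyfile=./cert/client-key.pem \
  --etcd-certfile=./cert/client.pem \
  --etcd-cafile=./cert/ca.pem \
  --iface=ens33 \
  --subnet-file=./subnet.env \
  --healthz-port=2401
EOF
}
```

Usage on hdss7-22: `gen_flanneld_sh 10.4.7.22 > /opt/flannel/flanneld.sh && chmod +x /opt/flannel/flanneld.sh`.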

```
[root@hdss7-21 flannel]# chmod +x flanneld.sh
[root@hdss7-21 flannel]# mkdir -p /data/logs/flanneld
[root@hdss7-21 flannel]# cd /opt/etcd
[root@hdss7-21 etcd]# ls
certs Documentation etcd etcdctl etcd-server-startup.sh README-etcdctl.md README.md READMEv2-etcdctl.md
[root@hdss7-21 etcd]# ./etcdctl member list
988139385f78284: name=etcd-server-7-22 peerURLs=https://10.4.7.22:2380 clientURLs=http://127.0.0.1:2379,https://10.4.7.22:2379 isLeader=false
5a0ef2a004fc4349: name=etcd-server-7-21 peerURLs=https://10.4.7.21:2380 clientURLs=http://127.0.0.1:2379,https://10.4.7.21:2379 isLeader=false
f4a0cb0a765574a8: name=etcd-server-7-12 peerURLs=https://10.4.7.12:2380 clientURLs=http://127.0.0.1:2379,https://10.4.7.12:2379 isLeader=true
```

The `isLeader=true` entry shows that etcd-server-7-12 is currently the leader.
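To pick out the leader without scanning every line, the `member list` output can be filtered with awk. A minimal sketch, assuming the v2-style line format shown above (space-separated `name=...` and `isLeader=...` fields); `etcd_leader` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Print the name of the member whose line contains isLeader=true.
# Input: output of `./etcdctl member list` in the v2 format shown above (assumed).
etcd_leader() {
  awk '/isLeader=true/ {
    for (i = 1; i <= NF; i++)
      if ($i ~ /^name=/) { sub(/^name=/, "", $i); print $i }
  }'
}
```

Usage on an etcd host: `./etcdctl member list | etcd_leader`.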

The next step writes the host-gw network config into etcd. It only needs to be done once: after running it on hdss7-21, skip this step on hdss7-22.

```
[root@hdss7-21 etcd]# ./etcdctl set /coreos.com/network/config '{"Network": "172.7.0.0/16", "Backend": {"Type": "host-gw"}}'
{"Network": "172.7.0.0/16", "Backend": {"Type": "host-gw"}}
[root@hdss7-21 etcd]# ./etcdctl get /coreos.com/network/config
{"Network": "172.7.0.0/16", "Backend": {"Type": "host-gw"}}
```

```
[root@hdss7-21 etcd]# vi /etc/supervisord.d/flannel.ini
[root@hdss7-21 etcd]# cat /etc/supervisord.d/flannel.ini
[program:flanneld-7-21]
command=/opt/flannel/flanneld.sh ; the program (relative uses PATH, can take args)
numprocs=1 ; number of processes copies to start (def 1)
directory=/opt/flannel ; directory to cwd to before exec (def no cwd)
autostart=true ; start at supervisord start (default: true)
autorestart=true ; restart at unexpected quit (default: true)
startsecs=30 ; number of secs prog must stay running (def. 1)
startretries=3 ; max # of serial start failures (default 3)
exitcodes=0,2 ; 'expected' exit codes for process (default 0,2)
stopsignal=QUIT ; signal used to kill process (default TERM)
stopwaitsecs=10 ; max num secs to wait b4 SIGKILL (default 10)
user=root ; setuid to this UNIX account to run the program
redirect_stderr=true ; redirect proc stderr to stdout (default false)
stdout_logfile=/data/logs/flanneld/flanneld.stdout.log ; stdout log path, NONE for none; default AUTO
stdout_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)
stdout_logfile_backups=4 ; # of stdout logfile backups (default 10)
stdout_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)
stdout_events_enabled=false ; emit events on stdout writes (default false)
```

On hdss7-22, change the program name to `[program:flanneld-7-22]`.
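Because only the program name changes between hosts, the ini file can also be generated. A sketch with the same options as above (comments dropped); `gen_flannel_ini` is hypothetical, and the `flanneld-7-<last octet>` name follows this lab's naming convention, not anything required by supervisor.

```shell
#!/usr/bin/env bash
# Sketch: print /etc/supervisord.d/flannel.ini for host 10.4.7.N.
# Assumption: program names follow the lab convention flanneld-7-N.
gen_flannel_ini() {
  local octet=${1##*.}
  cat <<EOF
[program:flanneld-7-${octet}]
command=/opt/flannel/flanneld.sh
numprocs=1
directory=/opt/flannel
autostart=true
autorestart=true
startsecs=30
startretries=3
exitcodes=0,2
stopsignal=QUIT
stopwaitsecs=10
user=root
redirect_stderr=true
stdout_logfile=/data/logs/flanneld/flanneld.stdout.log
stdout_logfile_maxbytes=64MB
stdout_logfile_backups=4
stdout_capture_maxbytes=1MB
stdout_events_enabled=false
EOF
}
```

Usage on hdss7-22: `gen_flannel_ini 10.4.7.22 > /etc/supervisord.d/flannel.ini && supervisorctl update`.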

```
[root@hdss7-21 etcd]# supervisorctl update
flanneld-7-21: added process group
[root@hdss7-21 etcd]# supervisorctl status
etcd-server-7-21 RUNNING pid 9415, uptime 1 day, 19:08:30
flanneld-7-21 RUNNING pid 22210, uptime 0:08:51
kube-apiserver-7-21 RUNNING pid 9425, uptime 1 day, 19:08:30
kube-controller-manager-7-21 RUNNING pid 64928, uptime 4:28:51
kube-kubelet-7-21 RUNNING pid 11202, uptime 1 day, 18:10:17
kube-proxy-7-21 RUNNING pid 31943, uptime 1 day, 16:46:32
kube-scheduler-7-21 RUNNING pid 11975, uptime 0:39:53
[root@hdss7-21 etcd]# kubectl get po -owide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
nginx-ds-djjjj 1/1 Running 0 40h 172.7.21.2 hdss7-21.host.com <none> <none>
nginx-ds-qwxxr 1/1 Running 0 40h 172.7.22.2 hdss7-22.host.com <none> <none>
[root@hdss7-21 etcd]# ping 172.7.22.2
PING 172.7.22.2 (172.7.22.2) 56(84) bytes of data.
64 bytes from 172.7.22.2: icmp_seq=1 ttl=63 time=0.392 ms
64 bytes from 172.7.22.2: icmp_seq=2 ttl=63 time=2.05 ms
^C
--- 172.7.22.2 ping statistics ---
2 packets transmitted, 2 received, 0% packet loss, time 999ms
rtt min/avg/max/mdev = 0.392/1.225/2.058/0.833 ms
[root@hdss7-21 etcd]#
```

If hdss7-22 can likewise ping the pod on hdss7-21 (172.7.21.2), flannel on hdss7-22 is deployed correctly as well.

To view the flanneld log:

```
[root@hdss7-21 etcd]# tail -fn 200 /data/logs/flanneld/flanneld.stdout.log
I0828 15:07:52.121059 22211 main.go:527] Using interface with name ens33 and address 10.4.7.21
I0828 15:07:52.121135 22211 main.go:540] Using 10.4.7.21 as external address
2021-08-28 15:07:52.121566 I | warning: ignoring ServerName for user-provided CA for backwards compatibility is deprecated
I0828 15:07:52.121607 22211 main.go:244] Created subnet manager: Etcd Local Manager with Previous Subnet: 172.7.21.0/24
I0828 15:07:52.121611 22211 main.go:247] Installing signal handlers
I0828 15:07:52.123199 22211 main.go:587] Start healthz server on 0.0.0.0:2401
I0828 15:07:52.137258 22211 main.go:386] Found network config - Backend type: host-gw
I0828 15:07:52.140687 22211 local_manager.go:201] Found previously leased subnet (172.7.21.0/24), reusing
I0828 15:07:52.142881 22211 local_manager.go:220] Allocated lease (172.7.21.0/24) to current node (10.4.7.21)
I0828 15:07:52.143157 22211 main.go:317] Wrote subnet file to ./subnet.env
I0828 15:07:52.143164 22211 main.go:321] Running backend.
```
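In this log, the two lines worth checking are the backend type and the lease allocated to the node. A sketch that extracts just those; the patterns are copied from the v0.11.0 log lines above and may not match other flannel versions, and `flannel_log_check` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: print the backend type and allocated lease from a flanneld log.
# Patterns match the v0.11.0 messages shown above; other versions may differ.
flannel_log_check() {
  local log=${1:-/data/logs/flanneld/flanneld.stdout.log}
  sed -n \
    -e 's/.*Found network config - Backend type: \([^ ]*\).*/backend=\1/p' \
    -e 's/.*Allocated lease (\([^)]*\)) to current node (\([^)]*\)).*/lease=\1 node=\2/p' \
    "$log"
}
```

On hdss7-21 this should print `backend=host-gw` and `lease=172.7.21.0/24 node=10.4.7.21`; after a restart, earlier runs in the same log file also appear.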

How flanneld works

The routing table below shows that any traffic for the 172.7.22.0/24 subnet goes through the gateway 10.4.7.22:

```
[root@hdss7-21 etcd]# route -n
Kernel IP routing table
Destination Gateway Genmask Flags Metric Ref Use Iface
0.0.0.0 10.4.7.2 0.0.0.0 UG 100 0 0 ens33
10.4.7.0 0.0.0.0 255.255.255.0 U 100 0 0 ens33
172.7.21.0 0.0.0.0 255.255.255.0 U 0 0 0 docker0
172.7.22.0 10.4.7.22 255.255.255.0 UG 0 0 0 ens33
192.168.122.0 0.0.0.0 255.255.255.0 U 0 0 0 virbr0
```

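In other words, flannel adds a static route on each host, so that 10.4.7.22 acts as the gateway through which 172.7.21.0/24 reaches 172.7.22.0/24.

It is equivalent to running this command:

```
[root@hdss7-21 etcd]# route add -net 172.7.22.0/24 gw 10.4.7.22 dev ens33
```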
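Generalizing this, host-gw mode amounts to one route per peer on every node: the peer's pod subnet via the peer's host IP. A sketch that prints those commands for one node, assuming this lab's convention that node 10.4.7.N owns 172.7.N.0/24 and that the interface is ens33. flanneld installs these routes itself, so this only illustrates what it does; `hostgw_routes` is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: print the static routes host-gw mode is equivalent to on node $1,
# given all node IPs. Assumes node 10.4.7.N owns pod subnet 172.7.N.0/24.
hostgw_routes() {
  local self=$1; shift
  local dev=${DEV:-ens33} peer
  for peer in "$@"; do
    [ "$peer" = "$self" ] && continue   # own subnet is local, on docker0
    echo "route add -net 172.7.${peer##*.}.0/24 gw ${peer} dev ${dev}"
  done
}
```

For example, `hostgw_routes 10.4.7.21 10.4.7.21 10.4.7.22` prints exactly the command above. With N nodes, each node ends up with N-1 such routes.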

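Note that flannel's host-gw mode has a prerequisite: all hosts must be on the same layer-2 network, i.e. behind the same gateway (on this network, the default gateway in the routing table above is 10.4.7.2).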

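Next, a look at flannel's VxLAN model.

It is meant for hosts on two different layer-2 networks, for example behind gateways 10.4.7.1 and 10.5.7.1 as in the figure, that are connected through a router.

The VxLAN model creates a virtual flannel device on each host (flannel.1 by default) and opens a flannel tunnel between them.

If you are interested, you can try this yourself; I won't run it here, but the procedure is as follows.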

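First, stop the flannel network. The steps are almost identical on both hosts.

```
[root@hdss7-21 etcd]# supervisorctl stop flanneld-7-21
```

Find the flanneld process and kill it:

```
[root@hdss7-21 etcd]# ps aux |grep flannel
[root@hdss7-21 etcd]# kill -9 165523
```

After killing it, check whether the other host's pods are still reachable. If they are, delete the route as well:

```
[root@hdss7-21 etcd]# route del -net 172.7.22.0/24 gw 10.4.7.22
```

The host can now no longer ping the pod addresses on the other host.

Next, remove the network config that was written to etcd:

```
./etcdctl rm /coreos.com/network/config
```

Then write the new VxLAN config:

```
./etcdctl set /coreos.com/network/config '{"Network": "172.7.0.0/16", "Backend": {"Type": "VxLAN"}}'
```

Query the key again; the backend should now be VxLAN.

Then start flanneld again and ping; the other host's pods should be reachable again.

Finally, look at the routing table again.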

In practice, production hosts are rarely spread across different layer-2 networks,

so host-gw is the model chosen most of the time.

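There is also a direct-routing model.

VxLAN model:

'{"Network": "172.7.0.0/16", "Backend": {"Type": "VxLAN"}}'

Direct routing, a mix of host-gw and VxLAN: flannel automatically determines whether two hosts are on the same layer-2 network and picks the mode to use accordingly:

'{"Network": "172.7.0.0/16", "Backend": {"Type": "VxLAN", "DirectRouting": true}}'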
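The three backend configurations differ only in the `Backend` object. A sketch that prints each value in the form `etcdctl set` expects; the 172.7.0.0/16 network and the `/coreos.com/network/config` key come from above, while `flannel_backend_json` and its mode names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: print the flannel network config for one backend mode.
# Mode names (host-gw / vxlan / direct) are this helper's own labels.
flannel_backend_json() {
  local net=${NET:-172.7.0.0/16}
  case "$1" in
    host-gw) printf '{"Network": "%s", "Backend": {"Type": "host-gw"}}\n' "$net" ;;
    vxlan)   printf '{"Network": "%s", "Backend": {"Type": "VxLAN"}}\n' "$net" ;;
    direct)  printf '{"Network": "%s", "Backend": {"Type": "VxLAN", "DirectRouting": true}}\n' "$net" ;;
    *) echo "unknown backend: $1" >&2; return 1 ;;
  esac
}
```

Usage, once on one etcd member: `./etcdctl set /coreos.com/network/config "$(flannel_backend_json direct)"`. Double-quoting the command substitution passes the JSON as a single argument.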

For a more detailed explanation of flannel, I recommend this blog post:

https://www.cnblogs.com/kevingrace/p/6859114.html

Optimizing flannel's SNAT rules

First, re-apply the nginx-ds DaemonSet (here with the nginx:curl image) and recreate its pods.

```
[root@hdss7-21 ~]# vi nginx-ds.yaml
[root@hdss7-21 ~]# cat nginx-ds.yaml
apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: nginx-ds
spec:
  template:
    metadata:
      labels:
        app: nginx-ds
    spec:
      containers:
      - name: my-nginx
        image: harbor.od.com/public/nginx:curl
        ports:
        - containerPort: 80
[root@hdss7-21 ~]# kubectl apply -f nginx-ds.yaml
daemonset.extensions/nginx-ds configured
[root@hdss7-21 ~]# kubectl get po
NAME READY STATUS RESTARTS AGE
nginx-ds-djjjj 1/1 Running 0 41h
nginx-ds-qwxxr 1/1 Running 0 41h
[root@hdss7-21 ~]# kubectl delete po nginx-ds-djjjj nginx-ds-qwxxr
pod "nginx-ds-djjjj" deleted
pod "nginx-ds-qwxxr" deleted
[root@hdss7-21 ~]# kubectl get po -owide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
nginx-ds-bpcs2 1/1 Running 0 10s 172.7.22.2 hdss7-22.host.com <none> <none>
nginx-ds-d68sl 1/1 Running 0 18s 172.7.21.2 hdss7-21.host.com <none>
```

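Then exec into a pod and work from there.

One open question here: installing net-tools inside the container kept failing.

I haven't solved it; if anyone knows the fix, please let me know. The error was as follows (the message is a name-resolution failure for mirrors.163.com):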

```
[root@hdss7-21 ~]# kubectl exec -it nginx-ds-pbql6 bash
root@nginx-ds-pbql6:/# apt-get install net-tools -y
Reading package lists... Done
Building dependency tree
Reading state information... Done
The following NEW packages will be installed:
net-tools
0 upgraded, 1 newly installed, 0 to remove and 1 not upgraded.
Need to get 225 kB of archives.
After this operation, 803 kB of additional disk space will be used.
Err:1 http://mirrors.163.com/debian jessie/main amd64 net-tools amd64 1.60-26+b1
Temporary failure resolving 'mirrors.163.com'
E: Failed to fetch http://mirrors.163.com/debian/pool/main/n/net-tools/net-tools_1.60-26+b1_amd64.deb Temporary failure resolving 'mirrors.163.com'
E: Unable to fetch some archives, maybe run apt-get update or try with --fix-missing?
root@nginx-ds-pbql6:/# ^C
```

Next, from inside a pod, curl the pod on the other host. If the request succeeds, cross-host pod networking is working.

```
[root@hdss7-21 ~]# kubectl get po -owide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
nginx-ds-m987l 1/1 Running 0 33m 172.7.22.2 hdss7-22.host.com <none> <none>
nginx-ds-pbql6 1/1 Running 0 40m 172.7.21.2 hdss7-21.host.com <none> <none>
[root@hdss7-21 ~]# kubectl exec -it nginx-ds-pbql6 bash
root@nginx-ds-pbql6:/# curl 172.7.22.2
<!DOCTYPE html>
<html>
<head>
<title>Welcome to nginx!</title>
<style>
body {
width: 35em;
margin: 0 auto;
font-family: Tahoma, Verdana, Arial, sans-serif;
}
</style>
</head>
<body>
<h1>Welcome to nginx!</h1>
<p>If you see this page, the nginx web server is successfully installed and
working. Further configuration is required.</p>
<p>For online documentation and support please refer to
<a href="http://nginx.org/">nginx.org</a>.<br/>
Commercial support is available at
<a href="http://nginx.com/">nginx.com</a>.</p>
<p><em>Thank you for using nginx.</em></p>
</body>
</html>
root@nginx-ds-pbql6:/# exit
exit
[root@hdss7-21 ~]#
```

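However, checking on hdss7-22 shows that the requests arrive from the physical host's address (10.4.7.21) rather than from the pod's address, which is not what we want.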

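Optimizing the rules

Optimize the iptables rules on each compute node.

Note: the iptables rules differ slightly on each host.

Optimize the SNAT rule so that pod-to-pod traffic between compute nodes is no longer source-NATed to the host address.

On host 10.4.7.21: SNAT is applied only when the source is the docker subnet 172.7.21.0/24, the destination is not in 172.7.0.0/16, and the packet does not leave through the docker0 bridge.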
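Because only the source subnet differs per host, the delete/insert pair can be generated from the host IP. A sketch assuming the same 10.4.7.N to 172.7.N.0/24 convention; it only prints the commands so they can be reviewed before running them as root, and `snat_fix_cmds` is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: print the iptables commands that replace Docker's default
# MASQUERADE rule with one that skips pod-to-pod (172.7.0.0/16) traffic.
# Assumes node 10.4.7.N owns pod subnet 172.7.N.0/24.
snat_fix_cmds() {
  local subnet="172.7.${1##*.}.0/24" cluster=${CLUSTER_NET:-172.7.0.0/16}
  # remove the rule that masquerades everything not leaving via docker0
  echo "iptables -t nat -D POSTROUTING -s ${subnet} ! -o docker0 -j MASQUERADE"
  # insert at the top a rule that additionally requires a non-cluster destination
  echo "iptables -t nat -I POSTROUTING -s ${subnet} ! -d ${cluster} ! -o docker0 -j MASQUERADE"
}
```

Usage on hdss7-22: review `snat_fix_cmds 10.4.7.22`, run it with `snat_fix_cmds 10.4.7.22 | sh`, then persist with `iptables-save > /etc/sysconfig/iptables`.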

```
[root@hdss7-21 ~]# iptables-save |grep -i postrouting
:POSTROUTING ACCEPT [479723:115671061]
-A POSTROUTING -o virbr0 -p udp -m udp --dport 68 -j CHECKSUM --checksum-fill
:POSTROUTING ACCEPT [0:0]
:KUBE-POSTROUTING - [0:0]
-A POSTROUTING -m comment --comment "kubernetes postrouting rules" -j KUBE-POSTROUTING
-A POSTROUTING -s 172.7.21.0/24 ! -o docker0 -j MASQUERADE
-A POSTROUTING -s 192.168.122.0/24 -d 224.0.0.0/24 -j RETURN
-A POSTROUTING -s 192.168.122.0/24 -d 255.255.255.255/32 -j RETURN
-A POSTROUTING -s 192.168.122.0/24 ! -d 192.168.122.0/24 -p tcp -j MASQUERADE --to-ports 1024-65535
-A POSTROUTING -s 192.168.122.0/24 ! -d 192.168.122.0/24 -p udp -j MASQUERADE --to-ports 1024-65535
-A POSTROUTING -s 192.168.122.0/24 ! -d 192.168.122.0/24 -j MASQUERADE
-A KUBE-POSTROUTING -m comment --comment "kubernetes service traffic requiring SNAT" -m mark --mark 0x4000/0x4000 -j MASQUERADE
[root@hdss7-21 ~]# yum -y install iptables-services
[root@hdss7-21 ~]# systemctl start iptables
[root@hdss7-21 ~]# systemctl enable iptables
Created symlink from /etc/systemd/system/basic.target.wants/iptables.service to /usr/lib/systemd/system/iptables.service.
[root@hdss7-21 ~]# iptables -t nat -D POSTROUTING -s 172.7.21.0/24 ! -o docker0 -j MASQUERADE
[root@hdss7-21 ~]# iptables -t nat -I POSTROUTING -s 172.7.21.0/24 ! -d 172.7.0.0/16 ! -o docker0 -j MASQUERADE
[root@hdss7-21 ~]# iptables-save |grep -i postrouting
:POSTROUTING ACCEPT [508765:118801421]
-A POSTROUTING -o virbr0 -p udp -m udp --dport 68 -j CHECKSUM --checksum-fill
:POSTROUTING ACCEPT [0:0]
:KUBE-POSTROUTING - [0:0]
-A POSTROUTING -s 172.7.21.0/24 ! -d 172.7.0.0/16 ! -o docker0 -j MASQUERADE
-A POSTROUTING -m comment --comment "kubernetes postrouting rules" -j KUBE-POSTROUTING
-A POSTROUTING -s 192.168.122.0/24 -d 224.0.0.0/24 -j RETURN
-A POSTROUTING -s 192.168.122.0/24 -d 255.255.255.255/32 -j RETURN
-A POSTROUTING -s 192.168.122.0/24 ! -d 192.168.122.0/24 -p tcp -j MASQUERADE --to-ports 1024-65535
-A POSTROUTING -s 192.168.122.0/24 ! -d 192.168.122.0/24 -p udp -j MASQUERADE --to-ports 1024-65535
-A POSTROUTING -s 192.168.122.0/24 ! -d 192.168.122.0/24 -j MASQUERADE
-A KUBE-POSTROUTING -m comment --comment "kubernetes service traffic requiring SNAT" -m mark --mark 0x4000/0x4000 -j MASQUERADE
```

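Save the rules, then delete the REJECT rule in the FORWARD chain (which would otherwise reject forwarded traffic) and save again:

```
[root@hdss7-21 ~]# iptables-save > /etc/sysconfig/iptables
[root@hdss7-21 ~]# iptables -t filter -D FORWARD -j REJECT --reject-with icmp-host-prohibited
[root@hdss7-21 ~]#
[root@hdss7-21 ~]# iptables-save > /etc/sysconfig/iptables
```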

Configure hdss7-22 in the same way:

```
[root@hdss7-22 flannel]# systemctl start iptables
[root@hdss7-22 flannel]# systemctl enable iptables
Created symlink from /etc/systemd/system/basic.target.wants/iptables.service to /usr/lib/systeice.
[root@hdss7-22 flannel]# iptables-save |grep -i reject
-A INPUT -j REJECT --reject-with icmp-host-prohibited
-A FORWARD -j REJECT --reject-with icmp-host-prohibited
[root@hdss7-22 flannel]# iptables -t filter -D INPUT -j REJECT --reject-with icmp-host-prohibited
[root@hdss7-22 flannel]# iptables-save |grep -i postrouting
:POSTROUTING ACCEPT [1481386:220648057]
-A POSTROUTING -o virbr0 -p udp -m udp --dport 68 -j CHECKSUM --checksum-fill
:POSTROUTING ACCEPT [62:3726]
:KUBE-POSTROUTING - [0:0]
-A POSTROUTING -m comment --comment "kubernetes postrouting rules" -j KUBE-POSTROUTING
-A POSTROUTING -s 172.7.22.0/24 ! -o docker0 -j MASQUERADE
-A POSTROUTING -s 192.168.122.0/24 -d 224.0.0.0/24 -j RETURN
-A POSTROUTING -s 192.168.122.0/24 -d 255.255.255.255/32 -j RETURN
-A POSTROUTING -s 192.168.122.0/24 ! -d 192.168.122.0/24 -p tcp -j MASQUERADE --to-ports 1024-65535
-A POSTROUTING -s 192.168.122.0/24 ! -d 192.168.122.0/24 -p udp -j MASQUERADE --to-ports 1024-65535
-A POSTROUTING -s 192.168.122.0/24 ! -d 192.168.122.0/24 -j MASQUERADE
-A KUBE-POSTROUTING -m comment --comment "kubernetes service traffic requiring SNAT" -m mark --mark 0x4000/0x4000 -j MASQUERADE
[root@hdss7-22 flannel]# iptables -t nat -D POSTROUTING -s 172.7.22.0/24 ! -o docker0 -j MASQUERADE
[root@hdss7-22 flannel]# iptables -t nat -I POSTROUTING -s 172.7.22.0/24 ! -d 172.7.0.0/16 ! -o docker0 -j MASQUERADE
[root@hdss7-22 flannel]# iptables-save |grep -i postrouting
:POSTROUTING ACCEPT [1494952:222838309]
-A POSTROUTING -o virbr0 -p udp -m udp --dport 68 -j CHECKSUM --checksum-fill
:POSTROUTING ACCEPT [5:303]
:KUBE-POSTROUTING - [0:0]
-A POSTROUTING -s 172.7.22.0/24 ! -d 172.7.0.0/16 ! -o docker0 -j MASQUERADE
-A POSTROUTING -m comment --comment "kubernetes postrouting rules" -j KUBE-POSTROUTING
-A POSTROUTING -s 192.168.122.0/24 -d 224.0.0.0/24 -j RETURN
-A POSTROUTING -s 192.168.122.0/24 -d 255.255.255.255/32 -j RETURN
-A POSTROUTING -s 192.168.122.0/24 ! -d 192.168.122.0/24 -p tcp -j MASQUERADE --to-ports 1024-65535
-A POSTROUTING -s 192.168.122.0/24 ! -d 192.168.122.0/24 -p udp -j MASQUERADE --to-ports 1024-65535
-A POSTROUTING -s 192.168.122.0/24 ! -d 192.168.122.0/24 -j MASQUERADE
-A KUBE-POSTROUTING -m comment --comment "kubernetes service traffic requiring SNAT" -m mark --mark 0x4000/0x4000 -j MASQUERADE
[root@hdss7-22 flannel]# iptables-save > /etc/sysconfig/iptables
[root@hdss7-22 flannel]# iptables -t filter -D FORWARD -j REJECT --reject-with icmp-host-prohibited
[root@hdss7-22 flannel]# iptables-save |grep -i reject
[root@hdss7-22 flannel]# iptables-save > /etc/sysconfig/iptables
```

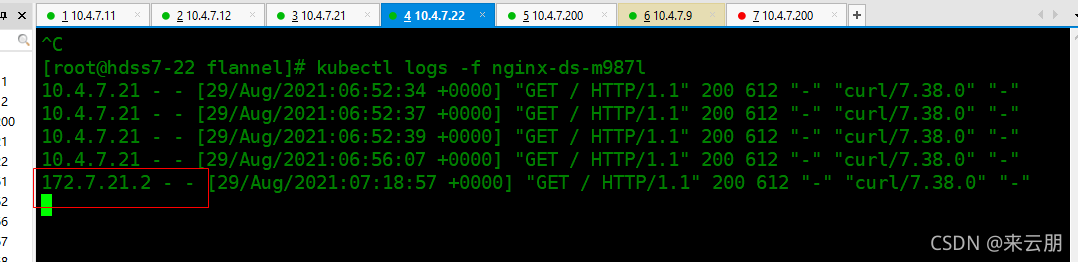

```
[root@hdss7-22 flannel]# kubectl get po -owide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
nginx-ds-m987l 1/1 Running 0 56m 172.7.22.2 hdss7-22.host.com <none> <none>
nginx-ds-pbql6 1/1 Running 0 64m 172.7.21.2 hdss7-21.host.com <none> <none>
[root@hdss7-22 flannel]# kubectl logs -f nginx-ds-m987l
10.4.7.21 - - [29/Aug/2021:06:52:34 +0000] "GET / HTTP/1.1" 200 612 "-" "curl/7.38.0" "-"
10.4.7.21 - - [29/Aug/2021:06:52:37 +0000] "GET / HTTP/1.1" 200 612 "-" "curl/7.38.0" "-"
10.4.7.21 - - [29/Aug/2021:06:52:39 +0000] "GET / HTTP/1.1" 200 612 "-" "curl/7.38.0" "-"
10.4.7.21 - - [29/Aug/2021:06:56:07 +0000] "GET / HTTP/1.1" 200 612 "-" "curl/7.38.0" "-"
172.7.21.2 - - [29/Aug/2021:07:18:57 +0000] "GET / HTTP/1.1" 200 612 "-" "curl/7.38.0" "-"
^C
[root@hdss7-22 flannel]#
```

The last request was made with curl after the rules were changed: its client address is now the pod IP 172.7.21.2 instead of the host IP 10.4.7.21.
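A quick way to confirm the change is to count client addresses in the nginx access log: after the fix, requests from the other host's pod should show a 172.7.x.x pod IP rather than the 10.4.7.x node IP. A sketch assuming nginx's default log format, where the client address is the first field; `client_ip_counts` is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: count requests per client address in nginx access-log lines read
# from stdin. Assumes the default log format (client address = first field).
client_ip_counts() {
  awk '{ n[$1]++ } END { for (ip in n) print ip, n[ip] }' | sort
}
```

Usage: `kubectl logs nginx-ds-m987l | client_ip_counts`. For the log above this reports 4 requests from 10.4.7.21 (before the change) and 1 from 172.7.21.2 (after it).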


The point of this optimization is that pods see each other's real pod IP addresses.
