Kafka brokers go down when storage is completely full

I tried producing as many messages as the brokers could handle. Once the storage (8GB) was completely full, all brokers stopped, and now they can't start up again. They fail with the error below.
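The usual safeguard against this is size-based retention. `log.retention.bytes` (broker default) or `retention.bytes` (per topic) caps the log of each partition, but it defaults to -1 (no limit), so out of the box only time-based retention applies (`log.retention.hours`, 168 hours by default). Kafka deletes whole segments, so a partition can grow to roughly `retention.bytes` plus one segment before anything is removed, and deletion only runs every `log.retention.check.interval.ms`. The sketch below turns that into a per-partition budget. It is a rough model, not a Kafka API: the class and method names, the 20% headroom, and reading "8GB" as 8 GiB are assumptions for illustration, and it budgets for a single data topic such as `perf-test4` while ignoring the internal topics.

```java
/**
 * Hypothetical helper: rough per-partition retention.bytes budget for one topic
 * on one broker. Simplified assumption: because retention deletes whole segments,
 * a partition can hold about retention.bytes + one segment at worst.
 */
public final class RetentionBudget {

    private RetentionBudget() {}

    /**
     * @param diskBytes          capacity of the disk holding the log directory
     * @param headroomPercent    share of the disk kept free (other topics, time between
     *                           retention checks, etc.), 0..99; the value is an assumption
     * @param partitionsOnBroker partitions of this topic stored on the broker
     *                           (leader and follower replicas both use disk)
     * @param segmentBytes       log.segment.bytes / segment.bytes for the topic
     * @return suggested retention.bytes per partition, or -1 if the budget cannot
     *         even absorb one extra segment per partition
     */
    public static long retentionBytesPerPartition(long diskBytes, int headroomPercent,
                                                  int partitionsOnBroker, long segmentBytes) {
        if (headroomPercent < 0 || headroomPercent >= 100 || partitionsOnBroker <= 0
                || diskBytes <= 0 || segmentBytes <= 0) {
            throw new IllegalArgumentException("invalid arguments");
        }
        // Bytes we allow the topic to use on this disk.
        long usable = Math.multiplyExact(diskBytes, (long) (100 - headroomPercent)) / 100;
        long perPartition = usable / partitionsOnBroker;
        // Reserve one segment per partition for the "retention.bytes + one segment" overshoot.
        long retention = perPartition - segmentBytes;
        return retention > 0 ? retention : -1;
    }

    public static void main(String[] args) {
        long disk = 8L << 30;     // 8 GiB (assumed reading of "8GB")
        long segment = 1L << 30;  // 1 GiB, Kafka's default log.segment.bytes
        System.out.println(retentionBytesPerPartition(disk, 20, 1, segment));
    }
}
```

For a single partition this prints 5798205849 (about 5.4 GiB). With 8 partitions and 1 GiB segments the method returns -1, which suggests lowering `segment.bytes` for the topic as well.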

Logs from the brokers as they try to restart:

[2020-04-28 04:34:05,774] INFO [ThrottledChannelReaper-Request]: Stopped (kafka.server.ClientQuotaManager$ThrottledChannelReaper)

[2020-04-28 04:34:05,774] INFO [ThrottledChannelReaper-Request]: Shutdown completed (kafka.server.ClientQuotaManager$ThrottledChannelReaper)

[2020-04-28 04:34:05,842] INFO [KafkaServer id=1] shut down completed (kafka.server.KafkaServer)

[2020-04-28 04:34:05,844] INFO Shutting down SupportedServerStartable (io.confluent.support.metrics.SupportedServerStartable)

[2020-04-28 04:33:58,847] INFO [ThrottledChannelReaper-Produce]: Stopped (kafka.server.ClientQuotaManager$ThrottledChannelReaper)

[2020-04-28 04:34:05,844] INFO Closing BaseMetricsReporter (io.confluent.support.metrics.BaseMetricsReporter)

[2020-04-28 04:34:05,844] INFO Waiting for metrics thread to exit (io.confluent.support.metrics.SupportedServerStartable)

[2020-04-28 04:34:05,844] INFO Shutting down KafkaServer (io.confluent.support.metrics.SupportedServerStartable)

[2020-04-28 04:34:05,845] INFO [KafkaServer id=1] shutting down (kafka.server.KafkaServer)

[2020-04-28 04:33:58,847] INFO [ThrottledChannelReaper-Request]: Shutting down (kafka.server.ClientQuotaManager$ThrottledChannelReaper)

[2020-04-28 04:33:59,847] INFO [ThrottledChannelReaper-Request]: Shutdown completed (kafka.server.ClientQuotaManager$ThrottledChannelReaper)

[2020-04-28 04:33:59,847] INFO [ThrottledChannelReaper-Request]: Stopped (kafka.server.ClientQuotaManager$ThrottledChannelReaper)

[2020-04-28 04:33:59,854] INFO [KafkaServer id=0] shut down completed (kafka.server.KafkaServer)

[2020-04-28 04:33:59,942] INFO Shutting down SupportedServerStartable (io.confluent.support.metrics.SupportedServerStartable)

[2020-04-28 04:33:59,942] INFO Closing BaseMetricsReporter (io.confluent.support.metrics.BaseMetricsReporter)

[2020-04-28 04:33:59,942] INFO Waiting for metrics thread to exit (io.confluent.support.metrics.SupportedServerStartable)

[2020-04-28 04:33:59,942] INFO Shutting down KafkaServer (io.confluent.support.metrics.SupportedServerStartable)

[2020-04-28 04:33:59,942] INFO [KafkaServer id=0] shutting down (kafka.server.KafkaServer)

at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.access$301(ScheduledThreadPoolExecutor.java:180)

at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(ScheduledThreadPoolExecutor.java:294)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

[2020-04-28 04:37:18,938] INFO [ReplicaAlterLogDirsManager on broker 2] Removed fetcher for partitions Set(__consumer_offsets-22, kafka-connect-offset-15, kafka-connect-offset-16, kafka-connect-offset-2, __consumer_offsets-30, kafka-connect-offset-7, kafka-connect-offset-13, __consumer_offsets-8, __consumer_offsets-21, kafka-connect-offset-8, kafka-connect-status-2, kafka-connect-offset-5, __consumer_offsets-4, __consumer_offsets-27, __consumer_offsets-7, __consumer_offsets-9, __consumer_offsets-46, kafka-connect-offset-19, __consumer_offsets-25, __consumer_offsets-35, __consumer_offsets-41, __consumer_offsets-33, __consumer_offsets-23, __consumer_offsets-49, kafka-connect-offset-20, kafka-connect-offset-3, __consumer_offsets-47, __consumer_offsets-16, __consumer_offsets-28, kafka-connect-config-0, kafka-connect-offset-9, kafka-connect-offset-17, __consumer_offsets-31, __consumer_offsets-36, kafka-connect-status-1, __consumer_offsets-42, __consumer_offsets-3, __consumer_offsets-18, __consumer_offsets-37, __consumer_offsets-15, __consumer_offsets-24, kafka-connect-offset-10, kafka-connect-offset-24, kafka-connect-status-4, __consumer_offsets-38, __consumer_offsets-17, __consumer_offsets-48, kafka-connect-offset-23, kafka-connect-offset-21, kafka-connect-offset-0, __consumer_offsets-19, __consumer_offsets-11, kafka-connect-status-0, __consumer_offsets-13, kafka-connect-offset-18, __consumer_offsets-2, __consumer_offsets-43, __consumer_offsets-6, __consumer_offsets-14, kafka-connect-offset-14, kafka-connect-offset-22, kafka-connect-offset-6, perf-test4-0, kafka-connect-status-3, kafka-connect-offset-11, kafka-connect-offset-12, __consumer_offsets-20, __consumer_offsets-0, kafka-connect-offset-4, __consumer_offsets-44, __consumer_offsets-39, kafka-connect-offset-1, __consumer_offsets-12, __consumer_offsets-45, __consumer_offsets-1, __consumer_offsets-5, __consumer_offsets-26, __consumer_offsets-29, __consumer_offsets-34, __consumer_offsets-10, __consumer_offsets-32, __consumer_offsets-40) (kafka.server.ReplicaAlterLogDirsManager)

[2020-04-28 04:37:18,990] INFO [ReplicaManager broker=2] Broker 2 stopped fetcher for partitions __consumer_offsets-22,kafka-connect-offset-15,kafka-connect-offset-16,kafka-connect-offset-2,__consumer_offsets-30,kafka-connect-offset-7,kafka-connect-offset-13,__consumer_offsets-8,__consumer_offsets-21,kafka-connect-offset-8,kafka-connect-status-2,kafka-connect-offset-5,__consumer_offsets-4,__consumer_offsets-27,__consumer_offsets-7,__consumer_offsets-9,__consumer_offsets-46,kafka-connect-offset-19,__consumer_offsets-25,__consumer_offsets-35,__consumer_offsets-41,__consumer_offsets-33,__consumer_offsets-23,__consumer_offsets-49,kafka-connect-offset-20,kafka-connect-offset-3,__consumer_offsets-47,__consumer_offsets-16,__consumer_offsets-28,kafka-connect-config-0,kafka-connect-offset-9,kafka-connect-offset-17,__consumer_offsets-31,__consumer_offsets-36,kafka-connect-status-1,__consumer_offsets-42,__consumer_offsets-3,__consumer_offsets-18,__consumer_offsets-37,__consumer_offsets-15,__consumer_offsets-24,kafka-connect-offset-10,kafka-connect-offset-24,kafka-connect-status-4,__consumer_offsets-38,__consumer_offsets-17,__consumer_offsets-48,kafka-connect-offset-23,kafka-connect-offset-21,kafka-connect-offset-0,__consumer_offsets-19,__consumer_offsets-11,kafka-connect-status-0,__consumer_offsets-13,kafka-connect-offset-18,__consumer_offsets-2,__consumer_offsets-43,__consumer_offsets-6,__consumer_offsets-14,kafka-connect-offset-14,kafka-connect-offset-22,kafka-connect-offset-6,perf-test4-0,kafka-connect-status-3,kafka-connect-offset-11,kafka-connect-offset-12,__consumer_offsets-20,__consumer_offsets-0,kafka-connect-offset-4,__consumer_offsets-44,__consumer_offsets-39,kafka-connect-offset-1,__consumer_offsets-12,__consumer_offsets-45,__consumer_offsets-1,__consumer_offsets-5,__consumer_offsets-26,__consumer_offsets-29,__consumer_offsets-34,__consumer_offsets-10,__consumer_offsets-32,__consumer_offsets-40 and stopped moving logs for partitions because they are in the failed log directory /opt/kafka/data/logs. (kafka.server.ReplicaManager)

[2020-04-28 04:37:18,990] INFO Stopping serving logs in dir /opt/kafka/data/logs (kafka.log.LogManager)

[2020-04-28 04:37:18,992] WARN [Producer clientId=producer-1] 1 partitions have leader brokers without a matching listener, including [__confluent.support.metrics-0] (org.apache.kafka.clients.NetworkClient)

[2020-04-28 04:37:18,996] ERROR Shutdown broker because all log dirs in /opt/kafka/data/logs have failed (kafka.log.LogManager)
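The last line shows what happened: each broker has a single log directory, `/opt/kafka/data/logs`, and once Kafka marks that directory as failed there is no online log directory left, so the broker shuts itself down. A restart presumably hits the same full disk. Retention settings are the real fix, but an external free-space check can raise a warning well before that point. The sketch below uses the JDK's `java.nio.file.FileStore`; the class name and the 10% threshold are illustrative assumptions, not anything Kafka provides.

```java
import java.io.IOException;
import java.nio.file.FileStore;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

/** Hypothetical watchdog: flags a Kafka log directory whose disk is nearly full. */
public final class LogDirSpaceCheck {

    private LogDirSpaceCheck() {}

    /** True when usable space is below minFreePercent of the total (integer math, no rounding issues). */
    public static boolean isLow(long usableBytes, long totalBytes, int minFreePercent) {
        if (totalBytes <= 0 || minFreePercent < 0 || minFreePercent > 100) {
            throw new IllegalArgumentException("invalid arguments");
        }
        // usable / total < minFree / 100  <=>  usable * 100 < total * minFree
        return usableBytes * 100 < totalBytes * minFreePercent;
    }

    /** Reads the file store that backs the given directory. */
    public static boolean isLow(Path logDir, int minFreePercent) throws IOException {
        FileStore store = Files.getFileStore(logDir);
        return isLow(store.getUsableSpace(), store.getTotalSpace(), minFreePercent);
    }

    public static void main(String[] args) throws IOException {
        // Default is the log dir from the broker log; pass another path as the first argument.
        Path dir = Paths.get(args.length > 0 ? args[0] : "/opt/kafka/data/logs");
        FileStore store = Files.getFileStore(dir);
        long usable = store.getUsableSpace();
        long total = store.getTotalSpace();
        System.out.printf("%s: %d of %d bytes free, low=%b%n", dir, usable, total, isLow(usable, total, 10));
    }
}
```

Run periodically on each broker host (cron, a sidecar, or similar), this gives time to shrink retention or add disk before the log directory fails.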

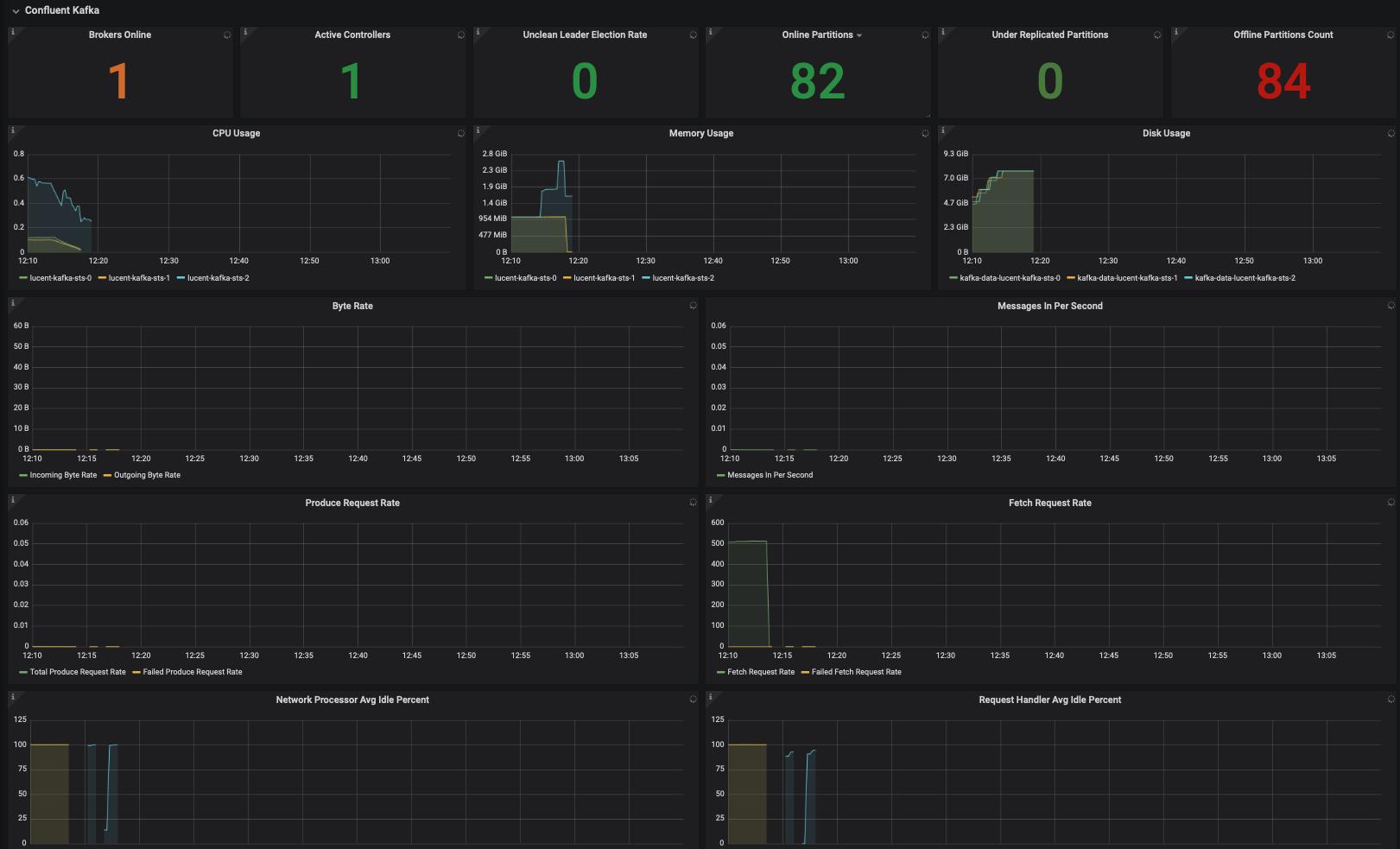

Prometheus monitoring snapshot
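Since the brokers are already monitored with Prometheus, an alert on the predicted time until the disk is full can fire before the broker dies. In PromQL that is usually done with `predict_linear()`; assuming node_exporter runs on the broker hosts, something along the lines of `predict_linear(node_filesystem_avail_bytes[1h], 4 * 3600) < 0` (label filters for the Kafka mount point omitted). The Java sketch below shows the same idea with just two samples; `predict_linear()` itself fits a least-squares line over the whole range, so this two-point version is a simplification, and the class name is hypothetical.

```java
/** Hypothetical helper: linear time-to-full estimate from two free-space samples. */
public final class TimeToFull {

    private TimeToFull() {}

    /**
     * @return seconds until free space reaches zero at the observed rate,
     *         or -1 if free space is not shrinking
     */
    public static long secondsUntilFull(long t1Sec, long free1Bytes, long t2Sec, long free2Bytes) {
        if (t2Sec <= t1Sec) {
            throw new IllegalArgumentException("t2 must be after t1");
        }
        long consumed = free1Bytes - free2Bytes;
        if (consumed <= 0) {
            return -1; // disk usage flat or shrinking: no predicted fill time
        }
        // rate = consumed / (t2 - t1); time left = free2 / rate
        return Math.multiplyExact(free2Bytes, t2Sec - t1Sec) / consumed;
    }

    public static void main(String[] args) {
        // 2 GiB free, then 1.5 GiB free ten minutes later.
        System.out.println(secondsUntilFull(0, 2L << 30, 600, 3L << 29));
    }
}
```

At that rate the disk fills in 1800 seconds, so an alert threshold of a few hours would have fired well in advance during a load test like this one.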

I'd like to prevent this before the brokers go down, for example by removing older messages to free up enough space. Is there a recommended best practice for this?
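Removing older messages is what retention already does; it just has to be bounded by size rather than only by time. The sketch below is a simplified model of size-based retention, not Kafka's code: the oldest segment is deleted only if the remaining log would still hold at least `retention.bytes`, the active (newest) segment is never deleted, and -1 means unlimited. Segment sizes are in arbitrary units (think MB), and the class name is hypothetical.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

/** Hypothetical, simplified model of retention.bytes applied to one partition. */
public final class SizeRetentionModel {

    private SizeRetentionModel() {}

    /**
     * @param segmentSizes   segment sizes, oldest first; the last one is the active segment
     * @param retentionBytes retention.bytes for the partition; negative means unlimited
     * @return the segments that remain after one retention pass
     */
    public static List<Long> applyRetention(List<Long> segmentSizes, long retentionBytes) {
        if (retentionBytes < 0) {
            return new ArrayList<>(segmentSizes); // unlimited: nothing is deleted by size
        }
        Deque<Long> segments = new ArrayDeque<>(segmentSizes);
        long total = 0;
        for (long size : segments) {
            total += size;
        }
        // Drop the oldest closed segment only if the rest still meets retention.bytes.
        while (segments.size() > 1 && total - segments.peekFirst() >= retentionBytes) {
            total -= segments.pollFirst();
        }
        return new ArrayList<>(segments);
    }

    public static void main(String[] args) {
        // Four closed 100-unit segments plus a 30-unit active segment, retention.bytes = 250.
        System.out.println(applyRetention(List.of(100L, 100L, 100L, 100L, 30L), 250L));
    }
}
```

This prints `[100, 100, 100, 30]`: 330 units remain even though the limit is 250, which is why the disk budget has to leave room for roughly one extra segment per partition on top of `retention.bytes`.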
