ZooKeeper 3.4.8: Standalone and Replicated (Cluster) Installation and Configuration

ZooKeeper is a centralized service for maintaining configuration information, naming, providing distributed synchronization, and providing group services. All of these kinds of services are used in some form or another by distributed applications. Each time they are implemented there is a lot of work that goes into fixing the bugs and race conditions that are inevitable. Because of the difficulty of implementing these kinds of services, applications initially usually skimp on them, which makes them brittle in the presence of change and difficult to manage. Even when done correctly, different implementations of these services lead to management complexity when the applications are deployed.

Reference Link: http://zookeeper.apache.org/doc/trunk/zookeeperStarted.html

Download:

Download the latest stable release from the official site: http://www.apache.org/dyn/closer.cgi/zookeeper/

On Linux, download it with: wget http://mirrors.hust.edu.cn/apache/zookeeper/zookeeper-3.4.8/zookeeper-3.4.8.tar.gz

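Directory layout:

The goal is a replicated (multi-server) setup, but we start with a single standalone server. Plan the directories up front. All three instances can run on the same machine, laid out as follows:

zoo1, zoo2, and zoo3 under /usr/local/redis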

Standalone deployment

Extract the downloaded archive under zoo1, and create the data, datalog, and logs directories there.
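The directory layout described above could be created with a small shell function. This is only a sketch: the name make_zk_layout is made up for illustration, and it assumes each of zoo1, zoo2, and zoo3 should get the same three subdirectories (data, datalog, logs).

```shell
# Hypothetical helper (not part of ZooKeeper): create data, datalog and logs
# under zoo1..zoo3. $1 is the base directory; this article uses /usr/local/redis.
make_zk_layout() {
  base="$1"
  for i in 1 2 3; do
    mkdir -p "$base/zoo$i/data" "$base/zoo$i/datalog" "$base/zoo$i/logs" || return 1
  done
}
```

Calling make_zk_layout /usr/local/redis would produce the layout used in this article. The archive still has to be extracted into each zooN directory separately.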

Configuration: cd /usr/local/redis/zoo1/zookeeper-3.4.8/conf

cp zoo_sample.cfg zoo.cfg

Run vi zoo.cfg, then add or modify the data directory settings:

dataDir=/usr/local/redis/zoo1/data

dataLogDir=/usr/local/redis/zoo1/datalog

Start ZooKeeper

Run the following from the zookeeper-3.4.8 directory to start ZooKeeper:

[root@JfbIpadServer02 /usr/local/redis/zoo1/zookeeper-3.4.8]# bin/zkServer.sh start

ZooKeeper JMX enabled by default

Using config: /usr/local/redis/zoo1/zookeeper-3.4.8/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

Connect to ZooKeeper with the command-line client

[root@JfbIpadServer02 /usr/local/redis/zoo1/zookeeper-3.4.8]# bin/zkCli.sh -server 127.0.0.1:2181

Connecting to 127.0.0.1:2181

[zk: 127.0.0.1:2181(CONNECTING) 0] 2016-03-29 11:53:08,156 [myid:] - INFO [main-SendThread(127.0.0.1:2181):ClientCnxn$SendThread@1299] - Session establishment complete on server 127.0.0.1/127.0.0.1:2181, sessionid = 0x153c07ff35f0000, negotiated timeout = 30000

WATCHER::

WatchedEvent state:SyncConnected type:None path:null

Once you see this, the connection is established. Press Enter and the following prompt appears:

[zk: 127.0.0.1:2181(CONNECTED) 0]

You can now run a few commands to try it out:

[zk: 127.0.0.1:2181(CONNECTED) 0] help

[zk: 127.0.0.1:2181(CONNECTED) 1] ls /

[zookeeper]

[zk: 127.0.0.1:2181(CONNECTED) 2] create /zk_test my_data

Created /zk_test

[zk: 127.0.0.1:2181(CONNECTED) 3] ls /

[zookeeper, zk_test]

[zk: 127.0.0.1:2181(CONNECTED) 4] get /zk_test

my_data

cZxid = 0x2

ctime = Tue Mar 29 11:55:03 CST 2016

mZxid = 0x2

mtime = Tue Mar 29 11:55:03 CST 2016

pZxid = 0x2

cversion = 0

dataVersion = 0

aclVersion = 0

ephemeralOwner = 0x0

dataLength = 7

numChildren = 0

[zk: 127.0.0.1:2181(CONNECTED) 5] set /zk_test junk

cZxid = 0x2

ctime = Tue Mar 29 11:55:03 CST 2016

mZxid = 0x3

mtime = Tue Mar 29 11:55:39 CST 2016

pZxid = 0x2

cversion = 0

dataVersion = 1

aclVersion = 0

ephemeralOwner = 0x0

dataLength = 4

numChildren = 0

[zk: 127.0.0.1:2181(CONNECTED) 6] get /zk_test

junk

cZxid = 0x2

ctime = Tue Mar 29 11:55:03 CST 2016

mZxid = 0x3

mtime = Tue Mar 29 11:55:39 CST 2016

pZxid = 0x2

cversion = 0

dataVersion = 1

aclVersion = 0

ephemeralOwner = 0x0

dataLength = 4

numChildren = 0

[zk: 127.0.0.1:2181(CONNECTED) 7] delete /zk_test

[zk: 127.0.0.1:2181(CONNECTED) 8] get /zk_test

Node does not exist: /zk_test

[zk: 127.0.0.1:2181(CONNECTED) 9] ls /

[zookeeper]

The standalone ZooKeeper server is now installed and running.

Running Replicated ZooKeeper

Running ZooKeeper in standalone mode is convenient for evaluation, some development, and testing. But in production, you should run ZooKeeper in replicated mode. A replicated group of servers in the same application is called a quorum, and in replicated mode, all servers in the quorum have copies of the same configuration file.

For replicated mode, a minimum of three servers are required, and it is strongly recommended that you have an odd number of servers. If you only have two servers, then you are in a situation where if one of them fails, there are not enough machines to form a majority quorum. Two servers is inherently less stable than a single server, because there are two single points of failure.

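Deploy the other two instances the same way as the standalone one.

Go into each instance's data directory and create a file named myid that contains a single number: 1 for zoo1, 2 for zoo2, and 3 for zoo3.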
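As a rough sketch, the myid files could be written with a helper like the one below. The function name write_myid is hypothetical, and it assumes the zooN/data layout used in this article.

```shell
# Hypothetical helper (not part of ZooKeeper): write the myid file for one instance.
# $1 is the base directory, $2 is the instance number (1, 2 or 3).
write_myid() {
  base="$1"
  n="$2"
  mkdir -p "$base/zoo$n/data" || return 1
  # The file holds nothing but the server number on a single line.
  printf '%s\n' "$n" > "$base/zoo$n/data/myid"
}
```

For example, write_myid /usr/local/redis 2 would put the number 2 into /usr/local/redis/zoo2/data/myid.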

Then edit the configuration file. The required conf/zoo.cfg file for replicated mode is similar to the one used in standalone mode, but with a few differences. Here is an example:

tickTime=2000

initLimit=5

syncLimit=2

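# Keep comments on their own lines: zoo.cfg is read as a Java properties file, so text after a value becomes part of the value.

# dataDir of this instance: the zoo1, zoo2, or zoo3 directory

dataDir=/usr/local/redis/zoo1/data

# clientPort of this instance: 2181, 2182, and 2183 for the three instances

clientPort=2181

# dataLogDir of this instance: the zoo1, zoo2, or zoo3 directory

dataLogDir=/usr/local/redis/zoo1/datalog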

server.1=127.0.0.1:2888:3888

server.2=127.0.0.1:2889:3889

server.3=127.0.0.1:2890:3890

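Pay attention to the last few lines: the X in server.X is the number stored in that server's data/myid file. Since the three servers' myid files contain 1, 2, and 3, every server's zoo.cfg simply lists server.1, server.2, and server.3. Because all three servers run on the same machine, the two trailing ports must differ from server to server, otherwise they conflict. The first port is used by followers to connect to the leader and exchange data. The second port is used for leader election.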
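To avoid editing three nearly identical files by hand, the per-instance zoo.cfg could be generated. The sketch below makes some assumptions: print_zoo_cfg is a made-up name, and client ports 2181, 2182, and 2183 are assumed to map to zoo1, zoo2, and zoo3 in that order. The server.N lines are the ones from the example above.

```shell
# Hypothetical helper (not part of ZooKeeper): print a zoo.cfg for one instance.
# $1 is the base directory, $2 is the instance number (1, 2 or 3).
# Assumption: instance N listens for clients on port 2180 + N.
print_zoo_cfg() {
  base="$1"
  n="$2"
  client_port=$((2180 + $n))
  cat <<EOF
tickTime=2000
initLimit=5
syncLimit=2
dataDir=$base/zoo$n/data
dataLogDir=$base/zoo$n/datalog
clientPort=$client_port
server.1=127.0.0.1:2888:3888
server.2=127.0.0.1:2889:3889
server.3=127.0.0.1:2890:3890
EOF
}
```

For instance, print_zoo_cfg /usr/local/redis 2 > /usr/local/redis/zoo2/zookeeper-3.4.8/conf/zoo.cfg would write the file for the second instance. Only dataDir, dataLogDir, and clientPort differ between instances. The server list is identical everywhere.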

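Go to the zookeeper/bin directory and run ./zkServer.sh start to start one server. It logs a large number of errors at this point, but that is harmless: only one server in the cluster is up, and on startup it tries to begin a leader election with the servers listed in zoo.cfg, which fails because the others are unreachable. Once the second ZooKeeper instance is started, a leader is elected and the service becomes available, because any 2 of the 3 servers are enough to elect a leader and serve clients (a cluster of 2n+1 servers tolerates n failures).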
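The "2n+1 servers tolerate n failures" rule is plain majority arithmetic, sketched below for a few cluster sizes. The function names are made up for illustration and do not correspond to any ZooKeeper command.

```shell
# Smallest number of servers that forms a majority of a cluster of size $1.
zk_majority() {
  echo $(( $1 / 2 + 1 ))
}

# Number of servers that can be down while a majority is still available.
zk_tolerated_failures() {
  echo $(( ($1 - 1) / 2 ))
}

# Print the figures for cluster sizes 1 through 5.
for n in 1 2 3 4 5; do
  echo "servers=$n majority=$(zk_majority "$n") tolerated=$(zk_tolerated_failures "$n")"
done
```

A cluster of 4 tolerates only one failure, the same as a cluster of 3, which is why an odd number of servers is recommended.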

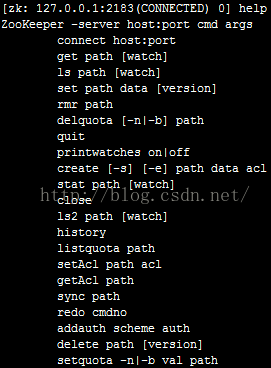

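The cluster is now ready to use. To get a feel for what ZooKeeper does, try the bundled interactive client: from zookeeper/bin of any of the three servers, run ./zkCli.sh -server 127.0.0.1:2182 (here I connect to the instance listening on port 2182). Type help to see the available commands, which include:

ls (list the children of a node)

ls2 (list the children of a node, along with its stat information such as version counters)

create (create a node)

get (read a node's data, along with its stat information such as version counters)

set (update a node's data)

delete (delete a node)

Trying these commands shows that ZooKeeper exposes a tree structure similar to a file system: data is attached to a node, and the node can be updated or deleted. It also shows that when a node changes, every live server in the cluster ends up with the same data.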

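Using ZooKeeper from Java code

ZooKeeper is used from Java mainly by creating a ZooKeeper instance from its jar and calling its methods. The main operations are creating, deleting, and updating znodes, and watching znodes for changes and handling them.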

package com.jh.sms.test;

import org.apache.zookeeper.CreateMode;
import org.apache.zookeeper.WatchedEvent;
import org.apache.zookeeper.Watcher;
import org.apache.zookeeper.ZooDefs.Ids;
import org.apache.zookeeper.ZooKeeper;

public class ZookeeperTest {

    public static void zooTest() throws Exception {
        // Create a ZooKeeper instance. The first argument is the target server address and port,
        // the second is the session timeout in milliseconds, and the third is the callback
        // invoked when events such as node changes are delivered.
        ZooKeeper zk = new ZooKeeper("192.168.0.149:2181", 500000, new Watcher() {
            // Receives every event that is triggered
            public void process(WatchedEvent event) {
                // do something
                System.out.println(event.getState().name() + " int: " + event.getState().getIntValue());
            }
        });

        // Create the node /root with data "mydata", no ACL restrictions, and persistent mode
        // (the node does not disappear when the client shuts down)
        zk.create("/root", "mydata".getBytes(), Ids.OPEN_ACL_UNSAFE, CreateMode.PERSISTENT);

        // Create the znode childone under /root with data "childone", no ACL restrictions, persistent
        zk.create("/root/childone", "childone".getBytes(), Ids.OPEN_ACL_UNSAFE, CreateMode.PERSISTENT);

        // Get the names of the children of /root; returns List<String>
        zk.getChildren("/root", true);

        // Get the data of /root/childone; returns byte[]
        zk.getData("/root/childone", true, null);

        // Update the data of /root/childone. The third argument is the expected version;
        // -1 ignores the current data version and overwrites unconditionally
        zk.setData("/root/childone", "childonemodify".getBytes(), -1);

        // Delete /root/childone. The second argument is the expected version;
        // -1 deletes regardless of version
        zk.delete("/root/childone", -1);

        // Close the session
        zk.close();
    }

    public static void main(String[] args) throws Exception {
        ZookeeperTest.zooTest();
    }
}

After the program runs, /root is visible from the command-line client:

[zk: 127.0.0.1:2182(CONNECTED) 1] ls /

[root, zookeeper, zk_test]

[zk: 127.0.0.1:2182(CONNECTED) 2] get /root

mydata
