Docker - Slow Network Conditions


I have a docker-compose setup with several services, like so:

```yaml
version: '3.6'

services:

  web:
    build:
      context: ./services/web
      dockerfile: Dockerfile-dev
    volumes:
      - './services/web:/usr/src/app'
    ports:
      - 5001:5000
    environment:
      - FLASK_ENV=development
      - APP_SETTINGS=project.config.DevelopmentConfig
      - DATABASE_URL=postgres://postgres:postgres@web-db:5432/web_dev
      - DATABASE_TEST_URL=postgres://postgres:postgres@web-db:5432/web_test
      - SECRET_KEY=my_precious
    depends_on:
      - web-db
      - redis

  web-db:
    build:
      context: ./services/web/projct/db
      dockerfile: Dockerfile
    ports:
      - 5435:5432
    environment:
      - POSTGRES_USER=postgres
      - POSTGRES_PASSWORD=postgres

  nginx:
    build:
      context: ./services/nginx
      dockerfile: Dockerfile-dev
    restart: always
    ports:
      - 80:80
    depends_on:
      - web
      - client
      #- redis

  client:
    build:
      context: ./services/client
      dockerfile: Dockerfile-dev
    volumes:
      - './services/client:/usr/src/app'
      - '/usr/src/app/node_modules'
    ports:
      - 3000:3000
    environment:
      - NODE_ENV=development
      - REACT_APP_WEB_SERVICE_URL=${REACT_APP_WEB_SERVICE_URL}
    depends_on:
      - web

  swagger:
    build:
      context: ./services/swagger
      dockerfile: Dockerfile-dev
    volumes:
      - './services/swagger/swagger.json:/usr/share/nginx/html/swagger.json'
    ports:
      - 3008:8080
    environment:
      - URL=swagger.json
    depends_on:
      - web

  scrapyrt:
    image: vimagick/scrapyd:py3
    restart: always
    ports:
      - '9080:9080'
    volumes:
      - ./services/web:/usr/src/app
    working_dir: /usr/src/app/project/api
    entrypoint: /usr/src/app/entrypoint-scrapyrt.sh
    depends_on:
      - web

  redis:
    image: redis:5.0.3-alpine
    restart: always
    expose:
      - '6379'
    ports:
      - '6379:6379'

  monitor:
    image: dev3_web
    ports:
      - 5555:5555
    command: flower -A celery_worker.celery --port=5555 --broker=redis://redis:6379/0
    depends_on:
      - web
      - redis

  worker-analysis:
    image: dev3_web
    restart: always
    volumes:
      - ./services/web:/usr/src/app
      - ./services/web/celery_logs:/usr/src/app/celery_logs
    command: celery worker -A celery_worker.celery --loglevel=DEBUG --logfile=celery_logs/worker_analysis.log -Q analysis
    environment:
      - CELERY_BROKER=redis://redis:6379/0
      - CELERY_RESULT_BACKEND=redis://redis:6379/0
      - FLASK_ENV=development
      - APP_SETTINGS=project.config.DevelopmentConfig
      - DATABASE_URL=postgres://postgres:postgres@web-db:5432/web_dev
      - DATABASE_TEST_URL=postgres://postgres:postgres@web-db:5432/web_test
      - SECRET_KEY=my_precious
    depends_on:
      - web
      - redis
      - web-db
    links:
      - redis:redis
      - web-db:web-db

  worker-scraping:
    image: dev3_web
    restart: always
    volumes:
      - ./services/web:/usr/src/app
      - ./services/web/celery_logs:/usr/src/app/celery_logs
    command: celery worker -A celery_worker.celery --loglevel=DEBUG --logfile=celery_logs/worker_scraping.log -Q scraping
    environment:
      - CELERY_BROKER=redis://redis:6379/0
      - CELERY_RESULT_BACKEND=redis://redis:6379/0
      - FLASK_ENV=development
      - APP_SETTINGS=project.config.DevelopmentConfig
      - DATABASE_URL=postgres://postgres:postgres@web-db:5432/web_dev
      - DATABASE_TEST_URL=postgres://postgres:postgres@web-db:5432/web_test
      - SECRET_KEY=my_precious
    depends_on:
      - web
      - redis
      - web-db
    links:
      - redis:redis
      - web-db:web-db

  worker-emailing:
    image: dev3_web
    restart: always
    volumes:
      - ./services/web:/usr/src/app
      - ./services/web/celery_logs:/usr/src/app/celery_logs
    command: celery worker -A celery_worker.celery --loglevel=DEBUG --logfile=celery_logs/worker_emailing.log -Q email
    environment:
      - CELERY_BROKER=redis://redis:6379/0
      - CELERY_RESULT_BACKEND=redis://redis:6379/0
      - FLASK_ENV=development
      - APP_SETTINGS=project.config.DevelopmentConfig
      - DATABASE_URL=postgres://postgres:postgres@web-db:5432/web_dev
      - DATABASE_TEST_URL=postgres://postgres:postgres@web-db:5432/web_test
      - SECRET_KEY=my_precious
    depends_on:
      - web
      - redis
      - web-db
    links:
      - redis:redis
      - web-db:web-db

  worker-learning:
    image: dev3_web
    restart: always
    volumes:
      - ./services/web:/usr/src/app
      - ./services/web/celery_logs:/usr/src/app/celery_logs
    command: celery worker -A celery_worker.celery --loglevel=DEBUG --logfile=celery_logs/worker_ml.log -Q machine_learning
    environment:
      - CELERY_BROKER=redis://redis:6379/0
      - CELERY_RESULT_BACKEND=redis://redis:6379/0
      - FLASK_ENV=development
      - APP_SETTINGS=project.config.DevelopmentConfig
      - DATABASE_URL=postgres://postgres:postgres@web-db:5432/web_dev
      - DATABASE_TEST_URL=postgres://postgres:postgres@web-db:5432/web_test
      - SECRET_KEY=my_precious
    depends_on:
      - web
      - redis
      - web-db
    links:
      - redis:redis
      - web-db:web-db

  worker-periodic:
    image: dev3_web
    restart: always
    volumes:
      - ./services/web:/usr/src/app
      - ./services/web/celery_logs:/usr/src/app/celery_logs
    command: celery beat -A celery_worker.celery --schedule=/tmp/celerybeat-schedule --loglevel=DEBUG --pidfile=/tmp/celerybeat.pid
    environment:
      - CELERY_BROKER=redis://redis:6379/0
      - CELERY_RESULT_BACKEND=redis://redis:6379/0
      - FLASK_ENV=development
      - APP_SETTINGS=project.config.DevelopmentConfig
      - DATABASE_URL=postgres://postgres:postgres@web-db:5432/web_dev
      - DATABASE_TEST_URL=postgres://postgres:postgres@web-db:5432/web_test
      - SECRET_KEY=my_precious
    depends_on:
      - web
      - redis
      - web-db
    links:
      - redis:redis
      - web-db:web-db
```

Running `docker-compose -f docker-compose-dev.yml up -d` and then `docker ps` gives me:

```
CONTAINER ID   IMAGE                  COMMAND                  CREATED        STATUS          PORTS                              NAMES
396d7a1a5443   dev3_nginx             "nginx -g 'daemon of…"   23 hours ago   Up 18 minutes   0.0.0.0:80->80/tcp                 dev3_nginx_1
8ec7a51e2c2a   dev3_web               "celery worker -A ce…"   24 hours ago   Up 19 minutes                                      dev3_worker-analysis_1
e591e6445c64   dev3_web               "celery worker -A ce…"   24 hours ago   Up 19 minutes                                      dev3_worker-learning_1
4d1fd17be3cb   dev3_web               "celery worker -A ce…"   24 hours ago   Up 19 minutes                                      dev3_worker-scraping_1
d25c40060fed   dev3_web               "celery beat -A cele…"   24 hours ago   Up 17 seconds                                      dev3_worker-periodic_1
76df1a600afa   dev3_web               "celery worker -A ce…"   24 hours ago   Up 18 minutes                                      dev3_worker-emailing_1
3442b0ce5d56   vimagick/scrapyd:py3   "/usr/src/app/entryp…"   24 hours ago   Up 20 minutes   6800/tcp, 0.0.0.0:9080->9080/tcp   dev3_scrapyrt_1
81d3ccea4de4   dev3_client            "npm start"              24 hours ago   Up 19 minutes   0.0.0.0:3000->3000/tcp             dev3_client_1
aff5ecf951d2   dev3_web               "flower -A celery_wo…"   24 hours ago   Up 10 seconds   0.0.0.0:5555->5555/tcp             dev3_monitor_1
864f17f39d54   dev3_swagger           "/start.sh"              24 hours ago   Up 19 minutes   80/tcp, 0.0.0.0:3008->8080/tcp     dev3_swagger_1
e69476843236   dev3_web               "/usr/src/app/entryp…"   24 hours ago   Up 19 minutes   0.0.0.0:5001->5000/tcp             dev3_web_1
22fd91b1ab6e   redis:5.0.3-alpine     "docker-entrypoint.s…"   24 hours ago   Up 20 minutes   0.0.0.0:6379->6379/tcp             dev3_redis_1
3a0b2115dd8e   dev3_web-db            "docker-entrypoint.s…"   24 hours ago   Up 19 minutes   0.0.0.0:5435->5432/tcp             dev3_web-db_1
```

They are all up, but I'm facing extremely slow network conditions and a lot of instability. I tried checking connectivity between containers to catch any lag, like so:

```
docker container exec -it e69476843236 ping aff5ecf951d2

PING aff5ecf951d2 (172.18.0.13): 56 data bytes
64 bytes from 172.18.0.13: seq=0 ttl=64 time=0.504 ms
64 bytes from 172.18.0.13: seq=1 ttl=64 time=0.254 ms
64 bytes from 172.18.0.13: seq=2 ttl=64 time=0.191 ms
64 bytes from 172.18.0.13: seq=3 ttl=64 time=0.168 ms
```

The timings look fine in these tests, although now and then I get `ping: bad address 'aff5ecf951d2'` when some service goes down.
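Since `bad address` means the name could not be resolved (rather than a slow link), it can help to time name resolution and the TCP connection separately. Below is a minimal sketch using only the Python standard library; the file name `probe.py` is hypothetical, and the targets you pass in are assumed to be service names and container-side ports from the compose file (for example `web-db` on 5432 or `redis` on 6379). Service names are also more robust than container IDs here, because a recreated container gets a new ID.

```python
import socket
import time


def probe(host: str, port: int, timeout: float = 2.0) -> dict:
    """Time DNS resolution and a TCP connect to host:port as two separate steps."""
    result = {"host": host, "port": port, "dns_ms": None, "connect_ms": None, "error": None}

    # Step 1: name resolution (inside a container this goes through Docker's
    # embedded DNS). A failure here is what busybox ping reports as "bad address".
    start = time.perf_counter()
    try:
        infos = socket.getaddrinfo(host, port, type=socket.SOCK_STREAM)
    except socket.gaierror as exc:
        result["error"] = f"dns: {exc}"
        return result
    result["dns_ms"] = (time.perf_counter() - start) * 1000

    # Step 2: TCP handshake with the first resolved address.
    family, socktype, proto, _canonname, addr = infos[0]
    start = time.perf_counter()
    try:
        with socket.socket(family, socktype, proto) as sock:
            sock.settimeout(timeout)
            sock.connect(addr)
    except OSError as exc:  # refused, unreachable, or timed out
        result["error"] = f"connect: {exc}"
        return result
    result["connect_ms"] = (time.perf_counter() - start) * 1000
    return result
```

Because `./services/web` is mounted at `/usr/src/app`, a file saved there is visible inside the `web` container, where you could call `probe("web-db", 5432)` from a Python shell. A slow `dns_ms` points at name resolution, a slow `connect_ms` at the target container itself.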

Sometimes I get this error:

```
ERROR: An HTTP request took too long to complete. Retry with --verbose to obtain debug information.
If you encounter this issue regularly because of slow network conditions, consider setting COMPOSE_HTTP_TIMEOUT to a higher value (current value: 60).
```

And far too often I have to restart Docker to get it working again.
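The error message itself suggests two knobs: the `COMPOSE_HTTP_TIMEOUT` environment variable and the `--verbose` flag. A minimal Python sketch that builds the question's `docker-compose` command with both; the 120-second value is an arbitrary choice for illustration, not a recommended setting.

```python
import os


def compose_env(http_timeout: int = 120) -> dict:
    """Copy the current environment and raise COMPOSE_HTTP_TIMEOUT (in seconds)."""
    env = os.environ.copy()
    env["COMPOSE_HTTP_TIMEOUT"] = str(http_timeout)
    return env


def compose_up_cmd(compose_file: str = "docker-compose-dev.yml", verbose: bool = False) -> list:
    """Build the `docker-compose -f ... up -d` command used in the question."""
    cmd = ["docker-compose"]
    if verbose:
        # --verbose is a global option, so it goes before the subcommand.
        cmd.append("--verbose")
    cmd += ["-f", compose_file, "up", "-d"]
    return cmd
```

To run it: `subprocess.run(compose_up_cmd(verbose=True), env=compose_env(120), check=True)` after `import subprocess`. A higher timeout only gives the Docker daemon more time to answer; it does not make the daemon answer faster, so the verbose output is the more useful part for diagnosis.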

How can I dig deeper into these slow network conditions and figure out what is wrong? Could the network issues be related to volumes?
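One starting point is checking which containers are actually attached to the project network at a given moment: a stopped or crashed container is removed from it, which would fit the intermittent `bad address` errors. `docker network inspect` prints this as JSON. A minimal Python sketch follows; the network name `dev3_default` is an assumption based on Compose's default `<project>_default` naming and the `dev3_` prefix in the `docker ps` output.

```python
import json
import subprocess


def inspect_network(name: str) -> str:
    """Run `docker network inspect NAME` and return its raw JSON output."""
    return subprocess.run(
        ["docker", "network", "inspect", name],
        capture_output=True, text=True, check=True,
    ).stdout


def network_members(inspect_json: str) -> dict:
    """Map container name -> IPv4 address from `docker network inspect` output."""
    networks = json.loads(inspect_json)  # a JSON array, one object per network
    members = {}
    for net in networks:
        # "Containers" maps container IDs to endpoint details; it may be empty.
        for info in (net.get("Containers") or {}).values():
            members[info["Name"]] = info.get("IPv4Address", "")
    return members
```

Calling `network_members(inspect_network("dev3_default"))` repeatedly (for example while the errors occur) shows whether a service dropped off the network or changed address.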

Answers

The problem surfaced as the number of containers and the app's complexity grew (something you should always keep an eye on).

In my case, I had changed one of the images from Alpine to Slim-Buster (Debian), which is significantly larger.

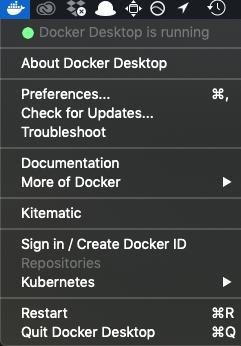

It turned out I could fix this simply by opening Docker's 'Preferences', clicking 'Advanced', and increasing the memory allocation.

Now it runs smoothly again.
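To check whether memory is the bottleneck before and after changing the allocation, `docker stats --no-stream` gives a one-off snapshot, and `docker info --format '{{.MemTotal}}'` reports the total memory available to Docker in bytes. The Python sketch below parses the per-container memory percentages. Since no service in this compose file sets a memory limit, the limit `docker stats` reports is typically the Docker VM's total memory, so the sum of the percentages roughly approximates how full the VM is; treat that as an assumption and confirm it against the `MEM USAGE / LIMIT` column.

```python
import subprocess

# `docker stats --format` takes a Go template; .Name and .MemPerc are standard fields.
STATS_FORMAT = "{{.Name}}\t{{.MemPerc}}"


def current_stats() -> str:
    """Take one snapshot of running containers with `docker stats --no-stream`."""
    return subprocess.run(
        ["docker", "stats", "--no-stream", "--format", STATS_FORMAT],
        capture_output=True, text=True, check=True,
    ).stdout


def parse_mem_perc(stats_output: str) -> dict:
    """Map container name -> memory usage as a percentage of its limit."""
    usage = {}
    for line in stats_output.strip().splitlines():
        parts = line.split("\t")
        if len(parts) < 2:
            continue  # skip lines that do not match STATS_FORMAT
        name, perc = parts[0], parts[1].strip().rstrip("%")
        try:
            usage[name] = float(perc)
        except ValueError:
            continue  # non-numeric value, e.g. a placeholder like "--"
    return usage
```

For example, `usage = parse_mem_perc(current_stats())` followed by `sum(usage.values())` gives the rough total, and sorting `usage.items()` by value shows the heaviest containers, such as an image that grew after switching from Alpine to Slim-Buster.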
