Using iFlytek Speech on iOS: Speech Recognition (Latest Version)


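Introduction

Last year I interned at a company and got some exposure to artificial intelligence. Once you work with AI, speech recognition, speech synthesis, face recognition and the like are unavoidable.

I had already left iOS development behind, but I still have my graduation project to finish, so I have come back to iOS one last time.

After this I probably won't have much chance to touch iOS again, since I am heading toward machine learning and deep learning.

Enough chatter. Here are my notes on how to integrate iFlytek speech.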

Steps

- Step 1: Download the SDK from the official website

Download the iOS SDK from the iFlytek Open Platform.

iFlytek Open Platform

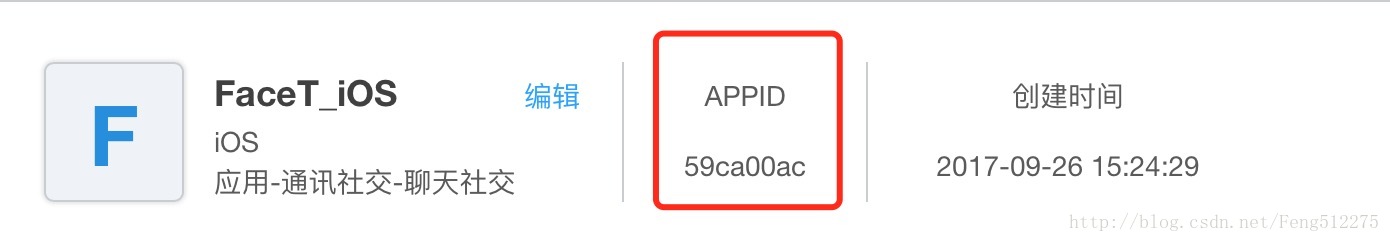

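Note that you have to create an application before you can download the iOS SDK; choose the speech recognition type for it. (This post only demonstrates integrating speech recognition. You can try the other services, such as speech synthesis and face recognition, yourself.)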

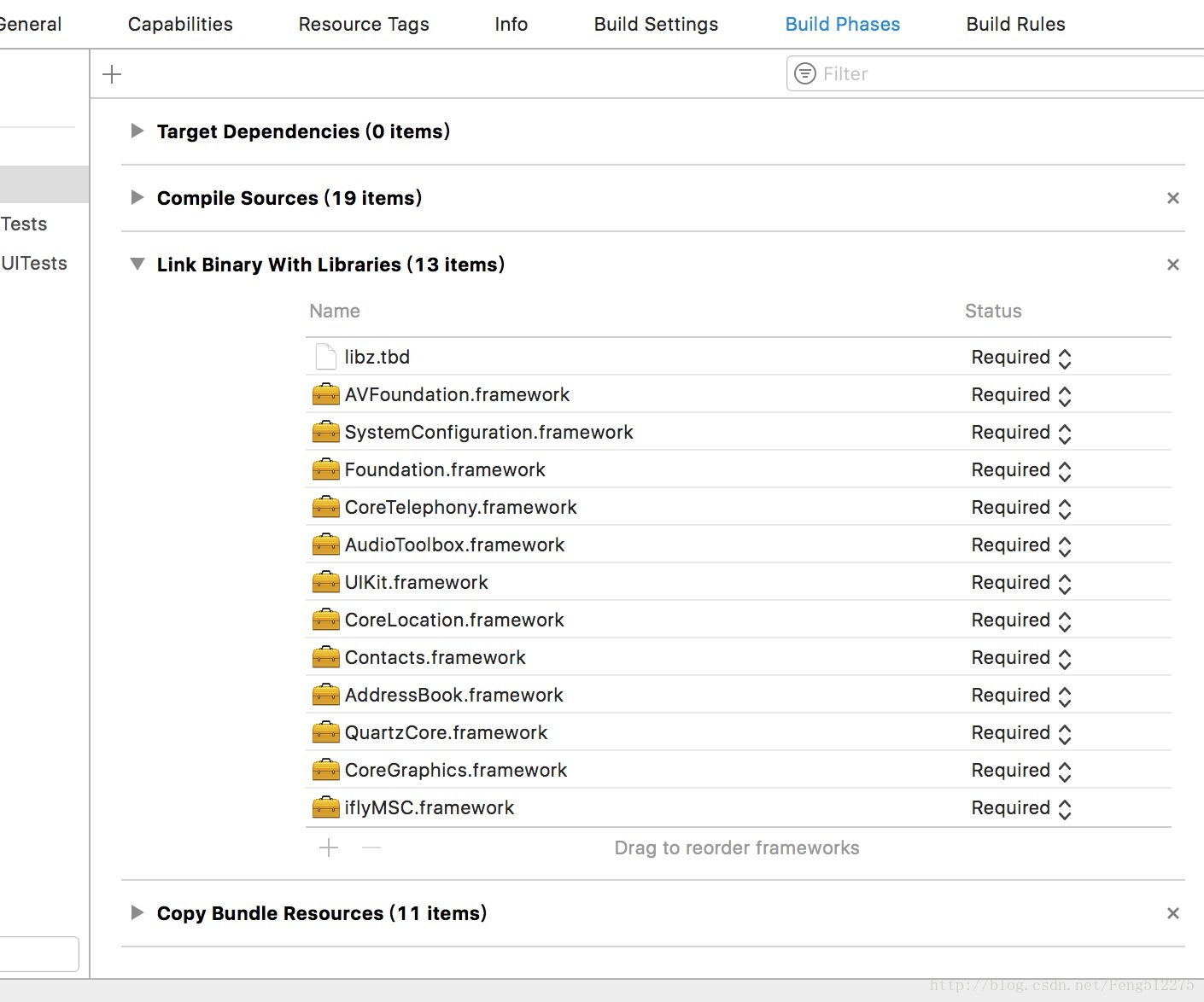

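- Step 2: Import the required frameworks and files into the project

Only online speech recognition is shown here; for offline recognition, follow the documentation.

iFlytek iOS documentation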

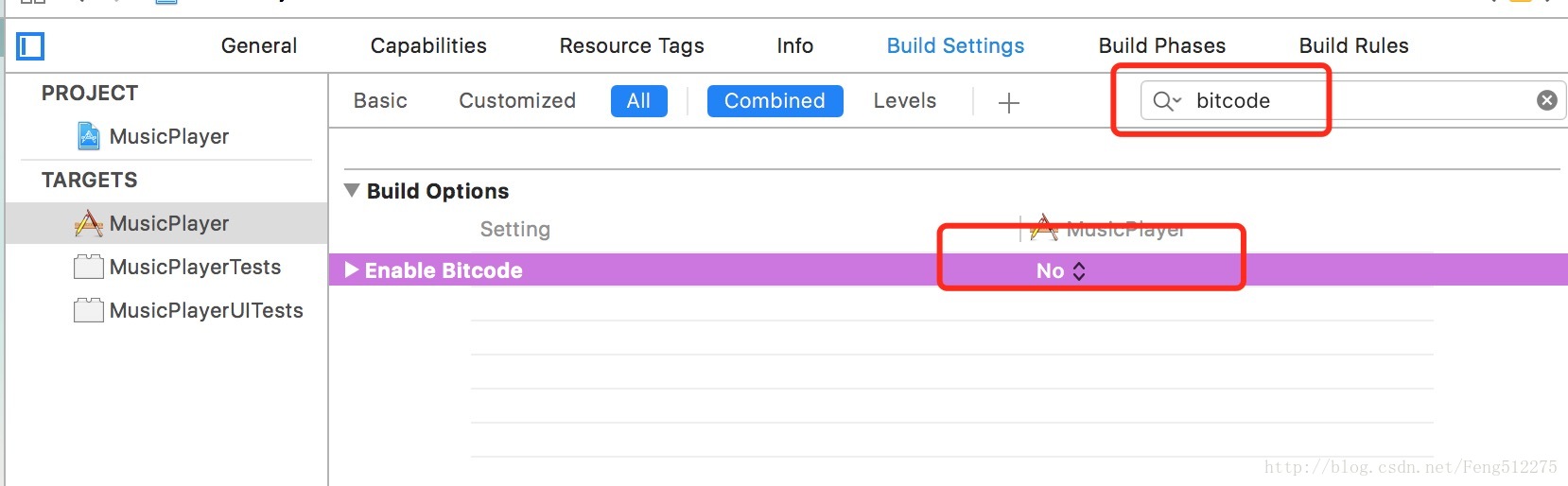

- Step 3: Configure system settings

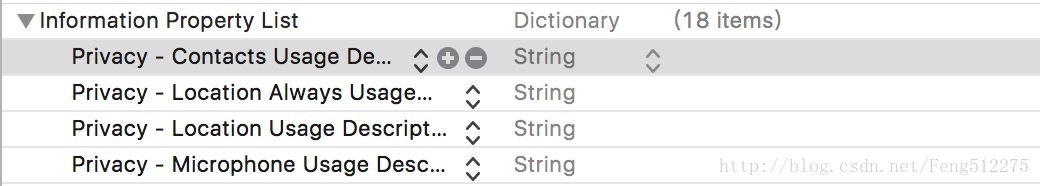

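User privacy permissions

Since iOS 10, Apple has required apps to declare privacy permissions so that users can decide whether to grant access.

To configure them, add the relevant privacy keys to Info.plist. The permissions the MSC SDK may use are microphone, contacts, and location. In a real app, each <string> element should contain a short explanation that is shown to the user:

<key>NSMicrophoneUsageDescription</key>
<string></string>
<key>NSLocationUsageDescription</key>
<string></string>
<key>NSLocationAlwaysUsageDescription</key>
<string></string>
<key>NSContactsUsageDescription</key>
<string></string>

- Step 4: Initialize the SDK in code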

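// The appid identifies your application and is unique; it must be passed in at initialization.
NSString *initString = [[NSString alloc] initWithFormat:@"appid=%@",xunfeiID];
[IFlySpeechUtility createUtility:initString];

Here xunfeiID is the app ID shown in the corresponding screenshot.

I recommend putting this code in AppDelegate.m, inside the - (BOOL)application:(UIApplication *)application didFinishLaunchingWithOptions:(NSDictionary *)launchOptions method.

PS: You need to import the header with #import "IFlyMSC/IFlyMSC.h". Xcode gives no autocompletion for it and I could not find it mentioned in the documentation; luckily I still had a backup of the code I wrote last year. (I will rant about iFlytek properly below.)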

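Sample code

- (BOOL)application:(UIApplication *)application didFinishLaunchingWithOptions:(NSDictionary *)launchOptions {
    // The appid identifies your application and is unique; it must be passed in at initialization.
    // xunfeiID is an NSString holding your own app ID, defined elsewhere in the project.
    NSString *initString = [[NSString alloc] initWithFormat:@"appid=%@",xunfeiID];
    [IFlySpeechUtility createUtility:initString];
    return YES;
}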
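The microphone permission declared in step 3 also has to be granted by the user at runtime. iOS normally shows the prompt by itself the first time recording starts, but asking explicitly lets you react when the user refuses. The following is only a minimal sketch: `ZFRequestMicrophoneAccess` is a hypothetical helper name and is not part of the iFlytek SDK; it relies on `AVAudioSession`'s `requestRecordPermission:`.

```objective-c
#import <AVFoundation/AVFoundation.h>

// Hypothetical helper (not part of the iFlytek SDK): asks for microphone
// access and reports the user's answer on the main queue.
static void ZFRequestMicrophoneAccess(void (^completion)(BOOL granted)) {
    [[AVAudioSession sharedInstance] requestRecordPermission:^(BOOL granted) {
        // The system may call this block on an arbitrary queue,
        // so hop back to the main queue before touching any UI.
        dispatch_async(dispatch_get_main_queue(), ^{
            if (completion) {
                completion(granted);
            }
        });
    }];
}

// Possible use before starting recognition (assumed call site):
//
// ZFRequestMicrophoneAccess(^(BOOL granted) {
//     if (granted) {
//         [self.iFlySpeechRecognizer startListening];
//     } else {
//         NSLog(@"Microphone access was denied");
//     }
// });
```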

//
// MMusicViewController.m
// MusicPlayer
//
// Created by HZhenF on 2018/2/18.
// Copyright © 2018 LiuZhu. All rights reserved.
//
#import "MMusicViewController.h"
#import "IFlyMSC/IFlyMSC.h"
#import "ISRDataHelper.h"

// ZFScreenW is a macro from the original project; it is assumed here to be the screen width.
#ifndef ZFScreenW
#define ZFScreenW ([UIScreen mainScreen].bounds.size.width)
#endif

@interface MMusicViewController ()<IFlySpeechRecognizerDelegate>
// Recognizer object without a built-in UI
@property (nonatomic, strong) IFlySpeechRecognizer *iFlySpeechRecognizer;
/** Recognized text accumulated from the JSON results */
@property (nonatomic, copy) NSString *resultStringFromJson;
/** Whether a new recording can be started right now */
@property (nonatomic, assign) BOOL isStartRecord;
/** Whether the recording (and its animation) has already started */
@property (nonatomic, assign) BOOL ishadStart;
@end

@implementation MMusicViewController

#pragma mark - Lazy loading
- (IFlySpeechRecognizer *)iFlySpeechRecognizer
{
    if (!_iFlySpeechRecognizer) {
        // Create the speech recognizer
        _iFlySpeechRecognizer = [IFlySpeechRecognizer sharedInstance];
        // Set the recognition parameters
        // Use dictation mode
        [_iFlySpeechRecognizer setParameter:@"iat" forKey:[IFlySpeechConstant IFLY_DOMAIN]];
        // asr_audio_path is the file name of the saved recording; set the value to nil or an empty string to disable saving. The default directory is Library/cache.
        [_iFlySpeechRecognizer setParameter:@"iat.pcm" forKey:[IFlySpeechConstant ASR_AUDIO_PATH]];
        // Maximum recording time in milliseconds (60 seconds would be "60000"); "-1" is kept from the original and presumably means no client-side limit
        [_iFlySpeechRecognizer setParameter:@"-1" forKey:[IFlySpeechConstant SPEECH_TIMEOUT]];
        // End-of-speech detection: how long (ms) the user must stay silent after speaking before input is considered finished and recording stops automatically
        [_iFlySpeechRecognizer setParameter:@"10000" forKey:[IFlySpeechConstant VAD_EOS]];
        // Beginning-of-speech detection: how long (ms) the user may stay silent at the start before it is treated as a timeout
        [_iFlySpeechRecognizer setParameter:@"5000" forKey:[IFlySpeechConstant VAD_BOS]];
        // Network timeout (ms)
        [_iFlySpeechRecognizer setParameter:@"2000" forKey:[IFlySpeechConstant NET_TIMEOUT]];
        // Sample rate; 16 kHz is recommended
        [_iFlySpeechRecognizer setParameter:@"16000" forKey:[IFlySpeechConstant SAMPLE_RATE]];
        // Language
        [_iFlySpeechRecognizer setParameter:@"zh_cn" forKey:[IFlySpeechConstant LANGUAGE]];
        // Accent (dialect)
        [_iFlySpeechRecognizer setParameter:@"mandarin" forKey:[IFlySpeechConstant ACCENT]];
        // Whether to return punctuation ("0" turns it off)
        [_iFlySpeechRecognizer setParameter:@"0" forKey:[IFlySpeechConstant ASR_PTT]];
        // Set the delegate
        _iFlySpeechRecognizer.delegate = self;
    }
    return _iFlySpeechRecognizer;
}

#pragma mark - System methods
- (void)viewDidLoad {
    [super viewDidLoad];
    self.view.backgroundColor = [UIColor whiteColor];
    self.isStartRecord = YES;
    // Initialize the string, otherwise nothing can be appended to it
    self.resultStringFromJson = @"";
    CGRect btnRect = CGRectMake((ZFScreenW - 100)*0.5, 200, 100, 100);
    UIButton *btn = [[UIButton alloc] initWithFrame:btnRect];
    btn.backgroundColor = [UIColor orangeColor];
    // The title means "Voice"
    [btn setTitle:@"语音" forState:UIControlStateNormal];
    [self.view addSubview:btn];
    UILongPressGestureRecognizer *longpress = [[UILongPressGestureRecognizer alloc] initWithTarget:self action:@selector(longPressActionFromLongPressBtn:)];
    longpress.minimumPressDuration = 0.1;
    [btn addGestureRecognizer:longpress];
}

#pragma mark - Custom methods
- (void)longPressActionFromLongPressBtn:(UILongPressGestureRecognizer *)longPress
{
    // NSLog(@"AIAction");
    CGPoint currentPoint = [longPress locationInView:longPress.view];
    if (self.isStartRecord) {
        self.resultStringFromJson = @"";
        // Start the recognition service
        [self.iFlySpeechRecognizer startListening];
        self.isStartRecord = NO;
        self.ishadStart = YES;
        // Start the sound animation
        // [self TipsViewShowWithType:@"start"];
    }
    // If the finger has moved up by more than 60 points, hint that this recording will be discarded
    if (currentPoint.y < -60) {
        // Switch to the "cancel sending" image
        // [self TipsViewShowWithType:@"cancel"];
        self.ishadStart = NO;
    }
    else
    {
        if (self.ishadStart == NO) {
            // Start the sound animation
            // [self TipsViewShowWithType:@"start"];
            self.ishadStart = YES;
        }
    }
    if (longPress.state == UIGestureRecognizerStateEnded) {
        self.isStartRecord = YES;
        if (currentPoint.y < -60) {
            // Released above the threshold: discard the recording
            [self.iFlySpeechRecognizer cancel];
        }
        else
        {
            // Released normally: stop recording and wait for the result
            [self.iFlySpeechRecognizer stopListening];
        }
        // Dismiss the sound animation
        // [self TipsViewShowWithType:@"remove"];
    }
}

// IFlySpeechRecognizerDelegate implementation
// Called when recognition results are returned
- (void)onResults:(NSArray *)results isLast:(BOOL)isLast {
    // Guard against an empty results array instead of indexing results[0] directly
    NSDictionary *dic = results.firstObject;
    if (dic == nil) {
        return;
    }
    NSMutableString *resultString = [[NSMutableString alloc] init];
    // The JSON text is carried in the dictionary keys
    for (NSString *key in dic) {
        [resultString appendFormat:@"%@",key];
    }
    // Keep appending the recognized text
    NSString *text = [ISRDataHelper stringFromJson:resultString];
    if (text.length > 0) {
        self.resultStringFromJson = [self.resultStringFromJson stringByAppendingString:text];
    }
    NSLog(@"self.resultStringFromJson = %@",self.resultStringFromJson);
}

// Called when the recognition session ends
- (void)onError:(IFlySpeechError *)error {
    NSLog(@"error = %@",[error description]);
}

// Called when recording stops
- (void)onEndOfSpeech
{
    self.isStartRecord = YES;
}

// Called when recording starts
- (void)onBeginOfSpeech
{
    // NSLog(@"onbeginofspeech");
}

// Volume callback
- (void)onVolumeChanged:(int)volume
{
}

// Called when the session is cancelled
- (void)onCancel
{
    // NSLog(@"Recording cancelled");
}

@end
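The `onResults:isLast:` callback above keeps appending text and never looks at `isLast`. If you only want to act once the whole utterance has arrived, the accumulation can be pulled into a small object. This is just a sketch under that assumption: `ZFDictationAccumulator` is a hypothetical class, not part of the iFlytek SDK, and it only needs Foundation.

```objective-c
#import <Foundation/Foundation.h>

// Hypothetical helper (not part of the iFlytek SDK): collects the text of each
// partial result and hands back the whole sentence once the last one arrives.
@interface ZFDictationAccumulator : NSObject
// Appends one parsed segment. Returns the complete text when isLast is YES,
// otherwise returns nil.
- (NSString *)appendSegment:(NSString *)segment isLast:(BOOL)isLast;
@end

@implementation ZFDictationAccumulator {
    NSMutableString *_buffer;
}

- (instancetype)init {
    if ((self = [super init])) {
        _buffer = [NSMutableString string];
    }
    return self;
}

- (NSString *)appendSegment:(NSString *)segment isLast:(BOOL)isLast {
    // Ignore nil or empty segments so they never reach appendString:
    if (segment.length > 0) {
        [_buffer appendString:segment];
    }
    if (!isLast) {
        return nil;
    }
    NSString *finalText = [_buffer copy];
    [_buffer setString:@""]; // reset for the next recognition session
    return finalText;
}

@end
```

Inside `onResults:isLast:` you could then pass `[ISRDataHelper stringFromJson:resultString]` as the segment and update the UI only when the return value is non-nil.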

//
// ISRDataHelper.h
// HYAIRobotSDK
//
// Created by HZhenF on 2017/9/7.
// Copyright © 2017 GZHYTechnology. All rights reserved.
//
#import <Foundation/Foundation.h>

@interface ISRDataHelper : NSObject

/** Parses the result returned for command-word recognition */
+ (NSString *)stringFromAsr:(NSString *)params;

/** Parses the JSON data returned for dictation */
+ (NSString *)stringFromJson:(NSString *)params;

/** Parses the result returned for grammar recognition */
+ (NSString *)stringFromABNFJson:(NSString *)params;

@end

//
// ISRDataHelper.m
// HYAIRobotSDK
//
// Created by HZhenF on 2017/9/7.
// Copyright © 2017 GZHYTechnology. All rights reserved.
//
#import "ISRDataHelper.h"

@implementation ISRDataHelper

/**
 Parses the result returned for command-word recognition
 */
+ (NSString *)stringFromAsr:(NSString *)params
{
    NSMutableString *resultString = [[NSMutableString alloc] init];
    NSString *inputString = nil;
    NSArray *array = [params componentsSeparatedByString:@"\n"];
    for (NSUInteger index = 0; index < array.count; index++)
    {
        NSRange range;
        NSString *line = [array objectAtIndex:index];
        NSRange idRange = [line rangeOfString:@"id="];
        NSRange nameRange = [line rangeOfString:@"name="];
        NSRange confidenceRange = [line rangeOfString:@"confidence="];
        NSRange grammarRange = [line rangeOfString:@" grammar="];
        NSRange inputRange = [line rangeOfString:@"input="];
        // Skip lines that do not carry all the required fields
        if (confidenceRange.length == 0 || grammarRange.length == 0 || inputRange.length == 0)
        {
            continue;
        }
        // Check for "nomatch"; only read the id when "name=" follows it
        NSUInteger idPosX = idRange.location + idRange.length;
        if (idRange.length != 0 && nameRange.length != 0 && nameRange.location >= idPosX) {
            NSUInteger idLength = nameRange.location - idPosX;
            range = NSMakeRange(idPosX, idLength);
            NSString *idValue = [[line substringWithRange:range]
                                 stringByTrimmingCharactersInSet:[NSCharacterSet whitespaceAndNewlineCharacterSet]];
            if ([idValue isEqualToString:@"nomatch"]) {
                return @"";
            }
        }
        // Get the confidence value, which sits between "confidence=" and " grammar="
        NSUInteger confidencePosX = confidenceRange.location + confidenceRange.length;
        if (grammarRange.location < confidencePosX) {
            continue;
        }
        NSUInteger confidenceLength = grammarRange.location - confidencePosX;
        range = NSMakeRange(confidencePosX, confidenceLength);
        NSString *score = [line substringWithRange:range];
        // Everything after "input=" is the recognized text
        NSUInteger inputStringPosX = inputRange.location + inputRange.length;
        NSUInteger inputStringLength = line.length - inputStringPosX;
        range = NSMakeRange(inputStringPosX, inputStringLength);
        inputString = [line substringWithRange:range];
        // 置信度 means "confidence"
        [resultString appendFormat:@"%@ 置信度%@\n",inputString, score];
    }
    return resultString;
}

/**
 Parses the JSON data returned for dictation
 Example of params:
 {"sn":1,"ls":true,"bg":0,"ed":0,"ws":[{"bg":0,"cw":[{"w":"白日","sc":0}]},{"bg":0,"cw":[{"w":"依山","sc":0}]},{"bg":0,"cw":[{"w":"尽","sc":0}]},{"bg":0,"cw":[{"w":"黄河入海流","sc":0}]},{"bg":0,"cw":[{"w":"。","sc":0}]}]}
 */
+ (NSString *)stringFromJson:(NSString *)params
{
    if (params == nil) {
        return nil;
    }
    NSMutableString *tempStr = [[NSMutableString alloc] init];
    // The returned data must be UTF-8 encoded, otherwise unknown errors occur
    NSDictionary *resultDic = [NSJSONSerialization JSONObjectWithData:[params dataUsingEncoding:NSUTF8StringEncoding] options:kNilOptions error:nil];
    if (resultDic != nil) {
        NSArray *wordArray = [resultDic objectForKey:@"ws"];
        for (NSUInteger i = 0; i < [wordArray count]; i++) {
            NSDictionary *wsDic = [wordArray objectAtIndex:i];
            NSArray *cwArray = [wsDic objectForKey:@"cw"];
            for (NSUInteger j = 0; j < [cwArray count]; j++) {
                NSDictionary *wDic = [cwArray objectAtIndex:j];
                NSString *str = [wDic objectForKey:@"w"];
                // appendString: raises on nil, so skip missing words
                if ([str isKindOfClass:[NSString class]]) {
                    [tempStr appendString:str];
                }
            }
        }
    }
    return tempStr;
}

/**
 Parses the result returned for grammar recognition
 */
+ (NSString *)stringFromABNFJson:(NSString *)params
{
    if (params == nil) {
        return nil;
    }
    NSMutableString *tempStr = [[NSMutableString alloc] init];
    NSDictionary *resultDic = [NSJSONSerialization JSONObjectWithData:[params dataUsingEncoding:NSUTF8StringEncoding] options:kNilOptions error:nil];
    NSArray *wordArray = [resultDic objectForKey:@"ws"];
    for (NSUInteger i = 0; i < [wordArray count]; i++) {
        NSDictionary *wsDic = [wordArray objectAtIndex:i];
        NSArray *cwArray = [wsDic objectForKey:@"cw"];
        for (NSUInteger j = 0; j < [cwArray count]; j++) {
            NSDictionary *wDic = [cwArray objectAtIndex:j];
            NSString *str = [wDic objectForKey:@"w"];
            // "sc" is a JSON number in the sample above, so keep it untyped
            id score = [wDic objectForKey:@"sc"];
            if ([str isKindOfClass:[NSString class]]) {
                [tempStr appendString:str];
            }
            // 置信度 means "confidence"
            [tempStr appendFormat:@" 置信度:%@",score];
            [tempStr appendString:@"\n"];
        }
    }
    return tempStr;
}

@end
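To make the dictation JSON format more concrete, here is a small stand-alone sketch of the same ws -> cw -> w walk that `stringFromJson:` performs. `ZFTextFromDictationJSON` is a hypothetical function name used only for illustration; it depends on nothing but Foundation.

```objective-c
#import <Foundation/Foundation.h>

// Illustrative stand-alone function (hypothetical name): walks
// ws -> cw -> w in a dictation result and concatenates the words.
static NSString *ZFTextFromDictationJSON(NSString *json) {
    NSData *data = [json dataUsingEncoding:NSUTF8StringEncoding];
    if (data == nil) {
        return @"";
    }
    id root = [NSJSONSerialization JSONObjectWithData:data options:0 error:NULL];
    if (![root isKindOfClass:[NSDictionary class]]) {
        return @""; // not valid JSON, or not a JSON object
    }
    NSDictionary *result = root;
    NSMutableString *text = [NSMutableString string];
    for (NSDictionary *ws in result[@"ws"]) {
        // Each "cw" array holds the candidate words for one slot
        for (NSDictionary *cw in ws[@"cw"]) {
            NSString *word = cw[@"w"];
            if ([word isKindOfClass:[NSString class]]) {
                [text appendString:word];
            }
        }
    }
    return text;
}
```

For the sample payload quoted in the comment of `stringFromJson:`, the five words 白日, 依山, 尽, 黄河入海流 and 。 are joined into 白日依山尽黄河入海流。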

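A Small Rant

Here I have to vent about the iFlytek Open Platform. I won't comment on how good the product itself is, but the developer documentation alone makes me want to swear. The old documentation came with detailed explanations. Now the SDK has been updated, there are fewer APIs, and even the demo has been stripped down. But if I want to configure something else and it is no longer available, could you at least say that it is no longer open to the public? Then there is the downloadable docsets documentation. I understand the intent is to let iOS developers drop it into the Documents directory and browse it directly, but honestly I would rather read the old documentation, which had everything I needed. Had I known, I would have just used Baidu's SDK instead. I started out wanting to give the updated SDK a try and explore it; now I am thoroughly disappointed. I did finish the task and got speech dictation working, but honestly, I am very disappointed.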
