Scraping 50,000 NetEase News comments with 100 lines of Python


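A few days ago I learned how to scrape the dynamically loaded comments on NetEase News. Starting from a demo, I extended it into a program of about 100 lines that can collect more than 50,000 comments in a single run (the exact number depends, of course, on how many comments exist at the time). Here is the code: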

from bs4 import BeautifulSoup

import requests

import json

#global values

headers = {'User-Agent':'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/\

537.11 (KHTML, like Gecko) Chrome/23.0.1271.64 Safari/537.11'}

offset = 0 #获取评论的起点

limit = 40 #一次获取评论数

count_comments = 0 #评论计数

count_news = 0 #新闻计数

##################################以下函数模块###########################################

#创建获取评论数据的url in:str,int,int out:str

def createUrl(commentUrl,offset,limit):

s1 = 'http://comment.news.163.com/api/v1/products/a2869674571f77b5a0867c3d71db5856/threads/'

s2 = '/comments/newList?offset='

name = commentUrl.split('/')[-1].split('.')[0]

u = s1 + str(name) + s2 + str(offset) + '&limit=' + str(limit)

return u

#从单个json文件中获取评论 in:dict out:set

def getItemsList(data):

setComment = set([])

for key in data['comments'].keys():

setComment.add(data['comments'][key]['content'])

return setComment

#通过url获取一篇新闻评论 in:str out:list

def getComments(commentUrl):

global limit

global offset

global count_comments

comments = set([])

while(1):

res = requests.get(url=createUrl(commentUrl,offset,limit),headers=headers,timeout=10).content

data = json.loads(res.decode())

if 'comments' in data.keys() and len(data['comments'].keys()) != 0:

comments = getItemsList(data) | comments

offset += (limit+1)

else:

break

count_comments += len(list(comments))

offset = 1

return list(comments)

#存储评论到txt文件 in:str,list

def store2Txt(filename,commentList):

fw = open(filename,'a',encoding='utf-8')

for string in commentList:

fw.write(string+'\n')

fw.close()

#获取新闻链接 out:list

def getcommentUrlList():

global count_comments

rightUrlList = ["news.163.com","sports.163.com","war.163.com","money.163.com","lady.163.com","renjian.163.com","zajia.news.163.com"]

commentUrlList = []

url = 'http://www.163.com/'

res = requests.get(url=url,headers=headers)

tag = BeautifulSoup(res.content.decode('gbk'))

tag = tag.find_all(name='a',limit=10000)

for aTag in tag:

if 'href' in aTag.attrs.keys():

if aTag['href'].split('.')[-1] == 'html' and len(aTag['href'].split('/')[-1].split('.')[0]) == 16 and aTag['href'].split('/')[2] in rightUrlList:

commentUrlList.append(aTag['href'])

return commentUrlList

#从txt文件获取评论数 in:str

def getNumFromTxt(filename):

c = 0

fr = open(filename,'rb+')

for line in fr.readlines():

c += 1

return c

#获取网易新闻当天所有的评论 in:str

def getTodayComments(filename):

global count_news

commentUrlList = getcommentUrlList()

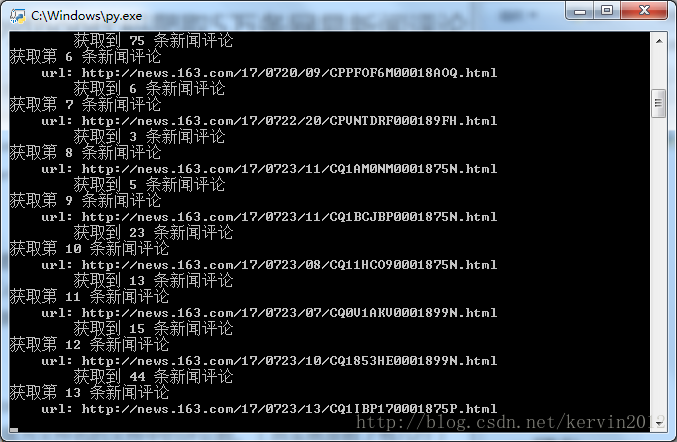

print("获取到 "+str(len(commentUrlList))+" 条新闻链接")

for commentUrl in commentUrlList:

count_news += 1

print("获取第 "+str(count_news)+" 条新闻评论")

print(" url: "+str(commentUrl))

try:

comments = getComments(commentUrl)

except Exception:

print(" 获取失败!!!")

print(" 获取到 "+str(len(comments))+" 条新闻评论")

store2Txt(filename,comments)

print("共获取了 "+str(count_comments)+" 条评论")

##################################操作区域###########################################

getTodayComments("1.txt")

# print(getNumFromTxt("1.txt"))解释两个函数:

`getTodayComments("1.txt")`

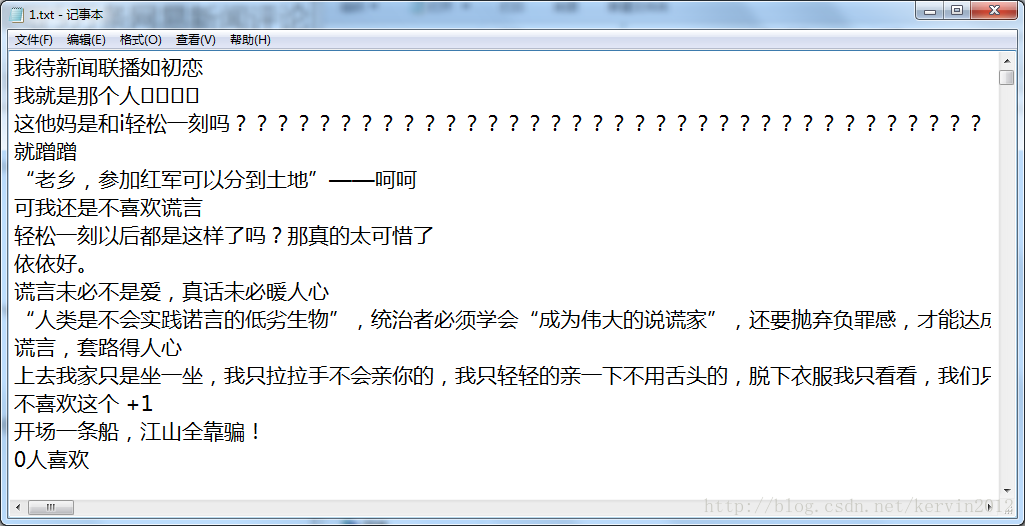

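Fetches today's news comments. It takes a single str argument, and the collected comments are stored in a file whose name is that argument's value.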
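The heart of `getTodayComments` is the paging loop in `getComments`: request `limit` comments starting at `offset`, advance `offset` by `limit`, and stop when a page comes back empty. Below is a minimal offline sketch of that loop. `collect_comments`, `fake_fetch_page` and the fake comment list are hypothetical names and made-up data for illustration; `fake_fetch_page` stands in for the HTTP request and only assumes the response shape used above (`{"comments": {id: {"content": ...}}}`), so the sketch runs without touching the network.

```python
from typing import Callable, Dict, List, Set


def collect_comments(fetch_page: Callable[[int, int], Dict], limit: int = 40) -> Set[str]:
    """Page through a comment source until it returns an empty page.

    fetch_page(offset, limit) is assumed to return a dict shaped like the
    NetEase response used above: {"comments": {id: {"content": ...}, ...}}.
    """
    comments: Set[str] = set()
    offset = 0
    while True:
        data = fetch_page(offset, limit)
        page = data.get("comments") or {}
        if not page:
            break  # empty page (or missing key): no more comments
        comments.update(item["content"] for item in page.values())
        offset += limit  # skip exactly the comments already fetched
    return comments


# Hypothetical in-memory "API" holding 95 comments, used only for the demo.
FAKE_COMMENTS: List[str] = [f"comment {i}" for i in range(95)]


def fake_fetch_page(offset: int, limit: int) -> Dict:
    chunk = FAKE_COMMENTS[offset:offset + limit]
    return {"comments": {str(offset + i): {"content": c} for i, c in enumerate(chunk)}}


if __name__ == "__main__":
    result = collect_comments(fake_fetch_page, limit=40)
    print(len(result))  # 95
```

With 95 comments and `limit=40`, the loop requests offsets 0, 40, 80 and 120, and the last request returns an empty page. Advancing by `limit + 1` instead would collect only 93 of the 95 here, silently skipping one comment per page.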

`getNumFromTxt("1.txt")`

Returns the number of comments in the file named by the argument (it really just counts the lines).
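A minimal sketch of the same counting idea, using the hypothetical names `count_lines` and `store_comments` (the latter mirrors the one-comment-per-line format of `store2Txt`). It streams the file instead of loading every line at once, and the demo writes to a temporary file rather than `1.txt`. It also shows the limit of counting lines: a comment that itself contains a line break is written across several lines and counted more than once.

```python
import os
import tempfile


def count_lines(filename: str) -> int:
    """Count lines by streaming the file; the with-block closes it afterwards."""
    with open(filename, "rb") as f:
        return sum(1 for _ in f)


def store_comments(filename: str, comments) -> None:
    """Append one comment per line, in the same format as store2Txt."""
    with open(filename, "a", encoding="utf-8") as fw:
        for text in comments:
            fw.write(text + "\n")


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "demo.txt")
        store_comments(path, ["first", "second", "third"])
        print(count_lines(path))  # 3
        # A comment containing a line break spans two lines in the file:
        store_comments(path, ["line one\nline two"])
        print(count_lines(path))  # 5, although only 4 comments were stored
```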

Running it, and the results:
