k8s high availability and ingress

Other related k8s articles:

Learning k8s YAML step by step

Implementing k8s high availability

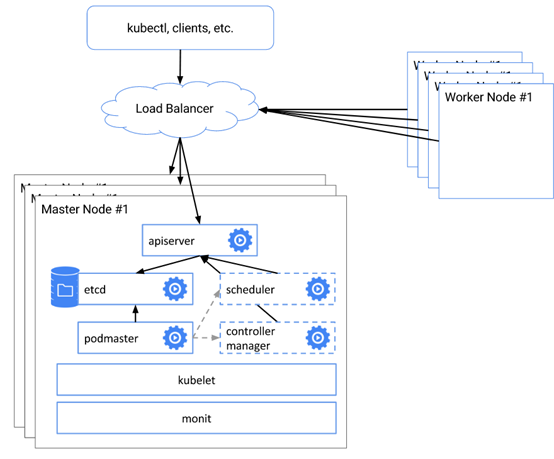

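Many guides online build k8s high availability around a VIP (virtual IP), but after some reading I found that k8s HA does not require a VIP. The diagram from the official site looks like this:

There is no VIP in it at all. There are similar diagrams online that add keepalived (ka) at the LB to provide a VIP; perhaps that is meant to make ingress highly available?

In short: the HA that needs special handling is that of the scheduler and the controller-manager.

The LB only has to load-balance the apiservers. Note that this means multiple apiserver instances can run in parallel at the same time,

whereas the scheduler and the controller-manager can have only one active instance at a time, chosen through the --leader-elect flag. All state lives in etcd, so the masters can see each other's status.

There are two approaches to load-balancing the API: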

-

单独搭建一台nginx做负载

-

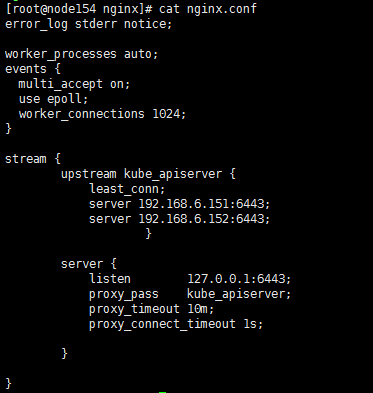

给每个node装1个nginx做负载,感觉这种更好,因为我不用在拿出一台vm来做负载了。以下是我的node上的nginx配置
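The author's configuration is not shown here. As a minimal sketch of what a per-node proxy could look like, the Python snippet below renders an nginx `stream` block that forwards TCP to several apiservers. The master addresses, the local listen address `127.0.0.1:8443`, the upstream name `kube_apiserver`, and the helper `render_apiserver_proxy` are all assumptions made for illustration, not values from the original post.

```python
def render_apiserver_proxy(apiservers, listen="127.0.0.1:8443"):
    """Render an nginx `stream` block that balances TCP across apiservers.

    TCP passthrough is used, so TLS is still terminated by the apiserver.
    """
    if not apiservers:
        raise ValueError("at least one apiserver address is required")
    upstream = "\n".join(f"        server {addr};" for addr in apiservers)
    return (
        "stream {\n"
        "    upstream kube_apiserver {\n"
        f"{upstream}\n"
        "    }\n"
        "    server {\n"
        f"        listen {listen};\n"
        "        proxy_pass kube_apiserver;\n"
        "    }\n"
        "}\n"
    )


# Hypothetical master addresses, for illustration only.
MASTERS = ["10.0.0.11:6443", "10.0.0.12:6443", "10.0.0.13:6443"]
config = render_apiserver_proxy(MASTERS)
print(config)
```

With a setup like this, components on the node would talk to the local listen address instead of a single master. The `stream` module has to be available in the nginx build, and the apiserver certificate would need to be valid for whatever address clients connect to.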

Starting the API Server

Once these files exist, copy the kube-apiserver.yaml into /etc/kubernetes/manifests/ on each master node.

The kubelet monitors this directory, and will automatically create an instance of the kube-apiserver container using the pod definition specified in the file.

Load balancing

At this point, you should have 3 apiservers all working correctly. If you set up a network load balancer, you should be able to access your cluster via that load balancer, and see traffic balancing between the apiserver instances. Setting up a load balancer will depend on the specifics of your platform, for example instructions for the Google Cloud Platform can be found here

Note, if you are using authentication, you may need to regenerate your certificate to include the IP address of the balancer, in addition to the IP addresses of the individual nodes.

For pods that you deploy into the cluster, the kubernetes service/dns name should provide a load balanced endpoint for the master automatically.

For external users of the API (e.g. the kubectl command line interface, continuous build pipelines, or other clients) you will want to configure them to talk to the external load balancer's IP address.

Master elected components

So far we have set up state storage, and we have set up the API server, but we haven't run anything that actually modifies cluster state, such as the controller manager and scheduler. To achieve this reliably, we only want to have one actor modifying state at a time, but we want replicated instances of these actors, in case a machine dies. To achieve this, we are going to use a lease-lock in the API to perform master election. We will use the --leader-elect flag for each scheduler and controller-manager; using a lease in the API ensures that only one instance of the scheduler and controller-manager is active at a time.

The scheduler and controller-manager can be configured to talk to the API server that is on the same node (i.e. 127.0.0.1), or it can be configured to communicate using the load balanced IP address of the API servers. Regardless of how they are configured, the scheduler and controller-manager will complete the leader election process mentioned above when using the --leader-elect flag.

In case of a failure accessing the API server, the elected leader will not be able to renew the lease, causing a new leader to be elected. This is especially relevant when configuring the scheduler and controller-manager to access the API server via 127.0.0.1, and the API server on the same node is unavailable.
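To make the lease idea concrete, here is a small in-memory simulation of lease-based leader election. It is only a sketch of the general technique: the `Lease` class, the node names, and the 15-second duration are illustrative assumptions, and the real components coordinate through a lease object stored via the API server rather than a local Python object.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Lease:
    """A toy lease: whoever holds it is leader until it expires."""
    holder: Optional[str] = None
    renewed_at: float = 0.0
    duration: float = 15.0  # seconds the lease stays valid without renewal

    def try_acquire_or_renew(self, candidate: str, now: float) -> bool:
        expired = now - self.renewed_at > self.duration
        if self.holder is None or self.holder == candidate or expired:
            self.holder = candidate
            self.renewed_at = now
            return True
        return False


lease = Lease()
a_first = lease.try_acquire_or_renew("master-a", now=0)    # master-a becomes leader
b_early = lease.try_acquire_or_renew("master-b", now=5)    # lease still held by master-a
a_renew = lease.try_acquire_or_renew("master-a", now=10)   # master-a renews

# master-a now loses access to the API server and stops renewing.
b_wait = lease.try_acquire_or_renew("master-b", now=20)    # only 10s since last renewal
b_takeover = lease.try_acquire_or_renew("master-b", now=30)  # 20s > 15s: lease expired

print(a_first, b_early, a_renew, b_wait, b_takeover, lease.holder)
```

The standby instance keeps retrying and only takes over once the current leader has failed to renew for longer than the lease duration, which is the failover behaviour the paragraph above describes.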

Installing configuration files

First, create empty log files on each node, so that Docker will mount the files rather than create new directories:

touch /var/log/kube-scheduler.log

touch /var/log/kube-controller-manager.log

Next, set up the descriptions of the scheduler and controller manager pods on each node by copying kube-scheduler.yaml and kube-controller-manager.yaml into the /etc/kubernetes/manifests/ directory.

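Exposing services with ingress:

How does this work? For pods to be reachable from outside, the idea is to set up a separate LB for external access. The LB's internal IP is on the cluster network, which means the LB can reach the svc addresses inside the cluster.

The LB's external IP is the physical IP of the machine, which is already reachable from outside.

Every time we add a svc, we need to expose that svc as well.

The traditional approach would be to update the LB configuration dynamically. With haproxy, for instance, you would add one more domain name plus a backend IP and port each time (external traffic all enters through port 80 of the LB's URL, so the port is unified). Can this step be automated? Yes: when I create a svc, I also create an ingress, which is effectively the same as editing the LB's configuration file.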

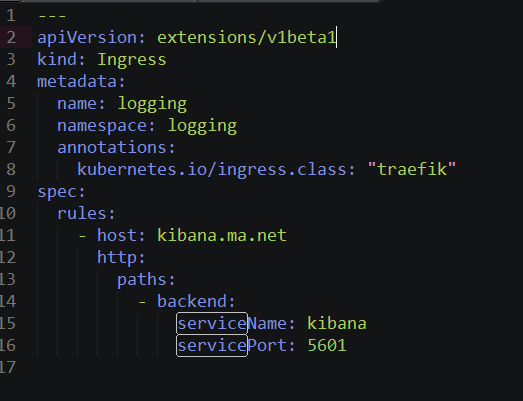

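For example, I create a new svc:

Now I want to expose it, that is, I need to modify the LB's configuration, so right afterwards I create an ingress.

I use Traefik; once the ingress is created, the svc is reachable from the external network.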
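The effect of creating an ingress can be sketched as a host-to-backend routing table that gets rebuilt whenever the set of ingresses changes. The snippet below is a toy model under stated assumptions: the dictionaries are a simplified stand-in rather than the real Ingress schema, and the names `web`, `web.example.com`, `build_routes`, and `route` are hypothetical.

```python
def build_routes(ingresses):
    """Map each hostname to its backend "service:port", roughly what an
    ingress controller derives from the ingress objects it watches."""
    routes = {}
    for ing in ingresses:
        for rule in ing["rules"]:
            routes[rule["host"]] = f'{rule["service"]}:{rule["port"]}'
    return routes


def route(routes, host_header):
    """Pick a backend from the HTTP Host header; None means no match."""
    host = host_header.split(":")[0].lower()
    return routes.get(host)


ingresses = []
before = route(build_routes(ingresses), "web.example.com")

# Creating an ingress for the new svc is the only change made by the user.
ingresses.append({
    "name": "web-ingress",
    "rules": [{"host": "web.example.com", "service": "web", "port": 80}],
})
after = route(build_routes(ingresses), "web.example.com:80")

print(before, after)
```

Nobody edits the LB configuration by hand here: adding the ingress entry is what changes the routing, which is the automation the text describes.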

There are several options for implementing the LB:

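- NGINX Plus. I followed the guide below and got it working, but it appears to be a paid product.

https://www.nginx.com/blog/nginx-plus-ingress-controller-kubernetes-load-balancing/

- Traefik: free and easy to use.

- HAProxy: not worth it if you have to configure it yourself; Rancher and OpenShift use it. If you are building your own k8s environment, go with the second option.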
