GStreamer Basic Tutorial 8: Using appsrc and appsink


Basic tutorial 8: Short-cutting the pipeline

Goal

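This tutorial uses the appsrc element to inject application data into a GStreamer pipeline, and the appsink element to extract GStreamer data back into the application. In this tutorial you will learn: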

- How to inject external data into a general GStreamer pipeline.
- How to extract data from a general GStreamer pipeline.
- How to access and manipulate this data.

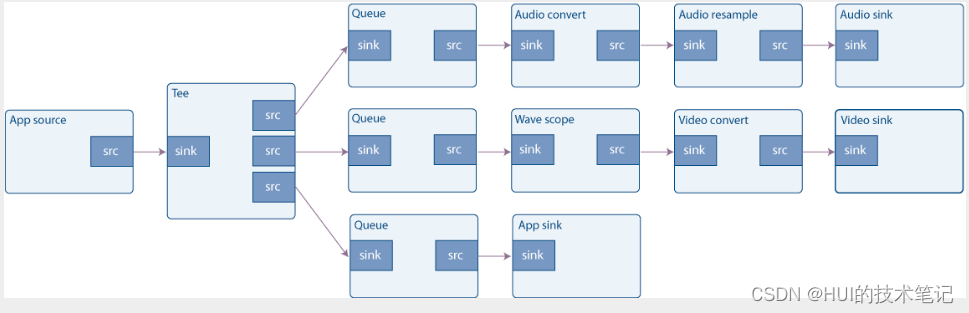

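Explanation of the pipeline in the figure:

- appsrc generates the audio data.
- tee splits the stream into three branches:
  - an audio branch (queue, audioconvert, audioresample, autoaudiosink) that plays the audio;
  - a video branch (queue, audioconvert, wavescope, videoconvert, autovideosink) that displays the waveform;
  - an app branch (queue, appsink) that notifies the application each time data is received.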
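The same topology can be written compactly as a gst_parse_launch() description. The sketch below is only an illustration and not part of the tutorial: the string mirrors the element chain that the full code builds by hand (the names audio_source and app_sink are borrowed from it), it deliberately leaves out the caps and the wavescope shader/style settings, and count_tee_branches() is a hypothetical helper that merely checks that the tee feeds three branches.

```c
#include <string.h>

/* Hypothetical gst_parse_launch() description mirroring the tutorial's pipeline.
 * Caps and the wavescope shader/style settings are intentionally left out. */
static const char *const PIPELINE_DESC =
    "appsrc name=audio_source format=time ! tee name=t "
    "t. ! queue ! audioconvert ! audioresample ! autoaudiosink "
    "t. ! queue ! audioconvert ! wavescope ! videoconvert ! autovideosink "
    "t. ! queue ! appsink name=app_sink emit-signals=true";

/* Hypothetical sanity check: count the branches that start from the tee "t". */
static int
count_tee_branches (const char *desc)
{
  int n = 0;

  for (const char *p = strstr (desc, "t. ! "); p != NULL;
      p = strstr (p + 1, "t. ! "))
    n++;
  return n;
}
```

With GStreamer, you would pass PIPELINE_DESC to gst_parse_launch(), then fetch the two application elements with gst_bin_get_by_name() (unreffing them when done) to set the caps and connect the need-data, enough-data and new-sample signals exactly as the tutorial does.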

Configuring the appsrc and appsink elements:

/* Configure appsrc */
gst_audio_info_set_format (&info, GST_AUDIO_FORMAT_S16, SAMPLE_RATE, 1, NULL);
audio_caps = gst_audio_info_to_caps (&info);
/* "format" = GST_FORMAT_TIME makes appsrc work in time format, matching the timestamped buffers */
g_object_set (data.app_source, "caps", audio_caps, "format", GST_FORMAT_TIME, NULL);
g_signal_connect (data.app_source, "need-data", G_CALLBACK (start_feed), &data);
g_signal_connect (data.app_source, "enough-data", G_CALLBACK (stop_feed), &data);

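The first property that needs to be set on appsrc is caps. It specifies the kind of data the element is going to produce, so GStreamer can check whether it is possible to link with downstream elements (that is, whether the downstream elements will understand this kind of data). This property must be a GstCaps object, which is easily built from a string with gst_caps_from_string(); here it is built from a GstAudioInfo with gst_audio_info_to_caps().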
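As an alternative to gst_audio_info_to_caps(), the same caps can be written as a string for gst_caps_from_string(). The helper below is a hypothetical sketch. It assumes the native-endian S16 format (GST_AUDIO_FORMAT_S16 maps to S16LE or S16BE depending on the host) and interleaved layout; the exact set of fields that gst_audio_info_to_caps() emits may differ slightly.

```c
#include <stddef.h>
#include <stdint.h>
#include <stdio.h>

/* Native-endian name for signed 16-bit samples (what GST_AUDIO_FORMAT_S16 stands for). */
static const char *
host_s16_format (void)
{
  const uint16_t probe = 1;

  /* On a little-endian host the low byte is stored first. */
  return (*(const uint8_t *) &probe == 1) ? "S16LE" : "S16BE";
}

/* Hypothetical helper: write interleaved S16 caps into buf; returns snprintf()'s result. */
static int
make_s16_caps_string (char *buf, size_t len, int rate, int channels)
{
  return snprintf (buf, len,
      "audio/x-raw,format=%s,layout=interleaved,rate=%d,channels=%d",
      host_s16_format (), rate, channels);
}
```

Passing the resulting buffer (for example, filled with SAMPLE_RATE and 1 channel) to gst_caps_from_string() yields a GstCaps that can be set on appsrc's caps property; as in the tutorial, call gst_caps_unref() on it once it has been handed to the elements.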

We then connect to the need-data and enough-data signals, which appsrc fires when its internal data queue is running low or almost full, respectively.
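The start/stop behavior driven by these two signals can be modeled without GStreamer. The sketch below is a simplified model and not appsrc's implementation. It assumes appsrc's default max-bytes of 200000, uses the rule "fire enough-data once the queued bytes reach the limit", and fires need-data only when the queue runs empty (the behavior with the default min-percent of 0). QueueModel and its functions are hypothetical names; the feeding flag plays the role of data->sourceid != 0 in the tutorial.

```c
#include <stdbool.h>
#include <stddef.h>

/* Simplified, hypothetical model of appsrc's internal queue. */
typedef struct {
  size_t queued_bytes;  /* bytes waiting in the queue */
  size_t max_bytes;     /* limit that triggers "enough-data" (assumed default: 200000) */
  bool feeding;         /* plays the role of data->sourceid != 0 */
} QueueModel;

/* Producer side: one push-buffer call; stop feeding once the limit is reached. */
static void
model_push (QueueModel *q, size_t chunk)
{
  q->queued_bytes += chunk;
  if (q->queued_bytes >= q->max_bytes)
    q->feeding = false;           /* "enough-data" -> stop_feed () */
}

/* Consumer side: the streaming thread takes bytes out; restart feeding when empty. */
static void
model_consume (QueueModel *q, size_t n)
{
  q->queued_bytes = (n > q->queued_bytes) ? 0 : q->queued_bytes - n;
  if (q->queued_bytes == 0)
    q->feeding = true;            /* "need-data" -> start_feed () */
}

/* Let the idle handler run until the model says "enough"; returns the push count. */
static size_t
model_fill (QueueModel *q, size_t chunk)
{
  size_t pushes = 0;

  while (q->feeding) {
    model_push (q, chunk);
    pushes++;
  }
  return pushes;
}
```

With 1024-byte chunks this model stops after 196 pushes (200704 queued bytes) and only restarts once the consumer has drained the queue, which is why the tutorial prints "Start feeding" and "Stop feeding" alternately instead of pushing continuously.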

/* Configure appsink */
g_object_set (data.app_sink, "emit-signals", TRUE, "caps", audio_caps, NULL);
g_signal_connect (data.app_sink, "new-sample", G_CALLBACK (new_sample), &data);
gst_caps_unref (audio_caps);

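On the appsink, we connect the new-sample signal to the new_sample handler; appsink emits this signal every time it receives a new buffer. Signal emission also has to be enabled through the emit-signals property, because it is disabled by default.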
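To actually access the data (the third goal of this tutorial), new_sample() could inspect the samples instead of only printing `*`. The sketch below is a hypothetical helper that computes the peak absolute value of a block of signed 16-bit samples. It assumes the appsink receives the S16 mono caps configured above, so each buffer holds native-endian 16-bit samples.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical helper: largest absolute sample value in a block of S16 PCM. */
static int32_t
s16_peak (const int16_t *samples, size_t n)
{
  int32_t peak = 0;

  for (size_t i = 0; i < n; i++) {
    int32_t v = samples[i];     /* widen first so -32768 can be negated safely */

    if (v < 0)
      v = -v;
    if (v > peak)
      peak = v;
  }
  return peak;
}
```

Inside new_sample(), after pulling the sample, you would get its buffer with gst_sample_get_buffer() (this does not add a reference), map it with gst_buffer_map (buffer, &map, GST_MAP_READ), pass (const int16_t *) map.data and map.size / sizeof (int16_t) to s16_peak(), and call gst_buffer_unmap() before gst_sample_unref().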

Full source code (basic-tutorial-8.c, also available under gst-docs/examples/tutorials):

#include <gst/gst.h>
#include <gst/audio/audio.h>
#include <string.h>

#define CHUNK_SIZE 1024   /* Amount of bytes we are sending in each buffer */
#define SAMPLE_RATE 44100 /* Samples per second we are sending */

/* Structure to contain all our information, so we can pass it to callbacks */
typedef struct _CustomData
{
  GstElement *pipeline, *app_source, *tee, *audio_queue, *audio_convert1,
      *audio_resample, *audio_sink;
  GstElement *video_queue, *audio_convert2, *visual, *video_convert,
      *video_sink;
  GstElement *app_queue, *app_sink;

  guint64 num_samples;  /* Number of samples generated so far (for timestamp generation) */
  gfloat a, b, c, d;    /* For waveform generation */

  guint sourceid;       /* To control the GSource */

  GMainLoop *main_loop; /* GLib's Main Loop */
} CustomData;

/* This method is called by the idle GSource in the mainloop, to feed CHUNK_SIZE bytes into appsrc.
 * The idle handler is added to the mainloop when appsrc requests us to start sending data (need-data signal)
 * and is removed when appsrc has enough data (enough-data signal).
 */
static gboolean
push_data (CustomData * data)
{
  GstBuffer *buffer;
  GstFlowReturn ret;
  int i;
  GstMapInfo map;
  gint16 *raw;
  gint num_samples = CHUNK_SIZE / 2;    /* Because each sample is 16 bits */
  gfloat freq;

  /* Create a new empty buffer */
  buffer = gst_buffer_new_and_alloc (CHUNK_SIZE);

  /* Set its timestamp and duration */
  GST_BUFFER_TIMESTAMP (buffer) =
      gst_util_uint64_scale (data->num_samples, GST_SECOND, SAMPLE_RATE);
  GST_BUFFER_DURATION (buffer) =
      gst_util_uint64_scale (num_samples, GST_SECOND, SAMPLE_RATE);

  /* Generate some psychedelic waveforms */
  gst_buffer_map (buffer, &map, GST_MAP_WRITE);
  raw = (gint16 *) map.data;
  data->c += data->d;
  data->d -= data->c / 1000;
  freq = 1100 + 1000 * data->d;
  for (i = 0; i < num_samples; i++) {
    data->a += data->b;
    data->b -= data->a / freq;
    raw[i] = (gint16) (500 * data->a);
  }
  gst_buffer_unmap (buffer, &map);
  data->num_samples += num_samples;

  /* Push the buffer into the appsrc */
  g_signal_emit_by_name (data->app_source, "push-buffer", buffer, &ret);

  /* Free the buffer now that we are done with it
   * (the "push-buffer" action signal does not take ownership, unlike gst_app_src_push_buffer()) */
  gst_buffer_unref (buffer);

  if (ret != GST_FLOW_OK) {
    /* We got some error, stop sending data. Returning FALSE destroys this idle
     * source, so forget its id to keep start_feed()/stop_feed() consistent. */
    data->sourceid = 0;
    return FALSE;
  }

  return TRUE;
}

/* This signal callback triggers when appsrc needs data. Here, we add an idle handler
 * to the mainloop to start pushing data into the appsrc */
static void
start_feed (GstElement * source, guint size, CustomData * data)
{
  if (data->sourceid == 0) {
    g_print ("Start feeding\n");
    data->sourceid = g_idle_add ((GSourceFunc) push_data, data);
  }
}

/* This callback triggers when appsrc has enough data and we can stop sending.
 * We remove the idle handler from the mainloop */
static void
stop_feed (GstElement * source, CustomData * data)
{
  if (data->sourceid != 0) {
    g_print ("Stop feeding\n");
    g_source_remove (data->sourceid);
    data->sourceid = 0;
  }
}

/* The appsink has received a buffer */
static GstFlowReturn
new_sample (GstElement * sink, CustomData * data)
{
  GstSample *sample;

  /* Retrieve the buffer */
  g_signal_emit_by_name (sink, "pull-sample", &sample);
  if (sample) {
    /* The only thing we do in this example is print a * to indicate a received buffer */
    g_print ("*");
    gst_sample_unref (sample);
    return GST_FLOW_OK;
  }

  return GST_FLOW_ERROR;
}

/* This function is called when an error message is posted on the bus */
static void
error_cb (GstBus * bus, GstMessage * msg, CustomData * data)
{
  GError *err;
  gchar *debug_info;

  /* Print error details on the screen */
  gst_message_parse_error (msg, &err, &debug_info);
  g_printerr ("Error received from element %s: %s\n",
      GST_OBJECT_NAME (msg->src), err->message);
  g_printerr ("Debugging information: %s\n", debug_info ? debug_info : "none");
  g_clear_error (&err);
  g_free (debug_info);

  g_main_loop_quit (data->main_loop);
}

int
main (int argc, char *argv[])
{
  CustomData data;
  GstPad *tee_audio_pad, *tee_video_pad, *tee_app_pad;
  GstPad *queue_audio_pad, *queue_video_pad, *queue_app_pad;
  GstAudioInfo info;
  GstCaps *audio_caps;
  GstBus *bus;

  /* Initialize custom data structure */
  memset (&data, 0, sizeof (data));
  data.b = 1;                   /* For waveform generation */
  data.d = 1;

  /* Initialize GStreamer */
  gst_init (&argc, &argv);

  /* Create the elements */
  data.app_source = gst_element_factory_make ("appsrc", "audio_source");
  data.tee = gst_element_factory_make ("tee", "tee");
  data.audio_queue = gst_element_factory_make ("queue", "audio_queue");
  data.audio_convert1 =
      gst_element_factory_make ("audioconvert", "audio_convert1");
  data.audio_resample =
      gst_element_factory_make ("audioresample", "audio_resample");
  data.audio_sink = gst_element_factory_make ("autoaudiosink", "audio_sink");
  data.video_queue = gst_element_factory_make ("queue", "video_queue");
  data.audio_convert2 =
      gst_element_factory_make ("audioconvert", "audio_convert2");
  data.visual = gst_element_factory_make ("wavescope", "visual");
  data.video_convert =
      gst_element_factory_make ("videoconvert", "video_convert");
  data.video_sink = gst_element_factory_make ("autovideosink", "video_sink");
  data.app_queue = gst_element_factory_make ("queue", "app_queue");
  data.app_sink = gst_element_factory_make ("appsink", "app_sink");

  /* Create the empty pipeline */
  data.pipeline = gst_pipeline_new ("test-pipeline");

  if (!data.pipeline || !data.app_source || !data.tee || !data.audio_queue
      || !data.audio_convert1 || !data.audio_resample || !data.audio_sink
      || !data.video_queue || !data.audio_convert2 || !data.visual
      || !data.video_convert || !data.video_sink || !data.app_queue
      || !data.app_sink) {
    g_printerr ("Not all elements could be created.\n");
    return -1;
  }

  /* Configure wavescope */
  g_object_set (data.visual, "shader", 0, "style", 0, NULL);

  /* Configure appsrc */
  gst_audio_info_set_format (&info, GST_AUDIO_FORMAT_S16, SAMPLE_RATE, 1, NULL);
  audio_caps = gst_audio_info_to_caps (&info);
  g_object_set (data.app_source, "caps", audio_caps, "format", GST_FORMAT_TIME,
      NULL);
  g_signal_connect (data.app_source, "need-data", G_CALLBACK (start_feed),
      &data);
  g_signal_connect (data.app_source, "enough-data", G_CALLBACK (stop_feed),
      &data);

  /* Configure appsink */
  g_object_set (data.app_sink, "emit-signals", TRUE, "caps", audio_caps, NULL);
  g_signal_connect (data.app_sink, "new-sample", G_CALLBACK (new_sample),
      &data);
  gst_caps_unref (audio_caps);

  /* Link all elements that can be automatically linked because they have "Always" pads */
  gst_bin_add_many (GST_BIN (data.pipeline), data.app_source, data.tee,
      data.audio_queue, data.audio_convert1, data.audio_resample,
      data.audio_sink, data.video_queue, data.audio_convert2, data.visual,
      data.video_convert, data.video_sink, data.app_queue, data.app_sink, NULL);
  if (gst_element_link_many (data.app_source, data.tee, NULL) != TRUE
      || gst_element_link_many (data.audio_queue, data.audio_convert1,
          data.audio_resample, data.audio_sink, NULL) != TRUE
      || gst_element_link_many (data.video_queue, data.audio_convert2,
          data.visual, data.video_convert, data.video_sink, NULL) != TRUE
      || gst_element_link_many (data.app_queue, data.app_sink, NULL) != TRUE) {
    g_printerr ("Elements could not be linked.\n");
    gst_object_unref (data.pipeline);
    return -1;
  }

  /* Manually link the Tee, which has "Request" pads.
   * gst_element_request_pad_simple() requires GStreamer >= 1.20; older
   * versions use gst_element_get_request_pad() instead.
   * GST_PAD_NAME() reads the pad name without copying it (gst_pad_get_name()
   * would return a new string that has to be g_free()d). */
  tee_audio_pad = gst_element_request_pad_simple (data.tee, "src_%u");
  g_print ("Obtained request pad %s for audio branch.\n",
      GST_PAD_NAME (tee_audio_pad));
  queue_audio_pad = gst_element_get_static_pad (data.audio_queue, "sink");
  tee_video_pad = gst_element_request_pad_simple (data.tee, "src_%u");
  g_print ("Obtained request pad %s for video branch.\n",
      GST_PAD_NAME (tee_video_pad));
  queue_video_pad = gst_element_get_static_pad (data.video_queue, "sink");
  tee_app_pad = gst_element_request_pad_simple (data.tee, "src_%u");
  g_print ("Obtained request pad %s for app branch.\n",
      GST_PAD_NAME (tee_app_pad));
  queue_app_pad = gst_element_get_static_pad (data.app_queue, "sink");
  if (gst_pad_link (tee_audio_pad, queue_audio_pad) != GST_PAD_LINK_OK ||
      gst_pad_link (tee_video_pad, queue_video_pad) != GST_PAD_LINK_OK ||
      gst_pad_link (tee_app_pad, queue_app_pad) != GST_PAD_LINK_OK) {
    g_printerr ("Tee could not be linked\n");
    gst_object_unref (data.pipeline);
    return -1;
  }
  gst_object_unref (queue_audio_pad);
  gst_object_unref (queue_video_pad);
  gst_object_unref (queue_app_pad);

  /* Instruct the bus to emit signals for each received message, and connect to the interesting signals */
  bus = gst_element_get_bus (data.pipeline);
  gst_bus_add_signal_watch (bus);
  g_signal_connect (G_OBJECT (bus), "message::error", (GCallback) error_cb,
      &data);
  gst_object_unref (bus);

  /* Start playing the pipeline */
  gst_element_set_state (data.pipeline, GST_STATE_PLAYING);

  /* Create a GLib Main Loop and set it to run */
  data.main_loop = g_main_loop_new (NULL, FALSE);
  g_main_loop_run (data.main_loop);

  /* Release the request pads from the Tee, and unref them */
  gst_element_release_request_pad (data.tee, tee_audio_pad);
  gst_element_release_request_pad (data.tee, tee_video_pad);
  gst_element_release_request_pad (data.tee, tee_app_pad);
  gst_object_unref (tee_audio_pad);
  gst_object_unref (tee_video_pad);
  gst_object_unref (tee_app_pad);

  /* Free resources */
  gst_element_set_state (data.pipeline, GST_STATE_NULL);
  gst_object_unref (data.pipeline);
  g_main_loop_unref (data.main_loop);
  return 0;
}
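push_data() derives each buffer's timestamp from the running sample count (data->num_samples) instead of adding up durations. The sketch below reproduces that arithmetic and the waveform generator in plain C, without GStreamer, so the numbers can be checked directly. samples_to_ns() stands in for gst_util_uint64_scale (..., GST_SECOND, SAMPLE_RATE) and, like it, rounds down; it uses a plain 64-bit multiply, an assumption that only holds while the sample count stays below roughly 1.8e10. Oscillator and fill_chunk() are hypothetical names for the a/b/c/d fields and the loop in push_data().

```c
#include <stdint.h>

#define CHUNK_SIZE 1024                     /* bytes per buffer, as in the tutorial */
#define SAMPLE_RATE 44100                   /* samples per second, as in the tutorial */
#define NSEC_PER_SEC UINT64_C(1000000000)   /* same value as GST_SECOND */

/* Hypothetical mirror of the a, b, c, d fields of CustomData. */
typedef struct {
  float a, b, c, d;
} Oscillator;

/* Sample count -> nanoseconds, rounding down like gst_util_uint64_scale().
 * Assumes samples * NSEC_PER_SEC fits in 64 bits. */
static uint64_t
samples_to_ns (uint64_t samples, uint64_t rate)
{
  return samples * NSEC_PER_SEC / rate;
}

/* Fill one chunk of mono S16 samples with the same update rules as push_data(). */
static void
fill_chunk (Oscillator *o, int16_t *raw, int num_samples)
{
  float freq;

  o->c += o->d;                 /* slow oscillator that modulates freq */
  o->d -= o->c / 1000;
  freq = 1100 + 1000 * o->d;
  for (int i = 0; i < num_samples; i++) {
    o->a += o->b;               /* fast oscillator whose value becomes the sample */
    o->b -= o->a / freq;
    raw[i] = (int16_t) (500 * o->a);
  }
}
```

Each 512-sample buffer lasts 11609977 ns after rounding. After 100 buffers, the timestamp computed from the cumulative sample count is 1160997732 ns, whereas summing 100 rounded durations would give 1160997700 ns: a 32 ns drift that keeps growing over time, which computing from the sample count avoids.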

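Compiling

Besides the core gstreamer-1.0 library, this example also uses the audio library (gst/audio/audio.h), so pass gstreamer-audio-1.0 to pkg-config. That module depends on gstreamer-1.0, so the core flags are pulled in as well. Because appsrc and appsink are only used through GObject signals and properties here, gstreamer-app-1.0 is not needed: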

gcc basic-tutorial-8.c -o tutorial-8 `pkg-config --cflags --libs gstreamer-audio-1.0`

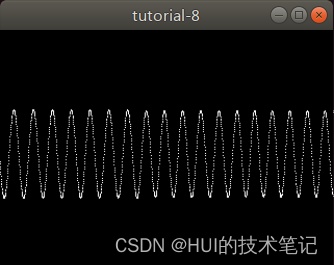

编译后,运行tutorial-8,就可以看到下图的效果:

Using pkg-config

$ pkg-config --cflags --libs gstreamer-audio-1.0

-pthread -I/usr/local/include/gstreamer-1.0 -I/usr/local/include/orc-0.4 -I/usr/local/include/gstreamer-1.0 -I/usr/include/glib-2.0 -I/usr/lib/x86_64-linux-gnu/glib-2.0/include -L/usr/local/lib/x86_64-linux-gnu -lgstaudio-1.0 -lgstbase-1.0 -lgstreamer-1.0 -lgobject-2.0 -lglib-2.0

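Which module names pkg-config accepts depends entirely on the .pc files that were installed or generated by the build (pkg-config --list-all prints every module it can find). For example, building a recent GStreamer version produces the following .pc files, and each of them can be queried with pkg-config: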

gst-editing-services-1.0.pc gstreamer-1.0.pc gstreamer-allocators-1.0.pc gstreamer-app-1.0.pc gstreamer-audio-1.0.pc gstreamer-bad-audio-1.0.pc

gstreamer-base-1.0.pc gstreamer-check-1.0.pc gstreamer-codecparsers-1.0.pc gstreamer-controller-1.0.pc gstreamer-fft-1.0.pc gstreamer-gl-1.0.pc

gstreamer-gl-egl-1.0.pc gstreamer-gl-prototypes-1.0.pc gstreamer-gl-x11-1.0.pc gstreamer-insertbin-1.0.pc gstreamer-mpegts-1.0.pc gstreamer-net-1.0.pc

gstreamer-pbutils-1.0.pc gstreamer-photography-1.0.pc gstreamer-play-1.0.pc gstreamer-player-1.0.pc gstreamer-plugins-bad-1.0.pc gstreamer-plugins-base-1.0.pc

gstreamer-riff-1.0.pc gstreamer-rtp-1.0.pc gstreamer-rtsp-1.0.pc gstreamer-rtsp-server-1.0.pc gstreamer-sctp-1.0.pc gstreamer-sdp-1.0.pc

gstreamer-tag-1.0.pc gstreamer-transcoder-1.0.pc gstreamer-video-1.0.pc gstreamer-webrtc-1.0.pc gst-validate-1.0.pc nice.pc

orc-0.4.pc orc-test-0.4.pc

For example:

pkg-config --cflags --libs gstreamer-play-1.0

pkg-config --cflags --libs gstreamer-rtsp-server-1.0

pkg-config --cflags --libs gstreamer-webrtc-1.0
