Face detection in a web browser page (Vue, opencv.js)

The code below is the official opencv.js example with a few simple adjustments.

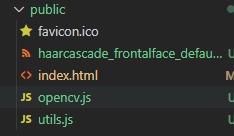

public directory

index.html

<!DOCTYPE html>

<html lang="">

<head>

<meta charset="utf-8" />

<meta http-equiv="X-UA-Compatible" content="IE=edge" />

<meta name="viewport" content="width=device-width,initial-scale=1.0" />

<link rel="icon" href="<%= BASE_URL %>favicon.ico" />

<title><%= htmlWebpackPlugin.options.title %></title>

<script src="utils.js" type="text/javascript"></script>

</head>

<body>

<noscript>

<strong

>We're sorry but <%= htmlWebpackPlugin.options.title %> doesn't work

properly without JavaScript enabled. Please enable it to

continue.</strong

>

</noscript>

<div id="app"></div>

<!-- built files will be auto injected -->

</body>

</html>
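Because `utils.js` is loaded with a plain `<script>` tag rather than imported as a module, the component reaches it through `window.Utils`, and opencv.js is likewise read from `window.cv`. A minimal sketch of a guard that reports which of those globals are still missing, which can help when the page fails with an "is not a constructor" error. `findMissingGlobals` is a hypothetical helper, not part of the official example:

```javascript
// Hypothetical helper: returns the names from `names` that are not defined
// on `scope` (in the browser, pass `window`).
function findMissingGlobals(names, scope) {
  return names.filter((name) => typeof scope[name] === "undefined");
}

// Usage with a stand-in object instead of `window`:
// Utils is present, cv has not been loaded yet.
const fakeWindow = { Utils: function Utils() {} };
console.log(findMissingGlobals(["Utils", "cv"], fakeWindow)); // [ 'cv' ]
```

In the page itself the call would be `findMissingGlobals(["Utils", "cv"], window)`, made before `new window.Utils(...)`.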

app.vue page

<template>

<div class="home">

<div class="control">

<button id="startAndStop" disabled @click="fnClick">Start</button>

</div>

<table cellpadding="0" cellspacing="0" width="0" border="0">

<tr>

<td>

<video id="videoInput" width="320" height="240"></video>

</td>

<td>

<canvas id="canvasOutput" width="320" height="240"></canvas>

</td>

<td></td>

<td></td>

</tr>

<tr>

<td>

<div class="caption">videoInput</div>

</td>

<td>

<div class="caption">canvasOutput</div>

</td>

<td></td>

<td></td>

</tr>

</table>

<p class="err" id="errorMessage"></p>

</div>

</template>

<script>

export default {

name: "Home",

data() {

return {

startAndStop: "",

canvasOutput: "",

canvasContext: "",

videoInput: "",

streaming: false,

utils: "",

};

},

methods: {

init() {},

fnClick() {

if (!this.streaming) {

this.utils.clearError();

this.utils.startCamera("qvga", this.onVideoStarted, "videoInput");

} else {

this.utils.stopCamera();

this.onVideoStopped();

}

},

onVideoStarted() {

this.streaming = true;

this.startAndStop.innerText = "Stop";

this.videoInput.width = this.videoInput.videoWidth;

this.videoInput.height = this.videoInput.videoHeight;

this.fnStart();

},

onVideoStopped() {

this.streaming = false;

this.canvasContext.clearRect(

0,

0,

this.canvasOutput.width,

this.canvasOutput.height

);

this.startAndStop.innerText = "Start";

},

fnStart() {

let _this = this;

let video = document.getElementById("videoInput");

let cv = window.cv;

let src = new cv.Mat(video.height, video.width, cv.CV_8UC4);

let dst = new cv.Mat(video.height, video.width, cv.CV_8UC4);

let gray = new cv.Mat();

let cap = new cv.VideoCapture(video);

let faces = new cv.RectVector();

let classifier = new cv.CascadeClassifier();

// load pre-trained classifiers

classifier.load("/haarcascade_frontalface_default.xml");

const FPS = 30;

function processVideo() {

try {

if (!_this.streaming) {

// clean and stop.

src.delete();

dst.delete();

gray.delete();

faces.delete();

classifier.delete();

return;

}

let begin = Date.now();

// start processing.

cap.read(src);

src.copyTo(dst);

cv.cvtColor(dst, gray, cv.COLOR_RGBA2GRAY, 0);

// detect faces.

classifier.detectMultiScale(gray, faces, 1.1, 3, 0);

// draw faces.

for (let i = 0; i < faces.size(); ++i) {

let face = faces.get(i);

let point1 = new cv.Point(face.x, face.y);

let point2 = new cv.Point(

face.x + face.width,

face.y + face.height

);

cv.rectangle(dst, point1, point2, [255, 0, 0, 255]);

}

cv.imshow("canvasOutput", dst);

// schedule the next one.

let delay = 1000 / FPS - (Date.now() - begin);

setTimeout(processVideo, delay);

} catch (err) {

_this.utils.printError(err);

}

}

// schedule the first one.

setTimeout(processVideo, 0);

},

},

mounted() {

let _this = this;

_this.startAndStop = document.getElementById("startAndStop");

_this.canvasOutput = document.getElementById("canvasOutput");

_this.videoInput = document.getElementById("videoInput");

_this.canvasContext = _this.canvasOutput.getContext("2d");

_this.utils = new window.Utils("errorMessage");

_this.utils.loadOpenCv(() => {

let faceCascadeFile = "/haarcascade_frontalface_default.xml";

_this.utils.createFileFromUrl(faceCascadeFile, faceCascadeFile, () => {

_this.startAndStop.removeAttribute("disabled");

});

});

},

};

</script>
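Two small pieces of `fnStart` can be pulled out and checked on their own: the frame-pacing arithmetic and the loop over the detected faces. The sketch below assumes only what the component already uses (`faces.size()`, `faces.get(i)` and the `x`, `y`, `width`, `height` fields of each rectangle). `nextFrameDelay` and `rectVectorToArray` are hypothetical names, and the object standing in for `cv.RectVector` is a fake used for illustration.

```javascript
// Time to wait before the next frame so the loop runs at roughly `fps`.
// Clamped at 0 for frames that took longer than their time budget.
function nextFrameDelay(fps, elapsedMs) {
  return Math.max(0, 1000 / fps - elapsedMs);
}

// Copies the rectangles out of a RectVector-like object into plain objects,
// so they remain usable after the vector itself has been deleted.
function rectVectorToArray(faces) {
  const rects = [];
  for (let i = 0; i < faces.size(); ++i) {
    const { x, y, width, height } = faces.get(i);
    rects.push({ x, y, width, height });
  }
  return rects;
}

// Stand-in for a cv.RectVector holding one detection.
const fakeFaces = {
  items: [{ x: 10, y: 20, width: 50, height: 60 }],
  size() {
    return this.items.length;
  },
  get(i) {
    return this.items[i];
  },
};

console.log(nextFrameDelay(30, 50)); // 0, the frame overran its budget
console.log(rectVectorToArray(fakeFaces));
```

Inside `processVideo`, the first helper would replace the inline `1000 / FPS - (Date.now() - begin)` expression, and the second could feed the detections to Vue state instead of drawing them directly.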

utils.js

function Utils(errorOutputId) { // eslint-disable-line no-unused-vars

let self = this;

this.errorOutput = document.getElementById(errorOutputId);

const OPENCV_URL = "/opencv.js";

this.loadOpenCv = function(onloadCallback) {

let script = document.createElement("script");

script.setAttribute("async", "");

script.setAttribute("type", "text/javascript");

script.addEventListener("load", async () => {

let cv = window.cv;

if (cv.getBuildInformation) {

console.log(cv.getBuildInformation());

onloadCallback();

} else {

// WASM

if (cv instanceof Promise) {

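cv = await cv;

// `cv` is a local copy here, so publish the resolved module for code that reads the global `cv` / `window.cv`.

window.cv = cv;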

console.log(cv.getBuildInformation());

onloadCallback();

} else {

cv["onRuntimeInitialized"] = () => {

console.log(cv.getBuildInformation());

onloadCallback();

};

}

}

});

script.addEventListener("error", () => {

self.printError("Failed to load " + OPENCV_URL);

});

script.src = OPENCV_URL;

let node = document.getElementsByTagName("script")[0];

node.parentNode.insertBefore(script, node);

};

this.createFileFromUrl = function(path, url, callback) {

let request = new XMLHttpRequest();

request.open("GET", url, true);

request.responseType = "arraybuffer";

request.onload = function(ev) {

if (request.readyState === 4) {

if (request.status === 200) {

let data = new Uint8Array(request.response);

cv.FS_createDataFile("/", path, data, true, false, false);

callback();

} else {

self.printError(

"Failed to load " + url + " status: " + request.status

);

}

}

};

request.send();

};

this.loadImageToCanvas = function(url, canvasId) {

let canvas = document.getElementById(canvasId);

let ctx = canvas.getContext("2d");

let img = new Image();

img.crossOrigin = "anonymous";

img.onload = function() {

canvas.width = img.width;

canvas.height = img.height;

ctx.drawImage(img, 0, 0, img.width, img.height);

};

img.src = url;

};

this.executeCode = function(textAreaId) {

try {

this.clearError();

let code = document.getElementById(textAreaId).value;

eval(code);

} catch (err) {

this.printError(err);

}

};

this.clearError = function() {

this.errorOutput.innerHTML = "";

};

this.printError = function(err) {

if (typeof err === "undefined") {

err = "";

} else if (typeof err === "number") {

if (!isNaN(err)) {

if (typeof cv !== "undefined") {

err = "Exception: " + cv.exceptionFromPtr(err).msg;

}

}

} else if (typeof err === "string") {

let ptr = Number(err.split(" ")[0]);

if (!isNaN(ptr)) {

if (typeof cv !== "undefined") {

err = "Exception: " + cv.exceptionFromPtr(ptr).msg;

}

}

} else if (err instanceof Error) {

err = err.stack.replace(/\n/g, "<br>");

}

this.errorOutput.innerHTML = err;

};

this.loadCode = function(scriptId, textAreaId) {

let scriptNode = document.getElementById(scriptId);

let textArea = document.getElementById(textAreaId);

if (scriptNode.type !== "text/code-snippet") {

throw Error("Unknown code snippet type");

}

textArea.value = scriptNode.text.replace(/^\n/, "");

};

this.addFileInputHandler = function(fileInputId, canvasId) {

let inputElement = document.getElementById(fileInputId);

inputElement.addEventListener(

"change",

(e) => {

let files = e.target.files;

if (files.length > 0) {

let imgUrl = URL.createObjectURL(files[0]);

self.loadImageToCanvas(imgUrl, canvasId);

}

},

false

);

};

function onVideoCanPlay() {

if (self.onCameraStartedCallback) {

self.onCameraStartedCallback(self.stream, self.video);

}

}

this.startCamera = function(resolution, callback, videoId) {

const constraints = {

qvga: { width: { exact: 320 }, height: { exact: 240 } },

vga: { width: { exact: 640 }, height: { exact: 480 } },

};

let video = document.getElementById(videoId);

if (!video) {

video = document.createElement("video");

}

let videoConstraint = constraints[resolution];

if (!videoConstraint) {

videoConstraint = true;

}

navigator.mediaDevices

.getUserMedia({ video: videoConstraint, audio: false })

.then(function(stream) {

video.srcObject = stream;

video.play();

self.video = video;

self.stream = stream;

self.onCameraStartedCallback = callback;

video.addEventListener("canplay", onVideoCanPlay, false);

})

.catch(function(err) {

self.printError("Camera Error: " + err.name + " " + err.message);

});

};

this.stopCamera = function() {

if (this.video) {

this.video.pause();

this.video.srcObject = null;

this.video.removeEventListener("canplay", onVideoCanPlay);

}

if (this.stream) {

this.stream.getVideoTracks()[0].stop();

}

};

}
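`startCamera` requests the resolution with `exact` constraints, so `getUserMedia` rejects with an `OverconstrainedError` on cameras that cannot deliver exactly 320x240 or 640x480. One possible variant, which is not in the official example, is to build the constraint with `ideal` so the browser may choose the closest supported size. In this sketch `buildVideoConstraint`, its `strict` flag, and the `RESOLUTIONS` table are hypothetical names:

```javascript
const RESOLUTIONS = {
  qvga: { width: 320, height: 240 },
  vga: { width: 640, height: 480 },
};

// Returns a value usable as the `video` member of the getUserMedia constraints.
// Unknown resolution names fall back to `true`, as startCamera does.
function buildVideoConstraint(resolution, strict = true) {
  if (!Object.prototype.hasOwnProperty.call(RESOLUTIONS, resolution)) {
    return true;
  }
  const size = RESOLUTIONS[resolution];
  const key = strict ? "exact" : "ideal";
  return {
    width: { [key]: size.width },
    height: { [key]: size.height },
  };
}

// Relaxed request: prefer 320x240 but accept the nearest supported size.
console.log(buildVideoConstraint("qvga", false));
```

The result would be passed as `navigator.mediaDevices.getUserMedia({ video: buildVideoConstraint("qvga", false), audio: false })`. With `ideal`, the delivered frame size may differ from the requested one, which is why `onVideoStarted` copies `videoWidth` and `videoHeight` onto the video element before the matrices are allocated.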
