k8s Installation: A Complete Guide to Configuring and Installing k8s

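Servers:

10.0.0.151 master (external IP: 172.16.0.252)

10.0.0.126 node

10.0.0.145 node

Set the hostname (sudo hostname only lasts until the next reboot; sudo hostnamectl set-hostname <name> makes the change persistent):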

sudo hostname et-k8s-master-151

sudo hostname et-k8s-node-126

sudo hostname et-k8s-node-145

Operating system environment (cat /etc/issue, uname -a)

OS: Ubuntu 18.04.1 LTS

Kernel: 4.15.0-39-generic

============= Required on both master and nodes: start ==================================

1. Disable the firewall

$ sudo ufw disable

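2. Disable SELinux

Ubuntu does not install SELinux by default (run getenforce to check whether it is installed). If it is installed, disable it as follows.

Temporarily: sudo setenforce 0

Permanently (permissive mode logs violations but does not enforce them):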

$ sudo vi /etc/selinux/config

SELINUX=permissive

3. Enable IPv4 forwarding in the kernel

$ sudo vim /etc/sysctl.conf

net.ipv4.ip_forward = 1 # enable IPv4 forwarding so the host can route packets

$ sudo sysctl -p

4. Disable swap

$ sudo swapoff -a

Also edit /etc/fstab and comment out the swap mount so that swap is not re-enabled after a reboot (comment out every line in /etc/fstab that contains swap; this system had none).
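The fstab edit described above can be scripted. The following is a minimal sketch, assuming GNU sed (as shipped with Ubuntu); the function name disable_swap_entries is hypothetical and not part of the original notes. It keeps a .bak copy and only touches lines that are not already comments.

```shell
#!/bin/sh
# Comment out every active swap entry in an fstab-style file.
# Assumption: GNU sed is available (for -i and -E).
disable_swap_entries() {
    fstab_file="$1"
    # Keep a backup next to the file before editing it in place.
    cp "$fstab_file" "$fstab_file.bak"
    # Skip lines that are already comments; prefix any other line that has
    # "swap" as a whitespace-delimited field with '#'.
    sed -i -E '/^[[:space:]]*#/!s/^(.*[[:space:]]swap[[:space:]].*)$/#\1/' "$fstab_file"
}
```

For the real file this would be run as root, for example by sourcing the function in a root shell and calling disable_swap_entries /etc/fstab.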

5. Configure iptables parameters so that traffic crossing the bridge also passes through the iptables/netfilter firewall

$ sudo tee /etc/sysctl.d/k8s.conf <<EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

$ sudo sysctl --system
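Before moving on it can help to confirm that the sysctl files really contain the expected settings. This is a small sketch, not part of the original notes; the helper name sysctl_key_enabled is hypothetical. It only inspects a config file, it does not query the running kernel. Note also that the net.bridge.* keys only exist once the br_netfilter kernel module is loaded, which is an assumption about the host rather than something the original text states.

```shell
#!/bin/sh
# Succeed if a sysctl-style config file sets the given key to 1.
sysctl_key_enabled() {
    conf_file="$1"
    key="$2"
    # Escape the dots in the key so they match literally in the regex.
    key_re=$(printf '%s' "$key" | sed 's/\./\\./g')
    # Accept "key = 1" with optional spaces and an optional trailing comment.
    grep -Eq "^[[:space:]]*${key_re}[[:space:]]*=[[:space:]]*1([[:space:]]|#|\$)" "$conf_file"
}
```

Example: sysctl_key_enabled /etc/sysctl.d/k8s.conf net.bridge.bridge-nf-call-iptables && echo ok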

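6. Install docker

0) Remove old docker packages

$ sudo apt-get remove docker docker-engine docker.io

(Along the way I switched to the USTC mirror and am not sure whether it took effect, see http://www.runoob.com/docker/ubuntu-docker-install.html. It seems the USTC mirror worked and the Aliyun one did not.)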

sudo cp /etc/apt/sources.list /etc/apt/sources.list.bak

sudo sed -i 's/archive.ubuntu.com/mirrors.ustc.edu.cn/g' /etc/apt/sources.list

sudo apt update

0) sudo apt-get update

1) Install dependencies so that apt can use https

$ sudo apt-get -y install apt-transport-https ca-certificates curl software-properties-common

$ curl -fsSL https://mirrors.ustc.edu.cn/docker-ce/linux/ubuntu/gpg | sudo apt-key add -

$ sudo add-apt-repository "deb [arch=amd64] https://mirrors.ustc.edu.cn/docker-ce/linux/ubuntu $(lsb_release -cs) stable"

sudo apt update

Install the libltdl7 package that docker-ce depends on (package page: https://ubuntu.pkgs.org/16.04/ubuntu-main-amd64/libltdl7_2.4.6-0.1_amd64.deb.html):

$ wget http://archive.ubuntu.com/ubuntu/pool/main/libt/libtool/libltdl7_2.4.6-2_amd64.deb

$ sudo dpkg -i libltdl7_2.4.6-2_amd64.deb

4) Install a specific docker-ce version

$ apt-cache madison docker-ce

$ sudo apt-get install -y docker-ce=18.06.1~ce~3-0~ubuntu (this is the version installed here)

5) Start docker and enable it at boot

$ sudo systemctl enable docker && sudo systemctl start docker

6) Add the current login user to the docker group

$ sudo usermod -aG docker ubuntu

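7. Configure docker startup options

Configure docker as follows:

Set up the Aliyun registry mirror to speed up Docker Hub pulls. Access to Docker Hub from within China is unstable, so pulls from Docker Hub are proxied through the Aliyun mirror.

Disable docker's iptables management.

To make pod IPs routable, stop docker from applying MASQUERADE to pod IPs; otherwise docker SNATs the pod IP source address to the node IP.

Set the docker storage driver to overlay2 (requires Linux kernel 4.0 or later and docker newer than 1.12).

Change the container storage root path according to your capacity planning (the default is /var/lib/docker).

The final configuration:

$ sudo tee /etc/docker/daemon.json <<EOF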

{

"iptables": false,

"ip-masq": false,

"storage-driver": "overlay2",

"graph": "/home/ubuntu/docker",

"insecure-registries":["172.16.0.185:5000"]

}

EOF

$ sudo systemctl restart docker

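If you add a "registry-mirrors" entry for the Aliyun mirror (the configuration above does not include one), replace 7trvj11i in its URL with the mirror prefix that Aliyun generated for you.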
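The daemon.json above can also be generated from parameters, which makes it easier to reuse on each node. The sketch below is an illustration only: render_daemon_json is a hypothetical helper, and it writes "data-root" instead of "graph" on the assumption that you are running a Docker release where "graph" is deprecated in favour of "data-root" (the original notes use "graph"). It prints the JSON and changes nothing on disk by itself.

```shell
#!/bin/sh
# Print a daemon.json body for the given data directory and insecure registry.
# Assumption: "data-root" is used in place of the older "graph" key.
render_daemon_json() {
    data_root="$1"
    registry="$2"
    cat <<EOF
{
  "iptables": false,
  "ip-masq": false,
  "storage-driver": "overlay2",
  "data-root": "${data_root}",
  "insecure-registries": ["${registry}"]
}
EOF
}
```

Example: render_daemon_json /home/ubuntu/docker 172.16.0.185:5000 | sudo tee /etc/docker/daemon.json, followed by sudo systemctl restart docker.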

Set an HTTP proxy for docker

If the machine is on an internal network and cannot reach the internet directly, docker also needs an http_proxy.

$ sudo mkdir -p /etc/systemd/system/docker.service.d

$ sudo tee /etc/systemd/system/docker.service.d/http-proxy.conf <<EOF

[Service]

Environment="HTTP_PROXY=http://xxx.xxx.xxx.xxx:xxxx"

Environment="NO_PROXY=localhost,127.0.0.0/8"

EOF

$ sudo systemctl daemon-reload

$ sudo systemctl restart docker
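Registry pulls usually go over HTTPS, so in practice an HTTPS_PROXY line is often needed next to HTTP_PROXY. The sketch below renders such a drop-in; render_proxy_dropin is a hypothetical helper and the proxy URL in the example is a placeholder, neither comes from the original notes.

```shell
#!/bin/sh
# Print a systemd drop-in that sets HTTP_PROXY, HTTPS_PROXY and NO_PROXY
# for the docker service. Arguments: proxy URL, NO_PROXY list.
render_proxy_dropin() {
    proxy_url="$1"
    no_proxy="$2"
    cat <<EOF
[Service]
Environment="HTTP_PROXY=${proxy_url}"
Environment="HTTPS_PROXY=${proxy_url}"
Environment="NO_PROXY=${no_proxy}"
EOF
}
```

Example with a placeholder proxy: render_proxy_dropin http://proxy.example.internal:3128 localhost,127.0.0.0/8 | sudo tee /etc/systemd/system/docker.service.d/http-proxy.conf. After the daemon-reload and restart, systemctl show --property=Environment docker should list the values.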

==========================================

Install kubeadm, kubelet, and kubectl

$ sudo apt-get install -y apt-transport-https curl

$ curl -s https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | sudo apt-key add -

$ sudo tee /etc/apt/sources.list.d/kubernetes.list <<EOF

deb http://mirrors.ustc.edu.cn/kubernetes/apt kubernetes-xenial main

deb https://mirrors.aliyun.com/kubernetes/apt kubernetes-xenial main

EOF

$ sudo apt-get update (ignore the error for ustc.edu.cn: "public key is not available: NO_PUBKEY 6A030B21BA07F4FB")

$ wget http://archive.ubuntu.com/ubuntu/pool/main/s/socat/socat_1.7.3.2-2ubuntu2_amd64.deb

$ sudo dpkg -i socat_1.7.3.2-2ubuntu2_amd64.deb

Install specific versions:

sudo apt-get install kubernetes-cni=0.6.0-00

sudo apt-get install -y kubelet=1.11.5-00 kubeadm=1.11.5-00 kubectl=1.11.5-00
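Because master and nodes must run matching versions, it can be useful to pin the packages after installing them. The sketch below only prints the commands (a dry run) so they can be reviewed first; k8s_pin_commands is a hypothetical helper, and using apt-mark hold is a suggestion that is not in the original notes.

```shell
#!/bin/sh
# Print (do not run) the apt commands that install one Kubernetes version
# and then hold the packages so a later apt upgrade leaves them alone.
k8s_pin_commands() {
    version="$1"   # for example 1.11.5-00, the version used in this guide
    echo "sudo apt-get install -y kubelet=${version} kubeadm=${version} kubectl=${version}"
    echo "sudo apt-mark hold kubelet kubeadm kubectl"
}
```

Example: k8s_pin_commands 1.11.5-00 prints the two commands; pipe the output to sh once it looks right.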

============= Required on both master and nodes: end ==================================

============= Master only: start ==================================

$ kubeadm config images list --kubernetes-version=v1.11.5

k8s.gcr.io/kube-apiserver-amd64:v1.11.5

k8s.gcr.io/kube-controller-manager-amd64:v1.11.5

k8s.gcr.io/kube-scheduler-amd64:v1.11.5

k8s.gcr.io/kube-proxy-amd64:v1.11.5

k8s.gcr.io/pause:3.1

k8s.gcr.io/etcd-amd64:3.2.18

k8s.gcr.io/coredns:1.1.3

Contents of image-process.sh:

#!/bin/bash

images=(kube-apiserver-amd64:v1.11.5 kube-controller-manager-amd64:v1.11.5 kube-scheduler-amd64:v1.11.5 kube-proxy-amd64:v1.11.5 pause:3.1 etcd-amd64:3.2.18)

for imageName in "${images[@]}" ; do

docker pull mirrorgooglecontainers/$imageName

docker tag mirrorgooglecontainers/$imageName k8s.gcr.io/$imageName

docker rmi mirrorgooglecontainers/$imageName

done

docker pull coredns/coredns:1.1.3

docker tag coredns/coredns:1.1.3 k8s.gcr.io/coredns:1.1.3

docker rmi coredns/coredns:1.1.3

Usage: run it with sudo /bin/bash ./image-process.sh
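The pull, tag, remove pattern in the script repeats for every image, here and again on the nodes. One way to factor it out is sketched below; mirror_image and the DRY_RUN variable are hypothetical names, not part of the original script. With DRY_RUN=1 the docker commands are printed instead of executed, which is handy for checking tags before pulling anything.

```shell
#!/bin/sh
# Pull one image from a mirror namespace, re-tag it under k8s.gcr.io and
# remove the mirror tag. Arguments: mirror namespace, image:tag.
# With DRY_RUN=1 the docker commands are only printed.
mirror_image() {
    mirror="$1"
    image="$2"
    for cmd in "pull ${mirror}/${image}" \
               "tag ${mirror}/${image} k8s.gcr.io/${image}" \
               "rmi ${mirror}/${image}"; do
        if [ "${DRY_RUN:-0}" = "1" ]; then
            echo "docker ${cmd}"
        else
            # Word splitting of $cmd into separate arguments is intentional.
            docker ${cmd} || return 1
        fi
    done
}
```

Examples: DRY_RUN=1 mirror_image mirrorgooglecontainers pause:3.1 to preview, then mirror_image coredns coredns:1.1.3 to perform the real pull and re-tag.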

(Reference: https://yq.aliyun.com/articles/498760)

http://www.bubuko.com/infodetail-2147528.html

sudo kubeadm init --kubernetes-version=v1.11.5 --pod-network-cidr=10.244.0.0/16 --apiserver-advertise-address=0.0.0.0

[init] using Kubernetes version: v1.11.5

[preflight] running pre-flight checks

I0115 11:11:26.403393 996 kernel_validator.go:81] Validating kernel version

I0115 11:11:26.403582 996 kernel_validator.go:96] Validating kernel config

[WARNING SystemVerification]: docker version is greater than the most recently validated version. Docker version: 18.06.1-ce. Max validated version: 17.03

[preflight/images] Pulling images required for setting up a Kubernetes cluster

[preflight/images] This might take a minute or two, depending on the speed of your internet connection

[preflight/images] You can also perform this action in beforehand using 'kubeadm config images pull'

[kubelet] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[preflight] Activating the kubelet service

[certificates] Generated ca certificate and key.

[certificates] Generated apiserver certificate and key.

[certificates] apiserver serving cert is signed for DNS names [et-k8s-master-130 kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 10.0.0.130]

[certificates] Generated apiserver-kubelet-client certificate and key.

[certificates] Generated sa key and public key.

[certificates] Generated front-proxy-ca certificate and key.

[certificates] Generated front-proxy-client certificate and key.

[certificates] Generated etcd/ca certificate and key.

[certificates] Generated etcd/server certificate and key.

[certificates] etcd/server serving cert is signed for DNS names [et-k8s-master-130 localhost] and IPs [127.0.0.1 ::1]

[certificates] Generated etcd/peer certificate and key.

[certificates] etcd/peer serving cert is signed for DNS names [et-k8s-master-130 localhost] and IPs [10.0.0.130 127.0.0.1 ::1]

[certificates] Generated etcd/healthcheck-client certificate and key.

[certificates] Generated apiserver-etcd-client certificate and key.

[certificates] valid certificates and keys now exist in "/etc/kubernetes/pki"

[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/admin.conf"

[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/kubelet.conf"

[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/controller-manager.conf"

[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/scheduler.conf"

[controlplane] wrote Static Pod manifest for component kube-apiserver to "/etc/kubernetes/manifests/kube-apiserver.yaml"

[controlplane] wrote Static Pod manifest for component kube-controller-manager to "/etc/kubernetes/manifests/kube-controller-manager.yaml"

[controlplane] wrote Static Pod manifest for component kube-scheduler to "/etc/kubernetes/manifests/kube-scheduler.yaml"

[etcd] Wrote Static Pod manifest for a local etcd instance to "/etc/kubernetes/manifests/etcd.yaml"

[init] waiting for the kubelet to boot up the control plane as Static Pods from directory "/etc/kubernetes/manifests"

[init] this might take a minute or longer if the control plane images have to be pulled

[apiclient] All control plane components are healthy after 60.502878 seconds

[uploadconfig] storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.11" in namespace kube-system with the configuration for the kubelets in the cluster

[markmaster] Marking the node et-k8s-master-130 as master by adding the label "node-role.kubernetes.io/master=''"

[markmaster] Marking the node et-k8s-master-130 as master by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[patchnode] Uploading the CRI Socket information "/var/run/dockershim.sock" to the Node API object "et-k8s-master-130" as an annotation

[bootstraptoken] using token: 4d48hm.emhkarzmederd9i5

[bootstraptoken] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstraptoken] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstraptoken] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstraptoken] creating the "cluster-info" ConfigMap in the "kube-public" namespace

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes master has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of machines by running the following on each node

as root:

kubeadm join 10.0.0.151:6443 --token yp9pwa.krkyjz87qf7upg5d --discovery-token-ca-cert-hash sha256:d521e1c940220baeb74746abbf0b9cd4ca7cda8b2256df8aadb92fa7d5ab1810

(If a step complains that something already exists, delete that item and run the step again.)

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Test command: kubectl get nodes

Generate a never-expiring token on the master:

kubeadm token generate

>yp9pwa.krkyjz87qf7upg5d

kubeadm token create yp9pwa.krkyjz87qf7upg5d --print-join-command --ttl=0

Setting --ttl=0 means the token never expires.
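A mistyped token or CA hash is a common reason for kubeadm join to fail, so a quick format check before pasting the command onto a node can save time. The sketch below relies on the documented formats: a bootstrap token is 6 lowercase alphanumerics, a dot, and 16 lowercase alphanumerics, and the discovery hash is sha256: followed by 64 hex digits. The function names are hypothetical, and a passing check only means the value is well-formed, not that the cluster accepts it.

```shell
#!/bin/sh
# Succeed only if the argument has the shape of a kubeadm bootstrap token.
valid_bootstrap_token() {
    printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'
}

# Succeed only if the argument is "sha256:" followed by 64 lowercase hex
# digits, the form passed to --discovery-token-ca-cert-hash.
valid_ca_cert_hash() {
    printf '%s\n' "$1" | grep -Eq '^sha256:[0-9a-f]{64}$'
}
```

Example: valid_bootstrap_token yp9pwa.krkyjz87qf7upg5d && echo "token looks well-formed"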

Test:

http://dockone.io/article/2514

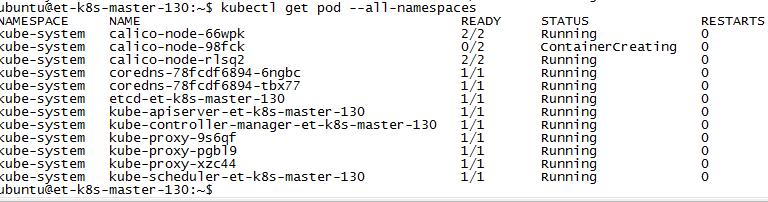

kubectl get pods --all-namespaces

(To clean up and re-initialize, run sudo kubeadm reset. Do not run it now; use it only when needed.)

4.2 Network deployment

1. Get the yaml file

$ cd ~/kubeadm

$ wget https://raw.githubusercontent.com/coreos/flannel/v0.10.0/Documentation/kube-flannel.yml

2. Modify the configuration file

2.1 Make the Network parameter in net-conf.json match the --pod-network-cidr passed to kubeadm init (with the value used above, the default needs no change).
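The consistency requirement in 2.1 can be checked mechanically. This sketch assumes the manifest keeps the "Network": "..." entry of net-conf.json on a single line, as the upstream kube-flannel.yml does; the function names are hypothetical.

```shell
#!/bin/sh
# Print the "Network" value found in a kube-flannel.yml style file.
flannel_network() {
    sed -n 's/.*"Network"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' "$1" | head -n 1
}

# Succeed only if the manifest's Network equals the given pod CIDR.
flannel_cidr_matches() {
    [ "$(flannel_network "$1")" = "$2" ]
}
```

Example: flannel_cidr_matches kube-flannel.yml 10.244.0.0/16 || echo "Network does not match --pod-network-cidr"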

v0.10.0 has a bug here: a toleration must be added to the flannel daemonset so that the flannel pod can be scheduled on nodes that are not yet Ready:

tolerations:

- key: node-role.kubernetes.io/master

  operator: Exists

  effect: NoSchedule

# add the toleration below

- key: node.kubernetes.io/not-ready

  operator: Exists

  effect: NoSchedule

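3. Download the image

Download the image required by the yaml file (quay.io/coreos/flannel:v0.10.0-amd64).

If quay.io is blocked and the image cannot be pulled, get it from https://hub.docker.com/r/jmgao1983/flannel/tags/ instead and then re-tag it with the original name.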

sudo docker pull jmgao1983/flannel:v0.10.0-amd64

sudo docker tag jmgao1983/flannel:v0.10.0-amd64 quay.io/coreos/flannel:v0.10.0-amd64

4. Deploy

kubectl apply -f kube-flannel.yml

Once this is deployed, the cluster can run normally.

Node operations ======================== 126, 145 ================================

Pull the required images

sudo docker pull mirrorgooglecontainers/pause:3.1

sudo docker tag mirrorgooglecontainers/pause:3.1 k8s.gcr.io/pause:3.1

sudo docker rmi mirrorgooglecontainers/pause:3.1

sudo docker pull mirrorgooglecontainers/kube-proxy-amd64:v1.11.5

sudo docker tag mirrorgooglecontainers/kube-proxy-amd64:v1.11.5 k8s.gcr.io/kube-proxy-amd64:v1.11.5

sudo docker rmi mirrorgooglecontainers/kube-proxy-amd64:v1.11.5

sudo kubeadm join 10.0.0.151:6443 --token yp9pwa.krkyjz87qf7upg5d --discovery-token-ca-cert-hash sha256:d521e1c940220baeb74746abbf0b9cd4ca7cda8b2256df8aadb92fa7d5ab1810

Master operations:

List the nodes: kubectl get nodes -o wide

kubectl get pods --all-namespaces

kubectl get nodes

kubectl describe pod

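Error: configmaps "kubelet-config-1.13" is forbidden (kubeadm on the joining node is asking for the 1.13 ConfigMap, while the init log above shows the master created "kubelet-config-1.11", which suggests the kubeadm/kubelet versions on the node and the master do not match)

Fix:

sudo apt-get remove cri-tools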

sudo apt-get install kubelet=1.13.2-00 kubeadm=1.13.2-00

sudo rm /etc/kubernetes/pki/ca.crt

sudo rm /etc/kubernetes/bootstrap-kubelet.conf

sudo kubeadm join 10.0.0.151:6443 --token yp9pwa.krkyjz87qf7upg5d --discovery-token-ca-cert-hash sha256:d521e1c940220baeb74746abbf0b9cd4ca7cda8b2256df8aadb92fa7d5ab1810

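Error: kubectl get pods --all-namespaces shows the node stuck in the NotReady state

Fix: see the note "Finding the specific pod error" (in the end it was resolved by running image-process.sh)

Reference: https://blog.csdn.net/liukuan73/article/details/83116271

(Not done here: after loading, the images all carried the calico/ prefix, whereas the yaml file references them with the quay.io/calico/ prefix, so they need to be re-tagged or the prefix in the yaml changed.)

Check that the configuration is correct: kubectl cluster-info

On the master, run kubectl get pod --all-namespaces to check whether the node has joined (this takes a few minutes)

If a pod never reaches Running, the following command shows why: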

kubectl describe pod kube-proxy-cxwx4 -n kube-system
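To find which pods to describe, the output of kubectl get pods --all-namespaces can be filtered for anything that is not Running. A minimal sketch, assuming the default column layout (NAMESPACE NAME READY STATUS RESTARTS AGE); pods_not_running is a hypothetical helper and it will also list pods in the Completed state.

```shell
#!/bin/sh
# Read `kubectl get pods --all-namespaces` output on stdin and print
# "namespace/name status" for every pod whose STATUS column is not Running.
pods_not_running() {
    # NR > 1 skips the header row; column 4 is STATUS in the default layout.
    awk 'NR > 1 && $4 != "Running" { print $1 "/" $2, $4 }'
}
```

Example: kubectl get pods --all-namespaces | pods_not_running, then run kubectl describe pod <name> -n <namespace> on each entry it prints.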

Finally done, after a lot of pain and tears!
