k8s minio

If you have landed here while looking for ways to back up and restore containerised stateful workloads on Kubernetes, I hope you won’t be disappointed. Before you delve in, a word of caution: this post covers a specific use case, not a typical one, and that is the reason I wrote it.

Here I am going to show you how to deploy a simple stateful MySQL pod, inject some data into it, and walk through the details of using Kasten and Kanister, with screenshots and commands that will help you understand how it works and try it yourself.

I highly recommend having a look at my earlier blog on backup and restore of applications running on Kubernetes using Velero.

There are glaring differences between how Velero and Kasten work when backing up and restoring Kubernetes applications. I won’t list those differences or their pros and cons here, because I think that would bias the reader.

One thing you should know before you read on is that the Kasten K10 platform is proprietary and licensed; it is free for smaller deployments, and there are several pricing tiers. Kanister, however, is an open source project from Kasten (just as Velero, formerly Heptio Ark, is an open source project from VMware).

What are Mutating Webhooks?

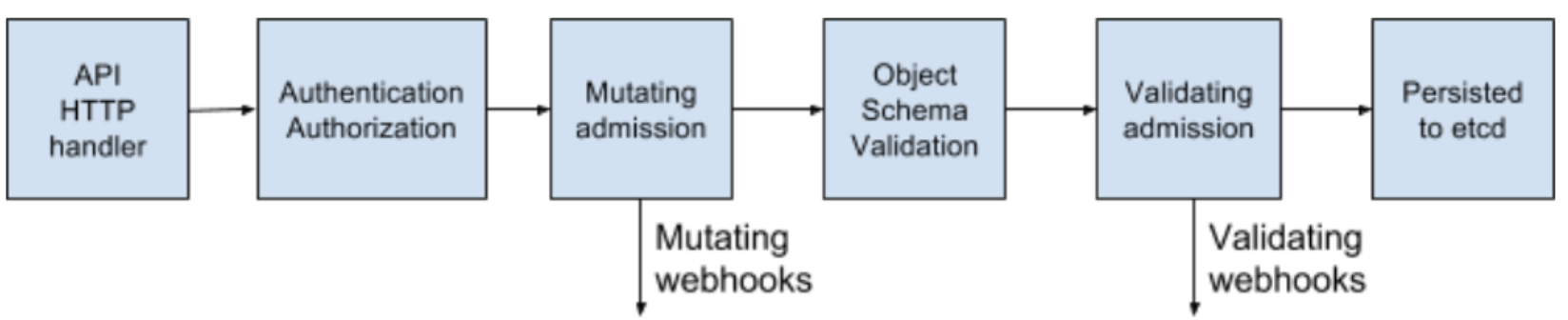

To understand this, you first need to understand what “Dynamic Admission Control” is in Kubernetes.

An admission controller is a piece of code that intercepts requests to the Kubernetes API server prior to persistence of the object, but after the request is authenticated and authorised. […] Admission controllers may be “validating”, “mutating”, or both. Mutating controllers may modify the objects they admit; validating controllers may not. […] If any of the controllers in either phase reject the request, the entire request is rejected immediately and an error is returned to the end-user.
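To make the two phases in that quote concrete, here is a minimal sketch in Python. It is only a toy model of the flow (mutate first, then validate, reject on the first failure), not the API server's actual implementation; the names `admit`, `add_default_label` and `require_name` are my own and purely illustrative.

```python
import copy
from typing import Callable, List, Optional

# Toy model: each controller is a plain function (an assumption for illustration).
Mutator = Callable[[dict], dict]             # returns the (possibly modified) object
Validator = Callable[[dict], Optional[str]]  # returns an error message, or None to admit


class AdmissionRejected(Exception):
    """Raised when a controller rejects the request."""


def admit(obj: dict, mutators: List[Mutator], validators: List[Validator]) -> dict:
    obj = copy.deepcopy(obj)  # never touch the caller's object
    # Phase 1: mutating controllers may change the object.
    for mutate in mutators:
        obj = mutate(obj)
    # Phase 2: validating controllers may only accept or reject.
    for validate in validators:
        error = validate(obj)
        if error is not None:
            raise AdmissionRejected(error)
    return obj  # only now would the object be persisted


def add_default_label(obj: dict) -> dict:
    obj.setdefault("labels", {})["managed"] = "true"
    return obj


def require_name(obj: dict) -> Optional[str]:
    return None if obj.get("name") else "name is required"


print(admit({"name": "mysql"}, [add_default_label], [require_name]))
# → {'name': 'mysql', 'labels': {'managed': 'true'}}
```

A request without a name would raise `AdmissionRejected`, which mirrors the "entire request is rejected" behaviour described above.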

Many advanced features in Kubernetes require an admission controller to be enabled in order to properly support the feature. As a result, a Kubernetes API server that is not properly configured with the right set of admission controllers is an incomplete server and will not support all the features you expect. Kubernetes ships with a list of admission controllers, and many of its native features are implemented by them.

MutatingAdmissionWebhook and ValidatingAdmissionWebhook are the ones used here. If you want to read more about MutatingAdmissionWebhook, there is a nicely written blog by Alex Leonhardt on this topic.

MutatingAdmissionWebhook has several use cases; one of them is injecting a sidecar into your workload or application. K10 implements a mutating webhook server that mutates workload objects by injecting a Kanister sidecar into the workload when the workload is created.
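To give a feel for what such a webhook returns, below is a rough Python sketch of an AdmissionReview response that appends a sidecar container to a Deployment through a JSON Patch. This is not K10's code. The response shape follows the Kubernetes `admission.k8s.io/v1` format, but the sidecar name, image, volume name and mount path are placeholders I made up for the example.

```python
import base64
import json


def sidecar_patch_response(review: dict, sidecar: dict) -> dict:
    """Build an AdmissionReview response that appends `sidecar` to a Deployment's containers."""
    patch = [{
        "op": "add",
        "path": "/spec/template/spec/containers/-",  # "-" appends to the array
        "value": sidecar,
    }]
    return {
        "apiVersion": "admission.k8s.io/v1",
        "kind": "AdmissionReview",
        "response": {
            "uid": review["request"]["uid"],  # the response must echo the request uid
            "allowed": True,
            "patchType": "JSONPatch",
            # The patch travels as base64-encoded JSON.
            "patch": base64.b64encode(json.dumps(patch).encode()).decode(),
        },
    }


# Hypothetical values, for illustration only.
sidecar = {
    "name": "kanister-sidecar",
    "image": "example.com/kanister-tools:latest",
    "volumeMounts": [{"name": "data", "mountPath": "/var/lib/mysql"}],
}
review = {"request": {"uid": "abc-123"}}

response = sidecar_patch_response(review, sidecar)
print(json.dumps(response, indent=2))
```

The API server applies the decoded patch to the incoming object before it is persisted, which is how a pod ends up with a container that was never in the original manifest.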

The Kubernetes cluster I am using is IBM Cloud IKS, and my workload runs on this managed service platform. As of today the underlying storage provider used in IKS doesn’t support K10, so the way around that limitation is to use generic backup, which requires adding a sidecar container to your workload pod and annotations to your application deployment.

Now let’s get into the deployment of K10 and all its necessary dependencies. There are specific prerequisite scripts to run before you deploy K10. We will use Helm to deploy K10; please refer to the K10 documentation for more details about the prerequisites and procedure.

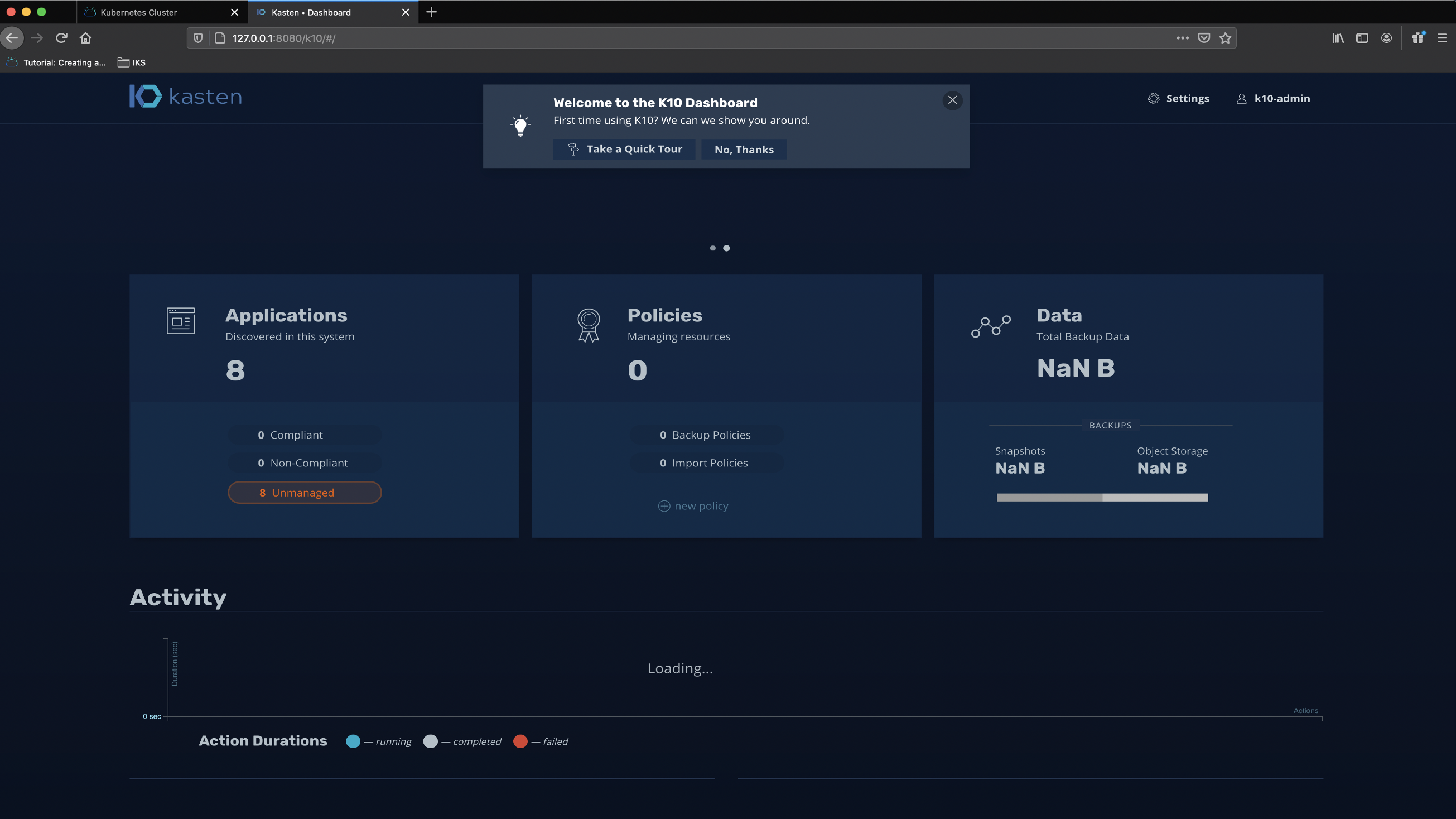

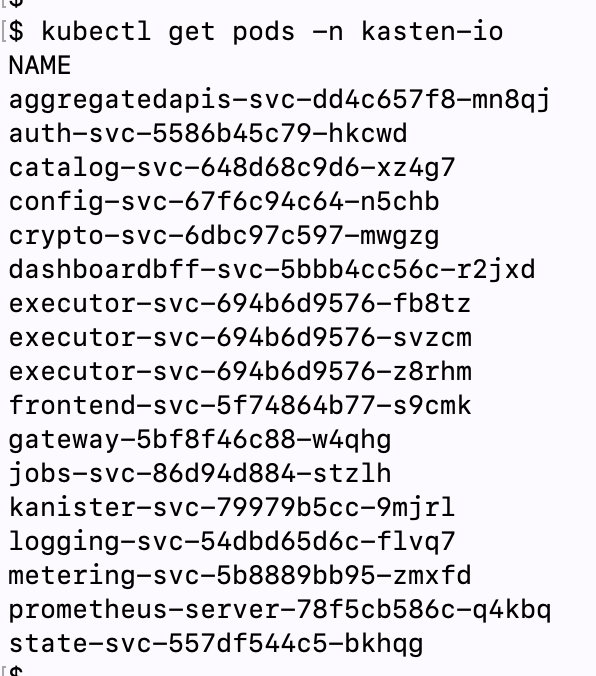

K10 gets deployed in its own namespace and comes with a whole bunch of pods, as shown below.

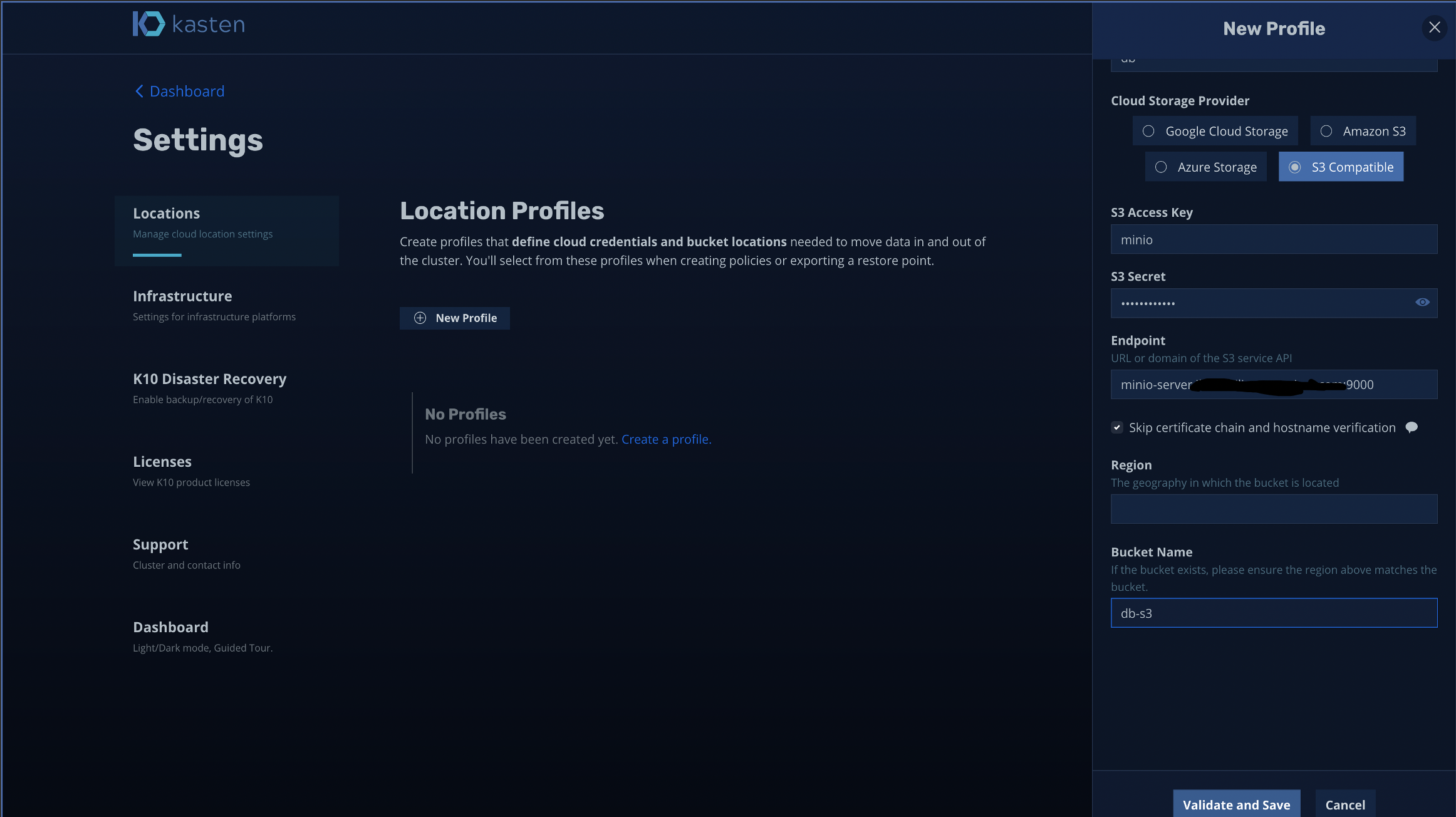

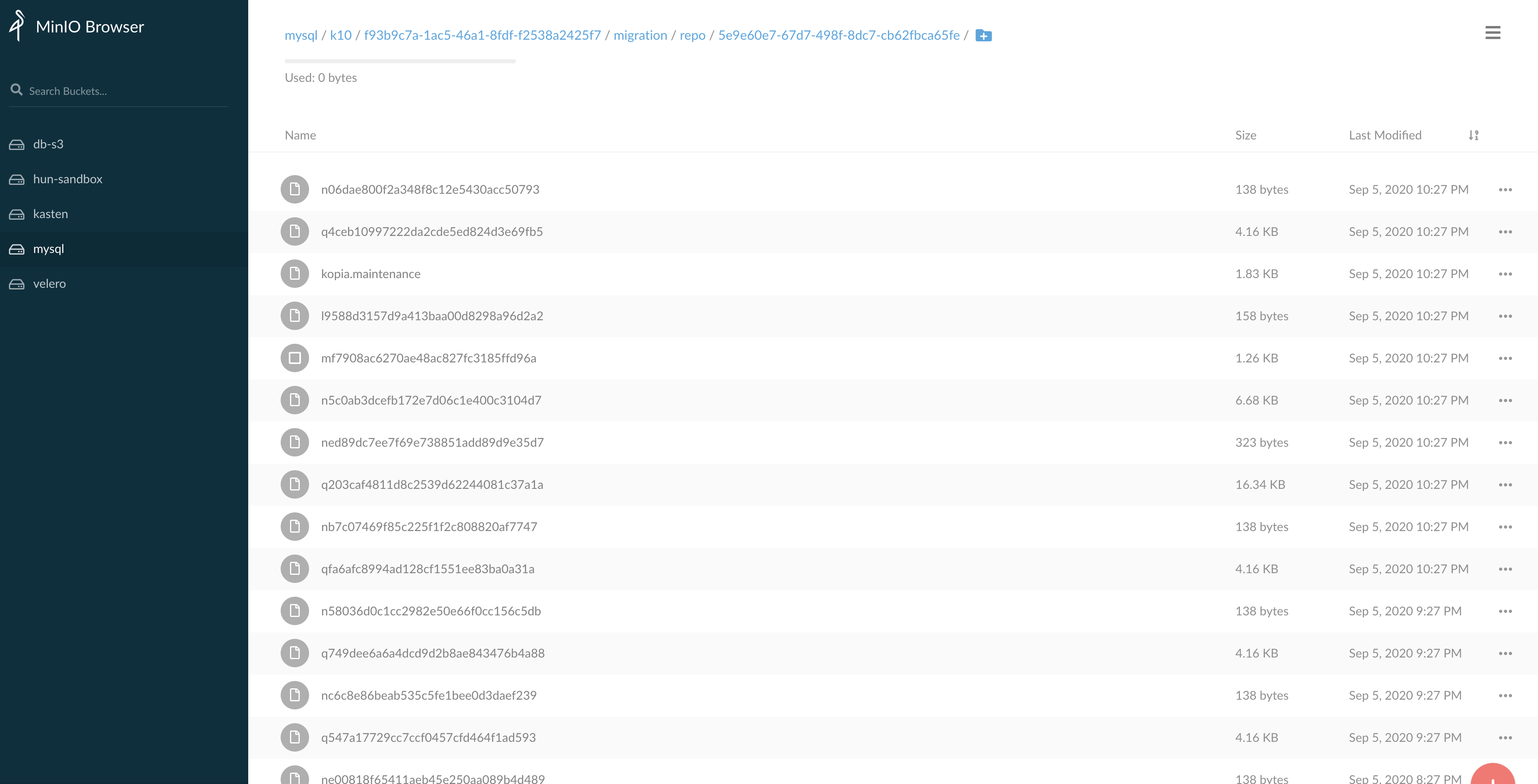

The next step is setting up Location profiles for K10. Location profiles are simply backup storage targets. K10 supports the major cloud object stores as well as any other S3-compatible storage. I am using a Minio instance for backup.

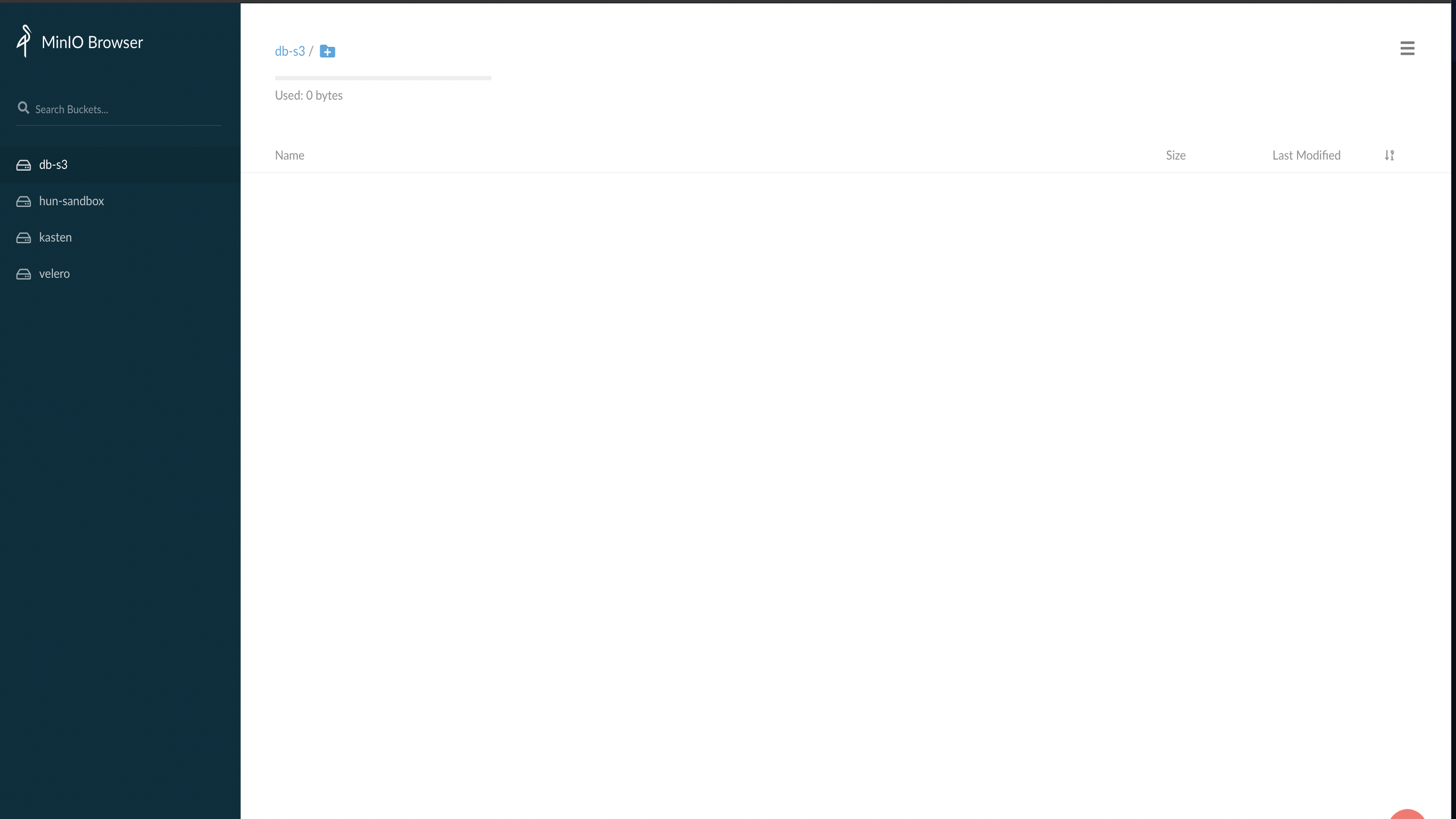

I have configured a new Minio bucket (called db-s3) and Minio credentials (key and secret) for Kasten, as shown below.

One thing in Kasten that differs significantly from what Velero provides is the ability to create multiple profiles for backup target storage. This lets you have multiple S3-compatible or cloud object stores, such as AWS S3 or GCP/Azure object stores. I can see this being useful for supporting different preferences according to application needs and Kubernetes architecture, for example where multiple tenants share one Kubernetes cluster.

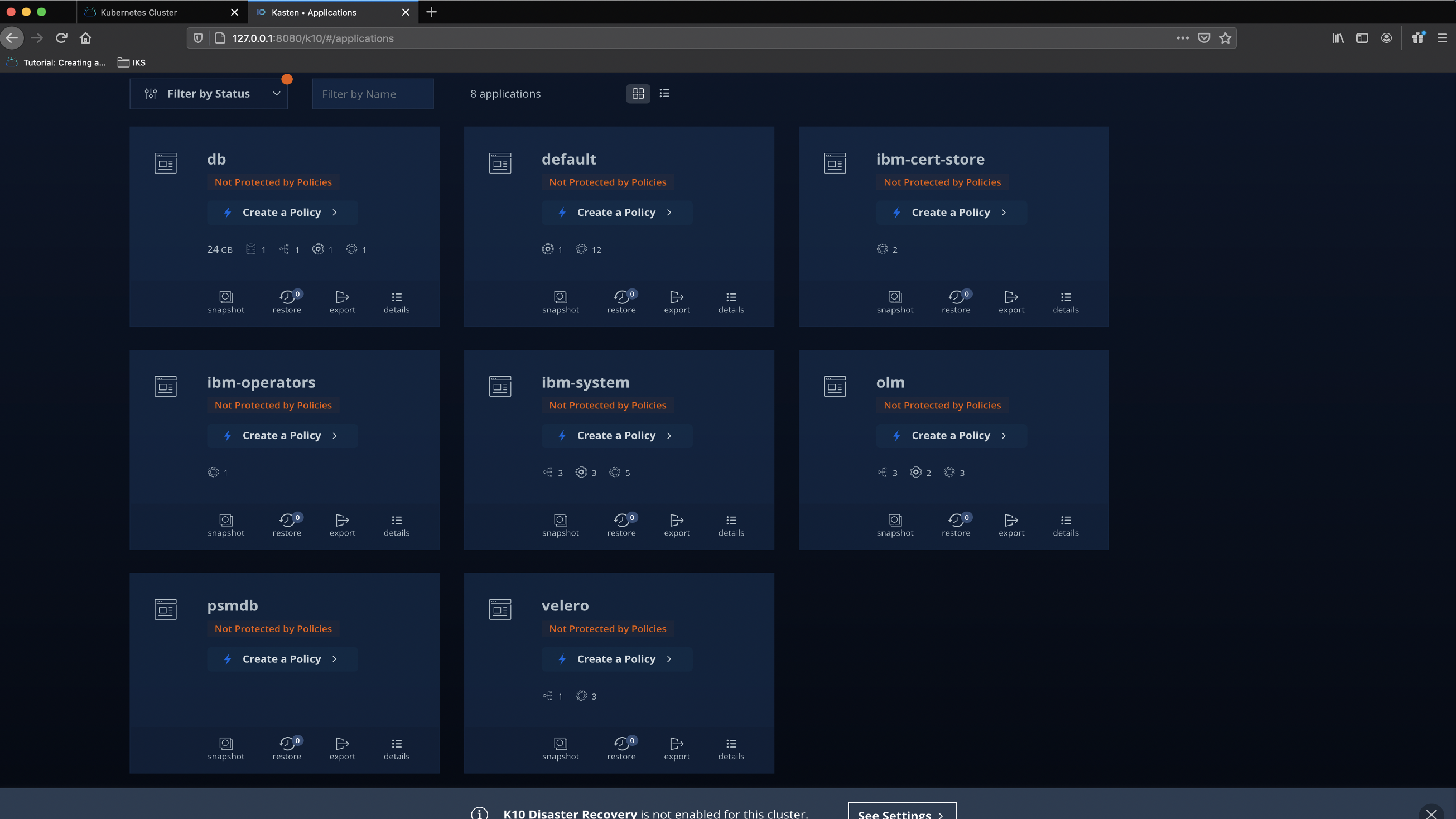

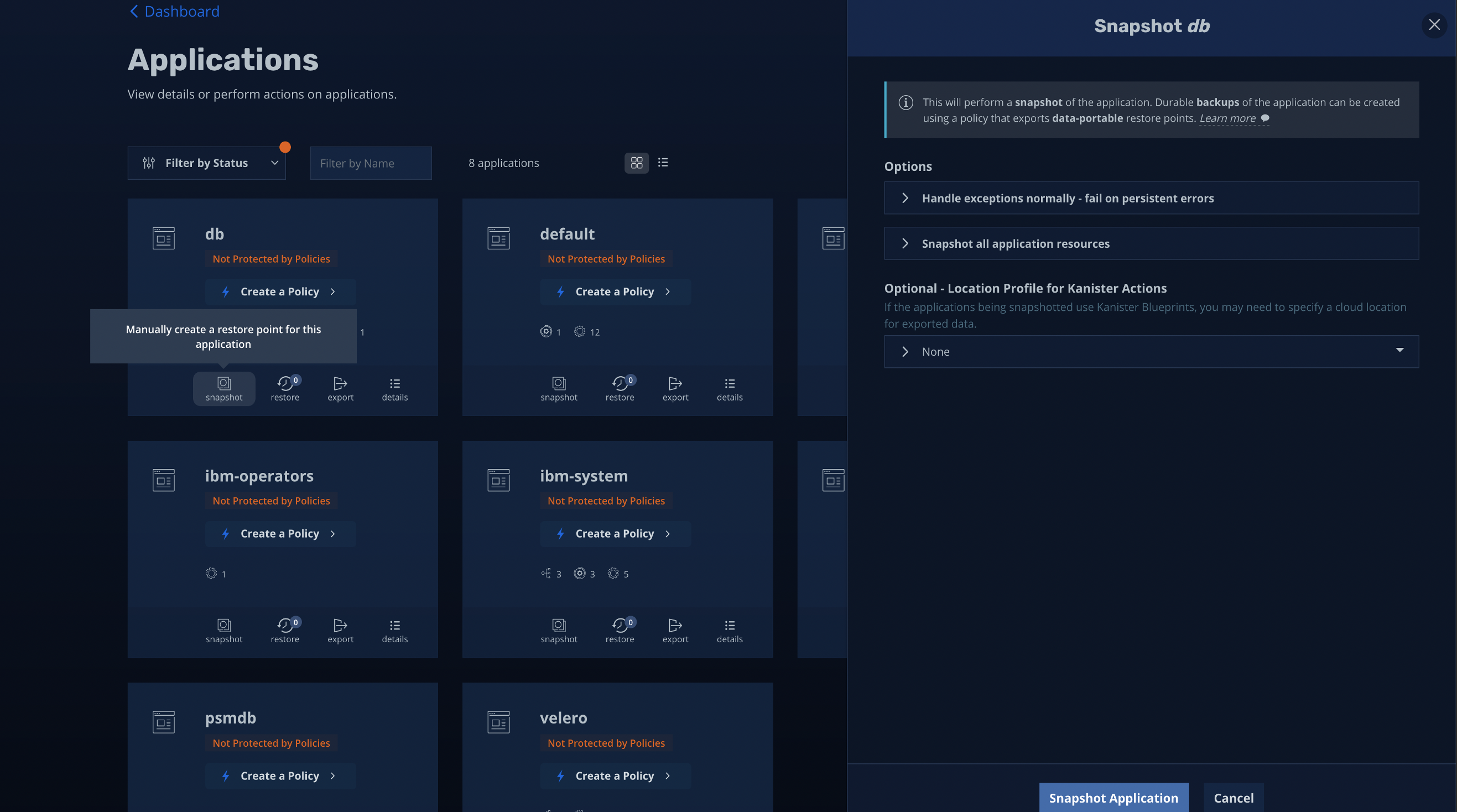

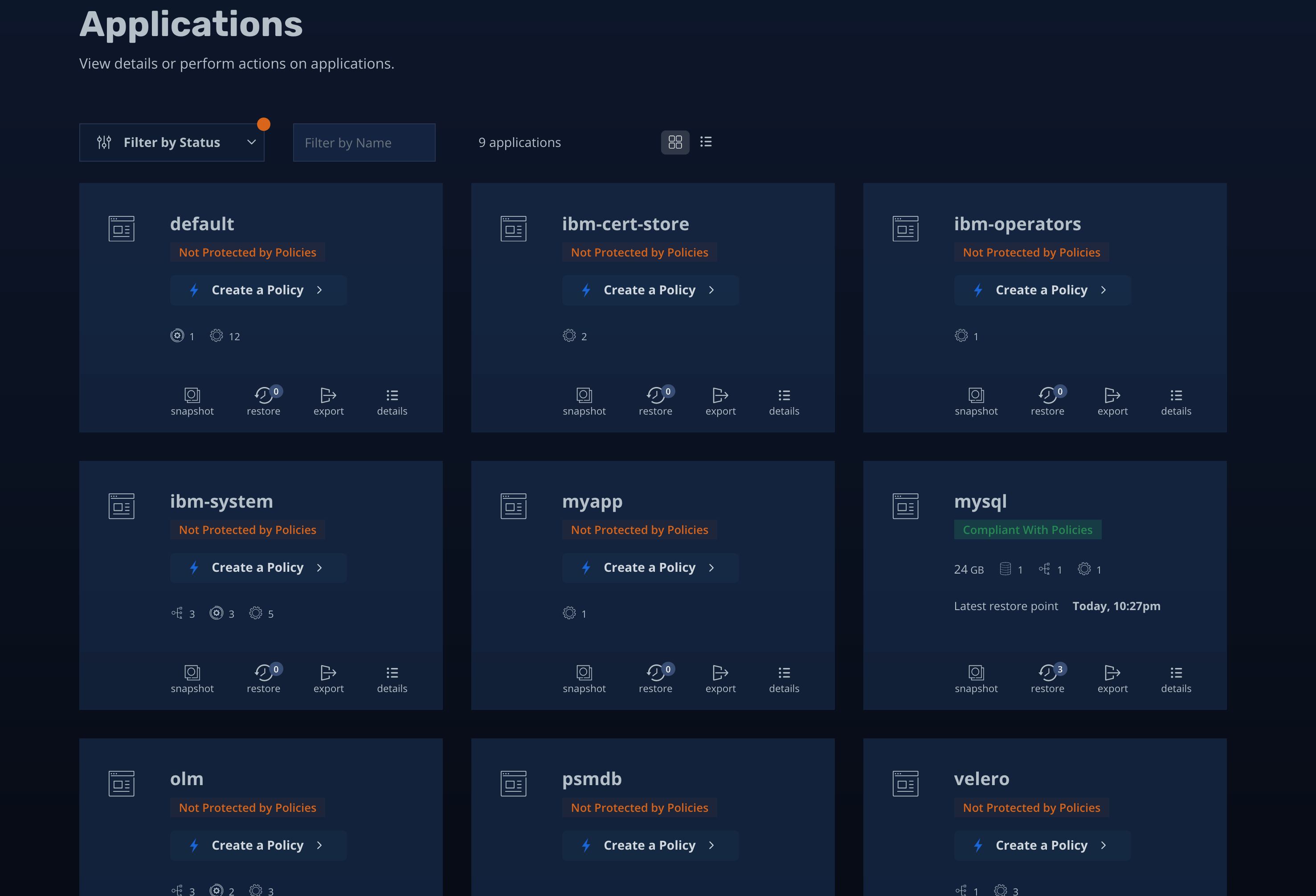

Kasten also discovers the applications that are part of the cluster and automatically pulls all the metadata and objects associated with each application, such as its Persistent Volumes, Secrets, ConfigMaps, Services, etc. It also gives you a nice visualisation of each namespace and shows whether backup policies are defined and whether those policies are protecting the applications. This provides great visibility into the Kubernetes workload.

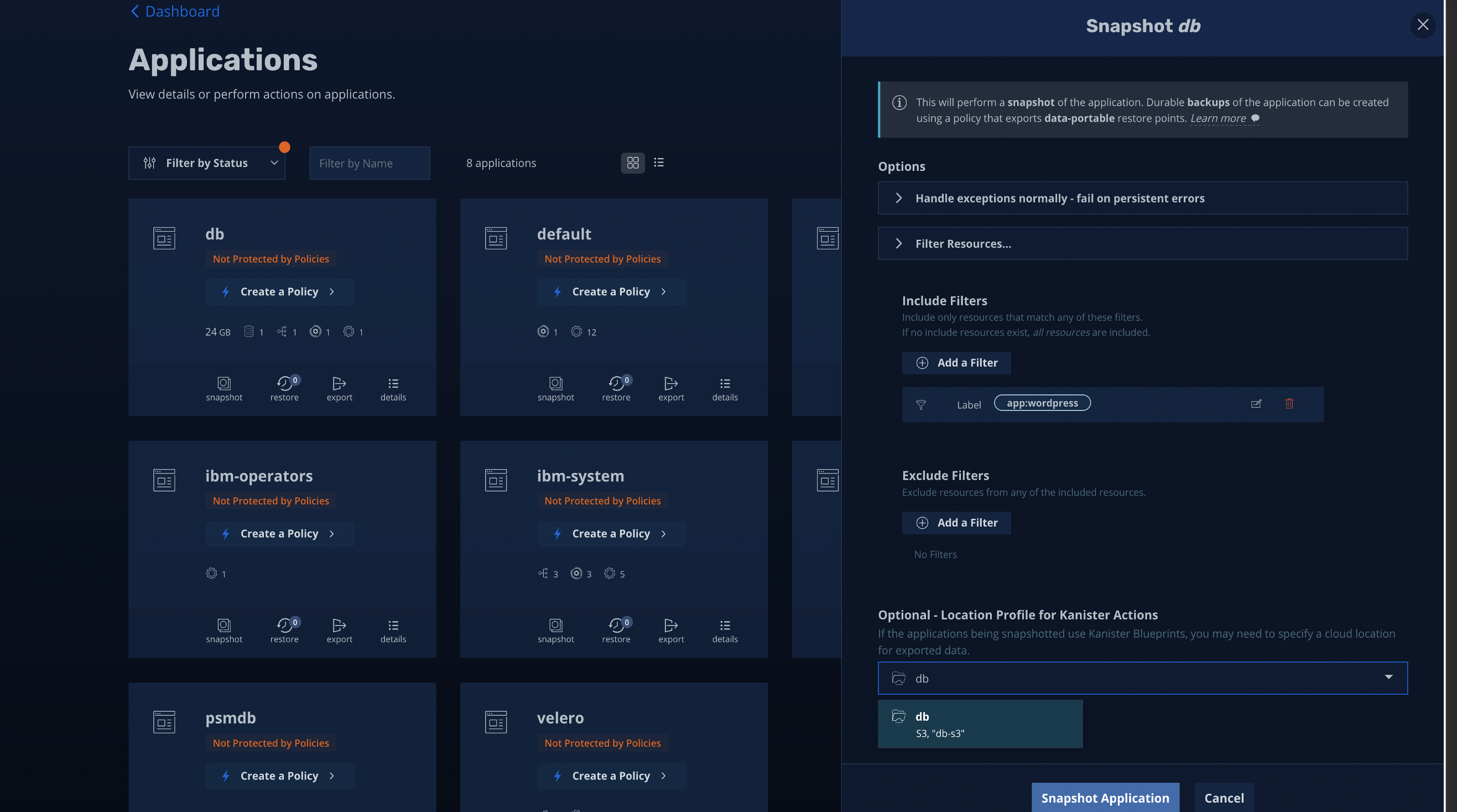

It gives you the ability to take a manual snapshot, or to create a policy and then run it against the application or namespace that needs to be protected. In the above image you can see that I am taking a manual snapshot, and it gives me the option to either snapshot the complete application or select specific labels in the application.

The above image shows how I can choose a label from all the discovered labels, which show up in the drop-down menu. This is very helpful and makes creating backup policies much simpler; the only thing I need to do is use a meaningful name as the label in all of my Kubernetes application deployment YAML.

Creating a MySQL Deployment with Persistent Volume

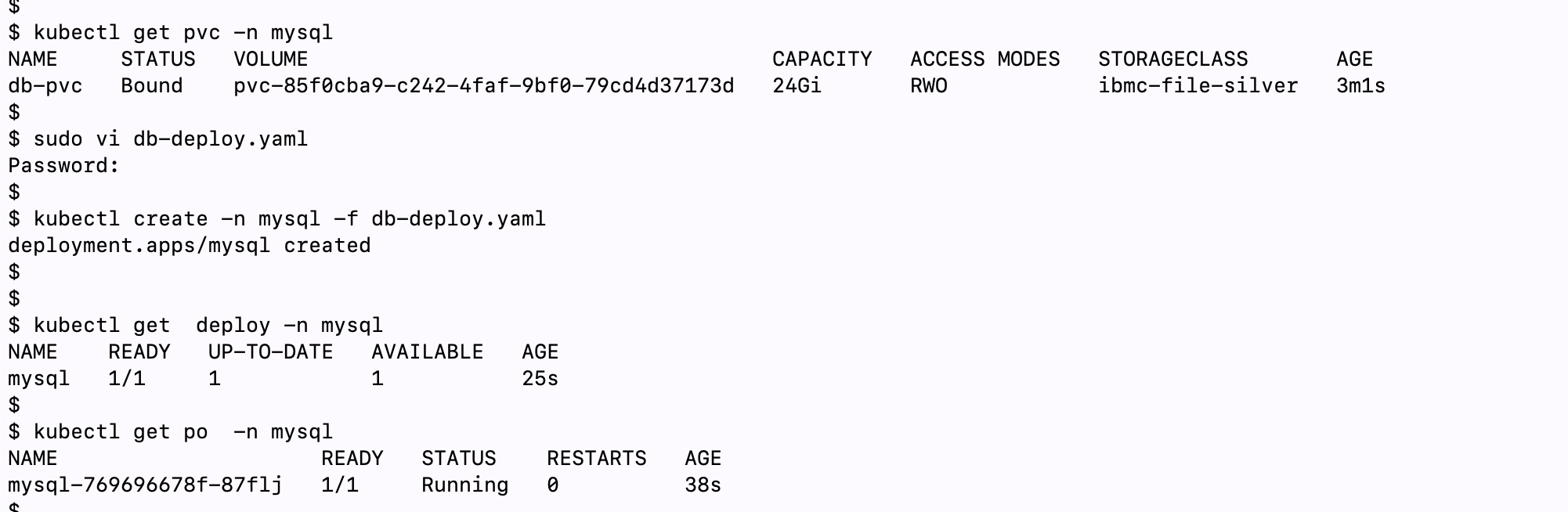

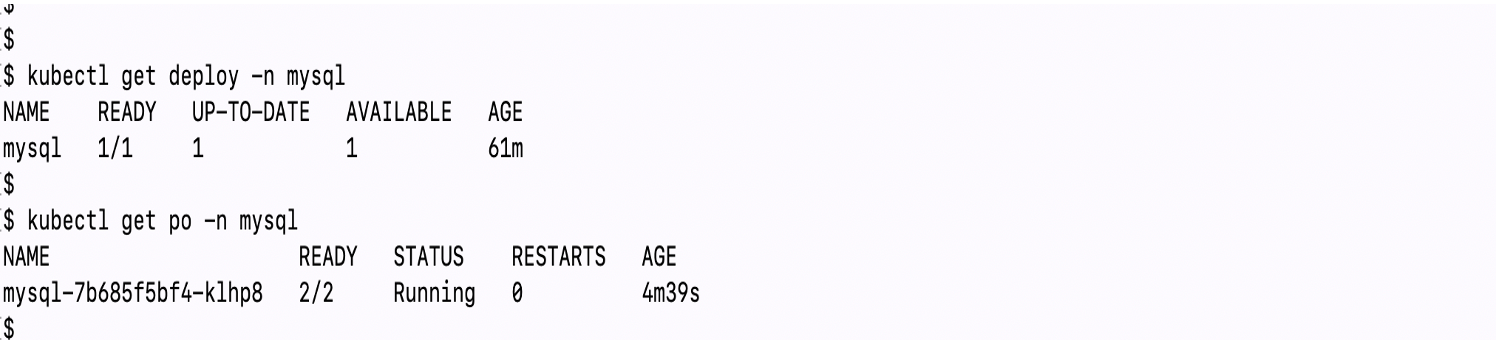

Below you can see a typical MySQL deployment with an associated Persistent Volume. Note that the READY column says 1/1, which means the pod has a single container and that container is ready. This is expected, as I have set the MySQL replica count to 1 and the pod runs only the MySQL container at this point. We will come back to this column later in the article and look at it in more detail.

I will create a dummy database and inject some sample data into it, so that the data persists in the persistent volume mapped to this pod.

Sidecar Injection

Earlier in the blog we talked about mutating webhooks and their significance. A mutating webhook is a Kubernetes admission control mechanism, which Kasten uses here to inject the Kanister sidecar container required to take a generic backup. To reiterate, the reason for using a sidecar container is to mount the data volume of the application that needs protection.

The sidecar injection can be enabled either with a Helm upgrade or by patching ordinary Kubernetes resources with the necessary changes in their manifests. Since I used Helm to install K10, I will continue using Helm to add the Kanister sidecar container. Run the following command to get that done.

    helm upgrade k10 kasten/k10 --namespace=kasten-io -f k10_val.yaml \
        --set injectKanisterSidecar.enabled=true \
        --set-string injectKanisterSidecar.namespaceSelector.matchLabels.name=mysql

After you run the above Helm command, a sidecar container gets created alongside the workload pod that needs to be protected. Look closely at the second part of the command, shown below.

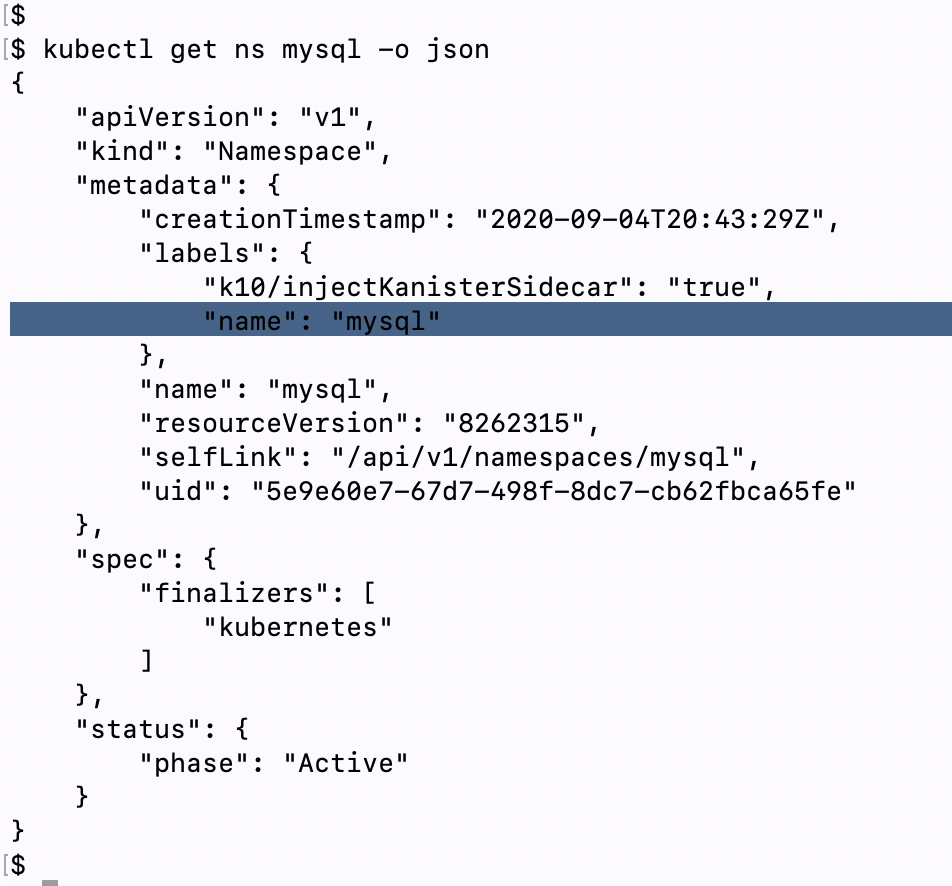

    --set-string injectKanisterSidecar.namespaceSelector.matchLabels.k10/injectKanisterSidecar=true

This uses namespaceSelector to select the namespace that contains the application or workload you intend to protect. In my case it is the namespace called mysql, in which I have deployed MySQL. We do not typically label the namespaces we create, but it is imperative to do so here, because the labels are how we tell K10, via Helm, which namespace to protect. See below the JSON of the namespace ‘mysql’.
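The matching rule behind `matchLabels` is simple: a namespace is selected only when every key/value pair in the selector is present in its labels. Here is a small Python sketch of that rule; the function name and the sample namespaces are mine, used only for illustration.

```python
def matches(match_labels: dict, labels: dict) -> bool:
    """True when every key/value pair in the selector is present on the object."""
    return all(labels.get(key) == value for key, value in match_labels.items())


# Hypothetical namespaces and their labels.
namespaces = {
    "mysql": {"name": "mysql"},
    "default": {},
    "staging": {"name": "staging", "team": "db"},
}

selector = {"name": "mysql"}
selected = [ns for ns, labels in namespaces.items() if matches(selector, labels)]
print(selected)
# → ['mysql']
```

This is why the namespace has to carry the label: an unlabelled namespace never matches the selector, so nothing in it would get the sidecar.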

Alternatively, you can choose to protect a specific pod or workload with objectSelector, using the option below in the Helm upgrade.

    --set-string injectKanisterSidecar.objectSelector.matchLabels.key=value

This Helm upgrade is now set to add a sidecar container to workloads in the namespace ‘mysql’ and to annotate the workload or pod within that namespace.

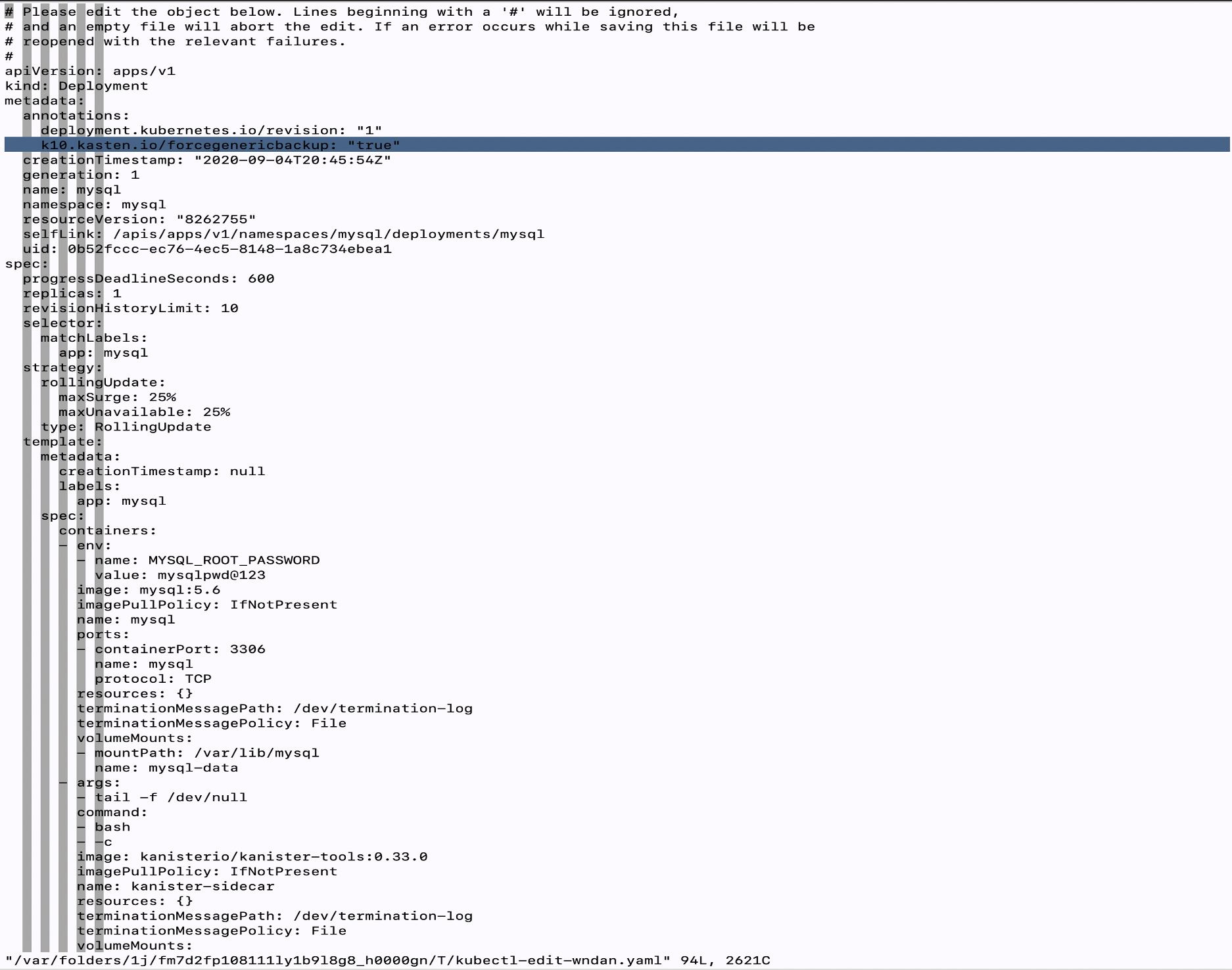

In the image below, have a look at my MySQL deployment and notice the selected line in the YAML file, where you can see the annotation k10.kasten.io/forcegenericbackup: "true".

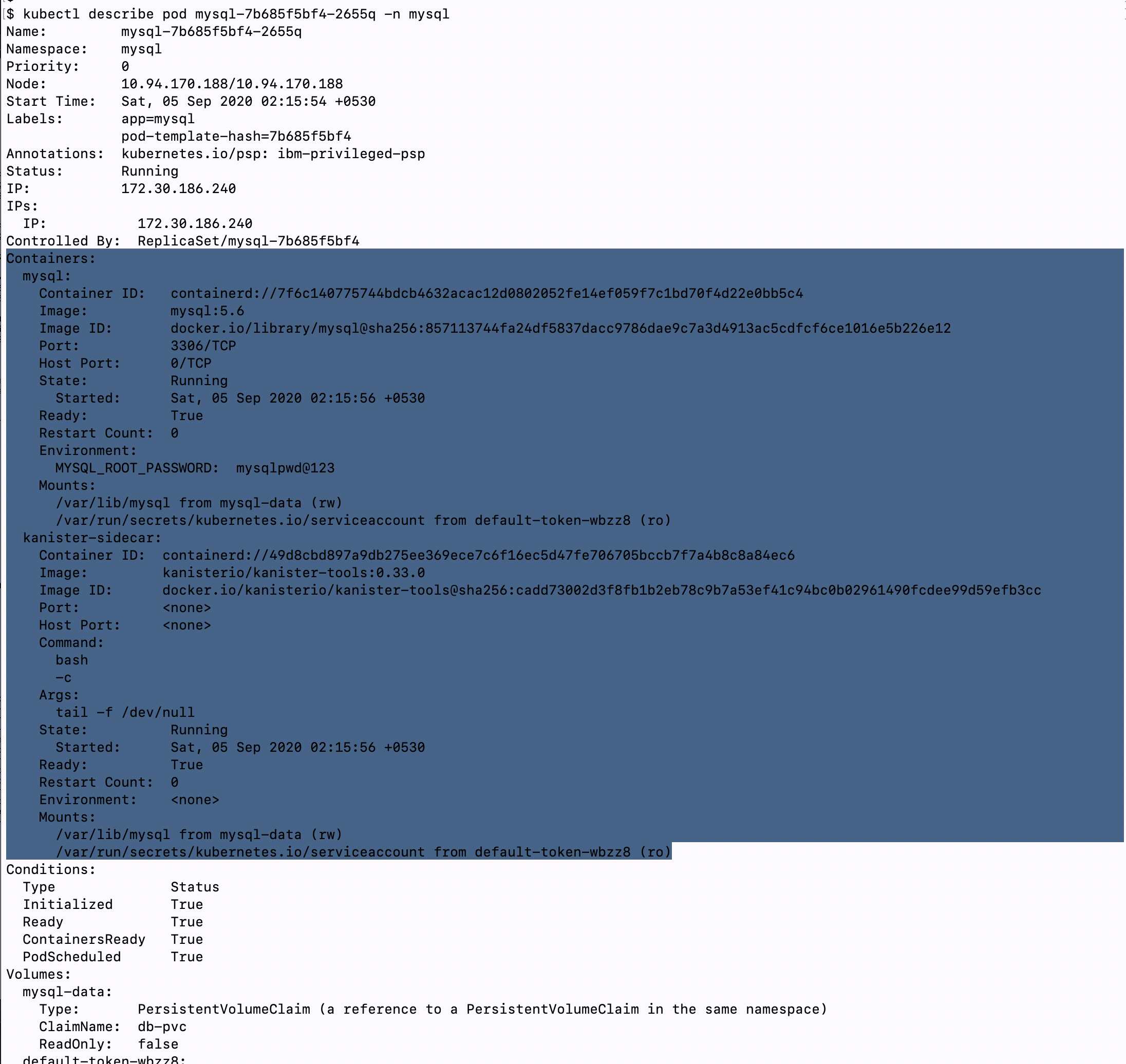

Look at the above image and note the ‘READY’ column: it reads 2/2, which means there are two containers in the MySQL pod and both are ready. If you remember, in the earlier section the pod had just one container, MySQL itself. After the Helm upgrade, the sidecar container is added to the same pod.

The above image shows the output of the pod description. You can see two containers: one is the actual MySQL workload and the second is the Kanister sidecar container. Also note the volume mounts: the sidecar container's mount point is exactly the same as the MySQL container's, which is what is required to enable the generic backup we discussed earlier.
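If you would rather check this than eyeball it, the comparison is easy to script. The Python sketch below takes a pod spec as a dictionary (for example, parsed from the pod's JSON output) and returns the volume mounts two containers have in common. The container names, volume name and mount path are assumptions for the example; use whatever your own pod description shows.

```python
def shared_mounts(pod_spec: dict, first: str, second: str) -> set:
    """Return the (volume name, mount path) pairs that both containers mount."""
    def mounts(container_name: str) -> set:
        for container in pod_spec["containers"]:
            if container["name"] == container_name:
                return {(m["name"], m["mountPath"]) for m in container.get("volumeMounts", [])}
        raise KeyError(container_name)

    return mounts(first) & mounts(second)


# Hypothetical pod spec, trimmed to the fields that matter here.
pod_spec = {
    "containers": [
        {"name": "mysql",
         "volumeMounts": [{"name": "data", "mountPath": "/var/lib/mysql"}]},
        {"name": "kanister-sidecar",
         "volumeMounts": [{"name": "data", "mountPath": "/var/lib/mysql"}]},
    ]
}

print(shared_mounts(pod_spec, "mysql", "kanister-sidecar"))
# → {('data', '/var/lib/mysql')}
```

An empty result would mean the sidecar cannot see the application's data, and a generic backup would have nothing to copy.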

I should say that not all application deployments would prefer a sidecar container being used for backup, but in scenarios like this one, where the underlying storage provider doesn’t support Kasten, this is the method K10 adopts to solve it.

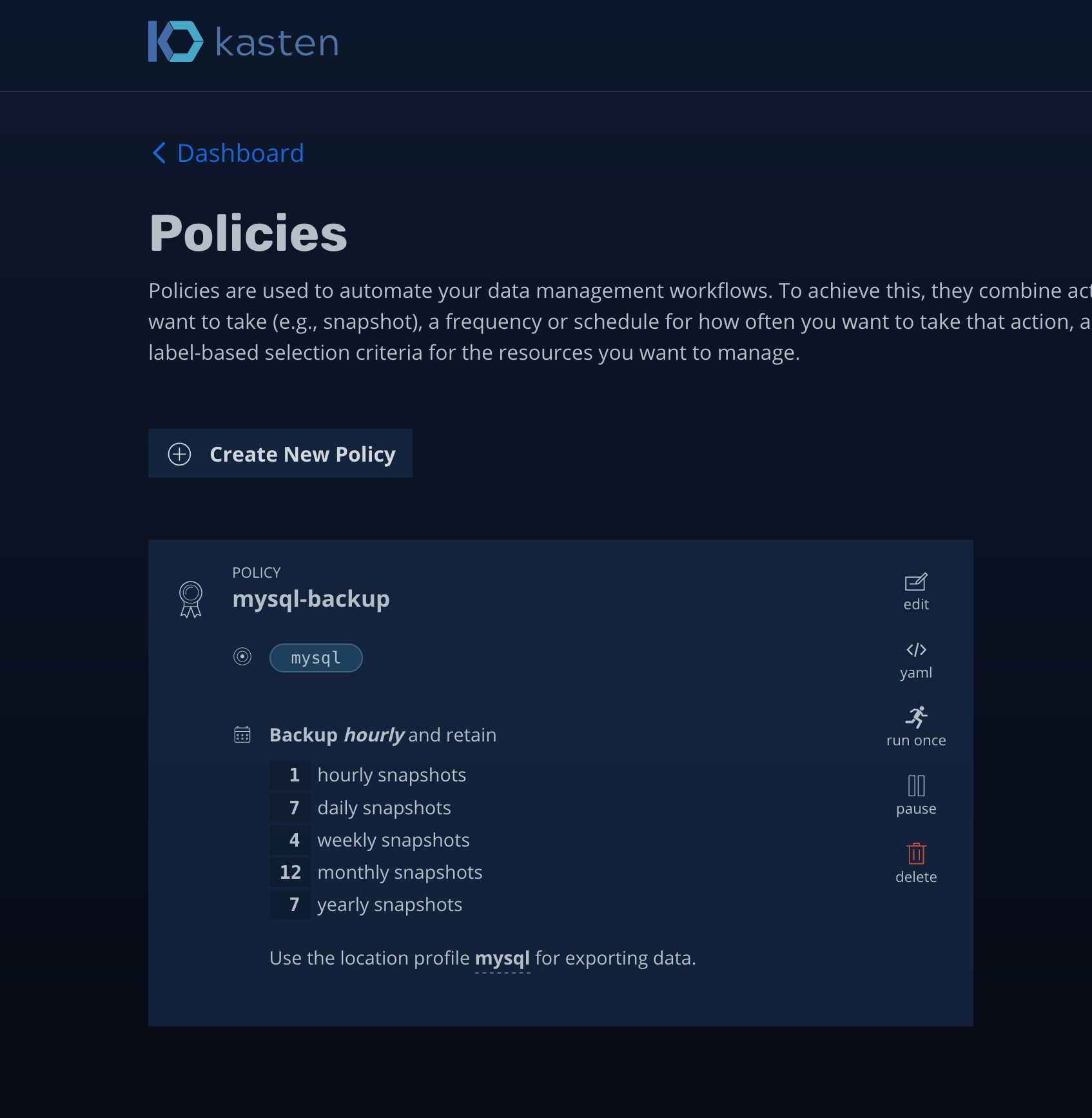

Creating a Backup Policy

Now let’s create a backup policy in K10 and run it.

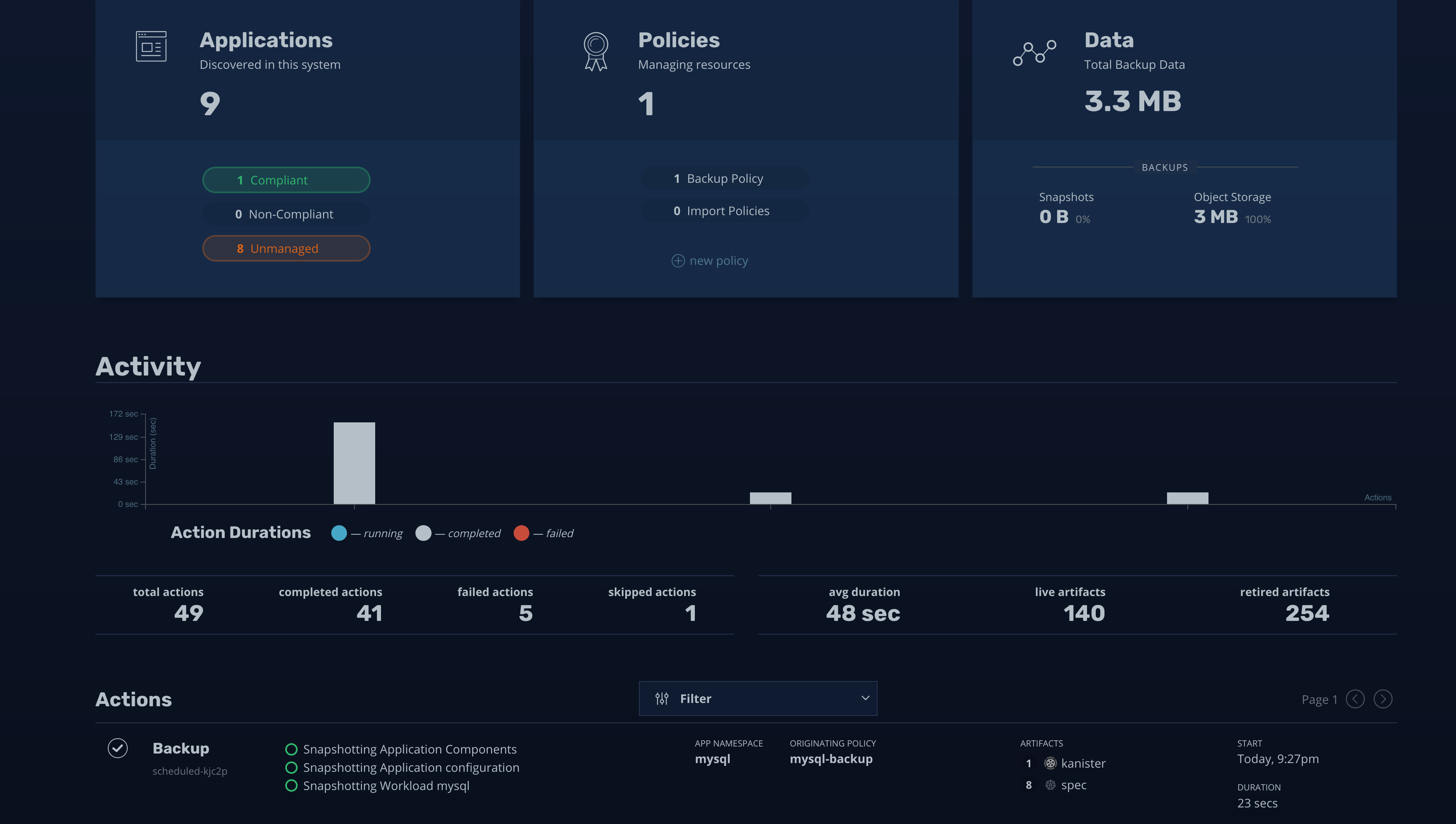

In the above images you can see that I have created a backup policy and run it. There are various parameters you can tweak while creating policies, and the K10 documentation has a good walkthrough of how that’s done, so I am not going to show it here.

The above image shows the backup stored in the Minio bucket that I configured via the ‘Location profile’.

Deleting the Workload Namespace and Restoring

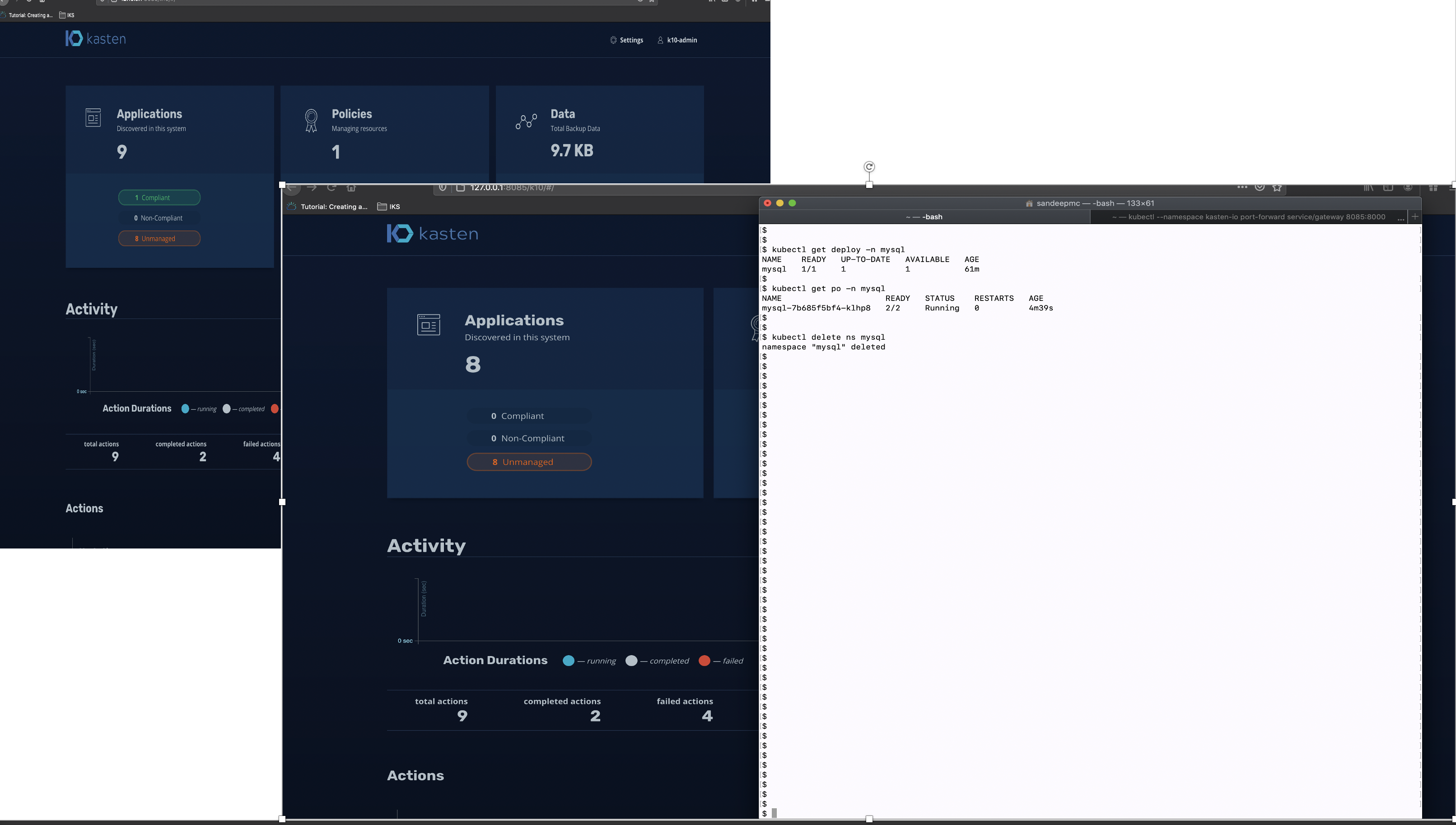

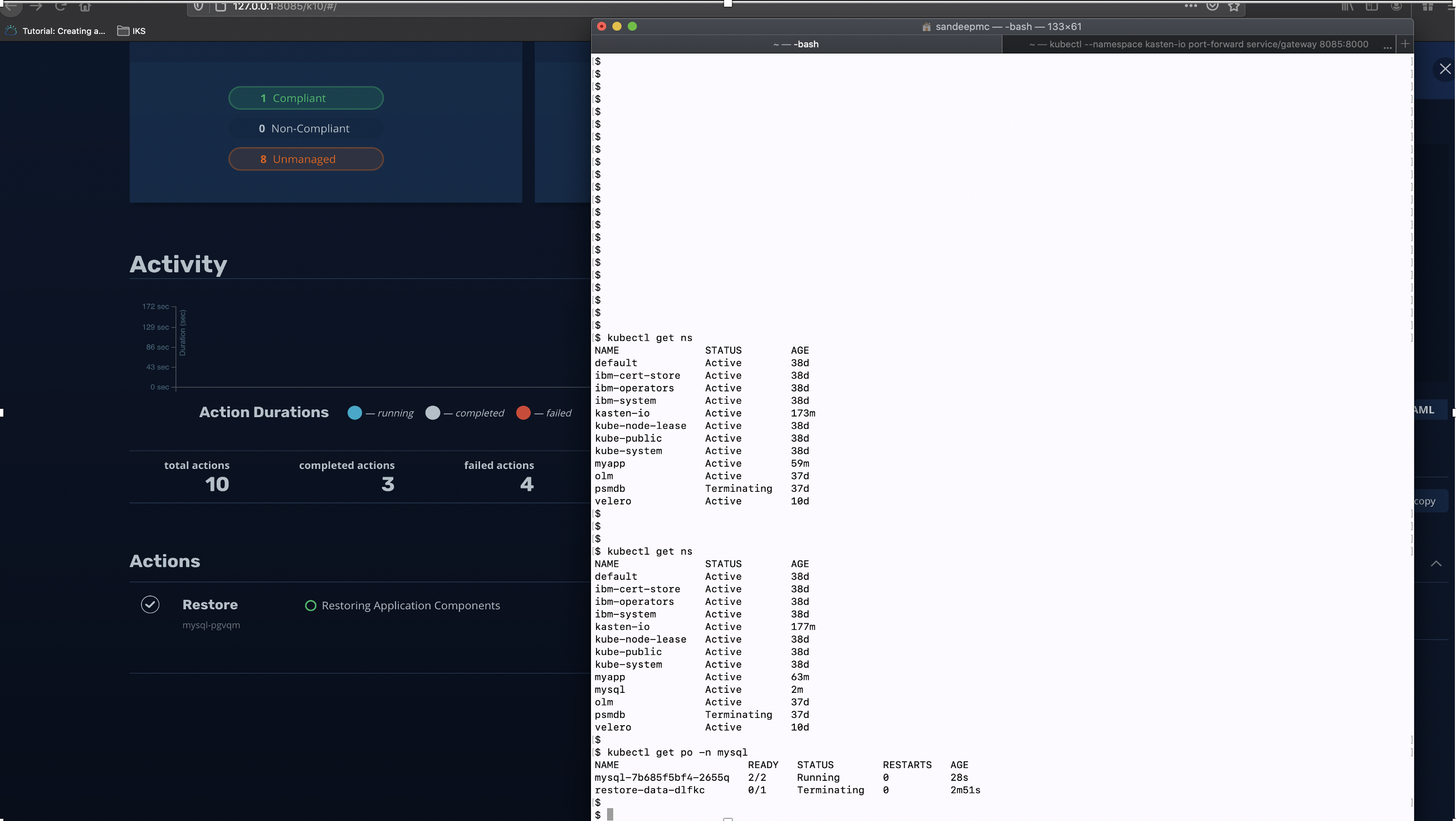

In the image below there are two screens superimposed. The one on the left shows 9 in the Applications card, with one Compliant, which is the MySQL application in the namespace mysql. On the right, the terminal shows that the namespace ‘mysql’ has been deleted, and the number of Applications in the card goes down to 8.

If you remember, I chose to select the namespace as a whole through its label, so if there were other pods within that namespace they would all be protected. In this example I don’t have another pod, but I will try to add one later and come back to edit the blog. For the moment it’s just one workload pod for MySQL.

Restoring MySQL from Minio

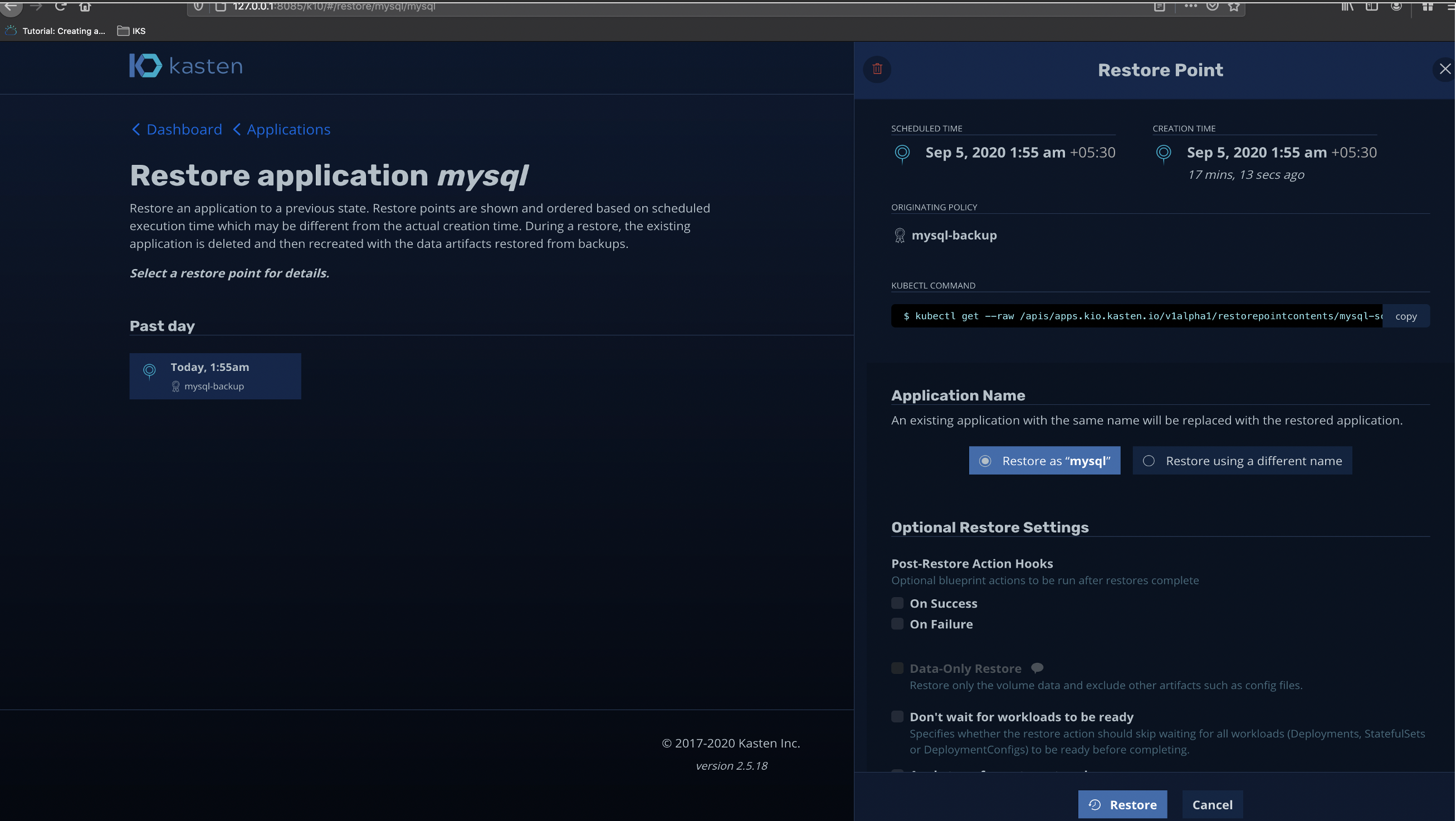

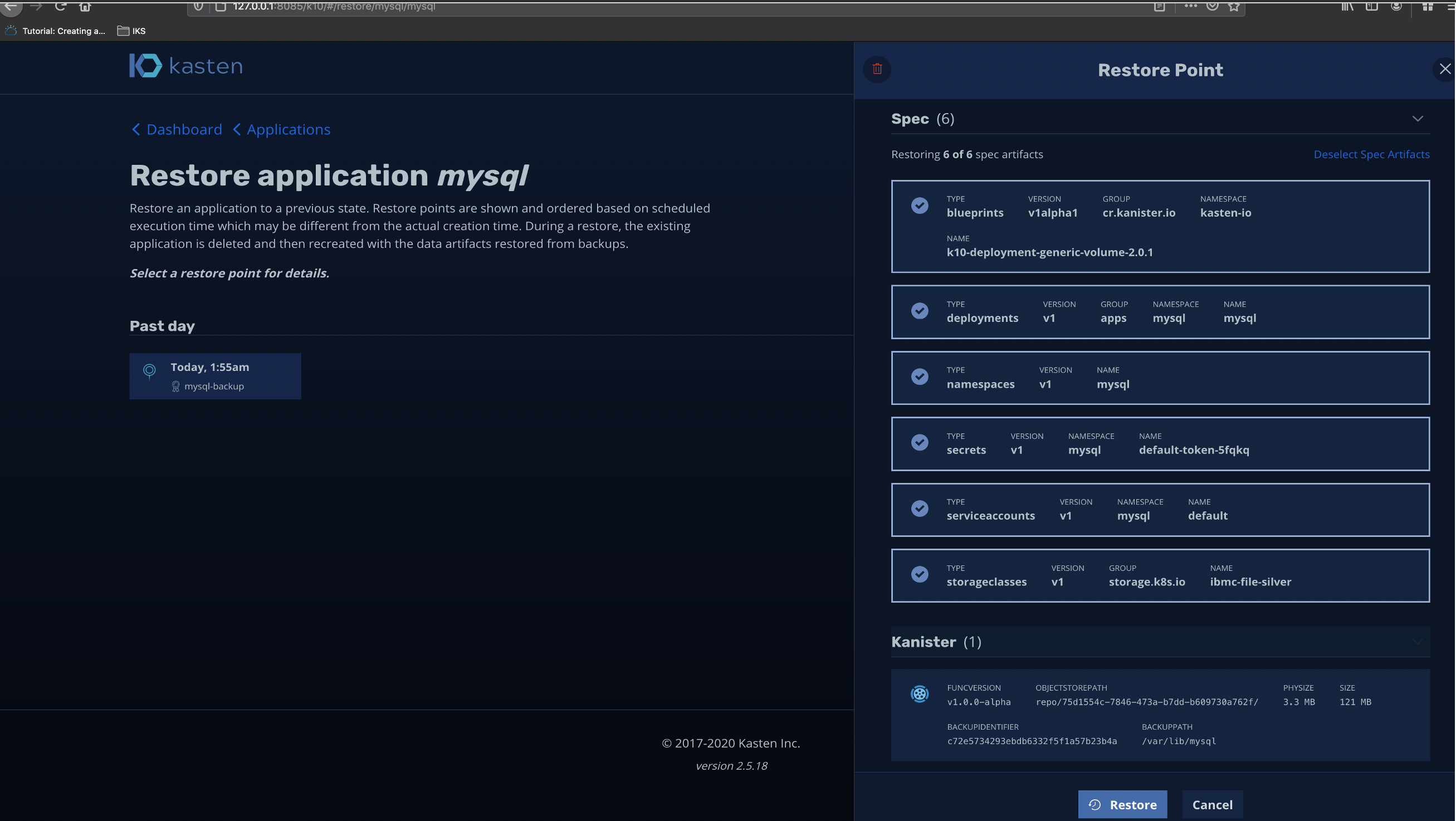

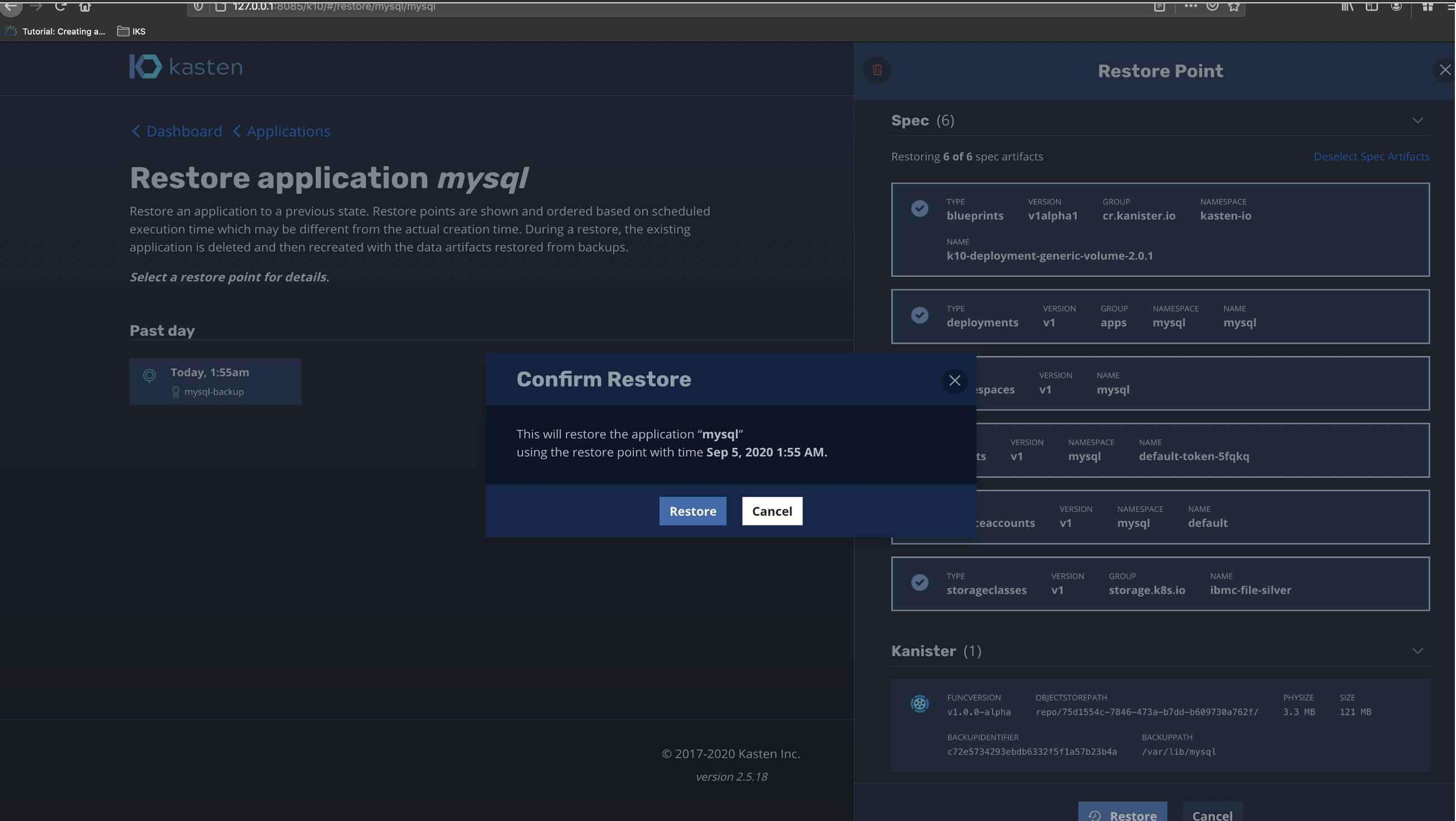

The images below show the stepwise restoration from the restore point. In this case I have taken just one backup, so I have one restore point.

Look closely at the spec sections to find all the resources that are going to be restored. It lists blueprints (Kanister), deployments, namespaces, secrets, serviceaccounts and storageclass. Also, in the last image, look closely at the Kanister section: it shows the exact backup path for MySQL, which is the same volume mount path I used when I created the MySQL deployment in the first place.

Now we can see the MySQL pod getting deployed. Note that there is a restore data pod, which is created during the restoration and torn down once the restoration is complete.

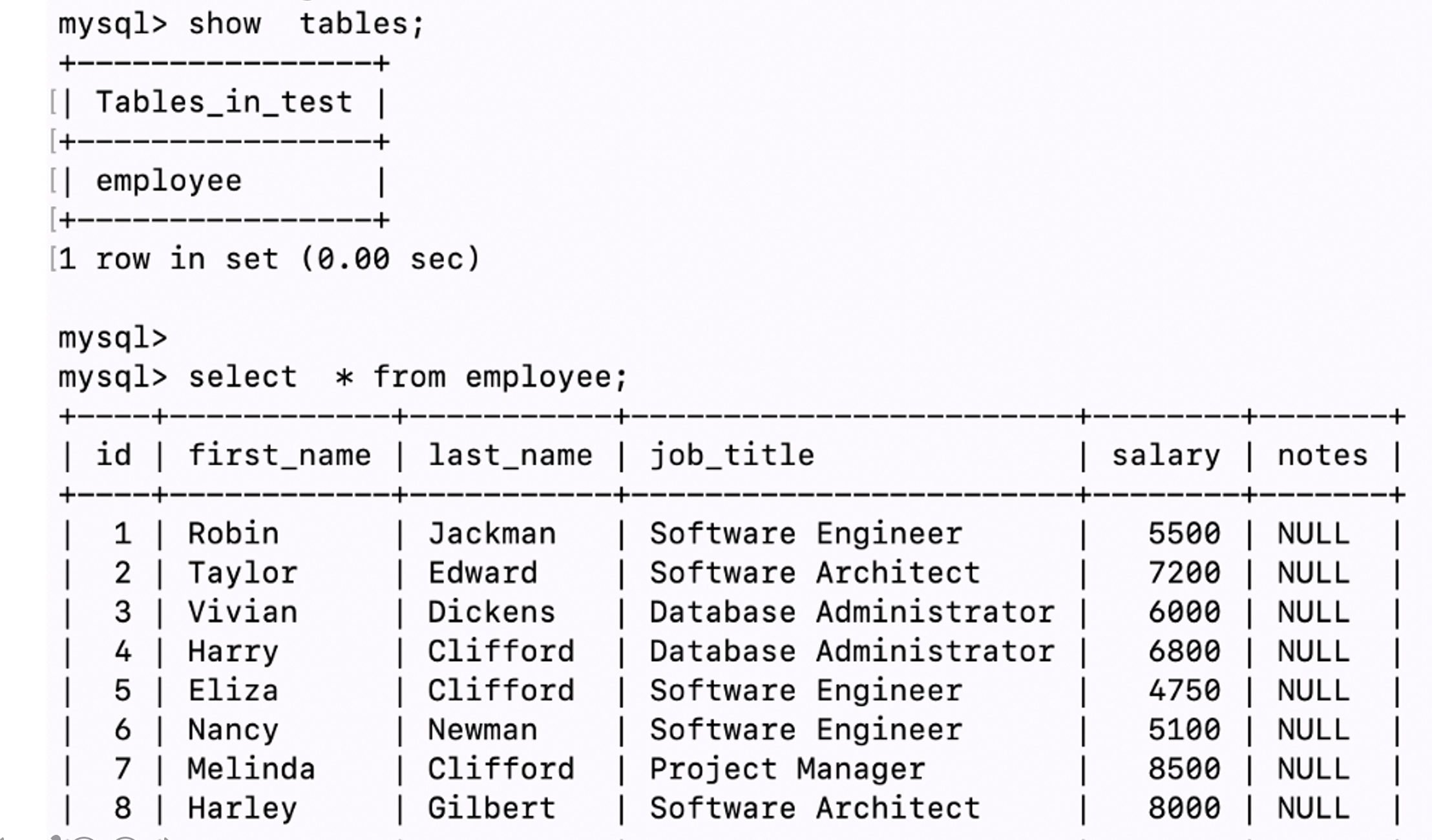

Now it’s time to verify that the pod that has come up has the persistent data restored from the backup. Let’s verify by checking the MySQL databases and running some MySQL queries.

We exec into the pod and run MySQL queries to find that the database and the tables are intact and have been restored from the backup.
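If you want to script this check instead of typing it by hand, the Python sketch below only builds and prints a `kubectl exec` command; it does not run anything. Several things here are assumptions on my part: the pod name is a placeholder, the container is assumed to be called `mysql`, and the root password is assumed to be available inside the container as `MYSQL_ROOT_PASSWORD` (as it is with the official MySQL image). Because the pod now has two containers, the `-c` flag picks the MySQL one explicitly.

```python
import shlex
from typing import List


def mysql_query_command(namespace: str, pod: str, container: str, query: str) -> List[str]:
    """Build a kubectl exec command that runs one SQL statement inside the pod."""
    # The inner shell runs in the container, so $MYSQL_ROOT_PASSWORD is expanded there,
    # not on the machine where kubectl is invoked.
    inner = 'mysql -uroot -p"$MYSQL_ROOT_PASSWORD" -e ' + shlex.quote(query)
    return ["kubectl", "exec", "-n", namespace, pod, "-c", container,
            "--", "sh", "-c", inner]


# "mysql-pod-name" is a placeholder; substitute the real pod name from your cluster.
cmd = mysql_query_command("mysql", "mysql-pod-name", "mysql", "SHOW DATABASES;")
print(shlex.join(cmd))
```

Pasting the printed line into a terminal with access to the cluster should list the databases, including the dummy one created before the backup.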

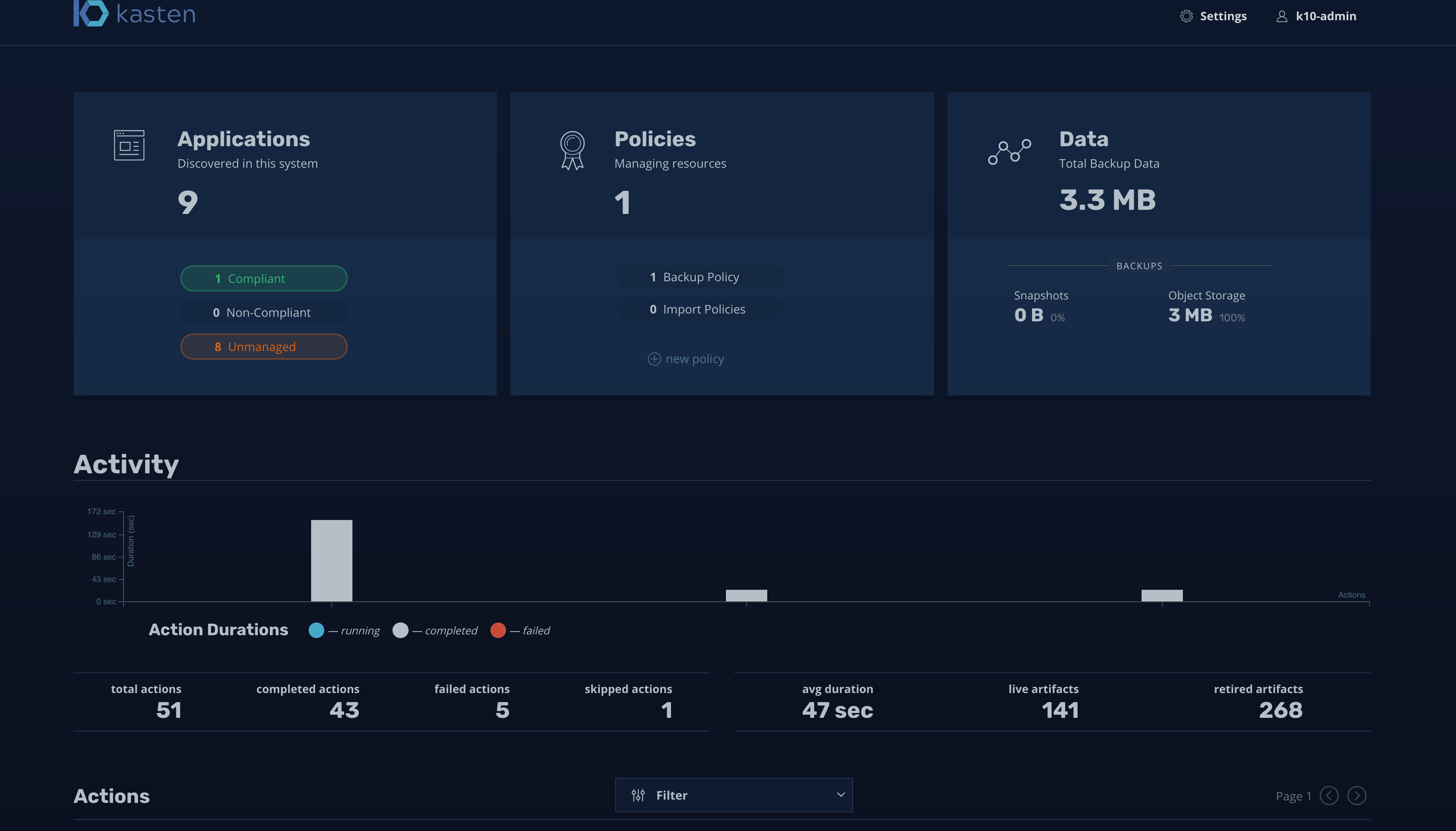

The above images show that MySQL is listed in the Applications and is compliant, which means it’s protected by K10. We can now go ahead and specify better backup policies, retention times and other important parameters specific to the solution we need.

Finally, I would like to point out that Kasten is a great solution for backup and restore of applications or workloads running in Kubernetes, but there are caveats, issues and limitations. My key point in this article was to showcase Kubernetes application or namespace backup when the underlying storage provider doesn’t support solutions like Kasten. In this solution we used Kanister’s capability to inject a sidecar into the workload and Kasten’s capability to create policies and restore a stateful MySQL application.

Conclusion

I have been researching and building out test beds for use cases of backup and restore of workloads running on Kubernetes. I have been focusing mostly on application-specific use cases, unlike the traditional backup/restore of volumes only. Application-aware backup and restore gives better insight into the needs and complexity of the application hosted within Kubernetes.

My focus and work in this space is on Velero and Kasten, and I am looking forward to building more architectures and specific use cases and sharing what I have learnt with the community. If you want to see what I do and chat with me, find me tweeting about Kubernetes, OpenSource and Linux @sandeepkallazhi; DMs are open.

Until the next blog, see you and stay safe!

Cheers!
