orbslam3编译加ros运行

1.准备环境c++11,opencv3以上Pangolin,eigen3.1以上和python2.7,这些一般跑slam系统都会安装的常见包就不说了,dbow2和g2o是git clone下来的三方包自带的。说一下可能会出的问题是编译三方包的g2o时可能回报引用找不到<Eigen/Core>等找不到eigen相关文件的错,这里原因是ubuntu16.04安装eigen时一般是装在/us

1.准备环境

c++11,

opencv3以上

Pangolin,

eigen3.1以上

和python2.7,这些一般跑slam系统都会安装的常见包就不说了,

dbow2和g2o是git clone下来的三方包自带的。

说一下可能会出的问题是编译三方包的g2o时可能回报引用找不到<Eigen/Core>等找不到eigen相关文件的错,这里原因是ubuntu16.04安装eigen时一般是装在/usr/include/eigen3/Eigen中,所以一般我们使用eigen3文件引用都是写的<eigen3/Eigen/Core>,三方包g20默认eigen安装在/usr/include/Eigen中,所以它写的是<Eigen/Core>。

所以出现类似报错可以做的,一个是在/usr/include/Eigen建立与/usr/include/eigen3/Eigen的软链接,但是我不推荐,

还有一个就是直接在三方包的g2o所有引用<Eigen/XXX>文件的地方换成<eigen3/Eigen/XXX>,使用全部替换的时候要注意其中一个se3mat.h文件用的就是<eigen3/Eigen/XXX>,(这个操作有点神奇)。

之后就是git clone https://github.com/UZ-SLAMLab/ORB_SLAM3.git,

然后cd ORB_SLAM3目录下,chmod +x build.sh改sh文件权限

然后直接./build.sh执行编译,ok,这样可以执行非ros环境下的数据集读取

2.ros环境下的vislam模块使用

首先根据配置文件EuRoc.yaml和TUM_512.yaml仿写自己的相机和imu的配置文件,编写完后比如放到ORB_SLAM3目录下的test.yaml, 主要注意相机模型只有两种PinHole和KannalaBrandt8(opencv鱼眼模型)可选,然后和orbslam2_ros一样,新开终端

sudo gedit ~/.bashrc

然后在里面加入ORBSLAM3的ros环境export ROS_PACKAGE_PATH=${ROS_PACKAGE_PATH}:/home/XXX/ORB_SLAM3/Examples/ROS

XXX是对应自己下载的orbslam3目录

保存后source ~/.bashrc

echo $ROS_PACKAGE_PATH

检查是否导入环境成功。

成功后。打开ORBSLAM3/Examples/ROS下的CMakelists.txt文件。他这里直接copy的orbslam2的ros程序,没有写完,得改改。

首先在其中find_package部分加上ros相关模块。

find_package(catkin REQUIRED COMPONENTS

roscpp

std_msgs

geometry_msgs

nav_msgs

tf

cv_bridge

)

然后把include_directories换成以下这个

include_directories(

${PROJECT_SOURCE_DIR}

${PROJECT_SOURCE_DIR}/../../../

${PROJECT_SOURCE_DIR}/../../../include/CameraModels/

${PROJECT_SOURCE_DIR}/../../../include

${Pangolin_INCLUDE_DIRS}

${EIGEN3_INCLUDE_DIR}

${catkin_INCLUDE_DIRS}

)

然后把下面它自带的rosbuild_add_executable和连接的全部注释编写我们自己ros节点程序。我这里程序叫ros_vi.cc

# Node for Mono imu camera

rosbuild_add_executable(MonoVi src/ros_vi.cc)

target_link_libraries(MonoVi ${LIBS})

# # Node for monocular camera

# rosbuild_add_executable(Mono

# src/ros_mono.cc

# )

# target_link_libraries(Mono

# ${LIBS}

# )

# Node for monocular camera (Augmented Reality Demo)

# rosbuild_add_executable(MonoAR

# src/AR/ros_mono_ar.cc

# src/AR/ViewerAR.h

# src/AR/ViewerAR.cc

# )

# target_link_libraries(MonoAR

# ${LIBS}

# )

# # Node for stereo camera

# rosbuild_add_executable(Stereo

# src/ros_stereo.cc

# )

# target_link_libraries(Stereo

# ${LIBS}

# )

# # Node for RGB-D camera

# rosbuild_add_executable(RGBD

# src/ros_rgbd.cc

# )

# target_link_libraries(RGBD

# ${LIBS}

# )

之后在ORB_SLAM3/Examples/ROS/ORB_SLAM3/src/编写我们自己的节点程序,我这就是ros_vi.cc;

程序可以直接照搬我这个

#include<iostream>

#include<algorithm>

#include<fstream>

#include<chrono>

#include<ros/ros.h>

#include <cv_bridge/cv_bridge.h>

#include <sensor_msgs/Image.h>

#include <sensor_msgs/Imu.h>

#include <queue>

#include <mutex>

#include <condition_variable>

#include<opencv2/core/core.hpp>

#include"../../../include/System.h"

#include "../../../include/ImuTypes.h"

using namespace std;

std::mutex m_buf;

std::mutex pic_buf;

std::condition_variable con;

queue<ORB_SLAM3::IMU::Point> imu_buf;

queue<pair<cv::Mat,double>> images_buf;

class RosdataProcess

{

public:

RosdataProcess(ORB_SLAM3::System* pSLAM):mpSLAM(pSLAM){

std::cout<<"start slam!!!!!!!!"<<std::endl;

}

void GrabImage(const sensor_msgs::ImageConstPtr& msg);

void GrabImu(const sensor_msgs::Imu::ConstPtr& imu_msg);

std::vector<std::pair<std::pair<cv::Mat,double>,std::vector<ORB_SLAM3::IMU::Point>>> gettotalMeasurements();

void processData();

ORB_SLAM3::System* mpSLAM;

double last_imu_t = 0;

double last_img_t = 0;

};

int main(int argc, char **argv)

{

ros::init(argc, argv, "Mono");

ros::start();

if(argc != 3)

{

cerr << endl << "Usage: rosrun ORB_SLAM3 Mono path_to_vocabulary path_to_settings" << endl;

ros::shutdown();

return 1;

}

// Create SLAM system. It initializes all system threads and gets ready to process frames.

ORB_SLAM3::System SLAM(argv[1],argv[2],ORB_SLAM3::System::IMU_MONOCULAR,true);

RosdataProcess igb(&SLAM);

ros::NodeHandle nodeHandler;

ros::Subscriber sub = nodeHandler.subscribe("/camera/fisheye1/image_raw", 2000, &RosdataProcess::GrabImage,&igb);

ros::Subscriber subimu = nodeHandler.subscribe("/camera/imu", 2000, &RosdataProcess::GrabImu,&igb);

std::thread tracking_thread = std::thread(std::bind(&RosdataProcess::processData,igb));

ros::spin();

// Stop all threads

SLAM.Shutdown();

// Save camera trajectory

SLAM.SaveKeyFrameTrajectoryTUM("KeyFrameTrajectory.txt");

ros::shutdown();

return 0;

}

void RosdataProcess::GrabImage(const sensor_msgs::ImageConstPtr& msg)

{

// Copy the ros image message to cv::Mat.

//std::cout<<"get image"<<std::endl;

cv_bridge::CvImageConstPtr cv_ptr;

try

{

cv_ptr = cv_bridge::toCvShare(msg);

}

catch (cv_bridge::Exception& e)

{

ROS_ERROR("cv_bridge exception: %s", e.what());

return;

}

if (msg->header.stamp.toSec() <= last_img_t)

{

ROS_WARN("image message in disorder!");

return;

}

last_img_t = msg->header.stamp.toSec();

cv_bridge::CvImagePtr ptr = cv_bridge::toCvCopy(msg);

cv::Mat image = ptr->image.clone();

cv::Mat pro_image;

int camera_num = 0;

if(image.channels()==3){

cv::cvtColor(image,pro_image,cv::COLOR_RGB2GRAY);

}

else{

pro_image = image.clone();

}

pic_buf.lock();

images_buf.push(std::make_pair(pro_image,last_img_t));

//std::cout<<"images_buf size:"<<images_buf.size()<<std::endl;

pic_buf.unlock();

con.notify_one();

}

void RosdataProcess::GrabImu(const sensor_msgs::Imu::ConstPtr& imu_msg)

{

//std::cout<<"get imu"<<std::endl;

if (imu_msg->header.stamp.toSec() <= last_imu_t)

{

ROS_WARN("imu message in disorder!");

return;

}

last_imu_t = imu_msg->header.stamp.toSec();

float data[6];

data[0] = (float)imu_msg->linear_acceleration.x;

data[1] = (float)imu_msg->linear_acceleration.y;

data[2] = (float)imu_msg->linear_acceleration.z;

data[3] = (float)imu_msg->angular_velocity.x;

data[4] = (float)imu_msg->angular_velocity.y;

data[5] = (float)imu_msg->angular_velocity.z;

m_buf.lock();

imu_buf.push(ORB_SLAM3::IMU::Point(data[0],data[1],data[2],data[3],data[4],data[5],last_imu_t));

//std::cout<<"imu buf size:"<<imu_buf.size()<<std::endl;

m_buf.unlock();

con.notify_one();

last_imu_t = imu_msg->header.stamp.toSec();

}

std::vector<std::pair<std::pair<cv::Mat,double>,std::vector<ORB_SLAM3::IMU::Point>>>

RosdataProcess::gettotalMeasurements(){

std::vector<std::pair<std::pair<cv::Mat,double>,std::vector<ORB_SLAM3::IMU::Point>>> measurements;

//std::cout<<"同步处理"<<std::endl;

while (true)

{

if (imu_buf.empty() || images_buf.empty()){

return measurements;

}

if (!(imu_buf.back().t > images_buf.front().second))

{

std::cout<<"wait for imu, only should happen at the beginning"<<std::endl;

return measurements;

}

if (!(imu_buf.front().t < images_buf.front().second))

{

std::cout<<"throw image"<<std::endl;

images_buf.pop();

continue;

}

std::pair<cv::Mat,double> imagedata = images_buf.front();

images_buf.pop();

std::vector<ORB_SLAM3::IMU::Point> IMUs;

while (imu_buf.front().t < imagedata.second)

{

IMUs.emplace_back(imu_buf.front());

imu_buf.pop();

}

IMUs.emplace_back(imu_buf.front());

if (IMUs.empty())

ROS_WARN("no imu between two image");

std::pair<std::pair<cv::Mat,double>,std::vector<ORB_SLAM3::IMU::Point>> data;

data.first = imagedata;

data.second = IMUs;

measurements.emplace_back(data);

}

return measurements;

}

void RosdataProcess::processData(){

while (true)

{

std::vector<std::pair<std::pair<cv::Mat,double>,std::vector<ORB_SLAM3::IMU::Point>>> datas;

std::unique_lock<std::mutex> lk(m_buf);

//得到每张图片对应的多个imu和轮式里程计数据

con.wait(lk, [&]

{

return (datas = gettotalMeasurements()).size() != 0;

});

lk.unlock(); // 从缓存队列中读取数据完成,解锁

for(auto &data:datas){

double imagetime = data.first.second;

ORB_SLAM3::IMU::Point last_imu = data.second[data.second.size()-2];

ORB_SLAM3::IMU::Point fin_imu = data.second[data.second.size()-1];

float imudata[6];

//这里是改成和vins一样每帧图像数据对应的多个imu数据加多一个插值到图像时间上的imu数据

double dt_1 = imagetime - last_imu.t;

double dt_2 = fin_imu.t - imagetime;

double w1 = dt_2 / (dt_1 + dt_2);

double w2 = dt_1 / (dt_1 + dt_2);

imudata[0] = (float)w1 * last_imu.a.x + (float)w2 * fin_imu.a.x;

imudata[1] = (float)w1 * last_imu.a.y + (float)w2 * fin_imu.a.y;

imudata[2] = (float)w1 * last_imu.a.z + (float)w2 * fin_imu.a.z;

imudata[3] = (float)w1 * last_imu.w.x + (float)w2 * fin_imu.w.x;

imudata[4] = (float)w1 * last_imu.w.y + (float)w2 * fin_imu.w.y;

imudata[5] = (float)w1 * last_imu.w.z + (float)w2 * fin_imu.w.z;

data.second[data.second.size()-1] = ORB_SLAM3::IMU::Point(imudata[0],imudata[1],imudata[2],

imudata[3],imudata[4],imudata[5],imagetime);

mpSLAM->TrackMonocular(data.first.first,data.first.second,data.second); // TODO change to monocular_inertial

}

}

}

这里直接把vins的imu与相机同步程序拿来用了,大家使用时记得把程序这里的相机话题和imu话题换成自己的,写好程序之后回到ORB_SLAM3目录下,chmod +x build_ros.sh,然后执行这个脚本文件就能编译成功,使用方式是调用/home/XXX/ORB_SLAM3/Examples/ROS/ORB_SLAM3/目录下生成的之前在cmake中写的可执行文件,我这是/home/XXX/ORB_SLAM3/Examples/ROS/ORB_SLAM3/MonoVi文件。使用方式是

./执行文件 词袋库文件 配置文件

./home/XXX/ORB_SLAM3/Examples/ROS/ORB_SLAM3/MonoVi /home/XXX/ORB_SLAM3/Vocabulary/ORBvoc.txt /home/XXX/ORB_SLAM3/test.yaml

等程序加载完词袋库就可以播放数据集。

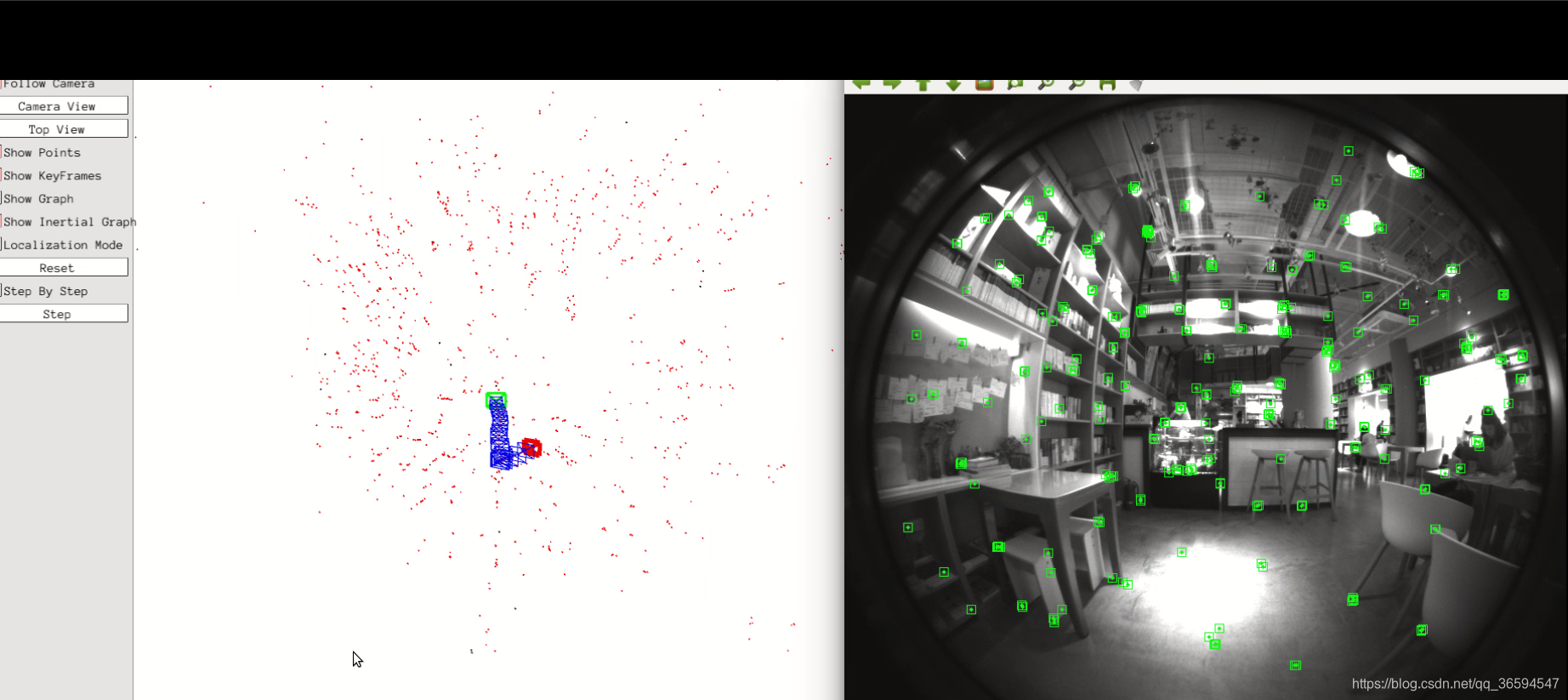

成果

更多推荐

已为社区贡献1条内容

已为社区贡献1条内容

所有评论(0)