Apache Airflow has become a prominent open-source tool for building pipelines and automating tasks in data engineering with languages such as Python, from ETL processes to data mining.

It is also highly customizable, works with different database managers, and integrates with many cloud technologies, databases, virtual machines, and more.

With the current shift toward managed database platforms in the cloud, driven by agility and paying only for what you use, Amazon Relational Database Service (Amazon RDS) has become a simple option.

The main reason is how easy it is to configure, operate, and keep stable a relational (SQL) database in the cloud at small, medium, and large scales.

As a result, many applications can rely on it for the fast performance, high availability, security, and compatibility they need from a database.

In this article, we walk through a simple way to automate an SQL query against an existing PostgreSQL database on Amazon RDS.

Steps:

1. Creating the PostgreSQL Database on AWS RDS

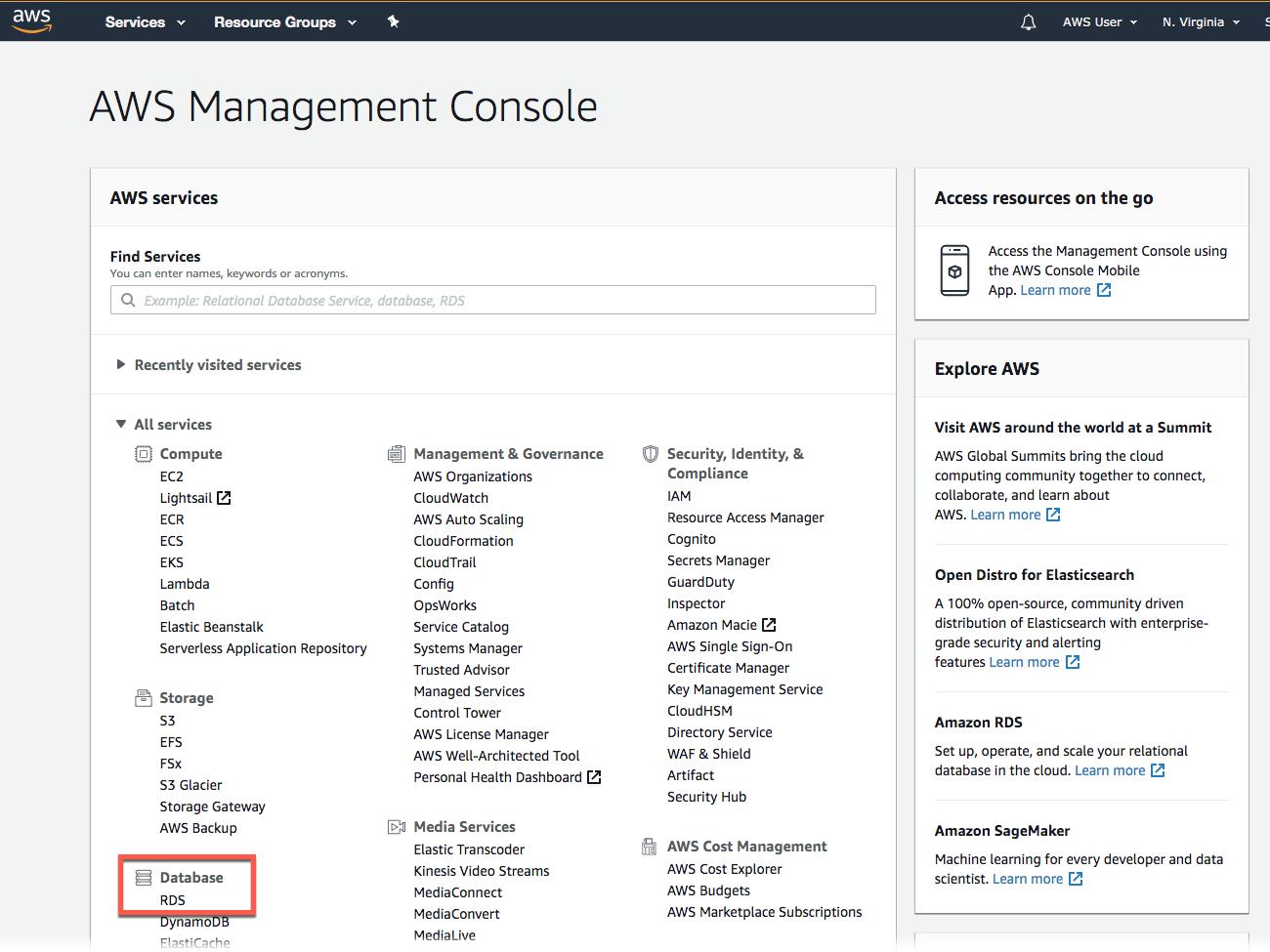

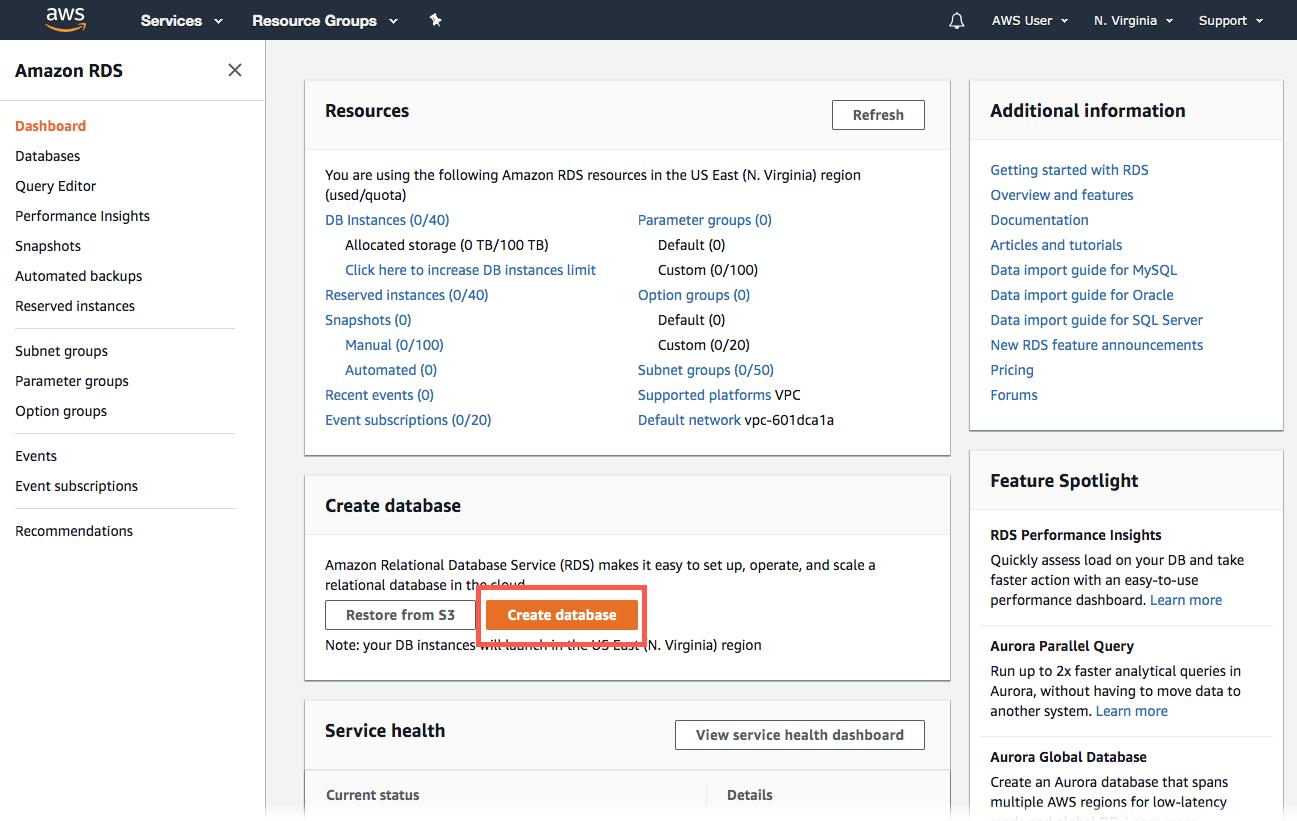

First, sign in to the AWS Management Console and open the Amazon RDS console at https://console.aws.amazon.com/rds/.

In the navigation pane, choose Databases, and then choose Create database.

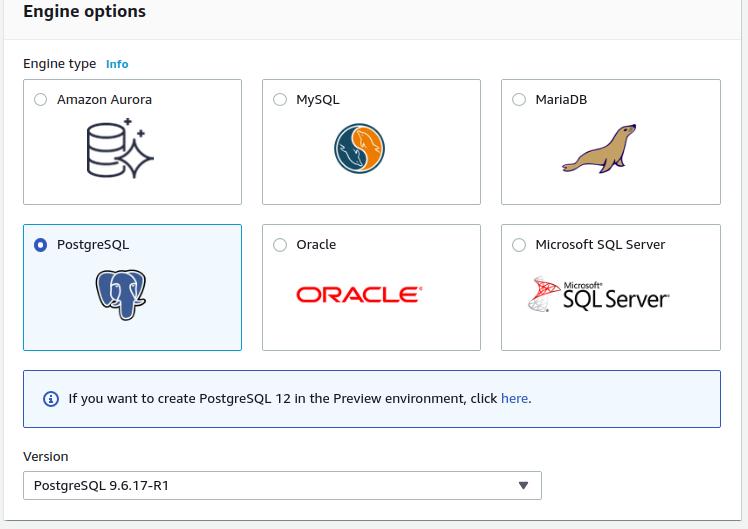

Under Choose a database creation method, select Standard Create, and choose PostgreSQL as the database engine.

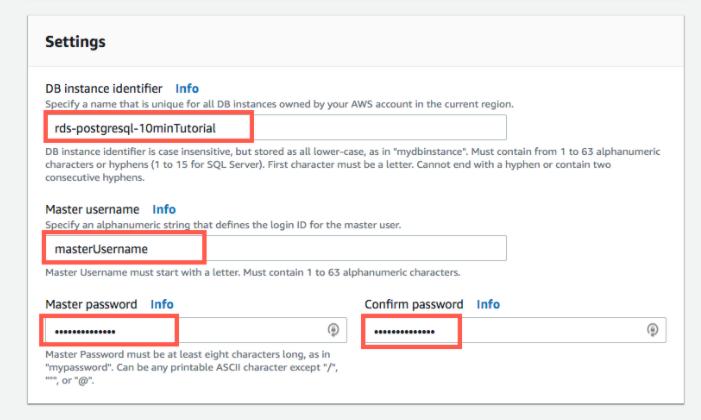

Then, we fill in the database configuration and credentials.

Finally, we create the database instance. Once this process is done, we open the new instance and copy its endpoint and port for future connections.

Python must also be installed. If it is not, download it from the official site.
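Before moving on, it can help to confirm that the copied endpoint and port are reachable from the machine that will run Airflow, since a restrictive security group is a common cause of connection errors later. The following is a minimal sketch using only Python's standard library; the function name `is_reachable` is an illustrative assumption, not part of the original tutorial.

```python
import socket


def is_reachable(host, port, timeout=5.0):
    """Return True if a TCP connection to host:port opens within `timeout` seconds."""
    try:
        # create_connection resolves the host name and performs the TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers DNS failures, refused connections, and timeouts.
        return False


# Example (placeholders: use the endpoint and port copied from the RDS console):
# is_reachable("<endpoint>", 5432)
```

A `False` result only says that the TCP connection could not be opened; it does not distinguish between a wrong endpoint, a closed port, and a network rule blocking the traffic.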

2. Installation of Apache Airflow

When all tools are installed, run the following commands in a terminal:

export AIRFLOW_HOME=~/airflow

pip install apache-airflow

3. Connecting Apache Airflow and AWS RDS

Now, we will connect Apache Airflow to the database we created earlier. To do this, go to the Airflow home folder (the path set in AIRFLOW_HOME), open the file called airflow.cfg, and look for the following setting: sql_alchemy_conn.

Its value will look something like:

sqlite:////home/user/airflow/unittests.db

We are going to change this value to:

postgresql+psycopg2://<username>:<password>@<endpoint>:<port>/<dbname>

For example:

postgresql+psycopg2://postgres:postgres@postgres-db.xxxxxxxxx-east-5.rds.amazonaws.com:5432/rds-postgresql-10minutestutorial
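If the username or password contains characters such as `@`, `:` or `/`, they need to be percent-encoded before they go into the connection string. A minimal sketch of assembling the value with Python's standard library follows; the helper name `build_sqlalchemy_uri` and the sample credentials are hypothetical.

```python
from urllib.parse import quote_plus


def build_sqlalchemy_uri(username, password, endpoint, port, dbname):
    """Assemble a postgresql+psycopg2 URI, percent-encoding the credentials."""
    return "postgresql+psycopg2://{user}:{pwd}@{host}:{port}/{db}".format(
        user=quote_plus(username),
        pwd=quote_plus(password),
        host=endpoint,
        port=port,
        db=dbname,
    )


# Hypothetical values for illustration only.
uri = build_sqlalchemy_uri("postgres", "p@ss/word", "<endpoint>", 5432, "mydb")
print(uri)  # postgresql+psycopg2://postgres:p%40ss%2Fword@<endpoint>:5432/mydb
```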

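Finally, we initialize the Airflow metadata database in the new PostgreSQL instance by typing the following command (this is the Airflow 1.10 syntax; Airflow 2.x uses airflow db init instead):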

airflow initdb

4. Creating the Python Pipeline

To connect to the database we created, we write a class that contains the main methods for working with the database through queries.
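The original class is not reproduced here, so the following is only a minimal sketch of what such a wrapper could look like, assuming the psycopg2 driver. The class name `Database`, its methods, and the `from_rds` helper are illustrative assumptions. The wrapper accepts any DB-API 2.0 connection, which makes it easy to try locally before pointing it at RDS.

```python
from contextlib import closing


class Database:
    """Thin wrapper around a DB-API 2.0 connection (for example, one from psycopg2)."""

    def __init__(self, connection):
        self.connection = connection

    @classmethod
    def from_rds(cls, host, port, dbname, user, password):
        """Open a psycopg2 connection to the RDS endpoint (needs psycopg2 installed)."""
        import psycopg2  # imported lazily so the class has no hard dependency on it

        return cls(psycopg2.connect(host=host, port=port, dbname=dbname,
                                    user=user, password=password))

    def execute(self, query, params=()):
        """Run a statement that returns no rows; commit on success, roll back on error."""
        try:
            with closing(self.connection.cursor()) as cursor:
                cursor.execute(query, params)
            self.connection.commit()
        except Exception:
            self.connection.rollback()
            raise

    def fetch_all(self, query, params=()):
        """Run a query and return all rows as a list of tuples."""
        with closing(self.connection.cursor()) as cursor:
            cursor.execute(query, params)
            return cursor.fetchall()

    def close(self):
        """Close the underlying connection."""
        self.connection.close()


# Example with placeholder values (replace with your own endpoint and credentials):
# db = Database.from_rds("<endpoint>", 5432, "<dbname>", "<username>", "<password>")
# print(db.fetch_all("SELECT version()"))
```

Passing values through `params` instead of formatting them into the SQL string lets the driver handle quoting.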

Then, we create the table that the Apache Airflow task will use, along with the method that inserts data into it.
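As a sketch of this step (the original code is not shown), the two functions below create a table and insert a row through a DB-API 2.0 connection such as the one psycopg2 returns. The table name `airflow_demo`, its columns, and the function names are hypothetical. The `placeholder` argument is an assumption added so the same code can also be checked against SQLite, which uses `?`, while psycopg2 uses `%s`.

```python
from contextlib import closing

# Hypothetical table used only for illustration.
CREATE_TABLE_SQL = """
CREATE TABLE IF NOT EXISTS airflow_demo (
    label  TEXT    NOT NULL,
    amount INTEGER NOT NULL
)
"""


def create_table(connection):
    """Create the demo table if it does not exist yet."""
    with closing(connection.cursor()) as cursor:
        cursor.execute(CREATE_TABLE_SQL)
    connection.commit()


def insert_row(connection, label, amount, placeholder="%s"):
    """Insert one row with a parameterized query; only the placeholder token is formatted in."""
    sql = "INSERT INTO airflow_demo (label, amount) VALUES ({0}, {0})".format(placeholder)
    with closing(connection.cursor()) as cursor:
        cursor.execute(sql, (label, amount))
    connection.commit()
```

Because of `IF NOT EXISTS`, calling `create_table` on every run is harmless, which suits a task that Airflow may execute repeatedly.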

5. Creating the DAG to use the Pipeline

In the folder where we installed Airflow, we create a directory called ‘dags’, and inside it we create a Python file.

That file contains all the necessary dependencies and the DAG declaration:
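The original file is not shown, so the following is a minimal sketch of such a DAG file, written against the Airflow 1.10/2.x API. The DAG id, task id, schedule, start date, sample query, and the `RDS_*` environment variable names are all assumptions made for illustration.

```python
import os
from datetime import datetime, timedelta

# Hypothetical defaults applied to every task in the DAG.
DEFAULT_ARGS = {
    "owner": "airflow",
    "depends_on_past": False,
    "start_date": datetime(2021, 1, 1),
    "retries": 1,
    "retry_delay": timedelta(minutes=5),
}


def run_query():
    """Task callable: connect to the RDS instance and run one SQL statement."""
    import psycopg2  # imported inside the task so parsing the DAG file stays light

    # Connection settings come from environment variables (the names are assumptions).
    connection = psycopg2.connect(
        host=os.environ["RDS_HOST"],
        port=os.environ.get("RDS_PORT", "5432"),
        dbname=os.environ["RDS_DBNAME"],
        user=os.environ["RDS_USER"],
        password=os.environ["RDS_PASSWORD"],
    )
    try:
        with connection.cursor() as cursor:
            cursor.execute("SELECT now()")  # placeholder query
            print(cursor.fetchone())
        connection.commit()
    finally:
        connection.close()


def build_dag():
    """Declare the DAG with a single PythonOperator task."""
    from airflow import DAG
    try:
        from airflow.operators.python import PythonOperator  # Airflow 2.x
    except ImportError:
        from airflow.operators.python_operator import PythonOperator  # Airflow 1.10

    dag = DAG(
        dag_id="rds_postgres_query",
        default_args=DEFAULT_ARGS,
        schedule_interval="@daily",
        catchup=False,
    )
    PythonOperator(task_id="run_query", python_callable=run_query, dag=dag)
    return dag


try:
    # Airflow registers the DAG objects it finds at module level.
    dag = build_dag()
except ImportError:
    # Airflow is not installed here (for example, when checking the task function alone).
    dag = None
```

Keeping the Airflow imports inside `build_dag` is a design choice of this sketch, not an Airflow requirement; a DAG file that only ever runs under Airflow can import `DAG` and `PythonOperator` at the top as usual.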

Then we run the Python script to create the DAG. Next, we open a terminal and execute the following command: airflow webserver -p 8080

This command serves the Airflow UI on a local server. Then we go to the following link: http://localhost:8080/

There you will find the list of available DAGs. Now, we enable the DAG we added and start the scheduler with the following command:

airflow scheduler

