Deploying Multi-Master Kubernetes v1.16.4 on CentOS 7.7

Environment

| Hostname | IP | Role |
|---|---|---|
| K8S-master01 | 192.168.16.120 | master01 |
| K8S-master02 | 192.168.16.121 | master02 |
| K8S-node01 | 192.168.16.122 | node01 |
| K8S-node02 | 192.168.16.123 | node02 |
| K8S VIP | 192.168.16.130 | |

PS: Do not create a swap partition when installing the OS, so that swap does not have to be disabled manually later.

I. Preparation

Run the following steps on all nodes (k8s-master01/02 and k8s-node01/02).

1. Add hostname resolution

# cat >> /etc/hosts << EOF

> 192.168.16.120 k8s-master01

> 192.168.16.121 k8s-master02

> 192.168.16.122 k8s-node01

> 192.168.16.123 k8s-node02

> EOF
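Running the heredoc above twice appends the same four entries again. As a minimal sketch, assuming you want the step to be safe to repeat, the helper below only appends a name that is not already present. The function names add_host_entry and add_cluster_hosts are hypothetical and not part of the original procedure.

```shell
#!/usr/bin/env bash
# add_host_entry FILE IP NAME (hypothetical helper)
# Appends "IP NAME" to FILE unless NAME already appears as a hostname
# on a non-comment line.
add_host_entry() {
    local file=$1 ip=$2 name=$3
    if ! awk -v n="$name" '
            /^[[:space:]]*#/ { next }                       # skip comment lines
            { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit !found }' "$file" 2>/dev/null; then
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
}

# add_cluster_hosts [FILE] (hypothetical helper)
# Adds the four cluster nodes from the environment table; FILE defaults to /etc/hosts.
add_cluster_hosts() {
    local file=${1:-/etc/hosts}
    add_host_entry "$file" 192.168.16.120 k8s-master01
    add_host_entry "$file" 192.168.16.121 k8s-master02
    add_host_entry "$file" 192.168.16.122 k8s-node01
    add_host_entry "$file" 192.168.16.123 k8s-node02
}
```

Calling add_cluster_hosts as root with no argument writes to /etc/hosts; passing a scratch file first lets you inspect the result.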

2. Set the hostname

# hostnamectl set-hostname xxx

3. Configure time synchronization

# yum -y install chrony

# vim /etc/chrony.conf

server ntp.aliyun.com iburst # the Alibaba Cloud NTP server is used here

# systemctl start chronyd

# systemctl enable chronyd

# chronyc sources -v

210 Number of sources = 1

.-- Source mode '^' = server, '=' = peer, '#' = local clock.

/ .- Source state '*' = current synced, '+' = combined , '-' = not combined,

| / '?' = unreachable, 'x' = time may be in error, '~' = time too variable.

|| .- xxxx [ yyyy ] +/- zzzz

|| Reachability register (octal) -. | xxxx = adjusted offset,

|| Log2(Polling interval) --. | | yyyy = measured offset,

|| \ | | zzzz = estimated error.

|| | | \

MS Name/IP address Stratum Poll Reach LastRx Last sample

===============================================================================

^* 203.107.6.88 2 10 377 26 +2914us[+4672us] +/- 34ms

4. Configure firewall rules

Firewall rules for k8s-master01/02:

firewall-cmd --permanent --add-port=6443/tcp

firewall-cmd --permanent --add-port=8443/tcp # HAProxy listening port (a custom choice)

firewall-cmd --permanent --add-port=2379/tcp

firewall-cmd --permanent --add-port=2380/tcp

firewall-cmd --permanent --add-port=10250-10252/tcp

firewall-cmd --permanent --add-port=10255/tcp

firewall-cmd --permanent --add-port=8472/udp

firewall-cmd --permanent --add-port=443/udp

firewall-cmd --permanent --add-port=53/tcp

firewall-cmd --permanent --add-port=53/udp

firewall-cmd --permanent --add-port=9153/tcp

firewall-cmd --add-masquerade --permanent # enable NAT (masquerading)

firewall-cmd --permanent --add-port=30000-32767/tcp

firewall-cmd --reload

firewall-cmd --query-masquerade # check whether NAT forwarding is enabled

Firewall rules for k8s-node01/02:

firewall-cmd --permanent --add-port=10250/tcp

firewall-cmd --permanent --add-port=10255/tcp

firewall-cmd --permanent --add-port=8472/udp

firewall-cmd --permanent --add-port=443/udp

firewall-cmd --permanent --add-port=30000-32767/tcp

firewall-cmd --permanent --add-port=53/tcp

firewall-cmd --permanent --add-port=53/udp

firewall-cmd --permanent --add-port=9153/tcp

firewall-cmd --add-masquerade --permanent

firewall-cmd --reload

firewall-cmd --query-masquerade # check whether NAT forwarding is enabled
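The two rule sets differ only in their port lists, so they can also be generated from one place. The following is a sketch under that assumption; firewall_commands is a hypothetical helper that only prints the firewall-cmd invocations listed above (a dry run) and changes nothing by itself.

```shell
#!/usr/bin/env bash
# firewall_commands master|node (hypothetical helper)
# Prints the firewall-cmd lines for the given role; nothing is executed.
firewall_commands() {
    local role=$1 ports port
    case $role in
        master)
            ports="6443/tcp 8443/tcp 2379/tcp 2380/tcp 10250-10252/tcp 10255/tcp 8472/udp 443/udp 53/tcp 53/udp 9153/tcp 30000-32767/tcp"
            ;;
        node)
            ports="10250/tcp 10255/tcp 8472/udp 443/udp 30000-32767/tcp 53/tcp 53/udp 9153/tcp"
            ;;
        *)
            echo "usage: firewall_commands master|node" >&2
            return 1
            ;;
    esac
    # One --add-port call per entry, same as the hand-written lists.
    for port in $ports; do
        echo "firewall-cmd --permanent --add-port=$port"
    done
    echo "firewall-cmd --permanent --add-masquerade"
    echo "firewall-cmd --reload"
}
```

Review the output of "firewall_commands master" or "firewall_commands node" first; piping it to sh as root then applies the rules.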

5. Configure kernel parameters so that bridged IPv4 traffic is passed to the iptables chains

# cat > /etc/sysctl.d/k8s.conf << EOF

> net.bridge.bridge-nf-call-ip6tables = 1

> net.bridge.bridge-nf-call-iptables = 1

> net.ipv4.ip_forward = 1

> EOF

# sysctl --system
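Note that the two net.bridge.* keys only exist once the br_netfilter kernel module is loaded (modprobe br_netfilter), so sysctl --system can report them as unknown on a fresh host. The sketch below is one way to double-check the file that was just written; missing_k8s_sysctls is a hypothetical helper that lists any of the three keys not set to 1 in the given file.

```shell
#!/usr/bin/env bash
# missing_k8s_sysctls FILE (hypothetical helper)
# Prints each required key that FILE does not set to 1; prints nothing if all are set.
missing_k8s_sysctls() {
    local file=$1 key
    for key in net.bridge.bridge-nf-call-ip6tables \
               net.bridge.bridge-nf-call-iptables \
               net.ipv4.ip_forward; do
        # Split on '=', strip blanks, then compare key and value exactly.
        awk -F= -v k="$key" '
            { gsub(/[ \t]/, "", $1); gsub(/[ \t]/, "", $2) }
            $1 == k && $2 == "1" { ok = 1 }
            END { exit !ok }' "$file" 2>/dev/null || echo "$key"
    done
}
```

For example, "missing_k8s_sysctls /etc/sysctl.d/k8s.conf" should print nothing after the step above.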

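II. Configure the package repositories

Run the following steps on all nodes (k8s-master01/02 and k8s-node01/02).

1. Switch the yum repository to the Aliyun mirror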

# cd /etc/yum.repos.d/

# mv CentOS-Base.repo CentOS-Base.repo.bak

# curl -o CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-7.repo

# sed -i 's?gpgcheck=1?gpgcheck=0?g' /etc/yum.repos.d/CentOS-Base.repo

# curl -o epel.repo https://mirrors.aliyun.com/repo/epel-7.repo

2. Configure the Docker repository

# curl -o docker-ce.repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

3. Configure the Kubernetes repository (Aliyun mirror)

# vim kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

4. Refresh the cache

# yum clean all

# yum makecache

# yum repolist

III. Install Docker

Run the following steps on all nodes (k8s-master01/02 and k8s-node01/02).

1. Install

PS: Kubernetes 1.16.4 supports Docker up to version 18.09.

# yum list docker-ce --showduplicates | sort -r # list all available versions

# yum -y install docker-ce-18.09.9 docker-ce-cli-18.09.9

# systemctl start docker

# systemctl enable docker

# docker version

Client: Docker Engine - Community

Version: 19.03.5

API version: 1.40

Go version: go1.12.12

Git commit: 633a0ea

Built: Wed Nov 13 07:25:41 2019

OS/Arch: linux/amd64

Experimental: false

Server: Docker Engine - Community

Engine:

Version: 19.03.5

API version: 1.40 (minimum version 1.12)

Go version: go1.12.12

Git commit: 633a0ea

Built: Wed Nov 13 07:24:18 2019

OS/Arch: linux/amd64

Experimental: false

containerd:

Version: 1.2.10

GitCommit: b34a5c8af56e510852c35414db4c1f4fa6172339

runc:

Version: 1.0.0-rc8+dev

GitCommit: 3e425f80a8c931f88e6d94a8c831b9d5aa481657

docker-init:

Version: 0.18.0

GitCommit: fec3683

# rpm -qi {docker-ce,docker-ce-cli} |grep Version

Version : 18.09.9

Version : 18.09.9

2. Configure a Docker registry mirror

# vim /etc/docker/daemon.json

{

"exec-opts": ["native.cgroupdriver=systemd"],

"log-driver": "json-file",

"log-opts": {

"max-file": "3",

"max-size": "100m"

},

"storage-driver": "overlay2",

"storage-opts": [

"overlay2.override_kernel_check=true"

],

"registry-mirrors": ["https://www.docker-cn.com"]

}

# systemctl daemon-reload

# systemctl restart docker

# docker info |tail -5

127.0.0.0/8

Registry Mirrors:

https://www.docker-cn.com/ # mirror URL

Live Restore Enabled: false

四、Master HA

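Run these steps on K8S-master01/02 only.

1. HAProxy (identical configuration on k8s-master01/02)

PS: HAProxy acts as a reverse proxy for the apiserver here and forwards all requests to the master nodes in round-robin fashion.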

# yum -y install haproxy

# cd /etc/haproxy/

# vim haproxy.cfg

global

chroot /var/lib/haproxy

daemon

group haproxy

user haproxy

log 127.0.0.1:514 local0 warning

pidfile /var/lib/haproxy.pid

maxconn 20000

spread-checks 3

nbproc 8

defaults

log global

mode tcp

retries 3

option redispatch

listen https-apiserver

bind 0.0.0.0:8443 # listening address and port; the IP is set to 0.0.0.0 and port 8443 is used here

mode tcp

balance roundrobin

timeout server 15s

timeout connect 15s

server apiserver1 192.168.16.120:6443 check port 6443 inter 5000 fall 5 # forward to the apiserver on k8s-master01; the default apiserver port is 6443

server apiserver2 192.168.16.121:6443 check port 6443 inter 5000 fall 5 # forward to the apiserver on k8s-master02; the default apiserver port is 6443

# systemctl start haproxy

# systemctl enable haproxy

# netstat -nltup |grep 8443

2. Deploy keepalived on k8s-master01

[root@k8s-master01 ~]# yum -y install keepalived

[root@k8s-master01 ~]# cd /etc/keepalived/

[root@k8s-master01 keepalived]# cp keepalived.conf keepalived.conf.bak

[root@k8s-master01 keepalived]# vim keepalived.conf

! Configuration File for keepalived

global_defs {

router_id k8s-master01

script_user root

enable_script_security

}

vrrp_script chk_status {

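script "/etc/keepalived/chk_status.sh"

interval 2 # run the script every 2 seconds

weight -5 # priority change driven by the script result: a failed check (non-zero exit) lowers the priority by 5

fall 2 # two consecutive failures are required before the check counts as failed and weight is applied (priority range 1-255)

rise 2 # two consecutive successes mark the check as healthy again; the priority is not otherwise modified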

}

vrrp_instance VI_1 {

state MASTER

interface ens33

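mcast_src_ip 192.168.16.120 # source IP for VRRP multicast packets (this node's own IP)

virtual_router_id 50 # VRRP router ID; must be identical on both nodes so that they belong to the same VRRP group

priority 100 # a higher number means a higher priority; this node reclaims the VIP after it recovers

advert_int 2 # interval in seconds between VRRP advertisements; must be identical on both nodes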

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.16.130

}

track_script {

chk_status # health-check script that drives master/backup failover

}

}

# firewall-cmd --direct --permanent --add-rule ipv4 filter INPUT 0 \

> --in-interface ens33 --destination 224.0.0.18 --protocol vrrp -j ACCEPT

# firewall-cmd --direct --permanent --add-rule ipv4 filter OUTPUT 0 \

> --out-interface ens33 --destination 224.0.0.18 --protocol vrrp -j ACCEPT

# firewall-cmd --reload

3. Deploy keepalived on k8s-master02

[root@k8s-master02 ~]# yum -y install keepalived

[root@k8s-master02 ~]# cd /etc/keepalived/

[root@k8s-master02 keepalived]# cp keepalived.conf keepalived.conf.bak

[root@k8s-master02 keepalived]# vim keepalived.conf

! Configuration File for keepalived

global_defs {

router_id k8s-master02

script_user root

enable_script_security

}

vrrp_script chk_status {

script "/etc/keepalived/chk_status.sh"

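interval 2 # run the script every 2 seconds

weight -5 # priority change driven by the script result: a failed check (non-zero exit) lowers the priority by 5

fall 2 # two consecutive failures are required before the check counts as failed and weight is applied (priority range 1-255)

rise 2 # two consecutive successes mark the check as healthy again; the priority is not otherwise modified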

}

vrrp_instance VI_1 {

state BACKUP

interface ens33

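mcast_src_ip 192.168.16.121 # source IP for VRRP multicast packets (this node's own IP)

virtual_router_id 50 # VRRP router ID; must be identical on both nodes so that they belong to the same VRRP group

priority 90 # a higher number means a higher priority; the higher-priority node reclaims the VIP after it recovers

advert_int 2 # interval in seconds between VRRP advertisements; must be identical on both nodes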

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.16.130

}

track_script {

chk_status # health-check script that drives master/backup failover

}

}

# firewall-cmd --direct --permanent --add-rule ipv4 filter INPUT 0 \

> --in-interface ens33 --destination 224.0.0.18 --protocol vrrp -j ACCEPT

# firewall-cmd --direct --permanent --add-rule ipv4 filter OUTPUT 0 \

> --out-interface ens33 --destination 224.0.0.18 --protocol vrrp -j ACCEPT

# firewall-cmd --reload
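It helps to work through the priority arithmetic for the two configurations above. With a negative weight, keepalived lowers a node's priority by that amount while its tracked script is failing. The sketch below models only that case; effective_priority is a hypothetical helper, not a keepalived command.

```shell
#!/usr/bin/env bash
# effective_priority BASE WEIGHT CHECK_FAILED (hypothetical helper)
# Simplified model: a negative WEIGHT is added to BASE while the tracked
# script is failing (CHECK_FAILED=1). Positive weights are not modelled.
effective_priority() {
    local base=$1 weight=$2 check_failed=$3
    if [ "$check_failed" = "1" ] && [ "$weight" -lt 0 ]; then
        echo $(( base + weight ))
    else
        echo "$base"
    fi
}

effective_priority 100 -5 1   # k8s-master01 with a failing check -> 95
effective_priority 90 -5 0    # k8s-master02 with a healthy check -> 90
```

Under this model a failing check leaves k8s-master01 at 95, still above the 90 of k8s-master02, so the weight alone would not move the VIP. With these values the failover instead relies on keepalived being stopped on the failing node.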

4. Write the keepalived health-check script (identical on k8s-master01/02)

# vim /etc/keepalived/chk_status.sh

#!/bin/bash

gateway=192.168.16.2

/usr/bin/ping -c 5 ${gateway} > /dev/null 2>&1

gateway_stat=$?

haproxy_status1=$(ps -C haproxy --no-heading|wc -l)

if [ "${haproxy_status1}" = "0" ]; then

systemctl start haproxy

sleep 2

haproxy_status2=$(ps -C haproxy --no-heading|wc -l)

if [ "${haproxy_status2}" = "0" ]; then

systemctl stop keepalived

fi

elif [ "${gateway_stat}" != "0" ];then

systemctl stop keepalived

fi

# chmod +x /etc/keepalived/chk_status.sh
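The decision the health-check script is meant to make can be written as a small pure function, which is easier to reason about than the script with its side effects. This is only a sketch of the intended logic; decide_action and its output strings are hypothetical names.

```shell
#!/usr/bin/env bash
# decide_action PROCS_BEFORE PROCS_AFTER_RESTART GATEWAY_RC (hypothetical helper)
#   PROCS_BEFORE         haproxy process count when the check starts
#   PROCS_AFTER_RESTART  haproxy process count after the restart attempt
#   GATEWAY_RC           exit status of the ping to the gateway
decide_action() {
    local procs_before=$1 procs_after_restart=$2 gateway_rc=$3
    if [ "$procs_before" -eq 0 ]; then
        # haproxy was down: give up the VIP only if the restart did not help.
        if [ "$procs_after_restart" -eq 0 ]; then
            echo stop-keepalived
        else
            echo keep-running
        fi
    elif [ "$gateway_rc" -ne 0 ]; then
        # haproxy is up but the gateway is unreachable.
        echo stop-keepalived
    else
        echo keep-running
    fi
}
```

Because the gateway test sits in the elif branch, it is skipped in any cycle where haproxy had to be restarted, which matches the structure of the script above.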

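V. Install kubeadm, kubelet, and kubectl

1. Install

kubeadm, kubelet, and kubectl must be installed on all nodes (k8s-master01/02 and k8s-node01/02).

PS: By default yum installs the latest version of kubeadm, kubelet, and kubectl, so list the available versions first and pin the version when installing. The version matters: download the kubeadm source code from "https://github.com/kubernetes/kubernetes" before deciding which version to install, because certificates issued by the stock kubeadm are valid for only one year. Once kubeadm is installed, build kubeadm from source and replace the stock binary; only then are the certificates generated for the cluster valid for 100 years. I was only able to download the 1.16.4 source, so this guide deploys that version.

Source code on GitHub: https://github.com/kubernetes/kubernetes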

# yum list kubeadm --showduplicates | sort -r

# yum list kubelet --showduplicates | sort -r

# yum list kubectl --showduplicates | sort -r

# yum -y install kubeadm-1.16.4 kubelet-1.16.4 kubectl-1.16.4

# rpm -qi {kubeadm,kubelet,kubectl} |grep Version

Version : 1.16.4

Version : 1.16.4

Version : 1.16.4

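2. Initialize the master

PS: Only k8s-master01 needs to be initialized. Check that haproxy and keepalived are running properly before initializing.

[root@k8s-master01 ~]# kubeadm config images list # list the required images

# start the initialization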

[root@k8s-master01 ~]# kubeadm init \

> --apiserver-advertise-address=192.168.16.120 \

> --image-repository registry.aliyuncs.com/google_containers \

> --kubernetes-version v1.16.4 \

> --service-cidr=10.96.0.0/12 \

> --pod-network-cidr=10.244.0.0/16 \

> --control-plane-endpoint 192.168.16.130:8443 \

> --upload-certs

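Parameter description:

--apiserver-advertise-address: the IP address that kube-apiserver advertises; use the local IP address of this master.

--image-repository: an alternative image registry, for hosts that cannot reach the default one (a mirror in China is used here).

--kubernetes-version: the Kubernetes version to deploy.

--service-cidr: the IP range for Services.

--pod-network-cidr: the IP range for Pods; 10.244.0.0/16 here.

--control-plane-endpoint: the shared control-plane endpoint, here the keepalived virtual IP with the HAProxy port.

--upload-certs: upload the control-plane certificates to the cluster so that additional masters can fetch them when joining.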
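The same settings can also be kept in a kubeadm configuration file instead of command-line flags, which makes them easier to review and reuse. The sketch below assumes the kubeadm.k8s.io/v1beta2 configuration API that ships with kubeadm 1.16; write_kubeadm_config and the file name kubeadm-config.yaml are hypothetical.

```shell
#!/usr/bin/env bash
# write_kubeadm_config [FILE] (hypothetical helper)
# Writes a kubeadm config carrying the same values as the flags above.
write_kubeadm_config() {
    local out=${1:-kubeadm-config.yaml}
    cat > "$out" <<'EOF'
apiVersion: kubeadm.k8s.io/v1beta2
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.16.120
---
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.16.4
imageRepository: registry.aliyuncs.com/google_containers
controlPlaneEndpoint: "192.168.16.130:8443"
networking:
  serviceSubnet: 10.96.0.0/12
  podSubnet: 10.244.0.0/16
EOF
}
```

After writing the file, the initialization would be started with "kubeadm init --config kubeadm-config.yaml --upload-certs" in place of the individual flags.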

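PS: The "pki" certificates under /etc/kubernetes/ are generated only after kubeadm init has run. The certificates uploaded with --upload-certs expire after 2 hours, so it is best to add master02 and node01/02 within 2 hours.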

[root@k8s-master01 ~]# systemctl start kubelet

[root@k8s-master01 ~]# systemctl enable kubelet

Run the following commands, as prompted by the kubeadm init output:

[root@k8s-master01 ~]# mkdir -p $HOME/.kube

[root@k8s-master01 ~]# cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@k8s-master01 ~]# chown $(id -u):$(id -g) $HOME/.kube/config

[root@k8s-master01 ~]# kubectl get node

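PS: The status is "NotReady" because no network add-on has been installed yet.

3. Change the kubeadm certificate validity on k8s-master01

3.1 Modify the Kubernetes source code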

[root@k8s-master01 ~]# cd /usr/local/src/

[root@k8s-master01 src]# tar -xzf kubernetes-1.16.4.tar.gz

[root@k8s-master01 src]# cd kubernetes-1.16.4/

[root@k8s-master01 kubernetes-1.16.4]# vim ./staging/src/k8s.io/client-go/util/cert/cert.go

[root@k8s-master01 kubernetes-1.16.4]# vim ./cmd/kubeadm/app/constants/constants.go

3.2 Build kubeadm

PS: Search for kube-cross on https://hub.docker.com

[root@k8s-master01 ~]# docker pull mirrorgooglecontainers/kube-cross:v1.12.10-1

[root@k8s-master01 ~]# docker run -it --rm -v /usr/local/src/kubernetes-1.16.4:/go/src/kubernetes mirrorgooglecontainers/kube-cross:v1.12.10-1

root@9a9f26f35b5d:/go# cd /go/src/kubernetes/ # change to the path mounted into the container

root@9a9f26f35b5d:/go/src/kubernetes# more ./cmd/kubeadm/app/constants/constants.go |grep time.Hour # check that the change is present

Then build kubeadm:

root@9a9f26f35b5d:/go/src/kubernetes# make all WHAT=cmd/kubeadm GOFLAGS=-v

PS: The build output is written to "_output/bin/" under the current path; "bin" is a symbolic link whose real path is "./_output/local/bin/linux/amd64/".

3.3 Replace the existing kubeadm

[root@k8s-master01 kubernetes-1.16.4]# mv /usr/bin/kubeadm /usr/bin/kubeadm.bak

[root@k8s-master01 kubernetes-1.16.4]# cp ./_output/local/bin/linux/amd64/kubeadm /usr/bin/

3.4 Renew the certificates

Check the certificate expiration dates:

[root@k8s-master01 ~]# kubeadm alpha certs check-expiration

Renew all certificates:

[root@k8s-master01 ~]# kubeadm alpha certs renew all

Check the certificate expiration dates again:

[root@k8s-master01 ~]# kubeadm alpha certs check-expiration

VI. Deploy the flannel network

Run these steps on k8s-master01.

1. Download flannel and modify its configuration

[root@k8s-master01 src]# curl -O https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

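PS: To keep flannel from failing to start on servers with more than one network interface, use "--iface" to specify the interface name. The manifest contains two entries for image: quay.io/coreos/flannel:v0.11.0-arm64; the argument only needs to be added under the first containers section.

Make sure that "quay.io/coreos/flannel:v0.11.0-amd64" can be pulled.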

[root@k8s-master01 src]# vim kube-flannel.yml

2. Install the flannel network

[root@k8s-master01 src]# kubectl apply -f ./kube-flannel.yml

3. Check the status

[root@k8s-master01 src]# kubectl get node

[root@k8s-master01 src]# kubectl get pod -n kube-system

VII. Add k8s-master02

Run these steps on k8s-master02.

1. Join k8s-master02 to the cluster as a master

PS: Pull "quay.io/coreos/flannel:v0.11.0-amd64" in advance.

[root@k8s-master02 ~]# docker pull quay.io/coreos/flannel:v0.11.0-amd64

[root@k8s-master02 ~]# kubeadm join 192.168.16.130:8443 --token 2zlkoy.decom53afzzzpgkz \

> --discovery-token-ca-cert-hash sha256:8170fd6ce541242a399eb819824f978ba9116a3eccf319f85cc2a2c8e983b879 \

> --control-plane --certificate-key 0151f6d863003ff41b3aeca630f65f86e2df2e6dfea3b87eb3c78e40d06757a7

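If the following error occurs:

PS: Too much time has passed and the uploaded certificates have expired, so authentication fails.

Re-upload the certificates on k8s-master01 to generate a new certificate key: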

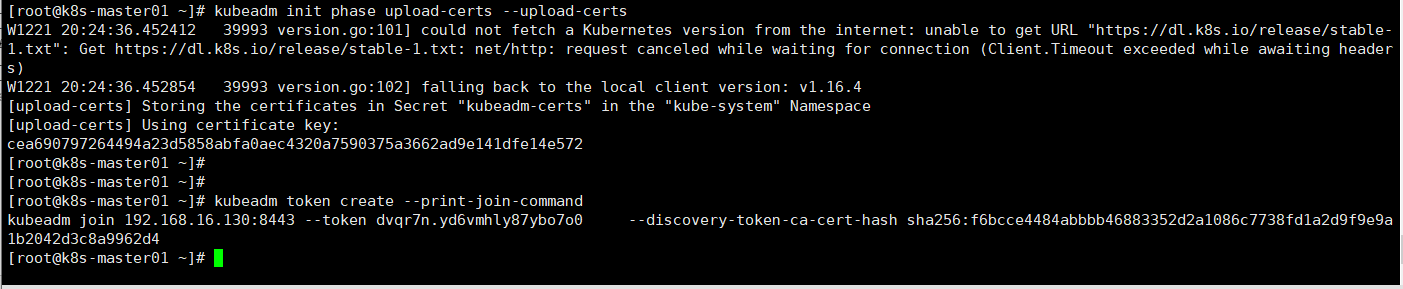

[root@k8s-master01 ~]# kubeadm init phase upload-certs --upload-certs

Generate a new token on k8s-master01:

[root@k8s-master01 ~]# kubeadm token create --print-join-command
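The two commands print their results separately: the first prints a new certificate key, and the second prints a join command suitable for a worker node. For a master, the control-plane flags have to be appended to that command. The sketch below shows how the pieces fit together; build_join_command is a hypothetical helper and the values in the usage example are placeholders, not real credentials.

```shell
#!/usr/bin/env bash
# build_join_command ENDPOINT TOKEN CA_HASH [CERT_KEY] (hypothetical helper)
# Prints a worker join command, or a control-plane join command when
# CERT_KEY is given.
build_join_command() {
    local endpoint=$1 token=$2 ca_hash=$3 cert_key=${4:-}
    local cmd="kubeadm join $endpoint --token $token --discovery-token-ca-cert-hash $ca_hash"
    if [ -n "$cert_key" ]; then
        # Only masters need the certificate key from "upload-certs".
        cmd="$cmd --control-plane --certificate-key $cert_key"
    fi
    echo "$cmd"
}

# Placeholder values for illustration only.
build_join_command 192.168.16.130:8443 abcdef.0123456789abcdef sha256:PLACEHOLDER_HASH PLACEHOLDER_KEY
```

Omitting the fourth argument yields the command for k8s-node01/02.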

Join k8s-master02 to the cluster again, then continue:

[root@k8s-master02 ~]# systemctl start kubelet

[root@k8s-master02 ~]# systemctl enable kubelet

[root@k8s-master02 ~]# mkdir -p $HOME/.kube

[root@k8s-master02 ~]# cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@k8s-master02 ~]# chown $(id -u):$(id -g) $HOME/.kube/config

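2. Check the status on both k8s-master01 and k8s-master02

PS: Once k8s-master02 has joined successfully, ROLES automatically shows master.

3. Change the kubeadm certificate validity on k8s-master02

3.1 Copy the kubeadm binary built on k8s-master01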

[root@k8s-master02 ~]# mv /usr/bin/kubeadm /usr/bin/kubeadm.bak

[root@k8s-master02 ~]# scp root@192.168.16.120:/usr/local/src/kubernetes-1.16.4/_output/local/bin/linux/amd64/kubeadm /usr/bin/

3.2 Renew the certificates

Renew all certificates:

[root@k8s-master02 ~]# kubeadm alpha certs renew all

Check the certificate expiration dates:

[root@k8s-master02 ~]# kubeadm alpha certs check-expiration

VIII. Add k8s-node01/02

The steps are identical on k8s-node01 and k8s-node02.

1. Join k8s-node01/02 to the cluster

PS: Pull "quay.io/coreos/flannel:v0.11.0-amd64" in advance.

[root@k8s-node01 ~]# docker pull quay.io/coreos/flannel:v0.11.0-amd64

[root@k8s-node01 ~]# systemctl start kubelet

[root@k8s-node01 ~]# systemctl enable kubelet

[root@k8s-node01 ~]# kubeadm join 192.168.16.130:8443 --token 2zlkoy.decom53afzzzpgkz \

> --discovery-token-ca-cert-hash sha256:8170fd6ce541242a399eb819824f978ba9116a3eccf319f85cc2a2c8e983b879

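2. Set the node role from k8s-master01

PS: After a node joins successfully, its role is shown as "none" by default.

2.1 First check on the node that flannel is running properly

2.2 Set the role to "node"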

[root@k8s-master01 ~]# kubectl label nodes k8s-node01 node-role.kubernetes.io/node=

[root@k8s-master01 ~]# kubectl label nodes k8s-node02 node-role.kubernetes.io/node=

[root@k8s-master01 ~]# kubectl get nodes

IX. Verify the dual-master setup

1. Shut down the network on k8s-master01

Before shutting down the network:

After shutting down the network:

[root@k8s-master01 ~]# systemctl stop network

2. Bring the network on k8s-master01 back up

[root@k8s-master01 ~]# systemctl start network

[root@k8s-master01 ~]# systemctl start keepalived

PS: keepalived on k8s-master02 has to be restarted before the VIP disappears from that node; the cause is not yet known.
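During this test it is useful to see which master currently holds the VIP. The sketch below is one way to check, assuming the one-line output format of "ip -o -4 addr show"; holds_vip is a hypothetical helper that reads that output on standard input.

```shell
#!/usr/bin/env bash
# holds_vip VIP (hypothetical helper)
# Reads "ip -o -4 addr show" output on stdin and succeeds (exit 0) if VIP
# is configured on one of the listed interfaces.
holds_vip() {
    local vip=$1
    awk -v vip="$vip" '
        {
            for (i = 2; i <= NF; i++) {
                # The address follows the "inet" keyword as ADDRESS/PREFIX.
                if ($(i - 1) == "inet") {
                    split($i, addr, "/")
                    if (addr[1] == vip) found = 1
                }
            }
        }
        END { exit !found }'
}
```

On each master, "ip -o -4 addr show dev ens33 | holds_vip 192.168.16.130 && echo VIP is on this node" then shows where the address currently lives.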

Done!
