解决异常:library initialization failed - unable to allocate file descriptor table - out of memoryAborted

当前实验在Dcker镜像下调用Single Node模式的Hadoop服务Step1 docker 环境执行hadoop的wordcount.jar发生异常参照Hadoop官网文档执行wordcount功能测试,因运存不足无法给进程分配更多的文件句柄数而异常退出(该异常提示并不准确)# 执行指令如下[root@ligy-pc:/opt/hadoop-3.2.1]# bin/hadoo...

·

当前实验在Dcker镜像下调用Single Node模式的Hadoop服务

Step1 docker 环境执行hadoop的wordcount.jar发生异常

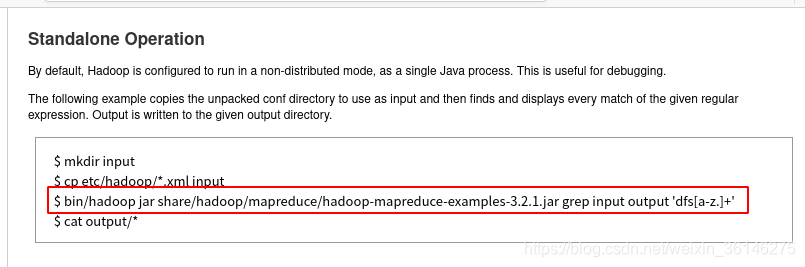

参照Hadoop官网文档执行wordcount功能测试,因运存不足无法给进程分配更多的文件句柄数而异常退出(该异常提示并不准确)

# 执行指令如下

[root@ligy-pc:/opt/hadoop-3.2.1]# bin/hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-3.2.1.jar grep input output 'dfs[a-z.]+'

library initialization failed - unable to allocate file descriptor table - out of memoryAborted (core dumped)

Step2 上调容器启动内存:为Docker分配大内存重试

# 为Docker分配10G内存(-m 10G)

[root@ligy-pc:/opt/hadoop-3.2.1]# nvidia-docker run -it --net host -v /data/docker-images/:/sys/fs/cgroup -m 10G e0eedd2db3a1 bash

# 可见问题依旧

[root@ligy-pc:/opt/hadoop-3.2.1]# bin/hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-3.2.1.jar grep input output 'dfs[a-z.]+'

library initialization failed - unable to allocate file descriptor table - out of memoryAborted (core dumped)

Step3 调整系统的"最小句柄数"

# 系统默认句柄数是:1073741816

# 当前Llinux系统其文件句柄数默认值非常高,通常默认值是:1024

[root@ligy-pc:/opt/hadoop-3.2.1]# ulimit -n

1073741816

# 下调系统默认句柄数:10000

# 程序已经正常执行,问接解决了

[root@ligy-pc:/opt/hadoop-3.2.1]# bin/hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-3.2.1.jar grep input output 'dfs[a-z.]+'

2020-02-28 15:47:50,882 INFO impl.MetricsConfig: Loaded properties from hadoop-metrics2.properties

2020-02-28 15:47:50,914 INFO impl.MetricsSystemImpl: Scheduled Metric snapshot period at 10 second(s).

2020-02-28 15:47:50,914 INFO impl.MetricsSystemImpl: JobTracker metrics system started

具体原因是JDK8启动程序时会尝试为系统设置的"1073741816"个文件句柄分配内存,因为文件句柄数量十分巨大,就导致了即便分配10G运存还是Out Of Memory。旧版的Linux默认句柄数为1024,则不会出现该异常。

参考文档:Error upon jar execution - unable to allocate file descriptor table(StackOverFlow)

更多推荐

已为社区贡献1条内容

已为社区贡献1条内容

所有评论(0)